A Ceph distributed storage solution for Kubernetes

Ceph website: https://ceph.com/en/

Rook website: https://rook.io/

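What is Rook

- Rook is not a distributed storage system itself. It builds on Kubernetes and uses a Kubernetes Operator for each storage provider. It is a storage "orchestrator" that hands the heavy lifting of storage management to different backends (for example Ceph or EdgeFS) and hides much of the complexity.

- Rook turns a distributed storage system into a self-managing, self-scaling, self-healing storage service. It automates the tasks of a storage administrator: deployment, bootstrapping, configuration, provisioning, scaling, upgrading, migration, disaster recovery, monitoring, and resource management.

- Rook orchestrates several storage solutions, each with a dedicated Kubernetes Operator that automates its management. Ceph, Cassandra, and NFS are currently supported.

- Ceph is the mainstream backend today. Ceph offers more than block storage: it also provides S3/Swift-compatible object storage and a distributed file system. Ceph can spread a volume's data across multiple disks, so a single volume can use more space than any one disk provides. When more disks are added to the cluster, Ceph automatically rebalances the data across them.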

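How Rook-Ceph integrates with Kubernetes

- Rook is an open-source cloud-native storage orchestrator. It provides the platform, framework, and support that let a variety of storage solutions integrate natively with cloud-native environments.

- Rook turns storage software into a self-managing, self-scaling, and self-healing storage service by automating deployment, bootstrapping, configuration, provisioning, scaling, upgrading, migration, disaster recovery, monitoring, and resource management.

- Rook relies on the facilities of the underlying cloud-native container management, scheduling, and orchestration platform to do its work.

- Depending on the release, Rook has also supported NFS, Minio Object Store, and CockroachDB alongside Ceph.

- Rook uses Kubernetes primitives to run the Ceph storage system on Kubernetes.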

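What Rook + Ceph provides

- Rook creates the StorageClass for us.

- A PVC only needs to name the storage class. The provisioner referenced by that StorageClass is then invoked automatically and carries out the required operations on the Ceph cluster.

- Ceph

- Block: block storage. RWO (ReadWriteOnce), read-write from a single node (each Pod works on its own dedicated volume). Suited to stateful workloads (StatefulSets).

- Shared FS (Ceph File System): shared storage. RWX (ReadWriteMany), read-write from multiple nodes (many Pods read and write the same volume). Suited to stateless applications. (File system)

- Summary: a stateless application can be scaled to any number of replicas, and when those replicas share data they need RWX. A stateful application can also be scaled to any number of replicas, but each replica reads and writes its own storage, so it uses RWO (preferred) or RWX.

- Through Rook, storage with any of these capabilities is available.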
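The RWO/RWX rule of thumb above can be written down as a tiny lookup. This is only a sketch: `storage_class_for` is a hypothetical helper, and the two class names are the ones created later in this article.

```shell
#!/bin/sh
# storage_class_for MODE: map a Kubernetes access mode to the Rook storage
# class used in this article. Hypothetical helper, for illustration only.
storage_class_for() {
  case "$1" in
    RWO|ReadWriteOnce) echo "rook-ceph-block" ;;  # one volume per Pod (RBD)
    RWX|ReadWriteMany) echo "rook-cephfs" ;;      # one volume shared by many Pods (CephFS)
    *) echo "unsupported access mode: $1" >&2; return 1 ;;
  esac
}

storage_class_for RWO
storage_class_for ReadWriteMany
```

The first call prints the block class and the second the CephFS class; any other mode is rejected with a non-zero exit status.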

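Prerequisites:

Lab environment:

- 1. Two CentOS 7 machines deployed as VMware virtual machines: one master node and one worker node

- 2. Kubernetes version: 1.17.4

- 3. Network plugin: flannel

- 4. Remove the NoSchedule taint from the master node (optional; my machine is low on resources, so the master doubles as a worker)

- kubectl taint nodes k8s-master node-role.kubernetes.io/master:NoSchedule-

Install lvm2 on every node that will provide storage (Rook needs LVM for some OSD layouts, such as several OSDs per device):

sudo yum install -y lvm2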

I. Prepare a disk with no partitions and no file system

- Raw devices (no partitions or formatted filesystems)

- Raw partitions (no formatted filesystem)

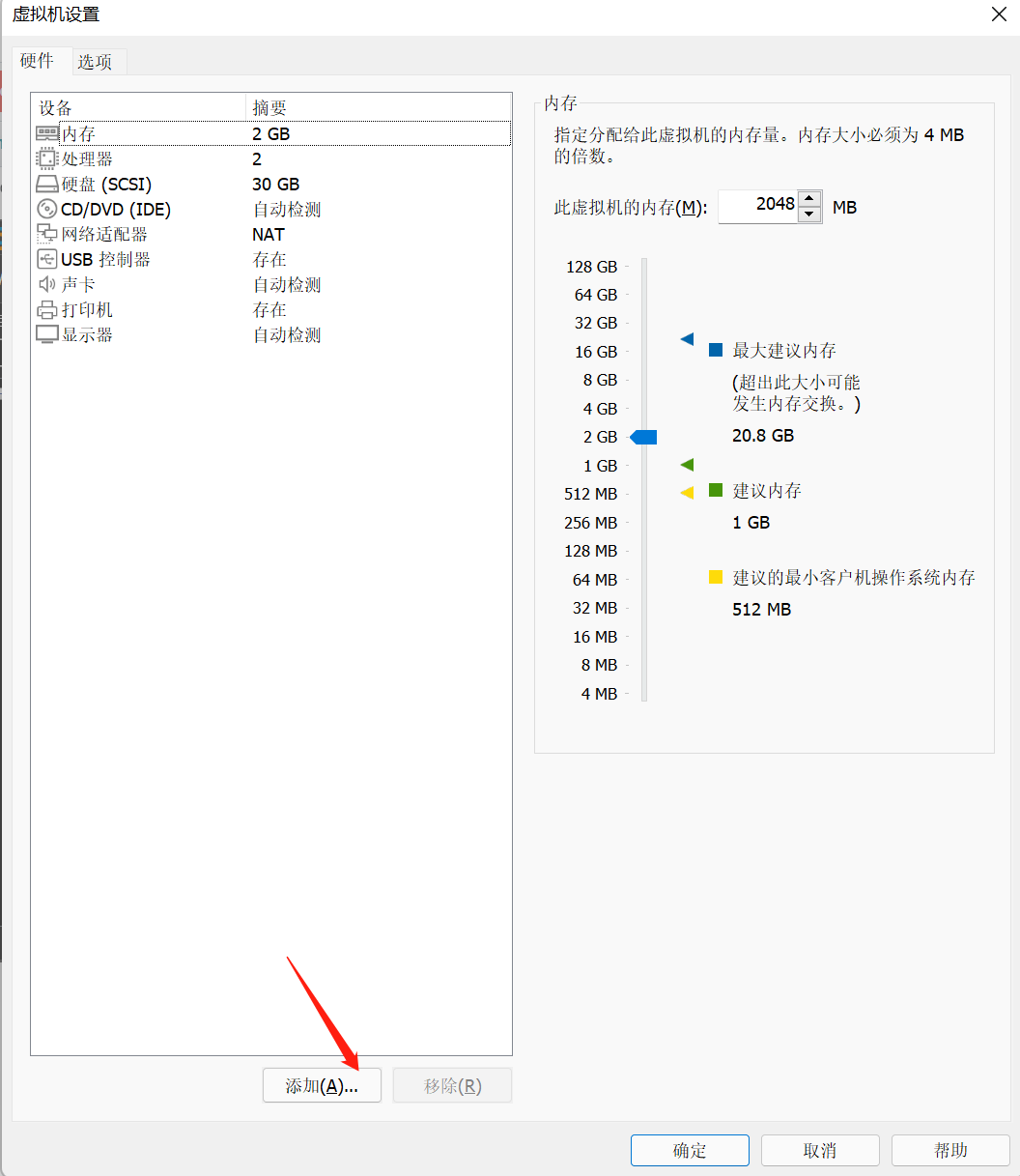

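How to add an unpartitioned, unformatted disk to a virtual machine:

- Open the virtual machine settings and click Add

- Select Hard Disk, then Next

- SCSI, then Next

- Create a new virtual disk, then Next

- Choose the capacity, then Next

- Finish, then start the virtual machine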

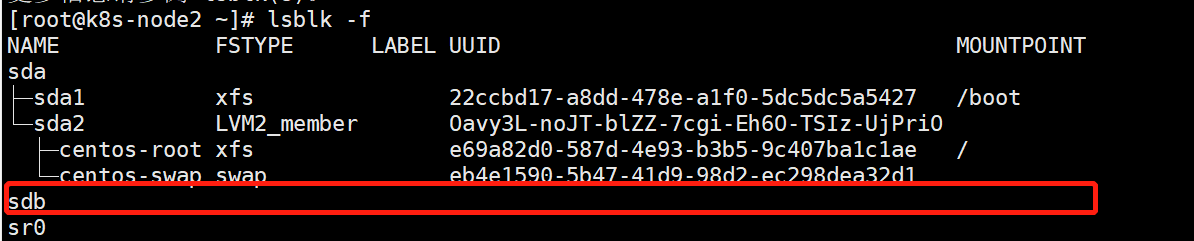

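    # List block devices; the new disk (sdb here) must have no file system
    lsblk -f

    # Zero the disk (a disk attached from a cloud provider must be wiped like this, otherwise you will run into problems)
    # Warning: this destroys all data on /dev/sdb
    dd if=/dev/zero of=/dev/sdb bs=1M status=progress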
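Before handing a disk to Rook it can help to check the "raw" requirement mechanically instead of by eye. The sketch below is not part of the original walkthrough: `is_raw_device` is a hypothetical helper that reads the output of `lsblk -rno NAME,FSTYPE` and assumes partitions are named after their parent device (for example `sdb1`).

```shell
#!/bin/sh
# is_raw_device NAME: read `lsblk -rno NAME,FSTYPE` output on stdin and
# succeed only if NAME is listed, has no file system, and has no partitions.
# Hypothetical helper; assumes partitions are named NAME<suffix>, e.g. sdb1.
is_raw_device() {
  awk -v dev="$1" '
    $1 == dev                        { seen = 1; if (NF > 1) bad = 1 }  # FSTYPE column is filled in
    $1 != dev && index($1, dev) == 1 { bad = 1 }                        # a partition such as sdb1 exists
    END { exit !(seen && !bad) }
  '
}

# Demo with captured output instead of a live system
sample='sda
sda1 xfs
sdb'
printf '%s\n' "$sample" | is_raw_device sdb && echo "sdb is raw"
printf '%s\n' "$sample" | is_raw_device sda || echo "sda is not raw"
```

On a real node the call would be `lsblk -rno NAME,FSTYPE | is_raw_device sdb`.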

II. Deploy the Rook Operator

    # Download the source
    git clone --single-branch --branch v1.7.11 https://github.com/rook/rook.git
    cd rook/cluster/examples/kubernetes/ceph

    # 1. Change the images in operator.yaml: most defaults are hosted on Google's registry, which is unreachable from mainland China
    # Defaults:
    # ROOK_CSI_CEPH_IMAGE: "quay.io/cephcsi/cephcsi:v3.4.0"
    # ROOK_CSI_REGISTRAR_IMAGE: "k8s.gcr.io/sig-storage/csi-node-driver-registrar:v2.3.0"
    # ROOK_CSI_RESIZER_IMAGE: "k8s.gcr.io/sig-storage/csi-resizer:v1.3.0"
    # ROOK_CSI_PROVISIONER_IMAGE: "k8s.gcr.io/sig-storage/csi-provisioner:v3.0.0"
    # ROOK_CSI_SNAPSHOTTER_IMAGE: "k8s.gcr.io/sig-storage/csi-snapshotter:v4.2.0"
    # ROOK_CSI_ATTACHER_IMAGE: "k8s.gcr.io/sig-storage/csi-attacher:v3.3.0"
    # Replacements:
    ROOK_CSI_CEPH_IMAGE: "quay.io/cephcsi/cephcsi:v3.4.0"
    ROOK_CSI_REGISTRAR_IMAGE: "registry.aliyuncs.com/google_containers/csi-node-driver-registrar:v2.3.0"
    ROOK_CSI_RESIZER_IMAGE: "registry.aliyuncs.com/google_containers/csi-resizer:v1.3.0"
    ROOK_CSI_PROVISIONER_IMAGE: "registry.aliyuncs.com/google_containers/csi-provisioner:v3.0.0"
    ROOK_CSI_SNAPSHOTTER_IMAGE: "registry.aliyuncs.com/google_containers/csi-snapshotter:v4.2.0"
    ROOK_CSI_ATTACHER_IMAGE: "registry.aliyuncs.com/google_containers/csi-attacher:v3.3.0"

    kubectl create -f crds.yaml -f common.yaml -f operator.yaml

    # verify the rook-ceph-operator is in the `Running` state before proceeding
    kubectl -n rook-ceph get pod
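Editing six image lines by hand is easy to get wrong, so the same change can be sketched as a `sed` filter. This is an illustration under assumptions, not the author's method: `rewrite_csi_images` is a hypothetical name, and it assumes the image settings appear in operator.yaml as commented-out `ROOK_CSI_*_IMAGE` lines like the ones listed above.

```shell
#!/bin/sh
# rewrite_csi_images: filter operator.yaml text from stdin to stdout.
#  1. Uncomment every ROOK_CSI_*_IMAGE setting.
#  2. On those lines, replace the k8s.gcr.io/sig-storage/ registry prefix
#     with the registry.aliyuncs.com/google_containers/ mirror.
# Hypothetical helper; all other lines pass through unchanged.
rewrite_csi_images() {
  sed -E \
    -e 's@^([[:space:]]*)#[[:space:]]*(ROOK_CSI_[A-Z_]+_IMAGE:)@\1\2@' \
    -e '/ROOK_CSI_[A-Z_]+_IMAGE:/ s@k8s\.gcr\.io/sig-storage/@registry.aliyuncs.com/google_containers/@'
}

# Demo on a single line
printf '%s\n' '  # ROOK_CSI_RESIZER_IMAGE: "k8s.gcr.io/sig-storage/csi-resizer:v1.3.0"' | rewrite_csi_images
```

A possible way to use it is `rewrite_csi_images < operator.yaml > operator-mirror.yaml`, followed by a diff of the two files before applying anything.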

III. Create a Ceph Cluster

Edit cluster.yaml (the settings below sit under spec:). Note on mon.count: it must be an odd number and must not exceed the number of Kubernetes worker nodes.

    mon:
      # Set the number of mons to be started. Generally recommended to be 3.
      # For highest availability, an odd number of mons should be specified.
      count: 1
      # The mons should be on unique nodes. For production, at least 3 nodes are recommended for this reason.
      # Mons should only be allowed on the same node for test environments where data loss is acceptable.
      allowMultiplePerNode: false
    mgr:
      # When higher availability of the mgr is needed, increase the count to 2.
      # In that case, one mgr will be active and one in standby. When Ceph updates which
      # mgr is active, Rook will update the mgr services to match the active mgr.
      count: 1
      modules:
        # Several modules should not need to be included in this list. The "dashboard" and "monitoring" modules
        # are already enabled by other settings in the cluster CR.
        - name: pg_autoscaler
          enabled: true
    storage: # cluster level storage configuration and selection
      useAllNodes: false
      useAllDevices: false
      #deviceFilter:
      config:
        # crushRoot: "custom-root" # specify a non-default root label for the CRUSH map
        # metadataDevice: "md0" # specify a non-rotational storage so ceph-volume will use it as block db device of bluestore.
        # databaseSizeMB: "1024" # uncomment if the disks are smaller than 100 GB
        # journalSizeMB: "1024" # uncomment if the disks are 20 GB or smaller
        osdsPerDevice: "2" # this value can be overridden at the node or device level
        # encryptedDevice: "true" # the default value for this option is "false"
      # Individual nodes and their config can be specified as well, but 'useAllNodes' above must be set to false. Then, only the named
      # nodes below will be used as storage resources. Each node's 'name' field should match their 'kubernetes.io/hostname' label.
      nodes:
        - name: "k8s-master"
          devices:
            - name: "sdb"
        - name: "k8s-node1"
          devices:
            - name: "sdb"
        - name: "k8s-node2"
          devices:
            - name: "sdb"
      # nodes:
      #   - name: "172.17.4.201"
      #     devices: # specific devices to use for storage can be specified for each node
      #       - name: "sdb"
      #       - name: "nvme01" # multiple osds can be created on high performance devices
      #         config:
      #           osdsPerDevice: "5"
      #       - name: "/dev/disk/by-id/ata-ST4000DM004-XXXX" # devices can be specified using full udev paths
      #     config: # configuration can be specified at the node level which overrides the cluster level config
      #   - name: "172.17.4.301"
      #     deviceFilter: "^sd."
      # when onlyApplyOSDPlacement is false, will merge both placement.All() and placement.osd

Create the cluster and check the components:

    # Create the cluster
    kubectl create -f cluster.yaml

    # Check that every component was created (sample output; pod names and counts depend on your cluster)
    kubectl -n rook-ceph get pod
    NAME                                                 READY   STATUS      RESTARTS   AGE
    csi-cephfsplugin-provisioner-d77bb49c6-n5tgs         5/5     Running     0          140s
    csi-cephfsplugin-provisioner-d77bb49c6-v9rvn         5/5     Running     0          140s
    csi-cephfsplugin-rthrp                               3/3     Running     0          140s
    csi-rbdplugin-hbsm7                                  3/3     Running     0          140s
    csi-rbdplugin-provisioner-5b5cd64fd-nvk6c            6/6     Running     0          140s
    csi-rbdplugin-provisioner-5b5cd64fd-q7bxl            6/6     Running     0          140s
    rook-ceph-crashcollector-minikube-5b57b7c5d4-hfldl   1/1     Running     0          105s
    rook-ceph-mgr-a-64cd7cdf54-j8b5p                     1/1     Running     0          77s
    rook-ceph-mon-a-694bb7987d-fp9w7                     1/1     Running     0          105s
    rook-ceph-mon-b-856fdd5cb9-5h2qk                     1/1     Running     0          94s
    rook-ceph-mon-c-57545897fc-j576h                     1/1     Running     0          85s
    rook-ceph-operator-85f5b946bd-s8grz                  1/1     Running     0          92m
    rook-ceph-osd-0-6bb747b6c5-lnvb6                     1/1     Running     0          23s
    rook-ceph-osd-1-7f67f9646d-44p7v                     1/1     Running     0          24s
    rook-ceph-osd-2-6cd4b776ff-v4d68                     1/1     Running     0          25s
    rook-ceph-osd-prepare-node1-vx2rz                    0/2     Completed   0          60s
    rook-ceph-osd-prepare-node2-ab3fd                    0/2     Completed   0          60s
    rook-ceph-osd-prepare-node3-w4xyz                    0/2     Completed   0          60s
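The rule for mon.count (odd, and no larger than the number of worker nodes when allowMultiplePerNode is false) is simple enough to check before applying cluster.yaml. A minimal sketch, with `valid_mon_count` as a hypothetical helper name:

```shell
#!/bin/sh
# valid_mon_count COUNT NODES: succeed if COUNT is a positive odd number
# that does not exceed NODES (the number of schedulable worker nodes).
# Hypothetical helper that mirrors the rule stated in this article.
valid_mon_count() {
  count=$1
  nodes=$2
  [ "$count" -ge 1 ] && [ $((count % 2)) -eq 1 ] && [ "$count" -le "$nodes" ]
}

valid_mon_count 1 2 && echo "count=1 on 2 nodes: ok"
valid_mon_count 3 2 || echo "count=3 on 2 nodes: rejected (more mons than nodes)"
valid_mon_count 2 3 || echo "count=2 on 3 nodes: rejected (even number)"
```

With the two-node lab environment described earlier, only a count of 1 passes this check.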

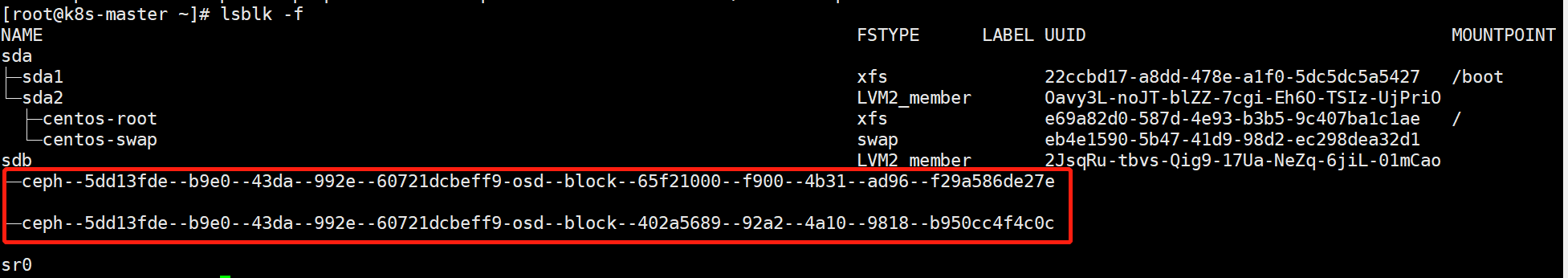

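After the cluster is created, look at the sdb disk again:

lsblk -f

The newly attached disk, which had no file system before, is now managed by Ceph and shows up with a Ceph file-system type.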

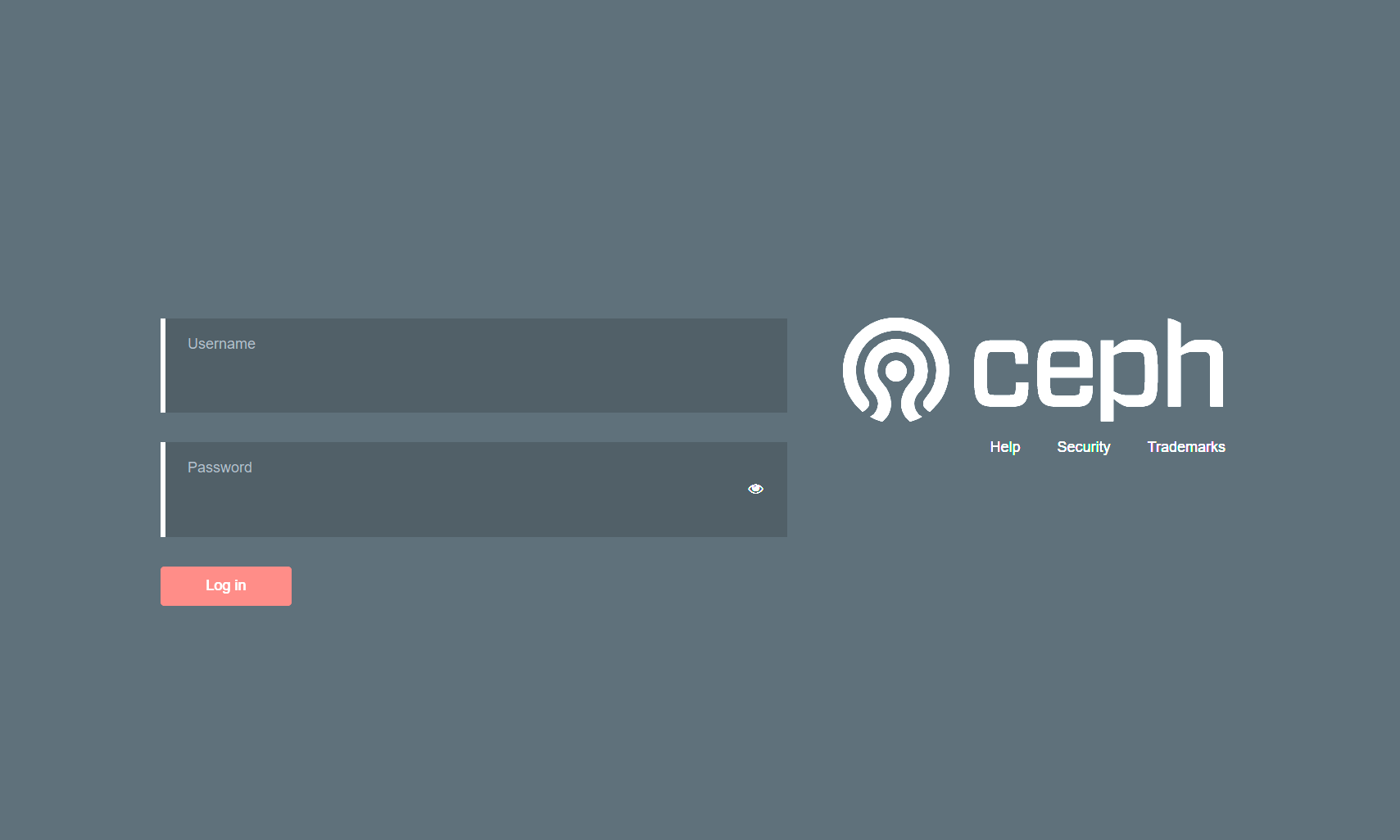

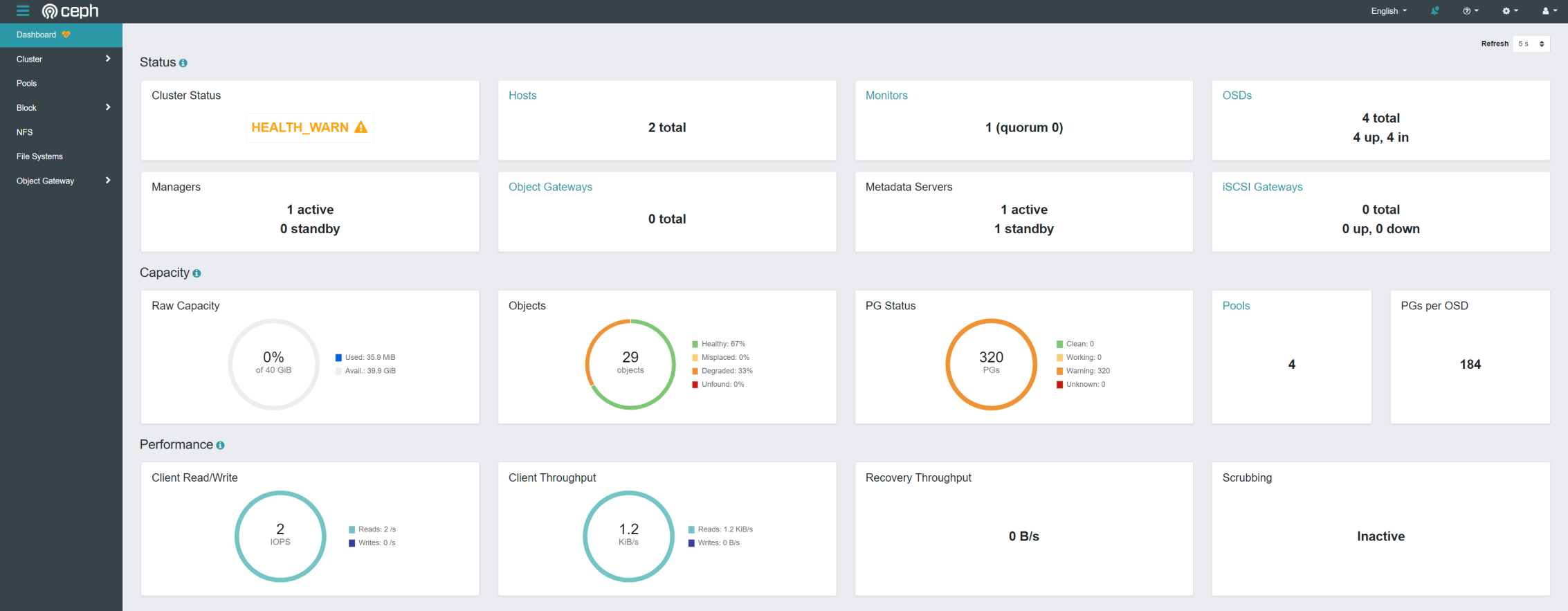

IV. Configure the Ceph dashboard

The Ceph dashboard is already installed by default, but its Service is of type ClusterIP, so it cannot be reached from outside the cluster. Expose it through a NodePort Service:

    kubectl apply -f dashboard-external-https.yaml

    # kubectl get svc -n rook-ceph | grep dashboard
    rook-ceph-mgr-dashboard                  ClusterIP   10.107.32.153    <none>   8443/TCP         23m
    rook-ceph-mgr-dashboard-external-https   NodePort    10.106.136.221   <none>   8443:31840/TCP   7m58s
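The port to use in the browser is the second number in the PORT(S) column of the NodePort Service (31840 in the listing above). As a sketch, it can be pulled out of the `kubectl get svc` output with awk; `node_port_of` is a hypothetical helper and the sample text is the listing shown above.

```shell
#!/bin/sh
# node_port_of SERVICE: read `kubectl get svc` output on stdin and print the
# node port of SERVICE, taken from a PORT(S) value such as 8443:31840/TCP.
# Hypothetical helper; only rows whose TYPE is NodePort are considered.
node_port_of() {
  awk -v svc="$1" '$1 == svc && $2 == "NodePort" { split($5, p, "[:/]"); print p[2] }'
}

sample='rook-ceph-mgr-dashboard ClusterIP 10.107.32.153 <none> 8443/TCP 23m
rook-ceph-mgr-dashboard-external-https NodePort 10.106.136.221 <none> 8443:31840/TCP 7m58s'

port=$(printf '%s\n' "$sample" | node_port_of rook-ceph-mgr-dashboard-external-https)
echo "https://192.168.30.130:$port/"
```

Against a live cluster the input would come from `kubectl get svc -n rook-ceph`, and the IP is the address of any node.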

Open in a browser: https://192.168.30.130:31840/

The default user name is admin. The password can be retrieved with the following command:

kubectl -n rook-ceph get secret rook-ceph-dashboard-password -o jsonpath="{['data']['password']}"|base64 --decode && echo
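The `base64 --decode` step in the command above is needed because Kubernetes stores Secret values base64-encoded. A small sketch of just that decoding step, using a made-up sample value rather than a real cluster password:

```shell
#!/bin/sh
# decode_secret_value: decode one base64-encoded Secret field read from stdin.
# Hypothetical helper; it performs only the last step of the command above.
decode_secret_value() {
  base64 --decode
}

encoded='YWRtaW4xMjM='   # made-up sample value, not a real cluster password
printf '%s' "$encoded" | decode_secret_value
echo
```

The trailing `echo` plays the same role as `&& echo` in the original command: the decoded value has no final newline.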

The generated password is hard to remember; you can change it after logging in, for example to admin/admin123.

V. Block storage (RBD)

RBD: RADOS Block Device

RADOS: Reliable Autonomic Distributed Object Store

RBD volumes provisioned this way cannot be used in RWX mode.

1. Deploy the CephBlockPool and StorageClass

    # Create the CephBlockPool
    cd rook/cluster/examples/kubernetes/ceph
    kubectl apply -f pool.yaml

    # Create the StorageClass (the provisioner)
    cd csi/rbd
    kubectl apply -f storageclass.yaml

    [root@k8s-master rbd]# kubectl get sc -n rook-ceph
    NAME              PROVISIONER                  RECLAIMPOLICY   VOLUMEBINDINGMODE   ALLOWVOLUMEEXPANSION   AGE
    rook-ceph-block   rook-ceph.rbd.csi.ceph.com   Delete          Immediate           true                   12s

From now on, a stateful application only has to declare a PVC with storageClassName set to rook-ceph-block, and the provisioner automatically allocates a PV for it.

2. Example

    # pvc.yaml
    apiVersion: v1
    kind: PersistentVolumeClaim
    metadata:
      name: rbd-pvc
    spec:
      accessModes:
        - ReadWriteOnce
      resources:
        requests:
          storage: 1Gi
      storageClassName: rook-ceph-block

    # pod.yaml
    apiVersion: v1
    kind: Pod
    metadata:
      name: csirbd-demo-pod
    spec:
      containers:
        - name: web-server
          image: nginx
          volumeMounts:
            - name: mypvc
              mountPath: /var/lib/www/html
      volumes:
        - name: mypvc
          persistentVolumeClaim:
            claimName: rbd-pvc
            readOnly: false

Apply both files and check that the PVC is bound:

    kubectl apply -f pvc.yaml
    kubectl apply -f pod.yaml

    [root@k8s-master rbd]# kubectl get pvc
    NAME      STATUS   VOLUME                                     CAPACITY   ACCESS MODES   STORAGECLASS      AGE
    rbd-pvc   Bound    pvc-af052c81-9dad-4597-943f-7b62002e551c   1Gi        RWO            rook-ceph-block   32m

VI. File storage (CephFS)

1. Deploy the CephFilesystem and StorageClass

    # Deploy the CephFilesystem
    cd rook/cluster/examples/kubernetes/ceph
    kubectl apply -f filesystem.yaml

    # Create the StorageClass (the provisioner)
    cd csi/cephfs/
    kubectl apply -f storageclass.yaml

    [root@k8s-master cephfs]# kubectl get sc
    NAME              PROVISIONER                     RECLAIMPOLICY   VOLUMEBINDINGMODE   ALLOWVOLUMEEXPANSION   AGE
    rook-ceph-block   rook-ceph.rbd.csi.ceph.com      Delete          Immediate           true                   48m
    rook-cephfs       rook-ceph.cephfs.csi.ceph.com   Delete          Immediate           true                   17s
    # The rook-cephfs storage class is the provisioner for shared file storage (RWX)

A stateless application declares storageClassName: rook-cephfs, and the provisioner dynamically allocates a PV for it.

2. Example:

    # nginx.yaml
    apiVersion: apps/v1
    kind: Deployment
    metadata:
      name: nginx-deploy
      namespace: default
      labels:
        app: nginx
    spec:
      selector:
        matchLabels:
          app: nginx
      replicas: 2
      template:
        metadata:
          labels:
            app: nginx
        spec:
          containers:
            - name: nginx
              image: nginx:latest
              ports:
                - containerPort: 80
                  name: nginx
              volumeMounts:
                - name: localtime
                  mountPath: /etc/localtime
                - name: nginx-html
                  mountPath: /usr/share/nginx/html
          volumes:
            - name: localtime
              hostPath:
                path: /usr/share/zoneinfo/Asia/Shanghai
            - name: nginx-html
              persistentVolumeClaim:
                claimName: nginx-html
    ---
    apiVersion: v1
    kind: PersistentVolumeClaim
    metadata:
      name: nginx-html
      namespace: default
    spec:
      storageClassName: rook-cephfs
      accessModes:
        - ReadWriteMany
      resources:
        requests:
          storage: 1Gi
    ---
    apiVersion: v1
    kind: Service
    metadata:
      name: nginx-service
      labels:
        app: nginx
    spec:
      type: NodePort
      selector:
        app: nginx
      ports:
        - port: 80
          targetPort: 80
          nodePort: 32500

Apply the file and check that the PVC is bound:

    kubectl apply -f nginx.yaml

    [root@k8s-master ~]# kubectl get pvc
    NAME         STATUS   VOLUME                                     CAPACITY   ACCESS MODES   STORAGECLASS   AGE
    nginx-html   Bound    pvc-1beafc19-2687-4394-ae19-a42768f64cd5   1Gi        RWX            rook-cephfs    24h

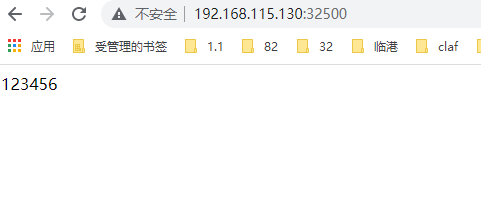

Next, enter a container, create index.html in /usr/share/nginx/html, and write 123456 into it. The nginx home page then serves that content.

/usr/share/nginx/html is mounted from the Ceph cluster's file system.

VII. The Ceph client tool: toolbox

The Rook toolbox runs as a Deployment in the Kubernetes cluster; you can connect to it and run arbitrary Ceph commands.

    cd rook/cluster/examples/kubernetes/ceph

    # Deploy the toolbox
    kubectl create -f toolbox.yaml

    # Check the pod until it reaches the Running state
    kubectl -n rook-ceph get pod -l "app=rook-ceph-tools"

    # Once it is running, open a shell in the container to use the Ceph client commands
    kubectl -n rook-ceph exec -it $(kubectl -n rook-ceph get pod -l "app=rook-ceph-tools" -o jsonpath='{.items[0].metadata.name}') -- bash

Example:

- ceph status

- ceph osd status

- ceph df

- rados df

Entering the pod every time you need the client is inconvenient. Alternatively, install the ceph-common client tools on any node of the cluster:

1. Install ceph-common

    yum install ceph-common -y

2. Enter the toolbox container and copy the contents of ceph.conf and keyring to the Kubernetes master node

    # Enter the container
    kubectl -n rook-ceph exec -it $(kubectl -n rook-ceph get pod -l "app=rook-ceph-tools" -o jsonpath='{.items[0].metadata.name}') -- bash

    # List the configuration files
    [root@rook-ceph-tools-64fc489556-xvm7s ceph]# ls /etc/ceph
    ceph.conf  keyring

3. On the master host, create the two files ceph.conf and keyring under /etc/ceph, and copy the contents of the files from step 2 into the corresponding files.

Verify that it works:

    [root@k8s-master ~]# ceph version
    ceph version 15.2.8 (bdf3eebcd22d7d0b3dd4d5501bee5bac354d5b55) octopus (stable)

4. List the file systems in the cluster

    [root@k8s-master ~]# ceph fs ls
    name: myfs, metadata pool: myfs-metadata, data pools: [myfs-data0 ]

VIII. Mount the Ceph cluster's file system on a directory of the master host

1. On the master node, create a directory to mount CephFS on

    mkdir -p /k8s/data

2. Mount the file system

    # Detect the mon endpoints and the user secret for the connection
    mon_endpoints=$(grep mon_host /etc/ceph/ceph.conf | awk '{print $3}')
    my_secret=$(grep key /etc/ceph/keyring | awk '{print $3}')

    # Mount the filesystem
    mount -t ceph -o mds_namespace=myfs,name=admin,secret=$my_secret $mon_endpoints:/ /k8s/data

    # See your mounted filesystem
    df -h
    10.96.224.164:6789:/   11G   0   11G   0%   /k8s/data

    # Look at the data in the directory
    [root@k8s-master 9d4e99e0-0a18-4c21-81e3-4244e03cf274]# ls /k8s/data
    volumes

    # The index.html mounted in the nginx container is visible in the directory below
    [root@k8s-master ~]# ls /k8s/data/volumes/csi/csi-vol-3ca5cb69-9a9b-11ec-97b1-9a59f1d96a01/9d4e99e0-0a18-4c21-81e3-4244e03cf274
    index.html
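If either `grep` in the mount step finds nothing, `mount` fails with a fairly opaque error. The sketch below is a dry run under assumptions: `cephfs_mount_cmd` is a hypothetical helper that reads the same two files, refuses to continue when a value is missing, and prints the resulting command with the secret masked instead of running it.

```shell
#!/bin/sh
# cephfs_mount_cmd CONF KEYRING FSNAME MOUNTPOINT
# Print (do not run) the mount command built from the Ceph client files.
# Hypothetical helper; expects lines of the form "mon_host = ..." and "key = ...".
cephfs_mount_cmd() {
  conf=$1 keyring=$2 fsname=$3 target=$4
  mons=$(awk '$1 == "mon_host" { print $3 }' "$conf")
  secret=$(awk '$1 == "key" { print $3 }' "$keyring")
  if [ -z "$mons" ] || [ -z "$secret" ]; then
    echo "mon_host or key not found" >&2
    return 1
  fi
  # The secret is masked so it does not end up in terminal scrollback.
  echo "mount -t ceph -o mds_namespace=$fsname,name=admin,secret=*** $mons:/ $target"
}

# Demo with throwaway files instead of /etc/ceph
tmp=$(mktemp -d)
printf '[global]\nmon_host = 10.96.224.164:6789\n' > "$tmp/ceph.conf"
printf '[client.admin]\nkey = SAMPLEKEY\n' > "$tmp/keyring"
cephfs_mount_cmd "$tmp/ceph.conf" "$tmp/keyring" myfs /k8s/data
rm -rf "$tmp"
```

On the master node the call would be `cephfs_mount_cmd /etc/ceph/ceph.conf /etc/ceph/keyring myfs /k8s/data`; once the printed command looks right, run the real mount as shown above.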

Enter the nginx container and create login.html in /usr/share/nginx/html

    [root@k8s-master ~]# kubectl exec -it nginx-deploy-7d7c77bdcf-7bs6k -- /bin/bash
    root@nginx-deploy-7d7c77bdcf-7bs6k:/# cd /usr/share/nginx/html/
    root@nginx-deploy-7d7c77bdcf-7bs6k:/usr/share/nginx/html# ls
    index.html
    root@nginx-deploy-7d7c77bdcf-7bs6k:/usr/share/nginx/html# echo 222 >> login.html

Exit the container and check whether login.html appears in the directory mounted on the host

    [root@k8s-master ~]# ls /k8s/data/volumes/csi/csi-vol-3ca5cb69-9a9b-11ec-97b1-9a59f1d96a01/9d4e99e0-0a18-4c21-81e3-4244e03cf274
    index.html  login.html

This way, the directory mounted in the container can be inspected directly from the host.

3. To unmount:

    umount /k8s/data

4. How do you tell which directory under /k8s/data/volumes/csi/ belongs to which pod?

    [root@k8s-master ~]# ls /k8s/data/volumes/csi
    csi-vol-3ca5cb69-9a9b-11ec-97b1-9a59f1d96a01

First, list all PVs:

    [root@k8s-master ~]# kubectl get pv
    NAME                                       CAPACITY   ACCESS MODES   RECLAIM POLICY   STATUS   CLAIM                STORAGECLASS   REASON   AGE
    pvc-1beafc19-2687-4394-ae19-a42768f64cd5   1Gi        RWX            Delete           Bound    default/nginx-html   rook-cephfs             27h

Then look at the details of pvc-1beafc19-2687-4394-ae19-a42768f64cd5:

    [root@k8s-master ~]# kubectl describe pv pvc-1beafc19-2687-4394-ae19-a42768f64cd5
    Name:            pvc-1beafc19-2687-4394-ae19-a42768f64cd5
    Labels:          <none>
    Annotations:     pv.kubernetes.io/provisioned-by: rook-ceph.cephfs.csi.ceph.com
    Finalizers:      [kubernetes.io/pv-protection]
    StorageClass:    rook-cephfs
    Status:          Bound
    Claim:           default/nginx-html
    Reclaim Policy:  Delete
    Access Modes:    RWX
    VolumeMode:      Filesystem
    Capacity:        1Gi
    Node Affinity:   <none>
    Message:
    Source:
        Type:              CSI (a Container Storage Interface (CSI) volume source)
        Driver:            rook-ceph.cephfs.csi.ceph.com
        VolumeHandle:      0001-0009-rook-ceph-0000000000000001-3ca5cb69-9a9b-11ec-97b1-9a59f1d96a01
        ReadOnly:          false
        VolumeAttributes:      clusterID=rook-ceph
                               fsName=myfs
                               pool=myfs-data0
                               storage.kubernetes.io/csiProvisionerIdentity=1646222524067-8081-rook-ceph.cephfs.csi.ceph.com
                               subvolumeName=csi-vol-3ca5cb69-9a9b-11ec-97b1-9a59f1d96a01
    Events:                <none>

The Claim field (default/nginx-html) identifies the PVC, and therefore the pods, that use this volume. The volume's directory name is given by subvolumeName=csi-vol-3ca5cb69-9a9b-11ec-97b1-9a59f1d96a01.

So the directory /k8s/data/volumes/csi/csi-vol-3ca5cb69-9a9b-11ec-97b1-9a59f1d96a01 holds the contents of the volume mounted by the pod.
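Reading subvolumeName out of the describe output by eye gets tedious with many PVs. A minimal sketch that extracts it from the text; `subvolume_of` is a hypothetical helper, and the sample is an excerpt of the output shown above.

```shell
#!/bin/sh
# subvolume_of: read `kubectl describe pv <name>` output on stdin and print
# the value of the subvolumeName volume attribute.
# Hypothetical helper for illustration.
subvolume_of() {
  sed -n 's/.*subvolumeName=\([^[:space:]]*\).*/\1/p'
}

sample='    VolumeAttributes:      clusterID=rook-ceph
                           fsName=myfs
                           subvolumeName=csi-vol-3ca5cb69-9a9b-11ec-97b1-9a59f1d96a01
Events:                <none>'

dir=$(printf '%s\n' "$sample" | subvolume_of)
echo "/k8s/data/volumes/csi/$dir"
```

Against a live cluster the input would be `kubectl describe pv pvc-1beafc19-2687-4394-ae19-a42768f64cd5 | subvolume_of`. Reading the field directly with `kubectl get pv <name> -o jsonpath='{.spec.csi.volumeAttributes.subvolumeName}'` should give the same value without any text parsing.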

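Pitfalls I ran into while deploying:

1. On a Kubernetes 1.21.0 cluster with the calico network plugin, deploying Ceph's operator.yaml never succeeded; after switching to the flannel plugin it worked.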
