Troubleshooting a Flink CDC Sink-to-ClickHouse Failure


Symptom

Data synchronized from Hive to ClickHouse (CK) by a Flink job was incomplete.

Alert log

Flink job runtime log:


```log
2023-03-01 05:21:08,021 INFO org.apache.flink.runtime.executiongraph.ExecutionGraph [] - Job insert-into_uniData.regionMap.dws_cal_resource_capacity_analysis_instance_df (f0777bfaa62eb9e0c1d8a80a57b4468a) switched from state RUNNING to FAILING.
org.apache.flink.runtime.JobException: Recovery is suppressed by NoRestartBackoffTimeStrategy
    at org.apache.flink.runtime.executiongraph.failover.flip1.ExecutionFailureHandler.handleFailure(ExecutionFailureHandler.java:138) ~[flink-dist_2.12-1.13.5.jar:1.13.5]
    at org.apache.flink.runtime.executiongraph.failover.flip1.ExecutionFailureHandler.getFailureHandlingResult(ExecutionFailureHandler.java:82) ~[flink-dist_2.12-1.13.5.jar:1.13.5]
    at org.apache.flink.runtime.scheduler.DefaultScheduler.handleTaskFailure(DefaultScheduler.java:216) ~[flink-dist_2.12-1.13.5.jar:1.13.5]
    at org.apache.flink.runtime.scheduler.DefaultScheduler.maybeHandleTaskFailure(DefaultScheduler.java:206) ~[flink-dist_2.12-1.13.5.jar:1.13.5]
    at org.apache.flink.runtime.scheduler.DefaultScheduler.updateTaskExecutionStateInternal(DefaultScheduler.java:197) ~[flink-dist_2.12-1.13.5.jar:1.13.5]
    ………………
Caused by: ru.yandex.clickhouse.except.ClickHouseUnknownException: ClickHouse exception, code: 1002, host: 10.150.121.54, port: 8123; Code: 243. DB::Exception: Cannot reserve 1.00 MiB, not enough space: While executing SinkToOutputStream. (NOT_ENOUGH_SPACE) (version 21.9.3.30 (official build))
    at ru.yandex.clickhouse.except.ClickHouseExceptionSpecifier.getException(ClickHouseExceptionSpecifier.java:91) ~[clickhouse-jdbc-0.2.4.jar:?]
    at ru.yandex.clickhouse.except.ClickHouseExceptionSpecifier.specify(ClickHouseExceptionSpecifier.java:55) ~[clickhouse-jdbc-0.2.4.jar:?]
    at ru.yandex.clickhouse.except.ClickHouseExceptionSpecifier.specify(ClickHouseExceptionSpecifier.java:28) ~[clickhouse-jdbc-0.2.4.jar:?]
    at ru.yandex.clickhouse.ClickHouseStatementImpl.checkForErrorAndThrow(ClickHouseStatementImpl.java:875) ~[clickhouse-jdbc-0.2.4.jar:?]
    at ru.yandex.clickhouse.ClickHouseStatementImpl.sendStream(ClickHouseStatementImpl.java:851) ~[clickhouse-jdbc-0.2.4.jar:?]
    at ru.yandex.clickhouse.Writer.send(Writer.java:106) ~[clickhouse-jdbc-0.2.4.jar:?]
    at ru.yandex.clickhouse.Writer.send(Writer.java:102) ~[clickhouse-jdbc-0.2.4.jar:?]
    at site.gaoxiaoming.table.ClickHouseSinkFunction.invoke(ClickHouseSinkFunction.java:61) ~[flink-connector-clickhouse-1.13.2-SNAPSHOT.jar:?]
    at site.gaoxiaoming.table.ClickHouseSinkFunction.invoke(ClickHouseSinkFunction.java:22) ~[flink-connector-clickhouse-1.13.2-SNAPSHOT.jar:?]
    at org.apache.flink.table.runtime.operators.sink.SinkOperator.processElement(SinkOperator.java:65) ~[flink-table-blink_2.12-1.13.5.jar:1.13.5]
    at org.apache.flink.streaming.runtime.tasks.ChainingOutput.pushToOperator(ChainingOutput.java:108) ~[flink-dist_2.12-1.13.5.jar:1.13.5]
    at org.apache.flink.streaming.runtime.tasks.ChainingOutput.collect(ChainingOutput.java:89) ~[flink-dist_2.12-1.13.5.jar:1.13.5]
    …………
    at org.apache.flink.runtime.taskmanager.Task.run(Task.java:566) ~[flink-dist_2.12-1.13.5.jar:1.13.5]
    at java.lang.Thread.run(Thread.java:748) ~[?:1.8.0_251]
Caused by: java.lang.Throwable: Code: 243. DB::Exception: Cannot reserve 1.00 MiB, not enough space: While executing SinkToOutputStream. (NOT_ENOUGH_SPACE) (version 21.9.3.30 (official build))
    at ru.yandex.clickhouse.except.ClickHouseExceptionSpecifier.specify(ClickHouseExceptionSpecifier.java:53) ~[clickhouse-jdbc-0.2.4.jar:?]
    at ru.yandex.clickhouse.except.ClickHouseExceptionSpecifier.specify(ClickHouseExceptionSpecifier.java:28) ~[clickhouse-jdbc-0.2.4.jar:?]
    at ru.yandex.clickhouse.ClickHouseStatementImpl.checkForErrorAndThrow(ClickHouseStatementImpl.java:875) ~[clickhouse-jdbc-0.2.4.jar:?]
    at ru.yandex.clickhouse.ClickHouseStatementImpl.sendStream(ClickHouseStatementImpl.java:851) ~[clickhouse-jdbc-0.2.4.jar:?]
    at ru.yandex.clickhouse.Writer.send(Writer.java:106) ~[clickhouse-jdbc-0.2.4.jar:?]
    at ru.yandex.clickhouse.Writer.send(Writer.java:102) ~[clickhouse-jdbc-0.2.4.jar:?]
    at site.gaoxiaoming.table.ClickHouseSinkFunction.invoke(ClickHouseSinkFunction.java:61) ~[flink-connector-clickhouse-1.13.2-SNAPSHOT.jar:?]
    at site.gaoxiaoming.table.ClickHouseSinkFunction.invoke(ClickHouseSinkFunction.java:22) ~[flink-connector-clickhouse-1.13.2-SNAPSHOT.jar:?]
    at org.apache.flink.table.runtime.operators.sink.SinkOperator.processElement(SinkOperator.java:65) ~[flink-table-blink_2.12-1.13.5.jar:1.13.5]
    at org.apache.flink.streaming.runtime.tasks.ChainingOutput.pushToOperator(ChainingOutput.java:108) ~[flink-dist_2.12-1.13.5.jar:1.13.5]
    at org.apache.flink.streaming.runtime.tasks.ChainingOutput.collect(ChainingOutput.java:89) ~[flink-dist_2.12-1.13.5.jar:1.13.5]
    ………………
    at java.lang.Thread.run(Thread.java:748) ~[?:1.8.0_251]
2023-03-01 05:21:08,025 INFO org.apache.flink.runtime.executiongraph.ExecutionGraph [] - Job insert-into_uniData.regionMap.dws_cal_resource_capacity_analysis_instance_df (f0777bfaa62eb9e0c1d8a80a57b4468a) switched from state FAILING to FAILED.
```
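The root cause sits at the bottom of the `Caused by:` chain. The following is a minimal Python sketch for pulling the ClickHouse error code and name out of a trace like this one. The helper name `root_cause` and its regular expression are our own, written against the message format in this log. They are not based on any documented ClickHouse format.

```python
import re

# ClickHouse server errors embedded in the JDBC exception text look like
# "Code: 243. DB::Exception: <message> (NOT_ENOUGH_SPACE) (version ...)".
CH_ERROR = re.compile(r"Code: (\d+)\. DB::Exception: (.*?) \(([A-Z_]+)\)")


def root_cause(log_text):
    """Return (code, name, message) of the innermost ClickHouse error in a
    Java stack trace, or None if no 'Caused by:' line carries one."""
    caused_by = [line.strip() for line in log_text.splitlines()
                 if line.strip().startswith("Caused by:")]
    # The last "Caused by:" in the chain is the innermost (root) exception.
    for line in reversed(caused_by):
        match = CH_ERROR.search(line)
        if match:
            return int(match.group(1)), match.group(3), match.group(2)
    return None
```

For the log above, this yields code 243 with the name `NOT_ENOUGH_SPACE`. That result points at the ClickHouse server side rather than at Flink itself.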

Analysis

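This error generally means that there was not enough free disk space when data was being written to ClickHouse. The exception itself is raised by the ClickHouse server (Code: 243, NOT_ENOUGH_SPACE) and propagated to Flink through the JDBC driver. Possible causes include: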

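- Insufficient system disk space: Flink needs free space when it writes data to disk, and if the disk is full, this error occurs. Check the system's disk space to confirm whether this is the problem.
- Insufficient ClickHouse disk space: similarly, if the disks used by ClickHouse run out of space, Flink cannot write data to ClickHouse and reports this error. Check ClickHouse's disk usage to confirm whether this is the problem.
- Slow disk writes: if the disk writes too slowly, Flink may fail to write data in time and eventually hit this error. Check the disk's I/O performance to confirm whether this is the problem.
- Flink misconfiguration: finally, the error may be caused by a Flink configuration mistake. For example, if Flink's default buffer settings are too small, not enough data can be buffered in memory, and the data cannot be written to ClickHouse. Check the Flink configuration file to confirm whether this is the problem.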
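The first two causes can be checked from a script. The sketch below uses Python's standard `shutil.disk_usage` to flag paths whose free space falls below a threshold. Several details are assumptions to adjust for your deployment: the 10% threshold, the helper name `check_free_space`, and the paths. The paths are `/var` and `/var/lib/clickhouse`, which is ClickHouse's default data directory.

```python
import os
import shutil


def check_free_space(paths, min_free_ratio=0.10):
    """Return (path, used_ratio, free_bytes) for each existing path whose
    free/total ratio is below min_free_ratio. Missing paths are skipped."""
    low = []
    for path in paths:
        if not os.path.exists(path):
            continue
        usage = shutil.disk_usage(path)  # named tuple: total, used, free (bytes)
        if usage.total == 0:
            continue  # pseudo filesystems can report a size of zero
        if usage.free / usage.total < min_free_ratio:
            low.append((path, usage.used / usage.total, usage.free))
    return low


if __name__ == "__main__":
    # Assumed paths: /var (found nearly full in this incident) and
    # /var/lib/clickhouse (ClickHouse's default data path).
    for path, used, free in check_free_space(["/var", "/var/lib/clickhouse"]):
        print(f"{path}: {used:.0%} used, {free / 2**20:.1f} MiB free")
```

Running the check on the ClickHouse host, not only on the Flink TaskManager hosts, matters here. The log shows that the failed space reservation happened on the ClickHouse server.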

Resolution

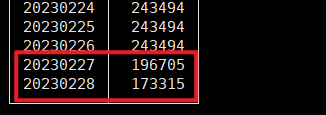

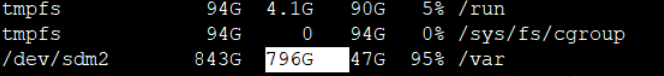

After logging in to the ClickHouse host, we found that the /var directory was almost full.
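A `du`-style scan of the subdirectories under `/var` shows what is filling it. Below is a minimal sketch, assuming Python 3 and read access to the tree; the helper names `dir_size` and `largest_children` are hypothetical.

```python
import os


def dir_size(path):
    """Total size in bytes of regular files under path (symlinks are not followed)."""
    total = 0
    for root, _dirs, files in os.walk(path, onerror=lambda err: None):
        for name in files:
            file_path = os.path.join(root, name)
            try:
                if not os.path.islink(file_path):
                    total += os.path.getsize(file_path)
            except OSError:
                pass  # file vanished or is unreadable; skip it
    return total


def largest_children(path, top=5):
    """Return the `top` largest immediate subdirectories of path as
    (size_bytes, directory) pairs, largest first."""
    sizes = []
    with os.scandir(path) as entries:
        for entry in entries:
            if entry.is_dir(follow_symlinks=False):
                sizes.append((dir_size(entry.path), entry.path))
    return sorted(sizes, reverse=True)[:top]
```

Calling `largest_children("/var")` lists the heaviest subdirectories first. Calling it again on the biggest one drills down. On a default install, the ClickHouse data directory `/var/lib/clickhouse` is reached through `/var/lib`.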

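As an immediate mitigation, we deleted some historical data so that business data could be written normally again. The database will be scaled out later.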
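Historical data in ClickHouse is usually deleted partition by partition. The sketch below is a hypothetical helper, not the procedure used in this incident. Given per-partition sizes, it picks the oldest partitions until enough bytes would be freed, then emits `ALTER TABLE ... DROP PARTITION ID` statements for review. The sizes could come, for example, from the `system.parts` query quoted in its docstring. The helper assumes partition IDs sort chronologically, as date-based IDs like `20230301` do, and the table name is a placeholder.

```python
def plan_partition_drops(parts, table, bytes_needed):
    """Pick the oldest partitions of `table` until at least `bytes_needed`
    bytes would be freed.

    `parts` is a list of (partition_id, bytes_on_disk) tuples, e.g. from:
      SELECT partition_id, sum(bytes_on_disk) FROM system.parts
      WHERE database = 'db' AND table = 'tbl' AND active
      GROUP BY partition_id
    Assumes partition IDs sort chronologically (e.g. YYYYMMDD strings).
    Returns (statements, freed_bytes); the statements are NOT executed here.
    """
    statements, freed = [], 0
    for partition_id, size in sorted(parts):  # oldest partition first
        if freed >= bytes_needed:
            break
        statements.append(f"ALTER TABLE {table} DROP PARTITION ID '{partition_id}'")
        freed += size
    return statements, freed
```

Dropping partitions is irreversible, so review the generated statements against the retention requirements of the business before running them.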


Author: livebetter

Original link: https://www.cnblogs.com/livebetter/p/17173932.html

Copyright notice: This work is licensed under the Creative Commons Attribution-NonCommercial-NoDerivs 2.5 China Mainland License.
