Python实现数据采集

前提是配置好hadoop的相关环境

1、分析网页,确定采集的数据

我们需要获取到该网页的如下几个信息:

请求信息: url——网站页面地址

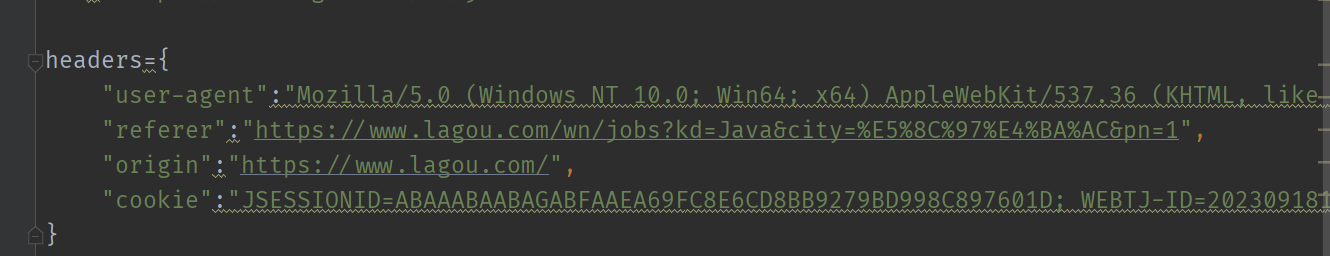

设置这个请求的请求头: headers——(user-agent/referer/origin/cookie)

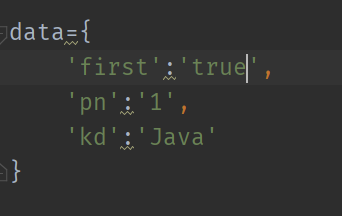

设置这个请求的传递数据: data——(first/pn/kd)------>解决编码

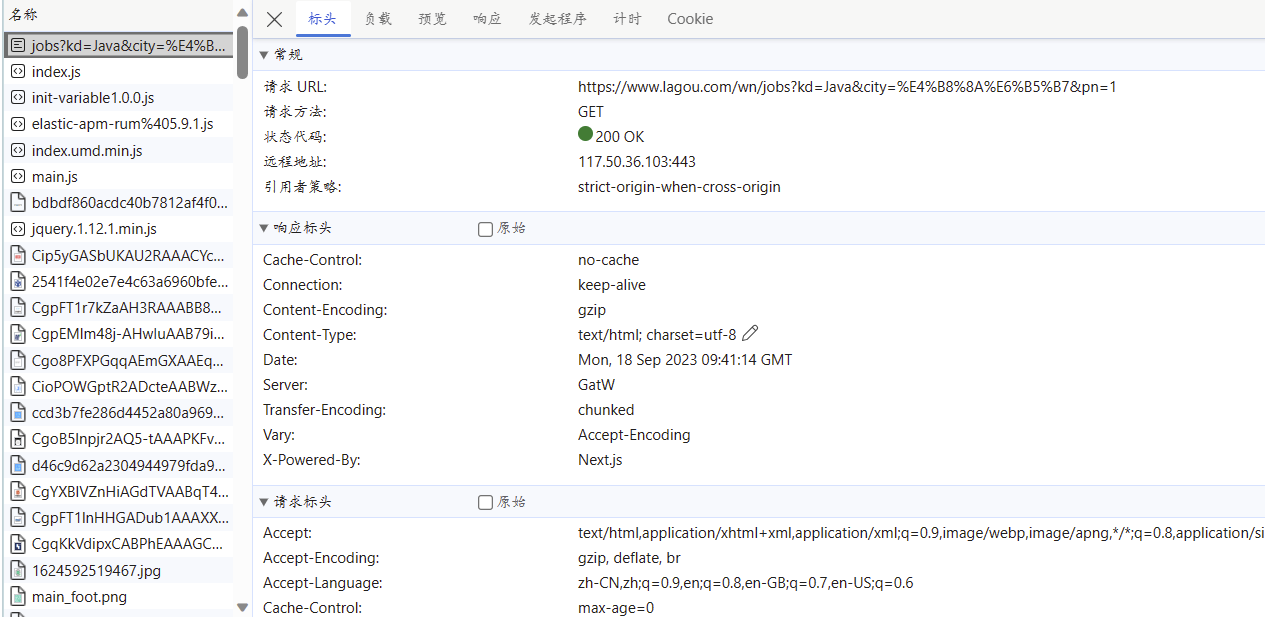

如下图所示:

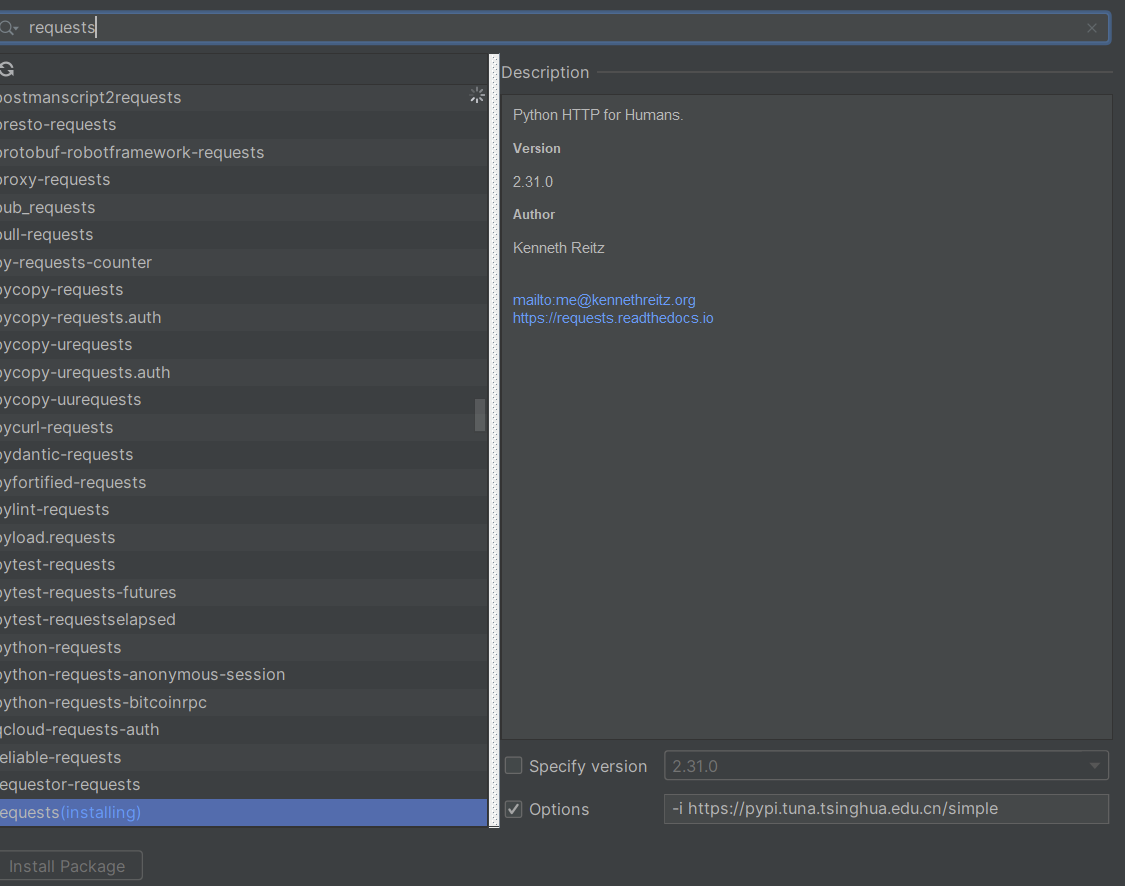

先安装python需要的相关的包:

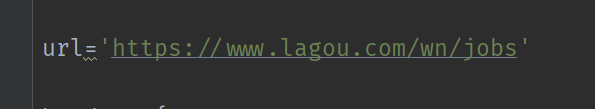

url:

https://www.lagou.com/wn/jobs

headers:

这里的数据使用快捷键Ctrl+Shift+i进入到web界面的检查页面,然后Ctrl+R刷新网络模块:

然后,信息就都在这里出现啦:

headers数据:

data:

提取数据:

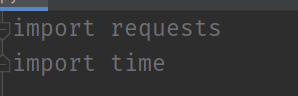

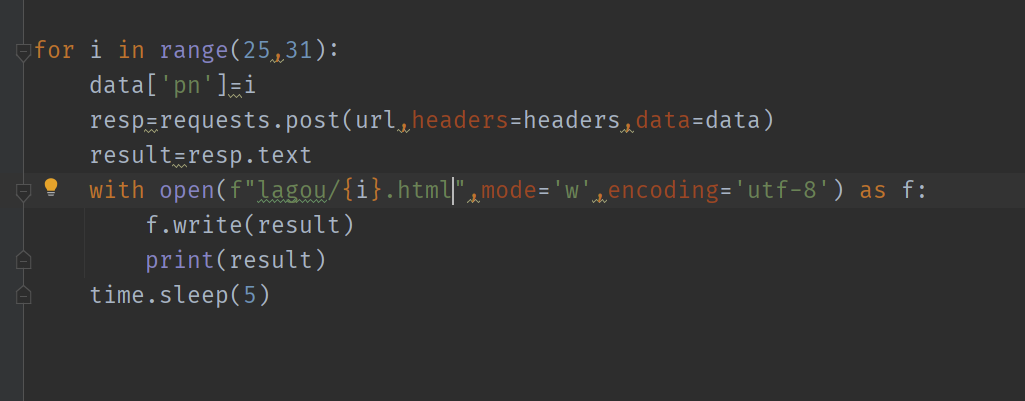

具体代码:

import requests

import time

url='https://www.lagou.com/wn/jobs'

headers={

"user-agent":"Mozilla/5.0 (Windows NT 10.0; Win64; x64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/117.0.0.0 Safari/537.36 Edg/117.0.2045.31",

"referer":"https://www.lagou.com/wn/jobs?kd=Java&city=%E5%8C%97%E4%BA%AC&pn=1",

"origin":"https://www.lagou.com/",

"cookie":"JSESSIONID=ABAAABAABAGABFAAEA69FC8E6CD8BB9279BD998C897601D; WEBTJ-ID=20230918164455-18aa776cf0f95c-068f79df6bc8cf-78505774-1327104-18aa776cf102dd; RECOMMEND_TIP=true; privacyPolicyPopup=false; Hm_lvt_4233e74dff0ae5bd0a3d81c6ccf756e6=1695026697; user_trace_token=20230918164457-cfb25219-7bd9-4f7d-9dc5-bc171648a693; LGSID=20230918164457-4880bcac-8e67-486b-8bb3-6aee05cc4147; LGUID=20230918164457-ed1f5207-a2ee-421f-922a-d4fc89bf7739; _ga=GA1.2.1911198389.1695026697; _gid=GA1.2.302334427.1695026697; sajssdk_2015_cross_new_user=1; sensorsdata2015session=%7B%7D; index_location_city=%E5%85%A8%E5%9B%BD; gate_login_token=v1####245d299e0d1ed714bf5e0c9709c8fab2553f894311014f16e954f5fdb12a0e64; LG_HAS_LOGIN=1; _putrc=81B878065C7103C6123F89F2B170EADC; login=true; hasDeliver=0; unick=%E5%88%98%E7%B4%AB%E9%94%A6; __SAFETY_CLOSE_TIME__26494154=1; TG-TRACK-CODE=index_navigation; __lg_stoken__=16e86fbfab228abce480d7c20e2a438c1a95f406ed5488e30fe83a2948fe63626ea8863aa1c6cec421d046c81340519584424cfd74494e3b9b45bd06928ed96691bf89e7027a; X_HTTP_TOKEN=5d8ce7eae99bedb73309205961ac07a8fe736a0c27; LGRID=20230918172353-055032eb-1cb1-48cc-a0d5-12a9700fcf93; Hm_lpvt_4233e74dff0ae5bd0a3d81c6ccf756e6=1695029038; _ga_DDLTLJDLHH=GS1.2.1695026697.1.1.1695029038.60.0.0; sensorsdata2015jssdkcross=%7B%22distinct_id%22%3A%2226494154%22%2C%22first_id%22%3A%2218aa776d37b331-02514a8c212ba9-78505774-1327104-18aa776d37c19af%22%2C%22props%22%3A%7B%22%24latest_traffic_source_type%22%3A%22%E7%9B%B4%E6%8E%A5%E6%B5%81%E9%87%8F%22%2C%22%24latest_search_keyword%22%3A%22%E6%9C%AA%E5%8F%96%E5%88%B0%E5%80%BC_%E7%9B%B4%E6%8E%A5%E6%89%93%E5%BC%80%22%2C%22%24latest_referrer%22%3A%22%22%2C%22%24os%22%3A%22Windows%22%2C%22%24browser%22%3A%22Chrome%22%2C%22%24browser_version%22%3A%22117.0.0.0%22%7D%2C%22%24device_id%22%3A%2218aa776d37b331-02514a8c212ba9-78505774-1327104-18aa776d37c19af%22%7D"

}

data={

'first':'true',

'pn':'1',

'kd':'Java'

}

for i in range(25,31):

data['pn']=i

resp=requests.post(url,headers=headers,data=data)

result=resp.text

with open(f"lagou-{i}.json",mode='w',encoding='utf-8') as f:

f.write(result)

print(result)

time.sleep(5)

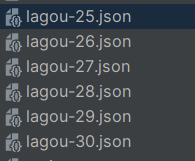

存放数据的文件: