ELK: Nxlog -> Kafka -> Elasticsearch

On Windows, log4 logs are shipped to Elasticsearch through Kafka. I could not find an nxlog module on Windows that sends data directly to Kafka, so Logstash is used as a relay in between:

Nxlog-->logstash(tcp)-->kafka(producer)-->logstash(consumer)-->elasticsearch

nxlog configuration

```
<Input kafkalog4>
    Module  im_file
    File    "E:\\log\\webapi\\kafka.txt"
    SavePos TRUE
</Input>

<Output kafkalog4out>
    Module  om_tcp
    Host    127.0.0.1
    Port    6666
</Output>

<Route jsonruby>
    Path    kafkalog4 => kafkalog4out
</Route>
```
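To make the nxlog side concrete, here is a minimal Python sketch (not nxlog itself) of roughly what this configuration does: read the lines appended to the log file since the last saved position (the role of `SavePos TRUE`) and send them, newline-delimited, to the Logstash tcp input on 127.0.0.1:6666 (the role of `om_tcp`). The function name `forward_new_lines` and the position file are hypothetical; nxlog keeps its own position cache internally. A sketch like this can also serve as a stand-in sender for testing the Logstash side before nxlog is installed.

```python
import os
import socket


def forward_new_lines(log_path, pos_path, host="127.0.0.1", port=6666):
    """Send lines appended to log_path since the last run over one TCP connection.

    Rough imitation of im_file + SavePos feeding om_tcp. pos_path is a
    hypothetical state file standing in for nxlog's own position cache.
    Returns the number of complete lines sent.
    """
    # Resume from the saved byte offset, or start at 0 on the first run.
    offset = 0
    if os.path.exists(pos_path):
        with open(pos_path) as f:
            offset = int(f.read().strip() or 0)

    with open(log_path, "rb") as log:
        log.seek(offset)
        data = log.read()

    # Only forward complete lines; a trailing partial line waits for the next run.
    end = data.rfind(b"\n") + 1
    chunk = data[:end]
    if chunk:
        with socket.create_connection((host, port)) as sock:
            sock.sendall(chunk)  # newline-delimited, one log line per line

    # Persist the new position so a restart does not resend old lines.
    with open(pos_path, "w") as f:
        f.write(str(offset + end))
    return chunk.count(b"\n")
```

For example, `forward_new_lines(r"E:\log\webapi\kafka.txt", "kafka.pos")` would push any new lines to a running Logstash listening on port 6666.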

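Logstash configuration (logstash.conf). The same Logstash instance plays both roles: the tcp input receives lines from nxlog and the kafka output produces them to the topic `test`, while the kafka input consumes that topic and the elasticsearch output indexes the events.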

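```
input {
  # Lines sent by nxlog (om_tcp); tagged so the output section forwards them to Kafka.
  tcp {
    port => 6666
    codec => multiline {
      charset => "locale"
      # A new event starts with a "yyyy-MM-dd HH:mm:ss,SSS" timestamp;
      # any other line (e.g. a stack trace) is appended to the previous event.
      pattern => "^\d{4}\-\d{2}\-\d{2} \d{2}\:\d{2}\:\d{2}\,\d{3}"
      negate => true
      what => "previous"
    }
    type => "kafkain"
  }

  # Consumer side: reads the same topic back (ZooKeeper-based consumer options).
  kafka {
    zk_connect => "127.0.0.1:2181"
    topic_id => "test"
    codec => plain
    reset_beginning => false
    consumer_threads => 5
    decorate_events => true
  }
}
```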
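The multiline codec settings (`negate => true`, `what => "previous"`) mean that any line not starting with a timestamp belongs to the previous event, so a log4 stack trace stays together as one message. The grouping rule can be sketched in Python as below; this is an illustration of the rule only, not Logstash's implementation (among other differences, the real codec holds the last event back until the next timestamped line arrives). The function name `group_multiline` is hypothetical.

```python
import re

# Same timestamp pattern as the multiline codec: a log4 line starts with
# "yyyy-MM-dd HH:mm:ss,SSS".
START = re.compile(r"^\d{4}-\d{2}-\d{2} \d{2}:\d{2}:\d{2},\d{3}")


def group_multiline(lines):
    """Mimic negate => true, what => "previous": a line that does NOT match
    START is appended to the previous event; a matching line opens a new one."""
    events = []
    for line in lines:
        if START.match(line) or not events:
            # Timestamped line (or the very first line) starts a new event.
            events.append(line)
        else:
            # Continuation line, e.g. part of a stack trace.
            events[-1] += "\n" + line
    return events


lines = [
    "2017-03-01 10:00:00,123 ERROR Request failed",
    "Exception: boom",
    "   at Handler.Run()",
    "2017-03-01 10:00:01,456 INFO Next request",
]
events = group_multiline(lines)
```

Here the exception line and the `at ...` line are folded into the first event, giving two events instead of four.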

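```
output {
  if [type] == "kafkain" {
    # Producer side: forward the raw log text from nxlog to Kafka.
    kafka {
      bootstrap_servers => "localhost:9092"
      topic_id => "test"
      codec => plain {
        format => "%{message}"
      }
    }
  } else {
    # Consumer side: events read back from Kafka are indexed into Elasticsearch.
    elasticsearch {
      hosts => ["localhost:9200"]
      index => "test-kafka-%{+YYYY-MM}"
    }
  }
}
```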
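The output section routes on the `type` field: events from the tcp input carry `type => "kafkain"` and go to the Kafka topic `test`, while events consumed back from Kafka have no such type and fall through to Elasticsearch. A Python sketch of that decision, including how `%{+YYYY-MM}` turns `@timestamp` into a monthly index name (Logstash formats it in UTC). The `route` function and the event dicts are hypothetical illustrations, not Logstash APIs.

```python
from datetime import datetime, timedelta, timezone


def route(event):
    """Return (output, target) for one event, following the output block."""
    if event.get("type") == "kafkain":
        # Events from nxlog (tcp input) are produced to the Kafka topic.
        return ("kafka", "test")
    # Everything else (events consumed from Kafka) goes to a monthly index;
    # %{+YYYY-MM} is taken from @timestamp, which Logstash keeps in UTC.
    ts = event["@timestamp"].astimezone(timezone.utc)
    return ("elasticsearch", "test-kafka-" + ts.strftime("%Y-%m"))


# Local time UTC+8 just after midnight on April 1st is still March in UTC.
local = datetime(2017, 4, 1, 1, 0, tzinfo=timezone(timedelta(hours=8)))
print(route({"type": "kafkain", "message": "x"}))
print(route({"@timestamp": local, "message": "x"}))
```

Because the index date comes from the UTC timestamp, events logged shortly after local midnight at a month boundary can land in the previous month's index.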

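Before starting Logstash, check the config file for errors with:

```
logstash -f logstash.conf --configtest --verbose
```

(`--configtest` is the Logstash 2.x flag; from Logstash 5.0 on it is `--config.test_and_exit`, or `-t`.)

If the check reports OK, start Logstash with:

```
logstash agent -f logstash.conf
```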

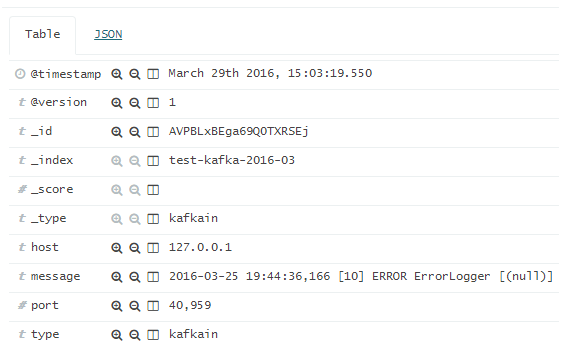

The logs as displayed in Kibana:
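Besides looking in Kibana, one quick way to confirm that events are reaching Elasticsearch is the `_count` API on the `test-kafka-*` index pattern. A minimal Python sketch, assuming Elasticsearch at http://localhost:9200 as in the config above; the function name `count_indexed` is hypothetical, and proxies are bypassed on the assumption that Elasticsearch is local.

```python
import json
import urllib.request


def count_indexed(es_url="http://localhost:9200", index="test-kafka-*"):
    """Return how many documents Elasticsearch holds for the index pattern,
    using the _count API (GET /<index>/_count)."""
    url = f"{es_url}/{index}/_count"
    # Talk to the local Elasticsearch directly, ignoring any proxy settings.
    opener = urllib.request.build_opener(urllib.request.ProxyHandler({}))
    with opener.open(url, timeout=10) as resp:
        return json.load(resp)["count"]
```

Running `count_indexed()` twice while nxlog is shipping logs should show the number growing.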