计算机视觉标准数据集整理

VOC2007 与 VOC2012 此数据集可以用于图像分类,目标检测,图像分割!!!数据集下载镜像网站: http://pjreddie.com/projects/pascal-voc-dataset-mirror/

VOC2012: Train/Validation Data(1.9GB),Test Data(1.8GB),主页: http://host.robots.ox.ac.uk:8080/pascal/VOC/voc2012/

VOC2007: Train/Validation Data(439MB),Test Data(431MB),主页: http://host.robots.ox.ac.uk:8080/pascal/VOC/voc2007/

MNIST手写体数据集(用作10类图像分类)

包含了60,000张28x28的二值(手写数字的)训练图像,10,000张28x28的二值(手写数字的)测试图像.用作分类任务,可以分成0-9这10个类别!

引用:Y. LeCun, L. Bottou, Y. Bengio, and P. Haffner. "Gradient-based learning applied to document recognition." Proceedings of the IEEE, 86(11):2278-2324, November 1998.

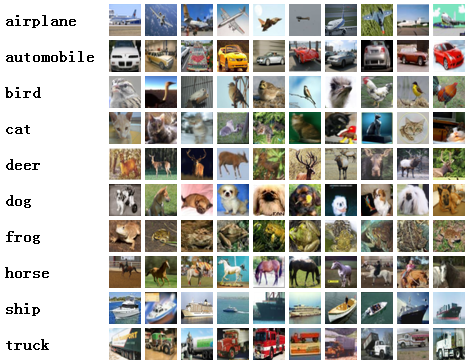

CIFAR-10(用作10类图像分类)

此数据集包含了60,000张32x32的RGB图像,总共有10类图像,大约6000张图像/类,50,000张做训练,10,000张做测试!

此数据集有三个版本的数据可供下载: Python版本(163MB), MATLAB版本(175MB), 二值版本(162MB)!

CIFAR-100(用作100类图像分类)

这个数据集和CIFAR-10相比,它具有100个类,大约600张/类,每类500张训练,500张测试.这100类又可以grouped成20个大类.

此数据集也有三个版本的数据可供下载: Python版本(161MB), MATLAB版本(175MB), 二值版本(161MB)!

引用: Learning Multiple Layers of Features from Tiny Images, Alex Krizhevsky, 2009

CIFAR-10和CIFAR-100都是80 million tiny images dataset的子集!

80 million tiny images dataset

这个数据集包含了79,302,017张32x32的RGB图像,下载时包含了5个文件,网站上也提供了示例代码教你如何加载这些数据!

1. Image binary (227GB)

2. Metadata binary (57GB)

3. Gist binary (114GB)

4. Index data (7MB)

5. Matlab Tiny Images toolbox (150kB)

Caltech_101(用作101类图像分类)

这个数据集包含了101类的图像,每类大约有40~800张图像,大部分是50张/类,在2003年由lifeifei收集,每张图像的大小大约是300x200.

数据集下载: 101_ObjectCategories.tar.gz(131MB)

Caltech_256(用作256类图像分类)

此数据集和Caltech_101相似,包含了30,607张图像,数据集下载: 256_ObjectCategroies.tar(1.2GB)

IMAGENET Large Scale Visual Recognition Challenge(ILSVRC)

从2010年开始,每年举办的ILSVRC图像分类和目标检测大赛,数据集下载: http://image-net.org/download-images

Detection

- PASCAL VOC 2009 dataset

- Classification/Detection Competitions, Segmentation Competition, Person Layout Taster Competition datasets

- LabelMe dataset

- LabelMe is a web-based image annotation tool that allows researchers to label images and share the annotations with the rest of the community. If you use the database, we only ask that you contribute to it, from time to time, by using the labeling tool.

- BioID Face Detection Database

- 1521 images with human faces, recorded under natural conditions, i.e. varying illumination and complex background. The eye positions have been set manually.

- CMU/VASC & PIE Face dataset

- Yale Face dataset

- Caltech

- Cars, Motorcycles, Airplanes, Faces, Leaves, Backgrounds

- Caltech 101

- Pictures of objects belonging to 101 categories

- Caltech 256

- Pictures of objects belonging to 256 categories

- Daimler Pedestrian Detection Benchmark

- 15,560 pedestrian and non-pedestrian samples (image cut-outs) and 6744 additional full images not containing pedestrians for bootstrapping. The test set contains more than 21,790 images with 56,492 pedestrian labels (fully visible or partially occluded), captured from a vehicle in urban traffic.

- MIT Pedestrian dataset

- CVC Pedestrian Datasets

- CVC Pedestrian Datasets

- CBCL Pedestrian Database

- MIT Face dataset

- CBCL Face Database

- MIT Car dataset

- CBCL Car Database

- MIT Street dataset

- CBCL Street Database

- INRIA Person Data Set

- A large set of marked up images of standing or walking people

- INRIA car dataset

- A set of car and non-car images taken in a parking lot nearby INRIA

- INRIA horse dataset

- A set of horse and non-horse images

- H3D Dataset

- 3D skeletons and segmented regions for 1000 people in images

- HRI RoadTraffic dataset

- A large-scale vehicle detection dataset

- BelgaLogos

- 10000 images of natural scenes, with 37 different logos, and 2695 logos instances, annotated with a bounding box.

- FlickrBelgaLogos

- 10000 images of natural scenes grabbed on Flickr, with 2695 logos instances cut and pasted from the BelgaLogos dataset.

- FlickrLogos-32

- The dataset FlickrLogos-32 contains photos depicting logos and is meant for the evaluation of multi-class logo detection/recognition as well as logo retrieval methods on real-world images. It consists of 8240 images downloaded from Flickr.

- TME Motorway Dataset

- 30000+ frames with vehicle rear annotation and classification (car and trucks) on motorway/highway sequences. Annotation semi-automatically generated using laser-scanner data. Distance estimation and consistent target ID over time available.

- PHOS (Color Image Database for illumination invariant feature selection)

- Phos is a color image database of 15 scenes captured under different illumination conditions. More particularly, every scene of the database contains 15 different images: 9 images captured under various strengths of uniform illumination, and 6 images under different degrees of non-uniform illumination. The images contain objects of different shape, color and texture and can be used for illumination invariant feature detection and selection.

- CaliforniaND: An Annotated Dataset For Near-Duplicate Detection In Personal Photo Collections

- California-ND contains 701 photos taken directly from a real user's personal photo collection, including many challenging non-identical near-duplicate cases, without the use of artificial image transformations. The dataset is annotated by 10 different subjects, including the photographer, regarding near duplicates.

- USPTO Algorithm Challenge, Detecting Figures and Part Labels in Patents

- Contains drawing pages from US patents with manually labeled figure and part labels.

- Abnormal Objects Dataset

- Contains 6 object categories similar to object categories in Pascal VOC that are suitable for studying the abnormalities stemming from objects.

- Human detection and tracking using RGB-D camera

- Collected in a clothing store. Captured with Kinect (640*480, about 30fps)

- Multi-Task Facial Landmark (MTFL) dataset

- This dataset contains 12,995 face images collected from the Internet. The images are annotated with (1) five facial landmarks, (2) attributes of gender, smiling, wearing glasses, and head pose.

Classification

- PASCAL VOC 2009 dataset

- Classification/Detection Competitions, Segmentation Competition, Person Layout Taster Competition datasets

- Caltech

- Cars, Motorcycles, Airplanes, Faces, Leaves, Backgrounds

- Caltech 101

- Pictures of objects belonging to 101 categories

- Caltech 256

- Pictures of objects belonging to 256 categories

- ETHZ Shape Classes

- A dataset for testing object class detection algorithms. It contains 255 test images and features five diverse shape-based classes (apple logos, bottles, giraffes, mugs, and swans).

- Flower classification data sets

- 17 Flower Category Dataset

- Animals with attributes

- A dataset for Attribute Based Classification. It consists of 30475 images of 50 animals classes with six pre-extracted feature representations for each image.

- Stanford Dogs Dataset

- Dataset of 20,580 images of 120 dog breeds with bounding-box annotation, for fine-grained image categorization.

- Video classification USAA dataset

- The USAA dataset includes 8 different semantic class videos which are home videos of social occassions which feature activities of group of people. It contains around 100 videos for training and testing respectively. Each video is labeled by 69 attributes. The 69 attributes can be broken down into five broad classes: actions, objects, scenes, sounds, and camera movement.

- McGill Real-World Face Video Database

- This database contains 18000 video frames of 640x480 resolution from 60 video sequences, each of which recorded from a different subject (31 female and 29 male).

Recognition

- Face and Gesture Recognition Working Group FGnet

- Face and Gesture Recognition Working Group FGnet

- Feret

- Face and Gesture Recognition Working Group FGnet

- PUT face

- 9971 images of 100 people

- Labeled Faces in the Wild

- A database of face photographs designed for studying the problem of unconstrained face recognition

- Urban scene recognition

- Traffic Lights Recognition, Lara's public benchmarks.

- PubFig: Public Figures Face Database

- The PubFig database is a large, real-world face dataset consisting of 58,797 images of 200 people collected from the internet. Unlike most other existing face datasets, these images are taken in completely uncontrolled situations with non-cooperative subjects.

- YouTube Faces

- The data set contains 3,425 videos of 1,595 different people. The shortest clip duration is 48 frames, the longest clip is 6,070 frames, and the average length of a video clip is 181.3 frames.

- MSRC-12: Kinect gesture data set

- The Microsoft Research Cambridge-12 Kinect gesture data set consists of sequences of human movements, represented as body-part locations, and the associated gesture to be recognized by the system.

- QMUL underGround Re-IDentification (GRID) Dataset

- This dataset contains 250 pedestrian image pairs + 775 additional images captured in a busy underground station for the research on person re-identification.

- Person identification in TV series

- Face tracks, features and shot boundaries from our latest CVPR 2013 paper. It is obtained from 6 episodes of Buffy the Vampire Slayer and 6 episodes of Big Bang Theory.

- ChokePoint Dataset

- ChokePoint is a video dataset designed for experiments in person identification/verification under real-world surveillance conditions. The dataset consists of 25 subjects (19 male and 6 female) in portal 1 and 29 subjects (23 male and 6 female) in portal 2.

- Hieroglyph Dataset

- Ancient Egyptian Hieroglyph Dataset.

- Rijksmuseum Challenge Dataset: Visual Recognition for Art Dataset

- Over 110,000 photographic reproductions of the artworks exhibited in the Rijksmuseum (Amsterdam, the Netherlands). Offers four automatic visual recognition challenges consisting of predicting the artist, type, material and creation year. Includes a set of baseline features, and offer a baseline based on state-of-the-art image features encoded with the Fisher vector.

- The OU-ISIR Gait Database, Treadmill Dataset

- Treadmill gait datasets composed of 34 subjects with 9 speed variations, 68 subjects with 68 subjects, and 185 subjects with various degrees of gait fluctuations.

- The OU-ISIR Gait Database, Large Population Dataset

- Large population gait datasets composed of 4,016 subjects.

- Pedestrian Attribute Recognition At Far Distance

- Large-scale PEdesTrian Attribute (PETA) dataset, covering more than 60 attributes (e.g. gender, age range, hair style, casual/formal) on 19000 images.

- FaceScrub Face Dataset

- The FaceScrub dataset is a real-world face dataset comprising 107,818 face images of 530 male and female celebrities detected in images retrieved from the Internet. The images are taken under real-world situations (uncontrolled conditions). Name and gender annotations of the faces are included.

Tracking

- BIWI Walking Pedestrians dataset

- Walking pedestrians in busy scenarios from a bird eye view

- "Central" Pedestrian Crossing Sequences

- Three pedestrian crossing sequences

- Pedestrian Mobile Scene Analysis

- The set was recorded in Zurich, using a pair of cameras mounted on a mobile platform. It contains 12'298 annotated pedestrians in roughly 2'000 frames.

- Head tracking

- BMP image sequences.

- KIT AIS Dataset

- Data sets for tracking vehicles and people in aerial image sequences.

- MIT Traffic Data Set

- MIT traffic data set is for research on activity analysis and crowded scenes. It includes a traffic video sequence of 90 minutes long. It is recorded by a stationary camera.

- Shinpuhkan 2014 dataset: Multi-Camera Pedestrian Dataset for Tracking People across Multiple Cameras

- This dataset consists of more than 22,000 images of 24 people which are captured by 16 cameras installed in a shopping mall "Shinpuh-kan". All images are manually cropped and resized to 48x128 pixels, grouped into tracklets and added annotation.

- ATC shopping center dataaset

- The tracking environment consists of multiple 3D range sensors, covering an area of about 900 m2, in the "ATC" shopping center in Osaka, Japan.

- Human detection and tracking using RGB-D camera

- Collected in a clothing store. Captured with Kinect (640*480, about 30fps)

- Multiple Camera Tracking

- Hallway Corridor - Multiple Camera Tracking: An indoor camera network dataset with 6 cameras (contains ground plane homography).

- Multiple Object Tracking Benchmark

- A centralized benchmark for multi-object tracking.

Segmentation

- Image Segmentation with A Bounding Box Prior dataset

- Ground truth database of 50 images with: Data, Segmentation, Labelling - Lasso, Labelling - Rectangle

- PASCAL VOC 2009 dataset

- Classification/Detection Competitions, Segmentation Competition, Person Layout Taster Competition datasets

- Motion Segmentation and OBJCUT data

- Cows for object segmentation, Five video sequences for motion segmentation

- Geometric Context Dataset

- Geometric Context Dataset: pixel labels for seven geometric classes for 300 images

- Crowd Segmentation Dataset

- This dataset contains videos of crowds and other high density moving objects. The videos are collected mainly from the BBC Motion Gallery and Getty Images website. The videos are shared only for the research purposes. Please consult the terms and conditions of use of these videos from the respective websites.

- CMU-Cornell iCoseg Dataset

- Contains hand-labelled pixel annotations for 38 groups of images, each group containing a common foreground. Approximately 17 images per group, 643 images total.

- Segmentation evaluation database

- 200 gray level images along with ground truth segmentations

- The Berkeley Segmentation Dataset and Benchmark

- Image segmentation and boundary detection. Grayscale and color segmentations for 300 images, the images are divided into a training set of 200 images, and a test set of 100 images.

- Weizmann horses

- 328 side-view color images of horses that were manually segmented. The images were randomly collected from the WWW.

- Saliency-based video segmentation with sequentially updated priors

- 10 videos as inputs, and segmented image sequences as ground-truth

- Daimler Urban Segmentation Dataset

- The dataset consists of video sequences recorded in urban traffic. The dataset consists of 5000 rectified stereo image pairs. 500 frames come with pixel-level semantic class annotations into 5 classes: ground, building, vehicle, pedestrian, sky. Dense disparity maps are provided as a reference.

Foreground/Background

- Wallflower Dataset

- For evaluating background modelling algorithms

- Foreground/Background Microsoft Cambridge Dataset

- Foreground/Background segmentation and Stereo dataset from Microsoft Cambridge

- Stuttgart Artificial Background Subtraction Dataset

- The SABS (Stuttgart Artificial Background Subtraction) dataset is an artificial dataset for pixel-wise evaluation of background models.

Saliency Detection (source)

- AIM

- 120 Images / 20 Observers (Neil D. B. Bruce and John K. Tsotsos 2005).

- LeMeur

- 27 Images / 40 Observers (O. Le Meur, P. Le Callet, D. Barba and D. Thoreau 2006).

- Kootstra

- 100 Images / 31 Observers (Kootstra, G., Nederveen, A. and de Boer, B. 2008).

- DOVES

- 101 Images / 29 Observers (van der Linde, I., Rajashekar, U., Bovik, A.C., Cormack, L.K. 2009).

- Ehinger

- 912 Images / 14 Observers (Krista A. Ehinger, Barbara Hidalgo-Sotelo, Antonio Torralba and Aude Oliva 2009).

- NUSEF

- 758 Images / 75 Observers (R. Subramanian, H. Katti, N. Sebe1, M. Kankanhalli and T-S. Chua 2010).

- JianLi

- 235 Images / 19 Observers (Jian Li, Martin D. Levine, Xiangjing An and Hangen He 2011).

- Extended Complex Scene Saliency Dataset (ECSSD)

- ECSSD contains 1000 natural images with complex foreground or background. For each image, the ground truth mask of salient object(s) is provided.

Video Surveillance

- CAVIAR

- For the CAVIAR project a number of video clips were recorded acting out the different scenarios of interest. These include people walking alone, meeting with others, window shopping, entering and exitting shops, fighting and passing out and last, but not least, leaving a package in a public place.

- ViSOR

- ViSOR contains a large set of multimedia data and the corresponding annotations.

- CUHK Crowd Dataset

- 474 video clips from 215 crowded scenes, with ground truth on group detection and video classes.?

- TImes Square Intersection (TISI) Dataset

- A busy outdoor dataset for research on visual surveillance.

- Educational Resource Centre (ERCe) Dataset

- An indoor dataset collected from a university campus for physical event understanding of long video streams.

Multiview

- 3D Photography Dataset

- Multiview stereo data sets: a set of images

- Multi-view Visual Geometry group's data set

- Dinosaur, Model House, Corridor, Aerial views, Valbonne Church, Raglan Castle, Kapel sequence

- Oxford reconstruction data set (building reconstruction)

- Oxford colleges

- Multi-View Stereo dataset (Vision Middlebury)

- Temple, Dino

- Multi-View Stereo for Community Photo Collections

- Venus de Milo, Duomo in Pisa, Notre Dame de Paris

- IS-3D Data

- Dataset provided by Center for Machine Perception

- CVLab dataset

- CVLab dense multi-view stereo image database

- 3D Objects on Turntable

- Objects viewed from 144 calibrated viewpoints under 3 different lighting conditions

- Object Recognition in Probabilistic 3D Scenes

- Images from 19 sites collected from a helicopter flying around Providence, RI. USA. The imagery contains approximately a full circle around each site.

- Multiple cameras fall dataset

- 24 scenarios recorded with 8 IP video cameras. The first 22 first scenarios contain a fall and confounding events, the last 2 ones contain only confounding events.

- CMP Extreme View Dataset

- 15 wide baseline stereo image pairs with large viewpoint change, provided ground truth homographies.

- KTH Multiview Football Dataset II

- This dataset consists of 8000+ images of professional footballers during a match of the Allsvenskan league. It consists of two parts: one with ground truth pose in 2D and one with ground truth pose in both 2D and 3D.

- Disney Research light field datasets

- This dataset includes: camera calibration information, raw input images we have captured, radially undistorted, rectified, and cropped images, depth maps resulting from our reconstruction and propagation algorithm, depth maps computed at each available view by the reconstruction algorithm without the propagation applied.

Action

- UCF Sports Action Dataset

- This dataset consists of a set of actions collected from various sports which are typically featured on broadcast television channels such as the BBC and ESPN. The video sequences were obtained from a wide range of stock footage websites including BBC Motion gallery, and GettyImages.

- UCF Aerial Action Dataset

- This dataset features video sequences that were obtained using a R/C-controlled blimp equipped with an HD camera mounted on a gimbal.The collection represents a diverse pool of actions featured at different heights and aerial viewpoints. Multiple instances of each action were recorded at different flying altitudes which ranged from 400-450 feet and were performed by different actors.

- UCF YouTube Action Dataset

- It contains 11 action categories collected from YouTube.

- Weizmann action recognition

- Walk, Run, Jump, Gallop sideways, Bend, One-hand wave, Two-hands wave, Jump in place, Jumping Jack, Skip.

- UCF50

- UCF50 is an action recognition dataset with 50 action categories, consisting of realistic videos taken from YouTube.

- ASLAN

- The Action Similarity Labeling (ASLAN) Challenge.

- MSR Action Recognition Datasets

- The dataset was captured by a Kinect device. There are 12 dynamic American Sign Language (ASL) gestures, and 10 people. Each person performs each gesture 2-3 times.

- KTH Recognition of human actions

- Contains six types of human actions (walking, jogging, running, boxing, hand waving and hand clapping) performed several times by 25 subjects in four different scenarios: outdoors, outdoors with scale variation, outdoors with different clothes and indoors.

- Hollywood-2 Human Actions and Scenes dataset

- Hollywood-2 datset contains 12 classes of human actions and 10 classes of scenes distributed over 3669 video clips and approximately 20.1 hours of video in total.

- Collective Activity Dataset

- This dataset contains 5 different collective activities : crossing, walking, waiting, talking, and queueing and 44 short video sequences some of which were recorded by consumer hand-held digital camera with varying view point.

- Olympic Sports Dataset

- The Olympic Sports Dataset contains YouTube videos of athletes practicing different sports.

- SDHA 2010

- Surveillance-type videos

- VIRAT Video Dataset

- The dataset is designed to be realistic, natural and challenging for video surveillance domains in terms of its resolution, background clutter, diversity in scenes, and human activity/event categories than existing action recognition datasets.

- HMDB: A Large Video Database for Human Motion Recognition

- Collected from various sources, mostly from movies, and a small proportion from public databases, YouTube and Google videos. The dataset contains 6849 clips divided into 51 action categories, each containing a minimum of 101 clips.

- Stanford 40 Actions Dataset

- Dataset of 9,532 images of humans performing 40 different actions, annotated with bounding-boxes.

- 50Salads dataset

- Fully annotated dataset of RGB-D video data and data from accelerometers attached to kitchen objects capturing 25 people preparing two mixed salads each (4.5h of annotated data). Annotated activities correspond to steps in the recipe and include phase (pre-/ core-/ post) and the ingredient acted upon.

- Penn Sports Action

- The dataset contains 2326 video sequences of 15 different sport actions and human body joint annotations for all sequences.

- CVRR-HANDS 3D

- A Kinect dataset for hand detection in naturalistic driving settings as well as a challenging 19 dynamic hand gesture recognition dataset for human machine interfaces.

- TUM Kitchen Data Set

- Observations of several subjects setting a table in different ways. Contains videos, motion capture data, RFID tag readings,...

- TUM Breakfast Actions Dataset

- This dataset comprises of 10 actions related to breakfast preparation, performed by 52 different individuals in 18 different kitchens.

- MPII Cooking Activities Dataset

- Cooking Activities dataset.

- GTEA Gaze+ Dataset

- This dataset consists of seven meal-preparation activities, each performed by 10 subjects. Subjects perform the activities based on the given cooking recipes.

Human pose/Expression

- AFEW (Acted Facial Expressions In The Wild)/SFEW (Static Facial Expressions In The Wild)

- Dynamic temporal facial expressions data corpus consisting of close to real world environment extracted from movies.

- ETHZ CALVIN Dataset

- CALVIN research group datasets

- HandNet (annotated depth images of articulating hands)

- This dataset includes 214971 annotated depth images of hands captured by a RealSense RGBD sensor of hand poses. Annotations: per pixel classes, 6D fingertip pose, heatmap. Images -> Train: 202198, Test: 10000, Validation: 2773. Recorded at GIP Lab, Technion.

Image stitching

- IPM Vision Group Image Stitching datasets

- Images and parameters for registeration

Medical

- VIP Laparoscopic / Endoscopic Dataset

- Collection of endoscopic and laparoscopic (mono/stereo) videos and images

- Mouse Embryo Tracking Database

- DB Contains 100 examples with the uncompressed frames, up to the 10th frame after the appearance of the 8th cell; a text file with the trajectories of all the cells, from appearance to division; a movie file showing the trajectories of the cells.

Misc

- Zurich Buildings Database

- ZuBuD Image Database contains over 1005 images about Zurich city building.

- Color Name Data Sets

- Mall dataset

- The mall dataset was collected from a publicly accessible webcam for crowd counting and activity profiling research.

- QMUL Junction Dataset

- A busy traffic dataset for research on activity analysis and behaviour understanding.

- Miracl-VC1

- Miracl-VC1 is a lip-reading dataset including both depth and color images. Fifteen speakers positioned in the frustum of a MS Kinect sensor and utter ten times a set of ten words and ten phrases.