Mysql MGR单主

1.安装mysql

此部分略,mysql版本8.0.19,注意初始安装不应该直接安装mgr的配置

2.安装mgr

2.1、应提前配置/etc/hosts解析

[root@im ~]# cat /etc/hosts

127.0.0.1 localhost localhost.localdomain localhost4 localhost4.localdomain4

::1 localhost localhost.localdomain localhost6 localhost6.localdomain6

10.2.83.141 scp

10.2.22.228 im

10.2.83.140 test

2.2 、修改配置文件

***注意如果是新安裝的数据库在初始化配置文件的時候需要在配置文件加lower_case_table_names=1

节点一:

[root@test ~]# cat /etc/my.cnf

[mysqld]

basedir=/usr/local/mysql

datadir=/data/mysql

socket=/tmp/mysql.sock

server_id = 3

gtid_mode = on

enforce_gtid_consistency = on

master_info_repository=TABLE

relay_log_info_repository=TABLE

binlog_checksum=NONE

log_bin = /data/mysql/mysql-bin

log-slave-updates = 1

binlog_format = row

sync-master-info = 1

sync_binlog = 1

skip_slave_start = 1

port=3306

lower_case_table_names=1

#group replication

binlog_transaction_dependency_tracking = WRITESET

transaction_write_set_extraction=XXHASH64 ## 指定用于对事务期间提取的写进行哈希处理的算法,对于组复制, transaction_write_set_extraction 必须将其设置为XXHASH64。

plugin_load="group_replication=group_replication.so" ##加载插件

loose-group_replication_group_name="dc04ba33-bcf4-11ea-85bf-000c295111ae" ##组的名字可以随便起,但不能用主机的GTID! 所有节点的这个组名必须保持一致!

loose-group_replication_start_on_boot=off ##启动mysql时不自动启动组复制

loose-group_replication_local_address= "10.2.83.140:24901" ###本机IP地址,24901用于接收来自其他组成员的传入连接

loose-group_replication_group_seeds= "10.2.22.228:24901,10.2.83.141:24901,10.2.83.140:24901" ### 当前主机成员需要加入组时,Server先访问这些种子成员中的一个,然后它请求重新配置以允许它加入组,

loose-group_replication_bootstrap_group=off ### 是否自动引导组。此选项只能在一个server实例上使用,通常是首次引导组时(或在整组成员关闭的情况下),如果多次引导,可能出现脑裂。

loose-group_replication_member_weight=50 ### 通过设置此从参数来控制单主模式切换的顺序,前提MGR多节点的版本为统一版本,默认50

loose-group_replication_ip_whitelist="10.2.83.0/24,127.0.0.1/8,10.2.22.0/24" ###群组白名单

#***********base***************

skip-name-resolve

skip-slave-start=1

lower_case_table_names=1

transaction-isolation=READ-COMMITTED

[mysql]

socket=/tmp/mysql.sock

配置节点一:

set sql_log_bin=0;

set global super_read_only=0;

set global read_only=0;

create user 'rpl_mgr'@'%' identified by '123456';

grant replication slave on *.* to 'rpl_mgr'@'%';

ALTER USER rpl_mgr@'%' IDENTIFIED WITH mysql_native_password BY '123456';

set sql_log_bin=1;

change master to master_user='rpl_mgr', master_password='123456' for channel 'group_replication_recovery';

install plugin group_replication soname 'group_replication.so';

set global group_replication_bootstrap_group=on; #只在第一个节点执行即可

show plugins;

mysql> set global slave_preserve_commit_order=on; #设置此参数是为了控制Slave上的binlog提交顺序和Master上的binlog的提交顺序一样,保证GTID的顺序。

start group_replication;

set global group_replication_bootstrap_group= off;

SELECT * FROM performance_schema.replication_group_members;

节点二:

[root@scp ~]# cat /etc/my.cnf

[mysqld]

basedir=/usr/local/mysql

datadir=/data/mysql

socket=/tmp/mysql.sock

server_id = 2

gtid_mode = on

enforce_gtid_consistency = on

master_info_repository=TABLE

relay_log_info_repository=TABLE

binlog_checksum=NONE

log_bin = /data/mysql/mysql-bin

log-slave-updates = 1

binlog_format = row

sync-master-info = 1

sync_binlog = 1

skip_slave_start = 1

lower_case_table_names=1

autocommit=ON

#group replication参数

plugin_load="group_replication=group_replication.so"

binlog_transaction_dependency_tracking = WRITESET

transaction_write_set_extraction=XXHASH64

loose-group_replication_group_name="dc04ba33-bcf4-11ea-85bf-000c295111ae"

loose-group_replication_start_on_boot=off

loose-group_replication_local_address= "10.2.83.141:24901"

loose-group_replication_group_seeds= "10.2.22.228:24901,10.2.83.141:24901,10.2.83.140:24901"

loose-group_replication_bootstrap_group=off

loose-group_replication_member_weight=50

loose-group_replication_ip_whitelist="10.2.83.0/24,127.0.0.1/8,10.2.22.0/24"

#***********base***************

skip-name-resolve

skip-slave-start=1

lower_case_table_names=1

transaction-isolation=READ-COMMITTED

[mysql]

socket=/tmp/mysql.sock

节点三:

[root@im ~]# cat /etc/my.cnf

[mysqld]

basedir=/usr/local/mysql

datadir=/data/mysql

socket=/tmp/mysql.sock

server_id = 4

gtid_mode = on

enforce_gtid_consistency = on

master_info_repository=TABLE

relay_log_info_repository=TABLE

binlog_checksum=NONE

log_bin = /data/mysql/mysql-bin

log-slave-updates = 1

binlog_format = row

sync-master-info = 1

sync_binlog = 1

skip_slave_start = 1

port=3340

lower_case_table_names=1

#group replication

binlog_transaction_dependency_tracking = WRITESET

transaction_write_set_extraction=XXHASH64

plugin_load="group_replication=group_replication.so"

loose-group_replication_group_name="dc04ba33-bcf4-11ea-85bf-000c295111ae"

loose-group_replication_start_on_boot=off

loose-group_replication_local_address= "10.2.22.228:24901"

loose-group_replication_group_seeds= "10.2.22.228:24901,10.2.83.141:24901,10.2.83.140:24901"

loose-group_replication_bootstrap_group=off

loose-group_replication_member_weight=50

loose-group_replication_ip_whitelist="10.2.83.0/24,127.0.0.1/8,10.2.22.0/24"

#***********base***************

skip-name-resolve

skip-slave-start=1

lower_case_table_names=1

transaction-isolation=READ-COMMITTED

[mysql]

socket=/tmp/mysql.sock

节点二和三的配置相同:

set sql_log_bin=0;

set global super_read_only=0;

set global read_only=0;

create user 'rpl_mgr'@'%' identified by '123456';

grant replication slave on *.* to 'rpl_mgr'@'%';

ALTER USER rpl_mgr@'%' IDENTIFIED WITH mysql_native_password BY '123456';

set sql_log_bin=1;

change master to master_user='rpl_mgr', master_password='123456' for channel 'group_replication_recovery';

install plugin group_replication soname 'group_replication.so';

show plugins;

set global slave_preserve_commit_order=on;

start group_replication;

mysql> SELECT * FROM performance_schema.replication_group_members;

+---------------------------+--------------------------------------+-------------+-------------+--------------+-------------+----------------+

| CHANNEL_NAME | MEMBER_ID | MEMBER_HOST | MEMBER_PORT | MEMBER_STATE | MEMBER_ROLE | MEMBER_VERSION |

+---------------------------+--------------------------------------+-------------+-------------+--------------+-------------+----------------+

| group_replication_applier | 1be36b1a-7b2b-11eb-af48-005056a67ae0 | scp | 3306 | ONLINE | PRIMARY | 8.0.19 |

| group_replication_applier | 419a6bf5-7b62-11eb-b731-005056a6b4be | test | 3306 | ONLINE | SECONDARY | 8.0.19 |

| group_replication_applier | e45006f8-7b2c-11eb-b6e1-005056a6a972 | im | 3340 | ONLINE | SECONDARY | 8.0.19 |

+---------------------------+--------------------------------------+-------------+-------------+--------------+-------------+----------------+

至此安装完成

以下示例显示了单主模式下部署时,如何确定当前哪个server成员时主服务器

select variable_value from performance_schema.global_status where variable_name='group_replication_primary_member';

3.性能,异常判断

1.查询组同步是否正常

已从组中接收并在应用队列(中继日志)中排队的事务。

mysql> select * from performance_schema.replication_connection_status\G;

CHANNEL_NAME: group_replication_applier

GROUP_NAME: dc04ba33-bcf4-11ea-85bf-000c295111ae 如果此服务器是组的成员,则显示该服务器所属组的名称。

SOURCE_UUID: dc04ba33-bcf4-11ea-85bf-000c295111ae server_uuid来自源 的值。

THREAD_ID: NULL I / O线程ID。

SERVICE_STATE: ON ON(线程存在并且处于活动状态或空闲状态),OFF(线程不再存在)或CONNECTING(线程存在并且正在连接到源)。

COUNT_RECEIVED_HEARTBEATS: 0 自上次重新启动或重置副本以来,副本收到的心跳信号总数,或CHANGE REPLICATION SOURCE TO| CHANGE MASTER TO

LAST_HEARTBEAT_TIMESTAMP: 0000-00-00 00:00:00.000000 格式的时间戳,显示副本何时接收到最新的心跳信号。 'YYYY-MM-DD hh:mm:ss[.fraction]'

RECEIVED_TRANSACTION_SET: dc04ba33-bcf4-11ea-85bf-000c295111ae:1-10 与该副本接收的所有事务相对应的一组全局事务ID(GTID)。如果未使用GTID,则为空。

LAST_ERROR_NUMBER: 0 导致I / O线程停止的最新错误的错误号和错误消息。错误号0和空字符串的消息表示“无错误。”如果 LAST_ERROR_MESSAGE值不为空,误差值也出现在副本的错误日志。发出RESET MASTER或 RESET REPLICA | SLAVE重置这些列中显示的值。

LAST_ERROR_MESSAGE:

LAST_ERROR_TIMESTAMP: 0000-00-00 00:00:00.000000

LAST_QUEUED_TRANSACTION: dc04ba33-bcf4-11ea-85bf-000c295111ae:10 排队到中继日志中的最后一个事务的全局事务ID(GTID)。

LAST_QUEUED_TRANSACTION_ORIGINAL_COMMIT_TIMESTAMP: 2021-03-02 23:29:00.454239 时间戳格式,显示中继日志中排队的最后一个事务何时在原始源上提交。

LAST_QUEUED_TRANSACTION_IMMEDIATE_COMMIT_TIMESTAMP: 0000-00-00 00:00:00.000000

LAST_QUEUED_TRANSACTION_START_QUEUE_TIMESTAMP: 2021-03-02 16:52:30.627401 该时间戳显示该I / O线程何时将最后一个事务放置在中继日志队列中。

LAST_QUEUED_TRANSACTION_END_QUEUE_TIMESTAMP: 2021-03-02 16:52:30.627419 用于显示最后一个事务何时排队到中继日志文件中。

QUEUEING_TRANSACTION: 中继日志中当前排队事务的全局事务ID(GTID)。

QUEUEING_TRANSACTION_ORIGINAL_COMMIT_TIMESTAMP: 0000-00-00 00:00:00.000000

QUEUEING_TRANSACTION_IMMEDIATE_COMMIT_TIMESTAMP: 0000-00-00 00:00:00.000000

QUEUEING_TRANSACTION_START_QUEUE_TIMESTAMP: 0000-00-00 00:00:00.000000

下表显示了replication_connection_status 列与 SHOW REPLICA | SLAVE STATUS列之间的对应关系 。

replication_connection_status SHOW REPLICA | SLAVE STATUS

SOURCE_UUID Master_UUID

THREAD_ID 没有

SERVICE_STATE Replica_IO_Running

RECEIVED_TRANSACTION_SET Retrieved_Gtid_Set

LAST_ERROR_NUMBER Last_IO_Errno

LAST_ERROR_MESSAGE Last_IO_Error

LAST_ERROR_TIMESTAMP Last_IO_Error_Timestamp

2.replication_group_member_stats

复制组中的每个成员都会验证并应用组提交的事务,replication_group_member_stats表提供与认证过程相关的信息。

mysql> select * from performance_schema.replication_group_member_stats\G

*************************** 1. row ***************************

CHANNEL_NAME: group_replication_applier 组复制通道名称

VIEW_ID: 16420412370467165:7

MEMBER_ID: 781ad356-5e16-11ec-b41a-005056a608b0 此为当前我们连接到的server的UUID信息

COUNT_TRANSACTIONS_IN_QUEUE: 0 队列中等待冲突检测检查的事务数,冲突检查通过后,他们排队等待应用

COUNT_TRANSACTIONS_CHECKED: 0 表示已经进行冲突检查的事务数

COUNT_CONFLICTS_DETECTED: 0 表示未通过冲突检测检查的事务数

COUNT_TRANSACTIONS_ROWS_VALIDATING: 0

TRANSACTIONS_COMMITTED_ALL_MEMBERS: dc04ba33-bcf4-11ea-85bf-000c295111ae:1-58:1000027-1000031:2000027-2000040 已在复制组的所有成员上成功提交的事务,显示为 GTID Sets。这以固定的时间间隔更新。

LAST_CONFLICT_FREE_TRANSACTION: 检查的最后一个无冲突事务的事务标识符。

COUNT_TRANSACTIONS_REMOTE_IN_APPLIER_QUEUE: 1 此成员从复制组收到的等待应用的事务数。

COUNT_TRANSACTIONS_REMOTE_APPLIED: 0 此成员已从组收到并应用的事务数。

COUNT_TRANSACTIONS_LOCAL_PROPOSED: 0

COUNT_TRANSACTIONS_LOCAL_ROLLBACK: 0

这些字段对于监控组中的成员的性能很重要,例如假设组的成员之一出现延迟,并且不能与其他组成员同步,此时查看

可以首先通过在备库执行如下命令确认主备之间是否有延迟:

select COUNT_TRANSACTIONS_IN_QUEUE,LAST_CONFLICT_FREE_TRANSACTION from performance_schema.replication_group_member_stats where MEMBER_ID=@@server_uuid;

如果COUNT_TRANSACTIONS_IN_QUEUE>0,那么就是有延迟了。

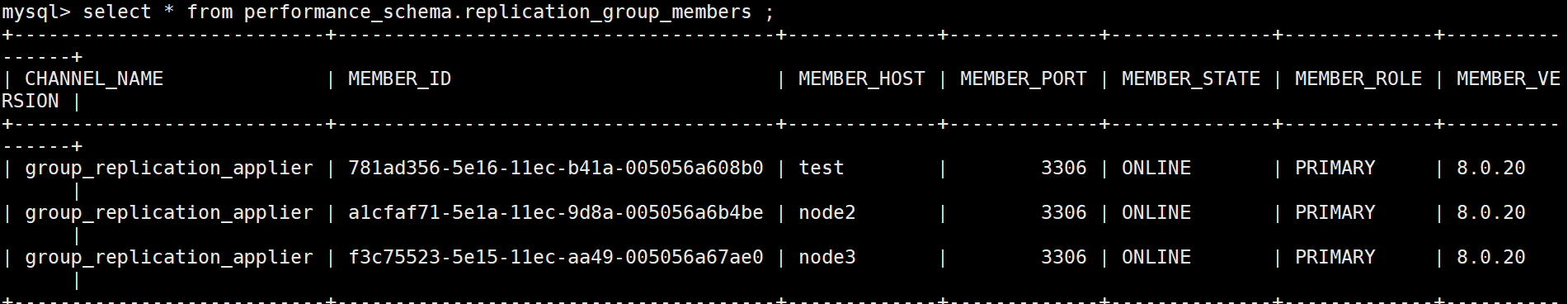

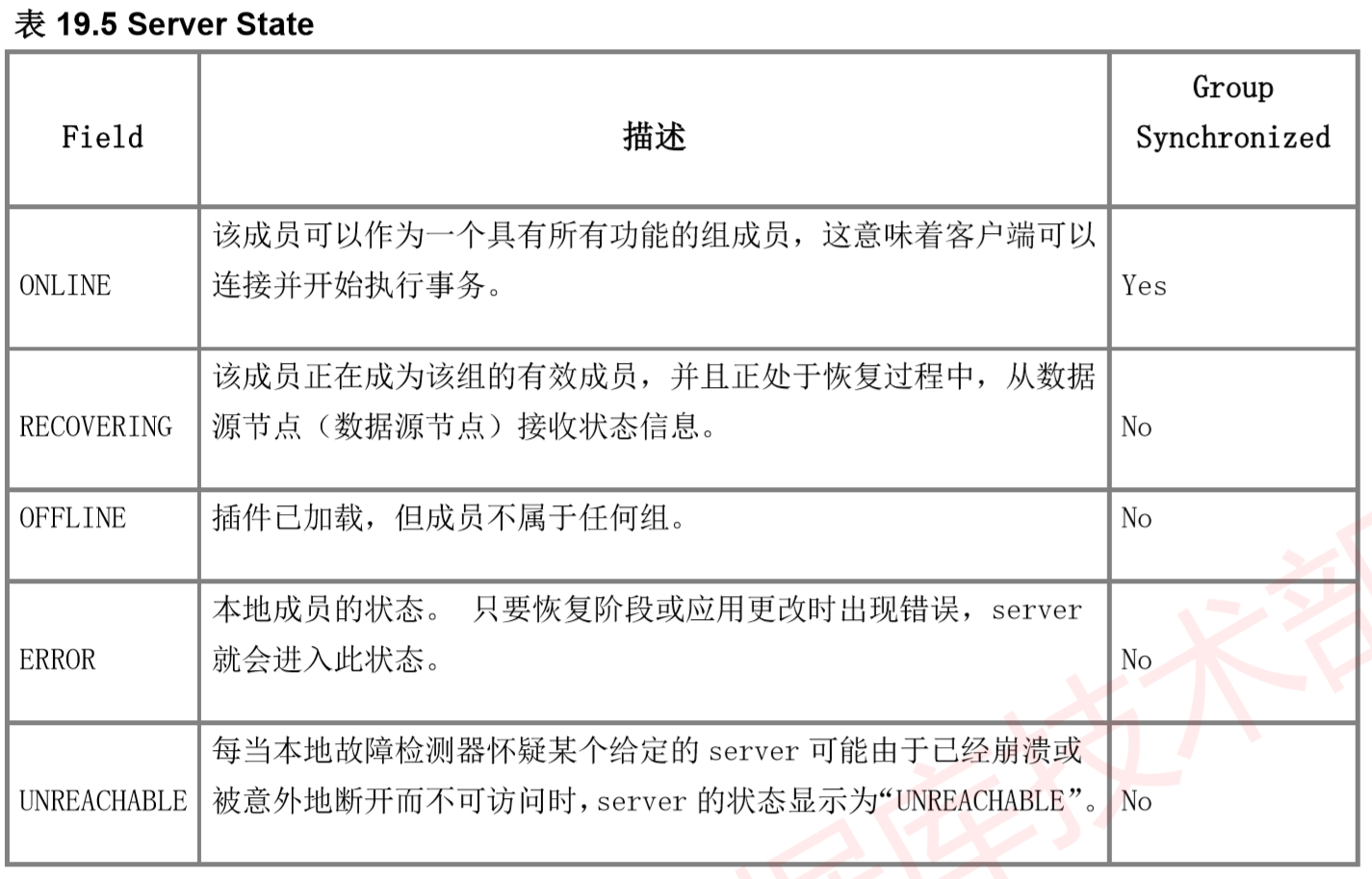

3.replication_group_members

查询组中server的状态是否在线

4 在线切换谁作为主

mysql> SELECT * FROM performance_schema.replication_group_members;

+---------------------------+--------------------------------------+-------------+-------------+--------------+-------------+----------------+

| CHANNEL_NAME | MEMBER_ID | MEMBER_HOST | MEMBER_PORT | MEMBER_STATE | MEMBER_ROLE | MEMBER_VERSION |

+---------------------------+--------------------------------------+-------------+-------------+--------------+-------------+----------------+

| group_replication_applier | 1be36b1a-7b2b-11eb-af48-005056a67ae0 | scp | 3306 | ONLINE | PRIMARY | 8.0.19 |

| group_replication_applier | 419a6bf5-7b62-11eb-b731-005056a6b4be | test | 3306 | ONLINE | SECONDARY | 8.0.19 |

| group_replication_applier | e45006f8-7b2c-11eb-b6e1-005056a6a972 | im | 3340 | ONLINE | SECONDARY | 8.0.19 |

+---------------------------+--------------------------------------+-------------+-------------+--------------+-------------+----------------+

mysql> SELECT group_replication_set_as_primary('e45006f8-7b2c-11eb-b6e1-005056a6a972');

查看切换过程:

SELECT event_name, work_completed, work_estimated FROM performance_schema.events_stages_cu rrent WHERE event_name LIKE "%stage/group_rpl%";

mysql> SELECT * FROM performance_schema.replication_group_members;

+---------------------------+--------------------------------------+-------------+-------------+--------------+-------------+----------------+

| CHANNEL_NAME | MEMBER_ID | MEMBER_HOST | MEMBER_PORT | MEMBER_STATE | MEMBER_ROLE | MEMBER_VERSION |

+---------------------------+--------------------------------------+-------------+-------------+--------------+-------------+----------------+

| group_replication_applier | 1be36b1a-7b2b-11eb-af48-005056a67ae0 | scp | 3306 | ONLINE | SECONDARY | 8.0.19 |

| group_replication_applier | 419a6bf5-7b62-11eb-b731-005056a6b4be | test | 3306 | ONLINE | SECONDARY | 8.0.19 |

| group_replication_applier | e45006f8-7b2c-11eb-b6e1-005056a6a972 | im | 3340 | ONLINE | PRIMARY | 8.0.19 |

+---------------------------+--------------------------------------+-------------+-------------+--------------+-------------+----------------+

测试切换,手动停机主节点,主节点可以自动切换

使用场景:

1.平滑升级

2.节点扩容

5 单主切换到多主方法

1)修改每个节点的配置文件新增

#multi master

loose-group_replication_single_primary_mode=off

loose-group_replication_enforce_update_everywhere_checks=on

2)重启每个节点

3)在其中任何一个节点执行

mysql> SET GLOBAL group_replication_bootstrap_group=ON;

Query OK, 0 rows affected (0.00 sec)

mysql> START GROUP_REPLICATION;

Query OK, 0 rows affected (3.17 sec)

mysql> SET GLOBAL group_replication_bootstrap_group=OFF;

Query OK, 0 rows affected (0.00 sec)

mysql> SELECT * FROM performance_schema.replication_group_members;

+---------------------------+--------------------------------------+-------------+-------------+--------------+-------------+----------------+

| CHANNEL_NAME | MEMBER_ID | MEMBER_HOST | MEMBER_PORT | MEMBER_STATE | MEMBER_ROLE | MEMBER_VERSION |

+---------------------------+--------------------------------------+-------------+-------------+--------------+-------------+----------------+

| group_replication_applier | 1be36b1a-7b2b-11eb-af48-005056a67ae0 | scp | 3306 | ONLINE | PRIMARY | 8.0.19 |

+---------------------------+--------------------------------------+-------------+-------------+--------------+-------------+----------------+

其他两个节点执行

mysql> START GROUP_REPLICATION;

7.多主修改为单主

1)所有节点执行

stop group_replication;

set global group_replication_enforce_update_everywhere_checks=OFF;

set global group_replication_single_primary_mode=ON;

2)选择一个节点执行

SET GLOBAL group_replication_bootstrap_group=ON;

START GROUP_REPLICATION;

SET GLOBAL group_replication_bootstrap_group=OFF;

3)其他节点执行

START GROUP_REPLICATION;

4)查看同步状态

SELECT * FROM performance_schema.replication_group_members;

记得都要修改配置文件

##多主

##loose-group_replication_single_primary_mode = off

##單主

loose-group_replication_single_primary_mode = on

##多主

#loose-group_replication_enforce_update_everywhere_checks = on

##單主

loose-group_replication_enforce_update_everywhere_checks = off

7.注意不论是单主和多主MGR架构,要求必须要主键

【推荐】国内首个AI IDE,深度理解中文开发场景,立即下载体验Trae

【推荐】编程新体验,更懂你的AI,立即体验豆包MarsCode编程助手

【推荐】抖音旗下AI助手豆包,你的智能百科全书,全免费不限次数

【推荐】轻量又高性能的 SSH 工具 IShell:AI 加持,快人一步

· 25岁的心里话

· 闲置电脑爆改个人服务器(超详细) #公网映射 #Vmware虚拟网络编辑器

· 零经验选手,Compose 一天开发一款小游戏!

· 通过 API 将Deepseek响应流式内容输出到前端

· 因为Apifox不支持离线,我果断选择了Apipost!