Multi-Person Pose Estimation with OpenPose (BODY_25 Model) in OpenCV

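Introduction

Besides the common object detection and classification models, OpenCV's dnn module also supports the OpenPose model. As with those models, it is trained offline and then loaded for inference. I won't go into much detail here; the source code and data come first.

The model used in this experiment is available at: https://github.com/CMU-Perceptual-Computing-Lab/openpose

Code implementation based on the BODY_25 model: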

```python
import time

import cv2
import numpy as np

image1 = cv2.imread("E:\\usb_test\\example\\yolov3\\OpenPose-Multi-Person\\111.jpg")
if image1 is None:
    raise FileNotFoundError("Could not read the input image; check the path above.")

protoFile = "E:\\usb_test\\example\\yolov3\\OpenPose-Multi-Person\\pose\\body_25\\pose_deploy.prototxt"
weightsFile = "E:\\usb_test\\example\\yolov3\\OpenPose-Multi-Person\\pose\\body_25\\pose_iter_584000.caffemodel"
nPoints = 25
# BODY_25 output format
keypointsMapping = ['Nose', 'Neck', 'RShoulder', 'RElbow', 'RWrist', 'LShoulder', 'LElbow', 'LWrist',
                    'MidHip', 'RHip', 'RKnee', 'RAnkle', 'LHip', 'LKnee', 'LAnkle', 'REye', 'LEye',
                    'REar', 'LEar', 'LBigToe', 'LSmallToe', 'LHeel', 'RBigToe', 'RSmallToe', 'RHeel']

POSE_PAIRS = [[1, 8], [1, 2], [1, 5], [2, 3], [3, 4], [5, 6],
              [6, 7], [8, 9], [9, 10], [10, 11], [8, 12], [12, 13],
              [13, 14], [1, 0], [0, 15], [15, 17], [0, 16], [16, 18],
              [2, 17], [5, 18], [14, 19], [19, 20], [14, 21], [11, 22],
              [22, 23], [11, 24]]

# Indices of the PAF channels corresponding to POSE_PAIRS,
# e.g. for POSE_PAIRS[1] = [1, 2] the PAFs are at output channels (40, 41);
# similarly, [1, 5] -> (48, 49), and so on.
mapIdx = [[26, 27], [40, 41], [48, 49], [42, 43], [44, 45], [50, 51],
          [52, 53], [32, 33], [28, 29], [30, 31], [34, 35], [36, 37],
          [38, 39], [56, 57], [58, 59], [62, 63], [60, 61], [64, 65],
          [46, 47], [54, 55], [66, 67], [68, 69], [70, 71], [72, 73],
          [74, 75], [76, 77]]

colors = [[255, 0, 0], [255, 85, 0], [255, 170, 0],
          [255, 255, 0], [170, 255, 0], [85, 255, 0],
          [0, 255, 0], [0, 255, 85], [0, 255, 170],
          [0, 255, 255], [0, 170, 255], [0, 85, 255],
          [0, 0, 255], [85, 0, 255], [170, 0, 255],
          [255, 0, 255], [255, 0, 170], [255, 0, 85],
          [255, 170, 85], [255, 170, 170], [255, 170, 255],
          [255, 85, 85], [255, 85, 170], [255, 85, 255],
          [170, 170, 170]]


def getKeypoints(probMap, threshold=0.1):
    mapSmooth = cv2.GaussianBlur(probMap, (3, 3), 0, 0)
    mapMask = np.uint8(mapSmooth > threshold)
    keypoints = []

    # Find the blobs. Taking [-2] works for both OpenCV 3.x (3 return values)
    # and OpenCV 4.x (2 return values).
    contours = cv2.findContours(mapMask, cv2.RETR_TREE, cv2.CHAIN_APPROX_SIMPLE)[-2]

    # For each blob, find the maximum
    for cnt in contours:
        blobMask = np.zeros(mapMask.shape)
        blobMask = cv2.fillConvexPoly(blobMask, cnt, 1)
        maskedProbMap = mapSmooth * blobMask
        _, maxVal, _, maxLoc = cv2.minMaxLoc(maskedProbMap)
        # Store (x, y, probability)
        keypoints.append(maxLoc + (probMap[maxLoc[1], maxLoc[0]],))

    return keypoints


# Find valid connections between the different joints of all persons present
def getValidPairs(output):
    valid_pairs = []
    invalid_pairs = []
    n_interp_samples = 15
    paf_score_th = 0.1
    conf_th = 0.7
    # Loop over every POSE_PAIR
    for k in range(len(mapIdx)):
        # A->B constitute a limb
        pafA = output[0, mapIdx[k][0], :, :]
        pafB = output[0, mapIdx[k][1], :, :]
        pafA = cv2.resize(pafA, (frameWidth, frameHeight))
        pafB = cv2.resize(pafB, (frameWidth, frameHeight))

        # Find the keypoint candidates for the first and second joint of the limb
        candA = detected_keypoints[POSE_PAIRS[k][0]]
        candB = detected_keypoints[POSE_PAIRS[k][1]]
        nA = len(candA)
        nB = len(candB)

        # If keypoints for the joint pair are detected:
        # check every joint in candA against every joint in candB,
        # calculate the unit distance vector between the two joints,
        # sample the PAF at a set of interpolated points between the joints,
        # and use the dot products to score the connection.
        if nA != 0 and nB != 0:
            valid_pair = np.zeros((0, 3))
            for i in range(nA):
                max_j = -1
                maxScore = -1
                found = 0
                for j in range(nB):
                    # Find d_ij
                    d_ij = np.subtract(candB[j][:2], candA[i][:2])
                    norm = np.linalg.norm(d_ij)
                    if norm:
                        d_ij = d_ij / norm
                    else:
                        continue
                    # Find p(u)
                    interp_coord = list(zip(np.linspace(candA[i][0], candB[j][0], num=n_interp_samples),
                                            np.linspace(candA[i][1], candB[j][1], num=n_interp_samples)))
                    # Find L(p(u)); the loop variable is named s so that it
                    # does not shadow the outer pair index k
                    paf_interp = []
                    for s in range(len(interp_coord)):
                        x = int(round(interp_coord[s][0]))
                        y = int(round(interp_coord[s][1]))
                        paf_interp.append([pafA[y, x], pafB[y, x]])
                    # Find E
                    paf_scores = np.dot(paf_interp, d_ij)
                    avg_paf_score = sum(paf_scores) / len(paf_scores)

                    # The connection is valid if the fraction of interpolated
                    # vectors aligned with the PAF is higher than the threshold
                    if (len(np.where(paf_scores > paf_score_th)[0]) / n_interp_samples) > conf_th:
                        if avg_paf_score > maxScore:
                            max_j = j
                            maxScore = avg_paf_score
                            found = 1
                # Append the connection to the list
                if found:
                    valid_pair = np.append(valid_pair, [[candA[i][3], candB[max_j][3], maxScore]], axis=0)

            # Append the detected connections to the global list
            valid_pairs.append(valid_pair)
        else:  # No keypoints detected for this pair
            print("No Connection : k = {}".format(k))
            invalid_pairs.append(k)
            valid_pairs.append([])
    return valid_pairs, invalid_pairs


# This function creates a list of keypoints belonging to each person.
# For each detected valid pair, it assigns the joint(s) to a person.
def getPersonwiseKeypoints(valid_pairs, invalid_pairs):
    # 25 keypoint ids per row; the last number in each row is the overall score
    personwiseKeypoints = -1 * np.ones((0, 26))

    for k in range(len(mapIdx)):
        if k not in invalid_pairs:
            partAs = valid_pairs[k][:, 0]
            partBs = valid_pairs[k][:, 1]
            indexA, indexB = np.array(POSE_PAIRS[k])

            for i in range(len(valid_pairs[k])):
                found = 0
                person_idx = -1
                for j in range(len(personwiseKeypoints)):
                    if personwiseKeypoints[j][indexA] == partAs[i]:
                        person_idx = j
                        found = 1
                        break

                if found:
                    personwiseKeypoints[person_idx][indexB] = partBs[i]
                    personwiseKeypoints[person_idx][-1] += keypoints_list[partBs[i].astype(int), 2] + valid_pairs[k][i][2]

                # If partA is not in any subset, create a new subset.
                # As in the original code, the last two pairs (k >= 24) never start a new person.
                elif not found and k < 24:
                    row = -1 * np.ones(26)
                    row[indexA] = partAs[i]
                    row[indexB] = partBs[i]
                    # Add the scores of the two keypoints and the PAF score
                    row[-1] = sum(keypoints_list[valid_pairs[k][i, :2].astype(int), 2]) + valid_pairs[k][i][2]
                    personwiseKeypoints = np.vstack([personwiseKeypoints, row])
    return personwiseKeypoints


frameWidth = image1.shape[1]
frameHeight = image1.shape[0]

t = time.time()
net = cv2.dnn.readNetFromCaffe(protoFile, weightsFile)

# Fix the input height and derive the width from the aspect ratio
inHeight = 360
inWidth = int((inHeight / frameHeight) * frameWidth)

inpBlob = cv2.dnn.blobFromImage(image1, 1.0 / 255, (inWidth, inHeight),
                                (0, 0, 0), swapRB=False, crop=False)
print("Input blob shape:", inpBlob.shape)
net.setInput(inpBlob)
# net.setPreferableBackend(cv2.dnn.DNN_BACKEND_OPENCV)
# net.setPreferableTarget(cv2.dnn.DNN_TARGET_OPENCL)
output = net.forward()
print(output.shape)
print("Time Taken in forward pass = {}".format(time.time() - t))

detected_keypoints = []
keypoints_list = np.zeros((0, 3))
keypoint_id = 0
threshold = 0.1

for part in range(nPoints):
    probMap = output[0, part, :, :]
    probMap = cv2.resize(probMap, (image1.shape[1], image1.shape[0]))
    keypoints = getKeypoints(probMap, threshold)
    print("Keypoints - {} : {}".format(keypointsMapping[part], keypoints))
    keypoints_with_id = []
    for i in range(len(keypoints)):
        # Each entry becomes (x, y, probability, global keypoint id)
        keypoints_with_id.append(keypoints[i] + (keypoint_id,))
        keypoints_list = np.vstack([keypoints_list, keypoints[i]])
        keypoint_id += 1

    detected_keypoints.append(keypoints_with_id)
print("detected_keypoints", detected_keypoints)

frameClone = image1.copy()
for i in range(nPoints):
    for j in range(len(detected_keypoints[i])):
        cv2.circle(frameClone, detected_keypoints[i][j][0:2], 3, colors[i], -1, cv2.LINE_AA)
cv2.imshow("Keypoints", frameClone)

valid_pairs, invalid_pairs = getValidPairs(output)
personwiseKeypoints = getPersonwiseKeypoints(valid_pairs, invalid_pairs)

# Only the first 24 of the 26 pairs are drawn, as in the original code
for i in range(24):
    for n in range(len(personwiseKeypoints)):
        index = personwiseKeypoints[n][np.array(POSE_PAIRS[i])]
        if -1 in index:
            continue
        B = np.int32(keypoints_list[index.astype(int), 0])  # x coordinates
        A = np.int32(keypoints_list[index.astype(int), 1])  # y coordinates
        cv2.line(frameClone, (int(B[0]), int(A[0])), (int(B[1]), int(A[1])), colors[i], 2, cv2.LINE_AA)

cv2.imshow("Detected Pose", frameClone)
cv2.waitKey(0)
```
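As a quick sanity check on the tables above, the sketch below verifies that `mapIdx` covers a contiguous range of PAF channels. It assumes (this is not stated in the post) that the BODY_25 network output holds 25 keypoint heatmaps in channels 0 to 24, one background heatmap in channel 25, and the PAFs after that. The names `N_KEYPOINTS`, `map_idx` and `paf_channels` are illustrative only.

```python
# Assumed BODY_25 layout: 25 keypoint heatmaps, 1 background map, then PAFs.
N_KEYPOINTS = 25

# Copied from the mapIdx table in the listing above.
map_idx = [[26, 27], [40, 41], [48, 49], [42, 43], [44, 45], [50, 51],
           [52, 53], [32, 33], [28, 29], [30, 31], [34, 35], [36, 37],
           [38, 39], [56, 57], [58, 59], [62, 63], [60, 61], [64, 65],
           [46, 47], [54, 55], [66, 67], [68, 69], [70, 71], [72, 73],
           [74, 75], [76, 77]]

# Flatten the (x-channel, y-channel) pairs and sort them.
paf_channels = sorted(c for pair in map_idx for c in pair)

first_paf = N_KEYPOINTS + 1                    # channel right after the background map
total_channels = first_paf + len(paf_channels)

# Every PAF channel should be used exactly once, with no gaps.
is_contiguous = paf_channels == list(range(first_paf, total_channels))
print("PAF channels:", paf_channels[0], "to", paf_channels[-1])
print("contiguous:", is_contiguous, "| expected output channels:", total_channels)
```

If the check holds, `output.shape[1]` printed by the main script should match `total_channels` under the assumed layout.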

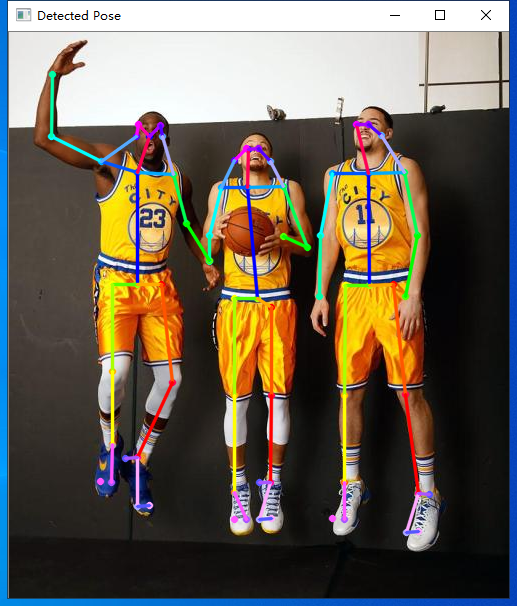

Experimental result
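To make the scoring step inside `getValidPairs` easier to follow, here is a minimal NumPy-only sketch of the same idea on a synthetic part affinity field. The helper name `paf_connection_score` and the toy field are made up for illustration; the thresholds mirror the values used in the listing (`n_interp_samples = 15`, `paf_score_th = 0.1`, `conf_th = 0.7`).

```python
import numpy as np


def paf_connection_score(paf_x, paf_y, pt_a, pt_b,
                         n_samples=15, paf_score_th=0.1, conf_th=0.7):
    """Score a candidate limb pt_a -> pt_b; points are (x, y) pixel coordinates.

    Returns (average_score, is_valid).
    """
    d = np.subtract(pt_b, pt_a).astype(float)
    norm = np.linalg.norm(d)
    if norm == 0:
        return 0.0, False
    d /= norm  # unit vector from A to B

    # Sample points on the segment A -> B and round to pixel indices.
    xs = np.round(np.linspace(pt_a[0], pt_b[0], n_samples)).astype(int)
    ys = np.round(np.linspace(pt_a[1], pt_b[1], n_samples)).astype(int)

    # Dot product of the PAF vector at each sample with the limb direction.
    scores = paf_x[ys, xs] * d[0] + paf_y[ys, xs] * d[1]

    # Valid if enough samples are aligned with the field.
    aligned_fraction = np.count_nonzero(scores > paf_score_th) / n_samples
    return float(scores.mean()), bool(aligned_fraction > conf_th)


# Toy field: unit vectors pointing in +x along row 10, zero elsewhere.
h, w = 20, 40
paf_x = np.zeros((h, w))
paf_y = np.zeros((h, w))
paf_x[10, 5:31] = 1.0

aligned = paf_connection_score(paf_x, paf_y, (5, 10), (30, 10))
off_axis = paf_connection_score(paf_x, paf_y, (5, 10), (30, 2))
print("aligned candidate :", aligned)
print("off-axis candidate:", off_axis)
```

The candidate that runs along the field gets a high average score and is accepted, while the off-axis candidate leaves the field after the first sample and is rejected. In the full script the same test is applied to every pair of candidate joints, and the best-scoring partner is kept.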