Learning packet capture

Packet capture

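1. Capture TCP traffic on port 80 on all interfaces and write it to a file (tcpdump normally has to run as root, e.g. via `sudo`): `tcpdump -i any tcp port 80 -w weimingliu_client_bash.cap`
2. Then install Wireshark and open the capture file in it. Installation guide for Ubuntu: https://linuxhint.com/install_wireshark_ubuntu/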

https://drive.google.com/file/d/1mq6kAILG3d3fL_IigNZABDxmn9Pyb3lC/view?usp=sharing
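`tcpdump -w` writes the classic libpcap file format: a 24-byte global header (magic number, version, timezone, sigfigs, snaplen, link-layer type) followed by a 16-byte record header before each packet. Wireshark is the practical way to read it, but a small stdlib-only sketch makes the structure visible. `read_pcap` and `read_pcap_bytes` are hypothetical helper names, and the sketch assumes a classic pcap file (pcapng is not handled).

```python
import io
import struct
from typing import BinaryIO, List, Tuple

# Magic numbers of the classic libpcap format (microsecond / nanosecond timestamps).
MAGIC_USEC = 0xA1B2C3D4
MAGIC_NSEC = 0xA1B23C4D


def read_pcap(fp: BinaryIO) -> Tuple[int, List[Tuple[float, bytes]]]:
    """Parse a classic pcap stream into (linktype, [(timestamp, raw_packet), ...])."""
    header = fp.read(24)
    if len(header) < 24:
        raise ValueError("too short for a pcap global header")
    # The magic number reveals which byte order the capture was written in.
    for endian in ("<", ">"):
        magic = struct.unpack(endian + "I", header[:4])[0]
        if magic in (MAGIC_USEC, MAGIC_NSEC):
            break
    else:
        raise ValueError("not a classic pcap file (pcapng is not handled)")
    divisor = 1e9 if magic == MAGIC_NSEC else 1e6
    # Remaining fields: version major/minor, thiszone, sigfigs, snaplen, link-layer type.
    *_, linktype = struct.unpack(endian + "HHiIII", header[4:])

    packets = []
    while True:
        record = fp.read(16)  # per-packet record header
        if len(record) < 16:
            break
        ts_sec, ts_frac, incl_len, _orig_len = struct.unpack(endian + "IIII", record)
        packets.append((ts_sec + ts_frac / divisor, fp.read(incl_len)))
    return linktype, packets


def read_pcap_bytes(data: bytes) -> Tuple[int, List[Tuple[float, bytes]]]:
    """Convenience wrapper for a capture that is already in memory."""
    return read_pcap(io.BytesIO(data))
```

Usage: `with open('weimingliu_client_bash.cap', 'rb') as fp: linktype, packets = read_pcap(fp)`. With `-i any` on Linux the link type is typically a "cooked" one (LINUX_SLL = 113, or LINUX_SLL2 = 276 on newer versions), so each packet starts with that pseudo-header rather than an Ethernet header.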

Scraping movies from a website

https://www.nunuyy10.top/dianshiju/35491.html

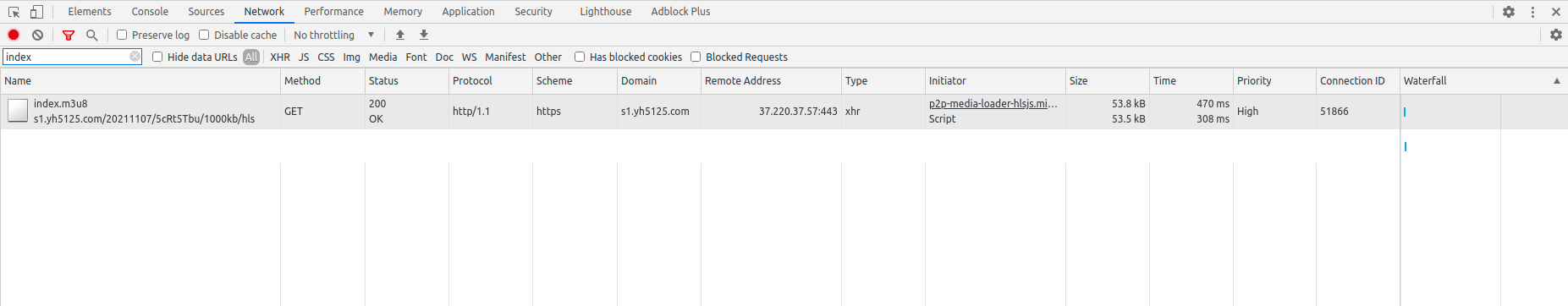

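This site is painfully slow. On closer inspection, it plays video through m3u8 (HLS) on-demand streaming: the player downloads a long series of xxx.ts segment files. So the plan is to download all the segments yourself, concatenate them, and convert the result with ffmpeg.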
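An m3u8 playlist is plain text: lines starting with `#` are tags such as `#EXTINF`, and every other non-empty line is a URI that may be relative to the playlist's own URL. A minimal sketch that lists the absolute segment URLs, using `urllib.parse.urljoin` rather than string concatenation. `segment_urls` is a hypothetical helper; it assumes an unencrypted media playlist (no `#EXT-X-KEY` handling), and if given a master playlist the URIs it returns are variant `.m3u8` playlists rather than `.ts` segments.

```python
from typing import List
from urllib.parse import urljoin


def segment_urls(playlist_url: str, playlist_text: str) -> List[str]:
    """Return the absolute URLs of the URIs listed in an m3u8 playlist, in order."""
    urls = []
    for line in playlist_text.splitlines():
        line = line.strip()
        # Skip blank lines and tags/comments (#EXTM3U, #EXTINF:..., #EXT-X-ENDLIST, ...).
        if not line or line.startswith("#"):
            continue
        # Handles absolute paths ("/2021/.../a.ts") and relative names ("a.ts") alike.
        urls.append(urljoin(playlist_url, line))
    return urls
```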

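Concatenate the segments in numeric order (`sort -n` only yields playback order if each file name starts with its segment number, e.g. `1_xxx.ts`), then remux into MP4 without re-encoding. The `aac_adtstoasc` bitstream filter converts the AAC audio from the ADTS framing used in MPEG-TS to the form MP4 expects.

```shell
ls | grep '\.ts$' | grep -v '^all\.ts$' | sort -n | xargs cat > all.ts
ffmpeg -i all.ts -bsf:a aac_adtstoasc -acodec copy -vcodec copy all.mp4
```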
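The shell pipeline depends on `sort -n` reading the leading number of each file name. A pure-Python sketch of the same concatenation, assuming segments are named `<index>_<name>.ts` as the download scripts produce; `numbered_segments` and `concat_segments` are hypothetical helpers. Plain byte concatenation is the same approach the post uses for MPEG-TS segments.

```python
import re
import shutil
from pathlib import Path
from typing import List

# Assumed naming scheme: "<index>_<original name>.ts".
SEGMENT_RE = re.compile(r"^(\d+)_.*\.ts$")


def numbered_segments(folder: Path) -> List[Path]:
    """Return the numbered .ts segments in folder, ordered by their numeric prefix."""
    found = []
    for p in folder.iterdir():
        m = SEGMENT_RE.match(p.name)
        if m and p.is_file():
            found.append((int(m.group(1)), p))
    found.sort(key=lambda pair: pair[0])  # numeric order: 2 comes before 10
    return [p for _, p in found]


def concat_segments(folder: Path, out_name: str = "all.ts") -> Path:
    """Byte-concatenate the numbered segments into a single MPEG-TS file."""
    segments = numbered_segments(folder)  # the output name has no numeric prefix, so it is never an input
    out = folder / out_name
    with out.open("wb") as dst:  # "wb" truncates, so re-running does not append twice
        for seg in segments:
            with seg.open("rb") as src:
                shutil.copyfileobj(src, dst)
    return out
```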

The first download script. `total` holds one `index.m3u8` playlist per episode; for each playlist it fetches the segment list, downloads every segment concurrently on a thread pool, and saves each one as `<segment number>_<original name>` in a directory named after the episode index:

```python
import functools
import os
import shutil
import time
from concurrent.futures import ThreadPoolExecutor

import requests


def log_time(func):
    """Print how long each call to func took, passing its return value through."""
    @functools.wraps(func)
    def wrapper(*args, **kw):
        begin_time = time.time()
        ret = func(*args, **kw)
        print('{} total cost time = {}'.format(func.__name__, time.time() - begin_time))
        return ret
    return wrapper


base_url = 'https://s1.yh5125.com'
# e.g. a segment URL: 'https://s1.yh5125.com//20211107/5cRt5Tbu/1000kb/hls/pOn9e9Ab.ts'
total = [
    'https://s1.yh5125.com/20211107/ENZ8K1Zo/1000kb/hls/index.m3u8',
    'https://s1.yh5125.com/20211107/kC0T2DEu/1000kb/hls/index.m3u8',
    'https://s1.yh5125.com/20211107/fGAKN7Mw/1000kb/hls/index.m3u8',
    'https://s1.yh5125.com/20211107/OuCTDOUA//1000kb/hls/index.m3u8',
    'https://s1.yh5125.com/20211107/FEfKtKmq/1000kb/hls/index.m3u8',
    'https://s1.yh5125.com/20211107/ekIwEE0J/1000kb/hls/index.m3u8',
    'https://s1.yh5125.com/20211107/p6AmYyl2/1000kb/hls/index.m3u8',
    'https://s1.yh5125.com/20211107/6JGmKUpl/1000kb/hls/index.m3u8',
    'https://s1.yh5125.com/20211107/4Y8rYM3E/1000kb/hls/index.m3u8',
    'https://s1.yh5125.com/20211107/5Sic3mHR/1000kb/hls/index.m3u8',
    'https://s1.yh5125.com/20211107/xqK9rX6v/1000kb/hls/index.m3u8',
    'https://s1.yh5125.com/20211107/VYrG9Lvm/1000kb/hls/index.m3u8',
    'https://s1.yh5125.com/20211107/LssuBvgC/1000kb/hls/index.m3u8',
    'https://s1.yh5125.com/20211107/XZwtp4Ce/1000kb/hls/index.m3u8',
    'https://s1.yh5125.com/20211107/NUfNoQeE/1000kb/hls/index.m3u8',
    'https://s1.yh5125.com/20211107/5cRt5Tbu/1000kb/hls/index.m3u8',
]
max_workers = 30
worker_pool = ThreadPoolExecutor(max_workers=max_workers)
headers = {
    'sec-ch-ua': '" Not A;Brand";v="99", "Chromium";v="90"',
    'Referer': '',
    'sec-ch-ua-mobile': '?0',
    'User-Agent': 'Mozilla/5.0 (X11; Linux x86_64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/90.0.4430.72 Safari/537.36',
}


@log_time
def catch_it(idx, url):
    # One fresh directory per playlist, named after its index in `total`.
    path = '{}/{}'.format(os.path.abspath('.'), idx)
    shutil.rmtree(path, ignore_errors=True)
    os.makedirs(path)
    index = requests.get(url).text.split('\n')
    job_list = []
    item_idx = 0
    for item in index:
        item = item.strip()
        # Segment lines look like /20211107/.../hls/xxx.ts
        if 'hls' in item and '.ts' in item:
            item_idx += 1

            @log_time
            def real_do(item_idx, item):
                # Prefix the playlist position so `sort -n` restores playback order.
                name = '{}_{}'.format(item_idx, item[item.rfind('/') + 1:])
                content = requests.get('{}/{}'.format(base_url, item.lstrip('/')), headers=headers).content
                with open('{}/{}'.format(path, name), 'wb') as fp:
                    fp.write(content)

            job_list.append(worker_pool.submit(real_do, item_idx, item))
    # Wait for every segment; result() re-raises any exception from a worker.
    for job in job_list:
        job.result()


@log_time
def main():
    start_from = 0  # raise this to resume an interrupted run
    for idx in range(start_from, len(total)):
        print('now we are doing: {}'.format(idx))
        catch_it(idx, total[idx])


if __name__ == '__main__':
    main()
```
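The download scripts prefix each file with its position in the playlist, which is what lets `sort -n` restore playback order even though the thread pool finishes segments in arbitrary order. A self-contained sketch of that idea with the HTTP call injected as a `fetch` callable, so it can be exercised without the network; `download_segments` and `fetch` are hypothetical names, not part of the post's scripts.

```python
import os
from concurrent.futures import ThreadPoolExecutor
from typing import Callable, List


def download_segments(urls: List[str], out_dir: str,
                      fetch: Callable[[str], bytes], max_workers: int = 30) -> List[str]:
    """Download urls concurrently into out_dir; return the written paths in playlist order."""
    os.makedirs(out_dir, exist_ok=True)

    def job(idx: int, url: str) -> str:
        # "<1-based playlist index>_<last path component>", like the scripts' naming.
        path = os.path.join(out_dir, "{}_{}".format(idx, url.rsplit("/", 1)[-1]))
        data = fetch(url)  # any exception surfaces later via future.result()
        with open(path, "wb") as fp:
            fp.write(data)
        return path

    with ThreadPoolExecutor(max_workers=max_workers) as pool:
        futures = [pool.submit(job, i, u) for i, u in enumerate(urls, start=1)]
        # Collecting results in submission order keeps the playlist order.
        return [f.result() for f in futures]
```

Against the real site, `fetch` could be something like `lambda u: requests.get(u, headers=headers, timeout=100).content`, reusing the `headers` dict above. Using a `with` block per call shuts the pool down after each playlist, where the scripts instead keep one module-level pool for the whole run.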

A later version for another site, adding a `retry` decorator and request timeouts:

```python
import functools
import logging
import os
import shutil
import time
from concurrent.futures import ThreadPoolExecutor

import requests


def log_time(func):
    """Print how long each call to func took, passing its return value through."""
    @functools.wraps(func)
    def wrapper(*args, **kw):
        begin_time = time.time()
        ret = func(*args, **kw)
        print('{} total cost time = {}'.format(func.__name__, time.time() - begin_time))
        return ret
    return wrapper


def retry(retry_count=2, exceptions=(Exception,), sleep_time=None, ignore_error=False, func_name=None):
    """Retry the wrapped function up to retry_count extra times on the given exceptions."""
    def wrapper(func):
        @functools.wraps(func)
        def inner(*args, **kw):
            for cnt in range(retry_count + 1):
                try:
                    return func(*args, **kw)
                except exceptions:
                    method = func_name if func_name else func.__name__
                    if cnt == retry_count:
                        if ignore_error:
                            logging.exception('[notice] exceed retry count for doing {}'.format(method))
                            return None
                        logging.exception('[critical] exceed retry count for doing {}'.format(method))
                        raise
                    logging.exception(
                        'retry {} for {}/{} count, sleep_time: {} s'.format(method, cnt + 1, retry_count, sleep_time)
                    )
                    if sleep_time is not None:
                        time.sleep(sleep_time)
        return inner
    return wrapper


# base_url = 'http://vip5.bobolj.com'  # previous mirror
base_url = 'http://lajiao-bo.com'
total = [
    'https://lajiao-bo.com/20190525/d3riCcsu/800kb/hls/index.m3u8',
]
max_workers = 300
worker_pool = ThreadPoolExecutor(max_workers=max_workers)
headers = {
    'sec-ch-ua': '" Not A;Brand";v="99", "Chromium";v="90"',
    'sec-ch-ua-mobile': '?0',
    'User-Agent': 'Mozilla/5.0 (X11; Linux x86_64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/90.0.4430.72 Safari/537.36',
}


@log_time
def catch_it(idx, url):
    path = '{}/{}'.format(os.path.abspath('.'), idx)
    shutil.rmtree(path, ignore_errors=True)
    os.makedirs(path)

    @log_time
    @retry(retry_count=10, ignore_error=False, sleep_time=2)
    def get_index():
        resp = requests.get(url, timeout=100)
        resp.raise_for_status()  # turn HTTP errors into exceptions so retry kicks in
        return resp.text.split('\n')

    index = get_index()
    job_list = []
    item_idx = 0
    for item in index:
        item = item.strip()
        if 'hls' in item and '.ts' in item:
            item_idx += 1

            @log_time
            @retry(ignore_error=True, sleep_time=2)
            def real_do(item_idx, item):
                name = '{}_{}'.format(item_idx, item[item.rfind('/') + 1:])
                resp = requests.get('{}/{}'.format(base_url, item.lstrip('/')), headers=headers, timeout=100)
                resp.raise_for_status()  # don't save an error page as a .ts segment
                with open('{}/{}'.format(path, name), 'wb') as fp:
                    fp.write(resp.content)

            job_list.append(worker_pool.submit(real_do, item_idx, item))
    for job in job_list:
        job.result()


@log_time
def main():
    start_from = 0  # raise to resume an interrupted run; must be below len(total)
    for idx in range(start_from, min(600, len(total))):  # at most indices up to 599 per run
        print('now we are doing: {}'.format(idx))
        catch_it(idx, total[idx])


if __name__ == '__main__':
    main()
```
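The `retry` decorator above waits a fixed `sleep_time` between attempts. A common alternative, swapped in here and not what the post uses, is exponential backoff: double the wait after each failure up to a cap, which puts less pressure on a struggling server. `retry_backoff` is a hypothetical name, and the injectable `sleep` parameter exists only so the behaviour can be tested without real waiting.

```python
import functools
import logging
import time
from typing import Callable, Tuple, Type


def retry_backoff(retries: int = 3, base_delay: float = 1.0, max_delay: float = 30.0,
                  exceptions: Tuple[Type[BaseException], ...] = (Exception,),
                  sleep: Callable[[float], None] = time.sleep):
    """Retry the wrapped call up to `retries` extra times, doubling the wait each time."""
    def decorator(func):
        @functools.wraps(func)
        def inner(*args, **kwargs):
            delay = base_delay
            for attempt in range(retries + 1):
                try:
                    return func(*args, **kwargs)
                except exceptions:
                    if attempt == retries:
                        raise  # out of attempts: surface the last error
                    logging.warning("retry %s (%d/%d) in %.1fs",
                                    func.__name__, attempt + 1, retries, delay)
                    sleep(delay)
                    delay = min(delay * 2, max_delay)
        return inner
    return decorator
```

For example, `@retry_backoff(retries=5, base_delay=2)` on the segment download would wait 2, 4, 8, 16, 30 seconds between attempts.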

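Finally, a batch script that, for each download directory under `/home/weimingliu/audio`, concatenates the segments into `all.ts`, remuxes it to `all.mp4`, and deletes everything else:

```python
import functools
import logging
import os
import subprocess
import time


def retry(retry_count=2, exceptions=(Exception,), sleep_time=None, ignore_error=False, func_name=None):
    """Retry the wrapped function up to retry_count extra times on the given exceptions."""
    def wrapper(func):
        @functools.wraps(func)
        def inner(*args, **kw):
            for cnt in range(retry_count + 1):
                try:
                    return func(*args, **kw)
                except exceptions:
                    method = func_name if func_name else func.__name__
                    if cnt == retry_count:
                        if ignore_error:
                            logging.exception('[notice] exceed retry count for doing {}'.format(method))
                            return None
                        logging.exception('[critical] exceed retry count for doing {}'.format(method))
                        raise
                    logging.exception(
                        'retry {} for {}/{} count, sleep_time: {} s'.format(method, cnt + 1, retry_count, sleep_time)
                    )
                    if sleep_time is not None:
                        time.sleep(sleep_time)
        return inner
    return wrapper


@retry(ignore_error=True, retry_count=0)
def do(cwd):
    # check=True raises on failure, so a failed step is logged and later steps are skipped.
    # 1. Concatenate the numbered segments in order; all.ts itself is excluded.
    subprocess.run(r'ls | grep -i "\.ts$" | grep -v "^all\.ts$" | sort -n | xargs cat > all.ts',
                   shell=True, cwd=cwd, check=True)
    # 2. Remux to MP4 without re-encoding.
    subprocess.run('ffmpeg -y -i all.ts -bsf:a aac_adtstoasc -acodec copy -vcodec copy all.mp4',
                   shell=True, cwd=cwd, check=True)
    # 3. Only reached if ffmpeg succeeded: delete everything except all.mp4.
    subprocess.run('ls | grep -v "all.mp4" | xargs rm -f', shell=True, cwd=cwd, check=True)


def main():
    path = '/home/weimingliu/audio'
    for item in os.listdir(path):  # list `path`, not the current directory
        cwd = '{}/{}'.format(path, item)
        if not os.path.isdir(cwd):
            continue
        print(cwd)
        do(cwd)


if __name__ == '__main__':
    main()
```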
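A sketch of the same merge-then-clean-up step without `shell=True`: ffmpeg gets an argv list (so file names need no quoting), and `check=True` guarantees the segments are deleted only after ffmpeg reports success. `ffmpeg_remux_cmd` and `merge_and_clean` are hypothetical helpers; the sketch assumes `all.ts` already exists in `cwd`, and the `run` parameter is there only so the logic can be tested without ffmpeg installed.

```python
import os
import subprocess
from typing import Callable, List, Sequence


def ffmpeg_remux_cmd(src: str, dst: str) -> List[str]:
    """ffmpeg argv that remuxes MPEG-TS to MP4 without re-encoding."""
    return ["ffmpeg", "-y", "-i", src, "-bsf:a", "aac_adtstoasc",
            "-acodec", "copy", "-vcodec", "copy", dst]


def merge_and_clean(cwd: str, segments: Sequence[str],
                    run: Callable[..., object] = subprocess.run) -> None:
    """Remux all.ts -> all.mp4 in cwd, then delete the inputs only on success."""
    # With check=True a non-zero exit raises CalledProcessError, so the
    # deletions below are skipped and the segments stay on disk for a retry.
    run(ffmpeg_remux_cmd("all.ts", "all.mp4"), cwd=cwd, check=True)
    for name in list(segments) + ["all.ts"]:
        os.remove(os.path.join(cwd, name))
```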

Having chosen the distant road, press on through wind and rain~

posted on 2021-03-16 18:15 stupid_one