Shipping Filebeat Logs Across the Public Internet

💮 Background

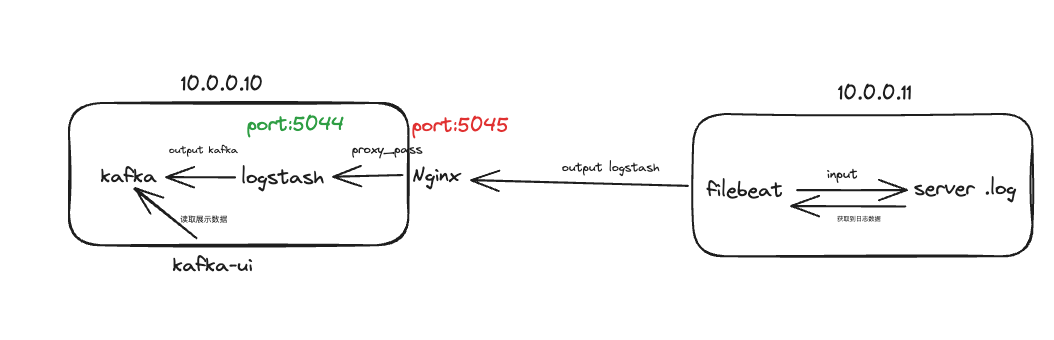

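My company is a data startup. For business and cost reasons, its servers are spread across IDCs in several regions and across several cloud providers. Servers within each environment reach one another over the internal network; traffic that crosses the public internet is forwarded through an Nginx proxy.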

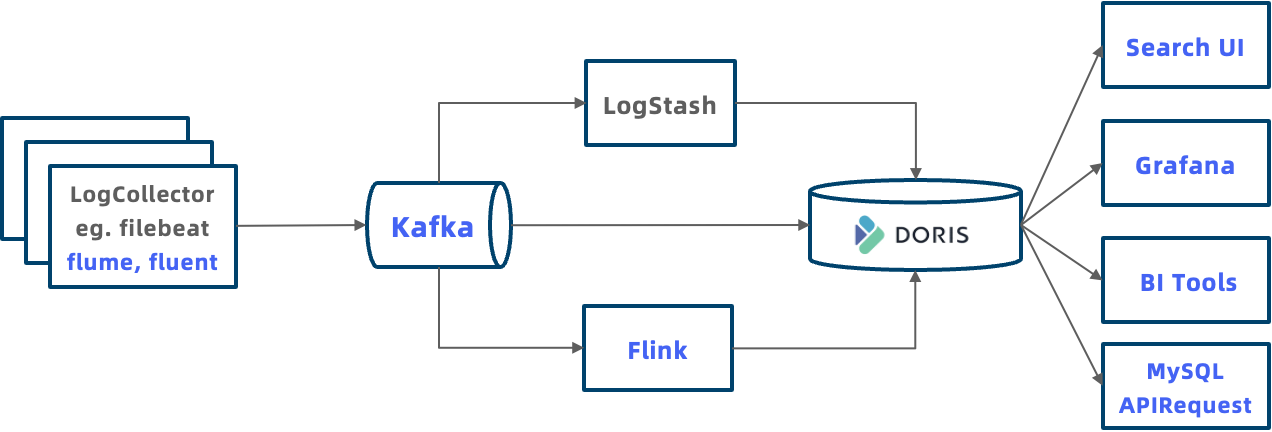

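In this environment, we need to build a logging system on top of Doris 2.x.

🌘 Goal

Find a secure and reliable way to ship the log data collected by Filebeat across the public internet.

🌖 Test environment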

Logstash (receiver)

- IP address: 10.0.0.10

- OS: Ubuntu 20.04.4 LTS

- Logstash: 8.10.4

- nginx: nginx/1.20.2 (built from source on Ubuntu with --with-stream)

- Kafka: 2.13-3.5.1

- kafka-ui: provectuslabs/kafka-ui:latest

Filebeat (collector)

- IP address: 10.0.0.11

- OS: Ubuntu 20.04.4 LTS

- Filebeat: filebeat version 8.10.4 (arm64), libbeat 8.10.4 [10b198c985eb95c16405b979c63847881a199aba built 2023-10-11 19:23:16 +0000 UTC]

- server.log: Java application log

References:

- 🍉 The Logstash output supports load balancing and SOCKS5 proxies: Configure the Logstash output | Filebeat Reference [8.10] | Elastic (elastic.co)

- 🐃 https://www.elastic.co/guide/en/beats/filebeat/current/configuring-ssl-logstash.html#configuring-ssl-logstash

- 🏋️♂️ https://www.elastic.co/guide/en/beats/filebeat/current/http-endpoint.html

- 🐋 elasticsearch - Filebeat over HTTPS - Stack Overflow (stackoverflow.com)

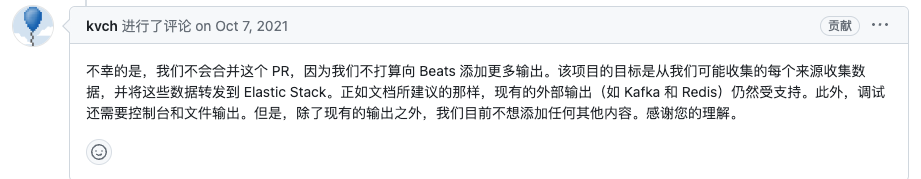

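- 🐳 A misleading, low-quality article: [filebeat http output - Juejin (juejin.cn)](https://juejin.cn/s/filebeat http output). Following it produces this error:

Exiting: error initializing publisher: output type http undefined

- 👒 How to make Filebeat support an HTTP output plugin - CSDN blog (I could not get it to work)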

- 🤼♀️ TCP/TLS Output for Beats · Issue #33107 · elastic/beats (github.com)

🚶 Environment setup

🚶♀️ Logstash (receiver)

Switch to an APT mirror in mainland China

sudo cp /etc/apt/sources.list /etc/apt/sources.list_backup

sudo vim /etc/apt/sources.list

Delete the existing content and add the following:

# Source repositories are commented out by default; uncomment them if needed

deb https://mirrors.ustc.edu.cn/ubuntu-ports/ focal main restricted universe multiverse

# deb-src https://mirrors.ustc.edu.cn/ubuntu-ports/ focal main restricted universe multiverse

deb https://mirrors.ustc.edu.cn/ubuntu-ports/ focal-updates main restricted universe multiverse

# deb-src https://mirrors.ustc.edu.cn/ubuntu-ports/ focal-updates main restricted universe multiverse

deb https://mirrors.ustc.edu.cn/ubuntu-ports/ focal-backports main restricted universe multiverse

# deb-src https://mirrors.ustc.edu.cn/ubuntu-ports/ focal-backports main restricted universe multiverse

deb https://mirrors.ustc.edu.cn/ubuntu-ports/ focal-security main restricted universe multiverse

sudo apt-get update

sudo apt-get upgrade

Install the Java JDK

apt update --fix-missing

apt list --upgradable

apt install -y openjdk-8-jdk

java -version

vim /etc/profile

export JAVA_HOME=/usr/lib/jvm/java-1.8.0-openjdk-arm64

export JRE_HOME=${JAVA_HOME}/jre

export CLASSPATH=.:${JAVA_HOME}/lib:${JRE_HOME}/lib

export PATH=${JAVA_HOME}/bin:$PATH

source /etc/profile

echo $JAVA_HOME

echo $JRE_HOME

echo $CLASSPATH

echo $PATH

Set the time zone and time synchronization

# Use the 24-hour time format

echo 'LC_TIME=en_DK.UTF-8' >> /etc/default/locale

# Set the time zone

timedatectl set-timezone Asia/Shanghai

# Synchronize the clock

apt install -y ntpdate

/usr/sbin/ntpdate ntp1.aliyun.com

crontab -l > crontab_conf ; echo "* * * * * /usr/sbin/ntpdate ntp1.aliyun.com >/dev/null 2>&1" >> crontab_conf && crontab crontab_conf && rm -f crontab_conf

Disable swap

swapoff -a

sed -ri 's/.*swap.*/#&/' /etc/fstab

free -h

Set kernel parameters and resource limits

vim /etc/sysctl.conf

vm.max_map_count=2000000

sysctl -p

sysctl -w vm.max_map_count=2000000

ulimit -n 65536

sudo tee -a /etc/security/limits.conf << EOF

* hard nofile 65536

* soft nofile 65536

root hard nofile 65536

root soft nofile 65536

* soft nproc 65536

* hard nproc 65536

root soft nproc 65536

root hard nproc 65536

* soft core 65536

* hard core 65536

root soft core 65536

root hard core 65536

EOF

Install Kafka

wget http://mirrors.aliyun.com/apache/kafka/3.5.1/kafka_2.13-3.5.1.tgz

tar zxvf kafka_2.13-3.5.1.tgz

cd kafka_2.13-3.5.1

sudo bin/zookeeper-server-start.sh -daemon config/zookeeper.properties

sudo bin/kafka-server-start.sh -daemon config/server.properties

Install kafka-ui

(run directly as a container)

mkdir /root/kafka_ui/

cd /root/kafka_ui/

vim config.yml

kafka:
  clusters:
    - name: kafka
      bootstrapServers: 127.0.0.1:9092
      metrics:
        port: 9094
        type: JMX
    - name: OTHER_KAFKA_CLUSTER_NAME
      bootstrapServers: 127.0.0.1:9092
      metrics:
        port: 9094
        type: JMX
spring:
  jmx:
    enabled: true
  security:
    user:
      name: admin
      password: admin
auth:
  type: DISABLED #LOGIN_FORM # DISABLED
server:
  port: 8080
logging:
  level:
    root: INFO
    com.provectus: INFO
    reactor.netty.http.server.AccessLog: INFO
management:
  endpoint:
    info:
      enabled: true
    health:
      enabled: true
  endpoints:
    web:
      exposure:
        include: "info,health"

# Install docker and docker-compose

apt install -y docker.io docker-compose

# Create docker-compose.yml

cat docker-compose.yml

version: '3'
services:
  kafka-ui:
    network_mode: host
    container_name: kafka-ui
    image: provectuslabs/kafka-ui:latest
    ports:
      - 8080:8080
    environment:
      DYNAMIC_CONFIG_ENABLED: 'true'
    volumes:
      - ./config.yml:/etc/kafkaui/dynamic_config.yaml

# Start the container

docker-compose up -d

Install Logstash

Option 1:

wget -qO - https://artifacts.elastic.co/GPG-KEY-elasticsearch | sudo apt-key add -

sudo apt install apt-transport-https -y

echo "deb https://artifacts.elastic.co/packages/8.x/apt stable main" | sudo tee /etc/apt/sources.list.d/elastic-8.x.list

apt update -y

apt install -y logstash lrzsz net-tools

Install nginx

apt install libpcre3 libpcre3-dev gcc zlib1g zlib1g-dev libssl-dev make -y

wget http://nginx.org/download/nginx-1.20.2.tar.gz

useradd nginx -M -s /sbin/nologin

tar zxvf nginx-1.20.2.tar.gz

cd nginx-1.20.2

./configure --prefix=/etc/nginx/ --sbin-path=/usr/local/bin/ --user=nginx --group=nginx --with-http_ssl_module --with-http_stub_status_module --with-stream --conf-path=/etc/nginx/nginx.conf --error-log-path=/var/log/nginx/error.log --http-log-path=/var/log/nginx/access.log --pid-path=/var/run/nginx.pid

make && make install

cat >/etc/systemd/system/nginx.service<<'EOF'

[Unit]

Description=The NGINX HTTP and reverse proxy server

After=network.target remote-fs.target nss-lookup.target

[Service]

Type=forking

PIDFile=/var/run/nginx.pid

ExecStartPre=/usr/local/bin/nginx -t

ExecStart=/usr/local/bin/nginx

ExecReload=/usr/local/bin/nginx -s reload

ExecStop=/bin/kill -s QUIT $MAINPID

PrivateTmp=true

[Install]

WantedBy=multi-user.target

EOF

systemctl daemon-reload

systemctl start nginx.service

systemctl enable nginx.service

☸️ Filebeat (collector)

Switch to an APT mirror in mainland China

sudo cp /etc/apt/sources.list sources_backup.list

sudo vim /etc/apt/sources.list

Delete the existing content and add the following:

# Source repositories are commented out by default; uncomment them if needed

deb https://mirrors.ustc.edu.cn/ubuntu-ports/ focal main restricted universe multiverse

# deb-src https://mirrors.ustc.edu.cn/ubuntu-ports/ focal main restricted universe multiverse

deb https://mirrors.ustc.edu.cn/ubuntu-ports/ focal-updates main restricted universe multiverse

# deb-src https://mirrors.ustc.edu.cn/ubuntu-ports/ focal-updates main restricted universe multiverse

deb https://mirrors.ustc.edu.cn/ubuntu-ports/ focal-backports main restricted universe multiverse

# deb-src https://mirrors.ustc.edu.cn/ubuntu-ports/ focal-backports main restricted universe multiverse

deb https://mirrors.ustc.edu.cn/ubuntu-ports/ focal-security main restricted universe multiverse

sudo apt-get update

sudo apt-get upgrade

Install Filebeat

Option 1:

wget -qO - https://artifacts.elastic.co/GPG-KEY-elasticsearch | sudo apt-key add -

sudo apt install apt-transport-https -y

echo "deb https://artifacts.elastic.co/packages/8.x/apt stable main" | sudo tee /etc/apt/sources.list.d/elastic-8.x.list

apt update -y

apt install -y filebeat lrzsz net-tools

Upload server.log

# Uploaded with lrzsz; I placed it under /var/log/

🍷 Experiment 1: filebeat output tcp/udp logstash

🥀 Filebeat (collector)

Edit the Filebeat configuration file

# server.log

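filebeat.inputs:
- type: log
  enabled: true
  id: "javalog"
  paths:
    - /var/log/server.log
  processors:
    - script:
        lang: javascript
        id: javalog
        source: >
          function process(event) {
            var logLine = event.Get("message");
            /* Leading timestamp in the form "yyyy-MM-dd HH:mm:ss.SSS" */
            var regex = /^\d{4}-\d{2}-\d{2} \d{2}:\d{2}:\d{2}\.\d{3}/;
            /* match is null when the line has no leading timestamp */
            var match = logLine.match(regex);
            if (match) {
              event.Put("time", match[0]);
              /* Skip the 23-character timestamp and the space after it */
              event.Put("newMessage", logLine.substring(24));
            }
          }
  fields_under_root: true
  # A new event starts at a line that begins with a full timestamp;
  # all other lines (for example a Java stack trace) are appended to the previous event.
  multiline.pattern: '^[0-9]{4}-[0-9]{2}-[0-9]{2} [0-9]{2}:[0-9]{2}:[0-9]{2}\.[0-9]{3}'
  multiline.negate: true
  multiline.match: "after"
output.logstash:
  hosts: ["10.0.0.10:5045"]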
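The combined effect of the multiline settings and the script processor can be checked outside Filebeat. The sketch below is a plain Node.js emulation, not Filebeat code: `groupLines`, `makeEvent` and the sample log lines are hypothetical, it assumes every new log entry starts with a `yyyy-MM-dd HH:mm:ss.SSS` timestamp, and `process` is a null-safe variant of the processor that leaves lines without a leading timestamp untouched.

```javascript
// Assumed start-of-event pattern: "yyyy-MM-dd HH:mm:ss.SSS" at the beginning of a line.
var START = /^[0-9]{4}-[0-9]{2}-[0-9]{2} [0-9]{2}:[0-9]{2}:[0-9]{2}\.[0-9]{3}/;

// Emulates multiline.negate: true with multiline.match: after:
// lines that do NOT match START are appended to the preceding event.
function groupLines(lines) {
  var events = [];
  lines.forEach(function (line) {
    if (START.test(line) || events.length === 0) {
      events.push(line);
    } else {
      events[events.length - 1] += "\n" + line;
    }
  });
  return events;
}

// Hypothetical stand-in for the Filebeat event object; only Get and Put are mocked.
function makeEvent(message) {
  var fields = { message: message };
  return {
    Get: function (key) { return fields[key]; },
    Put: function (key, value) { fields[key] = value; },
    fields: fields
  };
}

// Null-safe variant of the processor: events without a leading timestamp are left as they are.
function process(event) {
  var logLine = event.Get("message");
  var match = START.exec(logLine);
  if (match) {
    event.Put("time", match[0]);
    // Drop the timestamp and the single space that follows it.
    event.Put("newMessage", logLine.substring(match[0].length + 1));
  }
}

// Sample lines invented for illustration.
var lines = [
  "2023-11-02 07:15:49.123 ERROR Request failed",
  "java.lang.IllegalStateException: boom",
  "\tat com.example.Main.run(Main.java:42)",
  "2023-11-02 07:15:50.456 INFO  Recovered"
];

var events = groupLines(lines).map(function (message) {
  var event = makeEvent(message);
  process(event);
  return event.fields;
});

console.log(events.length);        // 2
console.log(events[0].time);       // 2023-11-02 07:15:49.123
console.log(events[1].newMessage); // INFO  Recovered
```

The stack trace lines end up inside the first event's `message`, which is what the multiline settings are meant to achieve for Java logs.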

👩🎨 Logstash (receiver)

Edit the Logstash pipeline file /etc/logstash/conf.d/logstash-sample.conf

# cat /etc/logstash/conf.d/logstash-sample.conf

# Sample Logstash configuration for creating a simple
# Beats -> Logstash -> Kafka pipeline.
input {
  beats {
    port => 5044
  }
}
output {
  kafka {
    bootstrap_servers => "localhost:9092"
    topic_id => "test"
    #compression_codec => "snappy" # string (optional), one of ["none", "gzip", "snappy"], default: "none"
  }
}

Start Logstash

systemctl start logstash

Edit the Nginx configuration (TCP)

(The ngx_stream_core_module module is available since version 1.9.0. It is not built by default and must be enabled with the --with-stream configure option.)

stream {
    upstream logstash {
        server 127.0.0.1:5044 max_fails=3 fail_timeout=30s;
    }
    server {
        listen 5045;
        proxy_connect_timeout 1s;
        proxy_timeout 3s;
        proxy_pass logstash;
    }
}

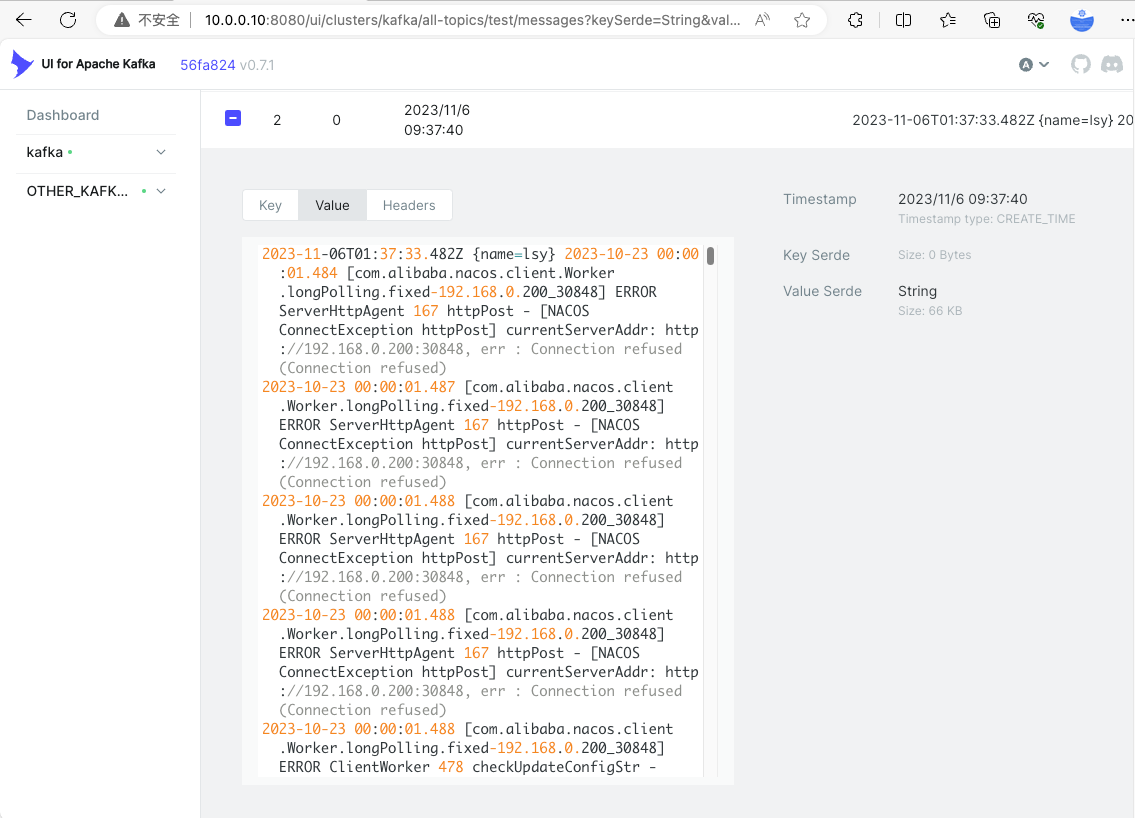

👩🍳 TCP result

👩⚕️ Conclusion

Filebeat can ship log data across the public internet by sending its output to a TCP port proxied by Nginx.
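Before pointing Filebeat at the proxied port, it can help to confirm from the collector that the port is reachable. The following is a minimal Node.js sketch and not part of the original setup: `checkTcp` and the 3-second timeout are hypothetical choices, while the host and port in the usage line are the ones used in this experiment. A successful connect only shows that Nginx is listening on 5045; it does not prove that Logstash behind it is healthy.

```javascript
const net = require("net");

// Resolves to true if a TCP connection to host:port opens within timeoutMs, false otherwise.
function checkTcp(host, port, timeoutMs) {
  return new Promise(function (resolve) {
    const socket = net.connect({ host: host, port: port });
    let settled = false;

    // Settle exactly once and always release the socket.
    function finish(result) {
      if (settled) return;
      settled = true;
      socket.destroy();
      resolve(result);
    }

    socket.setTimeout(timeoutMs, function () { finish(false); });
    socket.once("connect", function () { finish(true); });
    socket.once("error", function () { finish(false); });
  });
}

// Usage: node check-tcp.js 10.0.0.10 5045
if (process.argv.length >= 4) {
  checkTcp(process.argv[2], Number(process.argv[3]), 3000).then(function (open) {
    console.log(open ? "port is reachable" : "port is NOT reachable");
    process.exitCode = open ? 0 : 1;
  });
}
```

Run it on the Filebeat host; a non-zero exit code means the TCP handshake with the proxy did not complete, which usually points at a firewall or at Nginx not listening.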

Edit the Nginx configuration (UDP)

(The ngx_stream_core_module module is available since version 1.9.0. It is not built by default and must be enabled with the --with-stream configure option.)

stream {
    upstream logstash {
        server 127.0.0.1:5044 max_fails=3 fail_timeout=30s;
    }
    server {
        listen 5045 udp reuseport;
        proxy_timeout 3s;
        proxy_pass logstash;
    }
}

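👰♀️ UDP result

Filebeat log (message excerpts)

Failed to publish events caused by: write tcp 10.0.0.11:46146->10.0.0.10:5045: write: broken pipe

Attempting to reconnect to backoff(async(tcp://10.0.0.10:5045)) with 4 reconnect attempt(s)

😟 Conclusion

Filebeat's Logstash output sends log data over TCP, so pointing it at a UDP listener produces the errors above. Log data should not be sent over UDP in the first place, since the protocol can lose data, so I did not investigate further how to make Filebeat support UDP.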

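🤸♀️ Experiment 2: filebeat output http filebeat

The Filebeat output plugin failed to compile, so this experiment could not proceed; see Experiment 4 for details.

👩🚀 Experiment 3: filebeat output HTTPS logstash

The Filebeat output plugin failed to compile, so this experiment could not proceed; see Experiment 4 for details.

🤷♀️ Experiment 4: filebeat output filebeat

Build Filebeat from source

# Install the Go toolchain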

wget https://golang.google.cn/dl/go1.21.3.linux-arm64.tar.gz

tar zxvf go1.21.3.linux-arm64.tar.gz -C /usr/local

# Configure GOROOT, GOPATH and PATH

vim /etc/profile

export GOROOT=/usr/local/go

export GOPATH=/root/go

export PATH=$GOPATH/bin:$GOROOT/bin:$PATH

source /etc/profile

# Check the installed version

go version

go version go1.21.3 linux/arm64

# Clone the source

mkdir -p ${GOPATH}/src/github.com/elastic

#git clone https://gitclone.com/github.com/elastic/beats.git ${GOPATH}/src/github.com/elastic/beats

git clone https://github.com/elastic/beats.git ${GOPATH}/src/github.com/elastic/beats

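# Switch to tag v7.17.9

git -C ${GOPATH}/src/github.com/elastic/beats checkout v7.17.9

# Alternative: clone the tag directly instead of the clone above (the target directory must not exist yet; the second command uses the gitclone.com mirror)

#git clone -b v7.17.9 https://github.com/elastic/beats.git ${GOPATH}/src/github.com/elastic/beats

#git clone -b v7.17.9 https://gitclone.com/github.com/elastic/beats.git ${GOPATH}/src/github.com/elastic/beats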

cd /root/go/src/github.com/elastic/beats

apt install -y make

# Use the Aliyun Go module proxy

go env -w GOPROXY=https://mirrors.aliyun.com/goproxy/,direct

go env | grep GOPROXY

# Mage is a make/rake-like build tool written in Go

# https://github.com/magefile/mage.git

# github.com/raboof/beats-output-http/http

go install github.com/magefile/mage@latest

#go get gitee.com/shiya_liu/mage

# Make mage available on PATH

#cp /usr/bin/go/bin/mage /usr/local/bin/

cp /root/go/bin/mage /usr/local/bin/

# beats-output-http

cd ~

git clone https://gitclone.com/github.com/raboof/beats-output-http.git

mkdir go/src/github.com/elastic/beats/libbeat/outputs/http

mv beats-output-http/* go/src/github.com/elastic/beats/libbeat/outputs/http/

cd /root/go/src/github.com/elastic/beats/filebeat

vim main.go

import (
	"os"

	_ "github.com/elastic/beats/v7/libbeat/outputs/http"

	"github.com/elastic/beats/v7/filebeat/cmd"
	inputs "github.com/elastic/beats/v7/filebeat/input/default-inputs"
)

# Build. A network with unrestricted access to overseas sites works best; otherwise you may hit the error: go: github.com/magefile/mage: zip: not a valid zip file

make mage

# Enter the filebeat directory

cd filebeat

# Edit main.go

vim main.go

package main

import (
	"os"

	//_ "github.com/raboof/beats-output-http/http"
	_ "gitee.com/shiya_liu/beats-output-http/http"

	"github.com/elastic/beats/v7/filebeat/cmd"
	inputs "github.com/elastic/beats/v7/filebeat/input/default-inputs"
)

func main() {
	if err := cmd.RootCmd.Execute(); err != nil {
		os.Exit(1)
	}
}

# Run the build

mage build

The build failed:

root@lsy:~/go/src/github.com/elastic/beats/filebeat# mage build

>> build: Building filebeat

go: downloading github.com/elastic/ecs v1.8.0

go: downloading google.golang.org/grpc v1.29.1

../libbeat/publisher/processing/default.go:23:2: zip: not a valid zip file

/root/go/pkg/mod/github.com/containerd/containerd@v1.3.3/errdefs/grpc.go:24:2: zip: not a valid zip file

/root/go/pkg/mod/github.com/containerd/containerd@v1.3.3/errdefs/grpc.go:25:2: zip: not a valid zip file

main.go:22:9: no required module provides package github.com/raboof/beats-output-http/http; to add it:

go get github.com/raboof/beats-output-http/http

Error: running "go build -o filebeat -ldflags -s -X github.com/elastic/beats/v7/libbeat/version.buildTime=2023-11-02T07:15:49Z -X github.com/elastic/beats/v7/libbeat/version.commit=b03a935282bec78b3160e74e66dfe29e2df8ee69" failed with exit code 1

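A suggested fix for the mage build failure: How to build and integrate this plugin with Filebeat · Issue #7 · raboof/beats-output-http (github.com)

It still failed after following the issue. The checkout had already been switched to v7.17.9, which should be close to the version in use when the issue was written (I could not find the exact Filebeat version the plugin targets; I only saw that main.go imports v7):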

root@lsy:~/go/src/github.com/elastic/beats/filebeat# mage build

>> build: Building filebeat

go: downloading github.com/elastic/sarama v1.19.1-0.20210823122811-11c3ef800752

go: downloading k8s.io/api v0.21.1

go: downloading github.com/miekg/dns v1.1.41

go: downloading github.com/docker/engine v0.0.0-20191113042239-ea84732a7725

go: downloading github.com/h2non/filetype v1.1.1

../libbeat/mime/byte.go:26:2: zip: not a valid zip file

../libbeat/common/docker/watcher.go:30:2: zip: not a valid zip file

../libbeat/common/docker/watcher.go:31:2: zip: not a valid zip file

../libbeat/common/docker/watcher.go:32:2: zip: not a valid zip file

../libbeat/common/docker/client.go:27:2: zip: not a valid zip file

../libbeat/common/kubernetes/types.go:24:2: zip: not a valid zip file

../libbeat/common/kubernetes/types.go:25:2: zip: not a valid zip file

../libbeat/common/kubernetes/types.go:26:2: zip: not a valid zip file

/root/go/pkg/mod/k8s.io/client-go@v0.21.1/kubernetes/scheme/register.go:22:2: zip: not a valid zip file

/root/go/pkg/mod/k8s.io/client-go@v0.21.1/kubernetes/scheme/register.go:23:2: zip: not a valid zip file

/root/go/pkg/mod/k8s.io/client-go@v0.21.1/kubernetes/scheme/register.go:24:2: zip: not a valid zip file

/root/go/pkg/mod/k8s.io/client-go@v0.21.1/kubernetes/scheme/register.go:26:2: zip: not a valid zip file

/root/go/pkg/mod/k8s.io/client-go@v0.21.1/kubernetes/scheme/register.go:27:2: zip: not a valid zip file

/root/go/pkg/mod/k8s.io/client-go@v0.21.1/kubernetes/scheme/register.go:28:2: zip: not a valid zip file

/root/go/pkg/mod/k8s.io/client-go@v0.21.1/kubernetes/scheme/register.go:29:2: zip: not a valid zip file

/root/go/pkg/mod/k8s.io/client-go@v0.21.1/kubernetes/scheme/register.go:30:2: zip: not a valid zip file

/root/go/pkg/mod/k8s.io/client-go@v0.21.1/kubernetes/scheme/register.go:31:2: zip: not a valid zip file

/root/go/pkg/mod/k8s.io/client-go@v0.21.1/kubernetes/scheme/register.go:32:2: zip: not a valid zip file

/root/go/pkg/mod/k8s.io/client-go@v0.21.1/kubernetes/scheme/register.go:33:2: zip: not a valid zip file

/root/go/pkg/mod/k8s.io/client-go@v0.21.1/kubernetes/scheme/register.go:34:2: zip: not a valid zip file

/root/go/pkg/mod/k8s.io/client-go@v0.21.1/kubernetes/scheme/register.go:36:2: zip: not a valid zip file

/root/go/pkg/mod/k8s.io/client-go@v0.21.1/kubernetes/scheme/register.go:37:2: zip: not a valid zip file

/root/go/pkg/mod/k8s.io/client-go@v0.21.1/kubernetes/scheme/register.go:38:2: zip: not a valid zip file

/root/go/pkg/mod/k8s.io/client-go@v0.21.1/kubernetes/scheme/register.go:39:2: zip: not a valid zip file

/root/go/pkg/mod/k8s.io/client-go@v0.21.1/kubernetes/scheme/register.go:40:2: zip: not a valid zip file

/root/go/pkg/mod/k8s.io/client-go@v0.21.1/kubernetes/scheme/register.go:42:2: zip: not a valid zip file

/root/go/pkg/mod/k8s.io/client-go@v0.21.1/kubernetes/scheme/register.go:43:2: zip: not a valid zip file

/root/go/pkg/mod/k8s.io/client-go@v0.21.1/kubernetes/scheme/register.go:44:2: zip: not a valid zip file

/root/go/pkg/mod/k8s.io/client-go@v0.21.1/kubernetes/scheme/register.go:45:2: zip: not a valid zip file

/root/go/pkg/mod/k8s.io/client-go@v0.21.1/kubernetes/scheme/register.go:46:2: zip: not a valid zip file

/root/go/pkg/mod/k8s.io/client-go@v0.21.1/kubernetes/scheme/register.go:47:2: zip: not a valid zip file

/root/go/pkg/mod/k8s.io/client-go@v0.21.1/kubernetes/scheme/register.go:48:2: zip: not a valid zip file

/root/go/pkg/mod/k8s.io/client-go@v0.21.1/kubernetes/scheme/register.go:49:2: zip: not a valid zip file

/root/go/pkg/mod/k8s.io/client-go@v0.21.1/kubernetes/scheme/register.go:50:2: zip: not a valid zip file

/root/go/pkg/mod/k8s.io/client-go@v0.21.1/kubernetes/scheme/register.go:51:2: zip: not a valid zip file

/root/go/pkg/mod/k8s.io/client-go@v0.21.1/kubernetes/scheme/register.go:52:2: zip: not a valid zip file

/root/go/pkg/mod/k8s.io/client-go@v0.21.1/kubernetes/scheme/register.go:53:2: zip: not a valid zip file

/root/go/pkg/mod/k8s.io/client-go@v0.21.1/kubernetes/scheme/register.go:54:2: zip: not a valid zip file

/root/go/pkg/mod/k8s.io/client-go@v0.21.1/kubernetes/scheme/register.go:55:2: zip: not a valid zip file

/root/go/pkg/mod/k8s.io/client-go@v0.21.1/kubernetes/scheme/register.go:56:2: zip: not a valid zip file

/root/go/pkg/mod/k8s.io/client-go@v0.21.1/kubernetes/scheme/register.go:57:2: zip: not a valid zip file

/root/go/pkg/mod/k8s.io/client-go@v0.21.1/kubernetes/scheme/register.go:58:2: zip: not a valid zip file

/root/go/pkg/mod/k8s.io/client-go@v0.21.1/kubernetes/scheme/register.go:59:2: zip: not a valid zip file

/root/go/pkg/mod/k8s.io/client-go@v0.21.1/kubernetes/scheme/register.go:60:2: zip: not a valid zip file

/root/go/pkg/mod/k8s.io/client-go@v0.21.1/kubernetes/scheme/register.go:61:2: zip: not a valid zip file

/root/go/pkg/mod/k8s.io/client-go@v0.21.1/kubernetes/scheme/register.go:62:2: zip: not a valid zip file

/root/go/pkg/mod/k8s.io/client-go@v0.21.1/kubernetes/scheme/register.go:63:2: zip: not a valid zip file

/root/go/pkg/mod/k8s.io/client-go@v0.21.1/kubernetes/scheme/register.go:64:2: zip: not a valid zip file

../libbeat/processors/dns/resolver.go:27:2: zip: not a valid zip file

../libbeat/outputs/kafka/client.go:29:2: zip: not a valid zip file

main.go:22:9: no required module provides package github.com/raboof/beats-output-http/http; to add it:

go get github.com/raboof/beats-output-http/http

Error: running "go build -o filebeat -buildmode pie -ldflags -s -X github.com/elastic/beats/v7/libbeat/version.buildTime=2023-11-06T06:51:57Z -X github.com/elastic/beats/v7/libbeat/version.commit=46a321e17b1906b4710f010d9a96d67742eb51da" failed with exit code 1

Before giving up on compiling the HTTP output, I had tried the following:

- 🔧 Reading the official documentation (https://www.elastic.co/guide/en/beats/filebeat/current/logstash-output.html), where I found that the Logstash output supports load balancing and SOCKS5 proxies

- 🏡 Searching Stack Overflow (https://stackoverflow.com/questions/63236676/filebeat-over-https)

- 🌧️ Reading unofficial articles: https://juejin.cn/s/filebeat and "How to make Filebeat support an HTTP output plugin" (CSDN blog)

- 👩💻 Browsing the GitHub issues: TCP/TLS Output for Beats · Issue #33107 · elastic/beats (github.com)

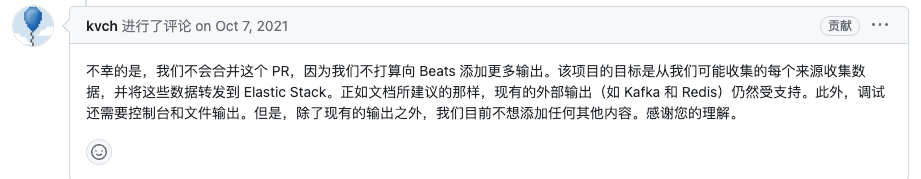

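- 💠 Elastic has officially replied that an HTTP output will not be supported

🚴♀️ Summary

Proxying the Logstash TCP port through Nginx stream, and pointing Filebeat at the TCP port exposed by the Nginx proxy, makes it possible to ship log data across the public internet. The Filebeat HTTP output could not be compiled, so that path was abandoned.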

