A Brief Look at Golang Memory Management (Part 2): The Go Runtime Memory Model and Memory Allocator

Contents

- The basic memory model of the Go runtime

- The three-tier storage hierarchy (MCache, MCentral, MHeap)

- Object allocation flow

I. The basic memory model of the Go runtime

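The Go runtime borrows from the C++ memory model and from the TCMalloc (Thread-Caching Malloc) allocator. Before going further, it helps to know the terms the Go runtime uses to describe memory: Page, mspan, Size Class, Object Size, and Span Class.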

-

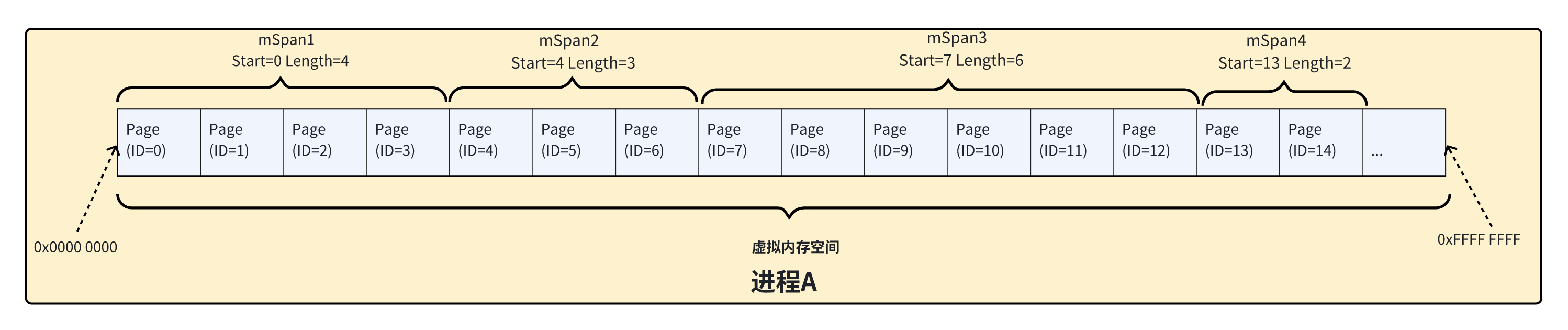

Page :虚拟内存的页大小,将进程虚拟内存空间划分为多份同等大小的Page。

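- mspan: the unit of memory management. An mspan is made up of several contiguous Pages and records the starting Page (Start) and the number of contiguous Pages (Length); in the struct below these are the startAddr and npages fields. mspans are chained into a doubly linked list through their next and prev pointers.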

    //go:notinheap
    type mspan struct {
        next *mspan     // next span in list, or nil if none
        prev *mspan     // previous span in list, or nil if none
        list *mSpanList // For debugging. TODO: Remove.

        startAddr uintptr // address of first byte of span aka s.base()
        npages    uintptr // number of pages in span

        manualFreeList gclinkptr // list of free objects in mSpanManual spans
        ...
    }

The relationship between Page and mspan is shown in Figure 1.

-

-

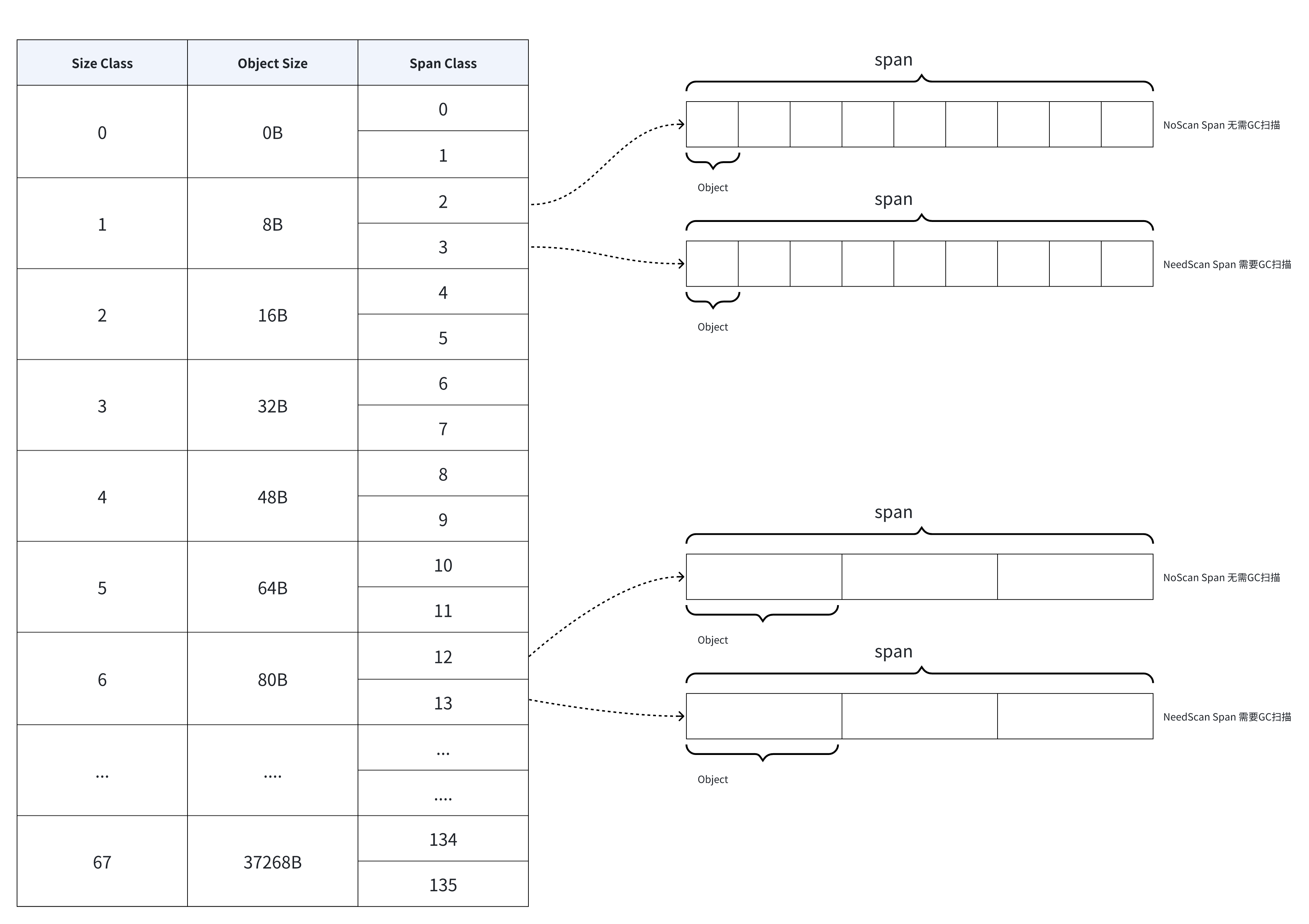

Size Class: 同一类别大小内存集合(即不同Span) 称为Size Class,Size Class集合类似一个刻度列表,例如8字节、16字节、32字节等。在申请内存时,会找到与所申请内存向上最匹配的那个Size Class的Span内存块返回给内存申请调用方。go runtime根据刻度大小,共分为67个SizeClass。https://github.com/golang/go/blob/master/src/runtime/sizeclasses.go

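- Object Size: the size of the object that a goroutine requests from the Go process's memory in one allocation. The object is the runtime's finer-grained unit of memory management: an mspan is split into multiple objects, and each mspan has a Number of Objects attribute that records how many objects it holds. For example, an 8 KB mspan with an Object Size of 8 B holds 1024 objects. Object Size also maps onto Size Class: objects of 1 to 8 B fall into Size Class 1, and objects of 9 to 16 B fall into Size Class 2.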

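- Object versus Page is worth comparing directly. The Object is the basic unit in which Go's memory manager stores objects internally; the Page is the basic unit of capacity when the memory manager deals with the operating system (that is, the unit in which memory is requested from the OS).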

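- Span Class: an attribute the Go runtime defines on top of Size Class. Spans of one Size Class may hold objects that the GC must scan (objects containing pointers) or objects that it need not scan (objects without pointers). Each Size Class therefore maps to two Span Classes: one for spans of scannable objects and one for spans of noscan objects. https://github.com/golang/go/blob/fba83cdfc6c4818af5b773afa39e457d16a6db7a/src/runtime/mheap.go#L583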

    // A spanClass represents the size class and noscan-ness of a span.
    //
    // Each size class has a noscan spanClass and a scan spanClass. The
    // noscan spanClass contains only noscan objects, which do not contain
    // pointers and thus do not need to be scanned by the garbage
    // collector.
    type spanClass uint8

    const (
        numSpanClasses = _NumSizeClasses << 1
        tinySpanClass  = spanClass(tinySizeClass<<1 | 1)
    )

    func makeSpanClass(sizeclass uint8, noscan bool) spanClass {
        return spanClass(sizeclass<<1) | spanClass(bool2int(noscan))
    }
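The encoding can be tried outside the runtime. The following is a minimal standalone sketch, not the runtime's own code: it re-implements makeSpanClass with an ordinary if statement in place of bool2int, and the two accessor methods are written here for illustration.

```go
package main

import "fmt"

// spanClass packs the size class into the upper bits and the
// noscan flag into the lowest bit, as in the excerpt above.
type spanClass uint8

// makeSpanClass is a standalone re-implementation for illustration.
func makeSpanClass(sizeclass uint8, noscan bool) spanClass {
	sc := spanClass(sizeclass << 1)
	if noscan {
		sc |= 1
	}
	return sc
}

// sizeclass recovers the size class from the encoded value.
func (sc spanClass) sizeclass() uint8 { return uint8(sc >> 1) }

// noscan reports whether the low bit is set.
func (sc spanClass) noscan() bool { return sc&1 != 0 }

func main() {
	for _, sc := range []spanClass{makeSpanClass(2, false), makeSpanClass(2, true)} {
		fmt.Printf("spanClass %d: sizeclass=%d noscan=%v\n", sc, sc.sizeclass(), sc.noscan())
	}
}
```

Size Class 2 therefore yields Span Class 4 for scannable objects and Span Class 5 for noscan objects.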

The overall picture of the objects above is shown below (Figure 2).
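To make the Size Class and Object Size arithmetic concrete, here is a small sketch. It assumes an 8 KB span, as in the example above, and uses a truncated, illustrative table of object sizes; classFor and objectsPerSpan are hypothetical helper names, and the real runtime looks sizes up in precomputed tables rather than scanning a list.

```go
package main

import "fmt"

// spanBytes is the span size used in the text's example (8 KB).
const spanBytes = 8192

// sizeClasses is a truncated table for illustration only: index i holds
// the object size of size class i (index 0 is unused here).
var sizeClasses = []uintptr{0, 8, 16, 24, 32, 48, 64}

// classFor returns the smallest size class whose object size is >= size,
// or 0 if the request does not fit in this truncated table.
func classFor(size uintptr) int {
	for class := 1; class < len(sizeClasses); class++ {
		if size <= sizeClasses[class] {
			return class
		}
	}
	return 0
}

// objectsPerSpan returns how many objects of the given class fit in one span.
func objectsPerSpan(class int) uintptr {
	return spanBytes / sizeClasses[class]
}

func main() {
	for _, size := range []uintptr{1, 8, 9, 16, 20} {
		c := classFor(size)
		fmt.Printf("request %2dB -> class %d (%dB objects, %d per span)\n",
			size, c, sizeClasses[c], objectsPerSpan(c))
	}
}
```

A 9 B request is rounded up to the 16 B class, so 7 B of each slot go unused; that bounded waste is the price of keeping every object in a span the same size.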

II. The Go runtime's three-tier storage hierarchy (MCache, MCentral, MHeap)

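The Go runtime abstracts and manages the process's virtual memory through three objects: MCache, MCentral, and MHeap. MCache corresponds to the Thread Cache in TCMalloc; because each MCache has a single owner and is used by one thread at a time, it can be used without locking. MCentral is the runtime's global cache: when an MCache runs out of space, it requests more from MCentral. Last comes MHeap, the abstraction of the process's heap space. Upstream of it is MCentral and downstream is the operating system: when MCentral runs out of space it requests memory from MHeap, and when MHeap runs out it requests more memory directly from the operating system. The Go runtime also allocates some large objects directly from MHeap.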

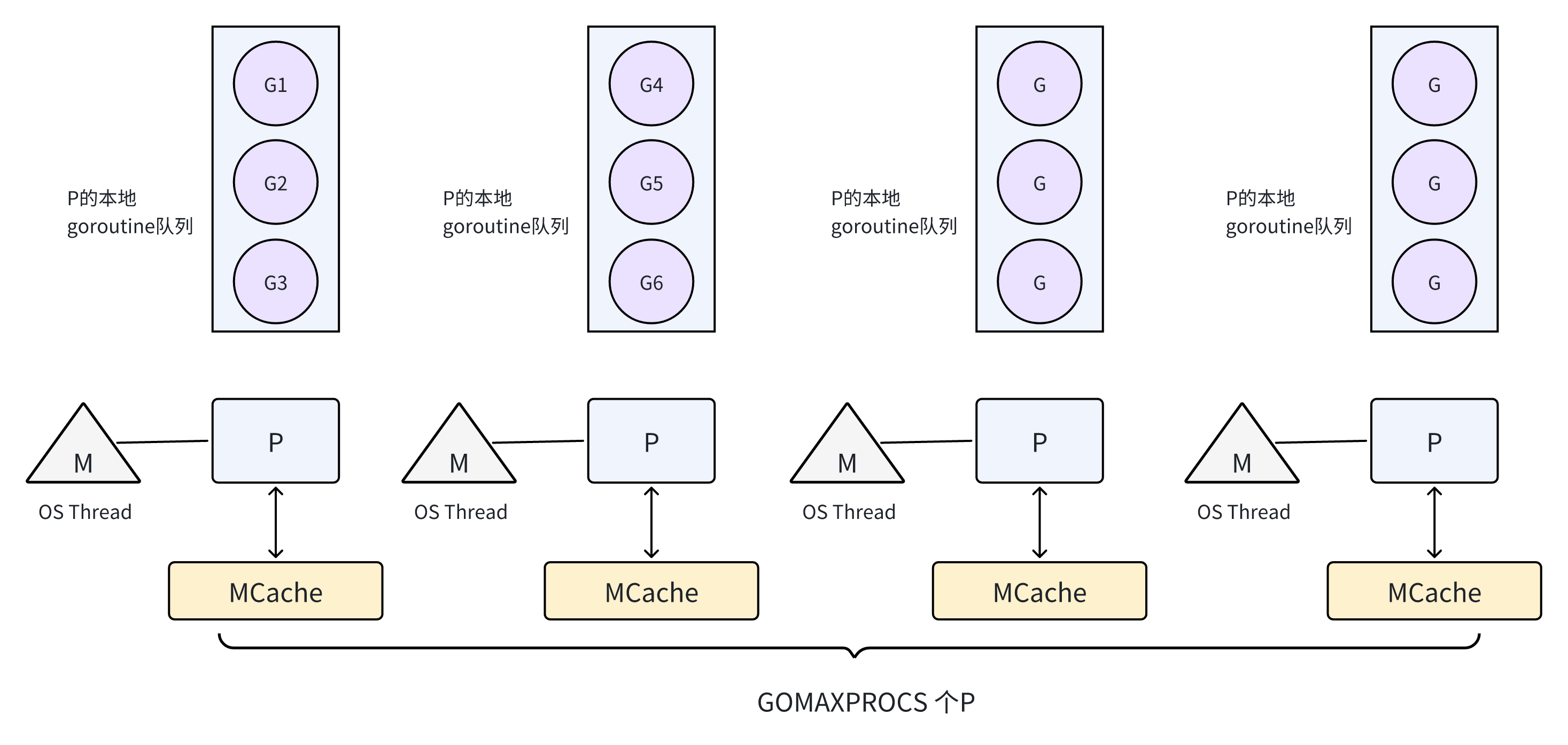

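MCache is bound directly to the "P" of the Go scheduling model. The basic unit in which P requests memory from MCache is the Object, the basic unit exchanged between MCache and MCentral is the Span, and the basic unit exchanged between MCentral and MHeap is the Page. Each of the three is described below.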

MCache

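The code below shows that when a P is initialized, it also sets up its own dedicated MCache. MCache is bound to P, and at any given moment a P is bound to only one M (thread), so this memory can be used without locking, which makes allocation from MCache noticeably faster. Their relationship is shown in Figure 3.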

https://github.com/golang/go/blob/fba83cdfc6c4818af5b773afa39e457d16a6db7a/src/runtime/proc.go#L5624C1-L5625C1

https://github.com/golang/go/blob/fba83cdfc6c4818af5b773afa39e457d16a6db7a/src/runtime/mcache.go#L86C1-L87C1

    func (pp *p) init(id int32) {
        pp.id = id
        pp.status = _Pgcstop
        pp.sudogcache = pp.sudogbuf[:0]
        pp.deferpool = pp.deferpoolbuf[:0]
        pp.wbBuf.reset()
        if pp.mcache == nil {
            if id == 0 {
                if mcache0 == nil {
                    throw("missing mcache?")
                }
                // Use the bootstrap mcache0. Only one P will get
                // mcache0: the one with ID 0.
                pp.mcache = mcache0
            } else {
                pp.mcache = allocmcache()
            }
        }
        ...
    }

    func allocmcache() *mcache {
        var c *mcache
        systemstack(func() {
            lock(&mheap_.lock)
            c = (*mcache)(mheap_.cachealloc.alloc())
            c.flushGen = mheap_.sweepgen
            unlock(&mheap_.lock)
        })
        for i := range c.alloc {
            c.alloc[i] = &emptymspan
        }
        c.nextSample = nextSample()
        return c
    }

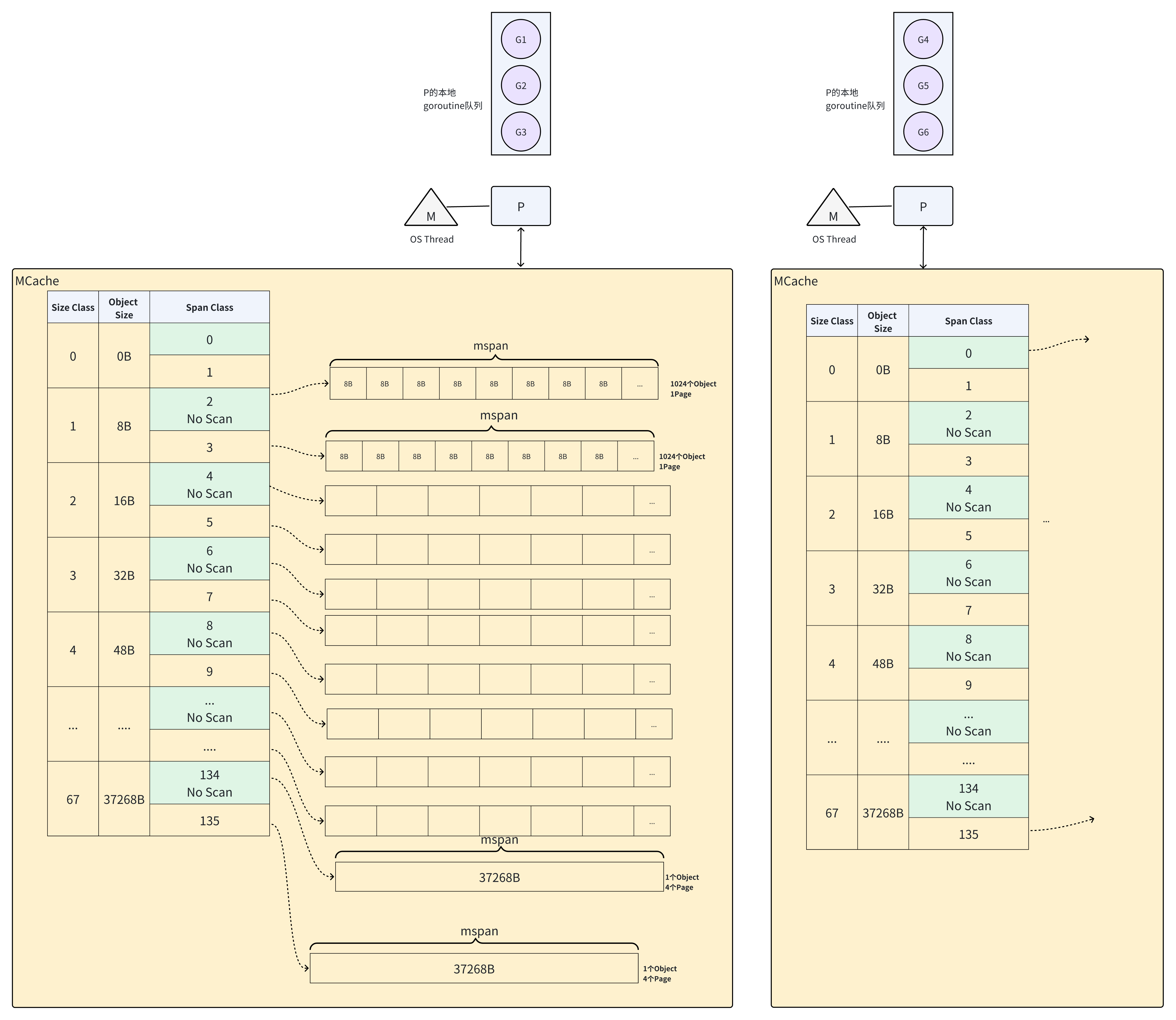

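Next, the MCache data structure. The alloc field defines what MCache can allocate from: each Span Class has one corresponding mspan in MCache, and mspans of different Span Classes differ in length. Take the mspan with Span Class 4 (see Figure 2) as an example: the span is 8 KB and each Object it serves is 16 B, so it holds 512 Objects. When a goroutine requests memory from MCache and the mspan of the matching Span Class has no free Object, MCache requests a new span from MCentral; this step, of course, has to be synchronized.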

    type mcache struct {
        // The following members are accessed on every malloc,
        // so they are grouped here for better caching.
        nextSample uintptr // trigger heap sample after allocating this many bytes
        scanAlloc  uintptr // bytes of scannable heap allocated

        // Allocator cache for tiny objects w/o pointers.
        // See "Tiny allocator" comment in malloc.go.

        // tiny points to the beginning of the current tiny block, or
        // nil if there is no current tiny block.
        //
        // tiny is a heap pointer. Since mcache is in non-GC'd memory,
        // we handle it by clearing it in releaseAll during mark
        // termination.
        //
        // tinyAllocs is the number of tiny allocations performed
        // by the P that owns this mcache.
        tiny       uintptr
        tinyoffset uintptr
        tinyAllocs uintptr

        // The rest is not accessed on every malloc.

        alloc [numSpanClasses]*mspan // spans to allocate from, indexed by spanClass

        stackcache [_NumStackOrders]stackfreelist

        // flushGen indicates the sweepgen during which this mcache
        // was last flushed. If flushGen != mheap_.sweepgen, the spans
        // in this mcache are stale and need to the flushed so they
        // can be swept. This is done in acquirep.
        flushGen uint32
    }

    type spanClass uint8

    const (
        numSpanClasses = _NumSizeClasses << 1
        tinySpanClass  = spanClass(tinySizeClass<<1 | 1)
    )

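As Figure 2 shows, Span Classes 0 and 1 have no memory allocated in MCache, and their Object Size is 0, because the Go runtime handles zero-size data specially. When a goroutine requests an object of size 0 (for example struct{}), the runtime returns a fixed memory address directly. The benefit is obvious: it saves memory. The runtime code is as follows: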

https://github.com/golang/go/blob/bdcd6d1b653dd7a5b3eb9a053623f85433ff9e6b/src/runtime/malloc.go#L1007C1-L1017C3

    func mallocgc(size uintptr, typ *_type, needzero bool) unsafe.Pointer {
        ...
        // Short-circuit zero-sized allocation requests.
        if size == 0 {
            return unsafe.Pointer(&zerobase)
        }
        ...
    }
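The short-circuit can be imitated in ordinary Go. In the sketch below, toyAlloc is a hypothetical allocator written only for this illustration, and its zerobase variable merely stands in for the runtime variable of the same name; it is not how user code reaches the runtime allocator.

```go
package main

import (
	"fmt"
	"unsafe"
)

// zerobase is a stand-in for the runtime variable of the same name.
var zerobase uintptr

// toyAlloc is a hypothetical allocator used only for this illustration.
// Every zero-sized request gets the same fixed address.
func toyAlloc(size uintptr) unsafe.Pointer {
	if size == 0 {
		return unsafe.Pointer(&zerobase)
	}
	buf := make([]byte, size)
	return unsafe.Pointer(&buf[0])
}

func main() {
	// The empty struct occupies no storage.
	fmt.Println("sizeof(struct{}{}) =", unsafe.Sizeof(struct{}{}))

	a, b := toyAlloc(0), toyAlloc(0)
	fmt.Println("zero-sized requests share an address:", a == b)

	c, d := toyAlloc(8), toyAlloc(8)
	fmt.Println("8-byte requests share an address:", c == d)
}
```

Because no storage is handed out for zero-sized requests, any number of them costs nothing beyond the one shared variable.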

Overall view of MCache (Figure 4)

MCentral

The MCentral code is shown below. Its core fields are partial and full; each is built from spanSets, that is, sets of mspans.

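- Partial Span Set: the set of spans that still have free space; every span in it has at least one free Object. When MCache requests space from MCentral, MCentral returns an mspan from this set, and when MCache hands back a span that still has free Objects, the span is added to the Partial Span Set again.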

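- Full Span Set: the set of spans with no free space; every Object in these spans has been allocated. MCentral does not serve MCache requests from this set directly. A span that has no free Objects when it is handed back is added to the Full Span Set, and it becomes usable again only after GC sweeping frees Objects in it.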

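When goroutines have taken Objects one by one from the mspan that MCache holds for some Size Class, a later request finds no Object left, and MCache asks MCentral for another mspan of that class. MCentral keeps two kinds of mspan sets in order to track the state of memory blocks more efficiently and to make allocation and GC reclamation cheaper. The partial set holds mspans that are only partly used; once all Objects in an mspan have been allocated, the mspan moves to the full set. MCentral can therefore allocate from partial first, which helps limit fragmentation.

Both partial and full consist of two spanSets, one for mspans the GC has already swept and one for mspans not yet swept. Within each GC cycle this has two benefits. First, mspan state is managed efficiently: unused memory is reclaimed promptly, while memory that is in use or fully allocated is not swept more than necessary. Second, because the garbage collector and the allocator run concurrently, keeping swept and unswept mspans apart prevents an mspan from being swept while it is being allocated from, which reduces race conditions.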

    type mcentral struct {
        spanclass spanClass

        // partial and full contain two mspan sets: one of swept in-use
        // spans, and one of unswept in-use spans. These two trade
        // roles on each GC cycle. The unswept set is drained either by
        // allocation or by the background sweeper in every GC cycle,
        // so only two roles are necessary.
        //
        // sweepgen is increased by 2 on each GC cycle, so the swept
        // spans are in partial[sweepgen/2%2] and the unswept spans are in
        // partial[1-sweepgen/2%2]. Sweeping pops spans from the
        // unswept set and pushes spans that are still in-use on the
        // swept set. Likewise, allocating an in-use span pushes it
        // on the swept set.
        //
        // Some parts of the sweeper can sweep arbitrary spans, and hence
        // can't remove them from the unswept set, but will add the span
        // to the appropriate swept list. As a result, the parts of the
        // sweeper and mcentral that do consume from the unswept list may
        // encounter swept spans, and these should be ignored.
        partial [2]spanSet // list of spans with a free object
        full    [2]spanSet // list of spans with no free objects
    }

    // A spanSet is a set of *mspans.
    //
    // spanSet is safe for concurrent push and pop operations.
    type spanSet struct {
        // A spanSet is a two-level data structure consisting of a
        // growable spine that points to fixed-sized blocks. The spine
        // can be accessed without locks, but adding a block or
        // growing it requires taking the spine lock.
        //
        // Because each mspan covers at least 8K of heap and takes at
        // most 8 bytes in the spanSet, the growth of the spine is
        // quite limited.
        //
        // The spine and all blocks are allocated off-heap, which
        // allows this to be used in the memory manager and avoids the
        // need for write barriers on all of these. spanSetBlocks are
        // managed in a pool, though never freed back to the operating
        // system. We never release spine memory because there could be
        // concurrent lock-free access and we're likely to reuse it
        // anyway. (In principle, we could do this during STW.)

        spineLock mutex
        spine     unsafe.Pointer // *[N]*spanSetBlock, accessed atomically
        spineLen  uintptr        // Spine array length, accessed atomically
        spineCap  uintptr        // Spine array cap, accessed under lock
        ...
    }
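The index arithmetic in the mcentral comment is easy to check by hand. The sketch below only evaluates the two formulas from that comment for a few sweepgen values; sweptIndex and unsweptIndex are illustrative helper names, and the starting sweepgen of 0 is arbitrary.

```go
package main

import "fmt"

// sweptIndex follows the comment: swept spans are in partial[sweepgen/2%2].
func sweptIndex(sweepgen uint32) uint32 { return sweepgen / 2 % 2 }

// unsweptIndex follows the comment: unswept spans are in partial[1-sweepgen/2%2].
func unsweptIndex(sweepgen uint32) uint32 { return 1 - sweepgen/2%2 }

func main() {
	// sweepgen grows by 2 on each GC cycle, so the two slots swap roles
	// every cycle.
	for sweepgen := uint32(0); sweepgen <= 6; sweepgen += 2 {
		fmt.Printf("sweepgen=%d swept=partial[%d] unswept=partial[%d]\n",
			sweepgen, sweptIndex(sweepgen), unsweptIndex(sweepgen))
	}
}
```

Swapping roles by index means no spans have to be moved between sets when a new GC cycle starts: last cycle's swept set simply becomes this cycle's unswept set.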

Overall view of MCentral (Figure 5)

MHeap

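MHeap is the abstraction of the process heap. Upstream is MCentral: when MCentral runs short of Spans, it requests them from MHeap (this step also takes a lock for thread safety). Downstream is the operating system: when MHeap runs short of memory, it requests virtual memory from the OS.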

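The basic unit of memory that MHeap manages is the HeapArena, an abstraction over many Pages. On 64-bit Linux each HeapArena covers 64 MB and contains many Pages, each of which is managed by some mspan. MHeap defines and manages its HeapArenas through the arenas field. A few other key fields in the mheap definition below deserve attention: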

central: this field shows that MCentral is part of MHeap.

arenaHints: indicates at which heap addresses to try when more address space is needed for arenas.

https://github.com/golang/go/blob/bdcd6d1b653dd7a5b3eb9a053623f85433ff9e6b/src/runtime/mheap.go#L148

    type mheap struct {
        ...

        // arenas is the heap arena map. It points to the metadata for
        // the heap for every arena frame of the entire usable virtual ...
        arenas [1 << arenaL1Bits]*[1 << arenaL2Bits]*heapArena

        ...

        // arenaHints is a list of addresses at which to attempt to
        // add more heap arenas. This is initially populated with a
        // set of general hint addresses, and grown with the bounds of
        // actual heap arena ranges.
        arenaHints *arenaHint

        ...

        // central free lists for small size classes.
        // the padding makes sure that the mcentrals are
        // spaced CacheLinePadSize bytes apart, so that each mcentral.lock
        // gets its own cache line.
        // central is indexed by spanClass.
        central [numSpanClasses]struct {
            mcentral mcentral
            pad      [cpu.CacheLinePadSize - unsafe.Sizeof(mcentral{})%cpu.CacheLinePadSize]byte
        }

        ...
    }

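Next is HeapArena, the management unit that groups many Pages. Its bitmap field describes the memory in the arena word by word: according to the comment in the code below, it is the pointer/scalar bitmap, which tells the GC which words hold pointers.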

    // A heapArena stores metadata for a heap arena. heapArenas are stored
    // outside of the Go heap and accessed via the mheap_.arenas index.
    //
    //go:notinheap
    type heapArena struct {
        // bitmap stores the pointer/scalar bitmap for the words in
        // this arena. See mbitmap.go for a description. Use the
        // heapBits type to access this.
        bitmap [heapArenaBitmapBytes]byte

        // spans maps from virtual address page ID within this arena to *mspan.
        // For allocated spans, their pages map to the span itself.
        // For free spans, only the lowest and highest pages map to the span itself.
        // Internal pages map to an arbitrary span.
        // For pages that have never been allocated, spans entries are nil.
        //
        // Modifications are protected by mheap.lock. Reads can be
        // performed without locking, but ONLY from indexes that are
        // known to contain in-use or stack spans. This means there
        // must not be a safe-point between establishing that an
        // address is live and looking it up in the spans array.
        spans [pagesPerArena]*mspan
        ...
    }
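The spans array suggests how an address is resolved to its mspan: find the arena, then the page within it. The sketch below shows only that arithmetic. It assumes 8 KB pages and the 64 MB arena size mentioned above; locate is a hypothetical helper that ignores the address offset and the two-level arenas map the real runtime uses.

```go
package main

import "fmt"

const (
	pageSize      = 8 << 10  // assumed 8 KB runtime page
	arenaBytes    = 64 << 20 // 64 MB per heap arena (64-bit Linux)
	pagesPerArena = arenaBytes / pageSize
)

// locate is a simplified, hypothetical lookup: it returns which arena an
// address falls in and which page slot inside that arena's spans array.
func locate(addr uintptr) (arenaIdx, pageIdx uintptr) {
	arenaIdx = addr / arenaBytes
	pageIdx = (addr % arenaBytes) / pageSize
	return arenaIdx, pageIdx
}

func main() {
	fmt.Println("pages per arena:", pagesPerArena)

	addr := uintptr(3*arenaBytes + 5*pageSize + 100)
	arenaIdx, pageIdx := locate(addr)
	fmt.Printf("addr %#x -> arena %d, page %d\n", addr, arenaIdx, pageIdx)
}
```

With these sizes each arena has 8192 page slots, so the per-arena spans array stays small enough to index directly.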

Overall view of MHeap (Figures 6 and 7)

III. Object allocation flow

As the source excerpts below show, the Go runtime divides objects by size into tiny objects, small objects, and large objects:

- Tiny objects: size in [1 B, 16 B), and containing no pointers

- Small objects: size in [16 B, 32 KB]

- Large objects: size in (32 KB, +∞)

https://github.com/golang/go/blob/b38b0c0088039b03117b87eee61583ac4153f2b7/src/runtime/malloc.go#L113

https://github.com/golang/go/blob/b38b0c0088039b03117b87eee61583ac4153f2b7/src/runtime/malloc.go#L1049

    const (
        maxTinySize  = _TinySize
        maxSmallSize = _MaxSmallSize

        // Tiny allocator parameters, see "Tiny allocator" comment in malloc.go.
        _TinySize = 16
        ...
    )

    const (
        _MaxSmallSize = 32768
        ..
    )

    // Actually do the allocation.
    var x unsafe.Pointer
    var elemsize uintptr
    if size <= maxSmallSize-mallocHeaderSize {
        if typ == nil || !typ.Pointers() {
            if size < maxTinySize {
                x, elemsize = mallocgcTiny(size, typ, needzero)
            }
            ...
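The three-way split can be written as a small classifier. This is a simplified sketch rather than the runtime's logic: classify is a hypothetical function, hasPointers stands in for typ.Pointers(), and the mallocHeaderSize adjustment seen in the excerpt is left out.

```go
package main

import "fmt"

const (
	maxTinySize  = 16    // mirrors _TinySize
	maxSmallSize = 32768 // mirrors _MaxSmallSize
)

// classify is a simplified sketch of the size split described above.
// Objects that contain pointers never take the tiny path.
func classify(size uintptr, hasPointers bool) string {
	switch {
	case size == 0:
		return "zero-size"
	case size < maxTinySize && !hasPointers:
		return "tiny"
	case size <= maxSmallSize:
		return "small"
	default:
		return "large"
	}
}

func main() {
	fmt.Println(classify(8, false))     // pointer-free and under 16 B
	fmt.Println(classify(8, true))      // under 16 B but holds a pointer
	fmt.Println(classify(16, false))    // lower bound of the small range
	fmt.Println(classify(32768, false)) // upper bound of the small range
	fmt.Println(classify(32769, false)) // just past the small range
}
```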

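To reduce fragmentation and speed up allocation, MCache keeps a separate "tiny space" with built-in alignment. It is a 16 B Object obtained from the MCache span with Span Class 5 (Size Class 2, noscan; this is the tinySpanClass constant shown earlier) and is used only for tiny objects. When the current tiny space is full, another 16 B Object is taken from MCache and tiny allocation continues there. The GC reclaims the Object as a whole only after every tiny object in it has become garbage.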
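The packing behaviour of the tiny space can be modelled with a bump offset inside a 16 B block. The sketch below is a toy model under stated assumptions: tinyBlock and its alloc method are hypothetical, blocks are identified by a counter instead of a real address, and alignment is picked conservatively from the requested size.

```go
package main

import "fmt"

const maxTinySize = 16

// tinyBlock is a hypothetical model of the current 16-byte tiny block.
type tinyBlock struct {
	id     int     // which 16-byte block is being filled
	offset uintptr // next free byte within the block
}

// alignUp rounds n up to a multiple of a (a must be a power of two).
func alignUp(n, a uintptr) uintptr { return (n + a - 1) &^ (a - 1) }

// alloc places one tiny object and returns the block id and the offset
// inside that block. A full block is replaced by a fresh one.
func (t *tinyBlock) alloc(size uintptr) (int, uintptr) {
	off := t.offset
	switch {
	case size&7 == 0:
		off = alignUp(off, 8)
	case size&3 == 0:
		off = alignUp(off, 4)
	case size&1 == 0:
		off = alignUp(off, 2)
	}
	if off+size > maxTinySize {
		// Current block cannot hold the object: start a new block.
		t.id++
		off = 0
	}
	t.offset = off + size
	return t.id, off
}

func main() {
	var t tinyBlock
	for _, size := range []uintptr{1, 2, 4, 8, 3} {
		id, off := t.alloc(size)
		fmt.Printf("size %d -> block %d, offset %d\n", size, id, off)
	}
}
```

The first four objects share block 0 at offsets 0, 2, 4, and 8; the fifth no longer fits and opens block 1. Several tiny objects thus cost a single 16 B Object, at the price that the block can only be freed once all of them are dead.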

Next, a closer look at how the Go runtime allocates small objects and large objects. The implementation is the mallocgc function in src/runtime/malloc.go (https://github.com/golang/go/blob/b38b0c0088039b03117b87eee61583ac4153f2b7/src/runtime/malloc.go#L1007).

Small object allocation

A small object is served from MCache; when the current tier runs out of memory, the request moves up one tier at a time, to MCentral and then to MHeap. The steps are:

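- P asks the Go runtime for the memory an object needs. MCache receives the request and computes the object's Size. For a Size of 16 B or more, it maps the Size to a Size Class and then, depending on whether the object contains pointers (scan or noscan), to a specific Span Class.

- If the span of the chosen Span Class has a free Object, MCache returns it to P. If not, MCache requests a Span from MCentral.

- MCentral takes a Span from the Partial Set of that Span Class and returns it to MCache (MCentral is shared by all Ps, so this step is synchronized for thread safety).

- If MCentral has no suitable Span, it requests memory from MHeap. MHeap takes some Pages from a HeapArena and returns them to MCentral; when MHeap itself lacks memory, it requests more from the operating system. In the end, P gets the memory it needs.

The full flow is shown in Figure 8.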
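The fallback chain in the steps above can be sketched as a toy simulation. Everything here is hypothetical and heavily simplified: there is a single 16 B size class, spans are one 8 KB page each, spans only count free objects, and there is no locking, sweeping, or return of spans. The type and method names are chosen for this illustration only.

```go
package main

import "fmt"

const (
	pageSize = 8192
	objSize  = 16 // one size class only, for brevity
)

// span is a toy stand-in for mspan: it only counts free objects.
type span struct{ free int }

// heap hands out pages and counts how many it has given away.
type heap struct{ pagesGiven int }

// central keeps spans with free objects and falls back to the heap.
type central struct {
	partial []*span
	h       *heap
}

// cache serves objects from its current span and refills from central.
type cache struct {
	cur *span
	c   *central
}

func (h *heap) allocPages(n int) int {
	h.pagesGiven += n
	return n
}

// cacheSpan returns a span with free objects, growing from the heap
// when the partial list is empty.
func (c *central) cacheSpan() *span {
	if n := len(c.partial); n > 0 {
		s := c.partial[n-1]
		c.partial = c.partial[:n-1]
		return s
	}
	pages := c.h.allocPages(1)
	return &span{free: pages * pageSize / objSize}
}

// alloc takes one object; only the refill touches the shared tiers.
func (m *cache) alloc() {
	if m.cur == nil || m.cur.free == 0 {
		m.cur = m.c.cacheSpan()
	}
	m.cur.free--
}

func main() {
	h := &heap{}
	m := &cache{c: &central{h: h}}
	for i := 0; i < 513; i++ {
		m.alloc()
	}
	fmt.Println("pages taken from heap:", h.pagesGiven)
}
```

Out of 513 allocations only two reach beyond the cache, which is the point of the design: the common path stays local to P and needs no synchronization.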

Large object allocation

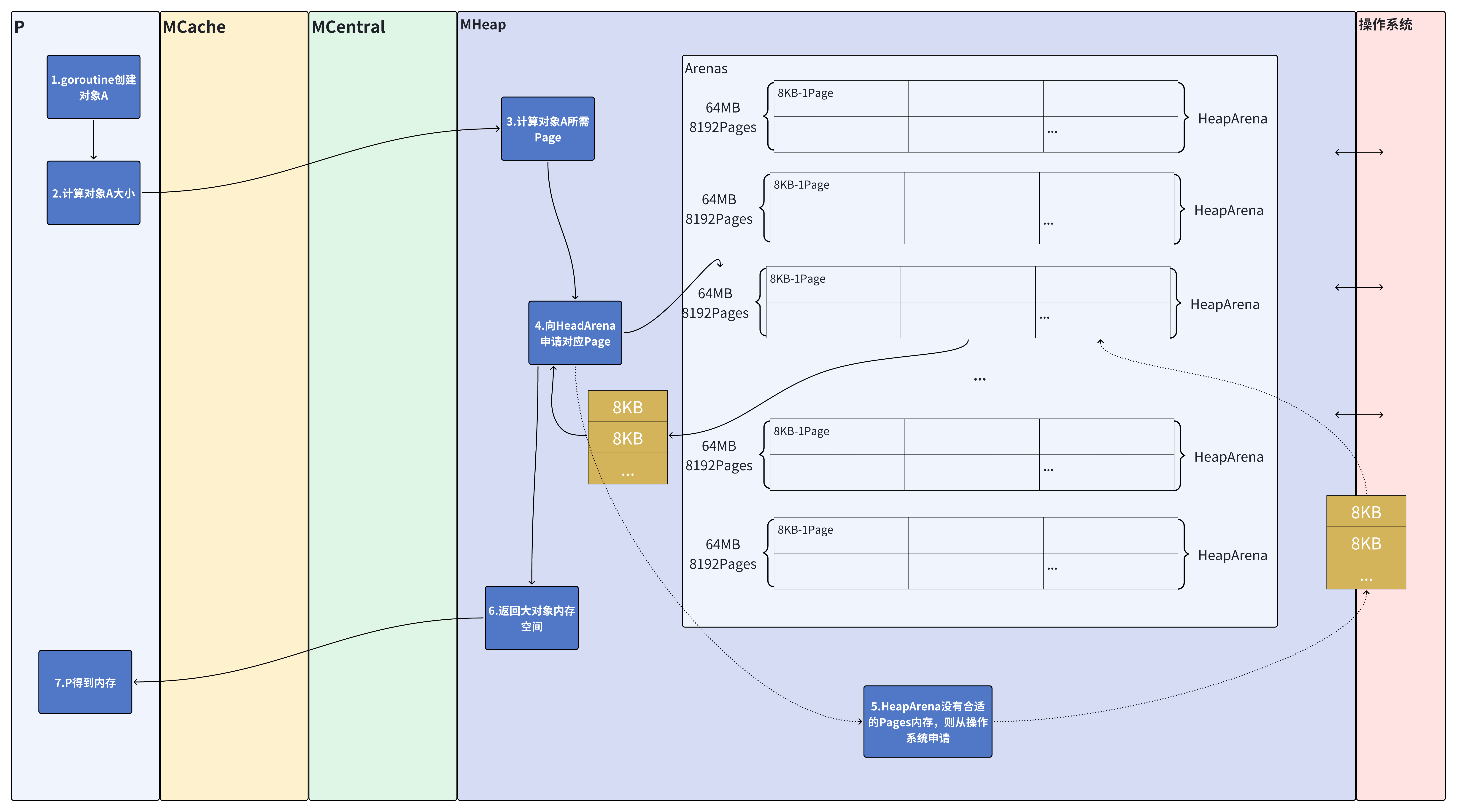

For large objects the Go runtime allocates directly from MHeap, requesting as many Pages as the object needs. The steps are:

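- P asks the Go runtime for memory for a large object. MHeap receives the request, computes the object's Size, works out how many Pages that Size needs, and requests those Pages directly from the Arenas.

- If MHeap lacks space, it requests virtual memory from the operating system and adds it to the Arenas. MHeap then returns the large object's memory, P receives it, and the flow ends.

The full flow is shown in Figure 9.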
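The page count in the first step is a round-up division. The sketch below assumes 8 KB pages (a shift of 13); pagesFor is an illustrative helper name rather than a runtime function.

```go
package main

import "fmt"

const (
	pageShift = 13 // assumes 8 KB pages
	pageSize  = 1 << pageShift
)

// pagesFor returns the number of whole pages needed to hold size bytes.
func pagesFor(size uintptr) uintptr {
	npages := size >> pageShift
	if size&(pageSize-1) != 0 {
		npages++ // a partial page still occupies a whole page
	}
	return npages
}

func main() {
	for _, size := range []uintptr{32769, 40960, 1 << 20} {
		fmt.Printf("%d bytes -> %d pages\n", size, pagesFor(size))
	}
}
```

A 32769 B object, one byte past the small-object limit, already takes 5 pages, so the tail of the last page is the only internal waste for large objects.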

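To summarize, the Go runtime uses a three-tier allocation model of MCache, MCentral, and MHeap. MCache is bound directly to P, and objects are allocated from MCache first, which avoids mutex contention under high concurrency and makes good use of locality. In addition, Size Classes divide objects among sets of mspans, so an allocation goes to the mspan set that fits it best; and because most objects in a process are tiny objects under 16 B, tiny objects are combined for allocation and reclamation. Together these mechanisms limit memory fragmentation and speed up allocation.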

References:

https://github.com/golang/go/blob/master/src/runtime/malloc.go

https://book.douban.com/subject/36403287/
