https://docs.ceph.com/en/latest/rbd/rbd-kubernetes

https://github.com/ceph/ceph-csi/blob/devel/docs/deploy-rbd.md

https://support.huaweicloud.com/dpmg-kunpengcpfs/kunpengk8sceph_04_0001.html

http://elrond.wang/2021/06/19/Kubernetes%E9%9B%86%E6%88%90Ceph/#6-troubleshooting

https://www.cnblogs.com/lianngkyle/p/14772121.html

1. Create the kubernetes storage pool

# ceph osd pool create kubernetes 128

# rbd pool init kubernetes

2. Configure csi-config-map

# ceph mon dump

fsid 83baa63b-c421-480a-be24-0e2c59a70e17

min_mon_release 15 (octopus)

0: [v2:192.168.100.201:3300/0,v1:192.168.100.201:6789/0] mon.vm-201

1: [v2:192.168.100.202:3300/0,v1:192.168.100.202:6789/0] mon.vm-202

2: [v2:192.168.100.203:3300/0,v1:192.168.100.203:6789/0] mon.vm-203

cat > /tmp/csi-config-map.yaml << EOF
apiVersion: v1
kind: ConfigMap
data:
  config.json: |-
    [
      {
        "clusterID": "83baa63b-c421-480a-be24-0e2c59a70e17",
        "monitors": [
          "192.168.100.201:6789",
          "192.168.100.202:6789",
          "192.168.100.203:6789"
        ]
      }
    ]
metadata:
  name: ceph-csi-config
EOF

kubectl apply -f /tmp/csi-config-map.yaml
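The clusterID and monitors values in the ConfigMap are copied by hand from the ceph mon dump output. As a rough sketch, they could be extracted with two small shell functions. The names mon_fsid and mon_v1_addrs are hypothetical (they are not part of ceph or ceph-csi), and the parsing assumes the dump format shown in this step.

```shell
# Hypothetical helpers: read `ceph mon dump` text on stdin and print the
# values the ceph-csi ConfigMap needs. Assumes the output format shown above.

# Print the cluster fsid, which is used as clusterID.
mon_fsid() {
    awk '$1 == "fsid" { print $2 }'
}

# Print one v1 monitor address per line, in ip:port form.
mon_v1_addrs() {
    sed -n 's|.*v1:\([0-9.]*:[0-9]*\)/.*|\1|p'
}
```

For example, `ceph mon dump 2>/dev/null | mon_v1_addrs` should print one ip:6789 line per monitor, which can then be pasted into the monitors list.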

3. Configure csi-rbd-secret

# ceph auth get-or-create client.kubernetes mon 'profile rbd' osd 'profile rbd pool=kubernetes' mgr 'profile rbd pool=kubernetes'

[client.kubernetes]

key = AQD7QJxhQ4xJARAAHbBdXZ43xxSiTRscbynLWA==

# ceph auth get client.kubernetes

exported keyring for client.kubernetes

[client.kubernetes]

key = AQD7QJxhQ4xJARAAHbBdXZ43xxSiTRscbynLWA==

caps mgr = "profile rbd pool=kubernetes"

caps mon = "profile rbd"

caps osd = "profile rbd pool=kubernetes"

cat > /tmp/csi-rbd-secret.yaml << EOF
apiVersion: v1
kind: Secret
metadata:
  name: csi-rbd-secret
stringData:
  userID: kubernetes
  userKey: AQD7QJxhQ4xJARAAHbBdXZ43xxSiTRscbynLWA==
EOF

kubectl apply -f /tmp/csi-rbd-secret.yaml
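Copying the key into the Secret by hand is easy to get wrong. The following is a minimal sketch that reads the key from keyring text and prints the same Secret manifest; keyring_key and render_csi_secret are hypothetical helper names introduced only for illustration.

```shell
# Hypothetical helpers; the names are illustrative, not part of ceph-csi.

# Read keyring text (as printed by `ceph auth get`) on stdin and print the key.
keyring_key() {
    awk '$1 == "key" && $2 == "=" { print $3 }'
}

# Print a Secret manifest for the Ceph user name ($1) and key ($2).
# The user name is passed without the "client." prefix.
render_csi_secret() {
    cat << EOF
apiVersion: v1
kind: Secret
metadata:
  name: csi-rbd-secret
stringData:
  userID: $1
  userKey: $2
EOF
}
```

A possible use would be `render_csi_secret kubernetes "$(ceph auth get client.kubernetes | keyring_key)" > /tmp/csi-rbd-secret.yaml`, assuming the ceph CLI is available on the same host.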

4. Deploy the ceph-csi plugin

curl -k https://raw.fastgit.org/ceph/ceph-csi/v3.4.0/deploy/rbd/kubernetes/csi-provisioner-rbac.yaml -o /tmp/csi-provisioner-rbac.yaml

curl -k https://raw.fastgit.org/ceph/ceph-csi/v3.4.0/deploy/rbd/kubernetes/csi-nodeplugin-rbac.yaml -o /tmp/csi-nodeplugin-rbac.yaml

kubectl apply -f /tmp/csi-provisioner-rbac.yaml

kubectl apply -f /tmp/csi-nodeplugin-rbac.yaml

curl -k https://raw.fastgit.org/ceph/ceph-csi/v3.4.0/deploy/rbd/kubernetes/csi-rbdplugin.yaml -o /tmp/csi-rbdplugin.yaml

curl -k https://raw.fastgit.org/ceph/ceph-csi/v3.4.0/deploy/rbd/kubernetes/csi-rbdplugin-provisioner.yaml -o /tmp/csi-rbdplugin-provisioner.yaml

sed -e 's|k8s.gcr.io|192.168.100.198:5000|g' -e 's|quay.io|192.168.100.198:5000|g' -i /tmp/csi-rbdplugin.yaml

sed -e 's|k8s.gcr.io|192.168.100.198:5000|g' -e 's|quay.io|192.168.100.198:5000|g' -i /tmp/csi-rbdplugin-provisioner.yaml
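The sed commands above point every image at the private registry 192.168.100.198:5000, so those images need to exist there before the manifests are applied. As a sketch, the images a manifest references can be listed first. list_images and rewrite_registry are hypothetical helpers, and the pattern assumes each image appears on its own "image:" line.

```shell
# Hypothetical helper: print the unique container images referenced by the
# manifest files given as arguments (or by stdin when no file is given).
list_images() {
    sed -n 's/^[[:space:]]*image:[[:space:]]*//p' "$@" | tr -d '"' | sort -u
}

# Apply the same registry substitutions as the sed commands above to stdin.
# The registry address ($1) defaults to the one used in this walkthrough.
rewrite_registry() {
    registry="${1:-192.168.100.198:5000}"
    sed -e "s|k8s.gcr.io|${registry}|g" -e "s|quay.io|${registry}|g"
}
```

Running `list_images /tmp/csi-rbdplugin.yaml /tmp/csi-rbdplugin-provisioner.yaml` on freshly downloaded files would show the upstream names to mirror; piping that list through `rewrite_registry` shows the names they are expected to have in the private registry.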

# Comment out the ceph-csi-encryption-kms-config volumeMount and volume
......
#- name: ceph-csi-encryption-kms-config
#  mountPath: /etc/ceph-csi-encryption-kms-config/

#- name: ceph-csi-encryption-kms-config
#  configMap:
#    name: ceph-csi-encryption-kms-config
......

kubectl apply -f /tmp/csi-rbdplugin.yaml

kubectl apply -f /tmp/csi-rbdplugin-provisioner.yaml

# kubectl get pod -o wide

NAME                                         READY   STATUS    RESTARTS   AGE   IP                NODE     NOMINATED NODE   READINESS GATES
csi-rbdplugin-b6d69                          3/3     Running   0          15m   192.168.100.207   vm-207   <none>           <none>
csi-rbdplugin-gc4bv                          3/3     Running   0          15m   192.168.100.197   vm-197   <none>           <none>
csi-rbdplugin-lqg74                          3/3     Running   0          84s   192.168.100.208   vm-208   <none>           <none>
csi-rbdplugin-provisioner-7695454cb7-7zp5g   7/7     Running   0          13m   10.240.2.155      vm-197   <none>           <none>
csi-rbdplugin-provisioner-7695454cb7-88h6g   7/7     Running   0          13m   10.240.7.193      vm-208   <none>           <none>
csi-rbdplugin-provisioner-7695454cb7-bpqgd   7/7     Running   0          13m   10.240.36.21      vm-207   <none>           <none>
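All plugin and provisioner pods should be fully ready (3/3 and 7/7) before continuing. A small sketch for spotting stragglers in the kubectl get pod output follows; not_ready_pods is a hypothetical name, and it only inspects the NAME, READY and STATUS columns.

```shell
# Hypothetical helper: read `kubectl get pod` table text on stdin and print
# the names of pods that are not fully ready (READY x/y with x != y) or that
# are not in the Running state. The header line is skipped.
not_ready_pods() {
    awk 'NR > 1 { split($2, r, "/"); if (r[1] != r[2] || $3 != "Running") print $1 }'
}
```

With it, `kubectl get pod -o wide | not_ready_pods` prints nothing when every listed pod is ready.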

5. Configure csi-rbd-sc

cat > /tmp/csi-rbd-sc.yaml << EOF
---
apiVersion: storage.k8s.io/v1
kind: StorageClass
metadata:
  name: csi-rbd-sc
  namespace: default
provisioner: rbd.csi.ceph.com
parameters:
  clusterID: 83baa63b-c421-480a-be24-0e2c59a70e17
  pool: kubernetes
  imageFeatures: layering
  csi.storage.k8s.io/provisioner-secret-name: csi-rbd-secret
  csi.storage.k8s.io/provisioner-secret-namespace: default
  csi.storage.k8s.io/controller-expand-secret-name: csi-rbd-secret
  csi.storage.k8s.io/controller-expand-secret-namespace: default
  csi.storage.k8s.io/node-stage-secret-name: csi-rbd-secret
  csi.storage.k8s.io/node-stage-secret-namespace: default
  csi.storage.k8s.io/fstype: xfs
reclaimPolicy: Delete
allowVolumeExpansion: true
mountOptions:
  - discard
EOF

kubectl apply -f /tmp/csi-rbd-sc.yaml

# kubectl get sc -o wide

NAME         PROVISIONER        RECLAIMPOLICY   VOLUMEBINDINGMODE   ALLOWVOLUMEEXPANSION   AGE
csi-rbd-sc   rbd.csi.ceph.com   Delete          Immediate           true                   3h11m

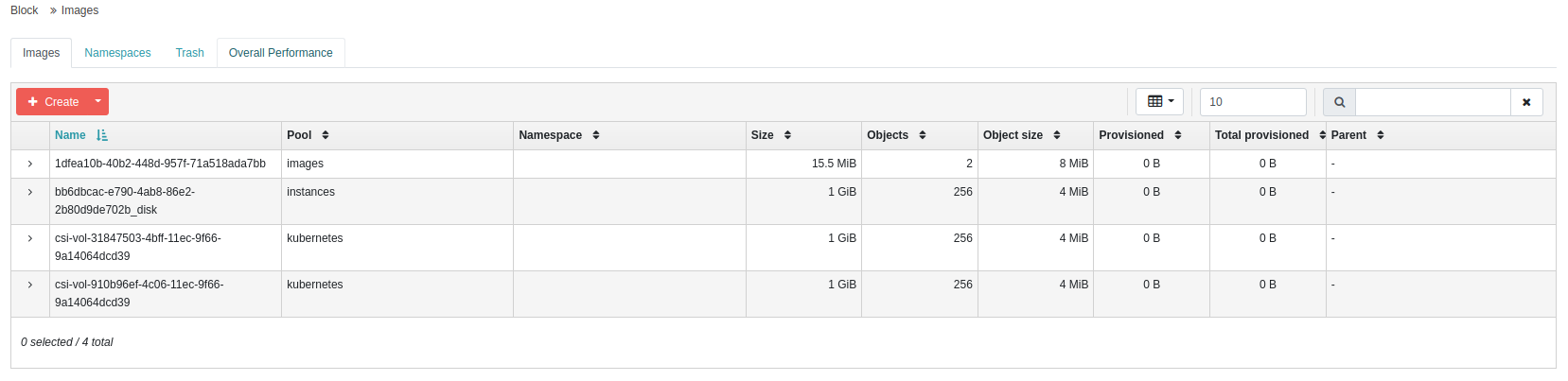

6. Verify - volumeMode Block

cat > /tmp/raw-block-pvc.yaml << EOF
---
apiVersion: v1
kind: PersistentVolumeClaim
metadata:
  name: raw-block-pvc
  namespace: default
spec:
  accessModes:
    - ReadWriteOnce
  volumeMode: Block
  resources:
    requests:
      storage: 1Gi
  storageClassName: csi-rbd-sc
EOF

kubectl apply -f /tmp/raw-block-pvc.yaml

# kubectl get pvc -o wide

NAME            STATUS   VOLUME                                     CAPACITY   ACCESS MODES   STORAGECLASS   AGE   VOLUMEMODE
raw-block-pvc   Bound    pvc-beb3a8c6-b659-44c6-bc5c-5fbd3ead1706   1Gi        RWO            csi-rbd-sc     10s   Block

cat > /tmp/raw-block-pod.yaml << EOF
---
apiVersion: v1
kind: Pod
metadata:
  name: pod-with-raw-block-volume
  namespace: default
spec:
  containers:
    - name: centos-pod
      image: 192.168.100.198:5000/centos:centos7.9.2009
      command: ["/bin/sh", "-c"]
      args: ["tail -f /dev/null"]
      volumeDevices:
        - name: data
          devicePath: /dev/xvda
  volumes:
    - name: data
      persistentVolumeClaim:
        claimName: raw-block-pvc
EOF

kubectl apply -f /tmp/raw-block-pod.yaml
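The notes do not show a check for the raw block pod. One possible check, sketched below, compares the size of /dev/xvda inside the pod with the 1Gi request, which is 1 × 1024³ = 1073741824 bytes. gib_to_bytes and check_raw_block_size are hypothetical helpers; the second one assumes kubectl access and that blockdev (from util-linux) is present in the container image.

```shell
# Hypothetical helper: convert a size in GiB (the "Gi" suffix used in the
# PVC) to bytes.
gib_to_bytes() {
    echo $(( $1 * 1024 * 1024 * 1024 ))
}

# Compare the size of the block device inside the pod with the PVC request.
# Assumption: kubectl is configured and blockdev exists in the image.
# Succeeds only if the device is exactly the requested size.
check_raw_block_size() {
    expected="$(gib_to_bytes 1)"
    actual="$(kubectl exec pod-with-raw-block-volume -- blockdev --getsize64 /dev/xvda)"
    [ "$actual" = "$expected" ]
}
```

Calling `check_raw_block_size && echo OK` after the pod is Running would confirm that the device is attached at the expected size. A provisioner may round sizes up, so a mismatch is a prompt to look closer rather than proof of failure.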

7. Verify - volumeMode Filesystem

cat > /tmp/pvc.yaml << EOF
---
apiVersion: v1
kind: PersistentVolumeClaim
metadata:
  name: rbd-pvc
spec:
  accessModes:
    - ReadWriteOnce
  volumeMode: Filesystem
  resources:
    requests:
      storage: 1Gi
  storageClassName: csi-rbd-sc
EOF

kubectl apply -f /tmp/pvc.yaml

# kubectl get pvc -o wide

NAME            STATUS   VOLUME                                     CAPACITY   ACCESS MODES   STORAGECLASS   AGE   VOLUMEMODE
raw-block-pvc   Bound    pvc-beb3a8c6-b659-44c6-bc5c-5fbd3ead1706   1Gi        RWO            csi-rbd-sc     81m   Block
rbd-pvc         Bound    pvc-3a57e9e7-31c1-4fe6-958e-df4a985654c5   1Gi        RWO            csi-rbd-sc     29m   Filesystem

cat > /tmp/pod.yaml << EOF
---
apiVersion: v1
kind: Pod
metadata:
  name: csi-rbd-demo-pod
spec:
  containers:
    - name: web
      image: 192.168.100.198:5000/nginx:1.21
      volumeMounts:
        - name: mypvc
          mountPath: /var/lib/www/html
  volumes:
    - name: mypvc
      persistentVolumeClaim:
        claimName: rbd-pvc
        readOnly: false
EOF

kubectl apply -f /tmp/pod.yaml

# kubectl exec -it csi-rbd-demo-pod -- df -h

Filesystem      Size  Used Avail Use% Mounted on
overlay          20G  4.8G   16G  24% /
tmpfs            64M     0   64M   0% /dev
tmpfs           1.9G     0  1.9G   0% /sys/fs/cgroup
/dev/sda1        20G  4.8G   16G  24% /etc/hosts
shm              64M     0   64M   0% /dev/shm
/dev/rbd0       976M  2.6M  958M   1% /var/lib/www/html   <-- the RBD volume is mounted
tmpfs           1.9G   12K  1.9G   1% /run/secrets/kubernetes.io/serviceaccount
tmpfs           1.9G     0  1.9G   0% /proc/acpi
tmpfs           1.9G     0  1.9G   0% /proc/scsi
tmpfs           1.9G     0  1.9G   0% /sys/firmware
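The line to look for in the df output is the one where /var/lib/www/html is backed by a /dev/rbd device. As a sketch, that check can be scripted; mount_source and is_rbd_mount are hypothetical helper names, and the parsing assumes the standard six-column df layout.

```shell
# Hypothetical helpers, not part of kubectl or ceph-csi.

# Read `df` output on stdin and print the source device of the filesystem
# mounted at the path given as $1 (prints nothing if there is no such mount).
mount_source() {
    awk -v target="$1" 'NR > 1 && $6 == target { print $1 }'
}

# Read `df` output on stdin; succeed only if the path given as $1 is backed
# by a /dev/rbd* device.
is_rbd_mount() {
    case "$(mount_source "$1")" in
        /dev/rbd*) return 0 ;;
        *) return 1 ;;
    esac
}
```

For instance, `kubectl exec csi-rbd-demo-pod -- df -h | is_rbd_mount /var/lib/www/html && echo mounted` would print "mounted" for the pod shown here.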

* Troubleshooting * failed to provision volume with StorageClass "csi-rbd-sc": rpc error: code = Internal desc = failed to get connection: connecting failed: rados: ret=-13, Permission denied

# kubectl get pvc -o wide

NAME            STATUS    VOLUME   CAPACITY   ACCESS MODES   STORAGECLASS   AGE   VOLUMEMODE
raw-block-pvc   Pending                                      csi-rbd-sc     25m   Block

# kubectl describe pvc raw-block-pvc

Events:

Type Reason Age From Message

---- ------ ---- ---- -------

Warning ProvisioningFailed 7m40s (x14 over 26m) rbd.csi.ceph.com_csi-rbdplugin-provisioner-7695454cb7-7zp5g_eb83fd9a-a85f-45ff-a9d9-195990a911f1 failed to provision volume with StorageClass "csi-rbd-sc": rpc error: code = Internal desc = failed to get connection: connecting failed: rados: ret=-13, Permission denied

Normal Provisioning 5m31s (x15 over 26m) rbd.csi.ceph.com_csi-rbdplugin-provisioner-7695454cb7-7zp5g_eb83fd9a-a85f-45ff-a9d9-195990a911f1 External provisioner is provisioning volume for claim "default/raw-block-pvc"

Normal ExternalProvisioning 66s (x102 over 26m) persistentvolume-controller

# kubectl logs csi-rbdplugin-provisioner-7695454cb7-7zp5g -c csi-rbdplugin

I1115 06:31:09.182564 1 utils.go:177] ID: 33 Req-ID: pvc-a0a2cebf-f60a-4f12-9c80-6745d1f6a4c3 GRPC call: /csi.v1.Controller/CreateVolume

I1115 06:31:09.183942 1 utils.go:181] ID: 33 Req-ID: pvc-a0a2cebf-f60a-4f12-9c80-6745d1f6a4c3 GRPC request: {"capacity_range":{"required_bytes":1073741824},"name":"pvc-a0a2cebf-f60a-4f12-9c80-6745d1f6a4c3","parameters":{"clusterID":"83baa63b-c421-480a-be24-0e2c59a70e17","csi.storage.k8s.io/pv/name":"pvc-a0a2cebf-f60a-4f12-9c80-6745d1f6a4c3","csi.storage.k8s.io/pvc/name":"raw-block-pvc","csi.storage.k8s.io/pvc/namespace":"default","imageFeatures":"layering","pool":"kubernetes"},"secrets":"***stripped***","volume_capabilities":[{"AccessType":{"Block":{}},"access_mode":{"mode":1}}]}

I1115 06:31:09.185200 1 rbd_util.go:1316] ID: 33 Req-ID: pvc-a0a2cebf-f60a-4f12-9c80-6745d1f6a4c3 setting disableInUseChecks: false image features: [layering] mounter: rbd

E1115 06:31:09.206513 1 controllerserver.go:301] ID: 33 Req-ID: pvc-a0a2cebf-f60a-4f12-9c80-6745d1f6a4c3 failed to connect to volume : failed to get connection: connecting failed: rados: ret=-13, Permission denied

E1115 06:31:09.206759 1 utils.go:186] ID: 33 Req-ID: pvc-a0a2cebf-f60a-4f12-9c80-6745d1f6a4c3 GRPC error: rpc error: code = Internal desc = failed to get connection: connecting failed: rados: ret=-13, Permission denied

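The cause turned out to be the userID in /tmp/csi-rbd-secret.yaml, which had been written as client.kubernetes. It must be the bare user name (kubernetes), without the client. prefix.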
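Since this mistake is easy to repeat, a small guard can be run before applying the Secret. This is only a sketch: csi_user_id and secret_user_id_ok are hypothetical helper names, and the check assumes userID appears on its own line as in the manifest from step 3.

```shell
# Hypothetical helper: turn a Ceph entity name into the userID ceph-csi
# expects by dropping a leading "client." if present.
csi_user_id() {
    printf '%s\n' "${1#client.}"
}

# Read a Secret manifest on stdin; fail if its userID still carries the
# "client." prefix, succeed otherwise.
secret_user_id_ok() {
    ! grep -Eq '^[[:space:]]*userID:[[:space:]]*client\.'
}
```

For example, `secret_user_id_ok < /tmp/csi-rbd-secret.yaml || echo "userID must not start with client."` would flag the faulty manifest before it reaches the cluster.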

For other persistent volume operations, see:

https://hub.fastgit.org/ceph/ceph-csi/tree/devel/examples/rbd
