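Deploying OpenShift 3.11 Community Edition

Installation notes

1. Make sure every host has Internet access.

2. Keep SELinux enabled.

3. The operating system language must not be Chinese.

4. The router is deployed automatically on the infra node. Do not place the lb on the infra node, otherwise port 80 conflicts.

5. If the web console port is changed to 443, the lb cannot share a host with it because the ports conflict.

6. overlay2 is only supported when the disk is formatted as XFS.

7. If the lb and the master share a node, port 8443 is reported as already in use. Do not place the lb on a master node during installation.

8. When etcd runs on a master node it starts as a static pod; when it runs on a regular node it starts as a system service. During my installation one etcd member was on the master and another on a node, and etcd failed to start. Place all etcd members either on master nodes or on regular nodes.

9. I installed monitoring with NFS persistent storage as part of the installation. This requires java-1.8.0-openjdk-headless and python-passlib to be installed beforehand. The official documentation does not mention this, and the installation fails without them.

10. Docker is started with --selinux-enabled=false, but SELinux must still be enabled in the operating system, otherwise the installation fails.

Preface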

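This post installs an OpenShift 3.11 cluster on CentOS 7.9 virtual machines running under VMware Workstation 16 Pro: one master and two nodes. The setup simulates building an OpenShift cluster on real servers.

Starting with OCP 3.10, if RHEL is the underlying host operating system, OCP components are installed on that host with the RPM method. With RPMs on RHEL, all services are installed and updated from external sources through package management, and these packages modify the host's existing configuration in the same user space.

If you use RHEL Atomic Host, the system container method is used on that host. Each OCP component ships as a container, in a self-contained package, and runs on the host's kernel. Updated containers replace any existing containers on the host.

Both installation types provide the same functionality for the cluster, but the operating system you use determines how you manage services and host updates.

Starting with OCP 3.10, the containerized installation method is no longer supported on Red Hat Enterprise Linux systems.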

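Production-level hardware requirements

Master nodes

When etcd is deployed as a separate cluster, master nodes in a highly available OCP cluster must meet the following minimum requirements:

1 CPU core and 1.5 GB of memory for each 1000 pods

For an OCP cluster of 2000 pods, the recommended master size is 4 CPU cores and 19 GB of memory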
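The sizing rule above can be turned into a quick calculation. The sketch below assumes a base of 2 CPU cores and 16 GB of memory per master (an assumption that comes from the upstream sizing guidance, not from this post) and adds 1 core and 1.5 GB for every started block of 1000 pods. `master_size` is a hypothetical helper name.

```shell
# Estimate master sizing for a given pod count.
# Assumption: base of 2 cores / 16 GB, plus 1 core / 1.5 GB per 1000 pods,
# with partial thousands rounded up.
master_size() {
    awk -v pods="$1" 'BEGIN {
        k = int((pods + 999) / 1000)   # number of started 1000-pod blocks
        printf "cpu=%d mem_gb=%.1f\n", 2 + k, 16 + 1.5 * k
    }'
}

master_size 2000   # prints: cpu=4 mem_gb=19.0
```

With 2000 pods this reproduces the 4 cores and 19 GB quoted above; other pod counts are only as good as the assumed base values.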

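Nodes

The size of a node depends on the expected size of its workload. As the OCP cluster administrator, calculate the expected workload and add roughly 10% for overhead. For production environments, allocate enough resources that the failure of a node host does not affect your maximum capacity. Oversubscribing the physical resources on a node affects the resource guarantees that the Kubernetes scheduler makes during pod placement.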

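The installation consists of 5 parts:

1. Prepare the environment on the master and node hosts.

2. Install dependencies: the third-party packages that an Ansible-based OpenShift installation relies on.

3. Run the installation with Ansible: an automated installation driven by an Ansible playbook.

4. Configure OpenShift: system configuration after the Ansible installation completes.

5. Test and troubleshoot.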

Environment preparation

| Hostname | IP | OS | CPU | Mem | Disk |
|---|---|---|---|---|---|
| master.lab.example.com | 172.16.186.110/24 | CentOS7.9 | 2vCPU | 6G | 20G(OS) / 30G(Docker) |
| node1.lab.example.com | 172.16.186.111/24 | CentOS7.9 | 2vCPU | 6G | 20G(OS) / 30G(Docker) |
| node2.lab.example.com | 172.16.186.112/24 | CentOS7.9 | 2vCPU | 6G | 20G(OS) / 30G(Docker) |

Note: the infra node can be deployed on a separate host. Once it is defined in the hosts file, add it to /etc/ansible/hosts as: infra.ocp.cn openshift_node_group_name='node-config-infra'

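Storage management

| Directory | Purpose | Sizing | Expected growth |
|---|---|---|---|
| /var/lib/openshift | Used for etcd storage only in single-master mode, when etcd is embedded in the atomic-openshift-master process | Less than 10GB | Stores metadata only (grows slowly with the environment) |
| /var/lib/etcd | Used for etcd storage in multi-master mode or when etcd is run standalone by the administrator | Less than 20GB | Stores metadata only (grows slowly with the environment) |
| /var/lib/docker | Mount point when the runtime is docker. Storage for active container runtimes (including pods) and local image storage (not used for registry storage). The mount point should be managed by docker-storage rather than manually | 50GB for a node with 16GB of memory, plus 20-25GB for every additional 8GB of memory | Growth is limited by the capacity for running containers |
| /var/lib/containers | Mount point when the runtime is CRI-O. Storage for active container runtimes (including pods) and local image storage (not used for registry storage) | 50GB for a node with 16GB of memory, plus 20-25GB for every additional 8GB of memory | Growth is limited by the capacity for running containers |
| /var/lib/origin/openshift.local.volumes | Ephemeral volume storage for pods. This includes anything external that is mounted into a container at runtime: environment variables, kube secrets, and data volumes not backed by persistent storage PVs | Varies | Minimal if pods that need storage use persistent volumes; can grow quickly if ephemeral storage is used |
| /var/log | Log files for all components | 10~30GB | Log files can grow quickly; size can be managed by growing disks or by log rotation |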
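The /var/lib/docker sizing rule in the table (50GB at 16GB of memory, plus 20-25GB for every additional 8GB) can be computed as follows. This is a sketch: `docker_storage_gb` is a hypothetical helper, and treating anything up to 16GB of memory as the 50GB baseline and counting only complete 8GB steps are my assumptions.

```shell
# Print the suggested /var/lib/docker size range for a node.
# $1: node memory in GB (integer)
# Assumption: memory <= 16 GB maps to the 50 GB baseline; only complete
# 8 GB steps above 16 GB add storage.
docker_storage_gb() {
    mem_gb="$1"
    extra=0
    if [ "$mem_gb" -gt 16 ]; then
        extra=$(( (mem_gb - 16) / 8 ))
    fi
    echo "min_gb=$(( 50 + 20 * extra )) max_gb=$(( 50 + 25 * extra ))"
}

docker_storage_gb 32   # prints: min_gb=90 max_gb=100
```

For the 6G lab machines in this post the rule gives the 50GB baseline, which is more than the 30G Docker disk used here; that is acceptable for a test cluster but not for production.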

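Installation

Preliminary configuration

# SELinux settings on all nodes

[root@ * ~]# grep -v ^# /etc/selinux/config | grep -v ^$

SELINUX=enforcing # the official documentation recommends enabling SELinux; the installation fails otherwise

SELINUXTYPE=targeted

# Disable the firewall on all nodes

[root@ * ~]# systemctl stop firewalld && systemctl disable firewalld

# Edit the hosts file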

[root@master ~]# vim /etc/hosts

127.0.0.1 localhost localhost.localdomain localhost4 localhost4.localdomain4

::1 localhost localhost.localdomain localhost6 localhost6.localdomain6

172.16.186.110 master.lab.example.com master

172.16.186.111 node1.lab.example.com node1

172.16.186.112 node2.lab.example.com node2

[root@master ~]# for i in 1 2;do scp /etc/hosts root@172.16.186.11$i:/etc;done

# Set up passwordless SSH login

[root@master ~]# ssh-keygen -t rsa -P ''

[root@master ~]# ssh-copy-id master

[root@master ~]# ssh-copy-id master.lab.example.com

[root@master ~]# for i in {0..2};do ssh-copy-id 172.16.186.11$i;done

[root@master ~]# for i in {1..2};do ssh-copy-id node$i;done

[root@master ~]# for i in {1..2};do ssh-copy-id node$i.lab.example.com;done
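Two of the installation notes at the top (SELinux enabled, non-Chinese system language) are easy to verify on each host before continuing. The following is a minimal sketch; `selinux_ok`, `locale_ok`, and `preflight` are hypothetical helper names, and requiring `Enforcing` (rather than merely not `Disabled`) is an assumption based on the SELINUX=enforcing setting shown above.

```shell
# Succeeds only if SELinux is in enforcing mode.
# $1: output of `getenforce` (Enforcing, Permissive, or Disabled)
selinux_ok() {
    [ "$1" = "Enforcing" ]
}

# Succeeds only if the locale is not a Chinese one.
# $1: value of $LANG, for example en_US.UTF-8
locale_ok() {
    case "$1" in
        zh_*) return 1 ;;
        *)    return 0 ;;
    esac
}

# Run both checks on the local host and print one line per check.
preflight() {
    if selinux_ok "$(getenforce 2>/dev/null)"; then
        echo "selinux: ok"
    else
        echo "selinux: not enforcing"
    fi
    if locale_ok "${LANG:-}"; then
        echo "locale: ok"
    else
        echo "locale: Chinese locale detected"
    fi
}
```

Run `preflight` on each of the three hosts; both lines should report `ok` before the dependencies are installed.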

Install dependencies

# Install the packages OpenShift depends on, on all hosts

yum -y install wget git net-tools bind-utils iptables-services bridge-utils bash-completion kexec-tools sos psacct python-passlib NetworkManager vim

# Install docker on all hosts

yum -y install docker-1.13.1

# Configure the storage used by docker on all hosts

vim /etc/sysconfig/docker-storage-setup

DEVS=/dev/nvme0n2

VG=DOCKER

SETUP_LVM_THIN_POOL=yes

DATA_SIZE="100%FREE"

[root@* ~]# rm -rf /var/lib/docker/

[root@* ~]# wipefs --all /dev/nvme0n2

# This step creates the VG, the thin pool, and the LV

[root@* ~]# docker-storage-setup

INFO: Volume group backing root filesystem could not be determined

INFO: Writing zeros to first 4MB of device /dev/nvme0n2

4+0 records in

4+0 records out

4194304 bytes (4.2 MB) copied, 0.00452444 s, 927 MB/s

INFO: Device node /dev/nvme0n2p1 exists.

Physical volume "/dev/nvme0n2p1" successfully created.

Volume group "DOCKER" successfully created

Rounding up size to full physical extent 24.00 MiB

Thin pool volume with chunk size 512.00 KiB can address at most 126.50 TiB of data.

Logical volume "docker-pool" created.

Logical volume DOCKER/docker-pool changed.

[root@* ~]# lvs

LV VG Attr LSize Pool Origin Data% Meta% Move Log Cpy%Sync Convert

docker-pool DOCKER twi-a-t--- <29.93g 0.00 10.14

[root@* ~]# cat > /etc/docker/daemon.json<<EOF

{

"registry-mirrors" : [

"https://registry.docker-cn.com",

"https://docker.mirrors.ustc.edu.cn",

"http://hub-mirror.c.163.com",

"https://cr.console.aliyun.com/",

"https://0trl8ny5.mirror.aliyuncs.com"

]

}

EOF

[root@* ~]# systemctl daemon-reload && systemctl restart docker && systemctl enable docker

Install Ansible on the master host

[root@master ~]# yum -y install epel-release

# Disable the EPEL repository globally so that later installation steps do not use it by accident

[root@master ~]# sed -i -e "s/^enabled=1/enabled=0/" /etc/yum.repos.d/epel.repo

[root@master ~]# yum -y install centos-release-ansible-28.noarch

[root@master ~]# yum list ansible --showduplicates

[root@master ~]# yum -y install ansible-2.8.6 # a newer Ansible version causes errors

[root@master ~]# ansible --version

ansible 2.8.6

config file = /etc/ansible/ansible.cfg

configured module search path = [u'/root/.ansible/plugins/modules', u'/usr/share/ansible/plugins/modules']

ansible python module location = /usr/lib/python2.7/site-packages/ansible

executable location = /usr/bin/ansible

python version = 2.7.5 (default, Oct 14 2020, 14:45:30) [GCC 4.8.5 20150623 (Red Hat 4.8.5-44)]
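Because a too-new Ansible breaks the playbooks, it can help to gate the installation on the installed version. The sketch below accepts only 2.6 through 2.8; that range is an assumption based on the two versions used in this post (2.8.6 here and 2.6.5 as the alternative), not an official support matrix. `ansible_version_ok` is a hypothetical helper name.

```shell
# Succeeds if the given Ansible version is 2.6.x, 2.7.x, or 2.8.x.
# $1: version string such as 2.8.6
# Assumption: the accepted range mirrors the versions used in this post.
ansible_version_ok() {
    ver="$1"
    major="${ver%%.*}"      # text before the first dot
    rest="${ver#*.}"        # text after the first dot
    minor="${rest%%.*}"     # second version component
    [ "$major" = "2" ] && [ "$minor" -ge 6 ] && [ "$minor" -le 8 ]
}
```

On the master it could be used as `ansible_version_ok "$(ansible --version | awk 'NR==1 {print $2}')" || echo "unexpected Ansible version"`.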

===================================== Alternative ======================================

# Download the ansible26 release package

wget http://mirror.centos.org/centos/7/extras/x86_64/Packages/centos-release-ansible26-1-3.el7.centos.noarch.rpm

# Install its dependency, then the package itself

yum install -y centos-release-configmanagement

rpm -ivh centos-release-ansible26-1-3.el7.centos.noarch.rpm

# Download ansible-2.6.5

wget https://releases.ansible.com/ansible/rpm/release/epel-7-x86_64/ansible-2.6.5-1.el7.ans.noarch.rpm

# Install the dependencies of ansible-2.6.5 with yum first

yum install -y python-jinja2 python-paramiko python-setuptools python-six python2-cryptography sshpass PyYAML

# Install

rpm -ivh ansible-2.6.5-1.el7.ans.noarch.rpm

===============================================================================

Install and configure OpenShift

[root@master ~]# wget https://github.com/openshift/openshift-ansible/archive/refs/tags/openshift-ansible-3.11.764-1.tar.gz

[root@master ~]# tar -zxvf openshift-ansible-3.11.764-1.tar.gz

[root@master ~]# mv openshift-ansible-openshift-ansible-3.11.764-1/ openshift-ansible

[root@master ~]# cd openshift-ansible

[root@master openshift-ansible]# cp roles/openshift_repos/templates/CentOS-OpenShift-Origin311.repo.j2{,-bak}

[root@master openshift-ansible]# vim roles/openshift_repos/templates/CentOS-OpenShift-Origin311.repo.j2

[centos-openshift-origin311]

name=CentOS OpenShift Origin

# Switch to the Aliyun mirror

baseurl=http://mirrors.aliyun.com/centos/7/paas/x86_64/openshift-origin311/

enabled=1

gpgcheck=1

gpgkey=file:///etc/pki/rpm-gpg/RPM-GPG-KEY-CentOS-SIG-PaaS

# Write the inventory file

[root@master openshift-ansible]# vim /etc/ansible/hosts

# Create an OSEv3 group that contains the masters and nodes groups

[OSEv3:children]

# The three standard roles configured for now

masters

nodes

etcd

# Set variables common for all OSEv3 hosts

[OSEv3:vars]

# SSH user, this user should allow ssh based auth without requiring a password

ansible_ssh_user=root

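# Use the origin community edition

openshift_deployment_type=origin

# Version to install

openshift_release=3.11

#openshift_node_group_name= master

# Default subdomain; applications are accessed through this domain. Without a DNS server, add the names to the local hosts file manually

openshift_master_default_subdomain=master

# Disable the disk, memory, and image checks

openshift_disable_check=disk_availability,docker_storage,memory_availability,docker_image_availability

#disk_availability: fails because more than 40GB of free disk space is recommended on the master. The test environment cannot meet this, so the check is skipped.

#memory_availability: fails because 16GB of memory is recommended for the master and 8GB for nodes. The test environment cannot meet this, so the check is skipped.

#docker_image_availability: fails because several required images are not found. Skipped; pull them as needed once the cluster is installed.

#docker_storage: fails because a dedicated disk is recommended for image storage. Skipped here; docker's default image storage is used.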

# uncomment the following to enable htpasswd authentication; defaults to DenyAllPasswordIdentityProvider

openshift_master_identity_providers=[{'name':'htpasswd_auth','login':'true','challenge':'true','kind':'HTPasswdPasswordIdentityProvider','filename':'/etc/origin/master/htpasswd'}]

# NTP time synchronization

openshift_clock_enabled=true

# Per-node pod quota

openshift_node_kubelet_args={'pods-per-core': ['10']}

# host group for masters

[masters]

master openshift_schedulable=True

# host group for nodes, includes region info

[nodes]

master openshift_node_labels="{'region': 'infra'}"

node1 openshift_node_labels="{'region': 'infra', 'zone': 'default'}"

node2 openshift_node_labels="{'region': 'infra', 'zone': 'default'}"

master openshift_node_group_name='node-config-master'

node1 openshift_node_group_name='node-config-compute'

node2 openshift_node_group_name='node-config-compute'

[etcd]

master

Pre-deployment check

If this step reports errors, fix them and rerun it until it passes.
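Many failures at this stage trace back to the inventory itself, so a quick sanity check before running the playbook can save a rerun. The sketch below lists the hosts of one inventory section and verifies that every host in [masters] also appears in [nodes], which is my understanding of what the 3.11 playbooks expect. `inventory_hosts` and `masters_in_nodes` are hypothetical helper names, and the parser only handles the simple INI layout used in this post.

```shell
# Print the unique host names listed in one section of an INI-style inventory.
# $1: inventory file, $2: section name (for example: masters)
inventory_hosts() {
    awk -v want="[$2]" '
        /^\[/ { in_section = ($1 == want); next }      # section header
        in_section && NF && $1 !~ /^#/ { print $1 }    # host line, not a comment
    ' "$1" | sort -u
}

# Succeeds if every host in [masters] is also listed in [nodes].
masters_in_nodes() {
    for h in $(inventory_hosts "$1" masters); do
        if ! inventory_hosts "$1" nodes | grep -qxF "$h"; then
            echo "missing from [nodes]: $h"
            return 1
        fi
    done
}
```

For example, `inventory_hosts /etc/ansible/hosts etcd` should print `master` for the inventory above, and `masters_in_nodes /etc/ansible/hosts` should return success.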

[root@master openshift-ansible]# cd

[root@master ~]# ansible-playbook -i /etc/ansible/hosts /root/openshift-ansible/playbooks/prerequisites.yml

....

....

PLAY RECAP *************************************************************************************************************************

localhost : ok=11 changed=0 unreachable=0 failed=0 skipped=5 rescued=0 ignored=0

master : ok=94 changed=22 unreachable=0 failed=0 skipped=89 rescued=0 ignored=0

node1 : ok=65 changed=21 unreachable=0 failed=0 skipped=71 rescued=0 ignored=0

node2 : ok=65 changed=21 unreachable=0 failed=0 skipped=71 rescued=0 ignored=0

INSTALLER STATUS *******************************************************************************************************************

Initialization : Complete (0:01:33)

Deployment

# Create the password file in advance, otherwise the playbook fails later

[root@master ~]# mkdir -p /etc/origin/master/

[root@master ~]# touch /etc/origin/master/htpasswd

[root@master ~]# ansible-playbook -i /etc/ansible/hosts /root/openshift-ansible/playbooks/deploy_cluster.yml

....

....

PLAY RECAP *************************************************************************************************************************

localhost : ok=11 changed=0 unreachable=0 failed=0 skipped=5 rescued=0 ignored=0

master : ok=692 changed=221 unreachable=0 failed=0 skipped=1061 rescued=0 ignored=0

node1 : ok=123 changed=19 unreachable=0 failed=0 skipped=166 rescued=0 ignored=0

node2 : ok=123 changed=19 unreachable=0 failed=0 skipped=166 rescued=0 ignored=0

INSTALLER STATUS *******************************************************************************************************************

Initialization : Complete (0:00:11)

Health Check : Complete (0:00:04)

Node Bootstrap Preparation : Complete (0:02:13)

etcd Install : Complete (0:00:12)

Master Install : Complete (0:03:15)

Master Additional Install : Complete (0:00:19)

Node Join : Complete (0:03:23)

Hosted Install : Complete (0:00:29)

Cluster Monitoring Operator : Complete (0:04:40)

Web Console Install : Complete (0:02:19)

Console Install : Complete (0:01:33)

Service Catalog Install : Complete (0:04:53)
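With output this long, the PLAY RECAP is the quickest place to confirm that nothing failed. As a sketch, the helper below reads saved playbook output and prints the hosts whose `failed` or `unreachable` counter is non-zero. `recap_failures` is a hypothetical name, and it assumes the recap line format shown above.

```shell
# Read ansible-playbook output on stdin and print every host whose
# PLAY RECAP line reports failed > 0 or unreachable > 0.
recap_failures() {
    awk '/unreachable=[0-9]+/ && /failed=[0-9]+/ {
        bad = 0
        for (i = 2; i <= NF; i++) {
            split($i, kv, "=")            # kv[1] = counter name, kv[2] = value
            if ((kv[1] == "failed" || kv[1] == "unreachable") && (kv[2] + 0) > 0)
                bad = 1
        }
        if (bad) print $1                 # $1 is the host name
    }'
}
```

One way to use it is to save the run with `ansible-playbook ... | tee deploy.log` and then call `recap_failures < deploy.log`; empty output means every host finished cleanly.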

# Check the installation result

[root@master ~]# oc get nodes

NAME STATUS ROLES AGE VERSION

master.lab.example.com Ready master 1h v1.11.0+d4cacc0

node1.lab.example.com Ready compute 1h v1.11.0+d4cacc0

node2.lab.example.com Ready infra 1h v1.11.0+d4cacc0

Note: the roles of the two nodes are not right. node2 is also a compute node, so it needs the compute label

[root@master ~]# oc label node node2.lab.example.com node-role.kubernetes.io/compute=true

[root@master ~]# oc get nodes

NAME STATUS ROLES AGE VERSION

master.lab.example.com Ready master 46m v1.11.0+d4cacc0

node1.lab.example.com Ready compute 42m v1.11.0+d4cacc0

node2.lab.example.com Ready compute,infra 42m v1.11.0+d4cacc0
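When scripting this check, it is handy to list only the nodes that are not healthy. A minimal sketch, assuming the column layout of `oc get nodes` shown above; `not_ready_nodes` is a hypothetical helper, and it deliberately treats any status other than exactly `Ready` (for example `Ready,SchedulingDisabled`) as worth reporting.

```shell
# Read `oc get nodes --no-headers` output on stdin and print the names of
# nodes whose STATUS column is not exactly "Ready".
not_ready_nodes() {
    awk '$2 != "Ready" { print $1 }'
}
```

Usage: `oc get nodes --no-headers | not_ready_nodes`. No output means all nodes are Ready.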

# View pods

[root@master ~]# oc get pods

NAME READY STATUS RESTARTS AGE

docker-registry-1-t259n 1/1 Running 1 1h

registry-console-1-4bw98 1/1 Running 1 1h

router-6-rcxwv 1/1 Running 0 8m

# View services

[root@master ~]# oc get svc

NAME TYPE CLUSTER-IP EXTERNAL-IP PORT(S) AGE

docker-registry ClusterIP 172.30.162.101 <none> 5000/TCP 1h

kubernetes ClusterIP 172.30.0.1 <none> 443/TCP,53/UDP,53/TCP 2h

registry-console ClusterIP 172.30.20.229 <none> 9000/TCP 1h

router ClusterIP 172.30.220.154 <none> 80/TCP,443/TCP,1936/TCP 1h

# View routes

[root@master ~]# oc get route

NAME HOST/PORT PATH SERVICES PORT TERMINATION WILDCARD

docker-registry docker-registry-default.master docker-registry <all> passthrough None

registry-console registry-console-default.master registry-console <all> passthrough None

# View deployment configs (dc)

[root@master ~]# oc get dc

NAME REVISION DESIRED CURRENT TRIGGERED BY

docker-registry 1 1 1 config

registry-console 1 1 1 config

router 6 1 1 config

# View the version

[root@master ~]# oc version

oc v3.11.0+62803d0-1

kubernetes v1.11.0+d4cacc0

features: Basic-Auth GSSAPI Kerberos SPNEGO

Server https://master.lab.example.com:8443

openshift v3.11.0+07ae5a0-606

kubernetes v1.11.0+d4cacc0

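Note: /etc/origin/master/htpasswd is still empty at this point, so create an administrator account and regular accounts first

# Log in to the cluster as the system administrator

[root@master ~]# oc login -u system:admin

[root@master ~]# oc whoami

system:admin

# Create the administrator account

Format: htpasswd -b /etc/origin/master/htpasswd <username> <password>

[root@master ~]# htpasswd -b /etc/origin/master/htpasswd admin redhat

# Grant the role (cluster-admin is a very broad, cluster-wide permission; use it with care)

[root@master ~]# oc adm policy add-cluster-role-to-user cluster-admin admin

# Add a role to a user in a project (the project must already exist; webapp is created further below)

Format: oc adm policy add-role-to-user <role> <username> -n <project>

[root@master ~]# oc adm policy add-role-to-user admin admin -n webapp

# Log in to the cluster as the new user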

[root@master ~]# oc login -u admin -p redhat

Login successful.

You don't have any projects. You can try to create a new project, by running

oc new-project <projectname>

# Confirm the current user

[root@master ~]# oc whoami

admin

# As the administrator, create a project and grant it to the admin user

[root@master ~]# oc login -u system:admin

# Create the project

[root@master ~]# oc new-project webapp

==================================================

# Delete a user

[root@master ~]# vim /etc/origin/master/htpasswd # remove the admin entry

[root@master ~]# oc delete user admin

==================================================

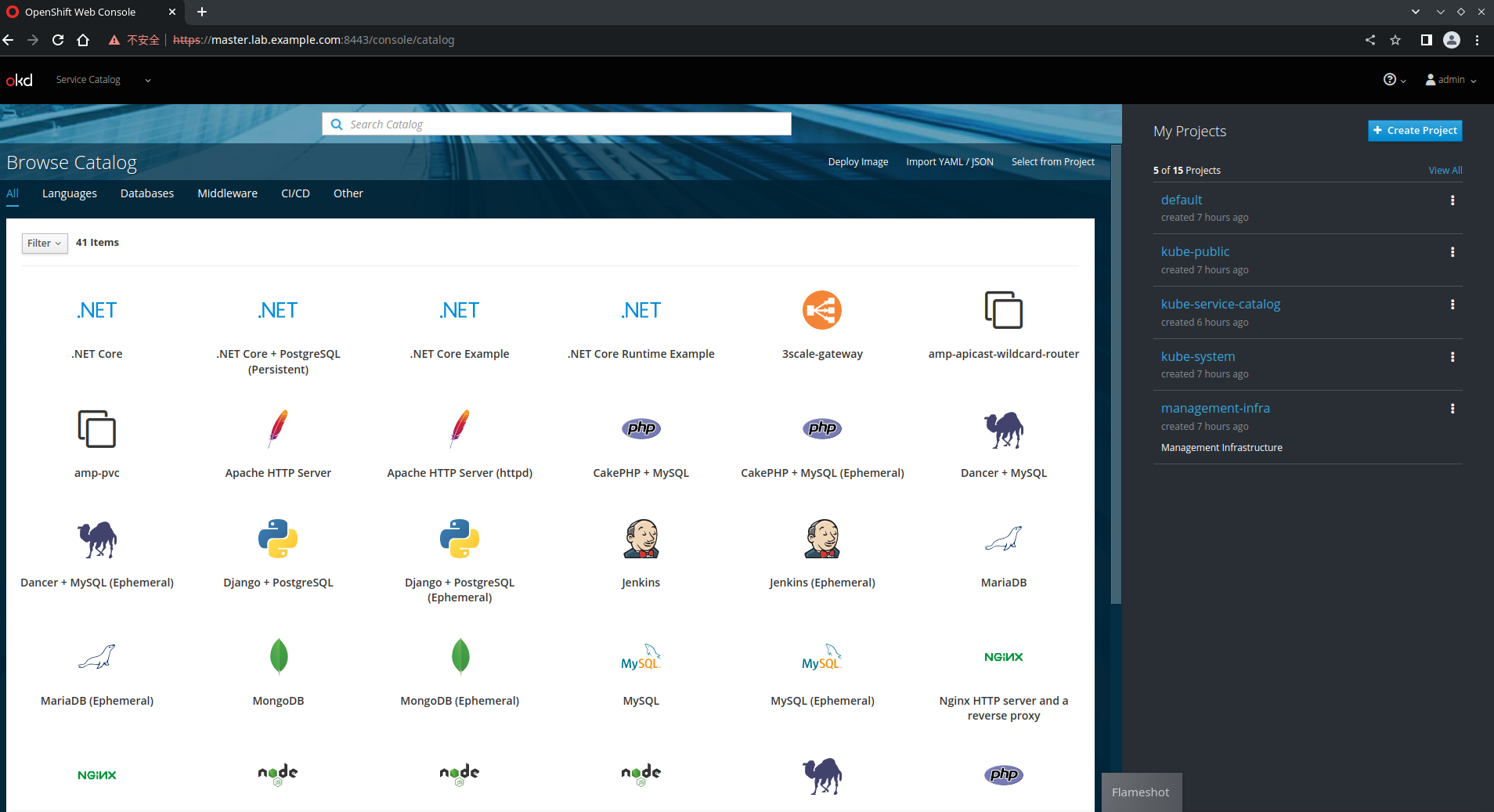

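Browser verification

Credentials: admin/redhat (the account created above)

Create a project as the admin user

Note: system:admin is a built-in user with the highest privileges. It cannot log in remotely; it can only log in from within the cluster, and it has no password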

[root@master ~]# oc whoami

system:admin

[root@master ~]# oc login -u admin -p redhat

[root@master ~]# oc whoami

admin # also named admin, but completely different from the system:admin user above

# Create a project (the equivalent of a namespace in K8S)

[root@master ~]# oc new-project test

Now using project "test" on server "https://master.lab.example.com:8443".

You can add applications to this project with the 'new-app' command. For example, try:

oc new-app centos/ruby-25-centos7~https://github.com/sclorg/ruby-ex.git

to build a new example application in Ruby.

# Create an application (following the hint printed by the previous step)

Format: oc new-app <image>:[tag]~<source code> [--name <container_name>]

[root@master ~]# oc new-app centos/ruby-25-centos7~https://github.com/sclorg/ruby-ex.git

....

....

Tags: builder, ruby, ruby25, rh-ruby25

* An image stream tag will be created as "ruby-25-centos7:latest" that will track the source image

* A source build using source code from https://github.com/sclorg/ruby-ex.git will be created

* The resulting image will be pushed to image stream tag "ruby-ex:latest"

* Every time "ruby-25-centos7:latest" changes a new build will be triggered

* This image will be deployed in deployment config "ruby-ex"

* Port 8080/tcp will be load balanced by service "ruby-ex"

* Other containers can access this service through the hostname "ruby-ex"

--> Creating resources ...

imagestream.image.openshift.io "ruby-25-centos7" created

imagestream.image.openshift.io "ruby-ex" created

buildconfig.build.openshift.io "ruby-ex" created

deploymentconfig.apps.openshift.io "ruby-ex" created

service "ruby-ex" created

--> Success

Build scheduled, use 'oc logs -f bc/ruby-ex' to track its progress.

Application is not exposed. You can expose services to the outside world by executing one or more of the commands below:

'oc expose svc/ruby-ex'

Run 'oc status' to view your app.

# Check the status of each component

[root@master ~]# oc get svc

NAME TYPE CLUSTER-IP EXTERNAL-IP PORT(S) AGE

ruby-ex ClusterIP 172.30.15.243 <none> 8080/TCP 2m

[root@master ~]# oc get pod

ruby-ex-1-build 0/1 Init:Error 0 3m # I did not fix this error; it is left in only to illustrate the workflow

[root@master ~]# oc logs -f po/ruby-ex-1-build

[root@master ~]# oc describe pod

# View all events

[root@master ~]# oc get events

======================= Deleting an application ===========================

[root@master ~]# oc get all # list all bc, dc, is, svc

[root@master ~]# oc delete deploymentconfig test111

[root@master ~]# oc delete buildconfig test111

[root@master ~]# oc delete build test111-1 # test111-1 is the build name

[root@master ~]# oc delete build test-1

[root@master ~]# oc delete imagestreams test111

[root@master ~]# oc delete svc test111

Or (the single command below is equivalent to the six above)

[root@master ~]# oc delete all -l app=test111

=============================================================

Deploying an application

As an example, deploy nginx in the default namespace

Create the YAML file

oc create deployment web --image=nginx:1.14 --dry-run -o yaml > nginx.yaml

# nginx.yaml contains the following

apiVersion: apps/v1
kind: Deployment
metadata:
  creationTimestamp: null
  labels:
    app: web
  name: web
spec:
  replicas: 1
  selector:
    matchLabels:
      app: web
  strategy: {}
  template:
    metadata:
      creationTimestamp: null
      labels:
        app: web
    spec:
      containers:
      - image: nginx:1.14
        name: nginx
        resources: {}
status: {}

oc apply -f nginx.yaml

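At this point the container fails to start. Running docker logs against the container on its node shows a series of Permission Denied errors: OpenShift does not grant root privileges by default, and this nginx image needs to run as root

Reference: https://stackoverflow.com/questions/42363105/permission-denied-mkdir-in-container-on-openshift

Grant the anyuid SCC (Security Context Constraints) to the default service account of the current project, then redeploy. SCCs are applied when a pod is admitted, so the failing pod has to be recreated before the change takes effect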

oc adm policy add-scc-to-user anyuid -z default

oc apply -f nginx.yaml
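To confirm which SCC a pod was actually admitted under, the `openshift.io/scc` annotation on the pod can be read back. A minimal sketch; `pod_scc` is a hypothetical helper that simply picks the annotation out of the YAML, so it relies on the plain `key: value` layout that `oc get -o yaml` produces.

```shell
# Read a pod manifest (YAML) on stdin and print the value of its
# openshift.io/scc annotation, i.e. the SCC the pod was admitted under.
pod_scc() {
    awk '$1 == "openshift.io/scc:" { print $2; exit }'
}
```

Usage: `oc get pod <pod-name> -o yaml | pod_scc`. A pod created before the SCC was granted typically still shows `restricted`; a pod recreated afterwards should show `anyuid`.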

Common oc commands

# List all nodes in the cluster

oc get nodes

# Show the details of a node, including the pods running on it

oc describe node node-name

# List the pods in a namespace

oc get pods -n namespace-name

# List all resources in the current project

oc get all

# Show which project you are currently in

oc status

# List the pods in a namespace with more detail

oc get pods -o wide -n namespace-name

# Show the details of a pod

oc describe pod pod-name -n namespace-name

# List the limitranges of a namespace

oc get limitrange -n namespace-name

# Show the details of a limitrange

oc describe limitrange limitrange.config -n namespace-name

# Edit a limitrange

oc edit limitrange limitrange.config -n namespace-name

# Switch to a project

oc project project-name

# Grant anyuid to the default service account of the current project so containers can run as root; usually needed to install or run certain software

oc adm policy add-scc-to-user anyuid -z default

# Remove anyuid from the default service account

oc adm policy remove-scc-from-user anyuid -z default

# List the pods in the current project

oc get pod

# List the services in the current project

oc get service

# List the endpoints in the current project

oc get endpoints

# Restart a pod (delete it and let its controller recreate it)

oc delete pod pod-name -n namespace-name

# View the revision history of a dc

oc rollout history DeploymentConfig/dc-name

# Roll back to revision 5

oc rollout undo DeploymentConfig/dc-name --to-revision=5

# Set the replica count to 3

oc scale dc dc-name --replicas=3 -n namespace

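# Configure autoscaling with a minimum of 2 and a maximum of 10 replicas

oc autoscale dc dc-name --min=2 --max=10 --cpu-percent=80

# List SCCs

oc get scc

# Show the details of anyuid; the Users field lists the accounts that have anyuid enabled

oc describe scc anyuid

# View cluster roles and their permissions

oc describe clusterrole.rbac

# View cluster role bindings (users and groups bound to roles)

oc describe clusterrolebinding.rbac

# Make username a cluster-admin

oc adm policy add-cluster-role-to-user cluster-admin username

# List the routes in all namespaces

oc get routes --all-namespaces

# View pod logs

oc logs -f pod-name

# Find the container ID of a pod

docker ps -a|grep pod-name

# Open a shell in the container

docker exec -it containerID /bin/sh

# Create a project

oc new-project my-project

# View the status of the current project

oc status

# List the API resources supported by the server

oc api-resources

# Collect state information about the cluster (OpenShift 4.x)

oc adm must-gather

# View pod resource usage

oc adm top pods

# View node resource usage

oc adm top node

# View image usage

oc adm top images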

# Mark node1 as SchedulingDisabled

oc adm cordon node1

# Mark node1 as unschedulable

oc adm manage-node node1 --schedulable=false

# Mark node1 as schedulable

oc adm manage-node node1 --schedulable=true

# Put node1 into maintenance mode (evict its pods)

oc adm drain node1

# Delete a node

oc delete node node-name

# List CSRs (certificate signing requests)

oc get csr

# Approve or deny a CSR

oc adm certificate { approve | deny } csr-name

# Approve all CSRs

oc get csr -o name | xargs oc adm certificate approve

# Enable automatic CSR approval (the variable must sit in the [OSEv3:vars] section of the inventory)

sed -i '/^\[OSEv3:vars\]/a openshift_master_bootstrap_auto_approve=true' /etc/ansible/hosts
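Approving every CSR also resubmits requests that are already approved. A narrower variant is to select only the pending ones first. This is a sketch: `pending_csrs` is a hypothetical helper, and it assumes the usual `oc get csr` table where the last column (CONDITION) reads `Pending` for unapproved requests.

```shell
# Read `oc get csr` output on stdin (header line included) and print the
# names of the requests whose CONDITION column is "Pending".
pending_csrs() {
    awk 'NR > 1 && $NF == "Pending" { print $1 }'
}
```

Usage: `oc get csr | pending_csrs | xargs -r oc adm certificate approve`. The `-r` flag of GNU xargs skips the approve call when nothing is pending.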

# Get a project

oc get project <projectname>

# Show project details

oc describe project projectname

# View the YAML of a pod

oc get pod pod-name -o yaml

# Show node labels

oc get nodes --show-labels

# Label a node; a pod that carries a matching nodeSelector (added under the second-level spec, i.e. the pod template's spec) is scheduled onto that node

oc label nodes node-name label-key=label-value

# Update the value of a label

oc label nodes node-name key=new-value --overwrite

# Remove a label by key

oc label nodes node-name key-

# List all pods running on a node

oc adm manage-node node-name --list-pods

# Mark a node as unschedulable

oc adm manage-node node-name --schedulable=false

# Log in to OpenShift from the command line; the token is the one generated for your own account when you log in to the web console

oc login --token=iz56jscHZp9mSN3kHzjayaEnNo0DMI_nRlaiJyFmN74 --server=https://console.qa.c.sm.net:8443

# Get the token of the default service account

TOKEN=$(oc get secret $(oc get serviceaccount default -o jsonpath='{.secrets[0].name}') -o jsonpath='{.data.token}' | base64 --decode )

# Get the API server address

APISERVER=$(oc config view --minify -o jsonpath='{.clusters[0].cluster.server}')

# Call the API from the command line

curl $APISERVER/api --header "Authorization: Bearer $TOKEN" --insecure

# Open a shell in a pod

oc rsh pod-name

# Show the server you are currently logged in to

oc whoami --show-server

# Show the current user

oc whoami

# List dc (deployment configs)

oc get dc -n namespace

# List deployments

oc get deploy -n namespace

# Edit the YAML of a deployment

oc edit deploy/deployname -o yaml -n namespace

# List cronjobs

oc get cronjob

# Edit a cronjob

oc edit cronjob/cronjob-name -n namespace-name

# Show the details of a configmap

oc describe cm/configmap-name -n namespace-name

# List configmaps

oc get configmap -n namespace-name

# List configmaps (short resource name)

oc get cm -n namespace-name

# View the cluster's pod network CIDR plan

cat /etc/origin/master/master-config.yaml|grep cidr

# Edit the YAML of a VM

oc edit vm appmngr54321-poc-msltoibh -n appmngr54321-poc -o yaml

# Get the token of the service account sa-name

oc serviceaccounts get-token sa-name

# Log in with a token

oc login url --token=token

# Scale the number of pod replicas

oc scale deployment deployment-name --replicas 5

# View the client config

oc config view

# List API versions

oc api-versions

# List API resources

oc api-resources

# List HPAs

oc get hpa --all-namespaces

# Describe an HPA; shows Metrics and Events

oc describe hpa/hpaname -n namespace

# Create a service account

oc create serviceaccount caller

# Grant it cluster-admin

oc adm policy add-cluster-role-to-user cluster-admin -z caller

# Get the service account token

oc serviceaccounts get-token caller

# Show the location of the kubeconfig file

echo $KUBECONFIG

# Filter configmaps by label selector

oc get cm --all-namespaces -l app=deviation

# Show pod CPU/memory usage (applies to OpenShift 4.x)

oc describe PodMetrics podname

# Show short names, API groups, and verbs

oc api-resources -o wide

# Delete by selector

oc delete pod --selector logging-infra=fluentd

# Watch the status of pods in a namespace

oc get pods -n logger -w

# View the definition of a resource field

oc explain pv.spec

# List MutatingWebhookConfigurations

oc get MutatingWebhookConfiguration

# List ValidatingWebhookConfigurations

oc get ValidatingWebhookConfiguration

# Inject the CA bundle into a validating webhook

oc annotate validatingwebhookconfigurations <validating_webhook_name> service.beta.openshift.io/inject-cabundle=true

# Inject the CA bundle into a mutating webhook

oc annotate mutatingwebhookconfigurations <mutating_webhook_name> service.beta.openshift.io/inject-cabundle=true

# Query PVs by label

oc get pv --selector='path=testforocp'

# Query by a custom annotation and return the configmap names

oc get cm --all-namespaces -o=jsonpath='{.items[?(@.metadata.annotations.cpuUsage=="0")].metadata.name}'

# Dump the dev configmap from every namespace that has a configmap labeled app=dev

ns=$(oc get cm --selector='app=dev' --all-namespaces|awk '{print $1}'|grep -v NAMESPACE)

for i in $ns;do oc get cm dev -oyaml -n $i >> /tmp/test.txt; done;
