asyncio并发编程

asyncio 是干什么的?

- 异步网络操作

- 并发

- 协程

python3.0时代,标准库里的异步网络模块:select(非常底层) python3.0时代,第三方异步网络库:Tornado python3.4时代,asyncio:支持TCP,子进程

现在的asyncio,有了很多的模块已经在支持:aiohttp,aiodns,aioredis等等 https://github.com/aio-libs 这里列出了已经支持的内容,并在持续更新

当然到目前为止实现协程的不仅仅只有asyncio,tornado和gevent都实现了类似功能

关于asyncio的一些关键字的说明:

-

event_loop 事件循环:程序开启一个无限循环,把一些函数注册到事件循环上,当满足事件发生的时候,调用相应的协程函数

-

coroutine 协程:协程对象,指一个使用async关键字定义的函数,它的调用不会立即执行函数,而是会返回一个协程对象。协程对象需要注册到事件循环,由事件循环调用。

-

task 任务:一个协程对象就是一个原生可以挂起的函数,任务则是对协程进一步封装,其中包含了任务的各种状态

-

future: 代表将来执行或没有执行的任务的结果。它和task上没有本质上的区别

-

async/await 关键字:python3.5用于定义协程的关键字,async定义一个协程,await用于挂起阻塞的异步调用接口。

看了上面这些关键字,你可能扭头就走了,其实一开始了解和研究asyncio这个模块有种抵触,自己也不知道为啥,这也导致很长一段时间,这个模块自己也基本就没有关注和使用,但是随着工作上用python遇到各种性能问题的时候,自己告诉自己还是要好好学习学习这个模块。

一概述:

1、事件循环+回调(驱动生成器)+epoll(IO多路复用)

2、asyncio是Python用于解决异步io编程的一套解决方案

3、基于异步io实现的库(或框架)tornado、gevent、twisted(scrapy,django、channels)

4、torando(实现web服务器),django+flask(uwsgi,gunicorn+nginx)

5、tornado可以直接部署,nginx+tornado

二、事件循环

案例一:

#使用asyncio import asyncio import time async def get_html(url): print("start get url") await asyncio.sleep(2) print("end get url") if __name__ == "__main__": start_time = time.time() loop = asyncio.get_event_loop() # 执行单个协程 # loop.run_until_complete(get_html("http://www.imooc.com")) # 批量执行任务 # 创建任务列表 tasks = [get_html("http://www.imooc.com") for i in range(10)] loop.run_until_complete(asyncio.wait(tasks)) loop.close() print("执行事件:{}".format(time.time() - start_time))

1、asyncio.ensure_future()等价于loop.create_task

2、task是future的一个子类

3、一个线程只有一个event loop

4、asyncio.ensure_future()虽然没有传loop但是源码里做了get_event_loop()操作从而实现了与loop的关联,会将任务注册到任务队列里

import asyncio import time from functools import partial async def get_html(url): print("start get url") await asyncio.sleep(2) print("end get url") return "bobby" # 【注意】传参url必须放在前面(第一个形参) def callback(url,future): print("执行完任务后执行;url={}".format(url)) if __name__ == "__main__": start_time = time.time() loop = asyncio.get_event_loop() # 获取future,如果是单个task或者future则直接作为参数,如果是列表,则需要加asyncio.wait task = asyncio.ensure_future(get_html("http://www.imooc.com")) # task = loop.create_task(get_html("http://www.imooc.com")) # 执行完task后再执行的回调函数 # task.add_done_callback(callback) # 传递回调函数参数 task.add_done_callback(partial(callback,"http://www.imooc.com")) loop.run_until_complete(task) print("执行事件:{}".format(time.time() - start_time)) print(task.result()) loop.close()

5、wait与gather的区别:

a)wait是等待所有任务执行完成后才会执行下面的代码【loop.run_until_complete(asyncio.wait(tasks))】

b)gather更加高层(height-level)

1、可以分组

#使用asyncio import asyncio import time async def get_html(url): print("start get url={}".format(url)) await asyncio.sleep(2) print("end get url") if __name__ == "__main__": start_time = time.time() loop = asyncio.get_event_loop() # 执行单个协程 # loop.run_until_complete(get_html("http://www.imooc.com")) # 批量执行任务,创建任务列表 tasks = [get_html("http://www.imooc.com") for i in range(10)] # loop.run_until_complete(asyncio.wait(tasks)) # gather实现跟wait一样的功能,但是切记前面有* # loop.run_until_complete(asyncio.gather(*tasks)) # 分组实现 # 第一种实现 # group1 = [get_html("http://www.projectedu.com") for i in range(2)] # group2 = [get_html("http://www.imooc.com") for i in range(2)] # loop.run_until_complete(asyncio.gather(*group1,*group2)) # 第二种实现 group1 = [get_html("http://www.projectedu.com") for i in range(2)] group2 = [get_html("http://www.imooc.com") for i in range(2)] group1 = asyncio.gather(*group1) group2 = asyncio.gather(*group2) # 任务取消 # group2.cancel() loop.run_until_complete(asyncio.gather(group1,group2)) loop.close() print("执行事件:{}".format(time.time() - start_time))

6、loop.run_forever()

# 1. loop会被放在future中 # 2. 取消future(task) # import asyncio import time async def get_html(sleep_times): print("waiting") await asyncio.sleep(sleep_times) print("done after {}s".format(sleep_times)) if __name__ == "__main__": task1 = get_html(2) task2 = get_html(3) task3 = get_html(3) tasks = [task1,task2,task3] loop = asyncio.get_event_loop() try: loop.run_until_complete(asyncio.wait(tasks)) except KeyboardInterrupt as e: all_tasks = asyncio.Task.all_tasks() for task in all_tasks: print("cancel task") print(task.cancel()) loop.stop() # 如果去掉这句则会抛异常 loop.run_forever() finally: loop.close()

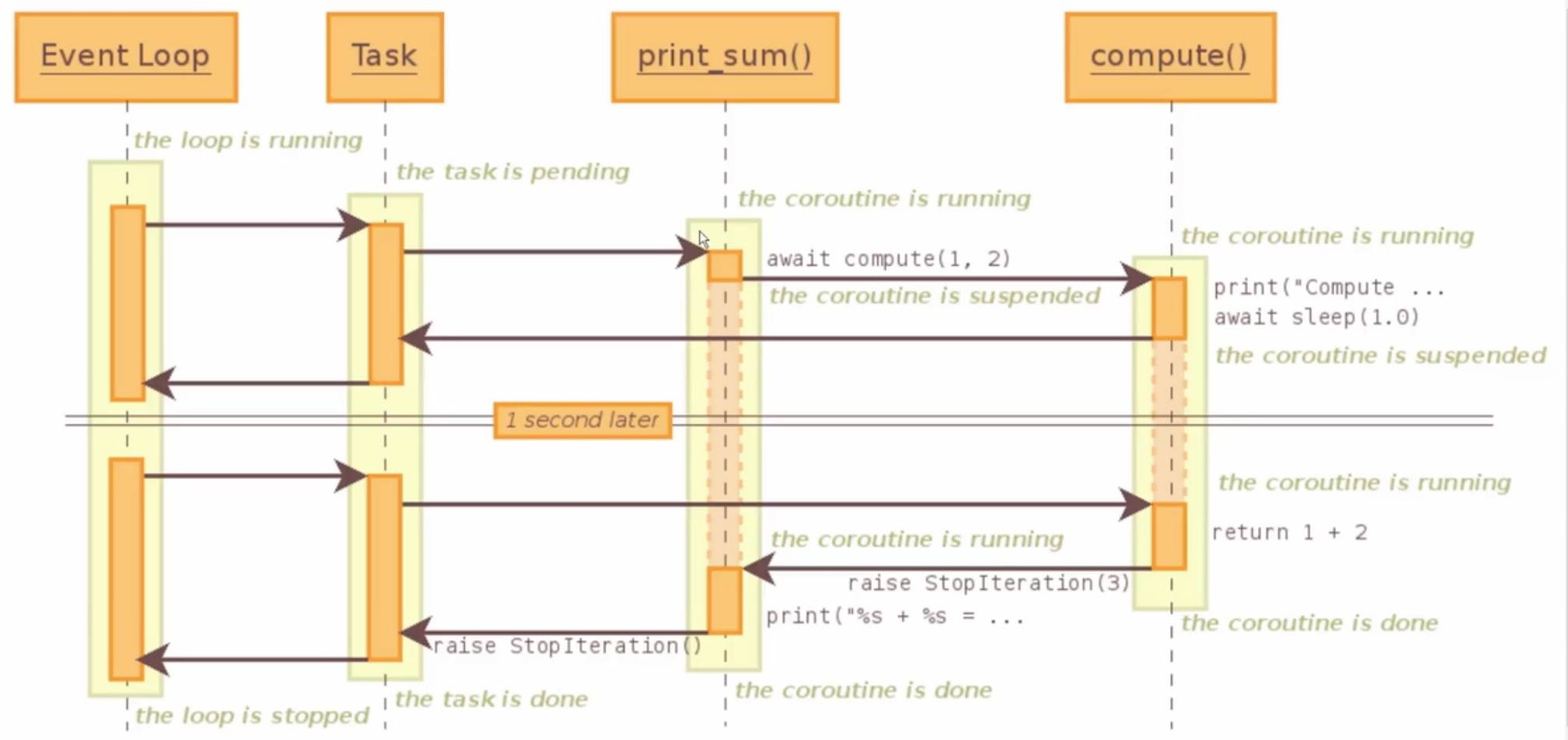

7、协程里调用协程:

import asyncio async def compute(x, y): print("Compute %s + %s..." %(x, y)) await asyncio.sleep(1.0) return x+y async def print_sum(x, y): result = await compute(x, y) print("%s + %s = %s" % (x, y, result)) loop = asyncio.get_event_loop() loop.run_until_complete(print_sum(1, 2)) loop.close()

8、call_soon,call_at,call_later,call_soon_threadsafe

import asyncio import time def callback(sleep_times): # time.sleep(sleep_times) print("sleep {} success".format(sleep_times)) # 停止掉当前的loop def stoploop(loop): loop.stop() if __name__ == "__main__": loop = asyncio.get_event_loop() # 在任务队列中即可执行 # 第一个参数是几秒钟执行函数,第二参数为函数名,第三参数是是实参 # call_later内部也是调用call_at方法 # loop.call_later(2, callback, 2) # loop.call_later(1, callback, 1) # loop.call_later(3, callback, 3) # call_at 第一个参数是loop里的当前时间+隔多少秒执行,并不是系统时间 now = loop.time() print(now) loop.call_at(now+2, callback, 2) loop.call_at(now+1, callback, 1) loop.call_at(now+3, callback, 3) # call_soon比call_later先执行 loop.call_soon(callback, 4) # loop.call_soon(stoploop, loop) # 因为不是协程,所有不能使用loop.run_until_complete(),所以使用run_forever,一直执行队列里的任务 loop.run_forever()

9、通过ThreadPoolExecutor(线程池)方式转换成协程方式来调用阻塞方式【跟单独利用线程池执行差不多,没有提高多少的效率】

#!/usr/bin/env python # -*- coding: utf-8 -*- # @File : thread_asyncio.py # @Author: Liugp # @Date : 2019/6/8 # @Desc : import time import asyncio from concurrent.futures import ThreadPoolExecutor import socket from urllib.parse import urlparse def get_url(url): # 通过socke请求html url = urlparse(url) host = url.netloc path = url.path if path == "": path = "/" # 建立socket链接 client =socket.socket(socket.AF_INET,socket.SOCK_STREAM) # client.setblocking(False) client.connect((host,80)) # 阻塞不会消耗CPU # 不停的询问链接是否建立好,需要while循环不停的去检查状态 # 做计算任务或者再次发起其他的连接请求 client.send("GET {} HTTP/1.1\r\nHost:{}\r\nConnection:close\r\n\r\n".format(path,host).encode('utf8')) data = b"" while True: d = client.recv(1024) if d: data += d else: break data = data.decode('utf8') # print(data) html_data = data.split("\r\n\r\n")[1] print(html_data) client.close() if __name__ == "__main__": start_time = time.time() loop = asyncio.get_event_loop() # 线程池 executor = ThreadPoolExecutor() tasks = [] for url in range(20): url = "http://shop.projectsedu.com/goods/{}/".format(url) # 把线程里的future包装成协程里的future,所以才能使用协程的方式实现 task = loop.run_in_executor(executor,get_url,url) tasks.append(task) loop.run_until_complete(asyncio.wait(tasks)) print("last time:{}".format(time.time()-start_time))

10、asyncio模拟http请求:

#!/usr/bin/env python # -*- coding: utf-8 -*- # @File : asyncio_http.py # @Author: Liugp # @Date : 2019/6/8 # @Desc : #asyncio 没有提供http协议的接口,只是提供了更底层的TCP,UDP接口;但是可以使用aiohttp import time import asyncio from urllib.parse import urlparse async def get_url(url): # 通过socke请求html url = urlparse(url) host = url.netloc path = url.path if path == "": path = "/" reader,writer = await asyncio.open_connection(host,80) writer.write("GET {} HTTP/1.1\r\nHost:{}\r\nConnection:close\r\n\r\n".format(path,host).encode('utf8')) all_lines = [] async for raw_line in reader: data = raw_line.decode("utf8") all_lines.append(data) html = "\n".join(all_lines) return html async def main(): tasks = [] for url in range(20): url = "http://shop.projectsedu.com/goods/{}/".format(url) tasks.append(asyncio.ensure_future(get_url(url))) for task in asyncio.as_completed(tasks): result = await task print(result.split("\r\n\n")[10]) if __name__ == "__main__": start_time = time.time() loop = asyncio.get_event_loop() loop.run_until_complete(main()) print("last time:{}".format(time.time()-start_time))

11、future和task

a)task会启动一个协程,会调用send(None)或者next()

b)task是future的子类

c)协程里的future更线程池里的future差不多;但是协程里是有区别的,就是会调用call_soon(),因为协程是单线程的,只是把callback放到loop队列里执行的,而线程则是直接执行代码

d)task是future和协程的桥梁

e)task还有就是等到抛出StopInteration时将value设置到result里面来【self.set_result(exc.value)】

12、asyncio同步与通信:

# 如果没有await操作会顺序执行,也就是说,一个任务执行完后才会执行下一个,但是不是按task顺序执行的,顺序不定 import asyncio import time total = 0 async def add(): global total for i in range(5): print("执行add:{}".format(i)) total += 1 async def desc(): global total for i in range(5): print("执行desc:{}".format(i)) total -= 1 async def desc2(): global total for i in range(5): print("执行desc2:{}".format(i)) total -= 1 if __name__ == "__main__": loop = asyncio.get_event_loop() tasks = [desc(),add(),desc2()] loop.run_until_complete(asyncio.wait(tasks)) print("最后结果:{}".format(total)) # 执行结果如下 """ 执行add:0 执行add:1 执行add:2 执行add:3 执行add:4 执行desc:0 执行desc:1 执行desc:2 执行desc:3 执行desc:4 执行desc2:0 执行desc2:1 执行desc2:2 执行desc2:3 执行desc2:4 最后结果:-5 """

a)asyncio锁机制(from asyncio import Lock)

import asyncio from asyncio import Lock,Queue import aiohttp cache = {} lock = Lock() queue = Queue() async def get_stuff(url="http://www.baidu.com"): # await lock.acquire() # with await lock: # 利用锁机制达到同步的机制,防止重复发请求 async with lock: if url in cache: return cache[url] stuff = await aiohttp.request('GET',url) cache[url] = stuff return stuff async def parse_stuff(): stuff = await get_stuff() async def use_stuff(): stuff = await get_stuff() tasks = [parse_stuff(),use_stuff()] loop = asyncio.get_event_loop() loop.run_until_complete(asyncio.wait(tasks)) loop.close()

13、不同线程的事件循环

很多时候,我们的事件循环用于注册协程,而有的协程需要动态的添加到事件循环中。一个简单的方式就是使用多线程。当前线程创建一个事件循环,然后在新建一个线程,在新线程中启动事件循环。当前线程不会被block

import asyncio from threading import Thread import time now = lambda :time.time() def start_loop(loop): asyncio.set_event_loop(loop) loop.run_forever() def more_work(x): print('More work {}'.format(x)) time.sleep(x) print('Finished more work {}'.format(x)) start = now() new_loop = asyncio.new_event_loop() t = Thread(target=start_loop, args=(new_loop,)) t.start() print('TIME: {}'.format(time.time() - start)) new_loop.call_soon_threadsafe(more_work, 6) new_loop.call_soon_threadsafe(more_work, 3)

14、aiohttp实现高并发编程:

import asyncio import re import aiohttp import aiomysql from pyquery import PyQuery stopping = False start_url = "http://www.jobbole.com/" waitting_urls = [] seen_urls = set() # 控制并发数 sem = asyncio.Semaphore(1) async def fetch(url, session): async with sem: try: async with session.get(url) as resp: print("url status:{}".format(resp.status)) if resp.status in [200,201]: data = await resp.text() return data except Exception as e: print(e) def extract_urls(html): urls = [] pq = PyQuery(html) for link in pq.items("a"): url = link.attr("href") if url and url.startswith("http") and url not in seen_urls: urls.append(url) waitting_urls.append(url) return urls async def init_urls(url, session): html = await fetch(url,session) seen_urls.add(url) extract_urls(html) async def article_handler(url, session, pool): # 获取文章详情并解析入库 html = await fetch(url, session) seen_urls.add(url) extract_urls(html) pq = PyQuery(html) title = pq("title").text() async with pool.acquire() as conn: async with conn.cursor() as cur: await cur.execute("SELECT 42;") insert_sql = "insert into article_test (title) values ('{}')".format(title) await cur.execute(insert_sql) async def consumer(pool): async with aiohttp.ClientSession() as session: while not stopping: if 0 == len(waitting_urls): await asyncio.sleep(0.5) continue url = waitting_urls.pop() print("start get url:{}".format(url)) if re.match("http://.*?jobbole.com/\d+/", url): if url not in seen_urls: asyncio.ensure_future(article_handler(url,session,pool)) else: if url not in seen_urls: asyncio.ensure_future(init_urls(url,session)) async def main(loop): # 等待mysql连接建立好 # 注意charset最好设置,要不然有中文时可能会不添加数据,还有autocommit也最好设置True pool = await aiomysql.create_pool(host='127.0.0.1',port=3306, user='root',password='', db='aiomysql_test',loop=loop, charset="utf8",autocommit=True ) async with aiohttp.ClientSession() as session: html = await fetch(start_url, session) seen_urls.add(start_url) seen_urls.add(html) asyncio.ensure_future(consumer(pool)) if __name__ == "__main__": loop = asyncio.get_event_loop() asyncio.ensure_future(main(loop)) loop.run_forever()

15、aiohttp + 优先级队列的使用

#!/usr/bin/env python # -*- coding: utf-8 -*- # @File : aiohttp_queue.py # @Author: Liugp # @Date : 2019/7/4 # @Desc : import asyncio import random import aiohttp NUMBERS = random.sample(range(100), 7) URL = 'http://httpbin.org/get?a={}' sema = asyncio.Semaphore(3) async def fetch_async(a): async with aiohttp.request('GET', URL.format(a)) as r: data = await r.json() return data['args']['a'] async def collect_result(a): with (await sema): return await fetch_async(a) async def produce(queue): for num in NUMBERS: print(f'producing {num}') item = (num, num) await queue.put(item) async def consume(queue): while 1: item = await queue.get() num = item[0] rs = await collect_result(num) print(f'consuming {rs}...') queue.task_done() async def run(): queue = asyncio.PriorityQueue() consumer = asyncio.ensure_future(consume(queue)) await produce(queue) await queue.join() consumer.cancel() if __name__ == '__main__': loop = asyncio.get_event_loop() loop.run_until_complete(run()) loop.close()