Installing a Kubernetes v1.15.5 Cluster with kubeadm

1. Environment Preparation

1.1 Server Information

| Server IP | OS | CPU | Memory | Disk | Hostname | Node role |
|---|---|---|---|---|---|---|
| 10.4.148.225 | CentOS 7.7 | 8 cores | 8 GB | 100 GB | k8s-master | master |
| 10.4.148.226 | CentOS 7.7 | 4 cores | 4 GB | 100 GB | k8s-node01 | node01 |
| 10.4.148.227 | CentOS 7.7 | 4 cores | 4 GB | 100 GB | k8s-node02 | node02 |
| 10.4.148.228 | CentOS 7.7 | 4 cores | 4 GB | 100 GB | k8s-node03 | node03 |

1.2 Set the Hostnames

# master

> hostnamectl set-hostname k8s-master

# node01

> hostnamectl set-hostname k8s-node01

# node02

> hostnamectl set-hostname k8s-node02

# node03

> hostnamectl set-hostname k8s-node03
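
kubeadm's preflight checks warn when a node's hostname cannot be resolved, so it may help to map the four hostnames from the table in `/etc/hosts` on every machine. The following is a minimal sketch and not part of the original procedure: the helper name `add_cluster_hosts` is hypothetical, and it takes the target file as an argument so it can be tried on a scratch copy first.

```shell
# Hypothetical helper: append the cluster's IP/hostname pairs to a hosts file,
# skipping any hostname that already has an entry so reruns stay idempotent.
add_cluster_hosts() {
  hosts_file=$1
  while read -r ip name; do
    # -w matches the hostname as a whole word; -q suppresses grep's output.
    grep -qw "$name" "$hosts_file" 2>/dev/null \
      || printf '%s %s\n' "$ip" "$name" >> "$hosts_file"
  done <<'EOF'
10.4.148.225 k8s-master
10.4.148.226 k8s-node01
10.4.148.227 k8s-node02
10.4.148.228 k8s-node03
EOF
}
```

On a real node this would be called as `add_cluster_hosts /etc/hosts`.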

1.3 Disable firewalld

> systemctl disable firewalld

> systemctl stop firewalld

1.4 Disable SELinux

> setenforce 0

> sed -i "s/SELINUX=enforcing/SELINUX=disabled/g" /etc/selinux/config

1.5 Disable Swap

> swapoff -a

> cp /etc/fstab /etc/fstab_bak

> cat /etc/fstab_bak |grep -v swap > /etc/fstab
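
`grep -v swap` drops every line that mentions the word "swap", including comments. As a possible alternative, the sketch below comments out only active swap entries (lines whose filesystem-type field is `swap`) and leaves everything else untouched. The function name `comment_out_swap` is made up for illustration, and the code assumes GNU sed for `-i` and `-E`.

```shell
# Hypothetical helper: comment out active swap entries in an fstab-style file.
comment_out_swap() {
  fstab=$1
  # Match uncommented lines whose third field is "swap" and prefix them with "#".
  sed -i -E 's/^([^#[:space:]][^[:space:]]*[[:space:]]+[^[:space:]]+[[:space:]]+swap[[:space:]].*)$/#\1/' "$fstab"
}
```

After taking the backup shown above, this would be run as `comment_out_swap /etc/fstab`.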

1.6 Configure Kernel Parameters

> echo "net.bridge.bridge-nf-call-ip6tables = 1" >>/etc/sysctl.conf

> echo "net.bridge.bridge-nf-call-iptables = 1" >>/etc/sysctl.conf

> echo "net.ipv4.ip_forward = 1" >>/etc/sysctl.conf

> echo "net.ipv4.ip_nonlocal_bind = 1" >>/etc/sysctl.conf

> sysctl -p
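
On CentOS 7 the two `net.bridge.*` keys exist only once the `br_netfilter` kernel module is loaded, and appending with `>>` adds duplicate lines if the step is repeated. A hedged alternative is to write the same four settings to a dedicated drop-in file. The path `/etc/sysctl.d/k8s.conf` and the function name `write_k8s_sysctl` are assumptions, not part of the original steps.

```shell
# Hypothetical helper: write the kernel settings used in this guide to one file.
write_k8s_sysctl() {
  conf=$1
  # Overwrite (not append) so rerunning leaves exactly one copy of each key.
  cat > "$conf" <<'EOF'
net.bridge.bridge-nf-call-ip6tables = 1
net.bridge.bridge-nf-call-iptables = 1
net.ipv4.ip_forward = 1
net.ipv4.ip_nonlocal_bind = 1
EOF
}
```

A typical sequence on a node would be `modprobe br_netfilter`, then `write_k8s_sysctl /etc/sysctl.d/k8s.conf`, then `sysctl --system` to load every drop-in file.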

2. Install Docker

Docker must be installed on every node in the Kubernetes cluster.

2.1 Configure the Docker yum Repository

> yum install -y yum-utils

> yum-config-manager --add-repo http://mirrors.aliyun.com/docker-ce/linux/centos/docker-ce.repo

> ls /etc/yum.repos.d/docker-ce.repo

/etc/yum.repos.d/docker-ce.repo

2.2 Run the Installation

> yum install -y docker-ce-18.09.7 docker-ce-cli-18.09.7

> systemctl enable docker

> systemctl start docker

> docker version

Client:

Version: 18.09.7

API version: 1.39

Go version: go1.10.8

Git commit: 2d0083d

Built: Thu Jun 27 17:56:06 2019

OS/Arch: linux/amd64

Experimental: false

Server: Docker Engine - Community

Engine:

Version: 18.09.7

API version: 1.39 (minimum version 1.12)

Go version: go1.10.8

Git commit: 2d0083d

Built: Thu Jun 27 17:26:28 2019

OS/Arch: linux/amd64

Experimental: false

3. Install kubelet, kubeadm, and kubectl

kubelet, kubeadm, and kubectl must be installed on every node in the Kubernetes cluster.

3.1 Configure the Kubernetes yum Repository

> cat <<EOF > /etc/yum.repos.d/kubernetes.repo

[kubernetes]

name=Kubernetes

baseurl=http://mirrors.aliyun.com/kubernetes/yum/repos/kubernetes-el7-x86_64

enabled=1

gpgcheck=0

repo_gpgcheck=0

gpgkey=http://mirrors.aliyun.com/kubernetes/yum/doc/yum-key.gpg http://mirrors.aliyun.com/kubernetes/yum/doc/rpm-package-key.gpg

> EOF

3.2 Run the Installation

# Remove any old versions

> yum remove -y kubelet kubeadm kubectl

# Install the new versions

> yum install -y kubelet-1.15.5 kubeadm-1.15.5 kubectl-1.15.5

> systemctl enable kubelet && systemctl start kubelet

4. Install the Kubernetes Cluster

4.1 Deploy the Master Node

4.1.1 Customize the kubeadm Configuration File

> cat <<EOF > /root/kubeadm-config.yaml

apiVersion: kubeadm.k8s.io/v1beta2

kind: ClusterConfiguration

kubernetesVersion: v1.15.5

imageRepository: registry.cn-hangzhou.aliyuncs.com/google_containers

controlPlaneEndpoint: "10.4.148.225:6443"

networking:

  serviceSubnet: "10.96.0.0/16"

  podSubnet: "10.100.0.0/20"

  dnsDomain: "cluster.local"

> EOF
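
The pod subnet must not overlap the service subnet or the network the nodes themselves sit on. kubeadm's default `serviceSubnet` is `10.96.0.0/12`, which would contain `10.100.0.0/20`, so the narrower `/16` chosen here matters. The sketch below checks two CIDRs for overlap with plain shell arithmetic. The function names are illustrative only, and the node network `10.4.148.0/24` used in the usage note is an assumption, since the netmask is not stated in this guide.

```shell
# Convert a dotted-quad IPv4 address to a single integer.
ip_to_int() {
  old_ifs=$IFS; IFS=.
  set -- $1            # split the address on "." into four octets
  IFS=$old_ifs
  echo $(( ($1 << 24) | ($2 << 16) | ($3 << 8) | $4 ))
}

# Print "first last" (as integers) for the address range covered by a CIDR.
cidr_range() {
  base=$(ip_to_int "${1%/*}")
  size=$(( 1 << (32 - ${1#*/}) ))
  start=$(( base & ~(size - 1) ))
  echo "$start $(( start + size - 1 ))"
}

# Return success (0) when the two CIDRs share at least one address.
cidrs_overlap() {
  set -- $(cidr_range "$1") $(cidr_range "$2")
  # $1/$2 are the first range's start/end, $3/$4 the second's.
  [ "$1" -le "$4" ] && [ "$3" -le "$2" ]
}
```

For example, `cidrs_overlap 10.96.0.0/16 10.100.0.0/20` and `cidrs_overlap 10.4.148.0/24 10.100.0.0/20` both report no overlap, while `cidrs_overlap 10.96.0.0/12 10.100.0.0/20` succeeds, flagging the conflict with the default service range.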

4.1.2 Pull the Images

> kubeadm config images pull --config /root/kubeadm-config.yaml

[config/images] Pulled registry.cn-hangzhou.aliyuncs.com/google_containers/kube-proxy:v1.15.5

[config/images] Pulled registry.cn-hangzhou.aliyuncs.com/google_containers/kube-apiserver:v1.15.5

[config/images] Pulled registry.cn-hangzhou.aliyuncs.com/google_containers/kube-controller-manager:v1.15.5

[config/images] Pulled registry.cn-hangzhou.aliyuncs.com/google_containers/kube-scheduler:v1.15.5

[config/images] Pulled registry.cn-hangzhou.aliyuncs.com/google_containers/coredns:1.3.1

[config/images] Pulled registry.cn-hangzhou.aliyuncs.com/google_containers/etcd:3.3.10

[config/images] Pulled registry.cn-hangzhou.aliyuncs.com/google_containers/pause:3.1

4.1.3 Initialize the Master

> kubeadm init --config=/root/kubeadm-config.yaml

[init] Using Kubernetes version: v1.15.5

[preflight] Running pre-flight checks

[preflight] Pulling images required for setting up a Kubernetes cluster

[preflight] This might take a minute or two, depending on the speed of your internet connection

[preflight] You can also perform this action in beforehand using 'kubeadm config images pull'

[kubelet-start] Writing kubelet environment file with flags to file "/var/lib/kubelet/kubeadm-flags.env"

[kubelet-start] Writing kubelet configuration to file "/var/lib/kubelet/config.yaml"

[kubelet-start] Activating the kubelet service

[certs] Using certificateDir folder "/etc/kubernetes/pki"

[certs] Generating "ca" certificate and key

[certs] Generating "apiserver" certificate and key

[certs] apiserver serving cert is signed for DNS names [k8s-master kubernetes kubernetes.default kubernetes.default.svc kubernetes.default.svc.cluster.local] and IPs [10.96.0.1 10.4.148.225]

[certs] Generating "apiserver-kubelet-client" certificate and key

[certs] Generating "etcd/ca" certificate and key

[certs] Generating "etcd/server" certificate and key

[certs] etcd/server serving cert is signed for DNS names [k8s-master localhost] and IPs [10.4.148.225 127.0.0.1 ::1]

[certs] Generating "etcd/peer" certificate and key

[certs] etcd/peer serving cert is signed for DNS names [k8s-master localhost] and IPs [10.4.148.225 127.0.0.1 ::1]

[certs] Generating "etcd/healthcheck-client" certificate and key

[certs] Generating "apiserver-etcd-client" certificate and key

[certs] Generating "front-proxy-ca" certificate and key

[certs] Generating "front-proxy-client" certificate and key

[certs] Generating "sa" key and public key

[kubeconfig] Using kubeconfig folder "/etc/kubernetes"

[endpoint] WARNING: port specified in controlPlaneEndpoint overrides bindPort in the controlplane address

[kubeconfig] Writing "admin.conf" kubeconfig file

[endpoint] WARNING: port specified in controlPlaneEndpoint overrides bindPort in the controlplane address

[kubeconfig] Writing "kubelet.conf" kubeconfig file

[endpoint] WARNING: port specified in controlPlaneEndpoint overrides bindPort in the controlplane address

[kubeconfig] Writing "controller-manager.conf" kubeconfig file

[endpoint] WARNING: port specified in controlPlaneEndpoint overrides bindPort in the controlplane address

[kubeconfig] Writing "scheduler.conf" kubeconfig file

[control-plane] Using manifest folder "/etc/kubernetes/manifests"

[control-plane] Creating static Pod manifest for "kube-apiserver"

[control-plane] Creating static Pod manifest for "kube-controller-manager"

[control-plane] Creating static Pod manifest for "kube-scheduler"

[etcd] Creating static Pod manifest for local etcd in "/etc/kubernetes/manifests"

[wait-control-plane] Waiting for the kubelet to boot up the control plane as static Pods from directory "/etc/kubernetes/manifests". This can take up to 4m0s

[apiclient] All control plane components are healthy after 17.002502 seconds

[uploadconfig] storing the configuration used in ConfigMap "kubeadm-config" in the "kube-system" Namespace

[kubelet] Creating a ConfigMap "kubelet-config-1.15" in namespace kube-system with the configuration for the kubelets in the cluster

[patchnode] Uploading the CRI Socket information "/var/run/dockershim.sock" to the Node API object "k8s-master" as an annotation

[mark-control-plane] Marking the node k8s-master as control-plane by adding the label "node-role.kubernetes.io/master=''"

[mark-control-plane] Marking the node k8s-master as control-plane by adding the taints [node-role.kubernetes.io/master:NoSchedule]

[bootstrap-token] Using token: ktyvb0.42k58kvq7eepv5jr

[bootstrap-token] Configuring bootstrap tokens, cluster-info ConfigMap, RBAC Roles

[bootstraptoken] configured RBAC rules to allow Node Bootstrap tokens to post CSRs in order for nodes to get long term certificate credentials

[bootstraptoken] configured RBAC rules to allow the csrapprover controller automatically approve CSRs from a Node Bootstrap Token

[bootstraptoken] configured RBAC rules to allow certificate rotation for all node client certificates in the cluster

[bootstraptoken] creating the "cluster-info" ConfigMap in the "kube-public" namespace

[addons] Applied essential addon: CoreDNS

[endpoint] WARNING: port specified in controlPlaneEndpoint overrides bindPort in the controlplane address

[addons] Applied essential addon: kube-proxy

Your Kubernetes master has initialized successfully!

To start using your cluster, you need to run the following as a regular user:

mkdir -p $HOME/.kube

sudo cp -i /etc/kubernetes/admin.conf $HOME/.kube/config

sudo chown $(id -u):$(id -g) $HOME/.kube/config

You should now deploy a pod network to the cluster.

Run "kubectl apply -f [podnetwork].yaml" with one of the options listed at:

https://kubernetes.io/docs/concepts/cluster-administration/addons/

You can now join any number of machines by running the following on each node

as root:

kubeadm join 10.4.148.225:6443 --token vb2046.9zcbi5g1cxbwb0zx --discovery-token-ca-cert-hash sha256:da2f4b5c4ed8586c3ea7ad3a8054f0be6ccc328ceccc91aba3860b007394c666

4.1.4 Configure kubectl Access on the Master

> mkdir -p $HOME/.kube

> cp -i /etc/kubernetes/admin.conf $HOME/.kube/config

> chown $(id -u):$(id -g) $HOME/.kube/config

4.1.5 Install the Calico Network Plugin

> cd /root

> rm -f calico.yaml

> wget https://docs.projectcalico.org/v3.8/manifests/calico.yaml

> sed -i 's#192.168.0.0/16#10.100.0.0/20#g' calico.yaml

> kubectl apply -f calico.yaml
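
Because the CIDRs themselves contain `/`, the `sed` substitution needs a delimiter other than `/`, and it is worth confirming that the manifest really changed before applying it. A minimal sketch follows. `set_calico_pool_cidr` is a hypothetical helper, and it assumes the downloaded manifest still carries the stock `192.168.0.0/16` pool and that GNU sed is available for `-i`.

```shell
# Hypothetical helper: point Calico's default IPv4 pool at the cluster's pod subnet.
set_calico_pool_cidr() {
  manifest=$1
  cidr=$2
  # "#" as the delimiter keeps the slashes in the CIDRs literal; dots are escaped.
  sed -i "s#192\.168\.0\.0/16#${cidr}#g" "$manifest"
  # Succeed only if the new CIDR is now present in the manifest.
  grep -qF "$cidr" "$manifest"
}
```

Used as `set_calico_pool_cidr calico.yaml 10.100.0.0/20 && kubectl apply -f calico.yaml`, the manifest is applied only when the replacement took effect.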

4.2 Deploy the Worker Nodes

4.2.1 Pull the Images

# Copy kubeadm-config.yaml from the master node to all worker nodes

> rsync -avP /root/kubeadm-config.yaml 10.4.148.226:/root/

> rsync -avP /root/kubeadm-config.yaml 10.4.148.227:/root/

> rsync -avP /root/kubeadm-config.yaml 10.4.148.228:/root/

# Pull the images (run on each worker node)

> kubeadm config images pull --config /root/kubeadm-config.yaml

[config/images] Pulled registry.cn-hangzhou.aliyuncs.com/google_containers/kube-proxy:v1.15.5

[config/images] Pulled registry.cn-hangzhou.aliyuncs.com/google_containers/kube-apiserver:v1.15.5

[config/images] Pulled registry.cn-hangzhou.aliyuncs.com/google_containers/kube-controller-manager:v1.15.5

[config/images] Pulled registry.cn-hangzhou.aliyuncs.com/google_containers/kube-scheduler:v1.15.5

[config/images] Pulled registry.cn-hangzhou.aliyuncs.com/google_containers/coredns:1.3.1

[config/images] Pulled registry.cn-hangzhou.aliyuncs.com/google_containers/etcd:3.3.10

[config/images] Pulled registry.cn-hangzhou.aliyuncs.com/google_containers/pause:3.1

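4.2.2 Join the Worker Nodes (run on every worker node)

4.2.2.1 If the token created when the master was initialized has not expired (kubeadm tokens expire after 24 hours by default), use it directly to join the worker nodes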

> kubeadm join 10.4.148.225:6443 --token vb2046.9zcbi5g1cxbwb0zx --discovery-token-ca-cert-hash sha256:da2f4b5c4ed8586c3ea7ad3a8054f0be6ccc328ceccc91aba3860b007394c666

[preflight] Running pre-flight checks

[discovery] Trying to connect to API Server "10.4.148.225:6443"

[discovery] Created cluster-info discovery client, requesting info from "https://10.4.148.225:6443"

[discovery] Requesting info from "https://10.4.148.225:6443" again to validate TLS against the pinned public key

[discovery] Cluster info signature and contents are valid and TLS certificate validates against pinned roots, will use API Server "10.4.148.225:6443"

[discovery] Successfully established connection with API Server "10.4.148.225:6443"

[join] Reading configuration from the cluster...

[join] FYI: You can look at this config file with 'kubectl -n kube-system get cm kubeadm-config -oyaml'

[kubelet] Downloading configuration for the kubelet from the "kubelet-config-1.15" ConfigMap in the kube-system namespace

[kubelet-start] Writing kubelet configuration to file "/var/lib/kubelet/config.yaml"

[kubelet-start] Writing kubelet environment file with flags to file "/var/lib/kubelet/kubeadm-flags.env"

[kubelet-start] Activating the kubelet service

[tlsbootstrap] Waiting for the kubelet to perform the TLS Bootstrap...

[patchnode] Uploading the CRI Socket information "/var/run/dockershim.sock" to the Node API object "k8s-node01" as an annotation

This node has joined the cluster:

* Certificate signing request was sent to apiserver and a response was received.

* The Kubelet was informed of the new secure connection details.

Run 'kubectl get nodes' on the master to see this node join the cluster.

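4.2.2.2 If the token created when the master was initialized has expired, create a new token and obtain the SHA-256 hash of the CA certificate, then join the worker nodes

# Run on the master node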

> kubeadm token create --print-join-command

kubeadm join 10.4.148.225:6443 --token xofceq.lqdfk5rt65tvgnqg --discovery-token-ca-cert-hash sha256:da2f4b5c4ed8586c3ea7ad3a8054f0be6ccc328ceccc91aba3860b007394c666

# Run on each worker node

> kubeadm join 10.4.148.225:6443 --token xofceq.lqdfk5rt65tvgnqg --discovery-token-ca-cert-hash sha256:da2f4b5c4ed8586c3ea7ad3a8054f0be6ccc328ceccc91aba3860b007394c666
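
`--print-join-command` already includes the hash, but it can also be recomputed from the CA certificate on the master. The pipeline below follows the approach described in the kubeadm documentation and assumes an RSA CA key, which is what kubeadm generates by default. The wrapper name `ca_cert_hash` is only for illustration.

```shell
# Hypothetical wrapper: print the SHA-256 hash of a CA certificate's public key.
ca_cert_hash() {
  # Extract the public key, convert it to DER, hash it, and keep only the hex digest.
  openssl x509 -pubkey -noout -in "$1" \
    | openssl rsa -pubin -outform der 2>/dev/null \
    | openssl dgst -sha256 -hex \
    | sed 's/^.* //'
}
```

On the master, `ca_cert_hash /etc/kubernetes/pki/ca.crt` should print the value that follows `sha256:` in the join command.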

4.3 Check the Cluster Status

> kubectl get nodes -o wide

NAME STATUS ROLES AGE VERSION INTERNAL-IP EXTERNAL-IP OS-IMAGE KERNEL-VERSION CONTAINER-RUNTIME

k8s-master Ready master 21h v1.15.5 10.4.148.225 <none> CentOS Linux 7 (Core) 3.10.0-1062.el7.x86_64 docker://18.9.7

k8s-node01 Ready <none> 21h v1.15.5 10.4.148.226 <none> CentOS Linux 7 (Core) 3.10.0-1062.el7.x86_64 docker://18.9.7

k8s-node02 Ready <none> 21h v1.15.5 10.4.148.227 <none> CentOS Linux 7 (Core) 3.10.0-1062.el7.x86_64 docker://18.9.7

k8s-node03 Ready <none> 21h v1.15.5 10.4.148.228 <none> CentOS Linux 7 (Core) 3.10.0-1062.el7.x86_64 docker://18.9.7
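
For scripted checks, the STATUS column can be filtered rather than read by eye. The sketch below prints any node whose status is not exactly `Ready`. `not_ready_nodes` is a hypothetical helper that reads the output of `kubectl get nodes --no-headers` on standard input.

```shell
# Hypothetical helper: list nodes whose STATUS column is anything other than "Ready".
not_ready_nodes() {
  # Column 1 is NAME and column 2 is STATUS; a cordoned node shows
  # "Ready,SchedulingDisabled" and is therefore reported as well.
  awk '$2 != "Ready" { print $1 }'
}
```

Running `kubectl get nodes --no-headers | not_ready_nodes` on the master prints nothing when every node is ready.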

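5. Install a Visualization Tool for the Kubernetes Cluster

The Kubernetes project provides Dashboard as its visualization tool, but configuring it is fairly involved, so it is not covered here. Interested readers can deploy it by following the official documentation.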

Kubernetes Dashboard

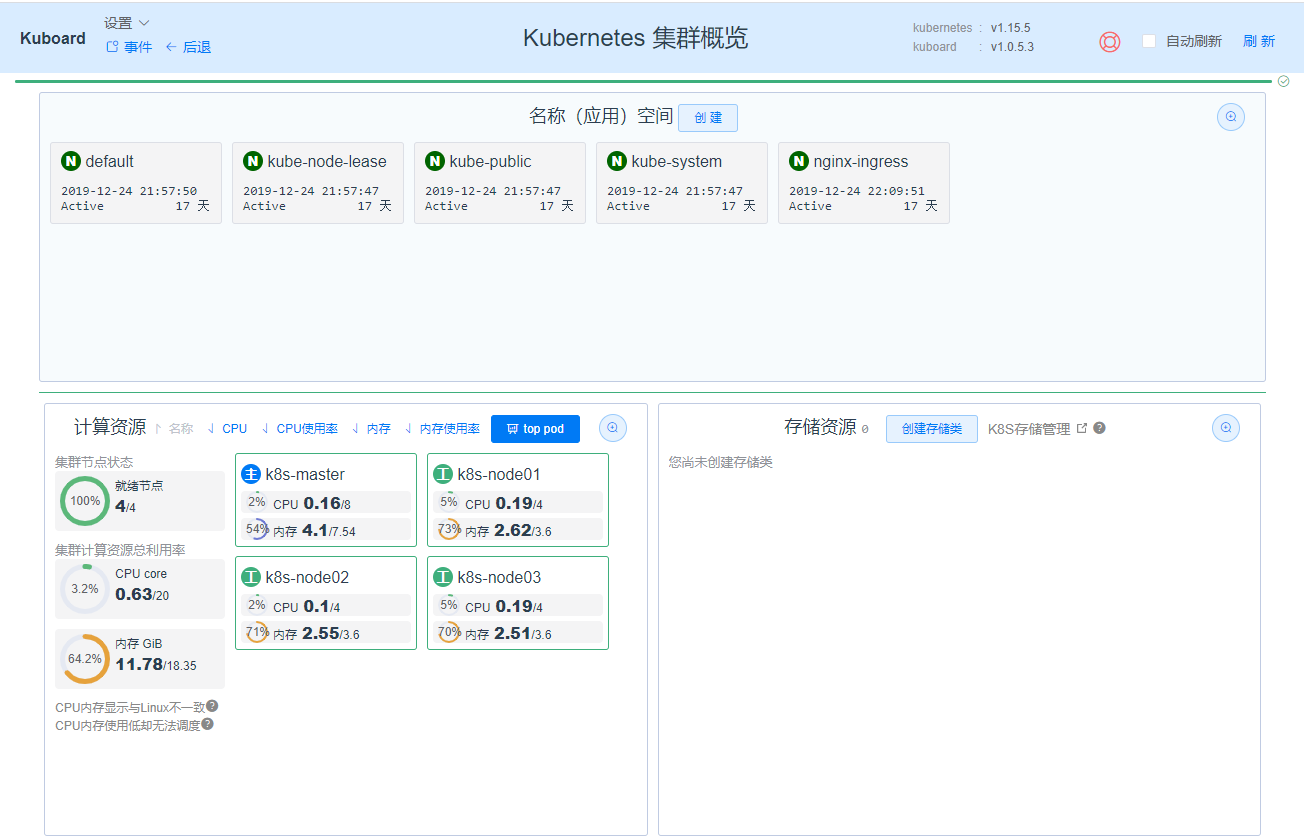

Here we use Kuboard, an open-source visualization tool. Kuboard is a Kubernetes-based microservice management UI that aims to help users get microservices running on Kubernetes quickly.

Kuboard

5.1 Install Kuboard

5.1.1 Download the Kuboard Manifest

> cd /root/

> wget https://kuboard.cn/install-script/kuboard.yaml

5.1.2 Install

> kubectl apply -f kuboard.yaml

5.1.3 Check That Kuboard Is Running

> kubectl get pods -l k8s.eip.work/name=kuboard -n kube-system

NAME READY STATUS RESTARTS AGE

kuboard-6ccc4747c4-vbgkx 1/1 Running 0 10h

5.1.4 Get the Access Token

This token has ClusterAdmin privileges and can perform any operation.

> kubectl -n kube-system get secret $(kubectl -n kube-system get secret | grep kuboard-user | awk '{print $1}') -o go-template='{{.data.token}}' | base64 -d

eyJhbGciOiJSUzI1NiIsImtpZCI6IiJ9.eyJpc3MiOiJrdWJlcm5ldGVzL3NlcnZpY2VhY2NvdW50Iiwia3ViZXJuZXRlcy5pby9zZXJ2aWNlYWNjb3VudC9uYW1lc3BhY2UiOiJrdWJlLXN5c3RlbSIsImt1YmVybmV0ZXMuaW8vc2VydmljZWFjY291bnQvc2VjcmV0Lm5hbWUiOiJrdWJvYXJkLXVzZXItdG9rZW4tdHZrczUiLCJrdWJlcm5ldGVzLmlvL3NlcnZpY2VhY2NvdW50L3NlcnZpY2UtYWNjb3VudC5uYW1lIjoia3Vib2FyZC11c2VyIiwia3ViZXJuZXRlcy5pby9zZXJ2aWNlYWNjb3VudC9zZXJ2aWNlLWFjY291bnQudWlkIjoiOTkzYjU4NzMtMGNiOC00ZDllLWE3ZjQtNDUwOWEzZDY0NzZhIiwic3ViIjoic3lzdGVtOnNlcnZpY2VhY2NvdW50Omt1YmUtc3lzdGVtOmt1Ym9hcmQtdXNlciJ9.c8ADp0J7SgKKH61lBZg2SjUJen-1YLB1tuj6cFSJN9IWNJSwatE5xW7NqWLR47lwrjXrzfX-BxA0yZqI_v-D4tKogRxM_rqA7Bu3EM1wkd6HG8DCskbmHbhbSnMoW2kB4DD3Y484rzhgCcHbl7LL_nDrQm4ZhedHZM0wOg5Xyzv4hdnnxM_1ARcGjiwuC995EmA_QFcwg919RHsCqoF7SCnho5z2yK48Yyoklm1RKn9GT-LMueVOoqsLKy_OIROqS9lGM4zn4esgS2frV9DHwlr4z9EWtUMxQ9rB7Kl2Pg_vywEXDMy5_uLgxu0jrtNJyW8HWlVFpS0Hj5gTkD3o7A

5.1.5 Access Kuboard

The Kuboard Service is exposed through a NodePort, 32567, so Kuboard can be reached at:

http://<IP address of any worker node>:32567/

You can also edit kuboard.yaml to use a custom NodePort.

> kubectl get svc -n kube-system -o wide |grep kuboard

kuboard NodePort 10.96.209.47 <none> 80:32567/TCP 10h

Open http://10.4.148.226:32567/ in a browser and enter the token obtained above to access Kuboard.

This completes the installation of the Kubernetes cluster.