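Slurm is an open-source job scheduler for Linux and Unix-like systems, used by many of the world's supercomputers. Its main functions are:

1. Allocating compute-node resources to users so that they can run jobs;

2. Providing a framework for starting, executing, and monitoring jobs (usually parallel jobs) on a set of allocated nodes;

3. Arbitrating contention for resources by managing a queue of pending jobs.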

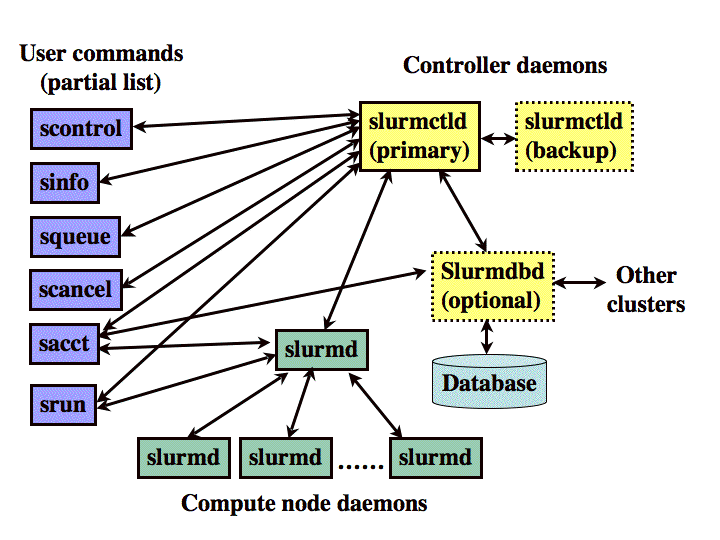

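Slurm architecture:

Screenshot from: https://slurm.schedmd.com/quickstart.html

PBS-Torque cluster deployment: https://www.cnblogs.com/liu-shaobo/p/13526084.html

I. Base Environment

1. Hostnames and IP addresses

Control node: 192.168.1.11 m1

Compute node: 192.168.1.12 c1

Compute node: 192.168.1.13 c2

Set the hostname on each of the three nodes, running the matching command on each one: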

# hostnamectl set-hostname m1

# hostnamectl set-hostname c1

# hostnamectl set-hostname c2
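Later steps refer to the nodes by name (c1, c2), so every node has to be able to resolve those names. A minimal sketch, assuming resolution is handled through /etc/hosts rather than DNS (the original does not say which); the function name `cluster_hosts` is only illustrative:

```shell
# Print /etc/hosts entries for the three nodes listed above.
# Assumption: names are resolved via /etc/hosts, not DNS.
cluster_hosts() {
    cat <<'EOF'
192.168.1.11 m1
192.168.1.12 c1
192.168.1.13 c2
EOF
}

# Preview the entries. As root on each node, they could be appended with:
#   cluster_hosts >> /etc/hosts
cluster_hosts
```

Printing first and appending as a separate manual step keeps the sketch safe to run on any machine.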

2. Host configuration

OS: CentOS 7.6 x86_64

CPU: 2 cores

Memory: 4 GB

3. Disable the firewall

# systemctl stop firewalld

# systemctl disable firewalld

# systemctl stop iptables

# systemctl disable iptables

4. Adjust resource limits

# cat /etc/security/limits.conf
* hard nofile 1000000
* soft nofile 1000000
* soft core unlimited
* soft stack 10240
* soft memlock unlimited
* hard memlock unlimited
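The values in limits.conf are applied by PAM at login, so they only show up in a new session. A small sketch for checking the open-file limit from a fresh shell; the helper name `nofile_at_least` is hypothetical:

```shell
# Succeed if the current soft open-file limit is at least $1.
nofile_at_least() {
    current=$(ulimit -S -n)
    [ "$current" = "unlimited" ] || [ "$current" -ge "$1" ]
}

# Compare against the value configured in limits.conf above.
if nofile_at_least 1000000; then
    echo "nofile limit OK: $(ulimit -S -n)"
else
    echo "nofile limit too low: $(ulimit -S -n)"
fi
```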

5. Configure the time zone

Set the time zone to CST (China Standard Time)

# ln -sf /usr/share/zoneinfo/Asia/Shanghai /etc/localtime

Synchronize with an NTP server

# ntpdate 210.72.145.44

# yum install ntp -y

# systemctl start ntpd

# systemctl enable ntpd

Install the EPEL repository

# yum install http://mirrors.sohu.com/fedora-epel/epel-release-latest-7.noarch.rpm

6. Install NFS (control node)

# yum -y install nfs-utils rpcbind

Edit the /etc/exports file

# cat /etc/exports
/software/ *(rw,async,insecure,no_root_squash)

Start NFS

# systemctl start nfs

# systemctl start rpcbind

# systemctl enable nfs

# systemctl enable rpcbind

Mount the NFS share on the clients

# yum -y install nfs-utils

# mkdir /software

# mount 192.168.1.11:/software /software
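A mount made with the `mount` command does not survive a reboot. One possible way to make it persistent is an /etc/fstab entry; the sketch below is an assumption on my part (the original only mounts manually), and the helper name `add_nfs_mount` and the `defaults,_netdev` options are illustrative choices:

```shell
# Append an NFS entry to an fstab-style file unless it is already present.
# $1: path to the file (on a real client this would be /etc/fstab)
add_nfs_mount() {
    fstab=$1
    entry="192.168.1.11:/software /software nfs defaults,_netdev 0 0"
    grep -qxF "$entry" "$fstab" 2>/dev/null || echo "$entry" >> "$fstab"
}

# Demonstrate on a scratch file so nothing on the system is modified.
demo=$(mktemp)
add_nfs_mount "$demo"
add_nfs_mount "$demo"   # the second call is a no-op
cat "$demo"
```

Checking for the exact line before appending keeps the helper idempotent, so running it twice does not duplicate the entry.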

7. Configure passwordless SSH login

# ssh-keygen

# ssh-copy-id -i .ssh/id_rsa.pub c1

# ssh-copy-id -i .ssh/id_rsa.pub c2
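Once passwordless login works, many of the per-node steps that follow can be driven from the control node. A rough sketch of such a loop; `run_on_nodes` and the `DRY_RUN` switch are hypothetical helpers, not part of the original procedure, and the default only prints what would be run:

```shell
NODES="c1 c2"            # compute nodes listed earlier
DRY_RUN=${DRY_RUN:-1}    # 1 = only print the commands (default here)

# Run the given command on every compute node over SSH.
run_on_nodes() {
    for node in $NODES; do
        if [ "$DRY_RUN" = "1" ]; then
            echo "ssh root@$node $*"
        else
            ssh "root@$node" "$@"
        fi
    done
}

run_on_nodes hostname
```

Setting `DRY_RUN=0` in the environment would make the same call execute `hostname` on c1 and c2, which is a quick way to confirm that no password prompt appears.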

II. Configuring Munge

1. Create the Munge user

The munge user must have the same UID and GID on the master node and on the compute nodes, and Munge must be installed on every node.

# groupadd -g 1108 munge

# useradd -m -c "Munge Uid 'N' Gid Emporium" -d /var/lib/munge -u 1108 -g munge -s /sbin/nologin munge
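Because mismatched IDs are an easy mistake to miss, it can help to compare the `uid:gid` pair across nodes. A minimal sketch; `ids_match` is a hypothetical helper, and the demo uses the current user so it runs anywhere:

```shell
# Succeed only if every "uid:gid" argument is identical to the first one.
ids_match() {
    first=$1
    for id in "$@"; do
        [ "$id" = "$first" ] || return 1
    done
    return 0
}

# On a real cluster, gather one string per node, for example:
#   ssh c1 'echo "$(id -u munge):$(id -g munge)"'
# The current user is used here only so the sketch runs without a munge account.
local_ids="$(id -u):$(id -g)"
ids_match "$local_ids" "$local_ids" && echo "IDs match: $local_ids"
```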

2. Build up the entropy pool

# yum install -y rng-tools

Use /dev/urandom as the entropy source

# rngd -r /dev/urandom

# vim /usr/lib/systemd/system/rngd.service

Change the following parameter

[Service]

ExecStart=/sbin/rngd -f -r /dev/urandom

# systemctl daemon-reload

# systemctl start rngd

# systemctl enable rngd
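Files under /usr/lib/systemd/system belong to the package and may be overwritten on update. An alternative to editing the unit directly is a systemd drop-in; this is a swapped-in technique rather than what the original does, and `write_rngd_override` is a hypothetical helper. The empty `ExecStart=` line clears the packaged command before the new one is set:

```shell
# Write a drop-in that overrides rngd's ExecStart.
# $1: drop-in directory (on a real node: /etc/systemd/system/rngd.service.d)
write_rngd_override() {
    mkdir -p "$1"
    cat > "$1/override.conf" <<'EOF'
[Service]
ExecStart=
ExecStart=/sbin/rngd -f -r /dev/urandom
EOF
}

# Demonstrate in a scratch directory; nothing under /etc is touched.
demo_dir=$(mktemp -d)
write_rngd_override "$demo_dir"
cat "$demo_dir/override.conf"
```

After writing the real drop-in, the same `systemctl daemon-reload` and `systemctl restart rngd` sequence applies.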

3. Deploy Munge

Munge is an authentication service that validates the UID and GID of processes on local or remote hosts.

# yum install munge munge-libs munge-devel -y

Create the global key

On the master node, create the key that the whole cluster will share

# dd if=/dev/urandom bs=1 count=1024 > /etc/munge/munge.key

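Sync the key to all compute nodes, then set its ownership and permissions (the chown and chmod commands need to be run on every node, since the copied file arrives owned by root)

# scp -p /etc/munge/munge.key root@c1:/etc/munge

# scp -p /etc/munge/munge.key root@c2:/etc/munge

# chown munge: /etc/munge/munge.key

# chmod 400 /etc/munge/munge.key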
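Munge only works if every node holds an identical key with tight permissions, so a quick check after copying can save debugging later. A minimal sketch, assuming GNU coreutils (`sha256sum`, `stat -c`) as found on CentOS; `same_key` and `key_mode_ok` are hypothetical helpers, demonstrated on scratch files rather than the real key:

```shell
# Succeed if two key files have identical contents.
same_key() {
    a=$(sha256sum < "$1") && b=$(sha256sum < "$2") && [ "$a" = "$b" ]
}

# Succeed if the file is readable by its owner only (mode 400).
key_mode_ok() {
    [ "$(stat -c '%a' "$1")" = "400" ]
}

# Demonstrate on scratch copies instead of /etc/munge/munge.key.
key=$(mktemp); copy=$(mktemp)
dd if=/dev/urandom of="$key" bs=1 count=1024 2>/dev/null
cp "$key" "$copy"
chmod 400 "$key"
same_key "$key" "$copy" && key_mode_ok "$key" && echo "key checks passed"
```

On the cluster, the same comparison can be made by hand with `sha256sum /etc/munge/munge.key` on m1 and `ssh c1 sha256sum /etc/munge/munge.key`.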

Start Munge on all nodes

# systemctl start munge

# systemctl enable munge

4. Test the Munge service

Verify the connection between each compute node and the control node

Generate a credential locally

# munge -n

Decode locally

# munge -n | unmunge

Verify a compute node by decoding remotely

# munge -n | ssh c1 unmunge

Benchmark Munge credential processing

# remunge

III. Configuring Slurm

1. Create the Slurm user

# groupadd -g 1109 slurm

# useradd -m -c "Slurm manager" -d /var/lib/slurm -u 1109 -g slurm -s /bin/bash slurm

2. Install Slurm dependencies

# yum install gcc gcc-c++ readline-devel perl-ExtUtils-MakeMaker pam-devel rpm-build mysql-devel -y

Build Slurm

# wget https://download.schedmd.com/slurm/slurm-19.05.7.tar.bz2

# rpmbuild -ta slurm-19.05.7.tar.bz2

# cd /root/rpmbuild/RPMS/x86_64/

Install Slurm on all nodes

# yum localinstall slurm-*

3. Configure Slurm on the control node

# cp /etc/slurm/cgroup.conf.example /etc/slurm/cgroup.conf

# cp /etc/slurm/slurm.conf.example /etc/slurm/slurm.conf

# vim /etc/slurm/slurm.conf

Modify the following settings (node names must match the hostnames set earlier: m1, c1, c2):

ControlMachine=m1
ControlAddr=192.168.1.11
SlurmctldPidFile=/var/run/slurmctld.pid
SlurmctldPort=6817
SlurmdPidFile=/var/run/slurmd.pid
SlurmdPort=6818
SlurmdSpoolDir=/var/spool/slurm/d
SlurmUser=slurm
StateSaveLocation=/var/spool/slurm/ctld
SelectType=select/cons_res
SelectTypeParameters=CR_CPU_Memory
AccountingStorageType=accounting_storage/slurmdbd
AccountingStorageHost=192.168.1.11
AccountingStoragePort=6819
JobCompType=jobcomp/none
JobAcctGatherType=jobacct_gather/linux
JobAcctGatherFrequency=30
SlurmctldLogFile=/var/log/slurm/slurmctld.log
SlurmdLogFile=/var/log/slurm/slurmd.log
NodeName=m1,c[1-2] RealMemory=3400 Sockets=1 CoresPerSocket=2 State=IDLE
PartitionName=all Nodes=m1,c[1-2] Default=YES State=UP
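RealMemory is given in megabytes and is set a little below the 4 GB the hosts physically have, leaving room for the operating system. A sketch of how such a value might be derived; the helper `real_memory_mb` and the 600 MB reserve are assumptions chosen for illustration, and the sample file stands in for /proc/meminfo. On a node with Slurm installed, `slurmd -C` prints the hardware values that slurmd itself detects:

```shell
# Print a RealMemory value in MB: MemTotal from a meminfo-style file
# minus a reserve for the operating system.
# $1: meminfo file (on a real node: /proc/meminfo)   $2: reserve in MB
real_memory_mb() {
    awk -v reserve="$2" '/^MemTotal:/ { print int($2 / 1024) - reserve }' "$1"
}

# Demonstrate with a sample file describing a node with 4096000 kB of RAM.
sample=$(mktemp)
printf 'MemTotal:        4096000 kB\n' > "$sample"
real_memory_mb "$sample" 600   # prints 3400
```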

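Copy the control node's configuration files to the compute nodes

# scp /etc/slurm/*.conf c1:/etc/slurm/

# scp /etc/slurm/*.conf c2:/etc/slurm/

Create the spool and log directories on the control and compute nodes and set their ownership

# mkdir /var/spool/slurm

# chown slurm: /var/spool/slurm

# mkdir /var/log/slurm

# chown slurm: /var/log/slurm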

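5. Configure Slurm Accounting on the control node

Accounting records hold the information Slurm collects about job steps. They can be written to a plain text file or to a database, but a text file keeps growing without bound, so the simplest approach is to store the information in MySQL.

Create the Slurm database user (install MySQL yourself beforehand)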

mysql> grant all on slurm_acct_db.* to 'slurm'@'%' identified by 'slurm*456' with grant option;

Configure the slurmdbd.conf file

# cp /etc/slurm/slurmdbd.conf.example /etc/slurm/slurmdbd.conf

# cat /etc/slurm/slurmdbd.conf
AuthType=auth/munge
AuthInfo=/var/run/munge/munge.socket.2
DbdAddr=192.168.1.11
DbdHost=m1
SlurmUser=slurm
DebugLevel=verbose
LogFile=/var/log/slurm/slurmdbd.log
PidFile=/var/run/slurmdbd.pid
StorageType=accounting_storage/mysql
StorageHost=mysql_ip
StorageUser=slurm
StoragePass=slurm*456
StorageLoc=slurm_acct_db

6. Start the node services

Start the slurmdbd service on the control node

# systemctl start slurmdbd

# systemctl status slurmdbd

# systemctl enable slurmdbd

Start the slurmctld service on the control node

# systemctl start slurmctld

# systemctl status slurmctld

# systemctl enable slurmctld

Start the slurmd service on the compute nodes

# systemctl start slurmd

# systemctl status slurmd

# systemctl enable slurmd
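Besides `systemctl status`, it can be useful to confirm that each daemon is actually listening on the port set in the configuration files. A rough sketch, assuming bash (for its /dev/tcp redirection) and the coreutils `timeout` command are available; `slurm_port` and `port_open` are hypothetical helpers:

```shell
# Map a Slurm daemon name to the port configured earlier in this guide.
slurm_port() {
    case "$1" in
        slurmctld) echo 6817 ;;
        slurmd)    echo 6818 ;;
        slurmdbd)  echo 6819 ;;
        *)         return 1 ;;
    esac
}

# Succeed if a TCP connection to host $1, port $2 opens within 2 seconds.
# Relies on bash's /dev/tcp redirection and the coreutils timeout command.
port_open() {
    timeout 2 bash -c "exec 3<>/dev/tcp/$1/$2" 2>/dev/null
}

# Check the two daemons that run on the control node.
for daemon in slurmdbd slurmctld; do
    if port_open 192.168.1.11 "$(slurm_port "$daemon")"; then
        echo "$daemon is reachable"
    else
        echo "$daemon is NOT reachable"
    fi
done
```

The same `port_open` call with port 6818 and a compute node's address would cover slurmd.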

IV. Checking the Slurm Cluster

View the cluster

# sinfo

# scontrol show partition

# scontrol show node

Submit a job

# srun -N2 hostname

# scontrol show jobs

View jobs

# squeue -a
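`srun` runs a job interactively; for anything longer, jobs are usually submitted as batch scripts with `sbatch`. A minimal sketch of such a script, using the `all` partition defined in slurm.conf above; the job name, output file name, and the helper `write_batch_script` are illustrative choices:

```shell
# Write a small batch script that runs `hostname` on two nodes.
# $1: path of the script to create
write_batch_script() {
    cat > "$1" <<'EOF'
#!/bin/bash
#SBATCH --job-name=hello
#SBATCH --partition=all
#SBATCH --nodes=2
#SBATCH --ntasks-per-node=1
#SBATCH --output=hello-%j.out

srun hostname
EOF
}

script=$(mktemp)
write_batch_script "$script"
cat "$script"
# Submit it on the cluster with:  sbatch "$script"
```

In the output file name, `%j` is replaced by the job ID, so repeated submissions do not overwrite each other's output. The job then appears in `squeue -a` while it is pending or running.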