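Notes on how to deduplicate MQ message consumption and how to retry messages.

First, the background. A producer emits messages that carry SQL table names. For each table name, the consumer recounts that table's data and updates the stored totals. The same table name is sent many times, so without deduplication the same logic would run over and over and slow things down.

Producer: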

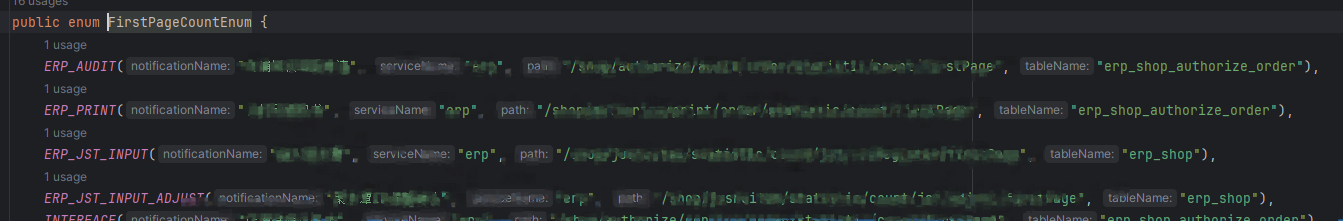

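This is the SQL parsing. The important part is the call below; tableName is one of the fixed values defined in the enum, as shown in the image:

    RabbitMQSender.sendMessage(MQConfig.FIRST_PAGE_SQL_ROUTINGKEY, tableName, MessageType.COMMON, uuid);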

    // Pseudocode: body of the MyBatis interceptor's intercept(Invocation invocation)
    StatementHandler statementHandler = PluginUtils.realTarget(invocation.getTarget());
    MetaObject metaObject = SystemMetaObject.forObject(statementHandler);
    MappedStatement mappedStatement = (MappedStatement) metaObject.getValue("delegate.mappedStatement");
    if (!SqlCommandType.SELECT.equals(mappedStatement.getSqlCommandType())) {
        // Execute the write statement first, then publish the affected table name
        Object proceed = invocation.proceed();
        firstPageSqlParse(metaObject);
        return proceed;
    }

    private void firstPageSqlParse(MetaObject metaObject) {
        BoundSql boundSql = (BoundSql) metaObject.getValue("delegate.boundSql");
        String sql = boundSql.getSql();
        // Parse and publish asynchronously, off the thread that executed the SQL
        CompletableFuture.runAsync(() -> {
            // Extract the table name from the statement
            String lowSql = sql.toLowerCase();
            String tableName = extractTableName(lowSql);
            FirstPageCountEnum[] values = FirstPageCountEnum.values();
            Set<String> collect = Arrays.stream(values).map(FirstPageCountEnum::getTableName).collect(Collectors.toSet());
            // Only tables listed in the enum trigger a message
            if (collect.contains(tableName)) {
                String uuid = IdUtil.randomUUID();
                RabbitMQSender.sendMessage(MQConfig.FIRST_PAGE_SQL_ROUTINGKEY, tableName, MessageType.COMMON, uuid);
            }
        });
    }

    private static String extractTableName(String sql) {
        // Group 1 is the write keyword, group 2 is the table name that follows it
        String regex = "(insert\\s+into\\s+|update\\s+|delete\\s+from\\s+)(\\w+)";
        Pattern pattern = Pattern.compile(regex);
        Matcher matcher = pattern.matcher(sql);
        if (matcher.find()) {
            String tableName = matcher.group(2);
            System.out.println(tableName);
            return tableName;
        }
        return "";
    }
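The regular expression above captures the first run of word characters after the keyword, so a schema-qualified name such as `shop.user_order` yields `shop`, and a backtick-quoted name yields no match at all. Below is a minimal sketch of a more tolerant variant. The class name `TableNameExtractor` and the sample statements are hypothetical, and the pattern assumes MySQL-style backtick quoting; it is not a full SQL parser.

```java
import java.util.Locale;
import java.util.regex.Matcher;
import java.util.regex.Pattern;

final class TableNameExtractor {
    // Compiled once. Allows an optional schema prefix and optional backticks around each name.
    private static final Pattern WRITE_TARGET = Pattern.compile(
            "\\b(?:insert\\s+into|update|delete\\s+from)\\s+(?:`?\\w+`?\\.)?`?(\\w+)",
            Pattern.CASE_INSENSITIVE);

    /** Returns the lower-cased target table of a write statement, or "" if none is found. */
    static String extract(String sql) {
        Matcher matcher = WRITE_TARGET.matcher(sql);
        return matcher.find() ? matcher.group(1).toLowerCase(Locale.ROOT) : "";
    }

    public static void main(String[] args) {
        System.out.println(extract("INSERT INTO `shop`.`user_order` (id) VALUES (1)"));
        System.out.println(extract("update user_order set status = 1 where id = 2"));
        System.out.println(extract("select * from user_order"));
    }
}
```

Both write statements resolve to `user_order`, and the SELECT yields an empty string, which the enum check in the producer would then ignore.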

Consumer side:

This is the ordinary message-receiving part.

    /**
     * Listens on a single queue.
     * concurrency: number of messages processed concurrently
     */
    @RabbitListener(queues = MQConfig.FIRST_PAGE_SQL)
    @RabbitHandler
    public void notificationQueueReceiver(Message message, Channel channel) throws IOException {
        messageHandler(message, channel);
    }

    private void messageHandler(Message message, Channel channel) throws IOException {
        long deliveryTag = message.getMessageProperties().getDeliveryTag();
        Action action = Action.ACCEPT;
        try {
            MessageBody messageBody = MessageBody.getMessageBody(message);
            // The payload is the table name sent by the producer
            String sql = messageBody.getData().toString();
            // Per-table lock object
            Object o = CACHE_MQ_MAP_LOCK.get(sql);
            if (o != null) {
                // Double check: flip the flag 0 -> 1 and wake the consumer only if no update is already pending
                if (CACHE_MQ_MAP.getOrDefault(sql, 0) == 0) {
                    synchronized (o) {
                        if (CACHE_MQ_MAP.getOrDefault(sql, 0) == 0) {
                            CACHE_MQ_MAP.put(sql, 1);
                        }
                        o.notifyAll();
                    }
                }
            }
        } catch (Exception e) {
            log.error("Error while handling MQ message", e);
        } finally {
            // The finally block guarantees that Ack/Nack runs exactly once
            if (action == Action.ACCEPT) {
                // multiple = false acknowledges only this delivery; true acknowledges every delivery up to and including this tag
                channel.basicAck(deliveryTag, false);
            } else {
                // requeue = false means the message is not redelivered; true puts it back on the queue
                channel.basicReject(deliveryTag, false);
            }
        }
    }

Initialize two maps: one holding the locks, and one holding the deduplicated data.

    @PostConstruct
    public void init() {
        CompletableFuture.runAsync(() -> {
            for (FirstPageCountEnum value : FirstPageCountEnum.values()) {
                CACHE_MQ_MAP_LOCK.put(value.getTableName(), new Object());
                try {
                    // Send one message per table at startup so the data is refreshed on initialization
                    String uuid = IdUtil.randomUUID();
                    RabbitMQSender.sendMessage(MQConfig.FIRST_PAGE_SQL_ROUTINGKEY, value.getTableName(), MessageType.COMMON, uuid);
                } catch (Exception e) {
                    e.printStackTrace();
                }
            }
            // Create the consumer threads (their logic is shown below). Known issue: too many
            // threads are created, because table names repeat across the enum values.
            for (FirstPageCountEnum value : FirstPageCountEnum.values()) {
                Thread consumerThread = new Thread(new Consumer(CACHE_MQ_MAP, value.getTableName(), userFirstPageCountService));
                consumerThread.start();
            }
        });
    }

    // Deduplication map: table name -> 1 (update pending) or 0 (idle)
    private final ConcurrentHashMap<String, Integer> CACHE_MQ_MAP = new ConcurrentHashMap<>(32);

    // Lock objects, one per table name
    private static final ConcurrentHashMap<String, Object> CACHE_MQ_MAP_LOCK = new ConcurrentHashMap<>(32);
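The comment in `init()` notes that too many threads are started because several enum values share a table name. One possible fix, sketched here under assumptions, is to collect the distinct table names first and start one thread per name. The enum `CountEnum` and its constants are hypothetical stand-ins for `FirstPageCountEnum`, whose real values appear only in the screenshot.

```java
import java.util.Arrays;
import java.util.LinkedHashSet;
import java.util.Set;
import java.util.stream.Collectors;

final class DistinctTableWorkers {
    // Hypothetical stand-in for FirstPageCountEnum: two constants share one table.
    enum CountEnum {
        ORDER_TOTAL("user_order"),
        ORDER_PENDING("user_order"),
        TICKET_OPEN("ticket");

        private final String tableName;

        CountEnum(String tableName) {
            this.tableName = tableName;
        }

        String getTableName() {
            return tableName;
        }
    }

    /** Distinct table names, in enum declaration order. */
    static Set<String> distinctTableNames() {
        return Arrays.stream(CountEnum.values())
                .map(CountEnum::getTableName)
                .collect(Collectors.toCollection(LinkedHashSet::new));
    }

    public static void main(String[] args) {
        // One worker per distinct table instead of one per enum constant.
        for (String table : distinctTableNames()) {
            Thread worker = new Thread(
                    () -> System.out.println("worker for " + table), "consumer-" + table);
            worker.start();
        }
    }
}
```

With three enum constants over two tables, only two workers are started. The same set could also drive the lock-map initialization, since that map is keyed by table name anyway.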

The logic run by each consumer thread created above:

    static class Consumer implements Runnable {
        private final ConcurrentHashMap<String, Integer> dataMap;
        private final String tableName;
        private final UserFirstPageCountService userFirstPageCountService;

        public Consumer(ConcurrentHashMap<String, Integer> dataMap, String tableName,
                        UserFirstPageCountService userFirstPageCountService) {
            this.userFirstPageCountService = userFirstPageCountService;
            this.tableName = tableName;
            this.dataMap = dataMap;
        }

        @Override
        public void run() {
            // Consume data
            while (true) {
                for (String key : dataMap.keySet()) {
                    // Each thread handles only its own table
                    if (!key.equals(tableName)) {
                        continue;
                    }
                    Object lock = CACHE_MQ_MAP_LOCK.get(key);
                    // Read the flag
                    try {
                        Thread.sleep(2000);
                        int value = dataMap.getOrDefault(key, 0);
                        log.info("Consumed: {}, value:{}", key, value);
                        if (value == 1) {
                            // Reset the flag to 0 before processing, so a message arriving meanwhile sets it again
                            dataMap.put(key, 0);
                            // Business logic; it retries internally
                            userFirstPageCountService.sqlParse(tableName);
                        }
                        synchronized (lock) {
                            if (dataMap.getOrDefault(key, 0) == 0) {
                                // Flag is still 0: nothing pending, wait until the listener notifies
                                log.info("wait {},{}", key, 0);
                                lock.wait();
                                log.info("notify {},{}", key, 0);
                            }
                        }
                    } catch (Exception e) {
                        log.error("Consumed error ", e);
                    }
                }
            }
        }
    }
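Taken together, the listener and the consumer thread behave like a per-table "dirty flag": any number of messages that arrive while the flag is already 1 collapse into a single run of the business logic. The following is a minimal, self-contained sketch of that idea, with the flag and the monitor kept in one object. The class `DirtyFlag` and its method names are hypothetical and do not appear in the original code.

```java
final class DirtyFlag {
    private boolean dirty;

    /** Producer side: returns true only if this call flipped the flag from clean to dirty. */
    synchronized boolean markDirty() {
        if (dirty) {
            return false;      // an update is already pending, so this message is a duplicate
        }
        dirty = true;
        notifyAll();           // wake the worker if it is waiting
        return true;
    }

    /** Worker side: blocks until the flag is set, then clears it. */
    synchronized void awaitAndClear() throws InterruptedException {
        while (!dirty) {       // the loop guards against spurious wakeups
            wait();
        }
        dirty = false;
    }

    /** Non-blocking variant: clears the flag and reports whether it was set. */
    synchronized boolean tryClear() {
        boolean wasDirty = dirty;
        dirty = false;
        return wasDirty;
    }

    public static void main(String[] args) throws InterruptedException {
        DirtyFlag flag = new DirtyFlag();
        Thread worker = new Thread(() -> {
            try {
                flag.awaitAndClear();
                System.out.println("recount once");
            } catch (InterruptedException e) {
                Thread.currentThread().interrupt();
            }
        });
        worker.start();
        for (int i = 0; i < 5; i++) {
            flag.markDirty();  // calls made while the flag is already set are dropped
        }
        worker.join();
    }
}
```

Because the check and the wait happen under the same monitor, a notification cannot slip in between them, which is the same reason the original consumer re-reads the flag inside its `synchronized` block before calling `wait()`.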

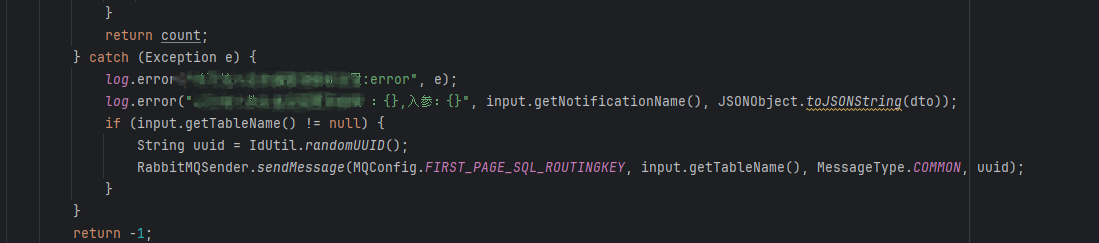

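This is the retry logic. The message sent during initialization guarantees that no update is lost, and if the call here fails it is retried until it succeeds.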
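The retry code itself is visible only in the screenshot, so what follows is a generic sketch of retrying a call with a fixed pause, not the author's implementation. It is bounded by a maximum attempt count, unlike the "retry until it succeeds" behaviour described above. The class `Retry`, the method `withRetry`, and the parameters `maxAttempts` and `pauseMillis` are all hypothetical.

```java
import java.util.concurrent.Callable;

final class Retry {
    /**
     * Runs the task until it succeeds or maxAttempts is reached.
     * On giving up, throws IllegalStateException with the last failure as its cause.
     */
    static <T> T withRetry(Callable<T> task, int maxAttempts, long pauseMillis) {
        if (maxAttempts < 1) {
            throw new IllegalArgumentException("maxAttempts must be at least 1");
        }
        Exception last = null;
        for (int attempt = 1; attempt <= maxAttempts; attempt++) {
            try {
                return task.call();
            } catch (Exception e) {
                last = e;
                if (attempt < maxAttempts) {
                    pause(pauseMillis);   // fixed pause between attempts
                }
            }
        }
        throw new IllegalStateException("gave up after " + maxAttempts + " attempts", last);
    }

    private static void pause(long millis) {
        try {
            Thread.sleep(millis);
        } catch (InterruptedException e) {
            Thread.currentThread().interrupt();   // preserve the interrupt for the caller
            throw new IllegalStateException("interrupted while waiting to retry", e);
        }
    }

    public static void main(String[] args) {
        int[] calls = {0};
        // Hypothetical task: fails twice, then succeeds on the third call.
        String result = withRetry(() -> {
            if (++calls[0] < 3) {
                throw new IllegalStateException("transient failure");
            }
            return "updated";
        }, 5, 100L);
        System.out.println(result + " after " + calls[0] + " calls");
    }
}
```

A cap on attempts keeps a permanently failing recount from blocking the consumer thread forever; whether that trade-off suits this use case depends on how the startup message and later messages are expected to cover missed updates.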
