YARN memory configuration problem

A Hive query failed with the following output:

Ended Job = job_1544003470555_0007 with errors

Error during job, obtaining debugging information...

FAILED: Execution Error, return code 2 from org.apache.hadoop.hive.ql.exec.mr.MapRedTask

To check whether YARN itself was at fault, I then ran the example pi job as a test:

hadoop jar hadoop-mapreduce-examples-3.0.0-cdh6.0.0.jar pi 2 10

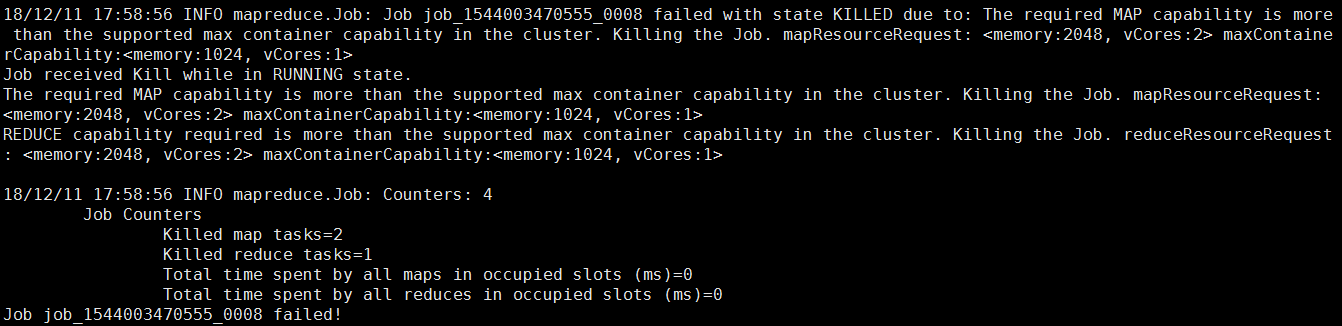

The job failed with this error:

18/12/11 17:58:56 INFO mapreduce.Job: Job job_1544003470555_0008 failed with state KILLED due to: The required MAP capability is more than the supported max container capability in the cluster. Killing the Job. mapResourceRequest: <memory:2048, vCores:2> maxContainerCapability:<memory:1024, vCores:1>
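The two resource descriptions in this message can be compared directly to see which limit was hit. The snippet below is a minimal sketch, not part of Hadoop: the helper names `parse_capability_error` and `exceeded_dimensions` are hypothetical, and the regular expression assumes the `<memory:N, vCores:N>` format shown in the log line above.

```python
import re

# Matches YARN resource strings such as "<memory:2048, vCores:2>".
RESOURCE = re.compile(r"<memory:(\d+), vCores:(\d+)>")


def parse_capability_error(line):
    """Return ((req_mb, req_vcores), (max_mb, max_vcores)) from the error line."""
    found = RESOURCE.findall(line)
    if len(found) != 2:
        raise ValueError("expected exactly two resource descriptions")
    requested, maximum = [(int(mb), int(vc)) for mb, vc in found]
    return requested, maximum


def exceeded_dimensions(requested, maximum):
    """List the resource dimensions where the request is above the maximum."""
    names = ("memory", "vCores")
    return [n for n, r, m in zip(names, requested, maximum) if r > m]


log_line = (
    "mapResourceRequest: <memory:2048, vCores:2> "
    "maxContainerCapability:<memory:1024, vCores:1>"
)
requested, maximum = parse_capability_error(log_line)
print(exceeded_dimensions(requested, maximum))  # ['memory', 'vCores']
```

Here both memory and vcores are over the limit, so fixing only one of them would still leave the job killed.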

Solution

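Adjust the parameters below, including the virtual memory ratio, and choose values that suit your own cluster. The error above means that the map task's request (2048 MB, 2 vcores) is larger than the biggest container the cluster will grant (1024 MB, 1 vcore). The job can only run once the request fits within that maximum, so either lower the request or raise the maximum container size (yarn.scheduler.maximum-allocation-mb and yarn.scheduler.maximum-allocation-vcores, which are in turn limited by the resources the NodeManagers offer).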

mapreduce.map.memory.mb=2048

mapreduce.reduce.memory.mb=2048

yarn.nodemanager.vmem-pmem-ratio=3

For the other settings, refer to the YARN parameter documentation.

With these values, the virtual memory limit of a map container is 2048 × 3 = 6144 MB.
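The arithmetic can be written out as a small helper. This is only an illustrative sketch: `vmem_limit_mb` is a hypothetical name, and it assumes the limit is simply the container's physical memory multiplied by yarn.nodemanager.vmem-pmem-ratio. The NodeManager enforces this limit only when yarn.nodemanager.vmem-check-enabled is true.

```python
def vmem_limit_mb(container_mb, vmem_pmem_ratio):
    """Virtual memory limit for a container, in MB.

    container_mb    -- physical memory of the container,
                       e.g. mapreduce.map.memory.mb
    vmem_pmem_ratio -- yarn.nodemanager.vmem-pmem-ratio
    """
    return container_mb * vmem_pmem_ratio


# Values from the settings above: 2048 MB containers, ratio 3.
print(vmem_limit_mb(2048, 3))  # 6144
```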

Other settings can lead to similar failures:

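The memory that the ResourceManager allocates to a container is bounded. It must be at least yarn.scheduler.minimum-allocation-mb (2 GB in this example) and must not exceed yarn.scheduler.maximum-allocation-mb (8 GB in this example). Both properties are specified in MB, so these values are written as 2048 and 8192.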

The CPU (vcores) that the ResourceManager allocates to a container must likewise fall between a minimum and a maximum, which are set with:

yarn.scheduler.minimum-allocation-vcores=2

yarn.scheduler.maximum-allocation-vcores=8
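To make the effect of these bounds concrete, here is a simplified model in Python. It is a sketch under assumptions, not YARN's actual code: the `normalize` function is hypothetical, it rejects requests above the maximum, and it rounds smaller requests up to a multiple of the minimum. The real rounding behaviour depends on which scheduler and resource calculator the cluster uses.

```python
import math


def normalize(requested, minimum, maximum):
    """Simplified model of how a request is fitted to the scheduler bounds.

    Requests above the maximum are rejected; anything else is rounded up
    to a multiple of the minimum (assumed rounding rule, see lead-in).
    """
    if requested > maximum:
        raise ValueError(
            f"request {requested} exceeds maximum allocation {maximum}"
        )
    steps = max(1, math.ceil(requested / minimum))
    return min(steps * minimum, maximum)


# Memory bounds from the example above: 2048 MB to 8192 MB.
print(normalize(1024, 2048, 8192))  # 2048, raised to the minimum
print(normalize(3000, 2048, 8192))  # 4096, rounded up to a multiple of 2048

# vcore bounds from the example above: 2 to 8.
print(normalize(3, 2, 8))  # 4
```

Under this model, a request such as `normalize(9000, 2048, 8192)` raises an error, which corresponds to the "more than the supported max container capability" failure shown earlier.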