Some handy Python and TensorFlow tips

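1. Formatted output with format

Here is a small example that combines format with a list comprehension to build a list of strings (11点{}分 reads "11:{}", one entry per minute):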

t=["11点{}分".format(i) for i in range(60)]

print(t)

['11点0分', '11点1分', '11点2分', '11点3分', '11点4分', '11点5分', '11点6分', '11点7分', '11点8分', '11点9分', '11点10分', '11点11分', '11点12分', '11点13分', '11点14分', '11点15分', '11点16分', '11点17分', '11点18分', '11点19分', '11点20分', '11点21分', '11点22分', '11点23分', '11点24分', '11点25分', '11点26分', '11点27分', '11点28分', '11点29分', '11点30分', '11点31分', '11点32分', '11点33分', '11点34分', '11点35分', '11点36分', '11点37分', '11点38分', '11点39分', '11点40分', '11点41分', '11点42分', '11点43分', '11点44分', '11点45分', '11点46分', '11点47分', '11点48分', '11点49分', '11点50分', '11点51分', '11点52分', '11点53分', '11点54分', '11点55分', '11点56分', '11点57分', '11点58分', '11点59分']

The constructed list looks like this:

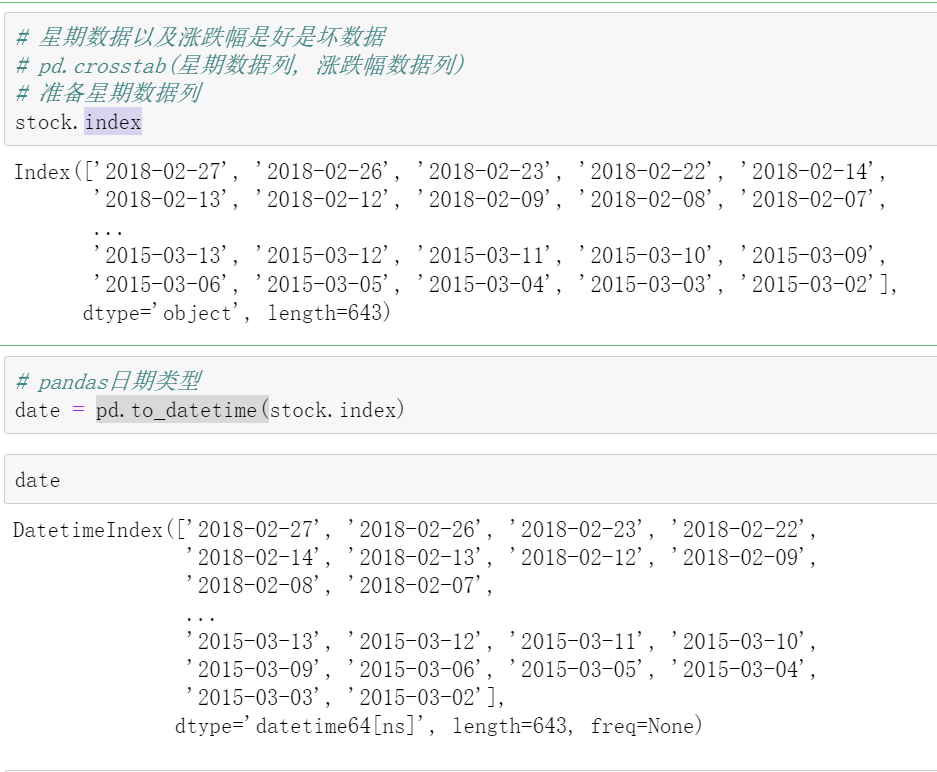

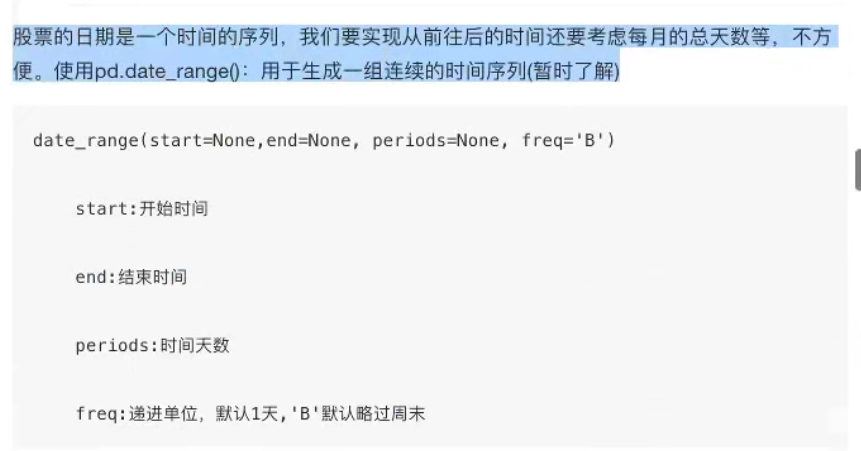
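As a small extension of the example above, a format specifier can zero-pad the minute so every string has the same width. This is only a minimal sketch: the `{:02d}` specifier, the "11:MM" layout, and the variable names are illustrative additions, not part of the original example.

```python
# Build "11:00" ... "11:59" with zero-padded minutes (illustrative variant).
times = ["11:{:02d}".format(i) for i in range(60)]

# The same result written with an f-string (Python 3.6+).
times_f = [f"11:{i:02d}" for i in range(60)]

print(times[:3])   # ['11:00', '11:01', '11:02']
print(times[-1])   # 11:59
```

Zero-padding keeps the strings sortable as text, which is handy if they are later used as keys or labels.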

2. Time series in pandas

3. Converting strings to a time series in pandas

pd.to_datetime()

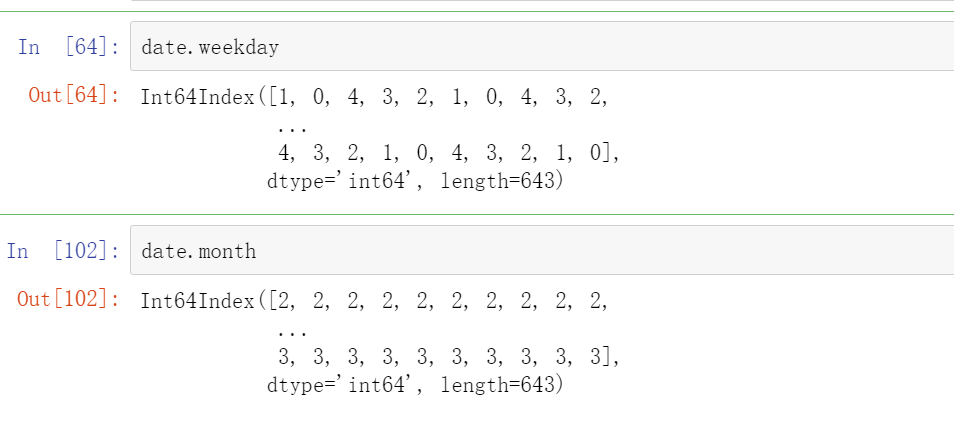

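As you can see, the result is converted to a DatetimeIndex. A convenient property of this type is that date.month directly returns the month of each entry, and date.weekday directly returns the day of the week (Monday is 0).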
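The conversion described above can be sketched as follows. The sample date strings are hypothetical and chosen only for illustration; the calls themselves (`pd.to_datetime`, `.month`, `.weekday`) are the ones named in the text.

```python
import pandas as pd

# Hypothetical sample dates, chosen only for illustration.
dates = pd.to_datetime(["2020-01-01", "2020-02-15", "2020-03-08"])

print(type(dates).__name__)      # DatetimeIndex
print(dates.month.tolist())      # [1, 2, 3]
print(dates.weekday.tolist())    # [2, 5, 6]  (Monday is 0)

# On a single Timestamp, weekday is a method rather than an attribute.
print(dates[0].weekday())        # 2
```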

4. Using with ... as

Let's first look at this code:

file = open("/tmp/foo.txt")
try:
    data = file.read()
finally:
    file.close()

Written with with ... as, the same logic becomes:

with open("/tmp/foo.txt") as file:
    data = file.read()

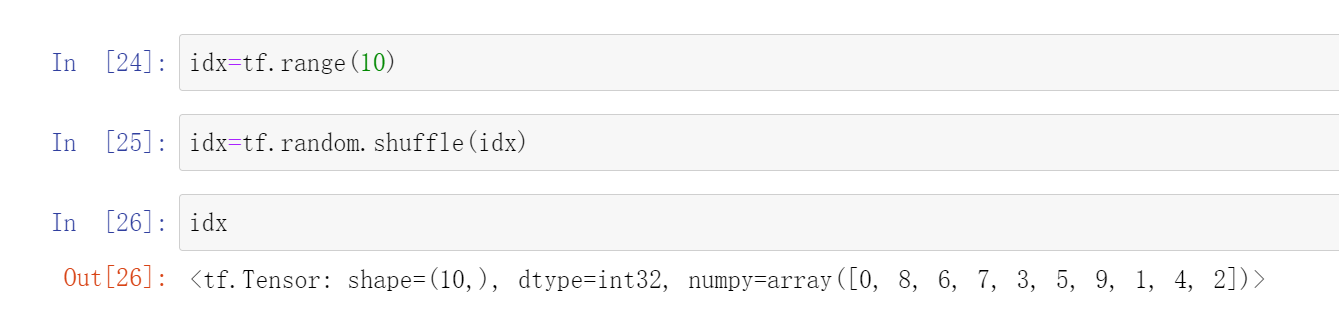
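To check that the with form really closes the file, here is a minimal runnable sketch. It writes a throwaway temporary file instead of relying on /tmp/foo.txt existing; the file contents and variable names are assumptions made for the example.

```python
import os
import tempfile

# Create a throwaway file so the example does not depend on /tmp/foo.txt.
with tempfile.NamedTemporaryFile("w", suffix=".txt", delete=False) as tmp:
    tmp.write("hello")
    path = tmp.name

with open(path) as file:
    data = file.read()

# The file is closed as soon as the with block exits, even if read() had raised.
print(data)          # hello
print(file.closed)   # True

os.remove(path)      # clean up the temporary file
```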

5. tf.random.shuffle(): shuffling

tf.random.shuffle(idx)

This shuffles the elements of idx along its first dimension, giving each one a new random position.
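A minimal sketch of how this is typically used, assuming idx is a vector of sample indices. The toy features x and labels y are hypothetical; shuffling the indices once and gathering with them keeps features and labels aligned.

```python
import tensorflow as tf

idx = tf.range(10)                  # [0 1 2 ... 9]
shuffled = tf.random.shuffle(idx)   # same elements, random order

print(shuffled.numpy())
print(sorted(shuffled.numpy().tolist()) == list(range(10)))  # True

# Hypothetical toy data: row i of x is [2*i, 2*i + 1], and y[i] = i.
x = tf.reshape(tf.range(20), [10, 2])
y = tf.range(10)

# Reorder features and labels with the same shuffled indices.
x_shuf = tf.gather(x, shuffled)
y_shuf = tf.gather(y, shuffled)
```

No expected order is shown because the result changes on every run.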

6. tf.data.Dataset.from_tensor_slices() and batch()

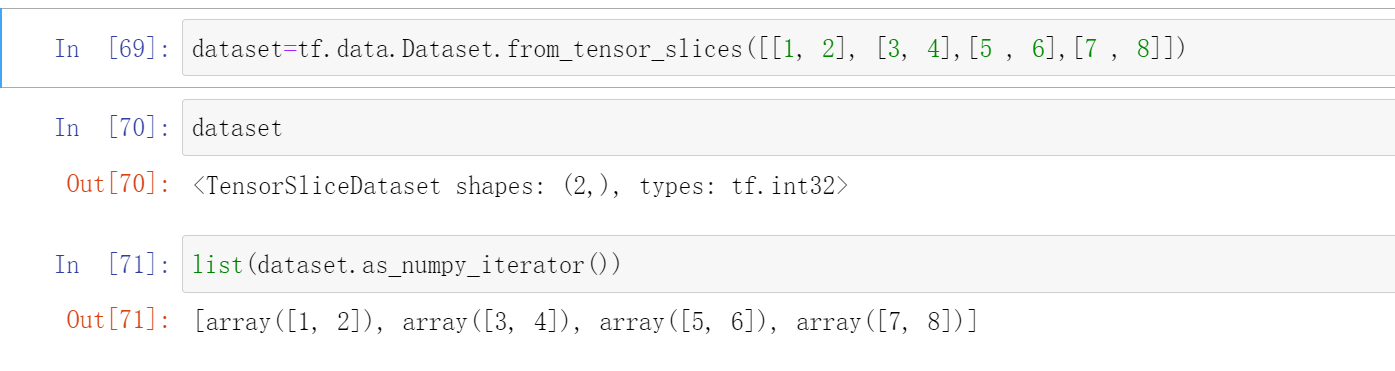

1. tf.data.Dataset.from_tensor_slices()

This function takes a tensor, slices it along its first dimension, and returns a dataset whose elements are those slices.

import tensorflow as tf
t = tf.range(10.)[:, None]
t = tf.data.Dataset.from_tensor_slices(t)
# <TensorSliceDataset shapes: (1,), types: tf.float32>
for i in t:
    print(i.numpy())
# [0.]
# [1.]
# [2.]
# [3.]
# [4.]
# [5.]
# [6.]
# [7.]
# [8.]
# [9.]
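The same function also accepts a tuple of tensors, which is how features and labels are usually paired. The sketch below uses hypothetical toy data (the names features and labels are not from the original) to show that both tensors are sliced along the first dimension in lockstep.

```python
import tensorflow as tf

# Hypothetical toy data: 4 samples with 2 features each, plus one label per sample.
features = tf.constant([[1, 2], [3, 4], [5, 6], [7, 8]])
labels = tf.constant([0, 1, 0, 1])

# Passing a tuple slices both tensors along their first dimension together.
ds = tf.data.Dataset.from_tensor_slices((features, labels))

pairs = [(f.numpy().tolist(), int(l)) for f, l in ds]
print(pairs)  # [([1, 2], 0), ([3, 4], 1), ([5, 6], 0), ([7, 8], 1)]
```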

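2. batch()

This works like a grouping function: t.batch(3) puts every three consecutive elements into one group. In data processing it is usually chained with the function above.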

import tensorflow as tf
t = tf.range(10.)[:, None]
t = tf.data.Dataset.from_tensor_slices(t)
# <TensorSliceDataset shapes: (1,), types: tf.float32>
for i in t:
    print(i.numpy())
# [0.]
# [1.]
# [2.]
# [3.]
# [4.]
# [5.]
# [6.]
# [7.]
# [8.]
# [9.]
batch_t = t.batch(2)
# <BatchDataset shapes: (None, 1), types: tf.float32>
for i in batch_t:
    print(i.numpy())
# [[0.]
#  [1.]]
# [[2.]
#  [3.]]
# [[4.]
#  [5.]]
# [[6.]
#  [7.]]
# [[8.]
#  [9.]]

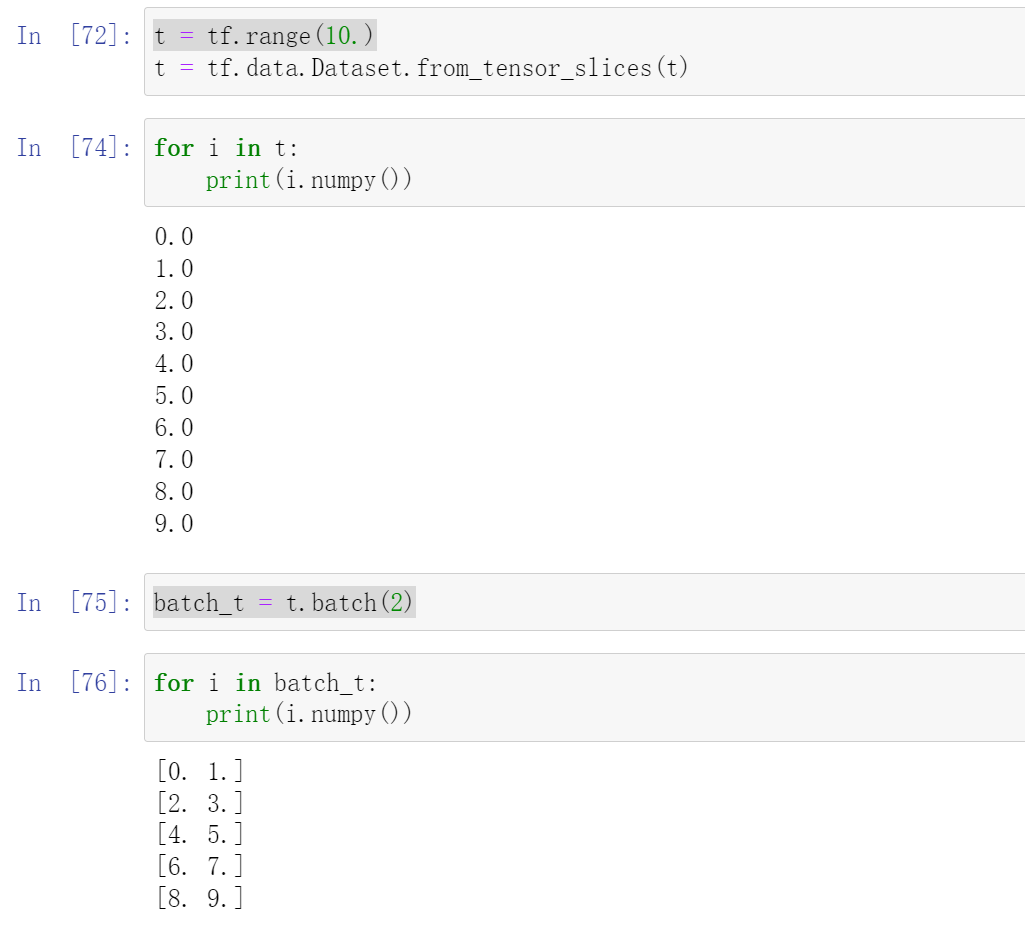
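When the number of elements is not a multiple of the batch size, the last batch is smaller. A minimal sketch, assuming the same 10-element dataset and the batch size of 3 mentioned above; `drop_remainder` is an optional argument of `batch()` that discards the incomplete final batch.

```python
import tensorflow as tf

ds = tf.data.Dataset.from_tensor_slices(tf.range(10))

# 10 elements in groups of 3: the last batch holds the single leftover element.
sizes = [int(b.shape[0]) for b in ds.batch(3)]
print(sizes)       # [3, 3, 3, 1]

# drop_remainder=True discards the incomplete final batch.
sizes_full = [int(b.shape[0]) for b in ds.batch(3, drop_remainder=True)]
print(sizes_full)  # [3, 3, 3]
```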

These two functions are usually chained together when preparing data:

dataset = tf.data.Dataset.from_tensor_slices([[1, 2], [3, 4], [5, 6], [7, 8]]).batch(2)
train_iter = iter(dataset)
sample = next(train_iter)
sample[0].shape, sample[1].shape
# => (TensorShape([2]), TensorShape([2]))
# iterate over the rows of the first batch
for row in sample:
    print(row)
# => tf.Tensor([1 2], shape=(2,), dtype=int32)
# => tf.Tensor([3 4], shape=(2,), dtype=int32)

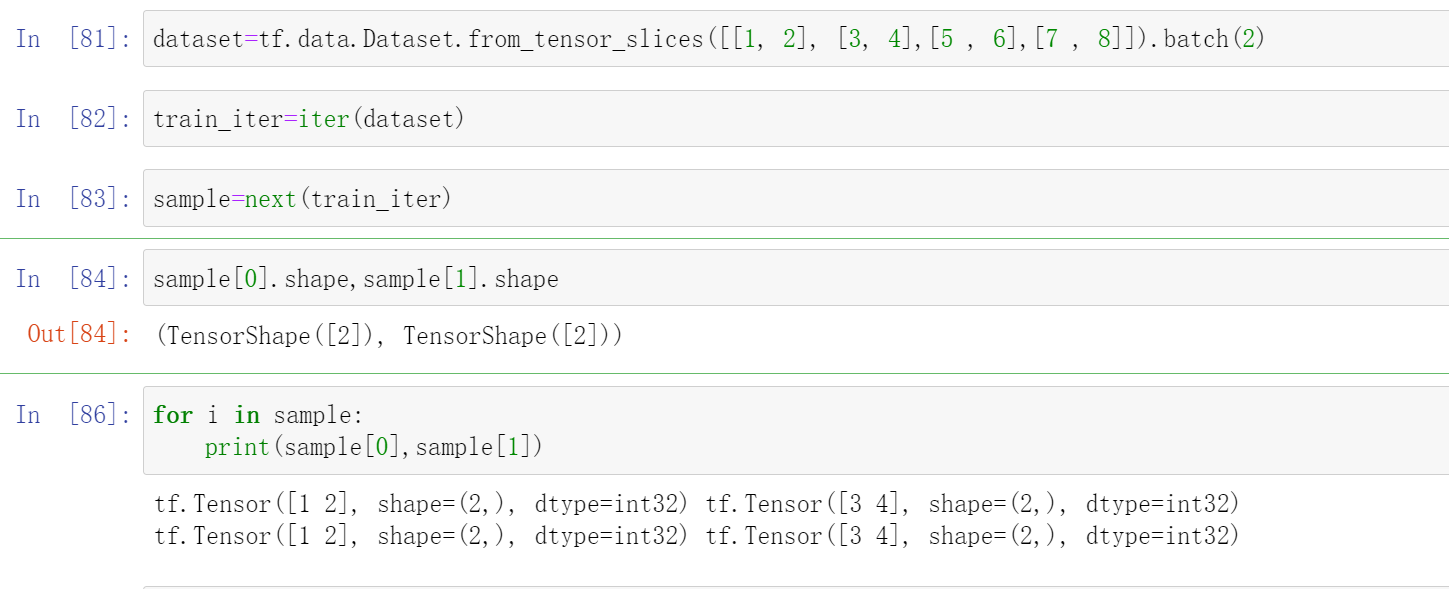

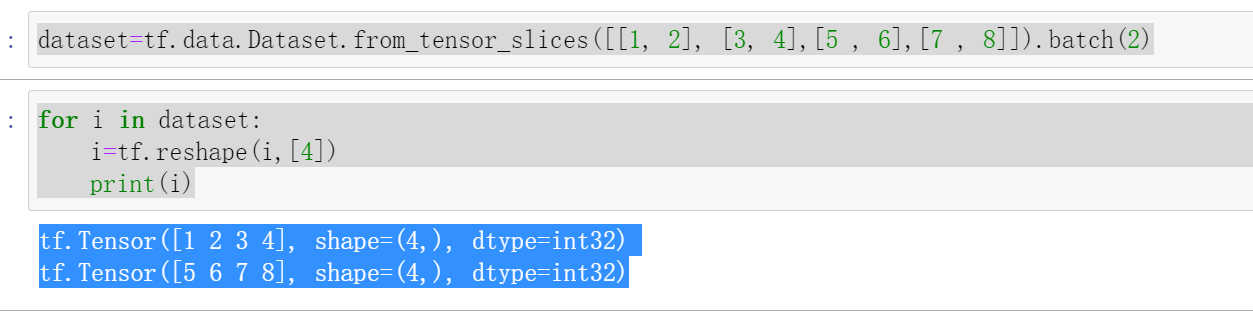

For example, to access each batch as a flat tensor, reshape it while iterating:

dataset = tf.data.Dataset.from_tensor_slices([[1, 2], [3, 4], [5, 6], [7, 8]]).batch(2)
for i in dataset:
    i = tf.reshape(i, [4])
    print(i)
# => tf.Tensor([1 2 3 4], shape=(4,), dtype=int32)
# => tf.Tensor([5 6 7 8], shape=(4,), dtype=int32)

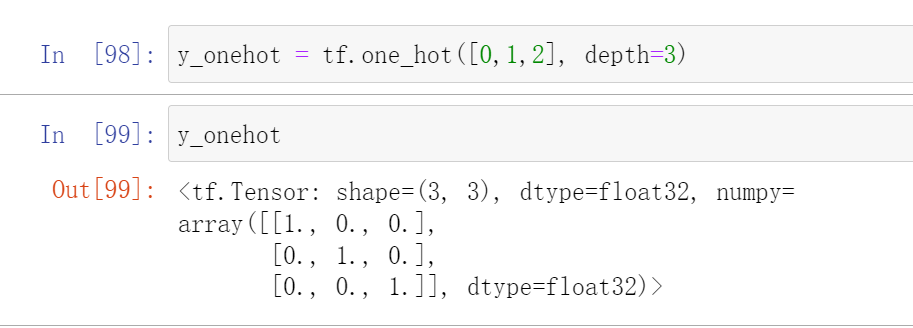

7. one_hot

The call looks like this:

y_onehot = tf.one_hot(y, depth)

Here depth is the number of classes.

For example:

y_onehot = tf.one_hot([0,1,2], depth=3)

y_onehot
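To make the result concrete, the following minimal sketch prints what tf.one_hot returns for these labels. The extra out-of-range index at the end is an illustrative addition.

```python
import tensorflow as tf

y = tf.constant([0, 1, 2])
y_onehot = tf.one_hot(y, depth=3)   # float32 by default

print(y_onehot.numpy())
# [[1. 0. 0.]
#  [0. 1. 0.]
#  [0. 0. 1.]]

# An index outside [0, depth) becomes an all-zero row.
out_of_range = tf.one_hot([3], depth=3)
print(out_of_range.numpy())  # [[0. 0. 0.]]
```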

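8. Gradients, tf.Variable, and assign_sub()

When calling TensorFlow's automatic differentiation, the usual pattern is the template below (the ... parts are placeholders to fill in):

# To track the gradients of w1, b1, ..., first define them as tf.Variable (with an initial value)
w1 = tf.Variable(...)
w2 = tf.Variable(...)
# ...
b3 = tf.Variable(...)
with tf.GradientTape() as tape:  # tracks tf.Variable objects
    # define your function expression here
    loss = ...
grads = tape.gradient(loss, [w1, b1, w2, b2, w3, b3])
# w1, b1, ..., b3 must be tf.Variable here, otherwise the returned gradients are None
# To keep w1, b1, w2, b2, ... as tf.Variable after the update, use assign_sub
# Same value as w1 = w1 - lr * grads[0], but updated in place
w1.assign_sub(lr * grads[0])
b1.assign_sub(lr * grads[1])
w2.assign_sub(lr * grads[2])
b2.assign_sub(lr * grads[3])
w3.assign_sub(lr * grads[4])
b3.assign_sub(lr * grads[5])

assign_sub is commonly used for in-place parameter updates. The w to be updated must first be declared as updatable and trainable, that is, as a tf.Variable, before it can be updated in place.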
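The points above can be checked on a tiny scalar case. This is a minimal sketch with made-up values (w = 3.0, lr = 0.1, loss = w^2 + c^2), not the network from these notes: it shows that a tf.Variable gets a gradient while a plain constant gets None, and that assign_sub keeps the Variable type where plain subtraction does not.

```python
import tensorflow as tf

w = tf.Variable(3.0)   # trainable, so the tape watches it automatically
c = tf.constant(3.0)   # a plain tensor is not watched
lr = 0.1               # illustrative learning rate

with tf.GradientTape() as tape:
    loss = w * w + c * c

grad_w, grad_c = tape.gradient(loss, [w, c])
print(float(grad_w))   # 6.0, since d(w^2)/dw = 2w
print(grad_c)          # None

# In-place update: w stays a tf.Variable (value is now about 2.4).
w.assign_sub(lr * grad_w)
print(isinstance(w, tf.Variable))        # True

# Plain subtraction produces an ordinary tensor instead of a Variable.
w_plain = w - lr * grad_w
print(isinstance(w_plain, tf.Variable))  # False
```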

Here is a complete example, a three-layer network trained on MNIST:

import os

os.environ['TF_CPP_MIN_LOG_LEVEL'] = '2'

import matplotlib

from matplotlib import pyplot as plt

# Default parameters for plots

matplotlib.rcParams['font.size'] = 20

matplotlib.rcParams['figure.titlesize'] = 20

matplotlib.rcParams['figure.figsize'] = [9, 7]

matplotlib.rcParams['font.family'] = ['STKaiTi']

matplotlib.rcParams['axes.unicode_minus']=False

import tensorflow as tf

from tensorflow import keras

from tensorflow.keras import datasets

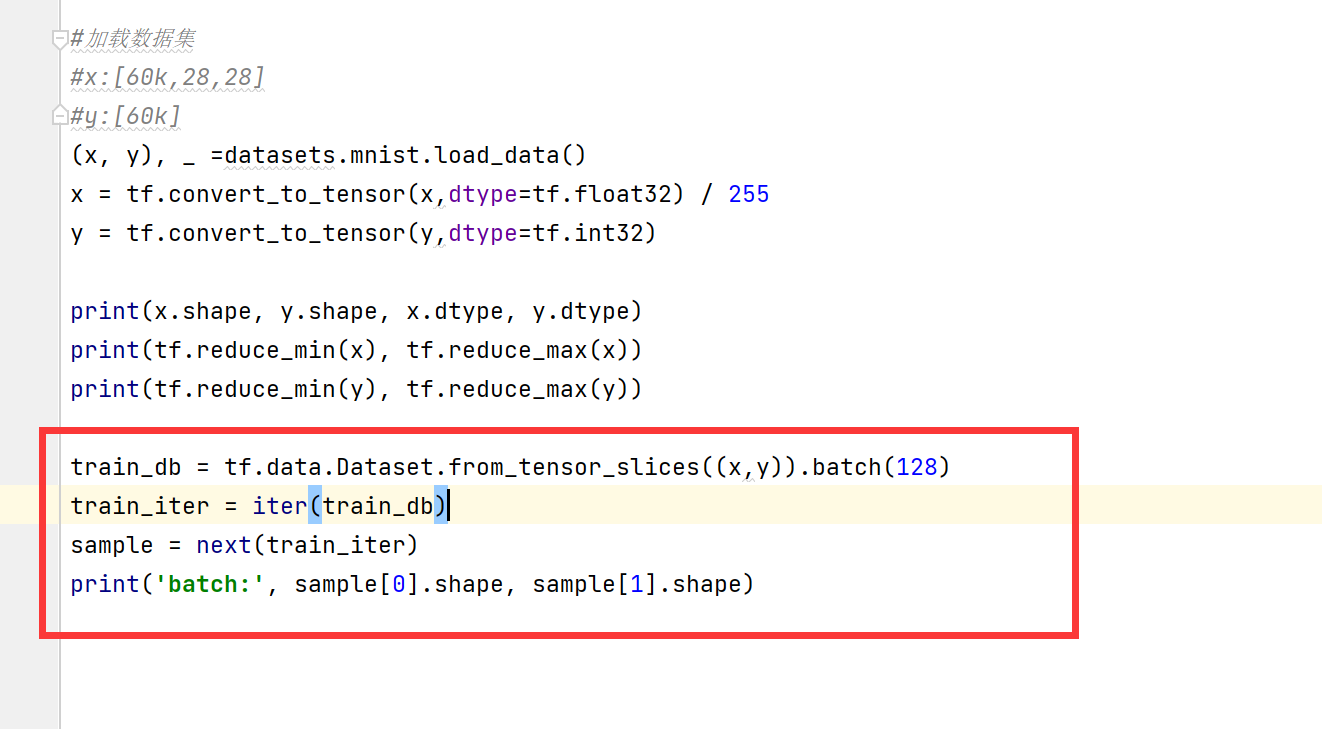

# x: [60k, 28, 28],

# y: [60k]

(x, y), _ = datasets.mnist.load_data()

# x: [0~255] => [0~1.]

x = tf.convert_to_tensor(x, dtype=tf.float32) / 255.

y = tf.convert_to_tensor(y, dtype=tf.int32)

print(x.shape, y.shape, x.dtype, y.dtype)

print(tf.reduce_min(x), tf.reduce_max(x))

print(tf.reduce_min(y), tf.reduce_max(y))

train_db = tf.data.Dataset.from_tensor_slices((x,y)).batch(128)

train_iter = iter(train_db)

sample = next(train_iter)

print('batch:', sample[0].shape, sample[1].shape)

# [b, 784] => [b, 256] => [b, 128] => [b, 10]

# [dim_in, dim_out], [dim_out]

# These must be defined as tf.Variable, because their gradients are tracked later when computing the partial derivatives

w1 = tf.Variable(tf.random.truncated_normal([784, 256], stddev=0.1))

b1 = tf.Variable(tf.zeros([256]))

w2 = tf.Variable(tf.random.truncated_normal([256, 128], stddev=0.1))

b2 = tf.Variable(tf.zeros([128]))

w3 = tf.Variable(tf.random.truncated_normal([128, 10], stddev=0.1))

b3 = tf.Variable(tf.zeros([10]))

lr = 1e-3

losses = []

for epoch in range(20):  # iterate over the dataset for 20 epochs
    for step, (x, y) in enumerate(train_db):  # for every batch
        # x: [128, 28, 28]
        # y: [128]
        # [b, 28, 28] => [b, 28*28]
        x = tf.reshape(x, [-1, 28*28])
        with tf.GradientTape() as tape:  # tracks tf.Variable objects
            # x: [b, 28*28]
            # h1 = x@w1 + b1
            # [b, 784]@[784, 256] + [256] => [b, 256] + [256] => [b, 256] + [b, 256]
            h1 = x@w1 + tf.broadcast_to(b1, [x.shape[0], 256])
            h1 = tf.nn.relu(h1)
            # [b, 256] => [b, 128]
            h2 = h1@w2 + b2
            h2 = tf.nn.relu(h2)
            # [b, 128] => [b, 10]
            out = h2@w3 + b3
            # compute loss
            # out: [b, 10]
            # y: [b] => [b, 10]
            y_onehot = tf.one_hot(y, depth=10)
            # mse = mean((y - out)^2)
            # [b, 10]
            loss = tf.square(y_onehot - out)
            # mean: scalar
            loss = tf.reduce_mean(loss)
        # compute gradients
        grads = tape.gradient(loss, [w1, b1, w2, b2, w3, b3])
        # print(grads)
        # w1 = w1 - lr * w1_grad
        w1.assign_sub(lr * grads[0])
        b1.assign_sub(lr * grads[1])
        w2.assign_sub(lr * grads[2])
        b2.assign_sub(lr * grads[3])
        w3.assign_sub(lr * grads[4])
        b3.assign_sub(lr * grads[5])
        if step % 100 == 0:
            print(epoch, step, 'loss:', float(loss))
    # record the loss of the last batch of each epoch
    losses.append(float(loss))

plt.figure()

plt.plot(losses, color='C0', marker='s', label='训练')

plt.xlabel('Epoch')

plt.legend()

plt.ylabel('MSE')

plt.savefig('forward.svg')

# plt.show()

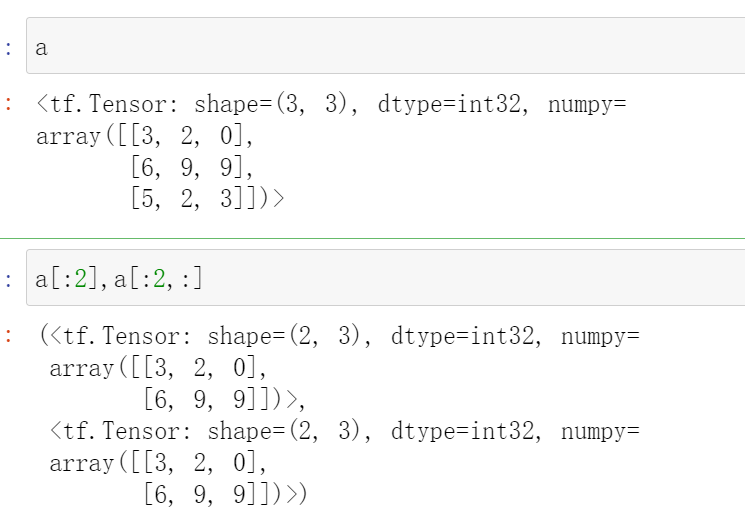

9. a[:2] is equivalent to a[:2, :]

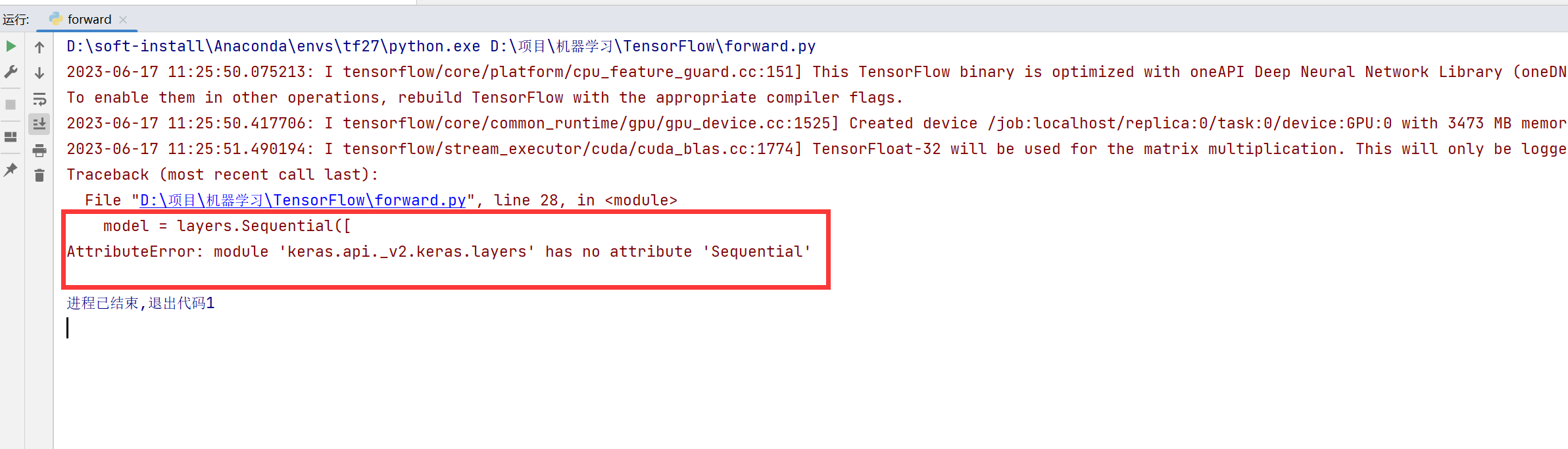

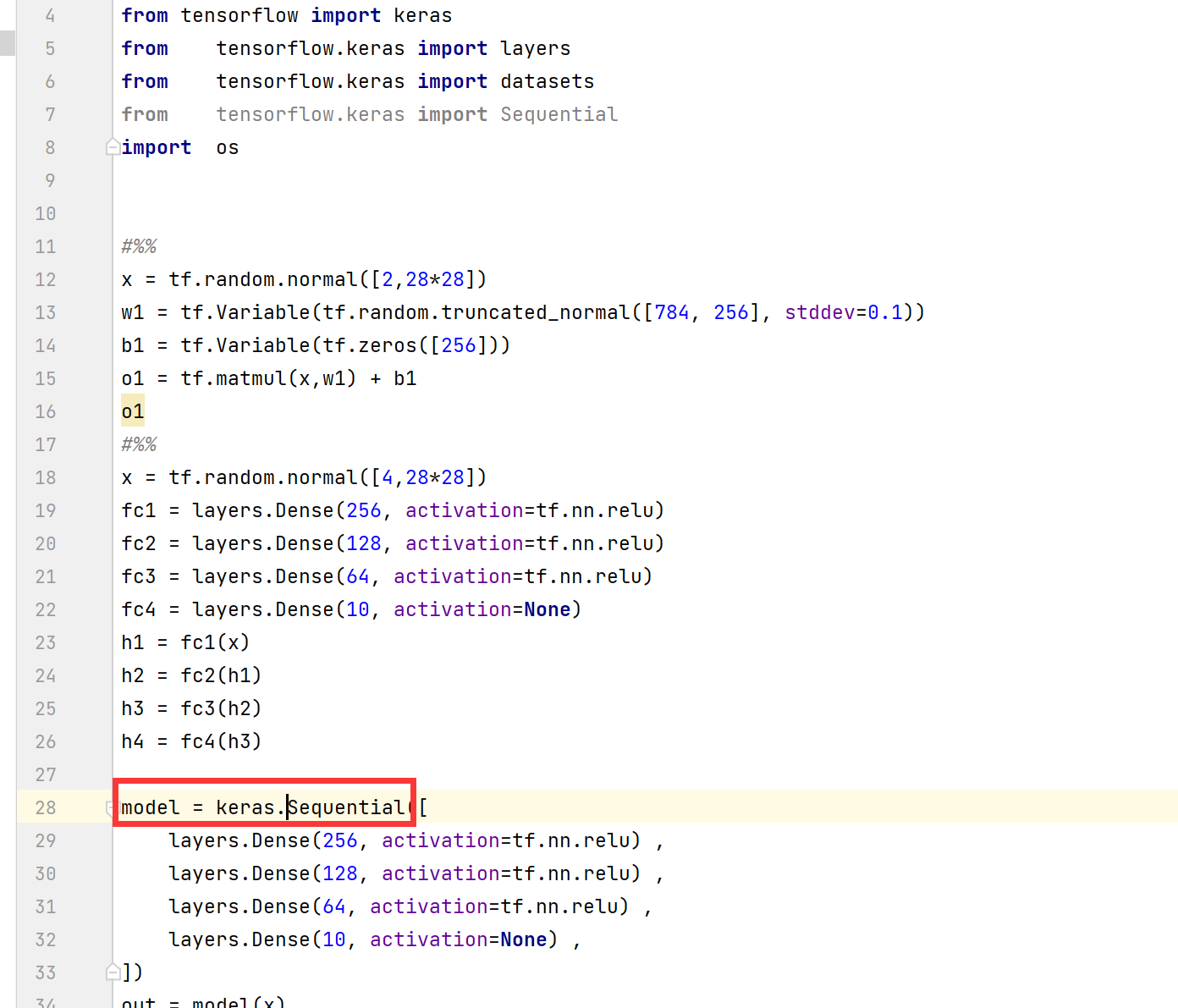
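The equivalence can be verified with a small array. This sketch uses NumPy and a hypothetical 3x4 array as an assumption about what the screenshots show; TensorFlow tensors follow the same slicing rule.

```python
import numpy as np

a = np.arange(12).reshape(3, 4)   # hypothetical 3x4 array

# Omitted trailing dimensions are taken in full, so both select the first two rows.
first = a[:2]
second = a[:2, :]

print(first.shape, second.shape)      # (2, 4) (2, 4)
print(np.array_equal(first, second))  # True
```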

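10. layers.Sequential does not work

Today I downloaded some code, and running it raised an error at layers.Sequential. It may no longer be available there.

The fix is to replace it with

keras.Sequential

or to import it with

from tensorflow.keras import Sequential

and then call Sequential directly.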
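For reference, a minimal sketch of building a model through keras.Sequential. The layer sizes and the zero input are hypothetical and only serve to show that the replacement call works; they are not taken from the downloaded code.

```python
import tensorflow as tf
from tensorflow import keras

# Hypothetical tiny model: 3 input features -> 4 hidden units -> 2 outputs.
model = keras.Sequential([
    keras.layers.Dense(4, activation="relu"),
    keras.layers.Dense(2),
])

out = model(tf.zeros([1, 3]))   # calling the model builds its weights
print(out.shape)                # (1, 2)
```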