作业:获取一篇新闻的全部信息

这个作业的要求来自于:https://edu.cnblogs.com/campus/gzcc/GZCC-16SE2/homework/2894。

我爬取的网页是某高校的新闻信息网站:http://news.gzcc.cn/html/2019/xiaoyuanxinwen_0328/11086.html。

代码如下:

# -*- coding: utf-8 -*- """ Spyder Editor This is a temporary script file. """ # -*- coding: UTF-8 -*- #导入包 import requests # 直接导入requests模块 import re # 直接导入re模块 from datetime import datetime # 从datetime包导入datetime模块 from bs4 import BeautifulSoup # 从bs4包导入BeautifulSoup模块 html_url = 'http://news.gzcc.cn/html/2019/xiaoyuanxinwen_0328/11086.html' # 校园新闻url click_num_url = 'http://oa.gzcc.cn/api.php?op=count&id=11086&modelid=80' # 点击次数url def newsdt(shareinfo): newsDate = shareinfo.split()[0].split(':')[1] newsTime = shareinfo.split()[1] dt = newsDate + " " + newsTime # datetime模块的strptime能够将文本字符串格式的数据转换成时间格式的数据 showtime = datetime.strptime(dt, "%Y-%m-%d %H:%M:%S") # strptime():将字符串类型转换成时间类型 print("新闻发布时间:", end="") print(showtime) #————————————点击次数—————————————— def click(click_num_url): return_click_num = requests.get(click_num_url) click_info = BeautifulSoup(return_click_num.text, 'html.parser') click_num = int(click_info.text.split('.html')[3].split("'")[1]) print("点击次数:", end="") print(click_num) # 用requests库和BeautifulSoup库,爬取校园新闻首页新闻的标题、链接、正文、点击量等信息。 def anews(html_url): resourses = requests.get(html_url) # 发送get请求 resourses.encoding = 'UTF-8' # 以防获取的内容出现乱码,手动指定字符编码为UTF-8 soup = BeautifulSoup(resourses.text, 'html.parser') # 调用BeautifulSoup解析功能,解析请求返回的内容,解析器用html.parser #————————————输出内容—————————————— print("获取的head标签内容:") print(soup.head) print("\n新闻标题:" + soup.select('.show-title')[0].text) # 使用BeautifulSoup的select方法根据元素的类名来查找元素的内容,返回的是list类型 publishing_unit = soup.select('.show-info')[0].text.split()[4].split(':')[1] print("新闻发布单位:", end="") print(publishing_unit) print("作者:", end="") writer = soup.select('.show-info')[0].text.split()[2].split(':')[1] print(writer) print("新闻内容:" + soup.select('.show-content')[0].text.replace('\u3000', '')) shareinfo=soup.select('.show-info')[0].text # 调用函数 newsdt(shareinfo) click(click_num_url) anews(html_url)

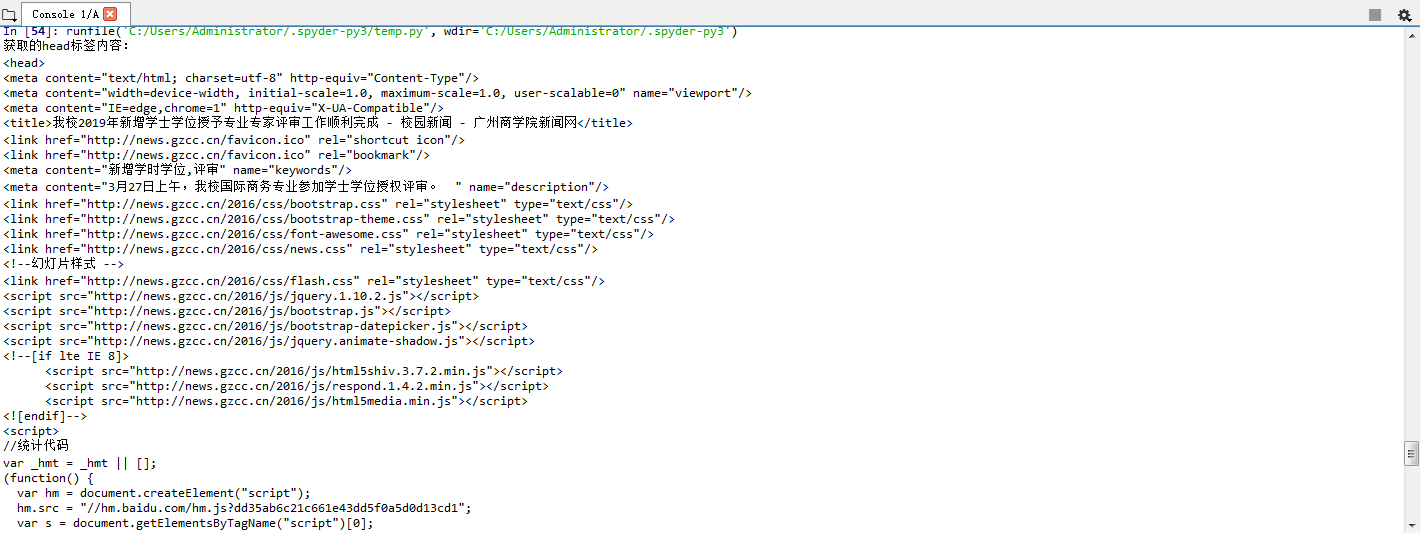

运行结果如下: