MongoDB

Chapter 01 Database types

Non-relational databases (NoSQL): MongoDB

Relational databases (SQL): MySQL, PostgreSQL, SQLite, Oracle, SQL Server

Chapter 02 MongoDB vs. MySQL

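MongoDB: a document-oriented database, written in C++, that stores data in the BSON format. Without an index, MongoDB has to scan every document in a collection (a collection scan) to answer a query. Older versions did not support transactions. MongoDB 4.0 added multi-document ACID transactions for replica sets, and 4.2 extended them to sharded clusters.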

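Problems a relational database must solve when it shares load across a cluster to remove a single point of failure:

1. Is data written on the first server available on the second server?

2. If both servers update the same data at the same time, which update is correct?

3. After the first server writes data, does a query on the second server see it (is it synchronized)?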

| MySQL | MongoDB |
|---|---|
| database | database |
| table | collection |
| column (field) | key: value pair (field) |
| row | document |
| primary key | _id |

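_id: by default this is an ObjectId, a 12-byte BSON value. It consists of a 4-byte timestamp (seconds since the Unix epoch, 1970-01-01), a 3-byte machine identifier, a 2-byte process id, and a 3-byte counter. Newer MongoDB documentation describes the middle 5 bytes as a single per-process random value instead of a machine id plus a process id.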
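Because the first 4 bytes are a timestamp, the creation time can be read back out of any ObjectId. Below is a minimal plain-JavaScript sketch (Node.js, no server needed) that splits the 24 hex characters into the byte ranges described above. It assumes the current 4 + 5 + 3 byte layout. `parseObjectId` is a hypothetical helper. In the mongo shell, `ObjectId("...").getTimestamp()` returns the same creation time.

```javascript
// Hypothetical helper, not a MongoDB API: decode the parts of an ObjectId given as a 24-char hex string.
// Assumed layout: bytes 0-3 timestamp, bytes 4-8 random value (machine id + pid in older docs), bytes 9-11 counter.
function parseObjectId(hex) {
  if (!/^[0-9a-fA-F]{24}$/.test(hex)) {
    throw new Error("an ObjectId is 12 bytes, i.e. 24 hex characters");
  }
  const seconds = parseInt(hex.slice(0, 8), 16); // big-endian seconds since 1970-01-01 UTC
  return {
    createdAt: new Date(seconds * 1000),
    random: hex.slice(8, 18),
    counter: parseInt(hex.slice(18, 24), 16),
  };
}

// insertedId values from the insertOne() output in chapter 08
const first = parseObjectId("635be2af9c6939bcfbde84db");
const second = parseObjectId("635be2ee9c6939bcfbde84dc");
console.log(first.createdAt.toISOString()); // 2022-10-28T14:09:51.000Z
console.log(second.counter - first.counter); // 1: same random value, consecutive counters
```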

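| MongoDB | Limit |
|---|---|
| database | at most about 24,000 namespaces per database (MMAPv1 with the default 16 MB namespace file; every collection and every index uses one namespace, so roughly 12,000 collections if each has one index) |
| collection | |
| key: value | |
| document | |
| _id | |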

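Rules for naming collections:

$ is a reserved character in MongoDB and must not appear in collection names

The empty string ("") is not allowed

The null character (\0) is not allowed

Names must not start with the "system." prefix, which is reserved for system collections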
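You can check the rules above before calling `db.createCollection()`. Here is a minimal plain-JavaScript sketch. `checkCollectionName` is a hypothetical helper. The server enforces further limits, for example on total namespace length, that this sketch does not cover.

```javascript
// Hypothetical pre-flight check (not a MongoDB API) for the collection-name rules listed above.
// Returns a list of problems; an empty list means the name passes these particular checks.
function checkCollectionName(name) {
  if (typeof name !== "string" || name === "") {
    return ["must be a non-empty string"];
  }
  const problems = [];
  if (name.includes("$")) problems.push("must not contain '$'");
  if (name.includes("\0")) problems.push("must not contain the null character");
  if (name.startsWith("system.")) problems.push("the 'system.' prefix is reserved");
  return problems;
}

console.log(checkCollectionName("inventory"));    // []
console.log(checkCollectionName("system.users")); // one problem: the reserved prefix
```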

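Chapter 03 MongoDB features

High performance:

MongoDB provides high-performance data persistence.

In particular, support for embedded data models reduces I/O activity on the database system.

Indexes support faster queries and can include keys from embedded documents and arrays.

Rich query language:

MongoDB supports a rich query language for read and write (CRUD) operations, as well as data aggregation, text search and geospatial queries.

High availability:

MongoDB's replication facility, called a replica set, provides automatic failover and data redundancy.

Horizontal scalability:

Horizontal scalability is part of MongoDB's core functionality: sharding distributes data across a cluster of machines.

Support for multiple storage engines:

The WiredTiger storage engine, the MMAPv1 storage engine (deprecated in 4.0, removed in 4.2), and the In-Memory storage engine (MongoDB Enterprise).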

Getting help

MongoDB: https://www.Mongodb.org

Introduction / installation guide: https://www.mongodb.com/docs/v4.2/introduction/

Google user group: http://groups.google.com/group/mongodb-user

Report bugs: http://jira.Mongodb.org

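Chapter 04 MongoDB use cases

Geospatial indexes: index location-based data and answer questions such as how many elements lie within a given distance of a coordinate.

Query analysis: the built-in analysis tool (explain) shows how MongoDB found the returned documents. This helps you find the cause of a slow query and decide whether to add an index or restructure the data.

In-place updates: MongoDB writes to disk only when necessary. For example, if a value is updated many times within one second, it can be written to disk once rather than on every update.

Storing binary data: BSON can store binary data inside a document, up to the maximum document size (4 MB in early versions, 16 MB in current versions). Small video or audio files can therefore be stored directly; larger files are usually stored with GridFS.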

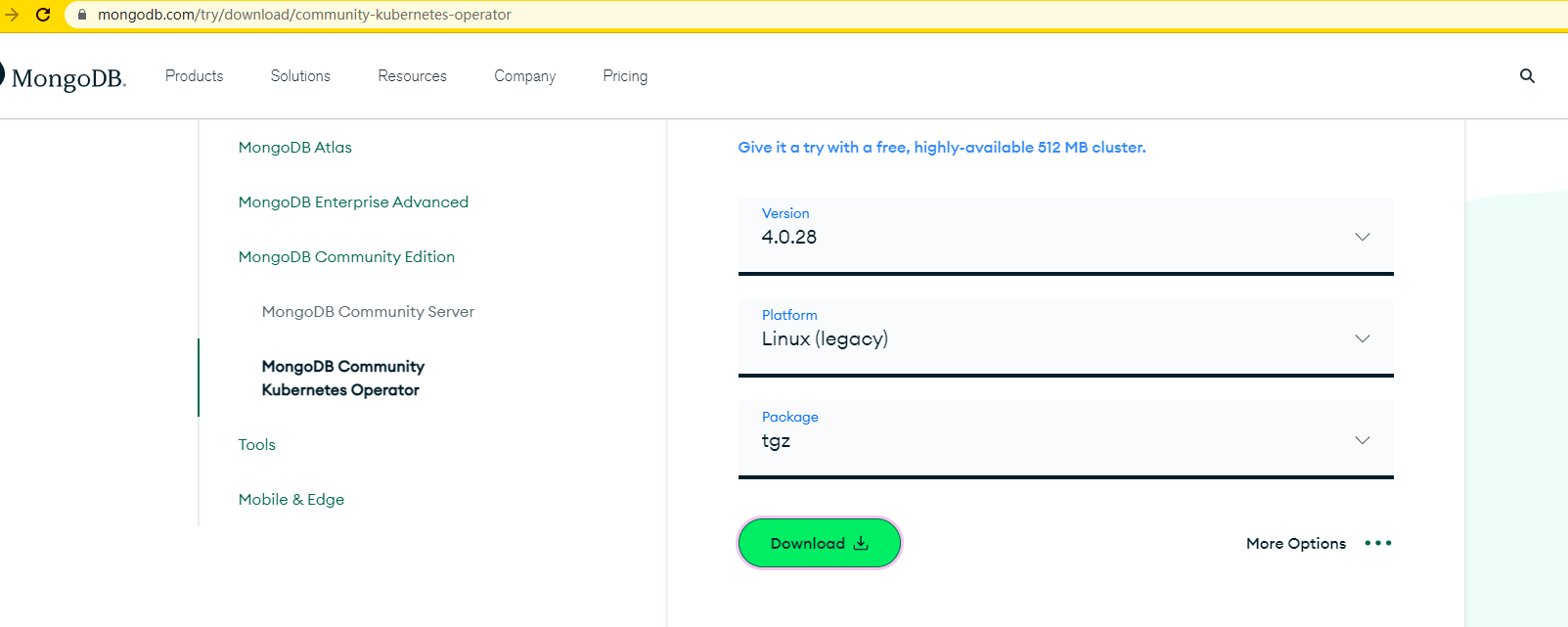
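To make the geospatial use case concrete, here is a plain-JavaScript illustration of the question such a query answers: how many points lie within a given distance of a coordinate. It uses the haversine great-circle formula with an assumed mean Earth radius of 6,371 km. It does not show how MongoDB's `2dsphere` index works internally. In the mongo shell, the equivalent would be a `$geoWithin` query with `$centerSphere` on an indexed location field, using your own collection and field names.

```javascript
// Illustration only: count points within maxMeters of a center using the haversine formula.
// Points are [longitude, latitude] pairs, the same order GeoJSON and MongoDB use.
const EARTH_RADIUS_M = 6371000; // assumed mean Earth radius

function toRadians(deg) {
  return (deg * Math.PI) / 180;
}

function haversineMeters([lng1, lat1], [lng2, lat2]) {
  const dLat = toRadians(lat2 - lat1);
  const dLng = toRadians(lng2 - lng1);
  const a =
    Math.sin(dLat / 2) ** 2 +
    Math.cos(toRadians(lat1)) * Math.cos(toRadians(lat2)) * Math.sin(dLng / 2) ** 2;
  return 2 * EARTH_RADIUS_M * Math.asin(Math.sqrt(a));
}

function countWithin(points, center, maxMeters) {
  return points.filter((p) => haversineMeters(center, p) <= maxMeters).length;
}

// Two hypothetical points north of [0, 0], about 55.6 km and 222.4 km away.
console.log(countWithin([[0, 0.5], [0, 2]], [0, 0], 100000)); // 1
```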

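Chapter 05 Installing MongoDB

1. Download the binary release

Ready-to-use build (no compilation needed):

https://fastdl.mongodb.org/linux/mongodb-linux-x86_64-{major}.{minor}.{patch}.tgz (before 5.0, an even minor number is a production release and an odd one is a development release)

example: https://fastdl.mongodb.org/linux/mongodb-linux-x86_64-4.0.28.tgz

P.S. Historical versions can also be found through the release tags of the mongodb repository on github.com. Download the latest version from the official site.

Download: https://www.mongodb.com/try/download/community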

2. Extract and install

# wget https://fastdl.mongodb.org/linux/mongodb-linux-x86_64-4.0.28.tgz

# yum install libcurl openssl -y

# tar zxf mongodb-linux-x86_64-4.0.28.tgz -C /data/

# cd /data/

# ln -s /data/mongodb-linux-x86_64-4.0.28/ /usr/local/mongodb-4.0.28

# ln -s /data/mongodb-linux-x86_64-4.0.28/ /usr/local/mongodb

# ls -l /usr/local/mongodb/

total 200

drwxr-xr-x 2 root root 4096 Oct 27 16:07 bin

-rw-r--r-- 1 root root 30608 Jan 25 2022 LICENSE-Community.txt

-rw-r--r-- 1 root root 16726 Jan 25 2022 MPL-2

-rw-r--r-- 1 root root 2601 Jan 25 2022 README

-rw-r--r-- 1 root root 60005 Jan 25 2022 THIRD-PARTY-NOTICES

-rw-r--r-- 1 root root 81355 Jan 25 2022 THIRD-PARTY-NOTICES.gotools

# tree /usr/local/mongodb/

/usr/local/mongodb/

├── bin

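│ ├── bsondump reads BSON files (for example rollback files) and prints them in readable form

│ ├── install_compass installs MongoDB Compass (GUI client)

│ ├── mongo database shell

│ ├── mongod core database server

│ ├── mongodump backup tool

│ ├── mongoexport export tool (JSON, CSV, TSV); not a reliable backup method

│ ├── mongofiles manages files stored in GridFS

│ ├── mongoimport import tool (JSON, CSV, TSV); not a reliable restore method

│ ├── mongoreplay captures and replays MongoDB network traffic

│ ├── mongorestore restore tool (imports mongodump output)

│ ├── mongos sharded cluster router process

│ ├── mongostat reports counts of database operations in real time

│ └── mongotop tracks and reports the time MongoDB spends reading and writing, per collection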

├── LICENSE-Community.txt

├── MPL-2

├── README

├── THIRD-PARTY-NOTICES

└── THIRD-PARTY-NOTICES.gotools

1 directory, 18 files

3. Create directories

# mkdir -p /usr/local/mongodb/{conf,logs,pid,data}

4. Configuration file

cat >/usr/local/mongodb/conf/mongodb.conf<<EOF
systemLog:
  destination: file
  logAppend: true
  path: /usr/local/mongodb/logs/mongodb.log
storage:
  journal:
    enabled: true
  dbPath: /usr/local/mongodb/data
  directoryPerDB: true
  wiredTiger:
    engineConfig:
      cacheSizeGB: 0.5
      directoryForIndexes: true
    collectionConfig:
      blockCompressor: zlib
    indexConfig:
      prefixCompression: true
processManagement:
  fork: true
  pidFilePath: /usr/local/mongodb/pid/mongod.pid
net:
  port: 27017
  bindIp: 0.0.0.0
EOF

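Configuration file annotations:

systemLog:
  destination: file        # log destination: file or syslog; with file, path must also be set
  logAppend: true          # on restart, append to the existing log file instead of creating a new one
  path: /usr/local/mongodb/logs/mongodb.log   # log file path
storage:
  journal:                 # write-ahead journal, used for crash recovery
    enabled: true
  dbPath: /usr/local/mongodb/data   # data directory
  directoryPerDB: true     # store each database in its own subdirectory (default false; not applicable to the inMemory engine)
  wiredTiger:
    engineConfig:
      cacheSizeGB: 0.5     # size of the WiredTiger internal cache for all data, in GB
      directoryForIndexes: true   # store indexes and collection data in separate subdirectories under storage.dbPath (default false)
    collectionConfig:
      blockCompressor: zlib       # compression for collection data (default snappy)
    indexConfig:
      prefixCompression: true     # prefix compression for index data (default true)
processManagement:         # how the mongod process is run
  fork: true               # run in the background as a daemon
  pidFilePath: /usr/local/mongodb/pid/mongod.pid   # where to write the pid file
net:
  port: 27017              # listening port
  bindIp: 0.0.0.0          # listen on all IPv4 interfaces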

5. Start MongoDB

# /usr/local/mongodb/bin/mongod -f /usr/local/mongodb/conf/mongodb.conf

about to fork child process, waiting until server is ready for connections.

forked process: 1698

child process started successfully, parent exiting

6. Verify

# netstat -nltup|grep 27017

tcp 0 0 127.0.0.1:27017 0.0.0.0:* LISTEN 1698/mongod

tcp 0 0 10.21.0.1:27017 0.0.0.0:* LISTEN 1698/mongod

# ps aux|grep /mongod

root 1698 0.7 2.7 1008160 51040 ? Sl 17:40 0:01 /usr/local/mongodb/bin/mongod -f /usr/local/mongodb/conf/mongodb.conf

7. Add MongoDB to the PATH environment variable

# echo 'export PATH=/usr/local/mongodb/bin:$PATH' >> /etc/profile

# source /etc/profile

8. Log in to MongoDB

# mongo

MongoDB shell version v4.0.28

connecting to: mongodb://127.0.0.1:27017/?gssapiServiceName=mongodb

Implicit session: session { "id" : UUID("1a1742e7-d18f-41ee-8155-40dfce52713b") }

MongoDB server version: 4.0.28

Welcome to the MongoDB shell.

For interactive help, type "help".

For more comprehensive documentation, see

http://docs.mongodb.org/

Questions? Try the support group

http://groups.google.com/group/mongodb-user

Server has startup warnings:

2022-10-27T17:40:30.049+0800 I STORAGE [initandlisten]

2022-10-27T17:40:30.049+0800 I STORAGE [initandlisten] ** WARNING: Using the XFS filesystem is strongly recommended with the WiredTiger storage engine

2022-10-27T17:40:30.049+0800 I STORAGE [initandlisten] ** See http://dochub.mongodb.org/core/prodnotes-filesystem

2022-10-27T17:40:30.962+0800 I CONTROL [initandlisten]

2022-10-27T17:40:30.962+0800 I CONTROL [initandlisten] ** WARNING: Access control is not enabled for the database.

2022-10-27T17:40:30.962+0800 I CONTROL [initandlisten] ** Read and write access to data and configuration is unrestricted.

2022-10-27T17:40:30.962+0800 I CONTROL [initandlisten] ** WARNING: You are running this process as the root user, which is not recommended.

2022-10-27T17:40:30.962+0800 I CONTROL [initandlisten]

2022-10-27T17:40:30.963+0800 I CONTROL [initandlisten]

2022-10-27T17:40:30.963+0800 I CONTROL [initandlisten] ** WARNING: /sys/kernel/mm/transparent_hugepage/enabled is 'always'.

2022-10-27T17:40:30.963+0800 I CONTROL [initandlisten] ** We suggest setting it to 'never'

2022-10-27T17:40:30.963+0800 I CONTROL [initandlisten]

2022-10-27T17:40:30.963+0800 I CONTROL [initandlisten] ** WARNING: /sys/kernel/mm/transparent_hugepage/defrag is 'always'.

2022-10-27T17:40:30.963+0800 I CONTROL [initandlisten] ** We suggest setting it to 'never'

2022-10-27T17:40:30.963+0800 I CONTROL [initandlisten]

2022-10-27T17:40:30.963+0800 I CONTROL [initandlisten] ** WARNING: soft rlimits too low. rlimits set to 7268 processes, 65535 files. Number of processes should be at least 32767.5 : 0.5 times number of files.

2022-10-27T17:40:30.963+0800 I CONTROL [initandlisten]

>

>

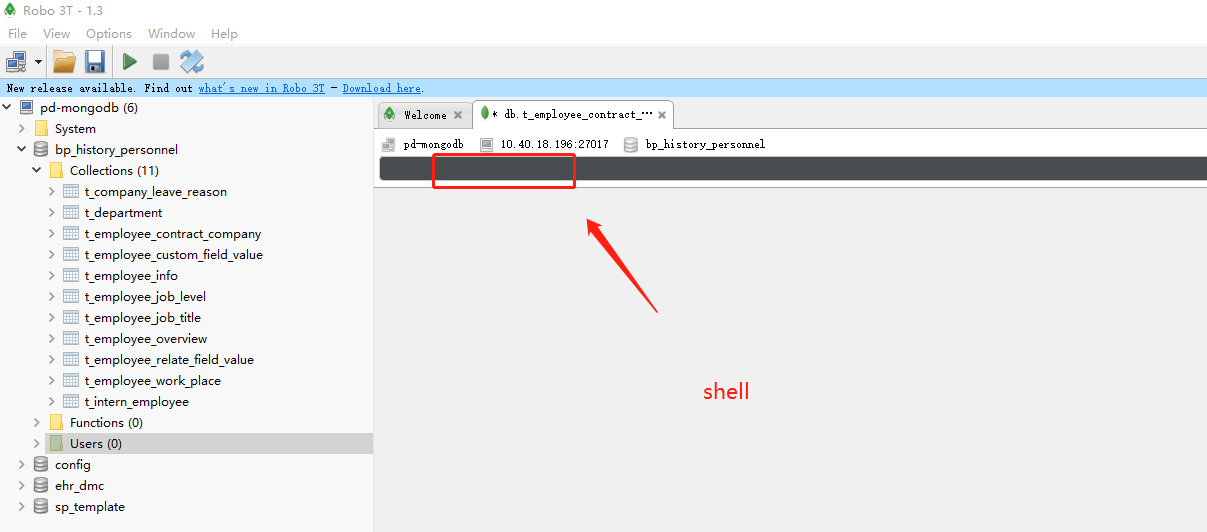

Huawei Cloud Document Database Service (DDS): common ways to connect to a DDS instance

GUI clients:

Robo 3T

MongoDB shell

DataGrip

9. Shut down MongoDB

Method 1 (recommended):

/usr/local/mongodb/bin/mongod -f /usr/local/mongodb/conf/mongodb.conf --shutdown

Method 2 (when access control is not enabled, this only works when connected through localhost):

mongo

use admin

db.shutdownServer()

Method 3: manage mongod with systemd or an init.d script

Method 4: manage mongod with Supervisor

Chapter 06 Tuning (fixing startup warnings)

Insufficient memory

** WARNING: The configured WiredTiger cache size is more than 80% of available RAM.

** See http://dochub.mongodb.org/core/faq-memory-diagnostics-wt

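Cause: the configured WiredTiger cache is too large for the available memory.

Solutions:

Method 1: add more memory to the host.

Method 2: reduce the cache size in the configuration file, e.g. cacheSizeGB: 0.3 (adjust to your environment).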
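The arithmetic behind this warning can be sketched as follows. The rules are assumptions taken from the MongoDB 4.0 documentation. When cacheSizeGB is not set, WiredTiger defaults to the larger of 50% of (RAM - 1 GB) and 256 MB. The startup warning appears when the configured cache exceeds 80% of the RAM mongod sees. Check the documentation for your version before relying on the exact figures.

```javascript
// Sketch of the WiredTiger cache arithmetic described above (values in GB).
// Assumed rules (MongoDB 4.0 docs): default = max(0.5 * (RAM - 1 GB), 256 MB); warn if cache > 80% of RAM.
function defaultCacheSizeGB(ramGB) {
  return Math.max(0.5 * (ramGB - 1), 0.25);
}

function cacheTooLarge(cacheSizeGB, ramGB) {
  return cacheSizeGB > 0.8 * ramGB;
}

console.log(defaultCacheSizeGB(4));   // 1.5
console.log(defaultCacheSizeGB(1));   // 0.25 (the 256 MB floor)
console.log(cacheTooLarge(0.5, 0.5)); // true: the sample config's 0.5 GB on a hypothetical 0.5 GB host
console.log(cacheTooLarge(0.3, 0.5)); // false: 0.3 GB stays under the 0.4 GB threshold
```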

File system type

** WARNING: Using the XFS filesystem is strongly recommended with the WiredTiger storage engine

** See http://dochub.mongodb.org/core/prodnotes-filesystem

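Cause: the data directory is not on an XFS file system. This does not affect normal use.

Solutions:

For production Linux systems, use kernel 2.6.36 or later with an ext4 or XFS file system. Where possible, use XFS with MongoDB.

With the WiredTiger storage engine, XFS is strongly recommended because it avoids performance problems seen when ext4 is combined with WiredTiger.

With the MMAPv1 storage engine, MongoDB preallocates data files before use and typically creates large files, so XFS is recommended there as well.

With XFS, use at least Linux kernel 2.6.25.

With ext4, use at least Linux kernel 2.6.28.

On CentOS and RHEL, use at least kernel 2.6.18-194.

Note: the MMAPv1 storage engine is deprecated as of MongoDB 4.0 and was removed in 4.2. Use the WiredTiger storage engine.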

** WARNING: Access control is not enabled for the database.

** Read and write access to data and configuration is unrestricted.

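Cause: access control is not enabled. To turn it on, MongoDB first needs an administrative user in the admin database. A default package install starts as mongod --dbpath /var/lib/mongo, and logging in requires no user authentication.

Solutions:

Method: enable authentication.

Enabling access control on a MongoDB deployment enforces authentication, so users must identify themselves. On a deployment with access control enabled, users can perform only the actions allowed by their roles.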

> use admin

switched to db admin

> db.createUser({user:"root",pwd:"root",roles: [{role:"userAdminAnyDatabase", db: "admin" } ]})

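This creates a user named root, with password root, that has the userAdminAnyDatabase role. The role lets the user manage users and roles in every database. Despite the user's name, the role does not by itself grant read/write access to data; use the built-in root role if you need full privileges.

Restart MongoDB with access control enabled and log in with the user name and password. The commands below assume the package install mentioned above (dbPath /var/lib/mongo). For the tarball install in chapter 05, stop mongod with mongod -f /usr/local/mongodb/conf/mongodb.conf --shutdown and start it with mongod -f /usr/local/mongodb/conf/mongodb.conf --auth, or set security.authorization: enabled in the configuration file.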

#systemctl stop mongod

#mongod --auth --dbpath /var/lib/mongo

# mongo --username root --password root --port 27017 --authenticationDatabase admin

MongoDB shell version

connecting to: mongodb://127.0.0.1:27017/

MongoDB server version: 4.0.28

>

Add another user:

use test

db.createUser(

{

user: "test",

pwd: "test",

roles: [ { role: "readWrite", db: "test" },

{ role: "read", db: "reporting" } ]

}

)

Verify the login:

# mongo --username test --password test --port 27017 --authenticationDatabase test

MongoDB shell version 4.0.28

Running as root

** WARNING: You are running this process as the root user, which is not recommended.

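Cause: running mongod as the root user is not recommended.

Solutions:

Method 1: create an unprivileged user mongo, then switch to that user to start mongod.

Method 2: start mongod with systemd and set the service user to the unprivileged user mongo.

Stop mongod and create the unprivileged user: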

mongod -f /usr/local/mongodb/conf/mongodb.conf --shutdown

groupadd mongo -g 777

useradd mongo -g 777 -u 777 -M -s /sbin/nologin

id mongo

systemd unit file:

cat >/usr/lib/systemd/system/mongod.service<<EOF
[Unit]
Description=MongoDB Database Server
Documentation=https://docs.mongodb.org/manual
After=network.target

[Service]
User=mongo
Group=mongo
# Paths match the tarball install from chapter 05
ExecStart=/usr/local/mongodb/bin/mongod -f /usr/local/mongodb/conf/mongodb.conf
# Give the mongo user the install, data, log and pid directories;
# the trailing slash makes chown follow the /usr/local/mongodb symlink into the real directory
ExecStartPre=/usr/bin/chown -R mongo:mongo /usr/local/mongodb/
# Run ExecStartPre as root even though the service itself runs as mongo
PermissionsStartOnly=true
PIDFile=/usr/local/mongodb/pid/mongod.pid
Type=forking
# file size
LimitFSIZE=infinity
# cpu time
LimitCPU=infinity
# virtual memory size
LimitAS=infinity
# open files
LimitNOFILE=64000
# processes/threads
LimitNPROC=64000
# locked memory
LimitMEMLOCK=infinity
# total threads (user+kernel)
TasksMax=infinity
TasksAccounting=false
# Recommended limits for mongod as specified in
# http://docs.mongodb.org/manual/reference/ulimit/#recommended-settings

[Install]
WantedBy=multi-user.target
EOF

Reload systemd and start mongod:

systemctl daemon-reload

systemctl start mongod.service

ps -ef|grep mongo

mongo

Transparent huge pages (enabled)

** WARNING: /sys/kernel/mm/transparent_hugepage/enabled is 'always'.

** We suggest setting it to 'never'

Cause: Transparent Huge Pages (THP) are enabled.

Solution:

echo "never" > /sys/kernel/mm/transparent_hugepage/enabled

systemctl stop mongod && systemctl start mongod

Transparent huge pages (defrag)

** WARNING: /sys/kernel/mm/transparent_hugepage/defrag is 'always'.

** We suggest setting it to 'never'

Cause: THP defragmentation is enabled.

Solution:

echo "never" > /sys/kernel/mm/transparent_hugepage/defrag

systemctl stop mongod && systemctl start mongod

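These two warnings typically appear on CentOS 7, which enables Transparent Huge Pages (THP) by default.

THP is intended to improve memory performance, but several database vendors (e.g. Oracle, MariaDB, MongoDB) recommend disabling it.

Database workloads tend to access memory sparsely rather than contiguously, so THP can reduce their performance.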

# cat /sys/kernel/mm/transparent_hugepage/defrag

[always] madvise never

# cat /sys/kernel/mm/transparent_hugepage/enabled

[always] madvise never
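The kernel marks the active THP mode with square brackets, so `[always] madvise never` means THP is set to always. Below is a small Node.js sketch that extracts that mode, for example to check a host before starting mongod. `activeThpMode` and `readThpMode` are hypothetical helpers. Reading the sysfs files only works on Linux; elsewhere the reader returns null.

```javascript
const fs = require("fs");

// Return the bracketed (active) choice from a THP sysfs file's text, or null if none is marked.
function activeThpMode(text) {
  const match = text.match(/\[([^\]]+)\]/);
  return match ? match[1] : null;
}

// Read /sys/kernel/mm/transparent_hugepage/<name>; returns null where the file does not exist.
function readThpMode(name) {
  try {
    return activeThpMode(fs.readFileSync(`/sys/kernel/mm/transparent_hugepage/${name}`, "utf8"));
  } catch (err) {
    return null;
  }
}

for (const name of ["enabled", "defrag"]) {
  const mode = readThpMode(name);
  if (mode !== null && mode !== "never") {
    console.log(`THP ${name} is '${mode}'; MongoDB suggests 'never'`);
  }
}
```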

To keep the settings across reboots, add the following to /etc/rc.d/rc.local:

# vim /etc/rc.d/rc.local

if test -f /sys/kernel/mm/transparent_hugepage/enabled; then

echo never > /sys/kernel/mm/transparent_hugepage/enabled

fi

if test -f /sys/kernel/mm/transparent_hugepage/defrag; then

echo never > /sys/kernel/mm/transparent_hugepage/defrag

fi

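# chmod +x /etc/rc.d/rc.local

(rc.local has nothing to do with /etc/profile, so sourcing /etc/profile does not apply it. On CentOS 7, rc.local runs at boot only if it is executable. The echo commands above already fixed the running system.)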

Process and open-file limits too low

** WARNING: soft rlimits too low. rlimits set to 7268 processes, 65535 files. Number of processes should be at least 32767.5 : 0.5 times number of files.

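Cause: the soft limits (ulimit) for processes and open files are too low.

Solution: append the limits to /etc/profile. Use >>, because > would overwrite the whole file:

cat >> /etc/profile <<EOF
ulimit -f unlimited
ulimit -t unlimited
ulimit -v unlimited
ulimit -n 64000
ulimit -m unlimited
ulimit -u 64000
EOF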

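Apply the settings. Note that /etc/profile only affects processes started from a login shell; a mongod started by systemd takes its limits from LimitNOFILE / LimitNPROC in the unit file instead: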

source /etc/profile

systemctl stop mongod && systemctl start mongod
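The check behind the warning is simple arithmetic: the process limit should be at least half the open-file limit. Here 65535 × 0.5 = 32767.5, but the process limit is only 7268. Below is a sketch that reproduces the check. `rlimitWarning` is a hypothetical helper. Its inputs would come from `ulimit -u` and `ulimit -n`, or from /proc/<pid>/limits for a running mongod.

```javascript
// Reproduces the check behind "Number of processes should be at least ... 0.5 times number of files".
// Returns a message when the process limit is too low, otherwise null.
function rlimitWarning(maxProcesses, maxOpenFiles) {
  const required = 0.5 * maxOpenFiles;
  if (maxProcesses < required) {
    return `processes limit ${maxProcesses} is below ${required} (0.5 x open files ${maxOpenFiles})`;
  }
  return null;
}

console.log(rlimitWarning(7268, 65535));  // values from the startup warning: too low
console.log(rlimitWarning(64000, 64000)); // values from the systemd unit above: null
```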

Allow remote access to MongoDB

# cat /usr/local/mongodb/conf/mongodb.conf
...
# network interfaces
net:
  port: 27017
  bindIp: 127.0.0.1  # Enter 0.0.0.0,:: to bind to all IPv4 and IPv6 addresses or, alternatively, use the net.bindIpAll setting.

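By default only local access is allowed. To allow access from any host, set bindIp to 0.0.0.0. That alone is not secure, so also enable password authentication for remote access:

net:
  port: 27017
  bindIp: 0.0.0.0
security:
  authorization: enabled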

Chapter 07 mongo shell database commands

1. Default databases

# mongo

MongoDB shell version v4.0.28

connecting to: mongodb://127.0.0.1:27017/?gssapiServiceName=mongodb

Implicit session: session { "id" : UUID("14a5e8f7-4a0d-456b-bef3-b094444f2251") }

MongoDB server version: 4.0.28

> show dbs

admin 0.000GB

config 0.000GB

local 0.000GB

> db

test

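test: the database you are in by default after logging in. It is only created once data is written to it, which is why show dbs does not list it.

admin: reserved system database; MongoDB's administration database

local: reserved local database; holds data that is never replicated, such as the replication oplog

config: configuration database (used by sharded clusters and for internal session data)

2. Commands for browsing databases

db: show the current database

show dbs / show databases: list all databases

show collections / show tables: list all collections in the current database

use admin: switch to another database (here, admin)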

# mongo

MongoDB shell version v4.0.28

connecting to: mongodb://127.0.0.1:27017/?gssapiServiceName=mongodb

Implicit session: session { "id" : UUID("5279825f-6e90-4668-9f03-2015efe6151f") }

MongoDB server version: 4.0.28

> db

admin

>

> show dbs

admin 0.000GB

config 0.000GB

local 0.000GB

>

> show tables

system.version

>

> use admin

switched to db admin

>

> quit()

# echo "db"|mongo

MongoDB shell version v4.0.28

connecting to: mongodb://127.0.0.1:27017/?gssapiServiceName=mongodb

Implicit session: session { "id" : UUID("9cbb6e42-f334-453e-a50d-0885e429529f") }

MongoDB server version: 4.0.28

test

bye

# echo "show dbs"|mongo

MongoDB shell version v4.0.28

connecting to: mongodb://127.0.0.1:27017/?gssapiServiceName=mongodb

Implicit session: session { "id" : UUID("4fbddd80-5ffa-4938-b5fe-00054043c1af") }

MongoDB server version: 4.0.28

admin 0.000GB

config 0.000GB

local 0.000GB

bye

Chapter 08 MongoDB operations

Collection Methods

https://www.mongodb.com/docs/v4.2/reference/method/js-collection/

1. MongoDB data types

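| Data type | Description | Example / notes |
|---|---|---|
| String | most common type; text (must be valid UTF-8) | |
| Integer | whole number, written without quotes | in the mongo shell a bare number such as 25 is stored as Double; use NumberInt(25) or NumberLong(25) for integers |
| Boolean | boolean value: true / false | |
| Double | floating-point number | |
| Min / Max keys | compare lower / higher than all other BSON values | |
| Array | array | |
| Timestamp | timestamp | records when a document was added or modified; MongoDB also uses it internally (e.g. in the oplog), and Date is usually preferred in applications |
| Object | embedded document | |
| Object Id | document id | BSON-specific type, ObjectId(string) |
| Null | null value | |
| Symbol | reserved for languages with a dedicated symbol type (deprecated) | |
| Date | date/time stored as milliseconds since the Unix epoch | BSON-specific type |
| Binary data | binary data | BSON-specific type |
| Regular expression | regular expression | BSON-specific type |
| JavaScript Code | JavaScript code | BSON-specific type |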

2. Insert documents

Format: db.<collection name>.<method>({key: value, key: value})

Insert Methods

MongoDB provides the following methods for inserting documents into a collection:

db.collection.insertOne(): inserts a single document into a collection.

db.collection.insertMany(): inserts multiple documents into a collection.

db.collection.insert(): inserts a single document or multiple documents into a collection.

2.1 Insert a single document

Insert a Single Document

https://www.mongodb.com/docs/v4.2/tutorial/insert-documents/

> db.inventory.insertOne({ item: "canvas", qty: 100, tags: ["cotton"], size: { h: 28, w: 35.5, uom: "cm" } })

{

"acknowledged" : true,

"insertedId" : ObjectId("635be2af9c6939bcfbde84db")

}

Or, split across several lines:

> db.inventory.insertOne(

{ item: "canvas", qty: 100, tags: ["cotton"], size: { h: 28, w: 35.5, uom: "cm" } }

)

{

"acknowledged" : true,

"insertedId" : ObjectId("635be2ee9c6939bcfbde84dc")

}

View the documents in the collection

> db.inventory.find()

{ "_id" : ObjectId("635c10299c6939bcfbde850d"), "item" : "canvas", "qty" : 100, "tags" : [ "cotton" ], "size" : { "h" : 28, "w" : 35.5, "uom" : "cm" } }

{ "_id" : ObjectId("635c10559c6939bcfbde850e"), "item" : "canvas", "qty" : 100, "tags" : [ "cotton" ], "size" : { "h" : 28, "w" : 35.5, "uom" : "cm" } }

pretty() displays the query results in an indented, readable format

> db.inventory.find().pretty()

{

"_id" : ObjectId("635c10299c6939bcfbde850d"),

"item" : "canvas",

"qty" : 100,

"tags" : [

"cotton"

],

"size" : {

"h" : 28,

"w" : 35.5,

"uom" : "cm"

}

}

{

"_id" : ObjectId("635c10559c6939bcfbde850e"),

"item" : "canvas",

"qty" : 100,

"tags" : [

"cotton"

],

"size" : {

"h" : 28,

"w" : 35.5,

"uom" : "cm"

}

}

> db.inventory.find( { item: "canvas" } )

{ "_id" : ObjectId("635be2af9c6939bcfbde84db"), "item" : "canvas", "qty" : 100, "tags" : [ "cotton" ], "size" : { "h" : 28, "w" : 35.5, "uom" : "cm" } }

{ "_id" : ObjectId("635be2ee9c6939bcfbde84dc"), "item" : "canvas", "qty" : 100, "tags" : [ "cotton" ], "size" : { "h" : 28, "w" : 35.5, "uom" : "cm" } }

> db.inventory.find( { item: "canvas" },{"_id" :0} )

{ "item" : "canvas", "qty" : 100, "tags" : [ "cotton" ], "size" : { "h" : 28, "w" : 35.5, "uom" : "cm" } }

{ "item" : "canvas", "qty" : 100, "tags" : [ "cotton" ], "size" : { "h" : 28, "w" : 35.5, "uom" : "cm" } }

2.2 Insert multiple documents

Insert Multiple Documents

https://www.mongodb.com/docs/v4.2/tutorial/insert-documents/

> db.inventory.insertMany([

{ item: "journal", qty: 25, tags: ["blank", "red"], size: { h: 14, w: 21, uom: "cm" } },

{ item: "mat", qty: 85, tags: ["gray"], size: { h: 27.9, w: 35.5, uom: "cm" } },

{ item: "mousepad", qty: 25, tags: ["gel", "blue"], size: { h: 19, w: 22.85, uom: "cm" } }

])

{

"acknowledged" : true,

"insertedIds" : [

ObjectId("635be6ba9c6939bcfbde84dd"),

ObjectId("635be6ba9c6939bcfbde84de"),

ObjectId("635be6ba9c6939bcfbde84df")

]

}

View the documents in the collection

> db.inventory.find( {} )

{ "_id" : ObjectId("635be2af9c6939bcfbde84db"), "item" : "canvas", "qty" : 100, "tags" : [ "cotton" ], "size" : { "h" : 28, "w" : 35.5, "uom" : "cm" } }

{ "_id" : ObjectId("635be2ee9c6939bcfbde84dc"), "item" : "canvas", "qty" : 100, "tags" : [ "cotton" ], "size" : { "h" : 28, "w" : 35.5, "uom" : "cm" } }

{ "_id" : ObjectId("635be6ba9c6939bcfbde84dd"), "item" : "journal", "qty" : 25, "tags" : [ "blank", "red" ], "size" : { "h" : 14, "w" : 21, "uom" : "cm" } }

{ "_id" : ObjectId("635be6ba9c6939bcfbde84de"), "item" : "mat", "qty" : 85, "tags" : [ "gray" ], "size" : { "h" : 27.9, "w" : 35.5, "uom" : "cm" } }

{ "_id" : ObjectId("635be6ba9c6939bcfbde84df"), "item" : "mousepad", "qty" : 25, "tags" : [ "gel", "blue" ], "size" : { "h" : 19, "w" : 22.85, "uom" : "cm" } }

3. Query documents

Query Documents

https://www.mongodb.com/docs/v4.2/tutorial/query-documents/

See also (external):

程涯 - MongoDB common shell commands (CSDN blog)

Drop the inventory collection (many documents were inserted above, so start over):

> db

test

> show tables

inventory

> db.inventory.drop()

true

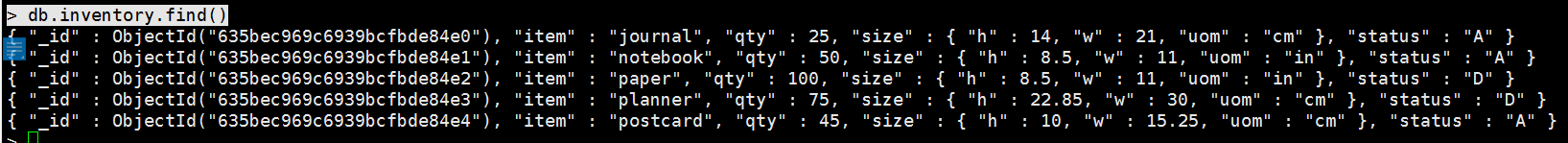

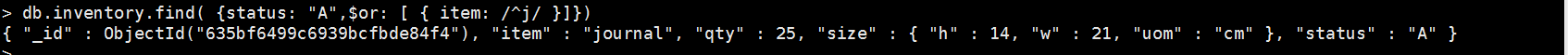

3.0 Insert sample data

db.inventory.insertMany( [

{ item: "journal", qty: 25, size: { h: 14, w: 21, uom: "cm" }, status: "A" },

{ item: "notebook", qty: 50, size: { h: 8.5, w: 11, uom: "in" }, status: "A" },

{ item: "paper", qty: 100, size: { h: 8.5, w: 11, uom: "in" }, status: "D" },

{ item: "planner", qty: 75, size: { h: 22.85, w: 30, uom: "cm" }, status: "D" },

{ item: "postcard", qty: 45, size: { h: 10, w: 15.25, uom: "cm" }, status: "A" }

]);

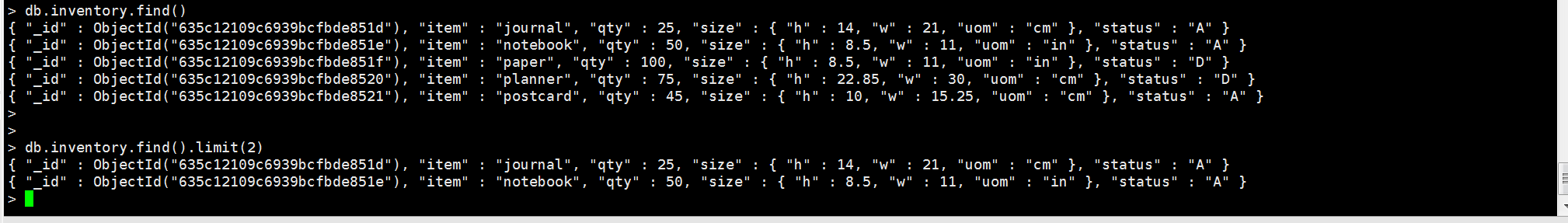

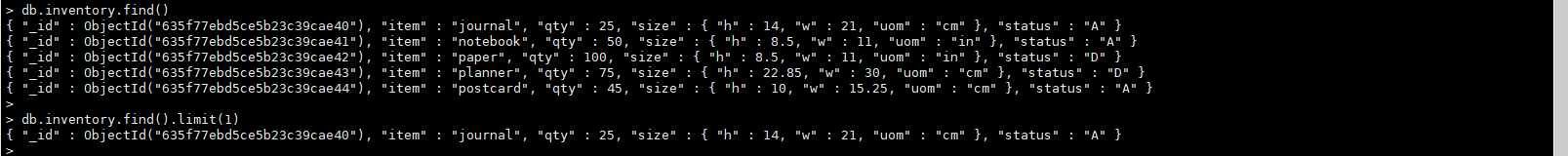

db.inventory.find()

Limit the result to 2 documents

db.inventory.find().limit(2)

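// Limit the result to 2 documents
db.worker.find({name:"zhangjian"}).limit(2)
// Sort by province ascending, then by city_age descending
db.city.find().sort({province:1,city_age:-1})
// When limit, skip and sort are all used, they are applied in the order sort, skip, limit, regardless of how they are chained
db.worker.find({name:"zhangjian"}).limit(2).skip(1).sort({score:1})
// Find documents in city that have a history field whose value is null
db.city.find({history:{$in:[null],$exists:true}})
// Equivalent to: select _id, city_name as cityName from city where city_name = 'jingzhou' and city_age >= 10
// _id is returned by default; add _id:0 to the projection to suppress it
// Projecting "$city_name" under a new name requires MongoDB 4.4 or later; on 4.0, use aggregate() with $project
db.city.find({city_name:"jingzhou",city_age:{$gte:10}},{cityName:"$city_name"})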

————————————————

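Copyright notice: this is an original article by CSDN blogger 「程涯」, licensed under CC 4.0 BY-SA. When reposting, include a link to the original source and this notice.

Original link: https://blog.csdn.net/Olivier0611/article/details/121171097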

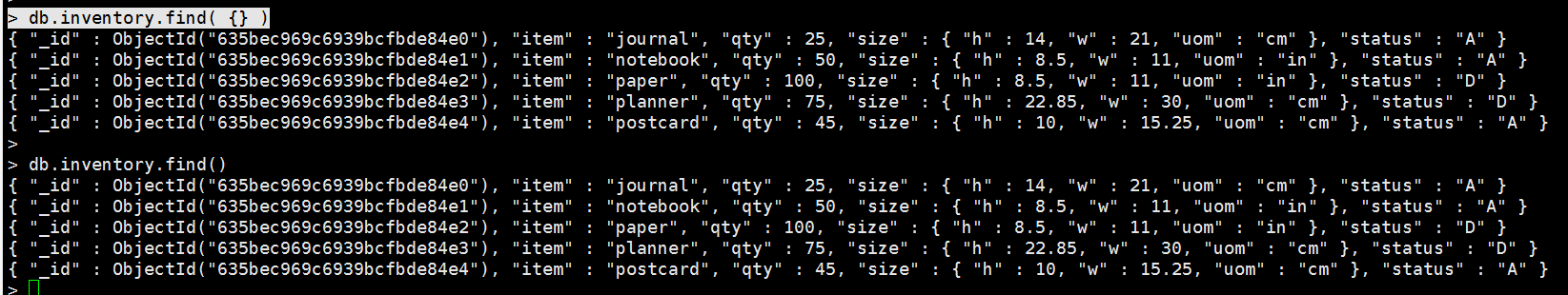

3.1 Query all documents

Equivalent to: select * from inventory;

db.inventory.find( {} )

db.inventory.find()

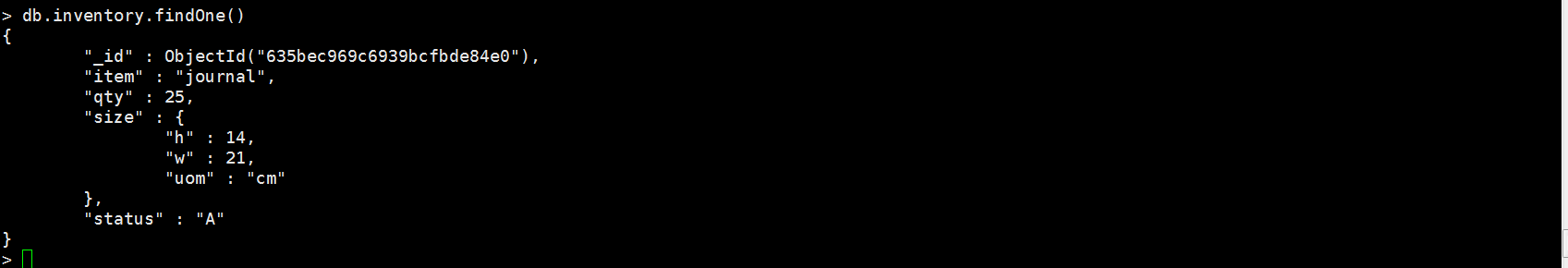

3.2 Query a single document

Equivalent to: select * from inventory limit 1;

db.inventory.findOne()

db.inventory.find().limit(1)

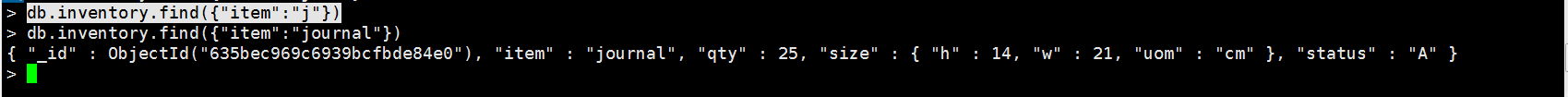

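3.3 Query by condition

Equivalent to: select * from inventory where item = 'journal';

db.inventory.find({"item":"j"}) returns nothing: an equality condition must match the whole value (use a regular expression such as /^j/ for a prefix match)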

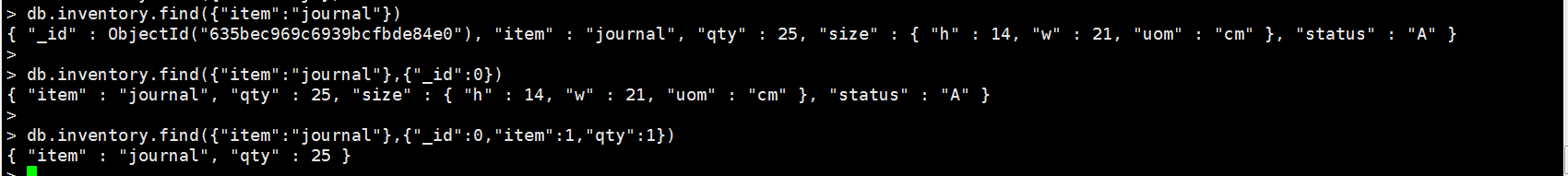

db.inventory.find({"item":"journal"})

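3.4 Return only selected fields

Equivalent to: select item, qty from inventory where item = 'journal';

db.inventory.find(

{<query field>: <query value>},

{

<field to show 1>: 1,

<field to show 2>: 1,

_id: 0 (a projection cannot mix 1 and 0, except that _id may be set to 0)

}

)

Return only the specified fields: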

db.inventory.find({"item":"journal"},{"_id":0,"item":1,"qty":1})

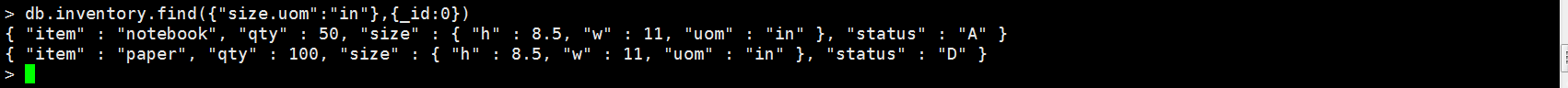
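The plain-JavaScript sketch below shows how a projection such as `{"_id":0,"item":1,"qty":1}` shapes each result document. It handles top-level fields only (no dot notation) and simplifies the server's behaviour. `project` is a hypothetical helper.

```javascript
// Simplified projection: top-level fields only. 1/true includes a field, 0/false excludes it.
// As in MongoDB, _id is returned unless explicitly set to 0, and 1s and 0s cannot be mixed except for _id.
function project(doc, projection) {
  const fields = Object.entries(projection).filter(([key]) => key !== "_id");
  const included = fields.filter(([, v]) => v).map(([key]) => key);
  const excluded = fields.filter(([, v]) => !v).map(([key]) => key);
  if (included.length > 0 && excluded.length > 0) {
    throw new Error("projection cannot mix inclusion and exclusion (except for _id)");
  }
  const keepId = !("_id" in projection) || Boolean(projection._id);
  let result;
  if (included.length > 0) {
    result = {};
    for (const key of included) if (key in doc) result[key] = doc[key];
  } else {
    result = { ...doc };
    for (const key of excluded) delete result[key];
  }
  if (keepId && "_id" in doc) result._id = doc._id;
  else delete result._id;
  return result;
}

const journal = { _id: 1, item: "journal", qty: 25, status: "A" };
console.log(project(journal, { _id: 0, item: 1, qty: 1 })); // { item: 'journal', qty: 25 }
```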

3.5 Query embedded documents

Equivalent to: select * from inventory where size.uom = 'in';

Dot notation (.) tells find() to match a field inside an embedded document

db.inventory.find({"size.uom":"in"},{_id:0})

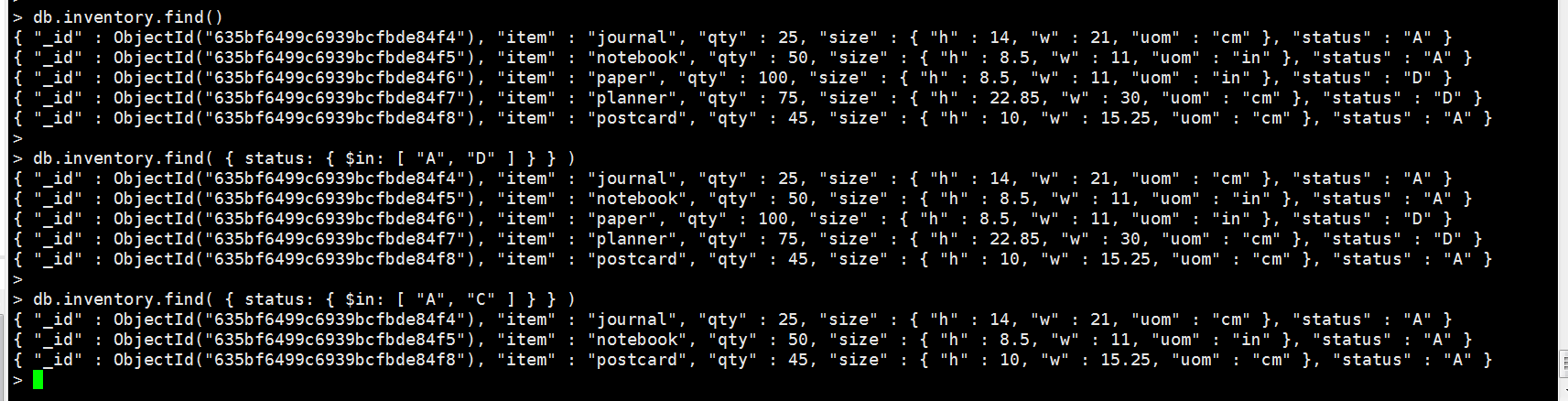

Equivalent to: SELECT * FROM inventory WHERE status in ("A", "D")

db.inventory.find()

db.inventory.find( { status: { $in: [ "A", "D" ] } } )

db.inventory.find( { status: { $in: [ "A", "C" ] } } )

3.6 Logical query: AND

MongoDB comparison operators

(>) greater than: $gt

(<) less than: $lt

(>=) greater than or equal to: $gte

(<=) less than or equal to: $lte

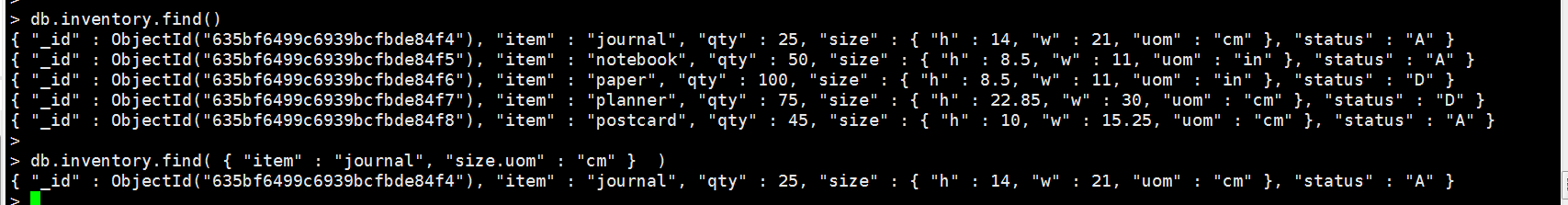
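These operators combine with plain equality and dot notation, and fields listed side by side are ANDed. That behaviour can be sketched as a tiny in-memory matcher over the section 3.0 sample data. It supports only equality, the four comparison operators above, and `$in`. It treats missing fields as never matching and skips MongoDB's array-element matching. `matches` is a hypothetical helper, not the server's query engine.

```javascript
// Read a possibly nested field using dot notation, e.g. "size.uom".
function getPath(doc, path) {
  return path.split(".").reduce((value, key) => (value == null ? undefined : value[key]), doc);
}

const OPERATORS = {
  $gt: (value, arg) => value > arg,
  $lt: (value, arg) => value < arg,
  $gte: (value, arg) => value >= arg,
  $lte: (value, arg) => value <= arg,
  $in: (value, arg) => arg.includes(value),
};

// Every field in the filter must match (implicit AND).
function matches(doc, filter) {
  return Object.entries(filter).every(([path, condition]) => {
    const value = getPath(doc, path);
    const isOperatorObject =
      condition !== null && typeof condition === "object" &&
      Object.keys(condition).length > 0 &&
      Object.keys(condition).every((key) => key.startsWith("$"));
    if (!isOperatorObject) return value === condition; // plain equality (scalars only)
    return Object.entries(condition).every(([op, arg]) => {
      if (!(op in OPERATORS)) throw new Error(`unsupported operator ${op}`);
      return value !== undefined && OPERATORS[op](value, arg);
    });
  });
}

// Sample data from section 3.0
const inventory = [
  { item: "journal", qty: 25, size: { h: 14, w: 21, uom: "cm" }, status: "A" },
  { item: "notebook", qty: 50, size: { h: 8.5, w: 11, uom: "in" }, status: "A" },
  { item: "paper", qty: 100, size: { h: 8.5, w: 11, uom: "in" }, status: "D" },
  { item: "planner", qty: 75, size: { h: 22.85, w: 30, uom: "cm" }, status: "D" },
  { item: "postcard", qty: 45, size: { h: 10, w: 15.25, uom: "cm" }, status: "A" },
];

const find = (filter) => inventory.filter((doc) => matches(doc, filter)).map((doc) => doc.item);
console.log(find({ status: "A", qty: { $lt: 30 } })); // [ 'journal' ]
console.log(find({ "size.uom": "in" }));               // [ 'notebook', 'paper' ]
```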

Equivalent to: select * from inventory where item = 'journal' AND size.uom = 'cm';

db.inventory.find(

{

"item": "journal",

"size.uom":"cm"

}

)

db.inventory.find()

db.inventory.find( { "item" : "journal", "size.uom" : "cm" } )

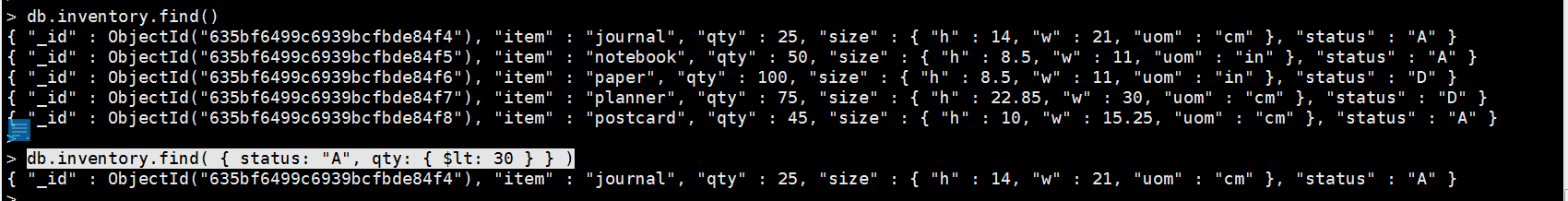

Equivalent to: SELECT * FROM inventory WHERE status = "A" AND qty < 30

db.inventory.find()

db.inventory.find( { status: "A", qty: { $lt: 30 } } )

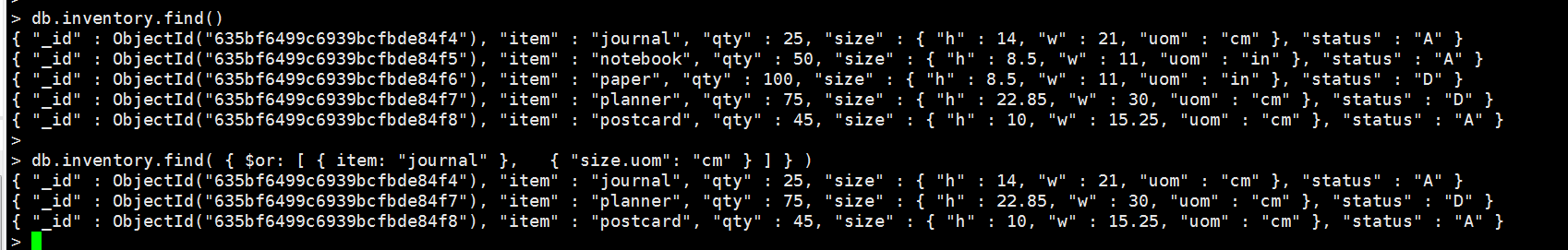

3.7 Logical query: OR

Equivalent to: select * from inventory where item = 'journal' or size.uom = 'cm';

db.inventory.find(

{

$or: [

{ item: "journal" },

{ "size.uom": "cm" }

]

}

)

db.inventory.find( { $or: [ { item: "journal" }, { "size.uom": "cm" } ] } )

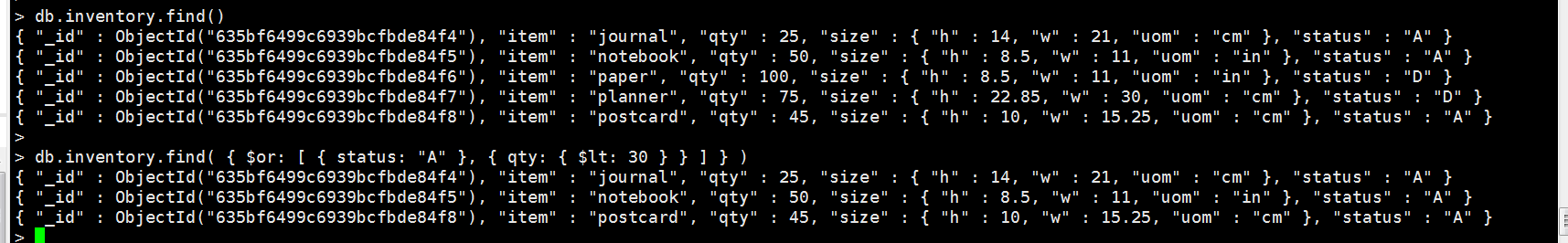

Equivalent to: SELECT * FROM inventory WHERE status = "A" OR qty < 30

db.inventory.find()

db.inventory.find( { $or: [ { status: "A" }, { qty: { $lt: 30 } } ] } )

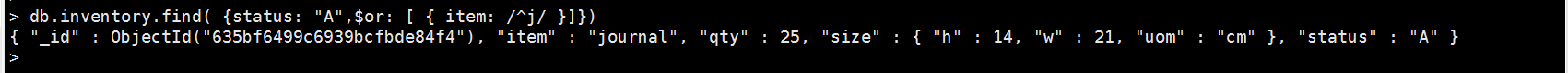

3.8 Combining AND, OR and regular expressions

db.inventory.find( {status: "A",$or: [ { item: /^p/ }]})

db.inventory.find( {status: "A",$or: [ { item: /^j/ }]})

Specify AND as well as OR Conditions

Equivalent to: SELECT * FROM inventory WHERE status = "A" AND ( qty < 30 OR item LIKE "p%")

db.inventory.find( { status: "A", $or: [ { qty: { $lt: 30 } }, { item: /^p/ } ] } )

db.inventory.find( {

status: "A",

$or: [ { qty: { $lt: 30 } }, { item: /^p/ } ]

} )

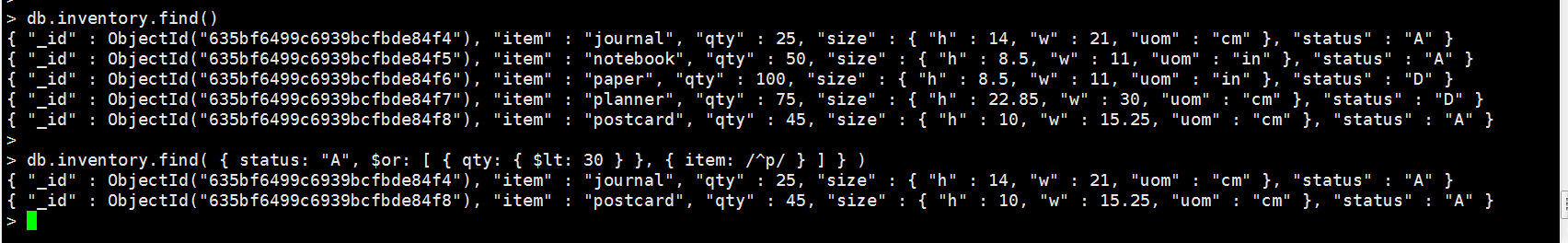

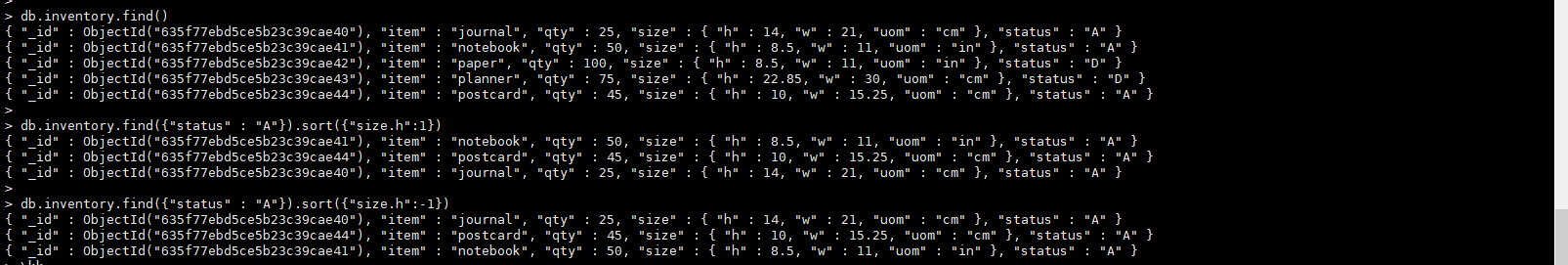

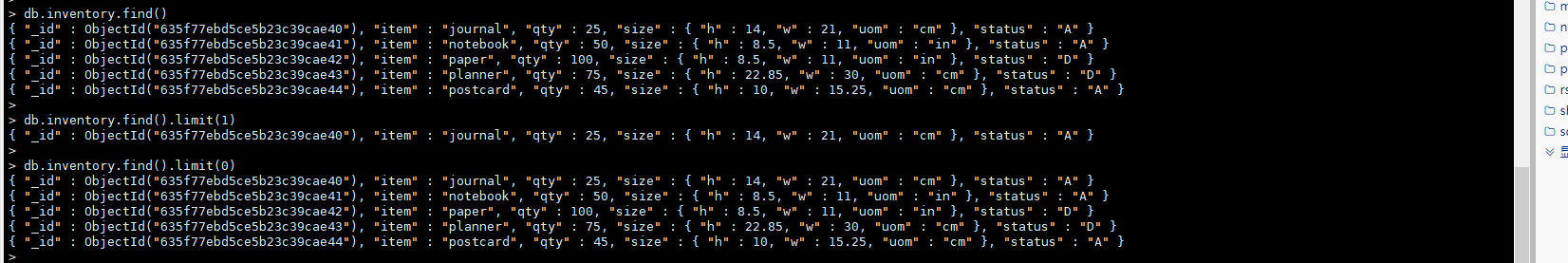

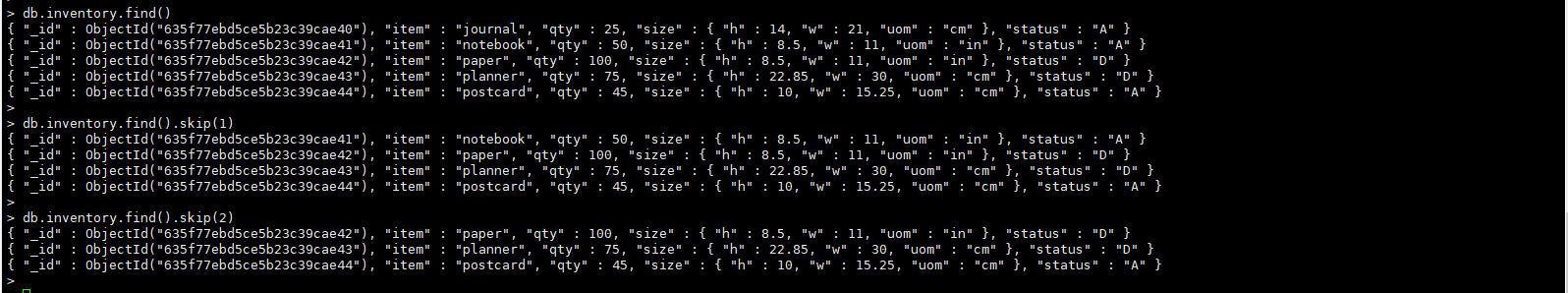

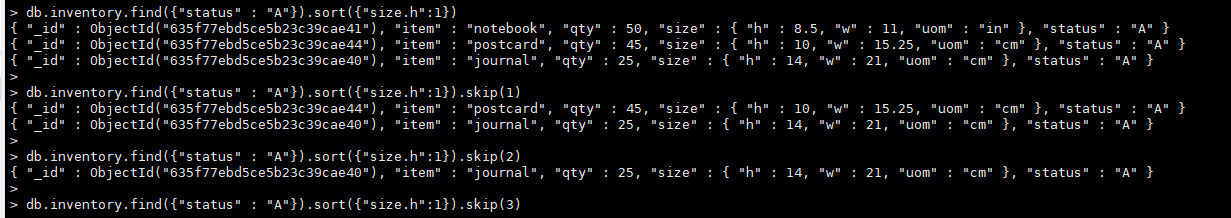
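A top-level `$or` next to other fields works like `A AND (B OR C)`, and a JavaScript regular expression such as `/^p/` acts like `LIKE 'p%'`. Below is a minimal in-memory sketch of that evaluation over the section 3.0 sample data. It supports only equality, `$lt`/`$gt`, regular expressions, and `$or`/`$and`. `matches` is a hypothetical helper, not MongoDB's query engine.

```javascript
// Read a possibly nested field using dot notation.
function getPath(doc, path) {
  return path.split(".").reduce((value, key) => (value == null ? undefined : value[key]), doc);
}

// Match one field value against a condition: a regex, a {$lt/$gt} object, or a plain value.
function matchValue(value, condition) {
  if (condition instanceof RegExp) return typeof value === "string" && condition.test(value);
  if (condition !== null && typeof condition === "object") {
    return Object.entries(condition).every(([op, arg]) => {
      if (value === undefined) return false;
      if (op === "$lt") return value < arg;
      if (op === "$gt") return value > arg;
      throw new Error(`unsupported operator ${op}`);
    });
  }
  return value === condition;
}

// Top-level keys are ANDed; $or needs at least one sub-filter to match, $and needs all of them.
function matches(doc, filter) {
  return Object.entries(filter).every(([key, condition]) => {
    if (key === "$or") return condition.some((sub) => matches(doc, sub));
    if (key === "$and") return condition.every((sub) => matches(doc, sub));
    return matchValue(getPath(doc, key), condition);
  });
}

// Sample data from section 3.0
const inventory = [
  { item: "journal", qty: 25, size: { h: 14, w: 21, uom: "cm" }, status: "A" },
  { item: "notebook", qty: 50, size: { h: 8.5, w: 11, uom: "in" }, status: "A" },
  { item: "paper", qty: 100, size: { h: 8.5, w: 11, uom: "in" }, status: "D" },
  { item: "planner", qty: 75, size: { h: 22.85, w: 30, uom: "cm" }, status: "D" },
  { item: "postcard", qty: 45, size: { h: 10, w: 15.25, uom: "cm" }, status: "A" },
];
const items = (filter) => inventory.filter((doc) => matches(doc, filter)).map((doc) => doc.item);

// status = "A" AND (qty < 30 OR item LIKE "p%")
console.log(items({ status: "A", $or: [{ qty: { $lt: 30 } }, { item: /^p/ }] })); // [ 'journal', 'postcard' ]
```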

3.9 sort, limit, skip

Finer control over query results

sort

Query all documents in the collection

db.inventory.find()

Documents with "status" : "A", sorted by the embedded field size.h in ascending order

db.inventory.find({"status" : "A"}).sort({"size.h":1})

Documents with "status" : "A", sorted by the embedded field size.h in descending order

db.inventory.find({"status" : "A"}).sort({"size.h":-1})

limit

db.inventory.find().limit(0) is equivalent to db.inventory.find()

limit(n) sets the maximum number of documents returned; limit(0) means no limit

db.inventory.find()

db.inventory.find().limit(1)

db.inventory.find().limit(0)

skip

skip(n) skips the first n matching documents and returns the rest

db.inventory.find()

db.inventory.find().skip(1)

db.inventory.find().skip(2)

db

Combining the methods (the sort is applied first, then skip)

db.inventory.find({"status" : "A"}).sort({"size.h":1})

db.inventory.find({"status" : "A"}).sort({"size.h":1}).skip(1)

db.inventory.find({"status" : "A"}).sort({"size.h":1}).skip(2)

db.inventory.find({"status" : "A"}).sort({"size.h":1}).skip(3)

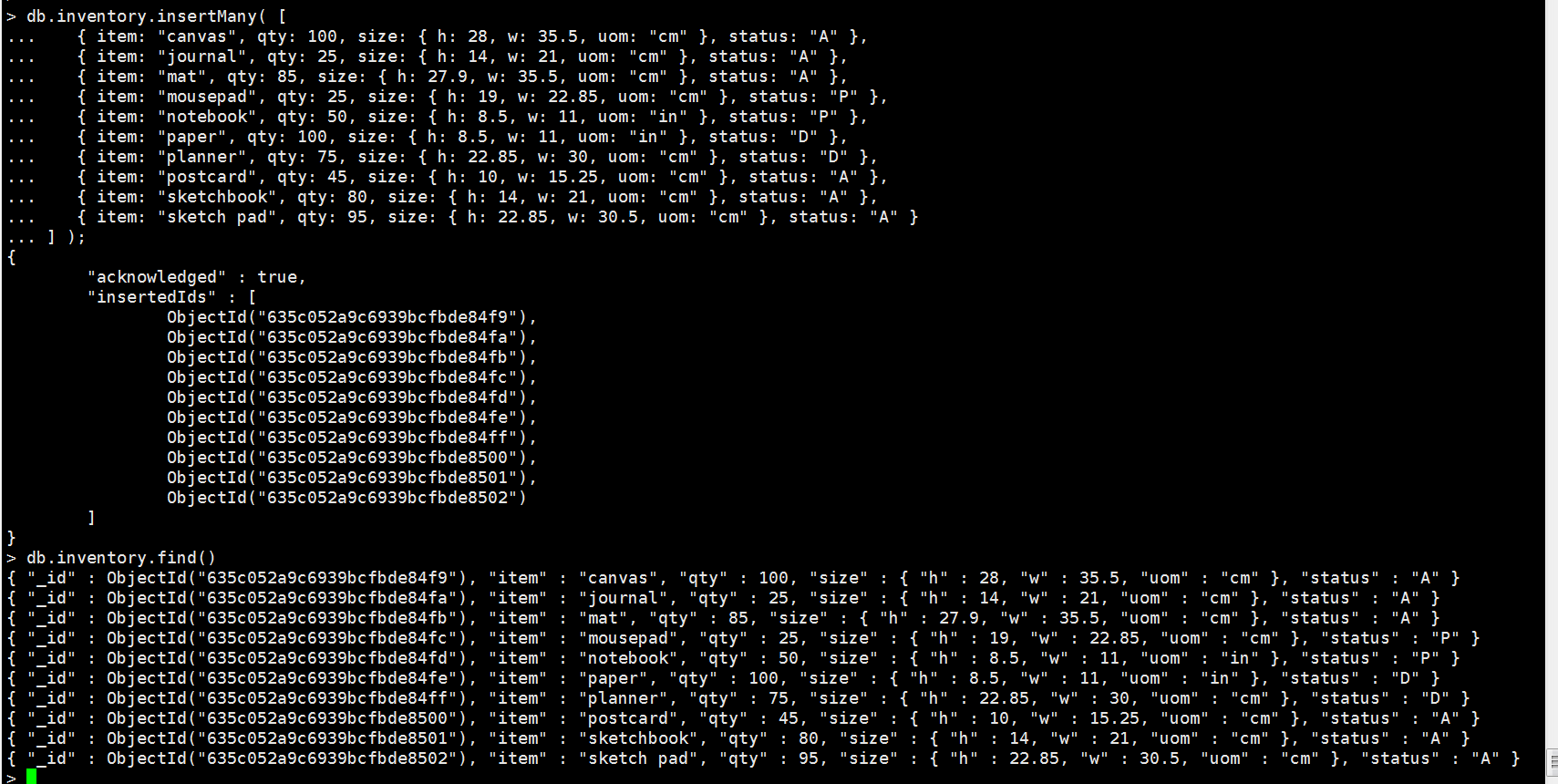
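Whatever order sort(), skip() and limit() are chained in, MongoDB applies them as sort, then skip, then limit. Below is a plain-JavaScript sketch of that pipeline over the status "A" documents from section 3.0, trimmed to the fields used. `runCursor` is a hypothetical helper, and it does not handle comparisons across mixed BSON types, which MongoDB orders by type.

```javascript
function getPath(doc, path) {
  return path.split(".").reduce((value, key) => (value == null ? undefined : value[key]), doc);
}

// Apply sort, then skip, then limit (limit 0 means "no limit"), like a MongoDB cursor.
function runCursor(docs, { sort = {}, skip = 0, limit = 0 } = {}) {
  const keys = Object.entries(sort); // e.g. [["size.h", 1]]; 1 = ascending, -1 = descending
  const sorted = [...docs].sort((a, b) => {
    for (const [path, direction] of keys) {
      const x = getPath(a, path);
      const y = getPath(b, path);
      if (x < y) return -direction;
      if (x > y) return direction;
    }
    return 0;
  });
  const skipped = sorted.slice(skip);
  return limit > 0 ? skipped.slice(0, limit) : skipped;
}

// Documents with status "A" from the section 3.0 sample data, trimmed to the fields used here
const statusA = [
  { item: "journal", size: { h: 14 } },
  { item: "notebook", size: { h: 8.5 } },
  { item: "postcard", size: { h: 10 } },
];
const names = (docs) => docs.map((doc) => doc.item);

console.log(names(runCursor(statusA, { sort: { "size.h": 1 } })));                   // [ 'notebook', 'postcard', 'journal' ]
console.log(names(runCursor(statusA, { sort: { "size.h": 1 }, skip: 1, limit: 1 }))); // [ 'postcard' ]
```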

4. Update documents

update-documents

https://www.mongodb.com/docs/v4.2/tutorial/update-documents/

Drop the inventory collection (many documents were inserted above, so start over):

> db

test

> show tables

inventory

> db.inventory.drop()

true

4.0 Insert sample data

db.inventory.insertMany( [

{ item: "canvas", qty: 100, size: { h: 28, w: 35.5, uom: "cm" }, status: "A" },

{ item: "journal", qty: 25, size: { h: 14, w: 21, uom: "cm" }, status: "A" },

{ item: "mat", qty: 85, size: { h: 27.9, w: 35.5, uom: "cm" }, status: "A" },

{ item: "mousepad", qty: 25, size: { h: 19, w: 22.85, uom: "cm" }, status: "P" },

{ item: "notebook", qty: 50, size: { h: 8.5, w: 11, uom: "in" }, status: "P" },

{ item: "paper", qty: 100, size: { h: 8.5, w: 11, uom: "in" }, status: "D" },

{ item: "planner", qty: 75, size: { h: 22.85, w: 30, uom: "cm" }, status: "D" },

{ item: "postcard", qty: 45, size: { h: 10, w: 15.25, uom: "cm" }, status: "A" },

{ item: "sketchbook", qty: 80, size: { h: 14, w: 21, uom: "cm" }, status: "A" },

{ item: "sketch pad", qty: 95, size: { h: 22.85, w: 30.5, uom: "cm" }, status: "A" }

] );

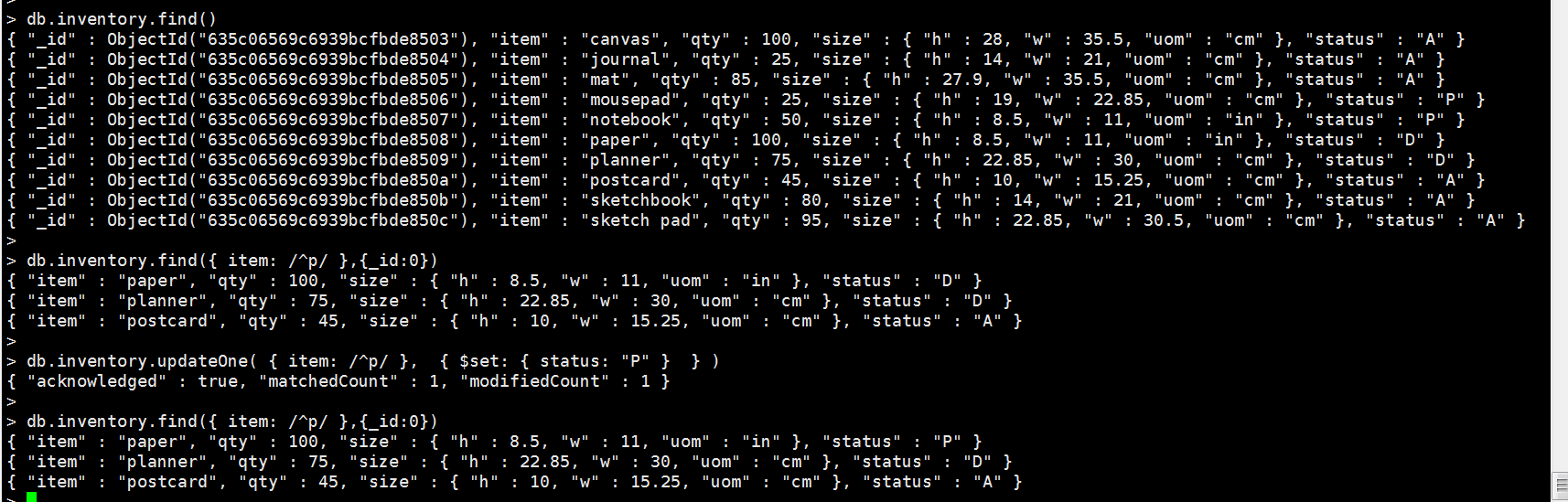

4.1 Update a single document matching a condition (only the first matching document is modified)

db.inventory.updateOne({<filter>}, {<update>})

db.inventory.find()

db.inventory.find({ item: /^p/ },{_id:0})

db.inventory.updateOne( { item: /^p/ }, { $set: { status: "P" } } )

db.inventory.updateOne(

{ item: /^p/ },

{

$set: { status: "P" }

}

)

4.2 Update all documents matching a condition

db.inventory.find({ item: /^p/ },{_id:0})

db.inventory.updateMany(

{ item: /^p/ },

{

$set: { status: "P" }

}

)

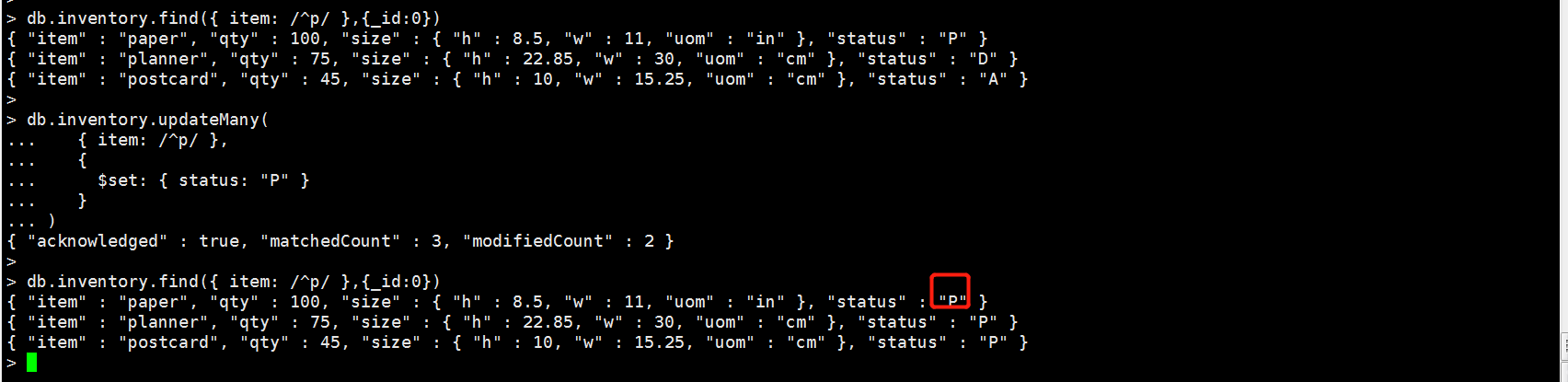

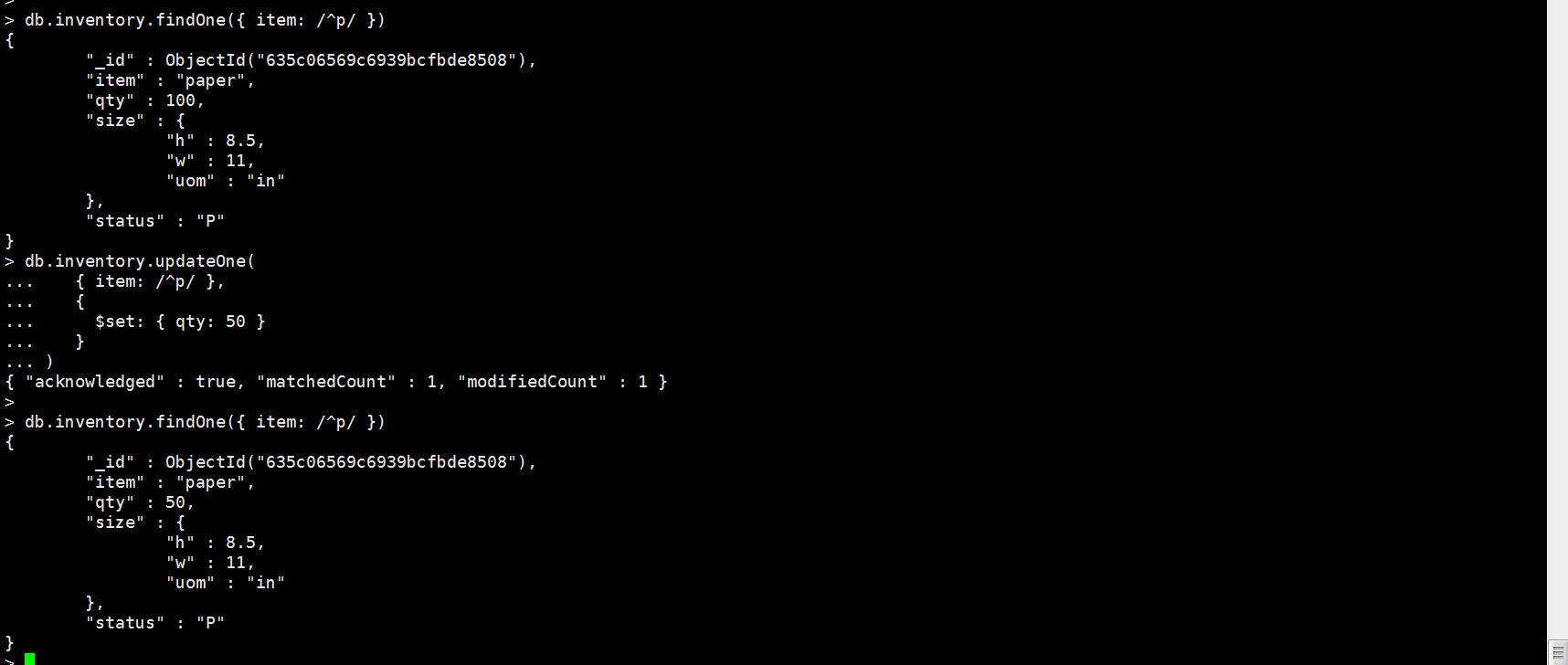
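The difference between updateOne() and updateMany() with `$set` can be sketched in plain JavaScript. Both apply the same `$set` to matching documents, but updateOne() stops after the first match. `applySet` and `setPath` are hypothetical helpers. The count they return is the number of documents the update was applied to, which is not the same as the server's `modifiedCount`: that field skips documents whose values did not actually change.

```javascript
// Set a (possibly dotted) field, creating missing embedded documents the way $set does.
function setPath(doc, path, value) {
  const keys = path.split(".");
  let target = doc;
  for (const key of keys.slice(0, -1)) {
    if (target[key] === undefined) target[key] = {};
    else if (target[key] === null || typeof target[key] !== "object") {
      throw new Error(`cannot create field inside non-object '${key}'`);
    }
    target = target[key];
  }
  target[keys[keys.length - 1]] = value;
}

// Apply a $set spec to documents matching `predicate`; multi=false behaves like updateOne().
function applySet(docs, predicate, setSpec, multi) {
  let applied = 0;
  for (const doc of docs) {
    if (!predicate(doc)) continue;
    for (const [path, value] of Object.entries(setSpec)) setPath(doc, path, value);
    applied += 1;
    if (!multi) break; // updateOne: only the first matching document
  }
  return applied;
}

const startsWithP = (doc) => /^p/.test(doc.item);
const sample = [
  { item: "paper", status: "D" },
  { item: "planner", status: "D" },
  { item: "journal", status: "A" },
];
console.log(applySet(sample, startsWithP, { status: "P" }, false)); // 1 (only "paper")
console.log(applySet(sample, startsWithP, { status: "P" }, true));  // 2
```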

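4.3 Set a field ($set adds the field if it does not exist and overwrites it otherwise; qty already exists here, so its value is overwritten)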

db.inventory.findOne({ item: /^p/ })

db.inventory.updateOne(

{ item: /^p/ },

{

$set: { qty: 50 }

}

)

db.inventory.find({ item: /^p/ })

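5. Indexes

Wherever you would create an index in MySQL, you should also create one in MongoDB.

Each collection can have at most 64 indexes.

In old versions (MMAPv1), index definitions were stored in each database's system.indexes collection. That collection does not exist with WiredTiger and was removed in MongoDB 4.2, so use db.collection.getIndexes() to list the indexes that have been created.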

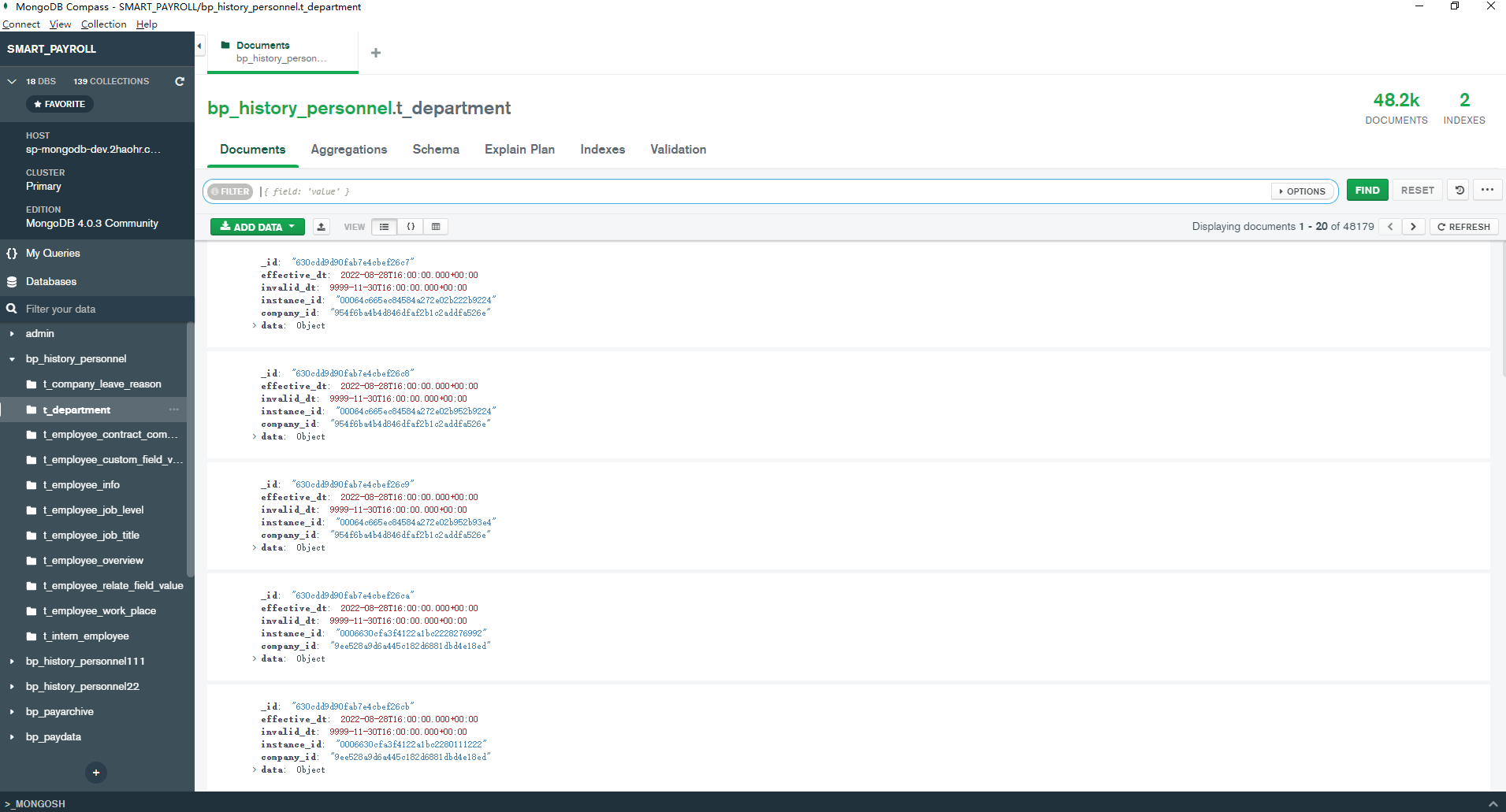

5.1 View the indexes created on a collection

replica:PRIMARY> show dbs

admin 0.000GB

bp_history_personnel 33.841GB

config 0.000GB

ehr_dmc 1.311GB

local 8.617GB

sp_template 0.034GB

replica:PRIMARY> use bp_history_personnel

switched to db bp_history_personnel

replica:PRIMARY> db

bp_history_personnel

replica:PRIMARY> show tables;

t_company_leave_reason

t_department

replica:PRIMARY> db.t_department.getIndexes()

[

{

"v" : 2,

"key" : {

"_id" : 1

},

"name" : "_id_",

"ns" : "bp_history_personnel.t_department"

},

{

"v" : 2,

"key" : {

"company_id" : 1

},

"name" : "ix_com",

"ns" : "bp_history_personnel.t_department",

"background" : true

},

......

]

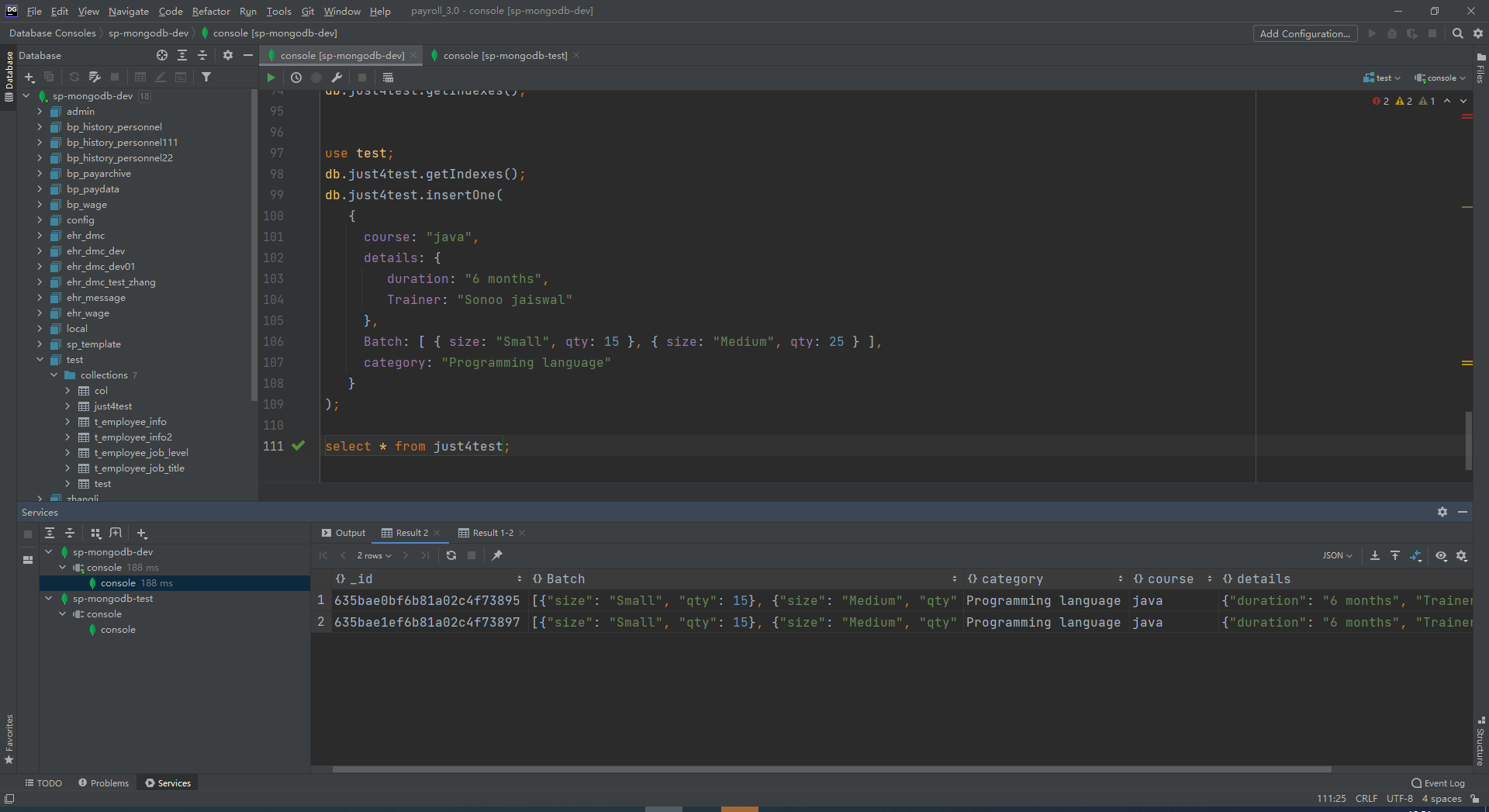

5.2 Create an index

Create a compound index on the worker collection with name ascending and age descending, named worker_index

db.worker.createIndex({name:1,age:-1},{name:"worker_index"})

5.3 View a collection's indexes

db.worker.getIndexes()

5.4 View the total size of a collection's indexes

db.worker.totalIndexSize()

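5.5 Check whether a query uses an index

db.worker.find({age:29}).explain()

In the explain output, the stages under queryPlanner.winningPlan show IXSCAN when an index is used and COLLSCAN when the whole collection is scanned. This query filters only on age, which is not a leading prefix of the {name:1, age:-1} index, so it cannot use worker_index.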

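5.6 Drop an index

The index created in 5.2 was named worker_index. name_1_age_-1 is the default name it would have received without the name option, so dropIndex("name_1_age_-1") would fail here. Drop the index by its name or by its key pattern:

db.worker.dropIndex("worker_index")   // or: db.worker.dropIndex({name:1, age:-1})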
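Two rules from this section can be sketched in plain JavaScript. First, the default index name joins each field and its direction with underscores, which is where name_1_age_-1 comes from. Second, a query can only bound an index scan through a leading prefix of the index keys. Both helpers are hypothetical. The prefix check is a simplification that assumes equality conditions; the real query planner also considers sorts, ranges and other indexes.

```javascript
// Default index name derived from a key pattern, e.g. {name: 1, age: -1} -> "name_1_age_-1".
// (The built-in _id index is the exception: it is named "_id_".)
function defaultIndexName(keyPattern) {
  return Object.entries(keyPattern)
    .map(([field, direction]) => `${field}_${direction}`)
    .join("_");
}

// Length of the leading index prefix whose fields all appear in the query (0 = index cannot bound the scan).
function usablePrefixLength(keyPattern, queryFields) {
  const keys = Object.keys(keyPattern);
  let n = 0;
  while (n < keys.length && queryFields.includes(keys[n])) n += 1;
  return n;
}

const workerIndex = { name: 1, age: -1 };
console.log(defaultIndexName(workerIndex));                    // name_1_age_-1
console.log(usablePrefixLength(workerIndex, ["age"]));         // 0: age alone is not a prefix
console.log(usablePrefixLength(workerIndex, ["name", "age"])); // 2
```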

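6. Administrative commands

1. Copy a database

Copy the test database to test_hjs. copydb must be run against the admin database. It is deprecated in MongoDB 4.0 (see the note in the output below) and removed in 4.2; use mongodump / mongorestore there instead.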

show dbs

db.runCommand( { copydb : 1, fromhost : "localhost", fromdb : "test", todb : "test_hjs" } );

use admin

db.runCommand( { copydb : 1, fromhost : "localhost", fromdb : "test", todb : "test_hjs" } );

show dbs

db.runCommand( { copydb : 1, fromhost : "localhost", fromdb : "test", todb : "test_hjs" } );

> show dbs

admin 0.000GB

config 0.000GB

hjs 0.000GB

local 0.000GB

test 0.000GB

>

> db.runCommand( { copydb : 1, fromhost : "localhost", fromdb : "test", todb : "test_hjs" } );

{

"ok" : 0,

"errmsg" : "copydb may only be run against the admin database.",

"code" : 13,

"codeName" : "Unauthorized"

}

>

> use admin

switched to db admin

> db.runCommand( { copydb : 1, fromhost : "localhost", fromdb : "test", todb : "test_hjs" } );

{

"note" : "Support for the copydb command has been deprecated. See http://dochub.mongodb.org/core/copydb-clone-deprecation",

"ok" : 1

}

> show dbs

admin 0.000GB

config 0.000GB

hjs 0.000GB

local 0.000GB

test 0.000GB

test_hjs 0.000GB

>

> db.runCommand( { copydb : 1, fromhost : "localhost", fromdb : "test", todb : "test_hjs" } );

{

"ok" : 0,

"errmsg" : "unsharded collection with same namespace test_hjs.inventory already exists.",

"code" : 48,

"codeName" : "NamespaceExists"

}

2. Rename a collection

use admin;

db.runCommand( { renameCollection:"test.table01", to: "test.table111111", dropTarget: false } );

> db

test

>

> db.table01.insertMany( [ { item: "journal", qty: 25, size: { h: 14, w: 21, uom: "cm" }, status: "A" }, { item: "notebook", qty: 50, size: { h: 8.5, w: 11, uom: "in" }, status: "A" }, { item: "paper", qty: 100, size: { h: 8.5, w: 11, uom: "in" }, status: "D" }, { item: "planner", qty: 75, size: { h: 22.85, w: 30, uom: "cm" }, status: "D" }, { item: "postcard", qty: 45, size: { h: 10, w: 15.25, uom: "cm" }, status: "A" } ]);

{

"acknowledged" : true,

"insertedIds" : [

ObjectId("635fa7cbd5ce5b23c39cae4a"),

ObjectId("635fa7cbd5ce5b23c39cae4b"),

ObjectId("635fa7cbd5ce5b23c39cae4c"),

ObjectId("635fa7cbd5ce5b23c39cae4d"),

ObjectId("635fa7cbd5ce5b23c39cae4e")

]

}

>

> show tables

audit100

inventory

table01

>

> use admin;

>

> db.runCommand( { renameCollection:"test.table01", to: "test.table0111111.tblbooks", dropTarget: false } );

{

"ok" : 0,

"errmsg" : "renameCollection may only be run against the admin database.",

"code" : 13,

"codeName" : "Unauthorized"

}

> use admin

switched to db admin

>

> db.runCommand( { renameCollection:"test.table01", to: "test.table111111", dropTarget: false } );

{ "ok" : 1 }

>

> use test

switched to db test

>

> show tables;

audit100

inventory

table111111

3. View database and collection statistics

db.inventory.insertMany( [

{ item: "canvas", qty: 100, size: { h: 28, w: 35.5, uom: "cm" }, status: "A" },

{ item: "journal", qty: 25, size: { h: 14, w: 21, uom: "cm" }, status: "A" },

{ item: "mat", qty: 85, size: { h: 27.9, w: 35.5, uom: "cm" }, status: "A" },

{ item: "mousepad", qty: 25, size: { h: 19, w: 22.85, uom: "cm" }, status: "P" },

{ item: "notebook", qty: 50, size: { h: 8.5, w: 11, uom: "in" }, status: "P" },

{ item: "paper", qty: 100, size: { h: 8.5, w: 11, uom: "in" }, status: "D" },

{ item: "planner", qty: 75, size: { h: 22.85, w: 30, uom: "cm" }, status: "D" },

{ item: "postcard", qty: 45, size: { h: 10, w: 15.25, uom: "cm" }, status: "A" },

{ item: "sketchbook", qty: 80, size: { h: 14, w: 21, uom: "cm" }, status: "A" },

{ item: "sketch pad", qty: 95, size: { h: 22.85, w: 30.5, uom: "cm" }, status: "A" }

] );

use test

db.stats();   // show database statistics

show tables;

db.inventory.stats()   // show collection statistics

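> db.stats();
{
  "db" : "test",                # database name
  "collections" : 3,            # number of collections
  "views" : 0,
  "objects" : 15,               # number of documents
  "avgObjSize" : 112,           # average document size in bytes (dataSize / objects)
  "dataSize" : 1680,            # total uncompressed size of the data, in bytes
  "storageSize" : 53248,        # space allocated on disk for collection data, in bytes (may include free space)
  "numExtents" : 0,             # number of extents (MMAPv1 only; always 0 with WiredTiger)
  "indexes" : 3,                # number of indexes
  "indexSize" : 53248,          # total size of all indexes, in bytes
  "fsUsedSize" : 40186724352,   # bytes used on the file system holding dbPath
  "fsTotalSize" : 105553780736, # total size of that file system, in bytes
  "ok" : 1                      # 1 if the command succeeded
}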
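Several of these fields are related by simple arithmetic, which helps when reading stats output. The sketch below uses the db.stats() numbers above. `summarizeStats` is a hypothetical helper; the field names are the ones printed by db.stats() here.

```javascript
// Derive a few figures from db.stats() output (all sizes in bytes).
function summarizeStats(stats) {
  return {
    avgObjSize: stats.objects > 0 ? stats.dataSize / stats.objects : 0, // should match the avgObjSize field
    fsUsedPercent: (stats.fsUsedSize / stats.fsTotalSize) * 100,        // how full the disk holding dbPath is
  };
}

// Values copied from the db.stats() output above
const testDbStats = {
  objects: 15,
  dataSize: 1680,
  fsUsedSize: 40186724352,
  fsTotalSize: 105553780736,
};
const summary = summarizeStats(testDbStats);
console.log(summary.avgObjSize);               // 112
console.log(summary.fsUsedPercent.toFixed(1)); // 38.1
```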

> show dbs

admin 0.000GB

config 0.000GB

hjs 0.000GB

local 0.000GB

test 0.000GB

test_hjs 0.000GB

> db.test.stats()

{

"ns" : "test.test",

"ok" : 0,

"errmsg" : "Collection [test.test] not found."

}

> show tables;

audit100

inventory

table111111

> db.inventory.stats()

{

"ns" : "test.inventory",

"size" : 1120,

"count" : 10,

"avgObjSize" : 112,

"storageSize" : 32768,

"capped" : false,

"wiredTiger" : {

"metadata" : {

"formatVersion" : 1

},

"creationString" : "access_pattern_hint=none,allocation_size=4KB,app_metadata=(formatVersion=1),assert=(commit_timestamp=none,read_timestamp=none),block_allocation=best,block_compressor=zlib,cache_resident=false,checksum=on,colgroups=,collator=,columns=,dictionary=0,encryption=(keyid=,name=),exclusive=false,extractor=,format=btree,huffman_key=,huffman_value=,ignore_in_memory_cache_size=false,immutable=false,internal_item_max=0,internal_key_max=0,internal_key_truncate=true,internal_page_max=4KB,key_format=q,key_gap=10,leaf_item_max=0,leaf_key_max=0,leaf_page_max=32KB,leaf_value_max=64MB,log=(enabled=true),lsm=(auto_throttle=true,bloom=true,bloom_bit_count=16,bloom_config=,bloom_hash_count=8,bloom_oldest=false,chunk_count_limit=0,chunk_max=5GB,chunk_size=10MB,merge_custom=(prefix=,start_generation=0,suffix=),merge_max=15,merge_min=0),memory_page_image_max=0,memory_page_max=10m,os_cache_dirty_max=0,os_cache_max=0,prefix_compression=false,prefix_compression_min=4,source=,split_deepen_min_child=0,split_deepen_per_child=0,split_pct=90,type=file,value_format=u",

"type" : "file",

"uri" : "statistics:table:test/collection/26-7517394021304977266",

"LSM" : {

"bloom filter false positives" : 0,

"bloom filter hits" : 0,

"bloom filter misses" : 0,

"bloom filter pages evicted from cache" : 0,

"bloom filter pages read into cache" : 0,

"bloom filters in the LSM tree" : 0,

"chunks in the LSM tree" : 0,

"highest merge generation in the LSM tree" : 0,

"queries that could have benefited from a Bloom filter that did not exist" : 0,

"sleep for LSM checkpoint throttle" : 0,

"sleep for LSM merge throttle" : 0,

"total size of bloom filters" : 0

},

"block-manager" : {

"allocations requiring file extension" : 7,

"blocks allocated" : 7,

"blocks freed" : 1,

"checkpoint size" : 4096,

"file allocation unit size" : 4096,

"file bytes available for reuse" : 12288,

"file magic number" : 120897,

"file major version number" : 1,

"file size in bytes" : 32768,

"minor version number" : 0

},

"btree" : {

"btree checkpoint generation" : 6970,

"btree clean tree checkpoint expiration time" : NumberLong("9223372036854775807"),

"column-store fixed-size leaf pages" : 0,

"column-store internal pages" : 0,

"column-store variable-size RLE encoded values" : 0,

"column-store variable-size deleted values" : 0,

"column-store variable-size leaf pages" : 0,

"fixed-record size" : 0,

"maximum internal page key size" : 368,

"maximum internal page size" : 4096,

"maximum leaf page key size" : 2867,

"maximum leaf page size" : 32768,

"maximum leaf page value size" : 67108864,

"maximum tree depth" : 3,

"number of key/value pairs" : 0,

"overflow pages" : 0,

"pages rewritten by compaction" : 0,

"row-store internal pages" : 0,

"row-store leaf pages" : 0

},

"cache" : {

"bytes currently in the cache" : 3061,

"bytes dirty in the cache cumulative" : 3049,

"bytes read into cache" : 0,

"bytes written from cache" : 1922,

"checkpoint blocked page eviction" : 0,

"data source pages selected for eviction unable to be evicted" : 0,

"eviction walk passes of a file" : 0,

"eviction walk target pages histogram - 0-9" : 0,

"eviction walk target pages histogram - 10-31" : 0,

"eviction walk target pages histogram - 128 and higher" : 0,

"eviction walk target pages histogram - 32-63" : 0,

"eviction walk target pages histogram - 64-128" : 0,

"eviction walks abandoned" : 0,

"eviction walks gave up because they restarted their walk twice" : 0,

"eviction walks gave up because they saw too many pages and found no candidates" : 0,

"eviction walks gave up because they saw too many pages and found too few candidates" : 0,

"eviction walks reached end of tree" : 0,

"eviction walks started from root of tree" : 0,

"eviction walks started from saved location in tree" : 0,

"hazard pointer blocked page eviction" : 0,

"in-memory page passed criteria to be split" : 0,

"in-memory page splits" : 0,

"internal pages evicted" : 0,

"internal pages split during eviction" : 0,

"leaf pages split during eviction" : 0,

"modified pages evicted" : 0,

"overflow pages read into cache" : 0,

"page split during eviction deepened the tree" : 0,

"page written requiring cache overflow records" : 0,

"pages read into cache" : 0,

"pages read into cache after truncate" : 1,

"pages read into cache after truncate in prepare state" : 0,

"pages read into cache requiring cache overflow entries" : 0,

"pages requested from the cache" : 58,

"pages seen by eviction walk" : 0,

"pages written from cache" : 4,

"pages written requiring in-memory restoration" : 0,

"tracked dirty bytes in the cache" : 0,

"unmodified pages evicted" : 0

},

"cache_walk" : {

"Average difference between current eviction generation when the page was last considered" : 0,

"Average on-disk page image size seen" : 0,

"Average time in cache for pages that have been visited by the eviction server" : 0,

"Average time in cache for pages that have not been visited by the eviction server" : 0,

"Clean pages currently in cache" : 0,

"Current eviction generation" : 0,

"Dirty pages currently in cache" : 0,

"Entries in the root page" : 0,

"Internal pages currently in cache" : 0,

"Leaf pages currently in cache" : 0,

"Maximum difference between current eviction generation when the page was last considered" : 0,

"Maximum page size seen" : 0,

"Minimum on-disk page image size seen" : 0,

"Number of pages never visited by eviction server" : 0,

"On-disk page image sizes smaller than a single allocation unit" : 0,

"Pages created in memory and never written" : 0,

"Pages currently queued for eviction" : 0,

"Pages that could not be queued for eviction" : 0,

"Refs skipped during cache traversal" : 0,

"Size of the root page" : 0,

"Total number of pages currently in cache" : 0

},

"compression" : {

"compressed pages read" : 0,

"compressed pages written" : 0,

"page written failed to compress" : 0,

"page written was too small to compress" : 4

},

"cursor" : {

"bulk-loaded cursor-insert calls" : 0,

"close calls that result in cache" : 0,

"create calls" : 4,

"cursor operation restarted" : 0,

"cursor-insert key and value bytes inserted" : 1130,

"cursor-remove key bytes removed" : 0,

"cursor-update value bytes updated" : 0,

"cursors reused from cache" : 47,

"insert calls" : 10,

"modify calls" : 0,

"next calls" : 262,

"open cursor count" : 0,

"prev calls" : 1,

"remove calls" : 0,

"reserve calls" : 0,

"reset calls" : 102,

"search calls" : 0,

"search near calls" : 0,

"truncate calls" : 0,

"update calls" : 0

},

"reconciliation" : {

"dictionary matches" : 0,

"fast-path pages deleted" : 0,

"internal page key bytes discarded using suffix compression" : 0,

"internal page multi-block writes" : 0,

"internal-page overflow keys" : 0,

"leaf page key bytes discarded using prefix compression" : 0,

"leaf page multi-block writes" : 0,

"leaf-page overflow keys" : 0,

"maximum blocks required for a page" : 1,

"overflow values written" : 0,

"page checksum matches" : 0,

"page reconciliation calls" : 4,

"page reconciliation calls for eviction" : 0,

"pages deleted" : 0

},

"session" : {

"object compaction" : 0

},

"transaction" : {

"update conflicts" : 0

}

},

"nindexes" : 1,

"totalIndexSize" : 32768,

"indexSizes" : {

"_id_" : 32768

},

"ok" : 1

}
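The fields worth reading first in the db.inventory.stats() output are size, count, avgObjSize, storageSize and the index sizes. The sketch below shows how they relate. It uses a hypothetical helper (not a mongo shell API) written as plain JavaScript, runnable under Node.js, with values copied by hand from the output above.

- avgObjSize is size divided by count.
- A failed stats() call, such as the one on the missing test.test collection, returns ok: 0 and an errmsg.
- For a tiny collection, storageSize (the on-disk file size) can be much larger than size. The file is allocated in blocks and may contain reusable free space; the block-manager section above reports 12288 bytes available for reuse.

```javascript
// Hypothetical helper (not part of the mongo shell): summarize a collStats result.
// Input values are copied from the db.test.stats() / db.inventory.stats() output above.
function summarizeCollStats(stats) {
  if (stats.ok !== 1) {
    // e.g. stats() on a collection that does not exist returns ok: 0 plus errmsg
    return { ns: stats.ns, error: stats.errmsg };
  }
  return {
    ns: stats.ns,
    documents: stats.count,
    // average uncompressed BSON size per document: size / count
    avgObjSize: stats.count > 0 ? stats.size / stats.count : 0,
    // on-disk bytes per byte of data; >1 for tiny collections because of block allocation
    storageToDataRatio: stats.size > 0 ? stats.storageSize / stats.size : null,
    indexCount: stats.nindexes,
    indexBytes: stats.totalIndexSize,
  };
}

const inventoryStats = {
  ns: "test.inventory", size: 1120, count: 10, avgObjSize: 112,
  storageSize: 32768, nindexes: 1, totalIndexSize: 32768, ok: 1,
};
const missingStats = { ns: "test.test", ok: 0, errmsg: "Collection [test.test] not found." };

console.log(summarizeCollStats(inventoryStats));
console.log(summarizeCollStats(missingStats));
```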

4. Check the database (validate a collection)

use test

show tables

db.inventory.validate(true);

> db

test

>

> show dbs

admin 0.000GB

config 0.000GB

hjs 0.000GB

local 0.000GB

test 0.000GB

test_hjs 0.000GB

>

> show tables;

audit100

inventory

table111111

>

> db.inventory.validate(true);

{

"ns" : "test.inventory",

"nInvalidDocuments" : NumberLong(0),

"nrecords" : 10,

"nIndexes" : 1,

"keysPerIndex" : {

"_id_" : 10

},

"indexDetails" : {

"_id_" : {

"valid" : true

}

},

"valid" : true,

"warnings" : [ ],

"errors" : [ ],

"extraIndexEntries" : [ ],

"missingIndexEntries" : [ ],

"ok" : 1

}

>

> db.inventory.find().count()

10
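When reading a validate() result, the key signals are:

- ok must be 1.
- valid must be true.
- errors must be empty.
- nInvalidDocuments must be 0.

keysPerIndex can also be compared with nrecords. For the _id_ index above, both are 10. That comparison is only a hint, because sparse, partial or multikey indexes legitimately hold a different number of keys. The helper below is hypothetical (not a mongo shell function) and runs as plain Node.js. The shell's NumberLong(0) is rewritten as a plain 0.

```javascript
// Hypothetical helper: interpret the result of db.<collection>.validate(true).
function checkValidateResult(res) {
  const problems = [];
  const notes = [];
  if (res.ok !== 1) problems.push("validate command failed");
  if (res.valid !== true) problems.push("collection reported as invalid");
  for (const err of res.errors || []) problems.push(`error: ${err}`);
  if (Number(res.nInvalidDocuments || 0) > 0) {
    problems.push(`${res.nInvalidDocuments} invalid document(s)`);
  }
  // A plain single-field index holds one key per document; sparse, partial or
  // multikey indexes can differ legitimately, so a mismatch is only a note.
  for (const [name, keys] of Object.entries(res.keysPerIndex || {})) {
    if (keys !== res.nrecords) notes.push(`index ${name}: ${keys} keys for ${res.nrecords} records`);
  }
  return { healthy: problems.length === 0, problems, notes };
}

// Values copied from the db.inventory.validate(true) output above.
const inventoryValidate = {
  ns: "test.inventory", nInvalidDocuments: 0, nrecords: 10, nIndexes: 1,
  keysPerIndex: { _id_: 10 }, valid: true, warnings: [], errors: [], ok: 1,
};

console.log(checkValidateResult(inventoryValidate));
```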

5. Profiling slow operations in MongoDB

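Profiling levels:

0: profiling off

1: profile only slow operations (those taking longer than slowms milliseconds)

2: profile all operations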
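The level rule above can be written as a small predicate. This is a sketch, not MongoDB source code. It assumes sampleRate is 1, as reported in the command output further down, and treats "slow" as strictly longer than slowms. Level 2 records every operation into the capped system.profile collection, so it adds overhead. Switch it back off with db.runCommand({ profile: 0 }) when you are done.

```javascript
// Sketch of which operations the profiler records at each level.
// Assumption: sampleRate = 1, so no random sampling is modelled.
function wouldBeProfiled(level, slowms, millis) {
  switch (level) {
    case 0: return false;            // profiling off
    case 1: return millis > slowms;  // only operations slower than slowms
    case 2: return true;             // every operation
    default: throw new RangeError(`invalid profiling level: ${level}`);
  }
}

console.log(wouldBeProfiled(2, 100, 0)); // true: level 2 records even a 0 ms find
console.log(wouldBeProfiled(1, 100, 0)); // false: too fast for level 1
```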

use test

show tables;

db.runCommand( {profile:2 , slowms:100} );

db.inventory.find();

db.system.profile.find();

db.system.profile.find().pretty()

> db

test

>

> show tables;

audit100

inventory

table111111

>

> db.runCommand( {profile:2 , slowms:100} );

{ "was" : 0, "slowms" : 100, "sampleRate" : 1, "ok" : 1 }

> show tables;

audit100

inventory

system.profile

table111111

>

> db.inventory.find();

{ "_id" : ObjectId("635f77ebd5ce5b23c39cae40"), "item" : "journal", "qty" : 25, "size" : { "h" : 14, "w" : 21, "uom" : "cm" }, "status" : "A" }

{ "_id" : ObjectId("635f77ebd5ce5b23c39cae41"), "item" : "notebook", "qty" : 50, "size" : { "h" : 8.5, "w" : 11, "uom" : "in" }, "status" : "A" }

{ "_id" : ObjectId("635f77ebd5ce5b23c39cae42"), "item" : "paper", "qty" : 100, "size" : { "h" : 8.5, "w" : 11, "uom" : "in" }, "status" : "D" }

{ "_id" : ObjectId("635f77ebd5ce5b23c39cae43"), "item" : "planner", "qty" : 75, "size" : { "h" : 22.85, "w" : 30, "uom" : "cm" }, "status" : "D" }

{ "_id" : ObjectId("635f77ebd5ce5b23c39cae44"), "item" : "postcard", "qty" : 45, "size" : { "h" : 10, "w" : 15.25, "uom" : "cm" }, "status" : "A" }

{ "_id" : ObjectId("635fa69cd5ce5b23c39cae45"), "item" : "journal", "qty" : 25, "size" : { "h" : 14, "w" : 21, "uom" : "cm" }, "status" : "A" }

{ "_id" : ObjectId("635fa69cd5ce5b23c39cae46"), "item" : "notebook", "qty" : 50, "size" : { "h" : 8.5, "w" : 11, "uom" : "in" }, "status" : "A" }

{ "_id" : ObjectId("635fa69cd5ce5b23c39cae47"), "item" : "paper", "qty" : 100, "size" : { "h" : 8.5, "w" : 11, "uom" : "in" }, "status" : "D" }

{ "_id" : ObjectId("635fa69cd5ce5b23c39cae48"), "item" : "planner", "qty" : 75, "size" : { "h" : 22.85, "w" : 30, "uom" : "cm" }, "status" : "D" }

{ "_id" : ObjectId("635fa69cd5ce5b23c39cae49"), "item" : "postcard", "qty" : 45, "size" : { "h" : 10, "w" : 15.25, "uom" : "cm" }, "status" : "A" }

> db.system.profile.find();

{ "op" : "query", "ns" : "test.inventory", "command" : { "find" : "inventory", "filter" : { }, "lsid" : { "id" : UUID("5ba60d17-24ea-4862-baf8-a05230abd912") }, "$db" : "test" }, "keysExamined" : 0, "docsExamined" : 10, "cursorExhausted" : true, "numYield" : 0, "nreturned" : 10, "locks" : { "Global" : { "acquireCount" : { "r" : NumberLong(1) } }, "Database" : { "acquireCount" : { "r" : NumberLong(1) } }, "Collection" : { "acquireCount" : { "r" : NumberLong(1) } } }, "responseLength" : 1253, "protocol" : "op_msg", "millis" : 0, "planSummary" : "COLLSCAN", "execStats" : { "stage" : "COLLSCAN", "nReturned" : 10, "executionTimeMillisEstimate" : 0, "works" : 12, "advanced" : 10, "needTime" : 1, "needYield" : 0, "saveState" : 0, "restoreState" : 0, "isEOF" : 1, "invalidates" : 0, "direction" : "forward", "docsExamined" : 10 }, "ts" : ISODate("2022-11-01T07:23:47.610Z"), "client" : "127.0.0.1", "appName" : "MongoDB Shell", "allUsers" : [ ], "user" : "" }

> db.system.profile.find().pretty()

{

"op" : "query",

"ns" : "test.inventory",

"command" : {

"find" : "inventory",

"filter" : {

},

"lsid" : {

"id" : UUID("5ba60d17-24ea-4862-baf8-a05230abd912")

},

"$db" : "test"

},

"keysExamined" : 0,

"docsExamined" : 10,

"cursorExhausted" : true,

"numYield" : 0,

"nreturned" : 10,

"locks" : {

"Global" : {

"acquireCount" : {

"r" : NumberLong(1)

}

},

"Database" : {

"acquireCount" : {

"r" : NumberLong(1)

}

},

"Collection" : {

"acquireCount" : {

"r" : NumberLong(1)

}

}

},

"responseLength" : 1253,

"protocol" : "op_msg",

"millis" : 0,

"planSummary" : "COLLSCAN",

"execStats" : {

"stage" : "COLLSCAN",

"nReturned" : 10,

"executionTimeMillisEstimate" : 0,

"works" : 12,

"advanced" : 10,

"needTime" : 1,

"needYield" : 0,

"saveState" : 0,

"restoreState" : 0,

"isEOF" : 1,

"invalidates" : 0,

"direction" : "forward",

"docsExamined" : 10

},

"ts" : ISODate("2022-11-01T07:23:47.610Z"),

"client" : "127.0.0.1",

"appName" : "MongoDB Shell",

"allUsers" : [ ],

"user" : ""

}

{

"op" : "query",

"ns" : "test.system.profile",

"command" : {

"find" : "system.profile",

"filter" : {

},

"lsid" : {

"id" : UUID("5ba60d17-24ea-4862-baf8-a05230abd912")

},

"$db" : "test"

},

"keysExamined" : 0,

"docsExamined" : 1,

"cursorExhausted" : true,

"numYield" : 0,

"nreturned" : 1,

"locks" : {

"Global" : {

"acquireCount" : {

"r" : NumberLong(1)

}

},

"Database" : {

"acquireCount" : {

"r" : NumberLong(1)

}

},

"Collection" : {

"acquireCount" : {

"r" : NumberLong(1)

}

}

},

"responseLength" : 886,

"protocol" : "op_msg",

"millis" : 0,

"planSummary" : "COLLSCAN",

"execStats" : {

"stage" : "COLLSCAN",

"nReturned" : 1,

"executionTimeMillisEstimate" : 0,

"works" : 3,

"advanced" : 1,

"needTime" : 1,

"needYield" : 0,

"saveState" : 0,

"restoreState" : 0,

"isEOF" : 1,

"invalidates" : 0,

"direction" : "forward",

"docsExamined" : 1

},

"ts" : ISODate("2022-11-01T07:23:53.686Z"),

"client" : "127.0.0.1",

"appName" : "MongoDB Shell",

"allUsers" : [ ],

"user" : ""

}
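Each system.profile document carries planSummary, docsExamined, nreturned and millis. Together these are enough for a first triage. A COLLSCAN plan, or many documents examined per document returned, usually points at a missing index. The helper and its threshold of 10 are hypothetical choices. The third sample entry is invented, to show what an indexed query on status might look like. In the mongo shell the entries could be fetched with db.system.profile.find({ ns: "test.inventory" }).toArray().

```javascript
// Hypothetical triage helper over documents read from system.profile.
function flagSuspiciousOps(entries, maxExaminedPerReturned = 10) {
  const flagged = [];
  for (const e of entries) {
    const reasons = [];
    if (e.planSummary === "COLLSCAN") reasons.push("full collection scan");
    const examined = e.docsExamined ?? 0;
    const returned = Math.max(e.nreturned ?? 0, 1); // avoid dividing by zero
    if (examined / returned > maxExaminedPerReturned) {
      reasons.push(`examined ${examined} docs for ${e.nreturned ?? 0} returned`);
    }
    if (reasons.length > 0) flagged.push({ ns: e.ns, millis: e.millis, reasons });
  }
  return flagged;
}

const profileSample = [
  // the two entries shown above
  { op: "query", ns: "test.inventory", planSummary: "COLLSCAN", docsExamined: 10, nreturned: 10, millis: 0 },
  { op: "query", ns: "test.system.profile", planSummary: "COLLSCAN", docsExamined: 1, nreturned: 1, millis: 0 },
  // hypothetical entry: the same kind of query once an index on status exists
  { op: "query", ns: "test.inventory", planSummary: "IXSCAN { status: 1 }", docsExamined: 6, nreturned: 6, millis: 0 },
];

console.log(flagSuspiciousOps(profileSample));
```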

6. Evaluating a query (explain)

show tables;

db.inventory.find()

db.inventory.find({"status" : "A"})

db.inventory.find({"status" : "A"}).explain()

> show tables;

audit100

inventory

system.profile

table111111

>

> db.inventory.find()

{ "_id" : ObjectId("635f77ebd5ce5b23c39cae40"), "item" : "journal", "qty" : 25, "size" : { "h" : 14, "w" : 21, "uom" : "cm" }, "status" : "A" }

{ "_id" : ObjectId("635f77ebd5ce5b23c39cae41"), "item" : "notebook", "qty" : 50, "size" : { "h" : 8.5, "w" : 11, "uom" : "in" }, "status" : "A" }

{ "_id" : ObjectId("635f77ebd5ce5b23c39cae42"), "item" : "paper", "qty" : 100, "size" : { "h" : 8.5, "w" : 11, "uom" : "in" }, "status" : "D" }

{ "_id" : ObjectId("635f77ebd5ce5b23c39cae43"), "item" : "planner", "qty" : 75, "size" : { "h" : 22.85, "w" : 30, "uom" : "cm" }, "status" : "D" }

{ "_id" : ObjectId("635f77ebd5ce5b23c39cae44"), "item" : "postcard", "qty" : 45, "size" : { "h" : 10, "w" : 15.25, "uom" : "cm" }, "status" : "A" }

{ "_id" : ObjectId("635fa69cd5ce5b23c39cae45"), "item" : "journal", "qty" : 25, "size" : { "h" : 14, "w" : 21, "uom" : "cm" }, "status" : "A" }

{ "_id" : ObjectId("635fa69cd5ce5b23c39cae46"), "item" : "notebook", "qty" : 50, "size" : { "h" : 8.5, "w" : 11, "uom" : "in" }, "status" : "A" }

{ "_id" : ObjectId("635fa69cd5ce5b23c39cae47"), "item" : "paper", "qty" : 100, "size" : { "h" : 8.5, "w" : 11, "uom" : "in" }, "status" : "D" }

{ "_id" : ObjectId("635fa69cd5ce5b23c39cae48"), "item" : "planner", "qty" : 75, "size" : { "h" : 22.85, "w" : 30, "uom" : "cm" }, "status" : "D" }

{ "_id" : ObjectId("635fa69cd5ce5b23c39cae49"), "item" : "postcard", "qty" : 45, "size" : { "h" : 10, "w" : 15.25, "uom" : "cm" }, "status" : "A" }

>

> db.inventory.find({"status" : "A"})

{ "_id" : ObjectId("635f77ebd5ce5b23c39cae40"), "item" : "journal", "qty" : 25, "size" : { "h" : 14, "w" : 21, "uom" : "cm" }, "status" : "A" }

{ "_id" : ObjectId("635f77ebd5ce5b23c39cae41"), "item" : "notebook", "qty" : 50, "size" : { "h" : 8.5, "w" : 11, "uom" : "in" }, "status" : "A" }

{ "_id" : ObjectId("635f77ebd5ce5b23c39cae44"), "item" : "postcard", "qty" : 45, "size" : { "h" : 10, "w" : 15.25, "uom" : "cm" }, "status" : "A" }

{ "_id" : ObjectId("635fa69cd5ce5b23c39cae45"), "item" : "journal", "qty" : 25, "size" : { "h" : 14, "w" : 21, "uom" : "cm" }, "status" : "A" }

{ "_id" : ObjectId("635fa69cd5ce5b23c39cae46"), "item" : "notebook", "qty" : 50, "size" : { "h" : 8.5, "w" : 11, "uom" : "in" }, "status" : "A" }

{ "_id" : ObjectId("635fa69cd5ce5b23c39cae49"), "item" : "postcard", "qty" : 45, "size" : { "h" : 10, "w" : 15.25, "uom" : "cm" }, "status" : "A" }

>

> db.inventory.find({"status" : "A"}).explain()

{

"queryPlanner" : {

"plannerVersion" : 1,

"namespace" : "test.inventory",

"indexFilterSet" : false,

"parsedQuery" : {

"status" : {

"$eq" : "A"

}

},

"winningPlan" : {

"stage" : "COLLSCAN",

"filter" : {

"status" : {

"$eq" : "A"

}

},

"direction" : "forward"

},

"rejectedPlans" : [ ]

},

"serverInfo" : {

"host" : "10-21-0-1",

"port" : 27017,

"version" : "4.0.28",

"gitVersion" : "af1a9dc12adcfa83cc19571cb3faba26eeddac92"

},

"ok" : 1

}
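In the explain() output above, the winningPlan is a single COLLSCAN stage. The query on status therefore reads every document in the collection. Plans nest through inputStage (one child) or inputStages (several children), so a short tree walk lists every stage.

After creating an index in the mongo shell with db.inventory.createIndex({ status: 1 }), the same explain() would typically show a FETCH stage over an IXSCAN. The indexed plan in the sketch is a hand-written assumption of that shape, not captured output.

```javascript
// Collect the stage names of an explain() winningPlan, depth first.
function planStages(plan) {
  const stages = [];
  (function walk(node) {
    if (!node) return;
    stages.push(node.stage);
    walk(node.inputStage);                  // single child, e.g. FETCH -> IXSCAN
    (node.inputStages || []).forEach(walk); // several children, e.g. OR
  })(plan);
  return stages;
}

// winningPlan copied from the explain() output above
const collscanPlan = { stage: "COLLSCAN", filter: { status: { $eq: "A" } }, direction: "forward" };
// assumed shape of the plan after db.inventory.createIndex({ status: 1 })
const indexedPlan = {
  stage: "FETCH",
  inputStage: { stage: "IXSCAN", keyPattern: { status: 1 }, indexName: "status_1" },
};

console.log(planStages(collscanPlan)); // [ 'COLLSCAN' ]
console.log(planStages(indexedPlan));  // [ 'FETCH', 'IXSCAN' ]
```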

7. The top diagnostic command

use admin;

db.runCommand( {top : 1} );

> use admin;

> db.runCommand( {top : 1} );

{

"totals" : {

"note" : "all times in microseconds",

"admin.$cmd.aggregate" : {

"total" : {

"time" : 83,

"count" : 1

},

"readLock" : {

"time" : 0,

"count" : 0

},

"writeLock" : {

"time" : 0,

"count" : 0

},

"queries" : {

"time" : 0,

"count" : 0

},

"getmore" : {

"time" : 0,

"count" : 0

},

"insert" : {

"time" : 0,

"count" : 0

},

"update" : {

"time" : 0,

"count" : 0

},

"remove" : {

"time" : 0,

"count" : 0

},

"commands" : {

"time" : 83,

"count" : 1

}

},

"admin.config.system.sessions" : {

"total" : {

"time" : 51,

"count" : 1

},

"readLock" : {

"time" : 51,

"count" : 1

},

"writeLock" : {

"time" : 0,

"count" : 0

},

"queries" : {

"time" : 51,

"count" : 1

},

"getmore" : {

"time" : 0,

"count" : 0

},

"insert" : {

"time" : 0,

"count" : 0

},

"update" : {

"time" : 0,

"count" : 0

},

"remove" : {

"time" : 0,

"count" : 0

},

"commands" : {

"time" : 0,

"count" : 0

}

},

"admin.system.roles" : {

"total" : {

"time" : 58,

"count" : 1

},

"readLock" : {

"time" : 58,

"count" : 1

},

"writeLock" : {

"time" : 0,

"count" : 0

},

"queries" : {

"time" : 58,

"count" : 1

},

"getmore" : {

"time" : 0,

"count" : 0

},

"insert" : {

"time" : 0,

"count" : 0

},

"update" : {

"time" : 0,

"count" : 0

},

"remove" : {

"time" : 0,

"count" : 0

},

"commands" : {

"time" : 0,

"count" : 0

}

},

"admin.system.users" : {

"total" : {

"time" : 253,

"count" : 1

},

"readLock" : {

"time" : 253,

"count" : 1

},

"writeLock" : {

"time" : 0,

"count" : 0

},

"queries" : {

"time" : 0,

"count" : 0

},

"getmore" : {

"time" : 0,

"count" : 0

},

"insert" : {

"time" : 0,

"count" : 0

},

"update" : {

"time" : 0,

"count" : 0

},

"remove" : {

"time" : 0,

"count" : 0

},

"commands" : {

"time" : 253,

"count" : 1

}

},

"admin.system.version" : {

"total" : {

"time" : 94,

"count" : 2

},

"readLock" : {

"time" : 88,

"count" : 1

},

"writeLock" : {

"time" : 6,

"count" : 1

},

"queries" : {

"time" : 0,

"count" : 0

},

"getmore" : {

"time" : 0,

"count" : 0

},

"insert" : {

"time" : 0,

"count" : 0

},

"update" : {

"time" : 0,

"count" : 0

},

"remove" : {

"time" : 0,

"count" : 0

},

"commands" : {

"time" : 0,

"count" : 0

}

},

"config.system.sessions" : {

"total" : {

"time" : 156459,

"count" : 2879

},

"readLock" : {

"time" : 147050,

"count" : 2812

},

"writeLock" : {

"time" : 9409,

"count" : 67

},

"queries" : {

"time" : 0,

"count" : 0

},

"getmore" : {

"time" : 0,

"count" : 0

},

"insert" : {

"time" : 0,

"count" : 0

},

"update" : {

"time" : 9329,

"count" : 63

},

"remove" : {

"time" : 78,

"count" : 3

},

"commands" : {

"time" : 147050,

"count" : 2812

}

},

"config.transactions" : {

"total" : {

"time" : 48100,

"count" : 1406

},

"readLock" : {

"time" : 48100,

"count" : 1406

},

"writeLock" : {

"time" : 0,

"count" : 0

},

"queries" : {

"time" : 48100,

"count" : 1406

},

"getmore" : {

"time" : 0,

"count" : 0

},

"insert" : {

"time" : 0,

"count" : 0

},

"update" : {

"time" : 0,

"count" : 0

},

"remove" : {

"time" : 0,

"count" : 0

},

"commands" : {

"time" : 0,

"count" : 0

}

},

"hjs.inventory" : {

"total" : {

"time" : 12932,

"count" : 1

},

"readLock" : {

"time" : 0,

"count" : 0

},

"writeLock" : {

"time" : 12932,

"count" : 1

},

"queries" : {

"time" : 0,

"count" : 0

},

"getmore" : {

"time" : 0,

"count" : 0

},

"insert" : {

"time" : 12932,

"count" : 1

},

"update" : {

"time" : 0,

"count" : 0

},

"remove" : {

"time" : 0,

"count" : 0

},

"commands" : {

"time" : 0,

"count" : 0

}

},

"local.oplog.rs" : {

"total" : {

"time" : 647381,

"count" : 421693

},

"readLock" : {

"time" : 647381,

"count" : 421693

},

"writeLock" : {

"time" : 0,

"count" : 0

},

"queries" : {

"time" : 0,

"count" : 0

},

"getmore" : {

"time" : 0,

"count" : 0

},

"insert" : {

"time" : 0,

"count" : 0

},

"update" : {

"time" : 0,

"count" : 0

},

"remove" : {

"time" : 0,

"count" : 0

},

"commands" : {

"time" : 68,

"count" : 1

}

},

"local.startup_log" : {

"total" : {

"time" : 0,

"count" : 1

},

"readLock" : {

"time" : 0,

"count" : 0

},

"writeLock" : {

"time" : 0,

"count" : 1

},

"queries" : {

"time" : 0,

"count" : 0

},

"getmore" : {

"time" : 0,

"count" : 0

},

"insert" : {

"time" : 0,

"count" : 0

},

"update" : {

"time" : 0,

"count" : 0

},

"remove" : {

"time" : 0,

"count" : 0

},

"commands" : {

"time" : 0,

"count" : 0

}

},

"local.system.replset" : {

"total" : {

"time" : 176,

"count" : 2

},

"readLock" : {

"time" : 176,

"count" : 2

},

"writeLock" : {

"time" : 0,

"count" : 0

},

"queries" : {

"time" : 0,

"count" : 0

},

"getmore" : {

"time" : 0,

"count" : 0

},

"insert" : {

"time" : 0,

"count" : 0

},

"update" : {

"time" : 0,

"count" : 0

},

"remove" : {

"time" : 0,

"count" : 0

},

"commands" : {

"time" : 0,

"count" : 0

}

},

"test.audit100" : {

"total" : {

"time" : 13883,

"count" : 7

},

"readLock" : {

"time" : 1172,

"count" : 6

},

"writeLock" : {

"time" : 12711,

"count" : 1

},

"queries" : {

"time" : 60,

"count" : 2

},

"getmore" : {

"time" : 0,

"count" : 0

},

"insert" : {

"time" : 0,

"count" : 0

},

"update" : {

"time" : 0,

"count" : 0

},

"remove" : {

"time" : 0,

"count" : 0

},

"commands" : {

"time" : 13823,

"count" : 5

}

},

"test.inventory" : {

"total" : {

"time" : 28375,

"count" : 63

},

"readLock" : {

"time" : 9878,

"count" : 61

},

"writeLock" : {

"time" : 18497,

"count" : 2

},

"queries" : {

"time" : 8456,

"count" : 53

},

"getmore" : {

"time" : 0,

"count" : 0

},

"insert" : {

"time" : 18497,

"count" : 2

},

"update" : {

"time" : 0,

"count" : 0

},

"remove" : {

"time" : 0,

"count" : 0

},

"commands" : {

"time" : 1422,

"count" : 8

}

},

"test.system.profile" : {

"total" : {

"time" : 202,

"count" : 2

},

"readLock" : {

"time" : 202,

"count" : 2

},

"writeLock" : {

"time" : 0,

"count" : 0

},

"queries" : {

"time" : 202,

"count" : 2

},

"getmore" : {

"time" : 0,

"count" : 0

},

"insert" : {

"time" : 0,

"count" : 0

},

"update" : {

"time" : 0,

"count" : 0

},

"remove" : {

"time" : 0,

"count" : 0

},

"commands" : {

"time" : 0,

"count" : 0

}

},

"test.tblbooks" : {

"total" : {

"time" : 59,

"count" : 1

},

"readLock" : {

"time" : 59,

"count" : 1

},

"writeLock" : {

"time" : 0,

"count" : 0

},

"queries" : {

"time" : 0,

"count" : 0

},

"getmore" : {

"time" : 0,

"count" : 0

},

"insert" : {

"time" : 0,

"count" : 0

},

"update" : {

"time" : 0,

"count" : 0

},

"remove" : {

"time" : 0,

"count" : 0

},

"commands" : {

"time" : 59,

"count" : 1

}

},

"test.tblorders" : {

"total" : {

"time" : 37,

"count" : 1

},

"readLock" : {

"time" : 37,

"count" : 1

},

"writeLock" : {

"time" : 0,

"count" : 0

},

"queries" : {

"time" : 0,

"count" : 0

},

"getmore" : {

"time" : 0,

"count" : 0

},

"insert" : {

"time" : 0,

"count" : 0

},

"update" : {

"time" : 0,

"count" : 0

},

"remove" : {

"time" : 0,

"count" : 0

},

"commands" : {

"time" : 37,

"count" : 1

}

},

"test.test" : {

"total" : {

"time" : 27,

"count" : 1

},

"readLock" : {

"time" : 27,

"count" : 1

},

"writeLock" : {

"time" : 0,

"count" : 0

},

"queries" : {

"time" : 0,

"count" : 0

},

"getmore" : {

"time" : 0,

"count" : 0

},

"insert" : {

"time" : 0,

"count" : 0

},

"update" : {

"time" : 0,

"count" : 0

},

"remove" : {

"time" : 0,

"count" : 0

},

"commands" : {

"time" : 27,

"count" : 1

}

},

"test.user_info" : {

"total" : {

"time" : 104,

"count" : 2

},

"readLock" : {

"time" : 104,

"count" : 2

},

"writeLock" : {

"time" : 0,

"count" : 0

},

"queries" : {

"time" : 104,

"count" : 2

},

"getmore" : {

"time" : 0,

"count" : 0

},

"insert" : {

"time" : 0,

"count" : 0

},

"update" : {

"time" : 0,

"count" : 0

},

"remove" : {

"time" : 0,

"count" : 0

},

"commands" : {

"time" : 0,

"count" : 0

}

}

},

"ok" : 1

}
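The top output is long, but the useful comparison is simple. Per namespace, take total.time (microseconds, per the "note" field) and total.count, then rank by total time and compute the average per operation. These counters are cumulative since the server started. The helper below is hypothetical and works on a subset of the namespaces copied from the output above. It skips the "note" entry, which is a string rather than a namespace.

```javascript
// Hypothetical helper: rank namespaces from db.runCommand({top: 1}) by total time.
function rankTop(topResult, limit = 3) {
  return Object.entries(topResult.totals)
    .filter(([ns]) => ns !== "note") // "note" is a text field, not a namespace
    .map(([ns, s]) => ({
      ns,
      totalMicros: s.total.time,
      count: s.total.count,
      avgMicros: s.total.count > 0 ? s.total.time / s.total.count : 0,
    }))
    .sort((a, b) => b.totalMicros - a.totalMicros)
    .slice(0, limit);
}

// Subset of the output above (only the "total" sub-document of each namespace).
const topSample = {
  totals: {
    note: "all times in microseconds",
    "config.system.sessions": { total: { time: 156459, count: 2879 } },
    "config.transactions": { total: { time: 48100, count: 1406 } },
    "local.oplog.rs": { total: { time: 647381, count: 421693 } },
    "test.inventory": { total: { time: 28375, count: 63 } },
  },
};

console.log(rankTop(topSample));
```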

8. Server status

db.serverStatus()
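One quick use of db.serverStatus() is the opLatencies section in the output below. For reads, writes, commands and transactions it reports a cumulative latency total in microseconds and an ops count, so dividing one by the other gives a rough average per operation since startup. The helper below is a hypothetical sketch in plain Node.js, with the values copied from that section. In the mongo shell the NumberLong values may need converting to plain numbers first.

```javascript
// Hypothetical helper: average latency (microseconds per op) from serverStatus().opLatencies.
function averageLatencies(opLatencies) {
  const averages = {};
  for (const [kind, { latency, ops }] of Object.entries(opLatencies)) {
    averages[kind] = ops > 0 ? latency / ops : 0; // no ops -> report 0 instead of NaN
  }
  return averages;
}

// Values from the opLatencies section of the serverStatus output below.
const opLatencies = {
  reads: { latency: 22232, ops: 160 },
  writes: { latency: 210509, ops: 43 },
  commands: { latency: 204560, ops: 971 },
  transactions: { latency: 0, ops: 0 },
};

console.log(averageLatencies(opLatencies));
```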

> db.serverStatus()

{

"host" : "10-21-0-1",

"version" : "4.0.28",

"process" : "mongod",

"pid" : NumberLong(32532),

"uptime" : 422287,

"uptimeMillis" : NumberLong(422287223),

"uptimeEstimate" : NumberLong(422287),

"localTime" : ISODate("2022-11-01T08:15:03.631Z"),

"asserts" : {

"regular" : 0,

"warning" : 0,

"msg" : 0,

"user" : 16,

"rollovers" : 0

},

"connections" : {

"current" : 2,

"available" : 52426,

"totalCreated" : 12,

"active" : 1

},

"electionMetrics" : {

"stepUpCmd" : {

"called" : NumberLong(0),

"successful" : NumberLong(0)

},

"priorityTakeover" : {

"called" : NumberLong(0),

"successful" : NumberLong(0)

},

"catchUpTakeover" : {

"called" : NumberLong(0),

"successful" : NumberLong(0)

},

"electionTimeout" : {

"called" : NumberLong(0),

"successful" : NumberLong(0)

},

"freezeTimeout" : {

"called" : NumberLong(0),

"successful" : NumberLong(0)

},

"numStepDownsCausedByHigherTerm" : NumberLong(0),

"numCatchUps" : NumberLong(0),

"numCatchUpsSucceeded" : NumberLong(0),

"numCatchUpsAlreadyCaughtUp" : NumberLong(0),

"numCatchUpsSkipped" : NumberLong(0),

"numCatchUpsTimedOut" : NumberLong(0),

"numCatchUpsFailedWithError" : NumberLong(0),

"numCatchUpsFailedWithNewTerm" : NumberLong(0),

"numCatchUpsFailedWithReplSetAbortPrimaryCatchUpCmd" : NumberLong(0),

"averageCatchUpOps" : 0

},

"extra_info" : {

"note" : "fields vary by platform",

"page_faults" : 5

},

"globalLock" : {

"totalTime" : NumberLong("422287222000"),

"currentQueue" : {

"total" : 0,

"readers" : 0,

"writers" : 0

},

"activeClients" : {

"total" : 13,

"readers" : 0,

"writers" : 0

}

},

"locks" : {

"Global" : {

"acquireCount" : {

"r" : NumberLong(2020636),

"w" : NumberLong(7242),

"W" : NumberLong(8)

}

},

"Database" : {

"acquireCount" : {

"r" : NumberLong(1008874),

"w" : NumberLong(7185),

"R" : NumberLong(3),

"W" : NumberLong(57)

}

},

"Collection" : {

"acquireCount" : {

"r" : NumberLong(586389),

"w" : NumberLong(7173),

"W" : NumberLong(13)

}

},

"Metadata" : {

"acquireCount" : {

"W" : NumberLong(9)

}

},

"oplog" : {

"acquireCount" : {

"r" : NumberLong(422288)

}

}

},

"logicalSessionRecordCache" : {

"activeSessionsCount" : 1,

"sessionsCollectionJobCount" : 1408,

"lastSessionsCollectionJobDurationMillis" : 0,

"lastSessionsCollectionJobTimestamp" : ISODate("2022-11-01T08:11:57.340Z"),

"lastSessionsCollectionJobEntriesRefreshed" : 0,

"lastSessionsCollectionJobEntriesEnded" : 0,

"lastSessionsCollectionJobCursorsClosed" : 0,

"transactionReaperJobCount" : 1408,

"lastTransactionReaperJobDurationMillis" : 0,

"lastTransactionReaperJobTimestamp" : ISODate("2022-11-01T08:11:57.345Z"),

"lastTransactionReaperJobEntriesCleanedUp" : 0,

"sessionCatalogSize" : 0

},

"network" : {

"bytesIn" : NumberLong(128405),

"bytesOut" : NumberLong(432482),

"physicalBytesIn" : NumberLong(128505),

"physicalBytesOut" : NumberLong(431396),

"numRequests" : NumberLong(1175),

"compression" : {

"snappy" : {

"compressor" : {

"bytesIn" : NumberLong(5013),

"bytesOut" : NumberLong(3757)

},

"decompressor" : {

"bytesIn" : NumberLong(3757),

"bytesOut" : NumberLong(5013)

}

}

},

"serviceExecutorTaskStats" : {

"executor" : "passthrough",

"threadsRunning" : 2

}

},

"opLatencies" : {

"reads" : {

"latency" : NumberLong(22232),

"ops" : NumberLong(160)

},

"writes" : {

"latency" : NumberLong(210509),

"ops" : NumberLong(43)

},

"commands" : {

"latency" : NumberLong(204560),

"ops" : NumberLong(971)

},

"transactions" : {

"latency" : NumberLong(0),

"ops" : NumberLong(0)

}

},

"opReadConcernCounters" : {

"available" : NumberLong(0),

"linearizable" : NumberLong(0),

"local" : NumberLong(0),

"majority" : NumberLong(0),

"snapshot" : NumberLong(0),

"none" : NumberLong(1567)

},

"opcounters" : {

"insert" : 106,

"query" : 1567,

"update" : 69,

"delete" : 3,

"getmore" : 0,

"command" : 3787,

"deprecated" : {

"total" : 4,

"insert" : 0,

"query" : 4,

"update" : 0,

"delete" : 0,

"getmore" : 0,

"killcursors" : 0

}

},

"opcountersRepl" : {

"insert" : 0,

"query" : 0,

"update" : 0,

"delete" : 0,

"getmore" : 0,

"command" : 0

},

"storageEngine" : {

"name" : "wiredTiger",

"supportsCommittedReads" : true,

"supportsSnapshotReadConcern" : true,

"readOnly" : false,

"persistent" : true

},

"tcmalloc" : {

"generic" : {

"current_allocated_bytes" : 75239720,

"heap_size" : 89677824

},

"tcmalloc" : {

"pageheap_free_bytes" : 3813376,

"pageheap_unmapped_bytes" : 7467008,

"max_total_thread_cache_bytes" : 240123904,

"current_total_thread_cache_bytes" : 1275360,

"total_free_bytes" : 3157720,

"central_cache_free_bytes" : 203384,

"transfer_cache_free_bytes" : 1678976,

"thread_cache_free_bytes" : 1275360,

"aggressive_memory_decommit" : 0,

"pageheap_committed_bytes" : 82210816,

"pageheap_scavenge_count" : 346,

"pageheap_commit_count" : 1301,

"pageheap_total_commit_bytes" : 318521344,

"pageheap_decommit_count" : 346,

"pageheap_total_decommit_bytes" : 236310528,

"pageheap_reserve_count" : 53,

"pageheap_total_reserve_bytes" : 89677824,

"spinlock_total_delay_ns" : 0,

"release_rate" : 1,

"formattedString" : "------------------------------------------------\nMALLOC: 75240296 ( 71.8 MiB) Bytes in use by application\nMALLOC: + 3813376 ( 3.6 MiB) Bytes in page heap freelist\nMALLOC: + 203384 ( 0.2 MiB) Bytes in central cache freelist\nMALLOC: + 1678976 ( 1.6 MiB) Bytes in transfer cache freelist\nMALLOC: + 1274784 ( 1.2 MiB) Bytes in thread cache freelists\nMALLOC: + 1335552 ( 1.3 MiB) Bytes in malloc metadata\nMALLOC: ------------\nMALLOC: = 83546368 ( 79.7 MiB) Actual memory used (physical + swap)\nMALLOC: + 7467008 ( 7.1 MiB) Bytes released to OS (aka unmapped)\nMALLOC: ------------\nMALLOC: = 91013376 ( 86.8 MiB) Virtual address space used\nMALLOC:\nMALLOC: 936 Spans in use\nMALLOC: 22 Thread heaps in use\nMALLOC: 4096 Tcmalloc page size\n------------------------------------------------\nCall ReleaseFreeMemory() to release freelist memory to the OS (via madvise()).\nBytes released to the OS take up virtual address space but no physical memory.\n"

}

},

"transactions" : {

"retriedCommandsCount" : NumberLong(0),

"retriedStatementsCount" : NumberLong(0),

"transactionsCollectionWriteCount" : NumberLong(0),

"currentActive" : NumberLong(0),

"currentInactive" : NumberLong(0),

"currentOpen" : NumberLong(0),

"totalAborted" : NumberLong(0),

"totalCommitted" : NumberLong(0),

"totalStarted" : NumberLong(0)

},

"wiredTiger" : {

"uri" : "statistics:",

"LSM" : {

"application work units currently queued" : 0,

"merge work units currently queued" : 0,

"rows merged in an LSM tree" : 0,

"sleep for LSM checkpoint throttle" : 0,

"sleep for LSM merge throttle" : 0,

"switch work units currently queued" : 0,

"tree maintenance operations discarded" : 0,

"tree maintenance operations executed" : 0,

"tree maintenance operations scheduled" : 0,

"tree queue hit maximum" : 0

},

"async" : {

"current work queue length" : 0,

"maximum work queue length" : 0,

"number of allocation state races" : 0,

"number of flush calls" : 0,

"number of operation slots viewed for allocation" : 0,

"number of times operation allocation failed" : 0,

"number of times worker found no work" : 0,

"total allocations" : 0,

"total compact calls" : 0,

"total insert calls" : 0,

"total remove calls" : 0,

"total search calls" : 0,

"total update calls" : 0

},

"block-manager" : {

"blocks pre-loaded" : 13,

"blocks read" : 620,

"blocks written" : 2371,

"bytes read" : 2551808,

"bytes written" : 14434304,

"bytes written for checkpoint" : 14434304,

"mapped blocks read" : 0,

"mapped bytes read" : 0

},

"cache" : {

"application threads page read from disk to cache count" : 18,

"application threads page read from disk to cache time (usecs)" : 51,

"application threads page write from cache to disk count" : 1190,

"application threads page write from cache to disk time (usecs)" : 35225,

"bytes belonging to page images in the cache" : 23112,

"bytes belonging to the cache overflow table in the cache" : 182,

"bytes currently in the cache" : 196770,

"bytes dirty in the cache cumulative" : 7281261,

"bytes not belonging to page images in the cache" : 173658,

"bytes read into cache" : 3943203193,

"bytes written from cache" : 5660545,

"cache overflow cursor application thread wait time (usecs)" : 0,

"cache overflow cursor internal thread wait time (usecs)" : 0,

"cache overflow score" : 0,

"cache overflow table entries" : 0,

"cache overflow table insert calls" : 0,

"cache overflow table max on-disk size" : 0,

"cache overflow table on-disk size" : 0,

"cache overflow table remove calls" : 0,

"checkpoint blocked page eviction" : 0,

"eviction calls to get a page" : 22,

"eviction calls to get a page found queue empty" : 16,

"eviction calls to get a page found queue empty after locking" : 0,

"eviction currently operating in aggressive mode" : 0,

"eviction empty score" : 0,

"eviction passes of a file" : 0,

"eviction server candidate queue empty when topping up" : 0,

"eviction server candidate queue not empty when topping up" : 0,

"eviction server evicting pages" : 0,

"eviction server slept, because we did not make progress with eviction" : 1083,

"eviction server unable to reach eviction goal" : 0,

"eviction server waiting for a leaf page" : 10,

"eviction server waiting for an internal page sleep (usec)" : 0,

"eviction server waiting for an internal page yields" : 0,

"eviction state" : 128,

"eviction walk target pages histogram - 0-9" : 0,

"eviction walk target pages histogram - 10-31" : 0,

"eviction walk target pages histogram - 128 and higher" : 0,

"eviction walk target pages histogram - 32-63" : 0,

"eviction walk target pages histogram - 64-128" : 0,

"eviction walk target strategy both clean and dirty pages" : 0,

"eviction walk target strategy only clean pages" : 0,

"eviction walk target strategy only dirty pages" : 0,

"eviction walks abandoned" : 0,

"eviction walks gave up because they restarted their walk twice" : 0,

"eviction walks gave up because they saw too many pages and found no candidates" : 0,

"eviction walks gave up because they saw too many pages and found too few candidates" : 0,

"eviction walks reached end of tree" : 0,

"eviction walks started from root of tree" : 0,

"eviction walks started from saved location in tree" : 0,

"eviction worker thread active" : 4,

"eviction worker thread created" : 0,

"eviction worker thread evicting pages" : 6,

"eviction worker thread removed" : 0,

"eviction worker thread stable number" : 0,

"files with active eviction walks" : 0,

"files with new eviction walks started" : 0,

"force re-tuning of eviction workers once in a while" : 0,

"forced eviction - pages evicted that were clean count" : 0,

"forced eviction - pages evicted that were clean time (usecs)" : 0,

"forced eviction - pages evicted that were dirty count" : 0,

"forced eviction - pages evicted that were dirty time (usecs)" : 0,

"forced eviction - pages selected because of too many deleted items count" : 6,

"forced eviction - pages selected count" : 0,

"forced eviction - pages selected unable to be evicted count" : 0,

"forced eviction - pages selected unable to be evicted time" : 0,

"hazard pointer blocked page eviction" : 0,

"hazard pointer check calls" : 6,

"hazard pointer check entries walked" : 0,

"hazard pointer maximum array length" : 0,

"in-memory page passed criteria to be split" : 0,

"in-memory page splits" : 0,

"internal pages evicted" : 0,

"internal pages queued for eviction" : 0,

"internal pages seen by eviction walk" : 0,

"internal pages seen by eviction walk that are already queued" : 0,

"internal pages split during eviction" : 0,

"leaf pages split during eviction" : 0,

"maximum bytes configured" : 536870912,

"maximum page size at eviction" : 0,

"modified pages evicted" : 12,

"modified pages evicted by application threads" : 0,

"operations timed out waiting for space in cache" : 0,

"overflow pages read into cache" : 0,

"page split during eviction deepened the tree" : 0,

"page written requiring cache overflow records" : 0,

"pages currently held in the cache" : 44,

"pages evicted by application threads" : 0,

"pages queued for eviction" : 0,

"pages queued for eviction post lru sorting" : 0,

"pages queued for urgent eviction" : 6,

"pages queued for urgent eviction during walk" : 0,

"pages read into cache" : 38,

"pages read into cache after truncate" : 51,