Kubernetes Learning Path (2): Binary Deployment of an etcd Cluster

etcd Cluster Deployment

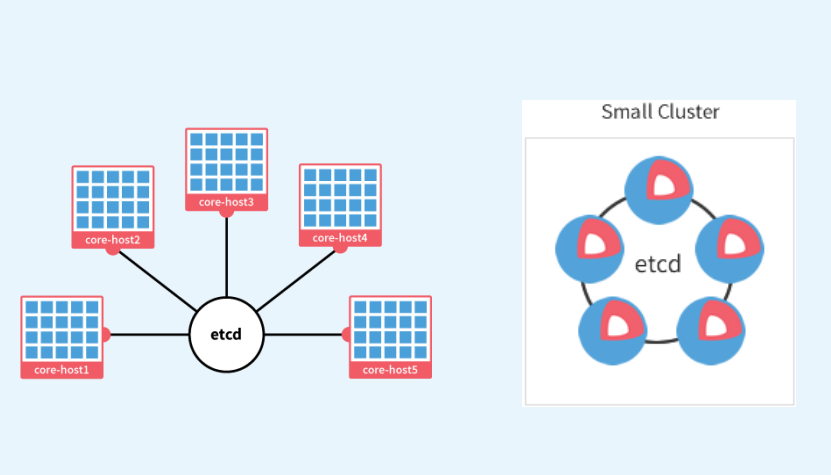

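All persistent state is stored in etcd as key-value pairs. Like ZooKeeper, etcd provides distributed coordination services. The Kubernetes components can be called stateless precisely because they keep their data in etcd. Since etcd supports clustering, it is deployed here on all three hosts.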

(1) Prepare the etcd package

    [root@linux-node1 src]# wget https://github.com/coreos/etcd/releases/download/v3.2.18/etcd-v3.2.18-linux-amd64.tar.gz
    [root@linux-node1 src]# tar zxf etcd-v3.2.18-linux-amd64.tar.gz    # unpack etcd
    [root@linux-node1 src]# cd etcd-v3.2.18-linux-amd64    # two binaries are needed; etcdctl is the command-line client for etcd
    [root@linux-node1 etcd-v3.2.18-linux-amd64]# cp etcd etcdctl /opt/kubernetes/bin/
    [root@linux-node1 etcd-v3.2.18-linux-amd64]# scp etcd etcdctl 192.168.56.120:/opt/kubernetes/bin/
    [root@linux-node1 etcd-v3.2.18-linux-amd64]# scp etcd etcdctl 192.168.56.130:/opt/kubernetes/bin/
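The same pair of scp commands recurs in later steps for certificates and configuration files. As a minimal sketch, the copy could be wrapped in a small loop. The function name `push_to_nodes` and the `DRY_RUN` switch are hypothetical conveniences, not part of the original procedure; the node addresses are the ones used above.

```shell
#!/usr/bin/env bash
# Hypothetical helper: copy files to the other two etcd nodes.
# Set DRY_RUN=1 to print the scp commands instead of running them.
NODES=(192.168.56.120 192.168.56.130)

push_to_nodes() {
    local dest=$1; shift        # remote directory, e.g. /opt/kubernetes/bin/
    local node
    for node in "${NODES[@]}"; do
        if [ -n "${DRY_RUN:-}" ]; then
            echo scp "$@" "${node}:${dest}"
        else
            scp "$@" "${node}:${dest}"
        fi
    done
}

# Example: DRY_RUN=1 push_to_nodes /opt/kubernetes/bin/ etcd etcdctl
```

Running it with `DRY_RUN=1` first makes it easy to confirm the target paths before anything is copied.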

(2) Create the etcd certificate signing request

The hosts list must contain the IP address of every node in the etcd cluster. JSON does not allow comments, so keep the file free of them.

    [root@linux-node1 ~]# cd /usr/local/src/ssl
    [root@linux-node1 ssl]# vim etcd-csr.json
    {
      "CN": "etcd",
      "hosts": [
        "127.0.0.1",
        "192.168.56.110",
        "192.168.56.120",
        "192.168.56.130"
      ],
      "key": {
        "algo": "rsa",
        "size": 2048
      },
      "names": [
        {
          "C": "CN",
          "ST": "BeiJing",
          "L": "BeiJing",
          "O": "k8s",
          "OU": "System"
        }
      ]
    }
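If the node list changes, the CSR file has to be edited by hand. The sketch below generates the same document from a list of IP addresses; `make_etcd_csr` is a hypothetical helper name, and the fixed fields are copied from the file above.

```shell
#!/usr/bin/env bash
# Hypothetical helper: print an etcd CSR document for the given node IPs.
# 127.0.0.1 is always included, as in the hand-written file.
make_etcd_csr() {
    local hosts='    "127.0.0.1"' ip
    for ip in "$@"; do
        hosts+=$',\n    "'"$ip"'"'
    done
    cat <<EOF
{
  "CN": "etcd",
  "hosts": [
$hosts
  ],
  "key": { "algo": "rsa", "size": 2048 },
  "names": [
    { "C": "CN", "ST": "BeiJing", "L": "BeiJing", "O": "k8s", "OU": "System" }
  ]
}
EOF
}

# Example:
#   make_etcd_csr 192.168.56.110 192.168.56.120 192.168.56.130 > etcd-csr.json
```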

(3) Generate the etcd certificate and private key

Run the command from /usr/local/src/ssl, where etcd-csr.json was created.

    [root@linux-node1 ssl]# cfssl gencert -ca=/opt/kubernetes/ssl/ca.pem \
      -ca-key=/opt/kubernetes/ssl/ca-key.pem \
      -config=/opt/kubernetes/ssl/ca-config.json \
      -profile=kubernetes etcd-csr.json | cfssljson -bare etcd

The following files are generated:

    [root@linux-node1 ssl]# ls -l etcd*
    -rw-r--r-- 1 root root 1045 Mar 5 11:27 etcd.csr
    -rw-r--r-- 1 root root 257 Mar 5 11:25 etcd-csr.json
    -rw------- 1 root root 1679 Mar 5 11:27 etcd-key.pem
    -rw-r--r-- 1 root root 1419 Mar 5 11:27 etcd.pem
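Before distributing the certificate, it can be worth confirming that every node IP actually ended up in its subject alternative names, since a missing address only shows up later as a TLS error. The following is a sketch under the assumption that `openssl` is installed; `missing_sans` is a hypothetical helper that reads the text dump on stdin and prints each expected IP it cannot find.

```shell
#!/usr/bin/env bash
# Hypothetical check: read `openssl x509 -noout -text` output on stdin and
# print every expected IP that is missing from the certificate's SAN list.
missing_sans() {
    local text ip ip_re
    text=$(cat)
    for ip in "$@"; do
        # Escape the dots so they match literally in the regular expression.
        ip_re=$(printf '%s' "$ip" | sed 's/\./\\./g')
        # openssl prints IP SANs as "IP Address:<ip>", separated by ", ".
        if ! grep -Eq "IP Address:${ip_re}(,|[[:space:]]|\$)" <<<"$text"; then
            echo "$ip"
        fi
    done
}

# Example:
#   openssl x509 -in etcd.pem -noout -text |
#       missing_sans 127.0.0.1 192.168.56.110 192.168.56.120 192.168.56.130
```

No output means all the listed addresses are present.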

(4) Copy the certificates to /opt/kubernetes/ssl

    [root@linux-node1 ssl]# cp etcd*.pem /opt/kubernetes/ssl
    [root@linux-node1 ssl]# scp etcd*.pem 192.168.56.120:/opt/kubernetes/ssl
    [root@linux-node1 ssl]# scp etcd*.pem 192.168.56.130:/opt/kubernetes/ssl

(5) Write the etcd configuration file

Port 2379 serves client traffic; port 2380 serves peer (member-to-member) traffic. Comments are kept on their own lines so that they cannot end up inside a value when systemd reads the file.

    [root@linux-node1 ~]# vim /opt/kubernetes/cfg/etcd.conf
    #[member]
    # Node name; must be different on every node
    ETCD_NAME="etcd-node1"
    # etcd data directory
    ETCD_DATA_DIR="/var/lib/etcd/default.etcd"
    #ETCD_SNAPSHOT_COUNTER="10000"
    #ETCD_HEARTBEAT_INTERVAL="100"
    #ETCD_ELECTION_TIMEOUT="1000"
    # Peer listen URL; change the IP on every node
    ETCD_LISTEN_PEER_URLS="https://192.168.56.110:2380"
    # Client listen URLs; change the IP on every node
    ETCD_LISTEN_CLIENT_URLS="https://192.168.56.110:2379,https://127.0.0.1:2379"
    #ETCD_MAX_SNAPSHOTS="5"
    #ETCD_MAX_WALS="5"
    #ETCD_CORS=""
    #[cluster]
    ETCD_INITIAL_ADVERTISE_PEER_URLS="https://192.168.56.110:2380"
    # if you use different ETCD_NAME (e.g. test),
    # set ETCD_INITIAL_CLUSTER value for this name, i.e. "test=http://..."
    # All cluster members; identical on every node
    ETCD_INITIAL_CLUSTER="etcd-node1=https://192.168.56.110:2380,etcd-node2=https://192.168.56.120:2380,etcd-node3=https://192.168.56.130:2380"
    ETCD_INITIAL_CLUSTER_STATE="new"
    ETCD_INITIAL_CLUSTER_TOKEN="k8s-etcd-cluster"
    ETCD_ADVERTISE_CLIENT_URLS="https://192.168.56.110:2379"
    #[security]
    ETCD_CLIENT_CERT_AUTH="true"
    ETCD_CA_FILE="/opt/kubernetes/ssl/ca.pem"
    ETCD_CERT_FILE="/opt/kubernetes/ssl/etcd.pem"
    ETCD_KEY_FILE="/opt/kubernetes/ssl/etcd-key.pem"
    ETCD_PEER_CLIENT_CERT_AUTH="true"
    ETCD_PEER_CA_FILE="/opt/kubernetes/ssl/ca.pem"
    ETCD_PEER_CERT_FILE="/opt/kubernetes/ssl/etcd.pem"
    ETCD_PEER_KEY_FILE="/opt/kubernetes/ssl/etcd-key.pem"
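The ETCD_INITIAL_CLUSTER value is long and must be identical on every node, so a typo in one member's entry is easy to miss. As a sketch, the string can be assembled from name and IP pairs; `initial_cluster` is a hypothetical helper, and it assumes every peer uses https on port 2380 as in the file above.

```shell
#!/usr/bin/env bash
# Hypothetical helper: build the ETCD_INITIAL_CLUSTER value from name=ip pairs.
# Assumes every peer URL is https://<ip>:2380.
initial_cluster() {
    local pair out=""
    for pair in "$@"; do
        # ${pair%%=*} is the member name, ${pair#*=} is its IP address.
        out+="${out:+,}${pair%%=*}=https://${pair#*=}:2380"
    done
    printf '%s\n' "$out"
}

# Example:
#   initial_cluster etcd-node1=192.168.56.110 etcd-node2=192.168.56.120 etcd-node3=192.168.56.130
```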

(6) Create the etcd systemd service

    [root@linux-node1 ~]# vim /etc/systemd/system/etcd.service
    [Unit]
    Description=Etcd Server
    After=network.target

    [Service]
    Type=notify
    WorkingDirectory=/var/lib/etcd
    EnvironmentFile=-/opt/kubernetes/cfg/etcd.conf
    # set GOMAXPROCS to number of processors
    ExecStart=/bin/bash -c "GOMAXPROCS=$(nproc) /opt/kubernetes/bin/etcd"

    [Install]
    WantedBy=multi-user.target

(7) Reload systemd and copy etcd.conf and etcd.service to the other two nodes

    [root@linux-node1 ~]# systemctl daemon-reload
    [root@linux-node1 ~]# systemctl enable etcd
    [root@linux-node1 ~]# scp /opt/kubernetes/cfg/etcd.conf 192.168.56.120:/opt/kubernetes/cfg/
    [root@linux-node1 ~]# scp /etc/systemd/system/etcd.service 192.168.56.120:/etc/systemd/system/
    [root@linux-node1 ~]# scp /opt/kubernetes/cfg/etcd.conf 192.168.56.130:/opt/kubernetes/cfg/
    [root@linux-node1 ~]# scp /etc/systemd/system/etcd.service 192.168.56.130:/etc/systemd/system/

On node2 and node3 the copied etcd.conf must be edited: change ETCD_NAME and the four URL settings that carry the node's own IP address. ETCD_INITIAL_CLUSTER stays the same on every node. For node2, for example:

    [root@linux-node2 ~]# vim /opt/kubernetes/cfg/etcd.conf
    ETCD_NAME="etcd-node2"
    ETCD_LISTEN_PEER_URLS="https://192.168.56.120:2380"
    ETCD_LISTEN_CLIENT_URLS="https://192.168.56.120:2379,https://127.0.0.1:2379"
    ETCD_INITIAL_ADVERTISE_PEER_URLS="https://192.168.56.120:2380"
    ETCD_ADVERTISE_CLIENT_URLS="https://192.168.56.120:2379"

Also run systemctl daemon-reload and systemctl enable etcd on node2 and node3 so that the service starts at boot there too.
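Editing five settings by hand on two nodes is where a stale IP tends to slip through. The sketch below derives another node's file from node1's with sed; `retarget_etcd_conf` is a hypothetical helper, and it assumes the node's own address always appears as `IP:port` and that only the ETCD_INITIAL_CLUSTER line must be left untouched.

```shell
#!/usr/bin/env bash
# Hypothetical helper: rewrite node1's etcd.conf (read from stdin) for another
# node. ETCD_NAME is replaced, and OLD_IP is replaced by NEW_IP on every line
# except ETCD_INITIAL_CLUSTER, which must stay identical across the cluster.
retarget_etcd_conf() {
    local name=$1 old_ip=$2 new_ip=$3 old_re
    # Escape the dots so sed matches them literally.
    old_re=$(printf '%s' "$old_ip" | sed 's/\./\\./g')
    sed -e "s/^ETCD_NAME=.*/ETCD_NAME=\"${name}\"/" \
        -e '/^ETCD_INITIAL_CLUSTER=/!'"s/${old_re}:/${new_ip}:/g"
}

# Example (run on node1, then copy the result to node2):
#   retarget_etcd_conf etcd-node2 192.168.56.110 192.168.56.120 \
#       < /opt/kubernetes/cfg/etcd.conf > etcd.conf.node2
```

Matching on the trailing colon keeps an address such as 192.168.56.11 from being rewritten inside 192.168.56.110.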

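The etcd data directory is not created automatically, so create it on every node and then start etcd. Because the unit uses Type=notify, systemctl start on the first node may block until a second member is up and the cluster has quorum, so start the members close together.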

    [root@linux-node1 ~]# mkdir /var/lib/etcd
    [root@linux-node1 ~]# systemctl start etcd
    [root@linux-node1 ~]# systemctl status etcd

    [root@linux-node2 ~]# mkdir /var/lib/etcd
    [root@linux-node2 ~]# systemctl start etcd
    [root@linux-node2 ~]# systemctl status etcd

    [root@linux-node3 ~]# mkdir /var/lib/etcd
    [root@linux-node3 ~]# systemctl start etcd
    [root@linux-node3 ~]# systemctl status etcd

    [root@linux-node1 ssl]# netstat -tulnp |grep etcd    # on each node, check that ports 2379 and 2380 are listening
    tcp 0 0 192.168.56.11:2379 0.0.0.0:* LISTEN 4916/etcd
    tcp 0 0 127.0.0.1:2379 0.0.0.0:* LISTEN 4916/etcd
    tcp 0 0 192.168.56.11:2380 0.0.0.0:* LISTEN 4916/etcd

Note that this sample output shows the address 192.168.56.11; with the configuration used in this article, node1 should listen on 192.168.56.110.
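Checking the listing by eye on three nodes can be replaced by a small filter. This is a sketch only: `missing_ports` is a hypothetical helper that reads `netstat -tln` style output on stdin and prints each requested port that has no listening socket. It does not check which process owns the socket.

```shell
#!/usr/bin/env bash
# Hypothetical check: read `netstat -tln` (or `ss -tln`) output on stdin and
# print each of the given ports that does not appear as a local listen port.
missing_ports() {
    local text port
    text=$(cat)
    for port in "$@"; do
        # A listening socket shows up as "<address>:<port>" followed by whitespace.
        grep -Eq ":${port}[[:space:]]" <<<"$text" || echo "$port"
    done
}

# Example: netstat -tln | missing_ports 2379 2380
```

No output means both ports are open on that node.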

(8) Verify the etcd cluster

    [root@linux-node1 ~]# etcdctl --endpoints=https://192.168.56.110:2379 \
      --ca-file=/opt/kubernetes/ssl/ca.pem \
      --cert-file=/opt/kubernetes/ssl/etcd.pem \
      --key-file=/opt/kubernetes/ssl/etcd-key.pem cluster-health
    member 435fb0a8da627a4c is healthy: got healthy result from https://192.168.56.120:2379
    member 6566e06d7343e1bb is healthy: got healthy result from https://192.168.56.110:2379
    member ce7b884e428b6c8c is healthy: got healthy result from https://192.168.56.130:2379
    cluster is healthy

The final line, "cluster is healthy", confirms that the etcd cluster is working.
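For use in a script, the health output can be reduced to an exit status. The sketch below assumes the output format shown above; `cluster_ok` is a hypothetical helper that succeeds only when the expected number of members report healthy and the summary line is present.

```shell
#!/usr/bin/env bash
# Hypothetical check: read `etcdctl cluster-health` output on stdin and succeed
# only if EXPECTED members report healthy and the summary line says so too.
cluster_ok() {
    local expected=$1 text healthy
    text=$(cat)
    healthy=$(grep -c '^member .* is healthy' <<<"$text")
    [ "$healthy" -eq "$expected" ] && grep -qx 'cluster is healthy' <<<"$text"
}

# Example:
#   etcdctl --endpoints=https://192.168.56.110:2379 \
#       --ca-file=/opt/kubernetes/ssl/ca.pem \
#       --cert-file=/opt/kubernetes/ssl/etcd.pem \
#       --key-file=/opt/kubernetes/ssl/etcd-key.pem cluster-health | cluster_ok 3
```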
