Volume & PersistentVolume

Volume:

A Volume in Kubernetes lets containers mount external storage.

A Pod can use a Volume only after it defines two things: the volume source (spec.volumes) and the mount point (spec.containers[].volumeMounts).

Official reference: https://kubernetes.io/docs/concepts/storage/volumes/

1. emptyDir

Creates an empty volume and mounts it into the Pod's containers. The volume is deleted together with the Pod.

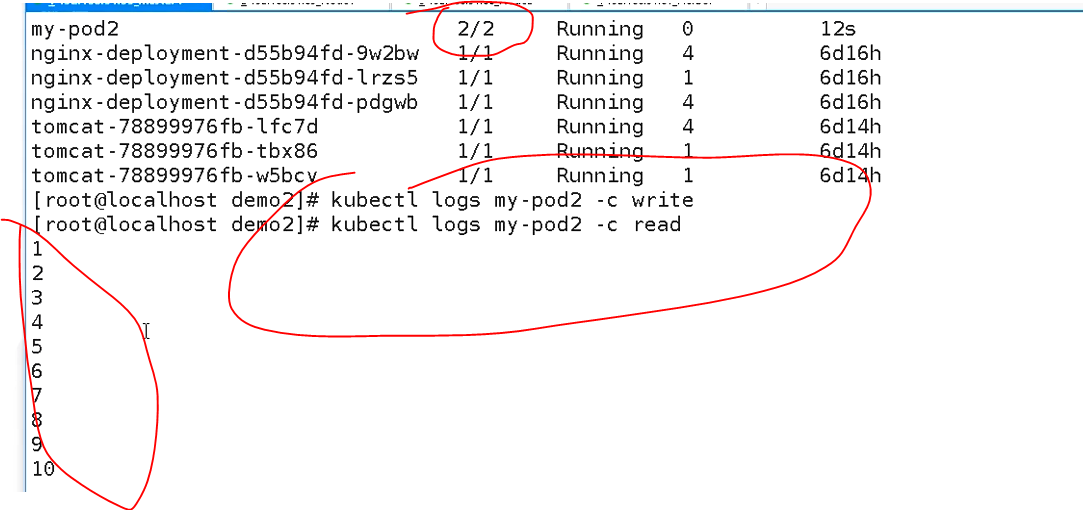

Use case: sharing data between containers in the same Pod.

```
[root@master01 volume]# cat emptyDir.yaml
apiVersion: v1
kind: Pod
metadata:
  name: my-pod
spec:
  # imagePullSecrets:
  # - name: registry-pull-secret
  containers:
  - name: write            # writer container
    image: 10.192.27.111/library/busybox:1.28.4
    # busybox sh has no {1..100} brace expansion, so generate the numbers with seq
    command: ["sh","-c","for i in $(seq 1 100);do echo $i >> /data/hello;sleep 1;done"]
    volumeMounts:
    - name: data           # use the volume; the name must match the one under volumes
      mountPath: /data
  - name: read             # reader container
    image: 10.192.27.111/library/centos:7
    # -F keeps retrying until the writer has created /data/hello
    command: ["bash","-c","tail -F /data/hello"]
    volumeMounts:
    - name: data           # use the volume; the name must match the one under volumes
      mountPath: /data
  volumes:
  - name: data             # define the volume
    emptyDir: {}
[root@master01 volume]#
```
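The following sketch is a variation on the emptyDir example above. It backs the volume with memory instead of the node's disk. `medium: Memory` mounts a tmpfs, and files written to it count toward the container's memory usage. `sizeLimit` sets an upper bound, and the kubelet may evict the Pod if usage exceeds it. The Pod name `cache-pod` and the 64Mi limit are hypothetical.

```yaml
apiVersion: v1
kind: Pod
metadata:
  name: cache-pod              # hypothetical name
spec:
  containers:
  - name: app
    image: 10.192.27.111/library/busybox:1.28.4
    command: ["sh", "-c", "sleep 3600"]
    volumeMounts:
    - name: cache
      mountPath: /cache
  volumes:
  - name: cache
    emptyDir:
      medium: Memory           # tmpfs (RAM) instead of the node's disk
      sizeLimit: 64Mi          # hypothetical limit; exceeding it can get the Pod evicted
```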

2. hostPath

Mounts a file or directory from the node's filesystem into the Pod's containers.

Use case: containers in the Pod need to access files on the host.

```
[root@master01 volume]# cat hostpath.yaml
apiVersion: v1
kind: Pod
metadata:
  name: my-pod
spec:
  containers:
  - name: busybox
    image: 10.192.27.111/library/busybox:1.28.4
    args:
    - /bin/sh
    - -c
    - sleep 36000
    volumeMounts:
    - name: data
      mountPath: /data
  volumes:
  - name: data
    hostPath:
      path: /tmp
      type: Directory
[root@master01 volume]#
[root@master01 volume]# kubectl apply -f hostpath.yaml
[root@master01 volume]# kubectl get pods
NAME     READY   STATUS    RESTARTS   AGE
my-pod   1/1     Running   0          10s
[root@master01 volume]# kubectl get pod -o wide      # check which node the Pod (and its mount) is on
NAME     READY   STATUS    RESTARTS   AGE     IP            NODE            NOMINATED NODE   READINESS GATES
my-pod   1/1     Running   0          2m19s   172.17.43.2   10.192.27.115   <none>           <none>
[root@master01 volume]# kubectl exec -it my-pod -- sh      # inside the Pod
/ # ls /data/
systemd-private-16f6a1d6621b4a6d96d2c21f44c6bff3-chronyd.service-6Evm1r
/ #
[root@node01 ~]# ls /tmp/      # on node01 (10.192.27.115): same contents as /data in the Pod
systemd-private-16f6a1d6621b4a6d96d2c21f44c6bff3-chronyd.service-6Evm1r
[root@node01 ~]#
```
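Two hostPath details are worth knowing. First, `type: Directory` (used above) requires the path to already exist on the node, while `DirectoryOrCreate` creates it if it is missing. Second, the data lives on whichever node runs the Pod, which is why the transcript checks the node first. A Pod rescheduled to another node may see different contents. The minimal sketch below also mounts the host directory read-only. The Pod name and host path are hypothetical.

```yaml
apiVersion: v1
kind: Pod
metadata:
  name: hostpath-ro-pod        # hypothetical name
spec:
  containers:
  - name: busybox
    image: 10.192.27.111/library/busybox:1.28.4
    args: ["/bin/sh", "-c", "sleep 36000"]
    volumeMounts:
    - name: data
      mountPath: /data
      readOnly: true           # the container can read but not modify the host files
  volumes:
  - name: data
    hostPath:
      path: /tmp/app-data      # hypothetical path on the node
      type: DirectoryOrCreate  # create the directory if it does not exist yet
```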

3. nfs

```
# Install the NFS client on every node
[root@node01 cfg]# yum -y install nfs-utils
[root@node02 cfg]# yum -y install nfs-utils
[root@master01 volume]# cat nfs.yaml
apiVersion: apps/v1
kind: Deployment
metadata:
  name: nginx-deployment
spec:
  replicas: 3
  selector:
    matchLabels:
      app: nginx
  template:
    metadata:
      labels:
        app: nginx
    spec:
      containers:
      - name: nginx
        image: 10.192.27.111/library/nginx:latest
        volumeMounts:
        - name: wwwroot          # use the volume
          mountPath: /usr/share/nginx/html
        ports:
        - containerPort: 80
      volumes:
      - name: wwwroot            # the volume
        nfs:
          server: 10.192.27.113
          path: /home/kdzd
[root@master01 volume]#
[root@master01 volume]# kubectl apply -f nfs.yaml
deployment.apps/nginx-deployment created
[root@master01 volume]# kubectl get pod
NAME                                READY   STATUS    RESTARTS   AGE
nginx-deployment-5dfc75bdc4-5wjmj   1/1     Running   0          16s
nginx-deployment-5dfc75bdc4-q5mrz   1/1     Running   0          16s
nginx-deployment-5dfc75bdc4-qkmxk   1/1     Running   0          16s
[root@master01 volume]# kubectl get ep
NAME         ENDPOINTS                               AGE
kubernetes   10.192.27.100:6443,10.192.27.114:6443   18d
[root@master01 volume]# kubectl get pod -o wide
NAME                                READY   STATUS    RESTARTS   AGE     IP            NODE            NOMINATED NODE   READINESS GATES
nginx-deployment-5dfc75bdc4-5wjmj   1/1     Running   0          2m23s   172.17.43.3   10.192.27.115   <none>           <none>
nginx-deployment-5dfc75bdc4-q5mrz   1/1     Running   0          2m23s   172.17.43.2   10.192.27.115   <none>           <none>
nginx-deployment-5dfc75bdc4-qkmxk   1/1     Running   0          2m23s   172.17.46.4   10.192.27.116   <none>           <none>
# Test it
[root@nfs-server ~]# cd /home/kdzd/
[root@nfs-server kdzd]# vim index.html
[root@nfs-server kdzd]# cat index.html      # on the NFS server, 10.192.27.113
<h1>hello world!</h1>
[root@nfs-server kdzd]#
[root@node02 cfg]# curl 172.17.43.2
<h1>hello world!</h1>
[root@node02 cfg]# curl 172.17.43.3
<h1>hello world!</h1>
[root@node02 cfg]# curl 172.17.46.4
<h1>hello world!</h1>
```
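When several applications share one NFS export, each can mount its own subdirectory using `subPath` on the volume mount. The sketch below reuses the server and export from the example above. The Pod name and the `site-a` subdirectory are hypothetical, and the subdirectory is assumed to exist on the export. The mount is read-only because nginx only needs to read its web root.

```yaml
apiVersion: v1
kind: Pod
metadata:
  name: nfs-subpath-pod        # hypothetical name
spec:
  containers:
  - name: nginx
    image: 10.192.27.111/library/nginx:latest
    volumeMounts:
    - name: wwwroot
      mountPath: /usr/share/nginx/html
      subPath: site-a          # hypothetical subdirectory of /home/kdzd
      readOnly: true           # nginx only reads the web root
    ports:
    - containerPort: 80
  volumes:
  - name: wwwroot
    nfs:
      server: 10.192.27.113
      path: /home/kdzd
```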

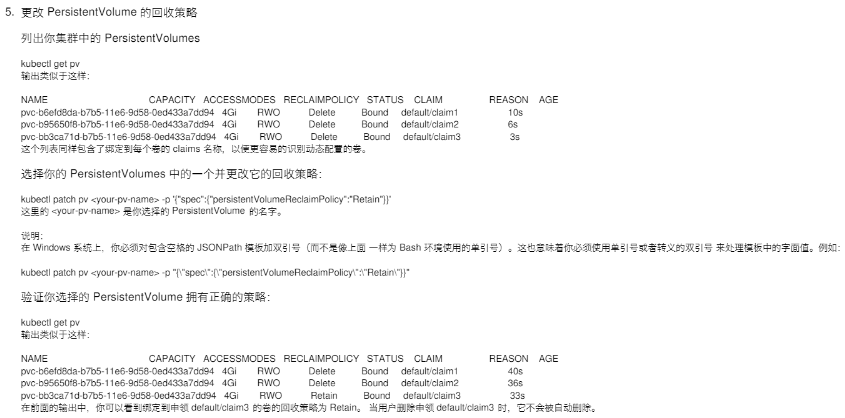

PersistentVolume

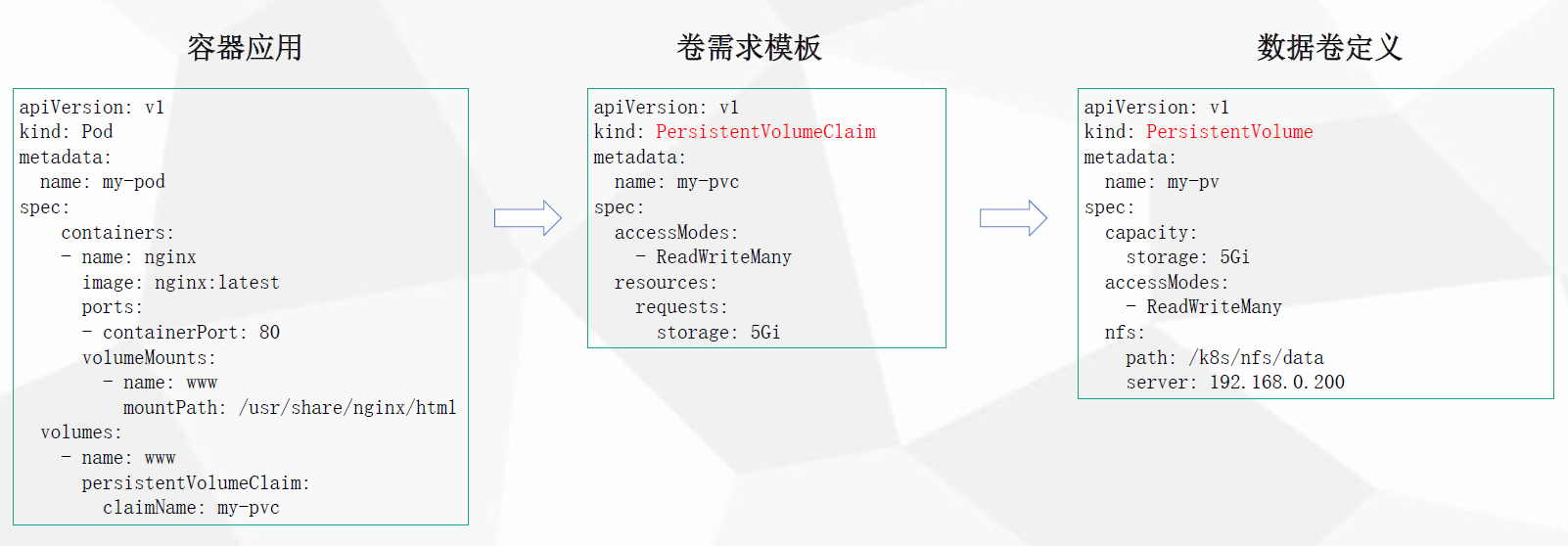

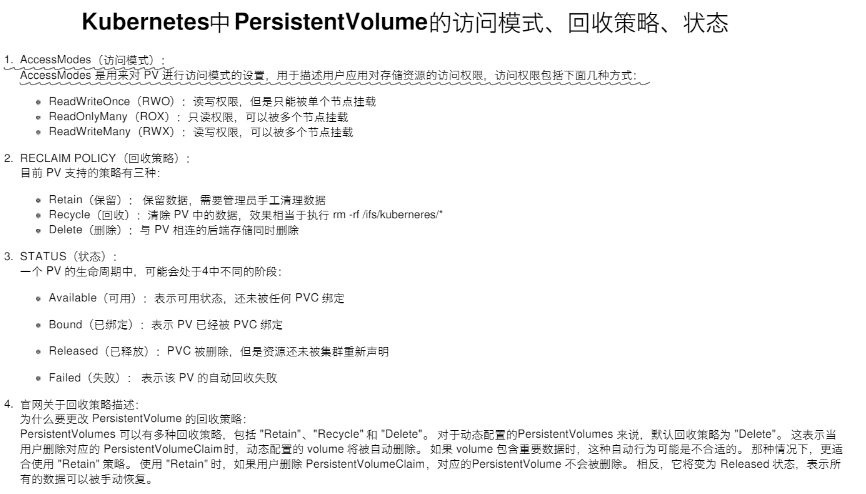

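PersistentVolume (PV): an abstraction over how storage resources are created and used, which lets storage be managed as a resource within the cluster.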

A PV can be provisioned statically or dynamically.

PersistentVolumeClaim (PVC): lets users request storage without having to care about how the underlying volume is implemented.

1. Static provisioning

Create the PV by hand:

```
[root@master01 storage]# cat pv.yaml      # tedious: the PV has to be created by hand
apiVersion: v1
kind: PersistentVolume
metadata:
  name: my-pv
spec:
  capacity:
    storage: 5Gi
  accessModes:
  - ReadWriteMany
  nfs:
    path: /home/kdzd/data      # this directory must already exist
    server: 10.192.27.113
[root@master01 storage]#
```
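The PV above does not set a reclaim policy, so it gets `Retain`. That is the default for manually created PVs, and the `RECLAIM POLICY` column in the following `kubectl get pv` output shows it. Below is a sketch of the same PV with the policy written out explicitly. The `nfsvers=4.1` mount option is an assumption about the NFS server and can be dropped.

```yaml
apiVersion: v1
kind: PersistentVolume
metadata:
  name: my-pv
spec:
  capacity:
    storage: 5Gi
  accessModes:
  - ReadWriteMany
  persistentVolumeReclaimPolicy: Retain   # keep the PV and its data after the PVC is deleted
  mountOptions:
  - nfsvers=4.1                           # assumption: the server speaks NFS v4.1
  nfs:
    path: /home/kdzd/data
    server: 10.192.27.113
```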

```
[root@master01 storage]# cat pod-pvc.yaml
apiVersion: v1
kind: Pod
metadata:
  name: my-pod
spec:
  containers:
  - name: nginx
    image: 10.192.27.111/library/nginx:latest
    ports:
    - containerPort: 80
    volumeMounts:
    - name: www
      mountPath: /usr/share/nginx/html
  volumes:
  - name: www
    persistentVolumeClaim:
      claimName: my-pvc
---
apiVersion: v1
kind: PersistentVolumeClaim
metadata:
  name: my-pvc
spec:
  accessModes:
  - ReadWriteMany
  resources:
    requests:
      storage: 5Gi
[root@master01 storage]#
[root@master01 storage]# kubectl delete -f pod-pvc.yaml
pod "my-pod" deleted
persistentvolumeclaim "my-pvc" deleted
[root@master01 storage]# kubectl delete -f pv.yaml      # note: a PV that was already bound must be deleted and recreated before it can be bound again
persistentvolume "my-pv" deleted
[root@master01 storage]# vim pod-pvc.yaml
[root@master01 storage]# kubectl apply -f pv.yaml
persistentvolume/my-pv created
[root@master01 storage]# kubectl get pv
NAME    CAPACITY   ACCESS MODES   RECLAIM POLICY   STATUS      CLAIM   STORAGECLASS   REASON   AGE
my-pv   5Gi        RWX            Retain           Available                                   10s
[root@master01 storage]# kubectl apply -f pod-pvc.yaml
pod/my-pod created
persistentvolumeclaim/my-pvc created
[root@master01 storage]# kubectl get pv,pvc      # a PV and a PVC are matched by capacity and access mode
NAME                     CAPACITY   ACCESS MODES   RECLAIM POLICY   STATUS   CLAIM            STORAGECLASS   REASON   AGE
persistentvolume/my-pv   5Gi        RWX            Retain           Bound    default/my-pvc                           58s

NAME                           STATUS   VOLUME   CAPACITY   ACCESS MODES   STORAGECLASS   AGE
persistentvolumeclaim/my-pvc   Bound    my-pv    5Gi        RWX                           6s
[root@master01 storage]# kubectl get pods
NAME     READY   STATUS    RESTARTS   AGE
my-pod   1/1     Running   0          14s
[root@master01 storage]# kubectl exec -it my-pod -- bash
root@my-pod:/# ls /usr/share/nginx/html
index.html
root@my-pod:/# exit
exit
[root@master01 storage]# kubectl get pods -o wide
NAME     READY   STATUS    RESTARTS   AGE     IP            NODE            NOMINATED NODE   READINESS GATES
my-pod   1/1     Running   0          3m16s   172.17.43.2   10.192.27.115   <none>           <none>
[root@master01 storage]#
# On a node:
[root@node01 ~]# curl 172.17.43.2
<h1>hello world!</h1>
[root@node01 ~]#
```
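The claim above binds to any available PV whose capacity and access modes satisfy it. To pin a claim to one particular PV, you can use a sketch like the following. `volumeName` names the PV. `storageClassName: ""` stops a default StorageClass (if the cluster has one) from being filled in, which would otherwise prevent the claim from matching this class-less static PV.

```yaml
apiVersion: v1
kind: PersistentVolumeClaim
metadata:
  name: my-pvc
spec:
  accessModes:
  - ReadWriteMany
  storageClassName: ""       # empty: only match PVs without a class, never provision dynamically
  volumeName: my-pv          # bind to this specific PV
  resources:
    requests:
      storage: 5Gi
```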

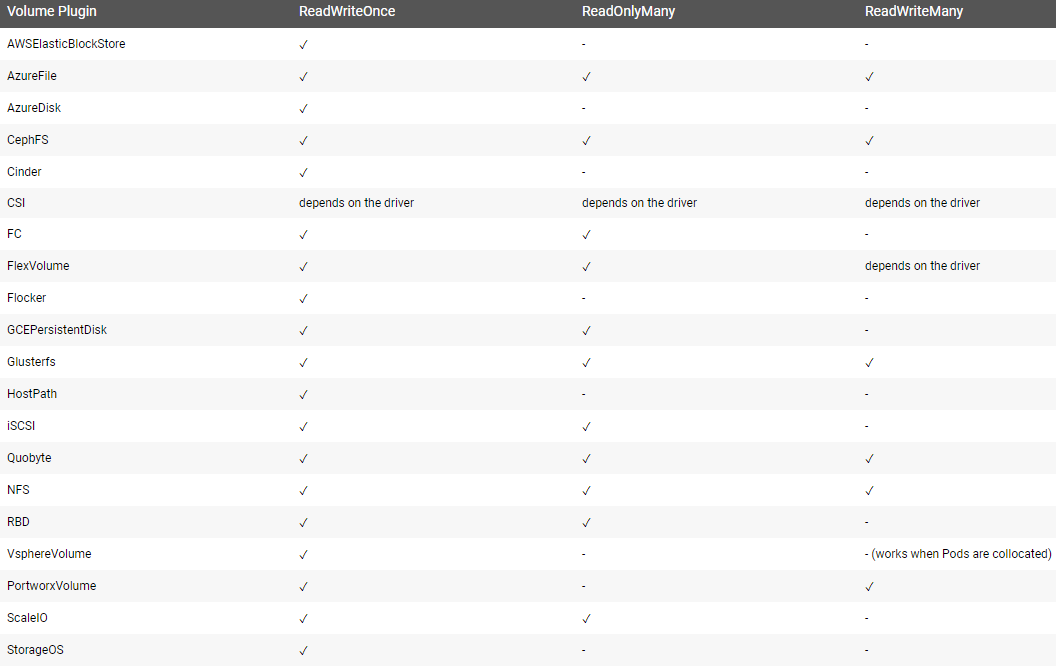

Storage plugins that Kubernetes supports for persistent volumes: https://kubernetes.io/docs/concepts/storage/persistent-volumes/

2. PersistentVolume dynamic provisioning

Dynamic provisioning is built around the StorageClass API object.

A StorageClass declares the storage plugin (provisioner) that creates PVs automatically.

Deployment YAML files: https://github.com/kubernetes-incubator/external-storage/tree/master/nfs-client/deploy

```
[root@master01 storage-class]# ls
deployment-nfs.yaml  nginx-demo.yaml  rbac.yaml  storageclass-nfs.yaml
[root@master01 storage-class]# kubectl apply -f storageclass-nfs.yaml
storageclass.storage.k8s.io/managed-nfs-storage created
[root@master01 storage-class]# cat storageclass-nfs.yaml
apiVersion: storage.k8s.io/v1
kind: StorageClass
metadata:
  name: managed-nfs-storage
provisioner: fuseim.pri/ifs   # name of the NFS provisioner; must match PROVISIONER_NAME in the nfs-client-provisioner Deployment
[root@master01 storage-class]#
[root@master01 storage-class]# kubectl get StorageClass
NAME                  PROVISIONER      AGE
managed-nfs-storage   fuseim.pri/ifs   30s
[root@master01 storage-class]#
```
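Two optional StorageClass settings are sketched below on the class above. The `storageclass.kubernetes.io/is-default-class` annotation makes it the class used by claims that omit `storageClassName`. `reclaimPolicy: Retain` keeps provisioned PVs after their claims are deleted. The default is `Delete`, which is what the `kubectl get pv` output later in this section shows. Whether you want either setting depends on the cluster.

```yaml
apiVersion: storage.k8s.io/v1
kind: StorageClass
metadata:
  name: managed-nfs-storage
  annotations:
    storageclass.kubernetes.io/is-default-class: "true"   # claims without storageClassName use this class
provisioner: fuseim.pri/ifs
reclaimPolicy: Retain        # keep provisioned PVs after the PVC is deleted (default: Delete)
```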

```
[root@master01 storage-class]# kubectl apply -f rbac.yaml
serviceaccount/nfs-client-provisioner created
clusterrole.rbac.authorization.k8s.io/nfs-client-provisioner-runner created
clusterrolebinding.rbac.authorization.k8s.io/run-nfs-client-provisioner created
[root@master01 storage-class]# cat rbac.yaml
apiVersion: v1
kind: ServiceAccount
metadata:
  name: nfs-client-provisioner
---
kind: ClusterRole
apiVersion: rbac.authorization.k8s.io/v1
metadata:
  name: nfs-client-provisioner-runner
rules:
- apiGroups: [""]
  resources: ["persistentvolumes"]
  verbs: ["get", "list", "watch", "create", "delete"]
- apiGroups: [""]
  resources: ["persistentvolumeclaims"]
  verbs: ["get", "list", "watch", "update"]
- apiGroups: ["storage.k8s.io"]
  resources: ["storageclasses"]
  verbs: ["get", "list", "watch"]
- apiGroups: [""]
  resources: ["events"]
  verbs: ["list", "watch", "create", "update", "patch"]
---
kind: ClusterRoleBinding
apiVersion: rbac.authorization.k8s.io/v1
metadata:
  name: run-nfs-client-provisioner
subjects:
- kind: ServiceAccount
  name: nfs-client-provisioner
  namespace: default
roleRef:
  kind: ClusterRole
  name: nfs-client-provisioner-runner
  apiGroup: rbac.authorization.k8s.io
[root@master01 storage-class]#
```
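The RBAC above assumes the provisioner runs in the `default` namespace. If you deploy it elsewhere, the ServiceAccount and the ClusterRoleBinding subject must name the same namespace. The sketch below uses a hypothetical `nfs-provisioner` namespace. The provisioner Deployment would then be created in that namespace as well.

```yaml
apiVersion: v1
kind: ServiceAccount
metadata:
  name: nfs-client-provisioner
  namespace: nfs-provisioner        # hypothetical namespace
---
kind: ClusterRoleBinding
apiVersion: rbac.authorization.k8s.io/v1
metadata:
  name: run-nfs-client-provisioner
subjects:
- kind: ServiceAccount
  name: nfs-client-provisioner
  namespace: nfs-provisioner        # must match the ServiceAccount's namespace
roleRef:
  kind: ClusterRole
  name: nfs-client-provisioner-runner
  apiGroup: rbac.authorization.k8s.io
```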

```
[root@master01 storage-class]# cat deployment-nfs.yaml
apiVersion: apps/v1
kind: Deployment
metadata:
  name: nfs-client-provisioner
spec:
  replicas: 1
  selector:                          # required in apps/v1
    matchLabels:
      app: nfs-client-provisioner
  strategy:
    type: Recreate
  template:
    metadata:
      labels:
        app: nfs-client-provisioner
    spec:
      # imagePullSecrets:
      # - name: registry-pull-secret
      serviceAccountName: nfs-client-provisioner
      containers:
      - name: nfs-client-provisioner
        image: 10.192.27.111/lizhenliang/nfs-client-provisioner:v2.0.0
        volumeMounts:
        - name: nfs-client-root
          mountPath: /persistentvolumes
        env:
        - name: PROVISIONER_NAME
          value: fuseim.pri/ifs      # must equal the provisioner field of the StorageClass
        - name: NFS_SERVER
          value: 10.192.27.113
        - name: NFS_PATH
          value: /home/kdzd/data
      volumes:
      - name: nfs-client-root
        nfs:
          server: 10.192.27.113
          path: /home/kdzd/data
[root@master01 storage-class]# kubectl get sc
NAME                  PROVISIONER      AGE
managed-nfs-storage   fuseim.pri/ifs   2m5s
[root@master01 storage-class]# kubectl apply -f deployment-nfs.yaml
deployment.apps/nfs-client-provisioner created
[root@master01 storage-class]# kubectl get pods
NAME                                      READY   STATUS    RESTARTS   AGE
nfs-client-provisioner-86684d5b5f-8k7x6   1/1     Running   0          6s
[root@master01 storage-class]#
```

nginx-demo example, based on: https://kubernetes.io/docs/tutorials/stateful-application/basic-stateful-set/

```
[root@master01 storage-class]# cat nginx-demo.yaml
apiVersion: v1
kind: Service
metadata:
  name: nginx
  labels:
    app: nginx
spec:
  ports:
  - port: 80
    name: web
  clusterIP: None
  selector:
    app: nginx
---
apiVersion: apps/v1
kind: StatefulSet
metadata:
  name: web
spec:
  selector:
    matchLabels:
      app: nginx
  serviceName: "nginx"
  replicas: 3
  template:
    metadata:
      labels:
        app: nginx
    spec:
      terminationGracePeriodSeconds: 10
      containers:
      - name: nginx
        image: 10.192.27.111/library/nginx:latest
        ports:
        - containerPort: 80
          name: web
        volumeMounts:
        - name: www
          mountPath: /usr/share/nginx/html
  volumeClaimTemplates:
  - metadata:
      name: www
    spec:
      accessModes: [ "ReadWriteOnce" ]
      storageClassName: "managed-nfs-storage"
      resources:
        requests:
          storage: 1Gi
[root@master01 storage-class]#
```
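For each replica, the StatefulSet controller creates a PVC from `volumeClaimTemplates`. The PVC is named `<template name>-<StatefulSet name>-<ordinal>`, which is where `www-web-0`, `www-web-1`, and `www-web-2` in the output below come from. The claim for `web-0` is roughly equivalent to the hand-written sketch below. Metadata that the controller adds itself is omitted. By default, these claims are kept when the StatefulSet is scaled down or deleted.

```yaml
apiVersion: v1
kind: PersistentVolumeClaim
metadata:
  name: www-web-0              # <template name>-<StatefulSet name>-<ordinal>
spec:
  accessModes: [ "ReadWriteOnce" ]
  storageClassName: "managed-nfs-storage"
  resources:
    requests:
      storage: 1Gi
```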

```
[root@master01 storage-class]# kubectl apply -f nginx-demo.yaml
service/nginx created
statefulset.apps/web created
[root@master01 storage-class]# kubectl get all
NAME                                          READY   STATUS    RESTARTS   AGE
pod/nfs-client-provisioner-86684d5b5f-8k7x6   1/1     Running   0          7m51s
pod/web-0                                     1/1     Running   0          71s
pod/web-1                                     1/1     Running   0          19s
pod/web-2                                     1/1     Running   0          17s

NAME                 TYPE        CLUSTER-IP   EXTERNAL-IP   PORT(S)   AGE
service/kubernetes   ClusterIP   10.0.0.1     <none>        443/TCP   20d
service/nginx        ClusterIP   None         <none>        80/TCP    71s

NAME                                     READY   UP-TO-DATE   AVAILABLE   AGE
deployment.apps/nfs-client-provisioner   1/1     1            1           7m51s

NAME                                                DESIRED   CURRENT   READY   AGE
replicaset.apps/nfs-client-provisioner-86684d5b5f   1         1         1       7m51s

NAME                   READY   AGE
statefulset.apps/web   3/3     71s
[root@master01 storage-class]# kubectl get pv,pvc
NAME                                                                          CAPACITY   ACCESS MODES   RECLAIM POLICY   STATUS   CLAIM               STORAGECLASS          REASON   AGE
persistentvolume/default-www-web-0-pvc-91fdee77-14a9-11ea-9860-1866dafb2f54   1Gi        RWO            Delete           Bound    default/www-web-0   managed-nfs-storage            37s
persistentvolume/default-www-web-1-pvc-5d362b59-14aa-11ea-9860-1866dafb2f54   1Gi        RWO            Delete           Bound    default/www-web-1   managed-nfs-storage            36s
persistentvolume/default-www-web-2-pvc-5e5e826e-14aa-11ea-9860-1866dafb2f54   1Gi        RWO            Delete           Bound    default/www-web-2   managed-nfs-storage            34s

NAME                              STATUS   VOLUME                                                       CAPACITY   ACCESS MODES   STORAGECLASS          AGE
persistentvolumeclaim/www-web-0   Bound    default-www-web-0-pvc-91fdee77-14a9-11ea-9860-1866dafb2f54   1Gi        RWO            managed-nfs-storage   6m17s
persistentvolumeclaim/www-web-1   Bound    default-www-web-1-pvc-5d362b59-14aa-11ea-9860-1866dafb2f54   1Gi        RWO            managed-nfs-storage   36s
persistentvolumeclaim/www-web-2   Bound    default-www-web-2-pvc-5e5e826e-14aa-11ea-9860-1866dafb2f54   1Gi        RWO            managed-nfs-storage   34s
[root@master01 storage-class]#
[root@master01 storage-class]# kubectl get svc
NAME         TYPE        CLUSTER-IP   EXTERNAL-IP   PORT(S)   AGE
kubernetes   ClusterIP   10.0.0.1     <none>        443/TCP   20d
nginx        ClusterIP   None         <none>        80/TCP    4m52s
[root@master01 storage-class]# kubectl get pods -o wide
NAME                                      READY   STATUS    RESTARTS   AGE    IP            NODE            NOMINATED NODE   READINESS GATES
nfs-client-provisioner-86684d5b5f-8k7x6   1/1     Running   0          11m    172.17.43.2   10.192.27.115   <none>           <none>
web-0                                     1/1     Running   0          5m1s   172.17.43.3   10.192.27.115   <none>           <none>
web-1                                     1/1     Running   0          4m9s   172.17.46.4   10.192.27.116   <none>           <none>
web-2                                     1/1     Running   0          4m7s   172.17.43.4   10.192.27.115   <none>           <none>
[root@master01 storage-class]#
```

Test:

```
[root@nfs-server kdzd]# ls data/
default-www-web-0-pvc-91fdee77-14a9-11ea-9860-1866dafb2f54
default-www-web-1-pvc-5d362b59-14aa-11ea-9860-1866dafb2f54
default-www-web-2-pvc-5e5e826e-14aa-11ea-9860-1866dafb2f54
[root@nfs-server kdzd]# ls data/default-www-web-0-pvc-91fdee77-14a9-11ea-9860-1866dafb2f54/
[root@nfs-server kdzd]# cp index.html data/default-www-web-0-pvc-91fdee77-14a9-11ea-9860-1866dafb2f54/
[root@nfs-server kdzd]# cp index.html data/default-www-web-1-pvc-5d362b59-14aa-11ea-9860-1866dafb2f54/
[root@nfs-server kdzd]# cp index.html data/default-www-web-2-pvc-5e5e826e-14aa-11ea-9860-1866dafb2f54/
[root@nfs-server kdzd]#
[root@node01 ~]# curl 172.17.43.3
<h1>hello world!</h1>
[root@node01 ~]#
```

Storage plugins that Kubernetes supports for dynamic provisioning: https://kubernetes.io/docs/concepts/storage/storage-classes/

```
[root@master01 storage]# cat pod-pvc.yaml
apiVersion: v1
kind: Pod
metadata:
  name: my-pod
spec:
  containers:
  - name: nginx
    image: 10.192.27.111/library/nginx:latest
    ports:
    - containerPort: 80
    volumeMounts:
    - name: www
      mountPath: /usr/share/nginx/html
  volumes:
  - name: www
    persistentVolumeClaim:
      claimName: my-pvc
---
apiVersion: v1
kind: PersistentVolumeClaim
metadata:
  name: my-pvc
spec:
  accessModes:
  - ReadWriteMany
  storageClassName: managed-nfs-storage
  resources:
    requests:
      storage: 5Gi
[root@master01 storage]#
[root@master01 storage]# vim pod-pvc.yaml
[root@master01 storage]# kubectl apply -f pod-pvc.yaml
pod/my-pod created
persistentvolumeclaim/my-pvc created
[root@master01 storage]# kubectl get pods -o wide
NAME                                      READY   STATUS    RESTARTS   AGE     IP            NODE            NOMINATED NODE   READINESS GATES
my-pod                                    1/1     Running   0          5s      172.17.43.5   10.192.27.115   <none>           <none>
nfs-client-provisioner-86684d5b5f-8k7x6   1/1     Running   0          15m     172.17.43.2   10.192.27.115   <none>           <none>
web-0                                     1/1     Running   0          9m15s   172.17.43.3   10.192.27.115   <none>           <none>
web-1                                     1/1     Running   0          8m23s   172.17.46.4   10.192.27.116   <none>           <none>
web-2                                     1/1     Running   0          8m21s   172.17.43.4   10.192.27.115   <none>           <none>
[root@master01 storage]# kubectl get pv,pvc
NAME                                                                          CAPACITY   ACCESS MODES   RECLAIM POLICY   STATUS   CLAIM               STORAGECLASS          REASON   AGE
persistentvolume/default-my-pvc-pvc-85a2e9bf-14ab-11ea-9860-1866dafb2f54      5Gi        RWX            Delete           Bound    default/my-pvc      managed-nfs-storage            14s
persistentvolume/default-www-web-0-pvc-91fdee77-14a9-11ea-9860-1866dafb2f54   1Gi        RWO            Delete           Bound    default/www-web-0   managed-nfs-storage            8m33s
persistentvolume/default-www-web-1-pvc-5d362b59-14aa-11ea-9860-1866dafb2f54   1Gi        RWO            Delete           Bound    default/www-web-1   managed-nfs-storage            8m32s
persistentvolume/default-www-web-2-pvc-5e5e826e-14aa-11ea-9860-1866dafb2f54   1Gi        RWO            Delete           Bound    default/www-web-2   managed-nfs-storage            8m30s

NAME                              STATUS   VOLUME                                                       CAPACITY   ACCESS MODES   STORAGECLASS          AGE
persistentvolumeclaim/my-pvc      Bound    default-my-pvc-pvc-85a2e9bf-14ab-11ea-9860-1866dafb2f54      5Gi        RWX            managed-nfs-storage   14s
persistentvolumeclaim/www-web-0   Bound    default-www-web-0-pvc-91fdee77-14a9-11ea-9860-1866dafb2f54   1Gi        RWO            managed-nfs-storage   14m
persistentvolumeclaim/www-web-1   Bound    default-www-web-1-pvc-5d362b59-14aa-11ea-9860-1866dafb2f54   1Gi        RWO            managed-nfs-storage   8m32s
persistentvolumeclaim/www-web-2   Bound    default-www-web-2-pvc-5e5e826e-14aa-11ea-9860-1866dafb2f54   1Gi        RWO            managed-nfs-storage   8m30s
[root@master01 storage]#
```
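The claim above selects the provisioner explicitly with `storageClassName: managed-nfs-storage`. A StorageClass can be marked as the cluster default with the `storageclass.kubernetes.io/is-default-class: "true"` annotation. In that case, a claim may omit the field, and the DefaultStorageClass admission plugin fills it in. Below is a sketch of such a claim. The claim name is hypothetical, and it assumes a default class has been configured.

```yaml
apiVersion: v1
kind: PersistentVolumeClaim
metadata:
  name: my-pvc-default         # hypothetical name
spec:
  # no storageClassName: the DefaultStorageClass admission plugin fills in
  # the class annotated storageclass.kubernetes.io/is-default-class: "true"
  accessModes:
  - ReadWriteMany
  resources:
    requests:
      storage: 5Gi
```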

```bash
#!/bin/bash
# For every PVC name listed in pvc-1.list and every namespace (dev, qa, uat):
# export the PVC and its bound PV as clean YAML, swap in the new provisioner name,
# generate a script that removes the PV's claimRef, and copy everything to 192.168.90.2.
#
# Deleting a PV's claimRef by hand, e.g.:
#   kubectl patch pv packages-volume --type json -p '[{"op": "remove", "path": "/spec/claimRef"}]'

# kubectl neat is a krew plugin; make sure krew's bin directory is on PATH
export PATH="${KREW_ROOT:-$HOME/.krew}/bin:$PATH"

mkdir -p DelclaimRef

while read -r pvc_name; do
  echo "$pvc_name"
  mkdir -p "$pvc_name"
  for i in dev qa uat; do
    kubectl get pvc -n "$i" "$pvc_name" -o yaml | kubectl neat > "${pvc_name}/${i}-${pvc_name}-pvc.yaml"
    # name of the PV bound to this PVC (exact match, unlike grep on a name prefix)
    pv_name=$(kubectl get pvc -n "$i" "$pvc_name" -o jsonpath='{.spec.volumeName}')
    kubectl get pv "$pv_name" -o yaml | kubectl neat > "${pvc_name}/${i}-${pvc_name}-pv.yaml"
    # replace the old provisioner name with the new one in both manifests
    sed -i "s#data.${i}.mxxl.com/ifs#cluster.local/nfs-client-provisioner-data-${i}-90-198#g" \
      "${pvc_name}/${i}-${pvc_name}-pvc.yaml" "${pvc_name}/${i}-${pvc_name}-pv.yaml"
    #sed -i '13,19d' "${pvc_name}/${i}-${pvc_name}-pv.yaml"
    # escape the inner double quotes so the generated JSON patch stays valid
    echo "kubectl patch pv ${pv_name} --type json -p '[{\"op\": \"remove\", \"path\": \"/spec/claimRef\"}]'" \
      > "DelclaimRef/${i}-${pvc_name}-pv_del_claimRef.sh"
    scp -P22022 -r "$pvc_name" 192.168.90.2:/opt/k8s/kube-pv/pvc-migrate/
  done
done < pvc-1.list

scp -P22022 -r DelclaimRef 192.168.90.2:/opt/k8s/kube-pv/pvc-migrate/
```

浙公网安备 33010602011771号
