Service

I. Overview

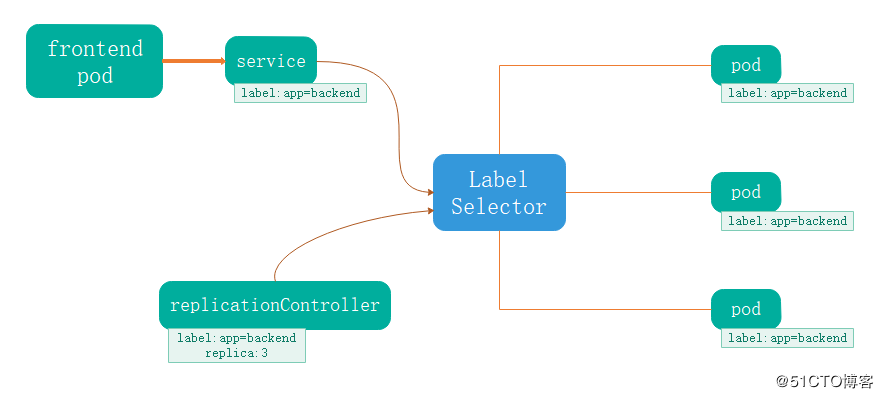

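A Service is essentially a "microservice" in the sense of a microservice architecture. Resource objects such as Pods and RCs exist largely to serve it. The logical relationship between Pods, RCs (or RSs), and a Service is shown in the figure above.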

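As the figure shows, a Kubernetes Service defines an access entry point for a service. Front-end applications (Pods) use this address to reach the group of Pod replicas behind it. The Service is connected "seamlessly" to its backend Pods through a Label Selector. The RC, for its part, keeps the Service's capacity and quality of service at the expected level by keeping the desired number of Pod replicas running.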
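The sketch below shows how a Service's Label Selector decides which Pods sit behind it. It models only equality-based selection, which is what `spec.selector` of a Service uses: a Pod matches when every key/value pair of the selector appears among its labels. The Pod names and labels are hypothetical.

```python
# Minimal sketch of equality-based label selection, the way a Service's
# spec.selector picks its backend Pods. Pod names and labels are hypothetical.
from typing import Dict, List


def matches(selector: Dict[str, str], labels: Dict[str, str]) -> bool:
    """A Pod matches when every key/value pair of the selector is in its labels."""
    return all(labels.get(key) == value for key, value in selector.items())


def select_pods(selector: Dict[str, str], pods: Dict[str, Dict[str, str]]) -> List[str]:
    """Return the sorted names of the Pods the selector matches."""
    if not selector:
        # A Service without a selector gets no automatically managed endpoints.
        return []
    return sorted(name for name, labels in pods.items() if matches(selector, labels))


if __name__ == "__main__":
    pods = {
        "nginx-a": {"app": "nginx-deploy", "tier": "web"},  # extra labels are ignored
        "nginx-b": {"app": "nginx-deploy"},
        "mysql-0": {"name": "mysql-pod"},
    }
    print(select_pods({"app": "nginx-deploy"}, pods))  # ['nginx-a', 'nginx-b']
```

Extra labels on a Pod never prevent a match. This is why a Deployment can add its own bookkeeping labels to Pods without breaking the Service.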

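We analyze, identify, and model every service in the system as a microservice, that is, as a Kubernetes Service. The system then consists of several independent microservice units, each providing a different business capability. The services communicate over TCP/IP and together form a flexible, elastic cluster with strong distribution, scaling, and fault-tolerance capabilities. As a result, the system architecture becomes much simpler and more intuitive.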

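Every Pod is assigned its own IP address and exposes an independent Endpoint (Pod IP + containerPort) to clients, and multiple Pod replicas together form a cluster that provides a service. How, then, should clients reach them? The usual answer is to deploy a load balancer (software or hardware), but that adds operational work.

In a Kubernetes cluster this job is done by a Service. A Service provides a virtual IP address (the Cluster IP) and a port, and any workload in the cluster can reach the Service at Cluster IP + port. Which Pod a request is finally forwarded to is decided by kube-proxy, which runs on every Node. kube-proxy acts as a smart software load balancer: it forwards requests for a Service to one of the backend Pods and implements load balancing and session affinity for that Service. In iptables and IPVS modes it does this by programming kernel rules rather than by proxying each connection itself.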
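The following is a simplified model of the per-Service load balancing described above. It uses round-robin over the endpoints, which matches the `rr` scheduler that appears in the IPVS output later in this post; iptables mode picks a backend at random instead. It also supports optional ClientIP session affinity (`spec.sessionAffinity: ClientIP`). The class is hypothetical. Real kube-proxy installs kernel rules instead of running such code, and real affinity entries expire after a timeout, which is not modeled here.

```python
# Simplified model of per-Service load balancing: round-robin over the
# endpoints, with optional ClientIP session affinity. Real kube-proxy programs
# iptables/IPVS rules instead, and real affinity entries time out.
import itertools
from typing import Dict, List


class ServiceProxy:
    def __init__(self, endpoints: List[str], client_ip_affinity: bool = False) -> None:
        if not endpoints:
            raise ValueError("no ready endpoints to forward to")
        self._rotation = itertools.cycle(endpoints)  # round-robin order
        self._affinity = client_ip_affinity
        self._sticky: Dict[str, str] = {}  # client IP -> chosen endpoint

    def pick(self, client_ip: str) -> str:
        """Choose the backend 'podIP:port' for one new connection from client_ip."""
        if self._affinity and client_ip in self._sticky:
            return self._sticky[client_ip]
        endpoint = next(self._rotation)
        if self._affinity:
            self._sticky[client_ip] = endpoint
        return endpoint


if __name__ == "__main__":
    backends = ["172.17.43.4:80", "172.17.46.3:80", "172.17.46.4:80"]
    rr = ServiceProxy(backends)
    print([rr.pick("10.1.1.1") for _ in range(4)])  # cycles through all three, then repeats
    sticky = ServiceProxy(backends, client_ip_affinity=True)
    print({sticky.pick("10.1.1.1") for _ in range(4)})  # a single endpoint for this client
```

The client address 10.1.1.1 is made up. The backend addresses are the Pod endpoints that appear later in this post.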

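- A Service keeps clients from losing track of Pods: Pods come and go and their IPs change, but the Service's address stays stable.
- A Service defines an access policy for a group of Pods.
- Three commonly used types are supported: ClusterIP, NodePort, and LoadBalancer. There is also ExternalName, which maps a Service to an external DNS name.
- Under the hood, kube-proxy implements Services mainly in one of two network modes: iptables or IPVS.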

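Every Service in Kubernetes has a unique Cluster IP and a name that is unique within its namespace, and we choose that name ourselves. Can we then access a Service by its name?

Originally, Kubernetes used Linux environment variables for this. For each Service it generated a set of environment variables (ENV) and injected them automatically when each Pod's containers started, so that connections could be made by Service name. Reading a Service's IP and port from environment variables was still inconvenient and unintuitive, however. Kubernetes therefore later introduced a DNS system as an add-on, which uses the Service name as a DNS domain name so that programs can connect directly by Service name.

I will cover DNS deployment in a separate post; interested readers can follow my blog. Reference: https://blog.51cto.com/andyxu/2329257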
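For illustration, here is how the two basic environment variables are named. The Service name is upper-cased and dashes become underscores, giving `<NAME>_SERVICE_HOST` and `<NAME>_SERVICE_PORT`. The function is a hypothetical sketch and covers only these two variables. Kubernetes also injects Docker-links-style variables, which are not modeled. Only Services that already exist when a Pod starts are injected into that Pod.

```python
# Sketch of the naming rule for the two basic Service environment variables:
# the Service name is upper-cased and dashes become underscores.
from typing import Dict


def service_env_vars(service_name: str, cluster_ip: str, port: int) -> Dict[str, str]:
    prefix = service_name.upper().replace("-", "_")
    return {
        f"{prefix}_SERVICE_HOST": cluster_ip,   # the Service's Cluster IP
        f"{prefix}_SERVICE_PORT": str(port),    # the Service's port, as a string
    }


if __name__ == "__main__":
    for key, value in service_env_vars("nginx-service-mxxl", "10.0.0.43", 2222).items():
        print(f"{key}={value}")
    # NGINX_SERVICE_MXXL_SERVICE_HOST=10.0.0.43
    # NGINX_SERVICE_MXXL_SERVICE_PORT=2222
```

The Service name, Cluster IP, and port come from the NodePort example later in this post.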

II. How a Service Is Accessed from Outside the Cluster

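A Kubernetes cluster involves three kinds of IP addresses:

- Node IP: the IP address of a Node, i.e. the address of its physical NIC.
- Pod IP: the IP address of a Pod, i.e. the address of its Docker containers. This is a virtual IP.
- Cluster IP: the IP address of a Service. This is also a virtual IP.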

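1. Any access from outside the cluster to a node or a Service in the Kubernetes cluster must go through a Node IP.

2. The Pod IP is a virtual layer-2 network address that Docker Engine assigns from the address range of the docker0 bridge. In this cluster, each node's docker0 range is a separate subnet, e.g. 172.17.43.0/24 on node01. Pods reach one another over this virtual network, while the actual TCP/IP traffic leaves through the physical NIC that carries the Node IP.

3. The Service's Cluster IP has the following properties:

   - It applies only to the Service object. Kubernetes manages and allocates these addresses from the range given by the kube-apiserver flag `--service-cluster-ip-range`.
   - It is a virtual address and usually cannot be pinged. This holds in iptables mode; in IPVS mode kube-proxy binds Cluster IPs to a local dummy interface (kube-ipvs0), so they may answer ping.
   - A Cluster IP becomes a usable endpoint only when combined with a Service port, and only for access from inside the cluster. On its own it has no basis for TCP/IP communication. Making that endpoint reachable from outside requires extra work, such as the NodePort or LoadBalancer types.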

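Communication between Node IPs, Pod IPs, and Cluster IPs follows special routing rules designed by Kubernetes, which differ considerably from ordinary IP routing. Service traffic is translated by the iptables or IPVS rules that kube-proxy installs on each node. Pod-to-Pod traffic across nodes is carried by the cluster network plugin, which is flannel in this cluster.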
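The three kinds of addresses can be told apart simply by the range they fall in, as this minimal Python sketch shows. The Service range 10.0.0.0/24 comes from this cluster's `--service-cluster-ip-range` flag, shown later in this post. The node subnet 10.192.27.0/25 comes from node01's em1 interface. The Pod range 172.17.0.0/16 is an assumption: it covers the per-node docker0 subnets seen here (172.17.43.0/24 and 172.17.46.0/24), but the flannel network configuration itself is not shown.

```python
import ipaddress

SERVICE_CIDR = ipaddress.ip_network("10.0.0.0/24")    # --service-cluster-ip-range
NODE_CIDR = ipaddress.ip_network("10.192.27.0/25")    # node01 em1: 10.192.27.115/25
POD_CIDR = ipaddress.ip_network("172.17.0.0/16")      # assumption: flannel network


def classify(addr: str) -> str:
    """Return 'cluster', 'pod', 'node' or 'unknown' for an IPv4 address."""
    ip = ipaddress.ip_address(addr)
    if ip in SERVICE_CIDR:
        return "cluster"  # virtual Service IP, only meaningful together with a port
    if ip in POD_CIDR:
        return "pod"      # per-Pod address on the overlay network
    if ip in NODE_CIDR:
        return "node"     # physical NIC address, the way in from outside
    return "unknown"


if __name__ == "__main__":
    for a in ["10.0.0.43", "172.17.46.3", "10.192.27.116", "8.8.8.8"]:
        print(a, classify(a))
```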

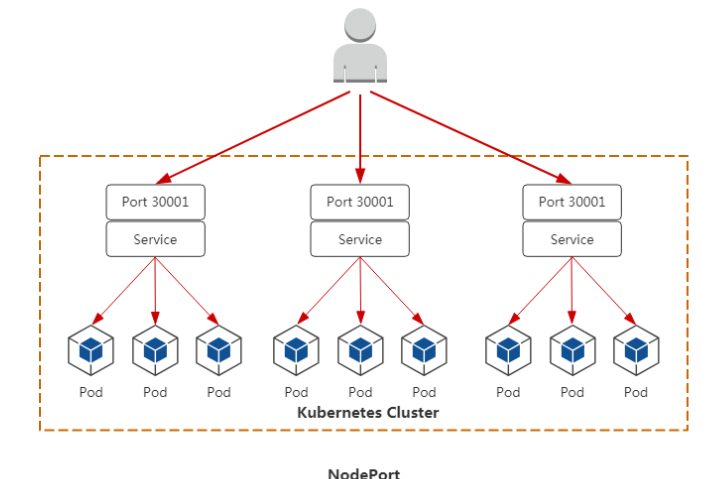

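Service type 1: NodePort

If an application must be reachable from outside the cluster, the most common approach is NodePort: a port is allocated on every Node as the external entry point.

Several commonly used ports: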

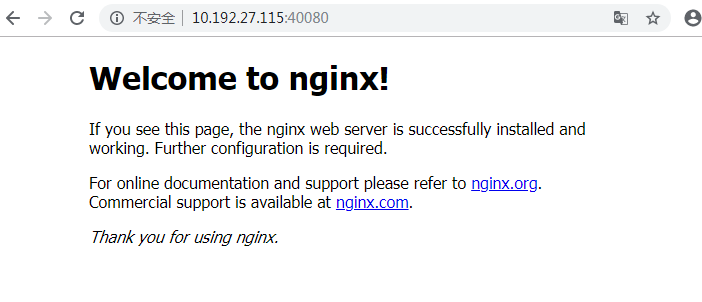

1. nodePort

nodePort is one way for external traffic to reach a Service in the cluster; the other is LoadBalancer. `<node IP>:<nodePort>` is the entry point for traffic coming from outside the cluster. For example, suppose external users need a web application running in the cluster. We can set `type: NodePort` and `nodePort: 30001` on its Service, and users can then open http://node:30001 in a browser. Services such as databases may only need to be reachable from other services inside the cluster, in which case no nodePort is needed.

Requests to the in-cluster entry point `<cluster ip>:port` and to the external entry point `<node ip>:nodePort` are both forwarded to the real Pod addresses behind the Service. In kube-proxy's old userspace mode, this worked by redirecting the request to a random local port opened by kube-proxy, which then proxied the connection. In IPVS mode, which this cluster uses, the kernel's IPVS virtual servers forward the traffic directly to the Pods with masquerading (`Masq`). The `ipvsadm` output on each node shows this:

```
[root@node01 log]# ipvsadm -ln
IP Virtual Server version 1.2.1 (size=4096)
Prot LocalAddress:Port Scheduler Flags
  -> RemoteAddress:Port           Forward Weight ActiveConn InActConn
TCP  10.0.0.1:443 rr
  -> 10.192.27.100:6443           Masq    1      0          0
  -> 10.192.27.114:6443           Masq    1      0          0
TCP  10.0.0.43:2222 rr                   # internal: <cluster ip>:port
  -> 172.17.43.4:80               Masq    1      0          0
  -> 172.17.46.3:80               Masq    1      0          0
  -> 172.17.46.4:80               Masq    1      0          0
TCP  10.192.27.115:40080 rr              # external: <node ip>:nodePort
  -> 172.17.43.4:80               Masq    1      0          0
  -> 172.17.46.3:80               Masq    1      0          0
  -> 172.17.46.4:80               Masq    1      0          0
TCP  127.0.0.1:40080 rr
  -> 172.17.43.4:80               Masq    1      0          0
  -> 172.17.46.3:80               Masq    1      0          0
  -> 172.17.46.4:80               Masq    1      0          0
TCP  172.17.43.0:40080 rr
  -> 172.17.43.4:80               Masq    1      0          0
  -> 172.17.46.3:80               Masq    1      0          0
  -> 172.17.46.4:80               Masq    1      0          0
TCP  172.17.43.1:40080 rr
  -> 172.17.43.4:80               Masq    1      0          0
  -> 172.17.46.3:80               Masq    1      0          0
  -> 172.17.46.4:80               Masq    1      0          0
```

```
[root@node02 cfg]# ipvsadm -ln
IP Virtual Server version 1.2.1 (size=4096)
Prot LocalAddress:Port Scheduler Flags
  -> RemoteAddress:Port           Forward Weight ActiveConn InActConn
TCP  10.0.0.1:443 rr
  -> 10.192.27.100:6443           Masq    1      0          0
  -> 10.192.27.114:6443           Masq    1      0          0
TCP  10.0.0.43:2222 rr                   # internal: <cluster ip>:port
  -> 172.17.43.4:80               Masq    1      0          0   # the app in the Pod listens on this port
  -> 172.17.46.3:80               Masq    1      0          0   # the app in the Pod listens on this port
  -> 172.17.46.4:80               Masq    1      0          0   # the app in the Pod listens on this port
TCP  10.192.27.116:40080 rr              # external: <node ip>:nodePort
  -> 172.17.43.4:80               Masq    1      0          0
  -> 172.17.46.3:80               Masq    1      0          0
  -> 172.17.46.4:80               Masq    1      0          0
TCP  127.0.0.1:40080 rr
  -> 172.17.43.4:80               Masq    1      0          0
  -> 172.17.46.3:80               Masq    1      0          0
  -> 172.17.46.4:80               Masq    1      0          0
TCP  172.17.46.0:40080 rr
  -> 172.17.43.4:80               Masq    1      0          0
  -> 172.17.46.3:80               Masq    1      0          0
  -> 172.17.46.4:80               Masq    1      0          0
TCP  172.17.46.1:40080 rr
  -> 172.17.43.4:80               Masq    1      0          0
  -> 172.17.46.3:80               Masq    1      0          0
  -> 172.17.46.4:80               Masq    1      0          0
```

On each node, nodePort 40080 is served on every local address: the node IP, 127.0.0.1, and the flannel.1 and docker0 addresses. The in-cluster entry 10.0.0.43:2222 is identical on both nodes.

2. port

port is the entry point that other services inside the cluster use to reach the Service: `<cluster ip>:port` is the port the Service exposes on its Cluster IP. For example, a mysql container exposes port 3306 (see its Dockerfile). Other containers in the cluster reach MySQL through port 33306 on the Service. External traffic cannot reach it, because the Service has no nodePort. The corresponding service.yaml:

```yaml
apiVersion: v1
kind: Service
metadata:
  name: mysql-service
spec:
  ports:
  - port: 33306
    targetPort: 3306
  selector:
    name: mysql-pod
```

3. targetPort

targetPort is the port on the Pod to which the Service forwards traffic. It is the port on which the service inside the container actually listens, i.e. the final destination port of the traffic. Traffic arriving on port or nodePort is forwarded via kube-proxy to the targetPort of a backend Pod and from there reaches the container. targetPort is not derived from containerPort; a numeric targetPort is used as-is.

Requirement: the application in the Pod's image must actually listen on that port.

- Example 1: a Tomcat service runs in the cluster and the Tomcat container exposes port 8080, so `targetPort: 8080`.
- Example 2: use the port exposed when the image was built (`EXPOSE` in the Dockerfile). The official nginx image, for instance, exposes port 80.

The corresponding manifest (deploy-nginx.yaml) follows. Here containerPort is set to 1111, although nginx itself still listens on its default port 80:

```
[root@master01 service]# cat deploy-nginx.yaml
apiVersion: apps/v1
kind: Deployment
metadata:
  name: nginx-deployment
  namespace: default
  labels:
    app: nginx
spec:
  replicas: 3
  selector:
    matchLabels:
      app: nginx-deploy
  template:
    metadata:
      labels:
        app: nginx-deploy
    spec:
      containers:
      - name: nginx
        image: 10.192.27.111/library/nginx:1.14
        imagePullPolicy: IfNotPresent
        ports:
        - containerPort: 1111   # informational only; nginx actually listens on 80 (its default)
---
apiVersion: v1
kind: Service
metadata:
  name: nginx-service-mxxl
spec:
  type: NodePort
  ports:
  - port: 2222          # port for access from other services inside the cluster
    protocol: TCP
    targetPort: 80      # Pod port that port/nodePort traffic is sent to; must match the port nginx listens on (80), not containerPort (1111)
    nodePort: 40080     # port for access from outside the cluster
  selector:
    app: nginx-deploy
[root@master01 service]# kubectl get ep
NAME                 ENDPOINTS                                      AGE
kubernetes           10.192.27.100:6443,10.192.27.114:6443          15d
nginx-service-mxxl   172.17.43.4:80,172.17.46.3:80,172.17.46.4:80   33m   # targetPort: 80
[root@master01 service]# kubectl get svc
NAME                 TYPE        CLUSTER-IP   EXTERNAL-IP   PORT(S)          AGE
kubernetes           ClusterIP   10.0.0.1     <none>        443/TCP          15d
nginx-service-mxxl   NodePort    10.0.0.43    <none>        2222:40080/TCP   34m   # <cluster ip>:port = 10.0.0.43:2222, nodePort = 40080
```

The endpoints use port 80 (the targetPort), not 1111. containerPort is informational, and traffic reaches nginx because targetPort matches the port nginx actually listens on.

4. Summary

port and nodePort are both ports of the Service. port is exposed to services inside the cluster, and nodePort to traffic from outside the cluster. Traffic arriving on either one passes through kube-proxy's forwarding rules to the targetPort of a backend Pod and finally reaches the container in that Pod.
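The `ipvsadm` tables above follow a simple pattern, which this minimal sketch rebuilds from the Service fields: one virtual server for `<cluster ip>:port`, plus one virtual server per local address for nodePort, all pointing at `podIP:targetPort`. The function name is hypothetical and the model is simplified. Real kube-proxy decides which local addresses get NodePort entries based on its configuration, for example the `--nodeport-addresses` flag.

```python
from typing import Dict, List


def ipvs_virtual_servers(cluster_ip: str, port: int, node_port: int,
                         local_addrs: List[str], pod_ips: List[str],
                         target_port: int) -> Dict[str, List[str]]:
    """Map each virtual address 'ip:port' to its real servers 'podIP:targetPort'."""
    real_servers = [f"{ip}:{target_port}" for ip in pod_ips]
    # In-cluster entry point: <cluster ip>:port
    table = {f"{cluster_ip}:{port}": list(real_servers)}
    # External entry points: <local address>:nodePort, one per local address
    for addr in local_addrs:
        table[f"{addr}:{node_port}"] = list(real_servers)
    return table


if __name__ == "__main__":
    node01 = ipvs_virtual_servers(
        cluster_ip="10.0.0.43", port=2222, node_port=40080,
        local_addrs=["10.192.27.115", "127.0.0.1", "172.17.43.0", "172.17.43.1"],
        pod_ips=["172.17.43.4", "172.17.46.3", "172.17.46.4"], target_port=80,
    )
    for vip, reals in node01.items():
        print(f"TCP  {vip} rr -> {', '.join(reals)}")
```

With node01's values, the sketch produces the five nginx-service-mxxl entries listed for node01 above. The `kubernetes` Service entry on 10.0.0.1:443 is a separate Service and is not included.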

Below is the same manifest with containerPort set to 80, the port nginx actually listens on, and with comments on each field:

```
[root@master01 service]# cat deploy-nginx.yaml
apiVersion: apps/v1
kind: Deployment
metadata:
  name: nginx-deployment
  namespace: default
  labels:
    app: nginx
spec:
  replicas: 3
  selector:             # the Deployment manages the group of Pods labeled app: nginx-deploy
    matchLabels:
      app: nginx-deploy
  template:
    metadata:
      labels:
        app: nginx-deploy
    spec:
      containers:
      - name: nginx
        image: 10.192.27.111/library/nginx:1.14
        imagePullPolicy: IfNotPresent
        ports:
        - containerPort: 80   # per the official docs this is informational and may be omitted; with Deployment and Service written separately it documents the port the container serves on, so if set it should match that port
---
apiVersion: v1
kind: Service
metadata:
  name: nginx-service-mxxl
spec:
  type: NodePort
  ports:
  - port: 2222          # the Service's port
    protocol: TCP
    targetPort: 80      # the port the container's service listens on
    nodePort: 40080     # fixed port for access from outside the cluster; assigned at random from the allowed range if omitted
  selector:             # the Service selects the group of Pods labeled app: nginx-deploy
    app: nginx-deploy
```
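containerPort still matters in one case. When a Service's targetPort is a string rather than a number, it names one of the container's ports (`ports[].name`), and that port's containerPort is used. The minimal sketch below shows that lookup. It assumes the container's ports are given as plain dicts, and the function is hypothetical. In a real cluster, a Pod whose container has no port with that name is left out of the endpoints rather than causing an error.

```python
from typing import Dict, List, Union


def resolve_target_port(target_port: Union[int, str], container_ports: List[Dict]) -> int:
    """Return the Pod port that Service traffic is sent to."""
    if isinstance(target_port, int):
        # A numeric targetPort is used as-is; containerPort is not consulted.
        return target_port
    # A named targetPort is looked up among the container's named ports.
    for entry in container_ports:
        if entry.get("name") == target_port:
            return entry["containerPort"]
    raise LookupError(f"no container port named {target_port!r}")


if __name__ == "__main__":
    print(resolve_target_port(80, [{"containerPort": 1111}]))                  # 80
    print(resolve_target_port("http", [{"name": "http", "containerPort": 80}]))  # 80
```

The first call mirrors the containerPort 1111 manifest: the numeric targetPort 80 wins regardless of containerPort.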

External access address: 10.192.27.115:40080 or 10.192.27.116:40080, i.e. `<node IP>:<nodePort>`.

The allowed nodePort range is set by the kube-apiserver flag `--service-node-port-range`. Here it is 30000-50000, which is why 40080 is accepted; the Kubernetes default is 30000-32767.

```
[root@master01 yaml_doc]# cat /opt/kubernetes/cfg/kube-apiserver
KUBE_APISERVER_OPTS="--logtostderr=true \
--v=4 \
--etcd-servers=https://10.192.27.100:2379,https://10.192.27.115:2379,https://10.192.27.116:2379 \
--bind-address=10.192.27.100 \
--secure-port=6443 \
--advertise-address=10.192.27.100 \
--allow-privileged=true \
--service-cluster-ip-range=10.0.0.0/24 \
--enable-admission-plugins=NamespaceLifecycle,LimitRanger,ServiceAccount,ResourceQuota,NodeRestriction \
--authorization-mode=RBAC,Node \
--kubelet-https=true \
--enable-bootstrap-token-auth \
--token-auth-file=/opt/kubernetes/cfg/token.csv \
--service-node-port-range=30000-50000 \
--tls-cert-file=/opt/kubernetes/ssl/server.pem \
--tls-private-key-file=/opt/kubernetes/ssl/server-key.pem \
--client-ca-file=/opt/kubernetes/ssl/ca.pem \
--service-account-key-file=/opt/kubernetes/ssl/ca-key.pem \
--etcd-cafile=/opt/etcd/ssl/ca.pem \
--etcd-certfile=/opt/etcd/ssl/server.pem \
--etcd-keyfile=/opt/etcd/ssl/server-key.pem"
```
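A minimal sketch for checking whether a nodePort falls inside the configured range. It reads the flag from a kube-apiserver options string and falls back to the default range when the flag is absent. The function names are hypothetical, and only the `LOW-HIGH` form of the flag value is handled.

```python
import re
from typing import Tuple

DEFAULT_NODE_PORT_RANGE = (30000, 32767)  # used when the flag is not set


def parse_node_port_range(apiserver_opts: str) -> Tuple[int, int]:
    """Extract LOW-HIGH from --service-node-port-range in kube-apiserver options."""
    m = re.search(r"--service-node-port-range=(\d+)-(\d+)", apiserver_opts)
    if m is None:
        return DEFAULT_NODE_PORT_RANGE
    return int(m.group(1)), int(m.group(2))


def node_port_allowed(node_port: int, port_range: Tuple[int, int]) -> bool:
    low, high = port_range
    return low <= node_port <= high


if __name__ == "__main__":
    opts = "--secure-port=6443 --service-node-port-range=30000-50000 --v=4"
    print(node_port_allowed(40080, parse_node_port_range(opts)))     # True
    print(node_port_allowed(40080, parse_node_port_range("--v=4")))  # False
```

The second call shows why this cluster widens the range: with the default 30000-32767, `nodePort: 40080` would be rejected.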

The network interfaces on node01 are listed below. The addresses of em1, lo, flannel.1, and docker0 are the local addresses on which IPVS serves nodePort 40080.

```
[root@node01 log]# ifconfig
docker0: flags=4163<UP,BROADCAST,RUNNING,MULTICAST>  mtu 1450
        inet 172.17.43.1  netmask 255.255.255.0  broadcast 172.17.43.255
        inet6 fe80::42:96ff:fea2:41c6  prefixlen 64  scopeid 0x20<link>
        ether 02:42:96:a2:41:c6  txqueuelen 0  (Ethernet)
        RX packets 308660  bytes 81037315 (77.2 MiB)
        RX errors 0  dropped 0  overruns 0  frame 0
        TX packets 368169  bytes 43114732 (41.1 MiB)
        TX errors 0  dropped 0 overruns 0  carrier 0  collisions 0

em1: flags=4163<UP,BROADCAST,RUNNING,MULTICAST>  mtu 1500
        inet 10.192.27.115  netmask 255.255.255.128  broadcast 10.192.27.127
        inet6 fe80::444d:ef36:fd70:9a89  prefixlen 64  scopeid 0x20<link>
        ether 80:18:44:e6:eb:dc  txqueuelen 1000  (Ethernet)
        RX packets 203416750  bytes 32345922741 (30.1 GiB)
        RX errors 0  dropped 0  overruns 0  frame 0
        TX packets 191446735  bytes 30608969568 (28.5 GiB)
        TX errors 0  dropped 0 overruns 0  carrier 0  collisions 0
        device interrupt 81

em2: flags=4099<UP,BROADCAST,MULTICAST>  mtu 1500
        ether 80:18:44:e6:eb:dd  txqueuelen 1000  (Ethernet)
        RX packets 0  bytes 0 (0.0 B)
        RX errors 0  dropped 0  overruns 0  frame 0
        TX packets 0  bytes 0 (0.0 B)
        TX errors 0  dropped 0 overruns 0  carrier 0  collisions 0
        device interrupt 82

em3: flags=4099<UP,BROADCAST,MULTICAST>  mtu 1500
        ether 80:18:44:e6:eb:de  txqueuelen 1000  (Ethernet)
        RX packets 0  bytes 0 (0.0 B)
        RX errors 0  dropped 0  overruns 0  frame 0
        TX packets 0  bytes 0 (0.0 B)
        TX errors 0  dropped 0 overruns 0  carrier 0  collisions 0
        device interrupt 83

em4: flags=4099<UP,BROADCAST,MULTICAST>  mtu 1500
        ether 80:18:44:e6:eb:df  txqueuelen 1000  (Ethernet)
        RX packets 0  bytes 0 (0.0 B)
        RX errors 0  dropped 0  overruns 0  frame 0
        TX packets 0  bytes 0 (0.0 B)
        TX errors 0  dropped 0 overruns 0  carrier 0  collisions 0
        device interrupt 86

flannel.1: flags=4163<UP,BROADCAST,RUNNING,MULTICAST>  mtu 1450
        inet 172.17.43.0  netmask 255.255.255.255  broadcast 0.0.0.0
        inet6 fe80::342a:5aff:feb1:ec27  prefixlen 64  scopeid 0x20<link>
        ether 36:2a:5a:b1:ec:27  txqueuelen 0  (Ethernet)
        RX packets 3822  bytes 1779140 (1.6 MiB)
        RX errors 0  dropped 0  overruns 0  frame 0
        TX packets 2941  bytes 2064568 (1.9 MiB)
        TX errors 0  dropped 8 overruns 0  carrier 0  collisions 0

lo: flags=73<UP,LOOPBACK,RUNNING>  mtu 65536
        inet 127.0.0.1  netmask 255.0.0.0
        inet6 ::1  prefixlen 128  scopeid 0x10<host>
        loop  txqueuelen 1000  (Local Loopback)
        RX packets 34111221  bytes 7923602927 (7.3 GiB)
        RX errors 0  dropped 0  overruns 0  frame 0
        TX packets 34111221  bytes 7923602927 (7.3 GiB)
        TX errors 0  dropped 0 overruns 0  carrier 0  collisions 0

veth9f782bf: flags=4163<UP,BROADCAST,RUNNING,MULTICAST>  mtu 1450
        inet6 fe80::640c:19ff:fe71:929e  prefixlen 64  scopeid 0x20<link>
        ether 66:0c:19:71:92:9e  txqueuelen 0  (Ethernet)
        RX packets 0  bytes 0 (0.0 B)
        RX errors 0  dropped 0  overruns 0  frame 0
        TX packets 70  bytes 3932 (3.8 KiB)
        TX errors 0  dropped 0 overruns 0  carrier 0  collisions 0

vethaa0032b: flags=4163<UP,BROADCAST,RUNNING,MULTICAST>  mtu 1450
        inet6 fe80::8c97:baff:fe92:b991  prefixlen 64  scopeid 0x20<link>
        ether 8e:97:ba:92:b9:91  txqueuelen 0  (Ethernet)
        RX packets 0  bytes 0 (0.0 B)
        RX errors 0  dropped 0  overruns 0  frame 0
        TX packets 10  bytes 764 (764.0 B)
        TX errors 0  dropped 0 overruns 0  carrier 0  collisions 0

vethcefa9bb: flags=4163<UP,BROADCAST,RUNNING,MULTICAST>  mtu 1450
        inet6 fe80::b414:71ff:fe8e:3599  prefixlen 64  scopeid 0x20<link>
        ether b6:14:71:8e:35:99  txqueuelen 0  (Ethernet)
        RX packets 4  bytes 204 (204.0 B)
        RX errors 0  dropped 0  overruns 0  frame 0
        TX packets 14  bytes 968 (968.0 B)
        TX errors 0  dropped 0 overruns 0  carrier 0  collisions 0

vethd952b63: flags=4163<UP,BROADCAST,RUNNING,MULTICAST>  mtu 1450
        inet6 fe80::d8a3:b8ff:fea4:482c  prefixlen 64  scopeid 0x20<link>
        ether da:a3:b8:a4:48:2c  txqueuelen 0  (Ethernet)
        RX packets 0  bytes 0 (0.0 B)
        RX errors 0  dropped 0  overruns 0  frame 0
        TX packets 70  bytes 3932 (3.8 KiB)
        TX errors 0  dropped 0 overruns 0  carrier 0  collisions 0
```

Access log of a backend Pod after access through the NodePort. The client address is 172.17.43.0, node01's flannel.1 address, rather than the browser's own IP. This is because the NodePort traffic is masqueraded (SNAT) before it is forwarded to this Pod, which runs on the other node.

```
[root@master01 service]# kubectl logs pod/nginx-deployment-765b6d95f9-hzjwc
172.17.43.0 - - [27/Nov/2019:06:11:05 +0000] "GET / HTTP/1.1" 200 612 "-" "Mozilla/5.0 (Windows NT 6.1; Win64; x64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/78.0.3904.70 Safari/537.36" "-"
172.17.43.0 - - [27/Nov/2019:06:11:06 +0000] "GET /favicon.ico HTTP/1.1" 404 556 "http://10.192.27.115:40080/" "Mozilla/5.0 (Windows NT 6.1; Win64; x64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/78.0.3904.70 Safari/537.36" "-"
2019/11/27 06:11:06 [error] 6#6: *1 open() "/usr/share/nginx/html/favicon.ico" failed (2: No such file or directory), client: 172.17.43.0, server: localhost, request: "GET /favicon.ico HTTP/1.1", host: "10.192.27.115:40080", referrer: "http://10.192.27.115:40080/"
172.17.43.0 - - [27/Nov/2019:06:11:09 +0000] "GET / HTTP/1.1" 304 0 "-" "Mozilla/5.0 (Windows NT 6.1; Win64; x64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/78.0.3904.70 Safari/537.36" "-"
172.17.43.0 - - [27/Nov/2019:06:31:55 +0000] "GET / HTTP/1.1" 200 612 "-" "curl/7.29.0" "-"
172.17.43.0 - - [27/Nov/2019:06:34:24 +0000] "GET / HTTP/1.1" 304 0 "-" "Mozilla/5.0 (Windows NT 6.1; Win64; x64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/78.0.3904.70 Safari/537.36" "-"
172.17.43.0 - - [27/Nov/2019:06:34:26 +0000] "GET / HTTP/1.1" 304 0 "-" "Mozilla/5.0 (Windows NT 6.1; Win64; x64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/78.0.3904.70 Safari/537.36" "-"
172.17.43.0 - - [27/Nov/2019:06:35:42 +0000] "GET / HTTP/1.1" 200 612 "-" "curl/7.29.0" "-"
```

Internal access address: 10.0.0.181:2222, i.e. `<Cluster IP>:<port>`. These outputs come from a later run than the earlier ones, so the Service's Cluster IP is now 10.0.0.181 rather than 10.0.0.43.

```
[root@node01 log]# curl 10.0.0.181:2222
<!DOCTYPE html>
<html>
<head>
<title>Welcome to nginx!</title>
<style>
    body {
        width: 35em;
        margin: 0 auto;
        font-family: Tahoma, Verdana, Arial, sans-serif;
    }
</style>
</head>
<body>
<h1>Welcome to nginx!</h1>
<p>If you see this page, the nginx web server is successfully installed and
working. Further configuration is required.</p>

<p>For online documentation and support please refer to
<a href="http://nginx.org/">nginx.org</a>.<br/>
Commercial support is available at
<a href="http://nginx.com/">nginx.com</a>.</p>

<p><em>Thank you for using nginx.</em></p>
</body>
</html>
```

Access log:

```
[root@master01 service]# kubectl logs pod/nginx-deployment-765b6d95f9-7t8ht
10.0.0.181 - - [27/Nov/2019:06:33:33 +0000] "GET / HTTP/1.1" 200 612 "-" "curl/7.29.0" "-"
```

```
[root@master01 service]# kubectl get pods
NAME                                READY   STATUS    RESTARTS   AGE
nginx-deployment-765b6d95f9-7t8ht   1/1     Running   0          33m
nginx-deployment-765b6d95f9-d9bzc   1/1     Running   0          33m
nginx-deployment-765b6d95f9-hzjwc   1/1     Running   0          33m
```

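NodePort works by opening a TCP listening port on every Node in the cluster for each Service that needs external access. An external system can then reach the Service through the IP address of any Node plus that NodePort.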

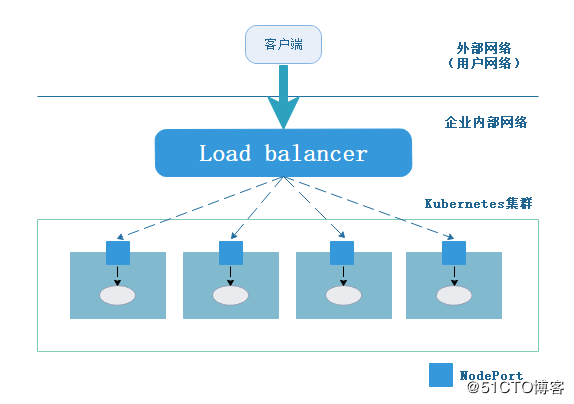

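NodePort does not solve every problem of external access to a Service. Load balancing is one example: clients still have to pick a Node IP themselves, and if that Node goes down they lose access. A common approach is to deploy a load balancer outside the Kubernetes cluster.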
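As an illustration of that external load balancer, the minimal sketch below renders an HAProxy `backend` section that spreads traffic across all Nodes at `<node IP>:<nodePort>`. HAProxy is only one possible choice, and the backend name is hypothetical. The `global`, `defaults`, and `frontend` sections a working configuration also needs are not shown.

```python
from typing import List


def haproxy_backend(name: str, node_ips: List[str], node_port: int) -> str:
    """Render an HAProxy backend section that spreads traffic over all nodes."""
    lines = [f"backend {name}", "    balance roundrobin"]
    for i, ip in enumerate(node_ips, start=1):
        # 'check' enables health checks, so a failed node is taken out of rotation.
        lines.append(f"    server node{i} {ip}:{node_port} check")
    return "\n".join(lines)


if __name__ == "__main__":
    print(haproxy_backend("nginx_nodeport", ["10.192.27.115", "10.192.27.116"], 40080))
```

The health checks address the weakness described above: if one Node goes down, HAProxy stops sending traffic to it.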

Service type 2: ClusterIP (the default). It allocates a virtual IP (VIP) that is reachable only from inside the cluster.

```
[root@master01 service]# cat service.yaml
apiVersion: v1
kind: Service
metadata:
  name: my-service
spec:
  clusterIP: 10.0.0.123   # explicitly chosen Cluster IP
  selector:
    app: nginx-deploy     # selects a group of Pods by label
  ports:
  - name: http
    port: 3333            # the Service's port
    protocol: TCP
    targetPort: 80        # the container's port
```
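A fixed `clusterIP` like 10.0.0.123 must lie inside the `--service-cluster-ip-range` (10.0.0.0/24 in this cluster) and must not already be in use. The minimal sketch below approximates that check in a simplified form; it is not the apiserver's actual allocator. Rejecting the network and broadcast addresses is part of the approximation.

```python
import ipaddress
from typing import Set


def check_cluster_ip(requested: str, service_cidr: str, allocated: Set[str]) -> None:
    """Raise ValueError if a requested static clusterIP cannot be used."""
    ip = ipaddress.ip_address(requested)
    net = ipaddress.ip_network(service_cidr)
    if ip not in net:
        raise ValueError(f"{requested} is not in the service range {service_cidr}")
    if ip in (net.network_address, net.broadcast_address):
        raise ValueError(f"{requested} is the network or broadcast address of {service_cidr}")
    if requested in allocated:
        raise ValueError(f"{requested} is already allocated")


if __name__ == "__main__":
    in_use = {"10.0.0.1", "10.0.0.181"}  # Cluster IPs seen in the kubectl get all output
    check_cluster_ip("10.0.0.123", "10.0.0.0/24", in_use)  # accepted, no exception
    for bad in ["10.0.1.5", "10.0.0.1"]:
        try:
            check_cluster_ip(bad, "10.0.0.0/24", in_use)
        except ValueError as err:
            print("rejected:", err)
```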

```
[root@master01 service]# kubectl apply -f service.yaml
service/my-service configured
[root@master01 service]# kubectl get all
NAME                                    READY   STATUS    RESTARTS   AGE
pod/nginx-deployment-765b6d95f9-7t8ht   1/1     Running   0          48m
pod/nginx-deployment-765b6d95f9-d9bzc   1/1     Running   0          48m
pod/nginx-deployment-765b6d95f9-hzjwc   1/1     Running   0          48m

NAME                         TYPE        CLUSTER-IP   EXTERNAL-IP   PORT(S)          AGE
service/kubernetes           ClusterIP   10.0.0.1     <none>        443/TCP          16d
service/my-service           ClusterIP   10.0.0.123   <none>        3333/TCP         2m27s
service/nginx-service-mxxl   NodePort    10.0.0.181   <none>        2222:40080/TCP   48m

NAME                               READY   UP-TO-DATE   AVAILABLE   AGE
deployment.apps/nginx-deployment   3/3     3            3           48m

NAME                                          DESIRED   CURRENT   READY   AGE
replicaset.apps/nginx-deployment-765b6d95f9   3         3         3       48m
[root@master01 service]# kubectl get ep
NAME                 ENDPOINTS                                      AGE
kubernetes           10.192.27.100:6443,10.192.27.114:6443          16d
my-service           172.17.43.4:80,172.17.43.5:80,172.17.46.3:80   2m32s
nginx-service-mxxl   172.17.43.4:80,172.17.43.5:80,172.17.46.3:80   48m
```

Accessing it from a node:

```
[root@node01 log]# curl 10.0.0.123:3333
<!DOCTYPE html>
<html>
<head>
<title>Welcome to nginx!</title>
<style>
    body {
        width: 35em;
        margin: 0 auto;
        font-family: Tahoma, Verdana, Arial, sans-serif;
    }
</style>
</head>
<body>
<h1>Welcome to nginx!</h1>
<p>If you see this page, the nginx web server is successfully installed and
working. Further configuration is required.</p>

<p>For online documentation and support please refer to
<a href="http://nginx.org/">nginx.org</a>.<br/>
Commercial support is available at
<a href="http://nginx.com/">nginx.com</a>.</p>

<p><em>Thank you for using nginx.</em></p>
</body>
</html>
```

```
[root@master01 service]# kubectl get pod -l app=nginx-deploy          # list the Pods labeled app=nginx-deploy
NAME                                READY   STATUS    RESTARTS   AGE
nginx-deployment-765b6d95f9-7t8ht   1/1     Running   0          53m
nginx-deployment-765b6d95f9-d9bzc   1/1     Running   0          53m
nginx-deployment-765b6d95f9-hzjwc   1/1     Running   0          53m
[root@master01 service]# kubectl get pod -l app=nginx-deploy -o wide  # also show each Pod's IP and its host Node
NAME                                READY   STATUS    RESTARTS   AGE   IP            NODE            NOMINATED NODE   READINESS GATES
nginx-deployment-765b6d95f9-7t8ht   1/1     Running   0          54m   172.17.43.4   10.192.27.115   <none>           <none>
nginx-deployment-765b6d95f9-d9bzc   1/1     Running   0          54m   172.17.43.5   10.192.27.115   <none>           <none>
nginx-deployment-765b6d95f9-hzjwc   1/1     Running   0          54m   172.17.46.3   10.192.27.116   <none>           <none>
[root@master01 service]# kubectl describe svc my-service
Name:              my-service
Namespace:         default
Labels:            <none>
Annotations:       kubectl.kubernetes.io/last-applied-configuration:
                     {"apiVersion":"v1","kind":"Service","metadata":{"annotations":{},"name":"my-service","namespace":"default"},"spec":{"clusterIP":"10.0.0.12...
Selector:          app=nginx-deploy
Type:              ClusterIP
IP:                10.0.0.123
Port:              http  3333/TCP
TargetPort:        80/TCP
Endpoints:         172.17.43.4:80,172.17.43.5:80,172.17.46.3:80
Session Affinity:  None
Events:            <none>
```

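Service type 3: LoadBalancer. It works on specific cloud providers, such as Google Cloud, AWS, and OpenStack.

The load balancer component sits outside the Kubernetes cluster. It can be a hardware load balancer or a software one such as HAProxy or Nginx. Running it yourself clearly adds operational work and more room for error.

Kubernetes therefore provides an automated solution. On Google's GCE public cloud, you only need to change `type: NodePort` to `type: LoadBalancer`. Kubernetes then automatically creates a matching load balancer instance and returns its IP address for external clients to use. Other public cloud providers can achieve the same, provided they implement a driver that supports this feature.

Reference: https://blog.51cto.com/andyxu/2329257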

Service DNS names can be checked from a temporary busybox Pod. The full form of a Service name is `<service>.<namespace>.svc.cluster.local`. The first lookup fails because it leaves out the namespace.

```
[root@master01 yaml_doc]# kubectl run -it --image=10.192.27.111/library/busybox:1.28.4 --rm --restart=Never sh
If you don't see a command prompt, try pressing enter.
/ # nslookup kube-dns.svc.cluster.local
Server:    10.0.0.2
Address 1: 10.0.0.2 kube-dns.kube-system.svc.cluster.local

nslookup: can't resolve 'kube-dns.svc.cluster.local'
/ # nslookup kube-dns.kube-system.svc.cluster.local
Server:    10.0.0.2
Address 1: 10.0.0.2 kube-dns.kube-system.svc.cluster.local

Name:      kube-dns.kube-system.svc.cluster.local
Address 1: 10.0.0.2 kube-dns.kube-system.svc.cluster.local
/ # nslookup kubernetes-dashboard.kube-system.svc.cluster.local
Server:    10.0.0.2
Address 1: 10.0.0.2 kube-dns.kube-system.svc.cluster.local

Name:      kubernetes-dashboard.kube-system.svc.cluster.local
Address 1: 10.0.0.153 kubernetes-dashboard.kube-system.svc.cluster.local
/ # exit
pod "sh" deleted
```
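The naming rule behind these lookups is shown in this minimal sketch, which builds a Service FQDN and splits one back into service and namespace. `cluster.local` is assumed as the cluster domain because it matches the names resolved above. The resolver search list, which lets Pods use shorter names such as `kube-dns.kube-system`, is not modeled.

```python
from typing import Optional, Tuple


def service_fqdn(service: str, namespace: str = "default",
                 cluster_domain: str = "cluster.local") -> str:
    """Build <service>.<namespace>.svc.<cluster domain>."""
    return f"{service}.{namespace}.svc.{cluster_domain}"


def split_service_fqdn(name: str, cluster_domain: str = "cluster.local") -> Optional[Tuple[str, str]]:
    """Return (service, namespace) if name has the full Service form, else None."""
    suffix = f".svc.{cluster_domain}"
    if not name.endswith(suffix):
        return None
    parts = name[: -len(suffix)].split(".")
    if len(parts) != 2 or not all(parts):
        return None  # e.g. the namespace label is missing
    return parts[0], parts[1]


if __name__ == "__main__":
    print(service_fqdn("kubernetes-dashboard", "kube-system"))
    print(split_service_fqdn("kube-dns.kube-system.svc.cluster.local"))  # ('kube-dns', 'kube-system')
    print(split_service_fqdn("kube-dns.svc.cluster.local"))              # None
```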

