K8S Single-Master Node Deployment

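As the most disruptive container orchestration technology of recent years, Kubernetes is widely used in enterprise production environments. Compared with the docker-swarm orchestration approach of earlier years, Kubernetes manages containers from a higher level of abstraction, which keeps projects portable and makes the architecture easier to extend later.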

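Production environments place more weight on cluster high availability. Unlike the single master node of a test environment, a production cluster should have at least two master nodes and two worker nodes, so that when one master goes down, the kubelet on each node can still reach the apiserver and the other components on the remaining master and keep running.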

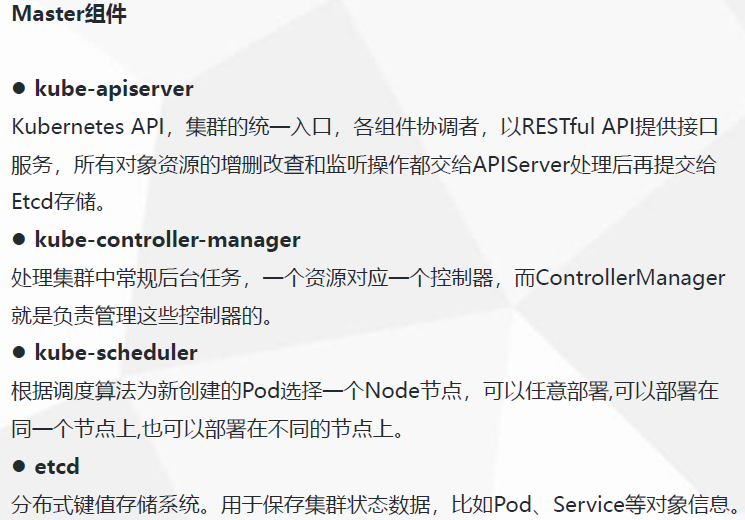

Deploy the Master components on the Master node 10.192.27.100:

1.kube-apiserver

2.kube-controller-manager

3.kube-scheduler

The workflow for each component is: write the config file -> manage the component with systemd -> start it.
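The three-step pattern above can be sketched in isolation. This is only an illustration: `write_component` is a hypothetical helper, and it writes into a scratch directory rather than `/opt/kubernetes/cfg` and `/usr/lib/systemd/system`, so it can be run without root and without touching systemd.

```shell
#!/bin/bash
# Sketch of "config file -> systemd unit -> start".
# write_component is a hypothetical helper, not part of the original scripts.
write_component() {
  local name=$1 opts=$2 outdir=$3
  mkdir -p "$outdir"
  # 1. Options file that systemd loads through EnvironmentFile=
  printf 'OPTS="%s"\n' "$opts" > "$outdir/$name"
  # 2. Unit file that passes the options to the binary
  cat > "$outdir/$name.service" <<EOF
[Unit]
Description=$name

[Service]
EnvironmentFile=-$outdir/$name
ExecStart=/opt/kubernetes/bin/$name \$OPTS
Restart=on-failure

[Install]
WantedBy=multi-user.target
EOF
  # 3. On a real host the last step would be:
  #    systemctl daemon-reload && systemctl enable "$name" && systemctl restart "$name"
}

demo_dir=$(mktemp -d)
write_component kube-scheduler "--v=4 --leader-elect" "$demo_dir"
cat "$demo_dir/kube-scheduler.service"
```

The component scripts later in this post follow the same shape, with the real paths and one options variable per component.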

I. Installing the kube-apiserver component

1. Generate certificates for kube-apiserver

To make certificate generation easier, I wrote a script, k8s-cert.sh:

    [root@master01 k8s]# cat k8s-cert/k8s-cert.sh
    cat > ca-config.json <<EOF
    {
      "signing": {
        "default": { "expiry": "87600h" },
        "profiles": {
          "kubernetes": {
            "expiry": "87600h",
            "usages": [ "signing", "key encipherment", "server auth", "client auth" ]
          }
        }
      }
    }
    EOF

    cat > ca-csr.json <<EOF
    {
      "CN": "kubernetes",
      "key": { "algo": "rsa", "size": 2048 },
      "names": [
        { "C": "CN", "L": "Beijing", "ST": "Beijing", "O": "k8s", "OU": "System" }
      ]
    }
    EOF

    cfssl gencert -initca ca-csr.json | cfssljson -bare ca -

    #-----------------------

    cat > server-csr.json <<EOF
    {
      "CN": "kubernetes",
      "hosts": [
        "10.0.0.1",
        "127.0.0.1",
        "10.192.27.100",
        "10.192.27.114",
        "10.192.27.111",
        "10.192.27.112",
        "10.192.27.117",
        "kubernetes",
        "kubernetes.default",
        "kubernetes.default.svc",
        "kubernetes.default.svc.cluster",
        "kubernetes.default.svc.cluster.local"
      ],
      "key": { "algo": "rsa", "size": 2048 },
      "names": [
        { "C": "CN", "L": "BeiJing", "ST": "BeiJing", "O": "k8s", "OU": "System" }
      ]
    }
    EOF

    cfssl gencert -ca=ca.pem -ca-key=ca-key.pem -config=ca-config.json -profile=kubernetes server-csr.json | cfssljson -bare server

    #-----------------------

    cat > admin-csr.json <<EOF
    {
      "CN": "admin",
      "hosts": [],
      "key": { "algo": "rsa", "size": 2048 },
      "names": [
        { "C": "CN", "L": "BeiJing", "ST": "BeiJing", "O": "system:masters", "OU": "System" }
      ]
    }
    EOF

    cfssl gencert -ca=ca.pem -ca-key=ca-key.pem -config=ca-config.json -profile=kubernetes admin-csr.json | cfssljson -bare admin

    #-----------------------

    cat > kube-proxy-csr.json <<EOF
    {
      "CN": "system:kube-proxy",
      "hosts": [],
      "key": { "algo": "rsa", "size": 2048 },
      "names": [
        { "C": "CN", "L": "BeiJing", "ST": "BeiJing", "O": "k8s", "OU": "System" }
      ]
    }
    EOF

    cfssl gencert -ca=ca.pem -ca-key=ca-key.pem -config=ca-config.json -profile=kubernetes kube-proxy-csr.json | cfssljson -bare kube-proxy
    [root@master01 k8s]#

Step 1: Create the CA

    # CA signing configuration
    cat > ca-config.json <<EOF
    {
      "signing": {
        "default": { "expiry": "87600h" },
        "profiles": {
          "kubernetes": {
            "expiry": "87600h",
            "usages": [ "signing", "key encipherment", "server auth", "client auth" ]
          }
        }
      }
    }
    EOF

    cat > ca-csr.json <<EOF
    {
      "CN": "kubernetes",
      "key": { "algo": "rsa", "size": 2048 },
      "names": [
        { "C": "CN", "L": "Beijing", "ST": "Beijing", "O": "k8s", "OU": "System" }
      ]
    }
    EOF

    # Generate the CA certificate and key (ca.pem, ca-key.pem)
    cfssl gencert -initca ca-csr.json | cfssljson -bare ca -
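As a quick sanity check on the `"expiry": "87600h"` value in the CA configuration, the arithmetic below converts it to days and years. It assumes 365-day years and ignores leap days, so the result is approximate.

```shell
# 87600h expressed in days and in 365-day years (leap days ignored)
expiry_hours=87600
expiry_days=$(( expiry_hours / 24 ))
expiry_years=$(( expiry_days / 365 ))
echo "${expiry_hours}h = ${expiry_days} days, about ${expiry_years} years"
```

In other words, certificates signed with this profile are valid for roughly ten years.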

Step 2: Generate the kube-apiserver certificate

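    # Entries in "hosts" (JSON does not allow comments, so they are listed here):
    #   10.0.0.1        required by default (first address of the service range 10.0.0.0/24)
    #   127.0.0.1       required by default
    #   10.192.27.100   master1
    #   10.192.27.114   master2
    #   10.192.27.111   NGINX load balancer 01
    #   10.192.27.112   NGINX load balancer 02
    #   10.192.27.117   VIP shared by NGINX load balancers 01 and 02
    cat > server-csr.json <<EOF
    {
      "CN": "kubernetes",
      "hosts": [
        "10.0.0.1",
        "127.0.0.1",
        "10.192.27.100",
        "10.192.27.114",
        "10.192.27.111",
        "10.192.27.112",
        "10.192.27.117",
        "kubernetes",
        "kubernetes.default",
        "kubernetes.default.svc",
        "kubernetes.default.svc.cluster",
        "kubernetes.default.svc.cluster.local"
      ],
      "key": { "algo": "rsa", "size": 2048 },
      "names": [
        { "C": "CN", "L": "BeiJing", "ST": "BeiJing", "O": "k8s", "OU": "System" }
      ]
    }
    EOF

    # Generate the kube-apiserver certificate (server.pem, server-key.pem)
    cfssl gencert -ca=ca.pem -ca-key=ca-key.pem -config=ca-config.json -profile=kubernetes server-csr.json | cfssljson -bare server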
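Because every address that clients use to reach the apiserver has to appear in `hosts`, it can help to check the CSR file before signing. The sketch below is a plain text match rather than a JSON parser; `csr_has_host` is a hypothetical helper, and the temporary file holds a shortened sample list, not the real `server-csr.json`.

```shell
# csr_has_host FILE HOST: succeed if HOST appears as a quoted entry in FILE.
# Hypothetical helper; it matches text and does not parse JSON.
csr_has_host() {
  grep -qF "\"$2\"" "$1"
}

# Shortened sample file for the demonstration
csr=$(mktemp)
cat > "$csr" <<'EOF'
{ "hosts": [ "10.0.0.1", "127.0.0.1", "10.192.27.100", "10.192.27.114" ] }
EOF

missing=""
for h in 10.192.27.100 10.192.27.114 10.192.27.117; do
  csr_has_host "$csr" "$h" || missing="$missing $h"
done
echo "missing from hosts:${missing:- none}"
```

On the real file, an address reported as missing would mean that TLS connections made to that address fail certificate verification until the certificate is regenerated.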

Step 3: Generate the admin and kube-proxy certificates

    cat > admin-csr.json <<EOF
    {
      "CN": "admin",
      "hosts": [],
      "key": { "algo": "rsa", "size": 2048 },
      "names": [
        { "C": "CN", "L": "BeiJing", "ST": "BeiJing", "O": "system:masters", "OU": "System" }
      ]
    }
    EOF

    # Generate the admin certificate
    cfssl gencert -ca=ca.pem -ca-key=ca-key.pem -config=ca-config.json -profile=kubernetes admin-csr.json | cfssljson -bare admin

    #-----------------------

    cat > kube-proxy-csr.json <<EOF
    {
      "CN": "system:kube-proxy",
      "hosts": [],
      "key": { "algo": "rsa", "size": 2048 },
      "names": [
        { "C": "CN", "L": "BeiJing", "ST": "BeiJing", "O": "k8s", "OU": "System" }
      ]
    }
    EOF

    # Generate the kube-proxy certificate
    cfssl gencert -ca=ca.pem -ca-key=ca-key.pem -config=ca-config.json -profile=kubernetes kube-proxy-csr.json | cfssljson -bare kube-proxy

    [root@master01 k8s]# mkdir k8s-cert
    [root@master01 k8s]# mkdir -p /opt/kubernetes/{cfg,bin,ssl}    # create the master installation directories
    [root@master01 k8s]# cd k8s-cert/
    [root@master01 k8s-cert]# bash k8s-cert.sh
    [root@master01 k8s-cert]# ls
    admin.csr       admin-key.pem  ca-config.json  ca-csr.json  ca.pem       kube-proxy.csr       kube-proxy-key.pem  server.csr       server-key.pem
    admin-csr.json  admin.pem      ca.csr          ca-key.pem   k8s-cert.sh  kube-proxy-csr.json  kube-proxy.pem      server-csr.json  server.pem
    [root@master01 k8s-cert]# cp ca*pem server*pem /opt/kubernetes/ssl/
    [root@master01 k8s-cert]# ls /opt/kubernetes/ssl/
    ca-key.pem  ca.pem  server-key.pem  server.pem
    [root@master01 k8s-cert]#

    [root@master01 k8s-cert]# bash k8s-cert.sh
    2019/08/30 18:38:53 [INFO] generating a new CA key and certificate from CSR
    2019/08/30 18:38:53 [INFO] generate received request
    2019/08/30 18:38:53 [INFO] received CSR
    2019/08/30 18:38:53 [INFO] generating key: rsa-2048
    2019/08/30 18:38:53 [INFO] encoded CSR
    2019/08/30 18:38:53 [INFO] signed certificate with serial number 1680319003524854723550756888958230645179038085
    2019/08/30 18:38:53 [INFO] generate received request
    2019/08/30 18:38:53 [INFO] received CSR
    2019/08/30 18:38:53 [INFO] generating key: rsa-2048
    2019/08/30 18:38:54 [INFO] encoded CSR
    2019/08/30 18:38:54 [INFO] signed certificate with serial number 234631884302059385808998114917473451753420882704
    2019/08/30 18:38:54 [WARNING] This certificate lacks a "hosts" field. This makes it unsuitable for websites. For more information see the Baseline Requirements for the Issuance and Management of Publicly-Trusted Certificates, v.1.1.6, from the CA/Browser Forum (https://cabforum.org); specifically, section 10.2.3 ("Information Requirements").
    2019/08/30 18:38:54 [INFO] generate received request
    2019/08/30 18:38:54 [INFO] received CSR
    2019/08/30 18:38:54 [INFO] generating key: rsa-2048
    2019/08/30 18:38:54 [INFO] encoded CSR
    2019/08/30 18:38:55 [INFO] signed certificate with serial number 124097033412995211646035769457580261144252497140
    2019/08/30 18:38:55 [WARNING] This certificate lacks a "hosts" field. This makes it unsuitable for websites. For more information see the Baseline Requirements for the Issuance and Management of Publicly-Trusted Certificates, v.1.1.6, from the CA/Browser Forum (https://cabforum.org); specifically, section 10.2.3 ("Information Requirements").
    2019/08/30 18:38:55 [INFO] generate received request
    2019/08/30 18:38:55 [INFO] received CSR
    2019/08/30 18:38:55 [INFO] generating key: rsa-2048
    2019/08/30 18:38:55 [INFO] encoded CSR
    2019/08/30 18:38:55 [INFO] signed certificate with serial number 204743145707788932665541155817250745137284493254
    2019/08/30 18:38:55 [WARNING] This certificate lacks a "hosts" field. This makes it unsuitable for websites. For more information see the Baseline Requirements for the Issuance and Management of Publicly-Trusted Certificates, v.1.1.6, from the CA/Browser Forum (https://cabforum.org); specifically, section 10.2.3 ("Information Requirements").

2. Install from the binary package

    [root@master01 k8s]# tar -xf kubernetes-server-linux-amd64.tar.gz
    [root@master01 k8s]# ls
    cfssl.sh   etcd.sh                   etcd-v3.3.10-linux-amd64.tar.gz     k8s-cert    kuberne
    etcd-cert  etcd-v3.3.10-linux-amd64  flannel-v0.10.0-linux-amd64.tar.gz  kubernetes  master_
    [root@master01 k8s]# cd kubernetes
    [root@master01 kubernetes]# ls
    addons  kubernetes-src.tar.gz  LICENSES  server
    [root@master01 kubernetes]# cd server/
    [root@master01 server]# cd bin/
    [root@master01 bin]# ls
    apiextensions-apiserver              kube-controller-manager.tar
    cloud-controller-manager             kubectl
    cloud-controller-manager.docker_tag  kubelet
    cloud-controller-manager.tar         kube-proxy
    hyperkube                            kube-proxy.docker_tag
    kubeadm                              kube-proxy.tar
    kube-apiserver                       kube-scheduler
    kube-apiserver.docker_tag            kube-scheduler.docker_tag
    kube-apiserver.tar                   kube-scheduler.tar
    kube-controller-manager              mounter
    kube-controller-manager.docker_tag
    [root@master01 bin]# cp kube-apiserver kube-scheduler kube-controller-manager kubectl /opt/kubernetes/bin/

Create a token file; it is used later for authentication:

    [root@master01 master_package]# vim /opt/kubernetes/cfg/token.csv
    0fb61c46f8991b718eb38d27b605b008,kubelet-bootstrap,10001,"system:kubelet-bootstrap"
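The token in the file above is a fixed value. A minimal sketch for generating a fresh random one in the same `token,user,uid,"group"` layout is shown below. It writes to a temporary file rather than `/opt/kubernetes/cfg/token.csv`, and the `token_file` variable is an assumption made for this example.

```shell
# Generate 16 random bytes and print them as 32 hex characters
token=$(head -c 16 /dev/urandom | od -An -t x1 | tr -d ' \n')

# Write one line in the layout used above: token,user,uid,"group"
token_file=$(mktemp)
echo "${token},kubelet-bootstrap,10001,\"system:kubelet-bootstrap\"" > "$token_file"
cat "$token_file"
```

The same token value has to be used later wherever the kubelet bootstrap configuration refers to it.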

A script I wrote that generates the kube-apiserver config file and the systemd service file:

    [root@master01 master_package]# cat apiserver.sh
    #!/bin/bash
    # Generates the kube-apiserver config file and systemd unit, then starts the service.
    # Usage: apiserver.sh MASTER_ADDRESS ETCD_SERVERS

    MASTER_ADDRESS=$1
    ETCD_SERVERS=$2

    cat <<EOF >/opt/kubernetes/cfg/kube-apiserver
    KUBE_APISERVER_OPTS="--logtostderr=true \\
    --v=4 \\
    --etcd-servers=${ETCD_SERVERS} \\
    --bind-address=${MASTER_ADDRESS} \\
    --secure-port=6443 \\
    --advertise-address=${MASTER_ADDRESS} \\
    --allow-privileged=true \\
    --service-cluster-ip-range=10.0.0.0/24 \\
    --enable-admission-plugins=NamespaceLifecycle,LimitRanger,ServiceAccount,ResourceQuota,NodeRestriction \\
    --authorization-mode=RBAC,Node \\
    --kubelet-https=true \\
    --enable-bootstrap-token-auth \\
    --token-auth-file=/opt/kubernetes/cfg/token.csv \\
    --service-node-port-range=30000-50000 \\
    --tls-cert-file=/opt/kubernetes/ssl/server.pem \\
    --tls-private-key-file=/opt/kubernetes/ssl/server-key.pem \\
    --client-ca-file=/opt/kubernetes/ssl/ca.pem \\
    --service-account-key-file=/opt/kubernetes/ssl/ca-key.pem \\
    --etcd-cafile=/opt/etcd/ssl/ca.pem \\
    --etcd-certfile=/opt/etcd/ssl/server.pem \\
    --etcd-keyfile=/opt/etcd/ssl/server-key.pem"
    EOF

    cat <<EOF >/usr/lib/systemd/system/kube-apiserver.service
    [Unit]
    Description=Kubernetes API Server
    Documentation=https://github.com/kubernetes/kubernetes

    [Service]
    EnvironmentFile=-/opt/kubernetes/cfg/kube-apiserver
    ExecStart=/opt/kubernetes/bin/kube-apiserver \$KUBE_APISERVER_OPTS
    Restart=on-failure

    [Install]
    WantedBy=multi-user.target
    EOF

    systemctl daemon-reload
    systemctl enable kube-apiserver
    systemctl restart kube-apiserver
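The script relies on how an unquoted heredoc treats backslashes: `${VAR}` is expanded when the file is written, `\\` becomes a single literal `\`, and `\$` becomes a literal `$` that is left for systemd to expand later. The short sketch below shows this on a scratch file; the `out` variable and the shortened option list are assumptions for the example.

```shell
MASTER_ADDRESS=10.192.27.100
out=$(mktemp)

# Unquoted EOF: ${MASTER_ADDRESS} expands now, \\ and \$ become literal \ and $
cat <<EOF > "$out"
OPTS="--bind-address=${MASTER_ADDRESS} \\
--secure-port=6443"
ExecStart=/opt/kubernetes/bin/kube-apiserver \$OPTS
EOF

cat "$out"
```

The written file contains the substituted address, a trailing backslash at the end of the first line, and the unexpanded text `$OPTS`.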

Run the script

    [root@master01 k8s]# mkdir master_package
    [root@master01 k8s]# cd master_package/
    [root@master01 master_package]# bash apiserver.sh 10.192.27.100 https://10.192.27.100:2379,https://10.192.27.115:2379,https://10.192.27.116:2379
    [root@master01 master_package]# journalctl -u kube-apiserver    # view logs, option 1
    [root@master01 master_package]# tailf /var/log/messages    # view logs, option 2
    [root@master01 ~]# systemctl restart kube-apiserver
    [root@master01 ~]# ps -ef | grep kube
    root 7158 1 73 19:19 ? 00:00:02 /opt/kubernetes/bin/kube-apiserver --logtostderr=true --v=4 --etcd-servers=https://10.192.27.100:2379,https://10.192.27.115:2379,https://10.192.27.116:2379 --bind-address=10.192.27.100 --secure-port=6443 --advertise-address=10.192.27.100 --allow-privileged=true --service-cluster-ip-range=10.0.0.0/24 --enable-admission-plugins=NamespaceLifecycle,LimitRanger,ServiceAccount,ResourceQuota,NodeRestriction --authorization-mode=RBAC,Node --kubelet-https=true --enable-bootstrap-token-auth --token-auth-file=/opt/kubernetes/cfg/token.csv --service-node-port-range=30000-50000 --tls-cert-file=/opt/kubernetes/ssl/server.pem --tls-private-key-file=/opt/kubernetes/ssl/server-key.pem --client-ca-file=/opt/kubernetes/ssl/ca.pem --service-account-key-file=/opt/kubernetes/ssl/ca-key.pem --etcd-cafile=/opt/etcd/ssl/ca.pem --etcd-certfile=/opt/etcd/ssl/server.pem --etcd-keyfile=/opt/etcd/ssl/server-key.pem
    root 7167 7128 0 19:19 pts/0 00:00:00 grep --color=auto kube

Config file walkthrough

The trailing # notes below are explanations and are not part of the actual file.

    [root@master01 ~]# cat /opt/kubernetes/cfg/kube-apiserver    # the config file
    KUBE_APISERVER_OPTS="--logtostderr=true \    # log to stderr, so the output ends up in the system log
    --v=4 \    # log verbosity level
    --etcd-servers=https://10.192.27.100:2379,https://10.192.27.115:2379,https://10.192.27.116:2379 \
    --bind-address=10.192.27.100 \
    --secure-port=6443 \    # HTTPS port the apiserver listens on
    --advertise-address=10.192.27.100 \    # address advertised to the rest of the cluster
    --allow-privileged=true \    # allow privileged containers
    --service-cluster-ip-range=10.0.0.0/24 \
    --enable-admission-plugins=NamespaceLifecycle,LimitRanger,ServiceAccount,ResourceQuota,NodeRestriction \    # admission control plugins, which enable advanced features
    --authorization-mode=RBAC,Node \    # authorization modes
    --kubelet-https=true \
    --enable-bootstrap-token-auth \    # enable bootstrap token authentication
    --token-auth-file=/opt/kubernetes/cfg/token.csv \
    --service-node-port-range=30000-50000 \
    --tls-cert-file=/opt/kubernetes/ssl/server.pem \
    --tls-private-key-file=/opt/kubernetes/ssl/server-key.pem \
    --client-ca-file=/opt/kubernetes/ssl/ca.pem \
    --service-account-key-file=/opt/kubernetes/ssl/ca-key.pem \
    --etcd-cafile=/opt/etcd/ssl/ca.pem \
    --etcd-certfile=/opt/etcd/ssl/server.pem \
    --etcd-keyfile=/opt/etcd/ssl/server-key.pem"
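When checking a long options string like this one, it can be handy to pull out a single flag. The following is a small sketch; `get_flag` is a hypothetical helper, and the `opts` string is a shortened copy of the options above. It relies on plain word splitting, so it assumes no flag value contains spaces or glob characters.

```shell
# get_flag FLAG OPTIONS: print the value of --FLAG=VALUE found in OPTIONS.
# Hypothetical helper; returns non-zero if the flag is absent.
get_flag() {
  local flag=$1 opts=$2 word
  for word in $opts; do            # intentional word splitting on whitespace
    case $word in
      --"$flag"=*) echo "${word#*=}"; return 0 ;;
    esac
  done
  return 1
}

opts='--logtostderr=true --v=4 --secure-port=6443 --service-cluster-ip-range=10.0.0.0/24 --authorization-mode=RBAC,Node'
get_flag secure-port "$opts"
get_flag authorization-mode "$opts"
```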

Ports explained

    [root@master01 ~]# netstat -anptu | grep 6443    # address for external access
    tcp        0      0 10.192.27.100:6443      0.0.0.0:*               LISTEN      7158/kube-apiserver
    tcp        0      0 10.192.27.100:6443      10.192.27.100:40962     ESTABLISHED 7158/kube-apiserver
    tcp        0      0 10.192.27.100:40962     10.192.27.100:6443      ESTABLISHED 7158/kube-apiserver
    [root@master01 ~]# netstat -anptu | grep 8080    # port used by the local kube-scheduler and kube-controller-manager to connect to kube-apiserver
    tcp        0      0 127.0.0.1:8080          0.0.0.0:*               LISTEN      7158/kube-apiserver
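If netstat is not installed, a TCP connect test gives a similar answer. The sketch below uses bash's built-in `/dev/tcp` redirection together with `timeout` from coreutils; `port_open` is a hypothetical helper, and the addresses in the loop are the ones from this post, so on another machine both are expected to report closed.

```shell
# port_open HOST PORT: succeed if a TCP connection can be opened within 2 seconds.
# Hypothetical helper built on bash's /dev/tcp pseudo-device.
port_open() {
  timeout 2 bash -c "exec 3<>/dev/tcp/$1/$2" 2>/dev/null
}

for target in 10.192.27.100:6443 127.0.0.1:8080; do
  host=${target%:*}
  port=${target#*:}
  if port_open "$host" "$port"; then
    echo "$target open"
  else
    echo "$target closed"
  fi
done
```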

II. Installing the scheduler component

A script I wrote that generates the scheduler config file and the systemd service file:

    [root@master01 master_package]# cat scheduler.sh
    #!/bin/bash

    MASTER_ADDRESS=$1

    cat <<EOF >/opt/kubernetes/cfg/kube-scheduler
    KUBE_SCHEDULER_OPTS="--logtostderr=true \\
    --v=4 \\
    --master=${MASTER_ADDRESS}:8080 \\
    --leader-elect"
    EOF

    cat <<EOF >/usr/lib/systemd/system/kube-scheduler.service
    [Unit]
    Description=Kubernetes Scheduler
    Documentation=https://github.com/kubernetes/kubernetes

    [Service]
    EnvironmentFile=-/opt/kubernetes/cfg/kube-scheduler
    ExecStart=/opt/kubernetes/bin/kube-scheduler \$KUBE_SCHEDULER_OPTS
    Restart=on-failure

    [Install]
    WantedBy=multi-user.target
    EOF

    systemctl daemon-reload
    systemctl enable kube-scheduler
    systemctl restart kube-scheduler
    [root@master01 master_package]#

    [root@master01 master_package]# ./scheduler.sh 127.0.0.1
    Created symlink from /etc/systemd/system/multi-user.target.wants/kube-scheduler.service to /usr/lib/systemd/system/kube-scheduler.service.
    [root@master01 master_package]# ps -ef | grep scheduler
    root 7287 1 4 19:46 ? 00:00:02 /opt/kubernetes/bin/kube-scheduler --logtostderr=true --v=4 --master=127.0.0.1:8080 --leader-elect
    root 7349 7128 0 19:47 pts/0 00:00:00 grep --color=auto scheduler

III. Installing the controller-manager component

A script I wrote that generates the controller-manager config file and the systemd service file:

    [root@master01 master_package]# cat controller-manager.sh
    #!/bin/bash

    MASTER_ADDRESS=$1

    cat <<EOF >/opt/kubernetes/cfg/kube-controller-manager
    KUBE_CONTROLLER_MANAGER_OPTS="--logtostderr=true \\
    --v=4 \\
    --master=${MASTER_ADDRESS}:8080 \\
    --leader-elect=true \\
    --address=127.0.0.1 \\
    --service-cluster-ip-range=10.0.0.0/24 \\
    --cluster-name=kubernetes \\
    --cluster-signing-cert-file=/opt/kubernetes/ssl/ca.pem \\
    --cluster-signing-key-file=/opt/kubernetes/ssl/ca-key.pem \\
    --root-ca-file=/opt/kubernetes/ssl/ca.pem \\
    --service-account-private-key-file=/opt/kubernetes/ssl/ca-key.pem \\
    --experimental-cluster-signing-duration=87600h0m0s"
    EOF

    cat <<EOF >/usr/lib/systemd/system/kube-controller-manager.service
    [Unit]
    Description=Kubernetes Controller Manager
    Documentation=https://github.com/kubernetes/kubernetes

    [Service]
    EnvironmentFile=-/opt/kubernetes/cfg/kube-controller-manager
    ExecStart=/opt/kubernetes/bin/kube-controller-manager \$KUBE_CONTROLLER_MANAGER_OPTS
    Restart=on-failure

    [Install]
    WantedBy=multi-user.target
    EOF

    systemctl daemon-reload
    systemctl enable kube-controller-manager
    systemctl restart kube-controller-manager
    [root@master01 master_package]#

    [root@master01 master_package]# chmod +x controller-manager.sh
    [root@master01 master_package]# ./controller-manager.sh 127.0.0.1
    Created symlink from /etc/systemd/system/multi-user.target.wants/kube-controller-manager.service to /usr/lib/systemd/system/kube-controller-manager.service.
    [root@master01 master_package]# ps -ef | grep kube-controller
    root 7342 1 9 19:47 ? 00:00:02 /opt/kubernetes/bin/kube-controller-manager --logtostderr=true --v=4 --master=127.0.0.1:8080 --leader-elect=true --address=127.0.0.1 --service-cluster-ip-range=10.0.0.0/24 --cluster-name=kubernetes --cluster-signing-cert-file=/opt/kubernetes/ssl/ca.pem --cluster-signing-key-file=/opt/kubernetes/ssl/ca-key.pem --root-ca-file=/opt/kubernetes/ssl/ca.pem --service-account-private-key-file=/opt/kubernetes/ssl/ca-key.pem --experimental-cluster-signing-duration=87600h0m0s
    root 7351 7128 0 19:47 pts/0 00:00:00 grep --color=auto kube-controller
    [root@master01 master_package]#

IV. Checking component and node status

    [root@master01 master_package]# /opt/kubernetes/bin/kubectl get cs    # check the health of the master components
    NAME                 STATUS    MESSAGE              ERROR
    scheduler            Healthy   ok
    controller-manager   Healthy   ok
    etcd-0               Healthy   {"health":"true"}
    etcd-2               Healthy   {"health":"true"}
    etcd-1               Healthy   {"health":"true"}
    [root@master01 master_package]# /opt/kubernetes/bin/kubectl get node    # list the worker nodes
    No resources found.
    [root@master01 master_package]#
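For scripted checks, the `kubectl get cs` table can be filtered so that only components whose STATUS is not `Healthy` are printed. The sketch below reads the table from standard input; `unhealthy_components` is a hypothetical helper, and the `sample` text, including its `Unhealthy` row, is made-up input for illustration and not output from this cluster.

```shell
# unhealthy_components: read `kubectl get cs` output on stdin and print the
# NAME of every row whose STATUS column is not "Healthy". Hypothetical helper.
unhealthy_components() {
  awk 'NR > 1 && $2 != "Healthy" { print $1 }'
}

# Made-up sample input with one failing component
sample='NAME                 STATUS      MESSAGE              ERROR
scheduler            Healthy     ok
controller-manager   Unhealthy   connection refused
etcd-0               Healthy     {"health":"true"}'

echo "$sample" | unhealthy_components
```

On the master itself the same filter could be fed directly, for example with `/opt/kubernetes/bin/kubectl get cs | unhealthy_components`. Empty output means every listed component reported Healthy.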

