I. Celery: a module for processing tasks

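Celery is a module for processing tasks.

Scenario 1: for a time-consuming task, Celery puts the task on the broker (a queue) and immediately returns a task ID to the user. Once the task is on the broker, a worker fetches it from the broker and processes it. When the task finishes, its result is stored in the backend. To check the result, the user supplies the task ID, which is used to look the result up in the backend.

Scenario 2: scheduled tasks (scheduled publishing, scheduled auctions).

Install Celery:

    pip install celery -i "https://pypi.doubanio.com/simple/"

Install a broker, either Redis or RabbitMQ, then the matching Python client (redis or pika):

    pip install redis
    pip install pika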
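The flow in scenario 1 can be sketched with the standard library alone. This is only an illustration of the broker / worker / backend roles, not how Celery is implemented: `queue.Queue` stands in for the broker, a plain dict for the backend, and `submit` and `worker` are hypothetical names.

```python
import queue
import threading
import uuid

broker = queue.Queue()  # stands in for Redis/RabbitMQ
backend = {}            # stands in for the result backend


def submit(func, *args):
    """Queue a call and return its task ID immediately."""
    task_id = str(uuid.uuid4())
    backend[task_id] = {"status": "PENDING", "result": None}
    broker.put((task_id, func, args))
    return task_id


def worker():
    """Fetch tasks from the broker, run them, and store results in the backend."""
    while True:
        item = broker.get()
        if item is None:  # sentinel: stop the worker
            break
        task_id, func, args = item
        try:
            backend[task_id] = {"status": "SUCCESS", "result": func(*args)}
        except Exception as exc:
            backend[task_id] = {"status": "FAILURE", "result": repr(exc)}


def add(x, y):
    return x + y


thread = threading.Thread(target=worker)
thread.start()
task_id = submit(add, 5, 3)  # the caller gets the ID right away
broker.put(None)             # ask the worker to stop after the queued task
thread.join()
print(backend[task_id])      # look the result up by task ID
```

The caller only ever holds the task ID; everything else goes through the queue and the result store, which is the same separation Celery provides across processes and machines.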

II. Getting started with Celery

s1.py: define the task functions

    from celery import Celery

    # The broker and the result backend both use the same Redis database here
    app = Celery(
        'tasks',
        broker='redis://:cWCVKJ7ZHUK12mVbivUf@192.168.85.123:6379/1',
        backend='redis://:cWCVKJ7ZHUK12mVbivUf@192.168.85.123:6379/1',
    )


    @app.task
    def test1(x, y):
        return x + y


    @app.task
    def test2(x, y):
        return x - y

s2.py: submit a task

    from s1 import test1

    # delay() puts the task on the broker and returns immediately
    result = test1.delay(5, 3)
    print(result.id)

s3.py: get the task status

    from celery.result import AsyncResult

    from s1 import app

    # Use the task ID printed by s2.py
    result_object = AsyncResult(id="ce11c816-3ea2-4cf1-825c-5f5729667a4f", app=app)
    print(result_object.status)

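1) Usage

On Linux:

    celery -A s1 worker -l info

-A s1 loads the tasks defined in the s1 script, and -l info sets the log level to info for detailed log output.

On Windows:

    celery -A s1 worker -l info -P eventlet

Tasks can be submitted with s2, which prints the task ID. Pass that task ID to s3 to check how the task is doing.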
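Checking the status by hand can be replaced with a small polling loop. The sketch below is an assumption-laden helper, not part of Celery: `wait_for_state` is a hypothetical name, and it takes any callable that returns a state string so it can be tried without a broker. With Celery, that callable could be `lambda: AsyncResult(id=task_id, app=app).status`.

```python
import time


def wait_for_state(get_state, timeout=10.0, interval=0.5):
    """Poll get_state() until it reports a finished state or the timeout passes."""
    finished = {"SUCCESS", "FAILURE", "REVOKED"}  # Celery's "ready" states
    deadline = time.monotonic() + timeout
    while True:
        state = get_state()
        if state in finished or time.monotonic() >= deadline:
            return state
        time.sleep(interval)


# Stand-in status source that finishes on the third poll
states = iter(["PENDING", "STARTED", "SUCCESS"])
print(wait_for_state(lambda: next(states), interval=0.01))
```

If the timeout passes first, the helper returns the last state it saw, so the caller can tell a slow task from a finished one.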

Handling the error on Windows

Without -P eventlet, the worker on Windows fails with the following error:

    [2021-07-25 03:05:02,818: ERROR/MainProcess] Task handler raised error: ValueError('not enough values to unpack (expected 3, got 0)')
    Traceback (most recent call last):
      File "C:\Users\hp\AppData\Roaming\Python\Python38\site-packages\billiard\pool.py", line 362, in workloop
        result = (True, prepare_result(fun(*args, **kwargs)))
      File "d:\programs\python\python38\lib\site-packages\celery\app\trace.py", line 635, in fast_trace_task
        tasks, accept, hostname = _loc
    ValueError: not enough values to unpack (expected 3, got 0)

Install eventlet and start the worker with the eventlet pool:

    pip install eventlet
    celery -A s1 worker -l info -P eventlet

III. Using Celery in Django

1) Update the configuration file, settings.py

    # ######################## Celery settings ########################
    CELERY_BROKER_URL = 'redis://:cWCVKJ7ZHUK12mVbivUf@192.168.85.123:6379/1'
    CELERY_ACCEPT_CONTENT = ['json']
    CELERY_RESULT_BACKEND = 'redis://:cWCVKJ7ZHUK12mVbivUf@192.168.85.123:6379/1'
    CELERY_TASK_SERIALIZER = 'json'

2) Add celery.py in the same directory as settings.py

    #!/usr/bin/env python
    # -*- coding:utf-8 -*-
    import os

    from celery import Celery

    # set the default Django settings module for the 'celery' program.
    os.environ.setdefault('DJANGO_SETTINGS_MODULE', 'democelery.settings')

    app = Celery('democelery')

    # Using a string here means the worker doesn't have to serialize
    # the configuration object to child processes.
    # - namespace='CELERY' means all celery-related configuration keys
    #   should have a `CELERY_` prefix.
    app.config_from_object('django.conf:settings', namespace='CELERY')

    # Load task modules from all registered Django app configs.
    # This reads the tasks.py file in every registered app.
    app.autodiscover_tasks()
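To make the effect of `namespace='CELERY'` concrete, the sketch below approximates the name mapping: settings that start with `CELERY_` are picked up, and the rest of the name, lowercased, is the Celery option. It is an illustration only, not Celery's own code; `celery_keys_from_settings` is a hypothetical helper and the localhost URLs are placeholders.

```python
def celery_keys_from_settings(settings, namespace="CELERY"):
    """Approximate which settings a namespaced config load would pick up."""
    prefix = namespace + "_"
    return {
        key[len(prefix):].lower(): value
        for key, value in settings.items()
        if key.startswith(prefix)
    }


# Placeholder values, shaped like a Django settings module
django_settings = {
    "DEBUG": True,
    "CELERY_BROKER_URL": "redis://localhost:6379/1",
    "CELERY_RESULT_BACKEND": "redis://localhost:6379/1",
    "CELERY_TASK_SERIALIZER": "json",
}

print(celery_keys_from_settings(django_settings))
```

So `CELERY_BROKER_URL` corresponds to Celery's `broker_url` option, while unrelated settings such as `DEBUG` are ignored.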

3) Add the tasks to the app, in tasks.py

    from celery import shared_task


    @shared_task
    def add(x, y):
        return x + y


    @shared_task
    def mul(x, y):
        return x * y


    @shared_task
    def myfunc(func):
        return func

The worker can now be started:

    celery -A democelery worker -l info -P eventlet

4) Submitting tasks from Django view functions

4.1) The Celery app has to be loaded when Django starts. In the __init__.py that sits in the same directory as settings.py, add the following:

    from .celery import app as celery_app

    __all__ = ('celery_app',)

4.2) Submit tasks from the view functions

    from celery.result import AsyncResult
    from django.shortcuts import HttpResponse

    from app01 import tasks
    from democelery import celery_app


    def create_task(request):
        print("request received")
        result = tasks.add.delay(4, 6)
        return HttpResponse(result.id)


    def get_task(request):
        nid = request.GET.get('nid')
        result_object = AsyncResult(id=nid, app=celery_app)
        data = result_object.get()  # blocks until the task has finished
        return HttpResponse(data)
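Because `get()` waits for the task, a view that is asked about an unfinished task will hang until the worker is done. One possible alternative, sketched here, is to check `ready()` first and return the status otherwise. `ready()`, `successful()`, `status` and `result` are real `AsyncResult` members; `summarize_result` and `FakeResult` are hypothetical names used so the sketch runs without a broker.

```python
def summarize_result(result):
    """Build a response payload without blocking on an unfinished task."""
    if not result.ready():
        return {"status": result.status, "result": None}
    if result.successful():
        return {"status": result.status, "result": result.result}
    # On failure, AsyncResult.result holds the raised exception
    return {"status": result.status, "error": str(result.result)}


class FakeResult:
    """Stand-in exposing only the AsyncResult members used above."""

    def __init__(self, status, result=None):
        self.status = status
        self.result = result

    def ready(self):
        return self.status in {"SUCCESS", "FAILURE", "REVOKED"}

    def successful(self):
        return self.status == "SUCCESS"


print(summarize_result(FakeResult("PENDING")))
print(summarize_result(FakeResult("SUCCESS", 10)))
print(summarize_result(FakeResult("FAILURE", ValueError("bad input"))))
```

In a view, the returned dict could be passed to `JsonResponse` so the client can poll until the status changes.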

4.3) Wire the view functions into the URLs

    path('create/task/', views.create_task),
    path('get/result/', views.get_task),

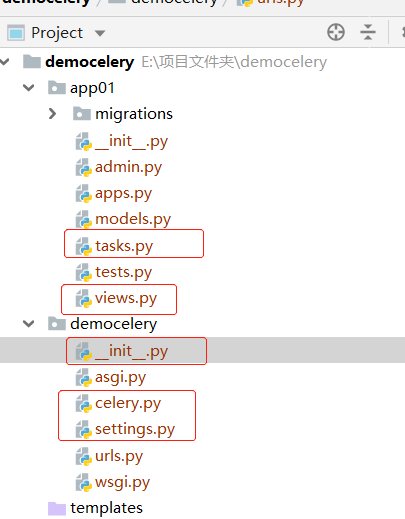

5) The directory structure is as follows

5.1) Other Celery methods, including scheduled tasks

    import datetime

    from celery.result import AsyncResult
    from django.shortcuts import HttpResponse

    from app01 import tasks
    from democelery import celery_app


    def create_task(request):
        print("request received")
        result = tasks.add.delay(4, 6)
        return HttpResponse(result.id)


    def get_task(request):
        nid = request.GET.get('nid')
        result_object = AsyncResult(id=nid, app=celery_app)
        print(result_object.status)  # task status
        data = result_object.get()   # fetch the result
        # result_object.forget()                # remove the result from the backend
        # result_object.revoke()                # cancel the task
        # result_object.revoke(terminate=True)  # forcibly cancel a task that is already running
        print(result_object.successful())
        return HttpResponse(data)


    # Run the task 100 seconds from now
    def create_task_time(request):
        ctime = datetime.datetime.now()
        # Convert the local time to a naive UTC datetime
        utc_ctime = datetime.datetime.utcfromtimestamp(ctime.timestamp())
        delay = datetime.timedelta(seconds=100)
        target_time = utc_ctime + delay
        result = tasks.add.apply_async(args=[11, 3], eta=target_time)
        return HttpResponse(result.id)
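The target time for `eta` can also be built as a timezone-aware UTC datetime, which avoids the round trip through a timestamp. This is a minimal sketch; `eta_in` is a hypothetical helper name.

```python
from datetime import datetime, timedelta, timezone


def eta_in(seconds):
    """Return an aware UTC datetime that many seconds from now."""
    return datetime.now(timezone.utc) + timedelta(seconds=seconds)


target_time = eta_in(100)
print(target_time.isoformat())

# With Celery (not executed here):
#   tasks.add.apply_async(args=[11, 3], eta=target_time)
# For a purely relative delay, apply_async also accepts countdown:
#   tasks.add.apply_async(args=[11, 3], countdown=100)
```

`countdown` is usually the shorter choice when the delay is relative to now, while `eta` fits an absolute point in time, such as a scheduled publication.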

6) Using Celery callbacks: callback handling with link_error and on_failure in a Django and Celery integration

Write the callback handlers first:

    import json
    import logging
    import traceback

    from celery import Task

    logger = logging.getLogger(__name__)


    class CallbackTask(Task):
        def __init__(self):
            super().__init__()

        def on_success(self, retval, task_id, args, kwargs):
            # Called by the worker after the task returns successfully
            try:
                item_param = json.loads(args[0])
                logger.info('[task_id] %s, [task_type] %s, finished successfully.'
                            % (task_id, item_param.get('task_type')))
            except Exception:
                logger.error(traceback.format_exc())

        def on_failure(self, exc, task_id, args, kwargs, einfo):
            # Called by the worker when the task raises an exception
            try:
                item_param = json.loads(args[0])
                logger.error('Task {0} raised exception: {1!r}\n{2!r}'.format(
                    task_id, exc, einfo.traceback))
            except Exception:
                logger.error(traceback.format_exc())

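6.1) Attach the callbacks to a task

    import logging

    from celery import shared_task

    from common.callback import CallbackTask

    logger = logging.getLogger(__name__)


    # Celery 5 no longer provides `from celery import task`;
    # shared_task accepts the same base= option.
    @shared_task(base=CallbackTask)
    def quota_check(item_param):
        logger.info('start')
        return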
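The order in which the hooks fire can be illustrated without a worker. The sketch below is a simplified stand-in and not Celery's tracing code: `MiniTask`, `execute` and `Divide` are hypothetical names, and the hook signatures merely mirror those of `on_success` and `on_failure` above.

```python
class MiniTask:
    """Simplified stand-in for a task class with success/failure hooks."""

    def run(self, *args, **kwargs):
        raise NotImplementedError

    def on_success(self, retval, task_id, args, kwargs):
        pass

    def on_failure(self, exc, task_id, args, kwargs, einfo):
        pass


def execute(task, task_id, args=(), kwargs=None):
    """Run the task body, then call exactly one of the two hooks."""
    kwargs = kwargs or {}
    try:
        retval = task.run(*args, **kwargs)
    except Exception as exc:
        task.on_failure(exc, task_id, args, kwargs, einfo=None)
        return None
    task.on_success(retval, task_id, args, kwargs)
    return retval


class Divide(MiniTask):
    def __init__(self):
        self.events = []

    def run(self, x, y):
        return x / y

    def on_success(self, retval, task_id, args, kwargs):
        self.events.append(("success", task_id, retval))

    def on_failure(self, exc, task_id, args, kwargs, einfo):
        self.events.append(("failure", task_id, type(exc).__name__))


task = Divide()
execute(task, "t1", (6, 3))
execute(task, "t2", (1, 0))
print(task.events)
```

The second call divides by zero, so only `on_failure` is recorded for it, which is the behaviour the logging hooks in `CallbackTask` rely on.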

7) Using the django_celery_results module

1) Edit the settings

    INSTALLED_APPS = [
        ..........................
        'rest_framework',
        'django_celery_results',
    ]

    CELERY_BROKER_URL = 'redis://:%s@%s:%s/1' % (config.REDIS_PASSWROD, config.REDIS_HOST, config.REDIS_PORT)
    CELERY_ACCEPT_CONTENT = ['json']
    CELERY_RESULT_BACKEND = 'django-db'  # results are stored in the Django database (MySQL here)
    CELERY_TASK_SERIALIZER = 'json'

2) In the same directory as settings.py, add celery.py

    from __future__ import absolute_import, unicode_literals  # this import must come first
    import os

    from celery import Celery
    from django.conf import settings

    # Point this at the project's settings module (pairecord.settings here)
    os.environ.setdefault("DJANGO_SETTINGS_MODULE", "pairecord.settings")

    # Create the Celery application
    app = Celery('aiqiyisoft')
    app.config_from_object('django.conf:settings', namespace='CELERY')
    app.autodiscover_tasks(lambda: settings.INSTALLED_APPS)

3) In the same directory, __init__.py

    from __future__ import absolute_import, unicode_literals

    from .celery import app as celery_app

    __all__ = ('celery_app',)

4) Add a task in the corresponding app

    from pairecord import celery_app as app


    @app.task
    def categorymerge(x, y):
        return x + y

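Task results are saved to the database, so the result tables have to be created first. django_celery_results ships its own migrations, so running python manage.py migrate creates them.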

5) Viewing the stored results in the database

Import the model below. It is queried in the same way as any other Django model.

from django_celery_results.models import TaskResult
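As a rough sketch of working with the stored rows: with the JSON serializer configured above, the `result` column is assumed to hold JSON text, so it needs decoding after it is read. The rows below are hypothetical and only shaped like the output of `TaskResult.objects.values("task_id", "status", "result")`; `decode_rows` is an illustrative helper, not part of django_celery_results.

```python
import json


def decode_rows(rows):
    """Decode the JSON text in each row's result column."""
    decoded = []
    for row in rows:
        try:
            value = json.loads(row["result"])
        except (TypeError, ValueError):
            value = row["result"]  # leave missing or non-JSON payloads untouched
        decoded.append(
            {"task_id": row["task_id"], "status": row["status"], "result": value}
        )
    return decoded


# Hypothetical rows standing in for a real query
rows = [
    {"task_id": "a1", "status": "SUCCESS", "result": "5"},
    {"task_id": "b2", "status": "FAILURE", "result": None},
]
print(decode_rows(rows))
```

In a real project the rows would come from a query such as `TaskResult.objects.filter(status="SUCCESS")`, which requires a configured Django environment and is therefore not run here.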

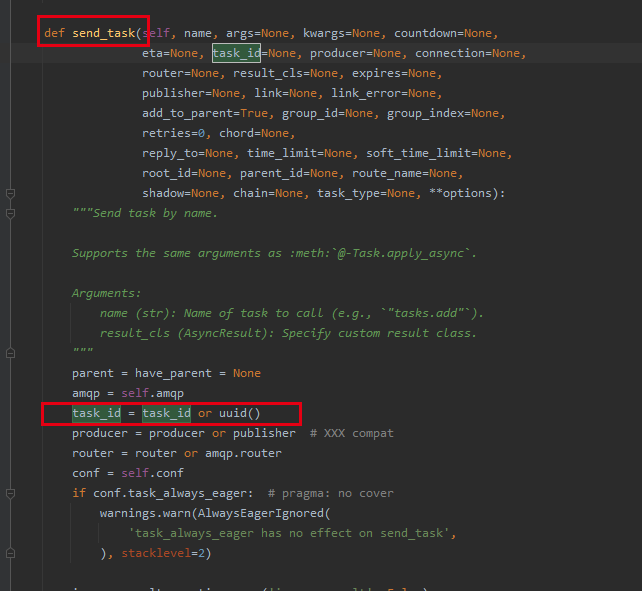

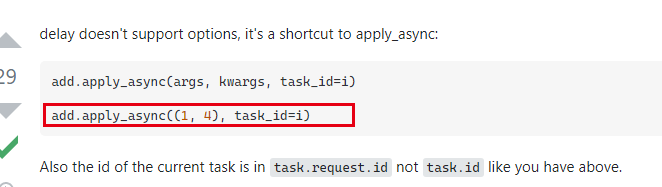

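6) Passing a task ID manually

    # delay() takes the task's arguments directly and generates the ID itself
    tasks.add.delay(1, 4)

    # apply_async() accepts an explicit task_id; the arguments go in args
    tasks.add.apply_async(args=[1, 4], task_id="gaegwggegwewegewg")
    tasks.add.apply_async((1, 4), task_id="gaegwggegwewegewg")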
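Results are stored under the task ID, so two submissions that share one hard-coded ID end up sharing one backend entry. A minimal sketch of generating a distinct but still recognisable ID per submission follows; `make_task_id` is a hypothetical helper.

```python
import uuid


def make_task_id(prefix):
    """Return a unique task ID that still carries a readable prefix."""
    return f"{prefix}-{uuid.uuid4()}"


first = make_task_id("add")
second = make_task_id("add")
print(first)
print(second)

# With Celery (not executed here):
#   tasks.add.apply_async(args=[1, 4], task_id=first)
```

The prefix makes the IDs easy to filter in logs or in the results table, while the UUID part keeps them unique.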

IV. Starting with Docker

    FROM registry.cn-shanghai.aliyuncs.com/ppio/python3:v1

    ENV LANG en_US.UTF-8

    # Set the time zone
    ENV TZ=Asia/Shanghai

    #FROM centos:7
    #RUN yum update -y && yum install epel-release -y && yum update -y && yum install python3 -y
    #RUN pip3 install --upgrade pip

    RUN pip3 install --upgrade pip

    RUN mkdir -p /var/www/
    ADD . /var/www/pairecord/
    RUN mkdir /var/www/pairecord/log/
    RUN rm -rf /var/www/pairecord/scripts/

    # Install the pip dependencies
    RUN pip3 install -r /var/www/pairecord/requirements.txt -i "https://pypi.doubanio.com/simple/"

    WORKDIR /var/www/pairecord/

    ENTRYPOINT ["celery","-A","pairecord","worker"]

https://www.celerycn.io/yong-hu-zhi-nan/ding-qi-ren-wu-periodic-tasks

https://pypi.org/project/django-celery-beat/

Start django-celery-results and django-celery-beat together:

    celery -A celery_demo worker --beat --scheduler django -l debug --logfile=/var/log/celery.log --detach

Start django-celery-beat only:

    celery -A celery_demo beat -l info --scheduler django -l debug --logfile=/var/log/celery.log --detach

Start django-celery-results only:

    celery -A celery_demo worker
