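I. Introduction to etcd

1) etcd is a distributed, strongly consistent key-value store used for shared configuration and service discovery

etcd focuses on being:
Simple: a well-defined, user-facing API (gRPC).
Secure: automatic TLS with optional client certificate authentication.
Fast: benchmarked at 10,000 writes per second.
Reliable: properly distributed using Raft.
etcd is written in Go and uses the Raft consensus algorithm to manage a highly available replicated log.

Everything etcd can do, ZooKeeper can also do. So why use etcd instead of ZooKeeper? By comparison, ZooKeeper has the following drawbacks:
1. Complexity. ZooKeeper is complex to deploy and maintain, and administrators need a broad set of knowledge and skills. Its Paxos-style consensus protocol (ZAB) is notoriously hard to understand. ZooKeeper is also harder to use: you have to install a client library, and the official client interfaces exist only for Java and C.
2. Written in Java. This is not a bias against Java, but Java leans toward heavyweight applications and pulls in many dependencies, whereas operators generally want the machines in a cluster to be as simple as possible so that maintenance is less error-prone.

As a newer project, etcd has clear advantages:
1. Simple. Being written in Go makes it easy to deploy; its HTTP-based interface is easy to use (the v2 API is HTTP/JSON, the v3 API is gRPC); and Raft provides strong consistency with an algorithm that is easy to understand.
2. Persistent. By default, etcd persists data as soon as it is updated.
3. Secure. etcd supports SSL/TLS client certificate authentication.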

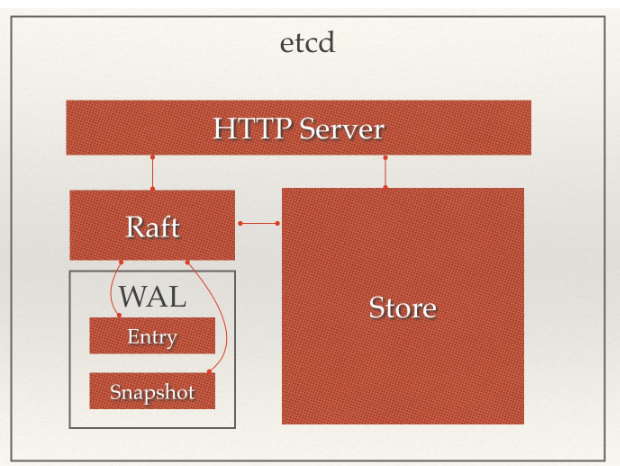

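2) etcd architecture

As the architecture diagram shows, etcd consists of four main parts:

HTTP Server: handles API requests from users, as well as synchronization and heartbeat requests from other etcd nodes.

Store: handles the transactions behind the features etcd supports, including data indexing, node state changes, watching and notification, and event handling and execution. It implements most of the API functionality etcd exposes to users.

Raft: the implementation of the Raft consensus algorithm, and the core of etcd.

WAL: Write Ahead Log, etcd's storage format. Besides keeping the state of all data and the node index in memory, etcd persists data through the WAL.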

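In the WAL, every change is logged before it is committed. A Snapshot is a point-in-time copy of the state, taken so that the log does not grow without bound; an Entry is an individual log record.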
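The write-ahead rule just described (make the log record durable first, then change the in-memory state) can be sketched in a few lines of Python. This is a toy model under stated assumptions, not etcd's WAL: one JSON record per line in a local file, no snapshots, no checksums, and `ToyWAL` is a hypothetical name.

```python
import json
import os
import tempfile


class ToyWAL:
    """Toy write-ahead log (hypothetical; not etcd's on-disk format).

    Every change is appended and fsync'ed to the log file before it is
    applied to the in-memory dict, so the dict can be rebuilt after a crash
    by replaying the log.
    """

    def __init__(self, path):
        self.path = path
        self.data = {}
        self._replay()

    def _replay(self):
        # Recovery: re-apply every logged record in order.
        if not os.path.exists(self.path):
            return
        with open(self.path, encoding="utf-8") as f:
            for line in f:
                self._apply(json.loads(line))

    def _apply(self, entry):
        if entry["op"] == "put":
            self.data[entry["key"]] = entry["value"]
        elif entry["op"] == "delete":
            self.data.pop(entry["key"], None)

    def write(self, op, key, value=None):
        entry = {"op": op, "key": key, "value": value}
        # Step 1: make the record durable in the log ...
        with open(self.path, "a", encoding="utf-8") as f:
            f.write(json.dumps(entry) + "\n")
            f.flush()
            os.fsync(f.fileno())
        # Step 2: ... and only then change the in-memory state.
        self._apply(entry)


if __name__ == "__main__":
    log_path = os.path.join(tempfile.mkdtemp(), "toy.wal")
    wal = ToyWAL(log_path)
    wal.write("put", "hello", "world")
    wal.write("put", "age", "hhh")
    wal.write("delete", "age")
    # Simulated restart: a new instance rebuilds its state from the log alone.
    print(ToyWAL(log_path).data)  # {'hello': 'world'}
```

A snapshot in the same spirit would write `data` to a separate file and truncate the log, so that recovery replays only the records written after the snapshot.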

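Typically, a user request arrives at the HTTP Server, which forwards it to the Store for processing.

If the request modifies data, it is handed to the Raft module, which changes the state and records the log entry, then replicates the entry to the other etcd nodes to confirm the commit.

Once a majority of nodes have acknowledged it, the change is committed, and the commit is propagated to the cluster again.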
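The commit step in this flow rests on Raft's majority rule: an entry counts as committed once a majority of members have persisted it. A small Python sketch of that arithmetic (function names are illustrative) also shows why the installation below uses three machines: three members tolerate one failure, and a fourth member does not raise that number.

```python
def quorum(cluster_size: int) -> int:
    """Members that must persist an entry before Raft treats it as committed."""
    return cluster_size // 2 + 1


def tolerated_failures(cluster_size: int) -> int:
    """Members that can be down while a quorum is still reachable."""
    return cluster_size - quorum(cluster_size)


def is_committed(acks: int, cluster_size: int) -> bool:
    """`acks` counts members (leader included) that have written the entry."""
    return acks >= quorum(cluster_size)


if __name__ == "__main__":
    for n in (1, 3, 4, 5):
        print(f"{n} members: quorum {quorum(n)}, tolerates {tolerated_failures(n)} failure(s)")
    # In a 3-member cluster the leader plus one follower is enough to commit.
    print(is_committed(2, 3))  # True
```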

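3) etcd glossary

Raft: the algorithm etcd uses to guarantee strong consistency in a distributed system.

Node: a Raft state machine instance.

Member: an etcd instance. It manages one Node and can serve client requests.

Cluster: a group of Members that work together as one etcd cluster.

Peer: the name for another Member of the same etcd cluster.

Client: a client that sends HTTP requests to the etcd cluster.

WAL: write-ahead log, the log format etcd uses for persistent storage.

snapshot: a snapshot of etcd's data state, taken to keep the WAL files from growing too numerous.

Proxy: an etcd mode that provides a reverse proxy in front of an etcd cluster.

Leader: the node chosen by election in Raft; it handles all data commits.

Follower: a node that did not win the election; it acts as a subordinate node in Raft, replicating the Leader's log to provide the strong-consistency guarantee.

Candidate: a Follower that receives no heartbeat from the Leader within a timeout becomes a Candidate and starts an election.

Term: the period from one election to the next, during which at most one node serves as Leader.

Index: the number of a log entry. Raft locates an entry by its Term and Index.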
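The Term/Index pair above is what Raft uses to check that a follower's log agrees with the leader's. Below is a simplified Python sketch of the follower-side consistency check from Raft's AppendEntries RPC, assuming 1-based indexes and a log held as a Python list; it omits truncating conflicting entries and everything else a real implementation needs, and the names are hypothetical.

```python
from dataclasses import dataclass
from typing import List


@dataclass(frozen=True)
class Entry:
    term: int     # term of the leader that created the entry
    command: str  # e.g. a put request; its Index is its 1-based position


def log_matches(log: List[Entry], prev_index: int, prev_term: int) -> bool:
    """Accept new entries only if this log has an entry at prev_index whose
    term equals prev_term. prev_index == 0 means "append from the start"."""
    if prev_index == 0:
        return True
    if prev_index > len(log):
        return False  # follower is missing entries
    return log[prev_index - 1].term == prev_term


if __name__ == "__main__":
    follower = [Entry(1, "put a=1"), Entry(1, "put b=2"), Entry(2, "put c=3")]
    print(log_matches(follower, 3, 2))  # True: same (Index, Term), logs agree up to 3
    print(log_matches(follower, 3, 3))  # False: conflicting term at index 3
    print(log_matches(follower, 5, 2))  # False: entries 4 and 5 are missing
```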

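4) Official downloads

Download page: https://github.com/coreos/etcd/releases (the project now lives at https://github.com/etcd-io/etcd/releases). Pick a suitable version.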

II. Installing an etcd cluster (three machines required)

1) Step 1: download etcd

wget https://github.com/etcd-io/etcd/releases/download/v3.3.13/etcd-v3.3.13-linux-amd64.tar.gz
tar xf etcd-v3.3.13-linux-amd64.tar.gz
[root@master tools]# ls etcd-v3.3.13-linux-amd64/etcd*
etcd-v3.3.13-linux-amd64/etcd  etcd-v3.3.13-linux-amd64/etcdctl
[root@master tools]# cp etcd-v3.3.13-linux-amd64/etcd* /usr/bin/
[root@master tools]# ls /usr/bin/etcd*
/usr/bin/etcd  /usr/bin/etcdctl
[root@master tools]# chown root:root /usr/bin/etcd*

2) Step 2: edit the configuration file

2.1) Configuration with the default ports (with these, the health check does not need the --endpoints=http://127.0.0.1:8083 flag, because etcdctl connects to the default client port on 127.0.0.1)

vi /etc/etcd.conf

name: node01
initial-advertise-peer-urls: http://192.168.1.5:2380
listen-peer-urls: http://192.168.1.5:2380
listen-client-urls: http://192.168.1.5:2379,http://127.0.0.1:2379
advertise-client-urls: http://192.168.1.5:2379
initial-cluster-token: etcd-cluster-0
initial-cluster: node01=http://192.168.1.5:2380,node02=http://192.168.1.6:2380,node03=http://192.168.1.7:2380
initial-cluster-state: new
data-dir: /var/lib/etcd

The default ports are 2380 (peer traffic between members) and 2379 (client traffic).

For testing, I changed the ports.

2.2) Changing the ports to 8083 (client) and 8084 (peer)

vi /etc/etcd.conf

name: node01
initial-advertise-peer-urls: http://192.168.1.5:8084
listen-peer-urls: http://192.168.1.5:8084
listen-client-urls: http://192.168.1.5:8083,http://127.0.0.1:8083
advertise-client-urls: http://192.168.1.5:8083
initial-cluster-token: etcd-cluster-0
initial-cluster: node01=http://192.168.1.5:8084,node02=http://192.168.1.6:8084,node03=http://192.168.1.7:8084
initial-cluster-state: new
data-dir: /var/lib/etcd

mkdir /var/lib/etcd
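Because only `name` and the member's own IP differ between the three config files, they can be generated instead of edited by hand. A minimal Python sketch, assuming the member names, IPs, ports, token and data directory used above; `etcd_conf` is a hypothetical helper that only renders text and writes nothing to disk.

```python
from typing import Dict


def etcd_conf(name: str, ip: str, members: Dict[str, str],
              client_port: int = 8083, peer_port: int = 8084,
              token: str = "etcd-cluster-0",
              data_dir: str = "/var/lib/etcd") -> str:
    """Render /etc/etcd.conf for one member.

    `members` maps every member name to its IP; only `name` and `ip`
    differ between the generated files.
    """
    initial_cluster = ",".join(
        f"{member}=http://{addr}:{peer_port}" for member, addr in members.items()
    )
    lines = [
        f"name: {name}",
        f"initial-advertise-peer-urls: http://{ip}:{peer_port}",
        f"listen-peer-urls: http://{ip}:{peer_port}",
        f"listen-client-urls: http://{ip}:{client_port},http://127.0.0.1:{client_port}",
        f"advertise-client-urls: http://{ip}:{client_port}",
        f"initial-cluster-token: {token}",
        f"initial-cluster: {initial_cluster}",
        "initial-cluster-state: new",
        f"data-dir: {data_dir}",
    ]
    return "\n".join(lines) + "\n"


if __name__ == "__main__":
    members = {"node01": "192.168.1.5", "node02": "192.168.1.6", "node03": "192.168.1.7"}
    for name, ip in members.items():
        print(f"# --- /etc/etcd.conf on {ip} ---")
        print(etcd_conf(name, ip, members))
```

Passing `client_port=2379, peer_port=2380` reproduces the default-port variant from 2.1.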

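3) Step 3: register etcd as a systemd service

vi /usr/lib/systemd/system/etcd.service

[Unit]
Description=Etcd Server
After=network.target
After=network-online.target
Wants=network-online.target
Documentation=https://github.com/coreos

[Service]
Type=notify
ExecStart=/usr/bin/etcd --config-file=/etc/etcd.conf
Restart=on-failure
RestartSec=5
LimitCORE=infinity
LimitNOFILE=655360
LimitNPROC=655350

[Install]
WantedBy=multi-user.target

4) Step 4: enable start on boot

systemctl daemon-reload (reload the unit files)

systemctl enable etcd (start on boot)

systemctl start etcd (start the service). Run this last, on all nodes at about the same time: a new cluster cannot elect a leader until a majority of its members are up, so the first node may appear to hang on start until the others join.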

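5) Repeat the same steps on the other nodes

[root@master tools]# scp etcd-v3.3.13-linux-amd64/etcd root@192.168.1.6:/usr/bin/
etcd 100% 16MB 103.9MB/s 00:00
[root@master tools]# scp /etc/etcd.conf root@192.168.1.6:/etc/
etcd.conf 100% 422 244.9KB/s 00:00
[root@master tools]# scp etcd-v3.3.13-linux-amd64/etcd root@192.168.1.7:/usr/bin/
etcd 100% 16MB 130.4MB/s 00:00
[root@master tools]# scp /etc/etcd.conf root@192.168.1.7:/etc/
etcd.conf 100% 422 148.4KB/s 00:00
[root@master tools]# scp /usr/lib/systemd/system/etcd.service root@192.168.1.6:/usr/lib/systemd/system/
etcd.service 100% 352 33.9KB/s 00:00
[root@master tools]# scp /usr/lib/systemd/system/etcd.service root@192.168.1.7:/usr/lib/systemd/system/
etcd.service 100% 352 136.2KB/s 00:00

On each node, edit the copied /etc/etcd.conf: set name to that node's name (node02 or node03) and replace 192.168.1.5 with the node's own IP in initial-advertise-peer-urls, listen-peer-urls, listen-client-urls and advertise-client-urls. initial-cluster stays the same on every node. Also create /var/lib/etcd on each node.

Then start etcd on each node (systemctl daemon-reload, systemctl enable etcd, systemctl start etcd).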

6) Health check

cluster-health is a v2 command; etcdctl 3.3 uses the v2 API unless ETCDCTL_API=3 is set.

[root@master etcd]# etcdctl --endpoints=http://127.0.0.1:8083 cluster-health
member 19f45ab624e135d5 is healthy: got healthy result from http://192.168.1.7:8083
member 8b1773c5c1edc4a3 is healthy: got healthy result from http://192.168.1.5:8083
member be69fc1f2a25ca22 is healthy: got healthy result from http://192.168.1.6:8083
cluster is healthy
[root@master etcd]# curl http://192.168.1.5:8083/v2/members
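To check this result from a script, the text output can be parsed. A minimal Python sketch, assuming exactly the `member ... is healthy: got healthy result from ...` and `cluster is healthy` line formats shown in this transcript; other member states are not parsed, and human-readable CLI output may change between etcdctl versions. The helper names are hypothetical.

```python
import re

# Line format taken from the transcript above.
HEALTHY_LINE = re.compile(
    r"^member ([0-9a-f]+) is healthy: got healthy result from (\S+)$"
)


def healthy_members(output: str) -> dict:
    """Map member ID -> client URL for every line reporting a healthy member."""
    members = {}
    for line in output.splitlines():
        match = HEALTHY_LINE.match(line.strip())
        if match:
            members[match.group(1)] = match.group(2)
    return members


def cluster_ok(output: str, expected_members: int) -> bool:
    """True if the summary line says healthy and every expected member reported healthy."""
    lines = output.strip().splitlines()
    return (
        bool(lines)
        and lines[-1].strip() == "cluster is healthy"
        and len(healthy_members(output)) == expected_members
    )


if __name__ == "__main__":
    sample = """\
member 19f45ab624e135d5 is healthy: got healthy result from http://192.168.1.7:8083
member 8b1773c5c1edc4a3 is healthy: got healthy result from http://192.168.1.5:8083
member be69fc1f2a25ca22 is healthy: got healthy result from http://192.168.1.6:8083
cluster is healthy
"""
    print(healthy_members(sample))
    print(cluster_ok(sample, expected_members=3))  # True
```

In practice `sample` would be the stdout of `subprocess.run(["etcdctl", "--endpoints=http://127.0.0.1:8083", "cluster-health"], capture_output=True, text=True)`.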

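7) etcdctl commands

References:
https://www.jianshu.com/p/d63265949e52
https://www.cnblogs.com/breg/p/5728237.html

Common commands

# Check cluster health
etcdctl cluster-health
# List all members
etcdctl member list
# Remove a member from the cluster; the argument is the member ID shown by member list.
# After removal, etcd on that node stops automatically.
etcdctl member remove 1e82894832618580
# Update a member's peer URLs (the new peer URLs follow the member ID)
etcdctl member update 1e82894832618580 <peerURLs>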

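v2 API

# Set key hello to value world
etcdctl set hello world
# Read the value of a key
etcdctl get hello
# List all keys through the HTTP API
curl localhost:8083/v2/keys
# Read one key (here named key) as formatted JSON; this line uses the default client port 2379
curl localhost:2379/v2/keys/key | python -m json.tool
# For nested data, append the child's name to the key path in the URL.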

Authentication

v2 API

# Create the root user
etcdctl --endpoints=http://127.0.0.1:8083 user add root
# Enable authentication
etcdctl --endpoints=http://127.0.0.1:8083 --username root:123456 auth enable
# Disable authentication
etcdctl --endpoints=http://127.0.0.1:8083 --username root:123456 auth disable
# Read data with authentication
curl -u "root:123456" localhost:8083/v2/keys
# Create a regular user
[root@client ~]# etcdctl --endpoints=http://127.0.0.1:8083 --username root:123456 user add reado
New password:
User reado created
# Create a regular role
etcdctl --endpoints=http://127.0.0.1:8083 --username root:123456 role add readConf
# Grant a role to a user
etcdctl --endpoints=http://127.0.0.1:8083 --username root:123456 user grant --roles readConf reado
# Revoke a role from a user
etcdctl --endpoints=http://127.0.0.1:8083 --username root:123456 user revoke --roles readConf reado
# Change a user's password
etcdctl --endpoints=http://127.0.0.1:8083 --username root:123456 user passwd reado
# Delete a user
etcdctl --endpoints=http://127.0.0.1:8083 --username root:123456 user remove reado
# Grant permissions to a role
.....
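The `curl -u "root:123456"` call works because the v2 HTTP API accepts HTTP Basic authentication. A Python sketch of the same request using only the standard library; it builds the request without sending it, and the helper names are hypothetical. Actually sending it requires the running cluster and the root credentials above.

```python
import base64
import urllib.request


def basic_auth_header(user: str, password: str) -> str:
    """Value of the Authorization header that `curl -u user:password` sends."""
    token = base64.b64encode(f"{user}:{password}".encode("utf-8")).decode("ascii")
    return f"Basic {token}"


def v2_keys_request(base_url: str, user: str, password: str) -> urllib.request.Request:
    """Build (but do not send) an authenticated GET for the v2 keyspace root."""
    req = urllib.request.Request(f"{base_url}/v2/keys")
    req.add_header("Authorization", basic_auth_header(user, password))
    return req


if __name__ == "__main__":
    req = v2_keys_request("http://localhost:8083", "root", "123456")
    print(req.full_url)                     # http://localhost:8083/v2/keys
    print(req.get_header("Authorization"))  # Basic cm9vdDoxMjM0NTY=
    # To send it against a running cluster:
    # with urllib.request.urlopen(req, timeout=5) as resp:
    #     print(resp.read().decode("utf-8"))
```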

v3 API

# Select API version 3
export ETCDCTL_API=3
# Set a value
etcdctl --endpoints=http://127.0.0.1:8083 put age hhh
# Get a value
etcdctl --endpoints=http://127.0.0.1:8083 get age
# Print keys only with --keys-only=true, e.g.
etcdctl --endpoints=http://127.0.0.1:8083 get age --keys-only=true
# Keys with a given prefix: add --prefix
etcdctl --endpoints=http://127.0.0.1:8083 get --prefix "a" --keys-only=true
# All keys
etcdctl --endpoints=http://127.0.0.1:8083 get --prefix "" --keys-only=true

v3 authentication

[root@client ~]# export ETCDCTL_API=3
[root@client ~]# etcdctl --endpoints=http://127.0.0.1:8083 user add root
[root@client ~]# etcdctl --endpoints=http://127.0.0.1:8083 --user root:123456 auth enable
Authentication Enabled
# Disable authentication
etcdctl --endpoints=http://127.0.0.1:8083 --user root:123456 auth disable
# Add role role0
[root@client ~]# etcdctl --endpoints=http://127.0.0.1:8083 --user root:123456 role add role0
Role role0 created
# Read/write permission on the key foo only
[root@client ~]# etcdctl --endpoints=http://127.0.0.1:8083 --user root:123456 role grant-permission role0 readwrite foo
Role role0 updated
# Create a user
[root@client ~]# etcdctl --endpoints=http://127.0.0.1:8083 --user root:123456 user add user0
# Grant the role to the user
[root@client ~]# etcdctl --endpoints=http://127.0.0.1:8083 --user root:123456 user grant-role user0 role0
Role role0 is granted to user user0
# Operate as that user
etcdctl --endpoints=${ENDPOINTS} --user=user0:123456 put foo bar
# Show the role's permissions
[root@client ~]# etcdctl --endpoints=http://127.0.0.1:8083 --user root:123456 role get role0
Role role0
KV Read:
  foo
KV Write:
  foo
# Change a user's password
etcdctl --endpoints=http://127.0.0.1:8083 --user root:123456 user passwd user0
# Permission on every key that starts with a given prefix
[root@client ~]# etcdctl --endpoints=http://127.0.0.1:8083 --user root:123456 role grant-permission role0 readwrite user0 --prefix=true
Role role0 updated
[root@client ~]# etcdctl --endpoints=http://127.0.0.1:8083 --user root:123456 role get role0
Role role0
KV Read:
  foo
  [user0, user1) (prefix user0)
KV Write:
  foo
  [user0, user1) (prefix user0)
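The `[user0, user1)` in the `role get` output is the half-open key range that `--prefix=true` grants: the end of the range is the prefix with its last byte incremented. A Python sketch of that computation, modeled on how etcd's Go client derives a prefix range end (increment the last byte below 0xff, drop anything after it, and fall back to a single zero byte, meaning "every key from the start key on"). This fallback is also why `get --prefix ""` returns all keys. The function names are hypothetical.

```python
def prefix_range_end(prefix: bytes) -> bytes:
    """Exclusive end of the key range [prefix, end) covering every key
    that starts with `prefix`."""
    end = bytearray(prefix)
    for i in range(len(end) - 1, -1, -1):
        if end[i] < 0xFF:
            end[i] += 1
            return bytes(end[: i + 1])
    # Empty or all-0xff prefix: no finite end exists, so return b"\x00",
    # which etcd interprets as "every key >= the start key".
    return b"\x00"


def covered_by_prefix(key: bytes, prefix: bytes) -> bool:
    """True if `key` falls inside the range that a prefix permission grants."""
    end = prefix_range_end(prefix)
    if end == b"\x00":
        return key >= prefix
    return prefix <= key < end


if __name__ == "__main__":
    print(prefix_range_end(b"user0"))                     # b'user1', i.e. [user0, user1)
    print(covered_by_prefix(b"user0/profile", b"user0"))  # True
    print(covered_by_prefix(b"user1", b"user0"))          # False
```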

3.1) v3 API: end-to-end example

# Create the role role_pai
./etcdctl --endpoints=http://127.0.0.1:8083 --user root:123456 role add role_pai
# Grant the role read/write access to keys under the prefix /pai_
./etcdctl --endpoints=http://127.0.0.1:8083 --user root:123456 role grant-permission role_pai readwrite /pai_ --prefix=true
# Add a user (password entered at the prompt: 123456789)
./etcdctl --endpoints=http://127.0.0.1:8083 --user root:123456 user add tanzhilang
# Grant the role to the user
./etcdctl --endpoints=http://127.0.0.1:8083 --user root:123456 user grant-role tanzhilang role_pai

8) Data is organized as a tree (using the v2 store as an example)

[root@client ~]# etcdctl --endpoints=http://127.0.0.1:8083 set 'hell/ttt' world
world
[root@client ~]# etcdctl --endpoints=http://127.0.0.1:8083 set 'hell/ttt1' world
world

This implicitly creates the directory hell first, then the two keys inside it.

[root@client ~]# curl localhost:8083/v2/keys | python -m json.tool
{
    "action": "get",
    "node": {
        "dir": true,
        "nodes": [
            {
                "createdIndex": 42,
                "key": "/hello",
                "modifiedIndex": 42,
                "value": "world"
            },
            {
                "createdIndex": 26,
                "key": "/age",
                "modifiedIndex": 26,
                "value": "hhh"
            },
            {
                "createdIndex": 43,
                "dir": true,
                "key": "/hell",
                "modifiedIndex": 43
            }
        ]
    }
}

"dir": true means the node is a directory, and you can query it in turn:

[root@server ~]# curl localhost:8083/v2/keys/hell | python -m json.tool
{
    "action": "get",
    "node": {
        "createdIndex": 43,
        "dir": true,
        "key": "/hell",
        "modifiedIndex": 43,
        "nodes": [
            {
                "createdIndex": 43,
                "key": "/hell/ttt",
                "modifiedIndex": 43,
                "value": "world"
            },
            {
                "createdIndex": 44,
                "key": "/hell/ttt1",
                "modifiedIndex": 44,
                "value": "world"
            }
        ]
    }
}
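The v2 response is a tree of nodes in which directories carry `"dir": true` and a `"nodes"` list. A short Python sketch that walks such a response and collects the leaf keys; the function name is hypothetical. A plain GET on /v2/keys lists only one level (the /hell directory above appears without its children); the v2 API's `?recursive=true` query parameter returns the whole subtree in a single response.

```python
import json
from typing import Dict, Optional


def leaf_keys(node: dict, out: Optional[Dict[str, Optional[str]]] = None) -> Dict[str, Optional[str]]:
    """Collect {key: value} for every non-directory node in a v2 response node."""
    if out is None:
        out = {}
    if node.get("dir"):
        # A directory listed without "nodes" (not expanded) contributes nothing.
        for child in node.get("nodes", []):
            leaf_keys(child, out)
    else:
        out[node["key"]] = node.get("value")
    return out


if __name__ == "__main__":
    # Trimmed copy of the `curl localhost:8083/v2/keys/hell` response above.
    body = """{"action": "get", "node": {"createdIndex": 43, "dir": true, "key": "/hell",
      "modifiedIndex": 43, "nodes": [
        {"createdIndex": 43, "key": "/hell/ttt", "modifiedIndex": 43, "value": "world"},
        {"createdIndex": 44, "key": "/hell/ttt1", "modifiedIndex": 44, "value": "world"}]}}"""
    print(leaf_keys(json.loads(body)["node"]))
    # {'/hell/ttt': 'world', '/hell/ttt1': 'world'}
```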

8.1) Order of key creation

If hell is first created as a plain key:

[root@client ~]# etcdctl --endpoints=http://127.0.0.1:8083 set 'hell' world

then the following command fails, because hell is not a directory:

[root@client ~]# etcdctl --endpoints=http://127.0.0.1:8083 set 'hell/ttt' world

8.2) Deletion

# Delete a key
etcdctl --endpoints=http://127.0.0.1:8083 rm '/hell/ttt'
# Delete a directory; this fails if the directory still contains keys
etcdctl --endpoints=http://127.0.0.1:8083 rmdir /hell

9) Leases (v3 API, so ETCDCTL_API=3 must be set)

Create a lease with a TTL (time to live) of 600 seconds; the etcd server returns the lease ID:
[root@client ~]# etcdctl --endpoints=http://127.0.0.1:8083 lease grant 600
lease 4bd77dd70b12ddae granted with TTL(600s)
Check the lease's TTL and remaining time:
[root@client ~]# etcdctl --endpoints=http://127.0.0.1:8083 lease timetolive 4bd77dd70b12ddae
lease 4bd77dd70b12ddae granted with TTL(600s), remaining(582s)

9.2) Attaching a lease to a key

# etcdctl --endpoints=http://127.0.0.1:8083 lease grant 100
lease 4bd77dd70b12ddb9 granted with TTL(100s)
# etcdctl --endpoints=http://127.0.0.1:8083 put node healthy --lease 4bd77dd70b12ddb9
OK
# etcdctl --endpoints=http://127.0.0.1:8083 get node -w=json | python -m json.tool
{
    "count": 1,
    "header": {
        "cluster_id": 11820979725651749956,
        "member_id": 2193120545735822295,
        "raft_term": 15,
        "revision": 82
    },
    "kvs": [
        {
            "create_revision": 82,
            "key": "bm9kZQ==",
            "lease": 5464975035394612665,
            "mod_revision": 82,
            "value": "aGVhbHRoeQ==",
            "version": 1
        }
    ]
}
# etcdctl --endpoints=http://127.0.0.1:8083 lease timetolive 4bd77dd70b12ddb9
lease 4bd77dd70b12ddb9 granted with TTL(100s), remaining(4s)

Once the lease expires, the key attached to it is deleted as well:

[root@client ~]# etcdctl --endpoints=http://127.0.0.1:8083 get node
[root@client ~]#
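With `-w=json`, etcdctl prints keys and values base64-encoded and the lease ID as a decimal integer, while the lease commands print the same ID in hex: 5464975035394612665 is 0x4bd77dd70b12ddb9. A Python sketch that turns the JSON above back into readable form; `decode_kvs` is a hypothetical helper.

```python
import base64
import json
from typing import List, Optional, Tuple


def decode_kvs(get_json: str) -> List[Tuple[str, str, Optional[str]]]:
    """Return (key, value, lease_id_hex) for each entry of `etcdctl get -w=json` output."""
    doc = json.loads(get_json)
    rows = []
    for kv in doc.get("kvs", []):
        key = base64.b64decode(kv["key"]).decode("utf-8")
        value = base64.b64decode(kv.get("value", "")).decode("utf-8")
        lease = kv.get("lease", 0)  # absent or 0: no lease attached
        rows.append((key, value, format(lease, "x") if lease else None))
    return rows


if __name__ == "__main__":
    # Trimmed copy of the output above (header omitted).
    output = """{"count": 1, "kvs": [{"create_revision": 82, "key": "bm9kZQ==",
      "lease": 5464975035394612665, "mod_revision": 82,
      "value": "aGVhbHRoeQ==", "version": 1}]}"""
    print(decode_kvs(output))  # [('node', 'healthy', '4bd77dd70b12ddb9')]
```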

9.3) Reclaiming old revisions (compaction)

Manual compaction through the API: look up the current revision, then compact up to it.

[root@client ~]# etcdctl --endpoints=http://127.0.0.1:8083 endpoint status --write-out="json" | python -m json.tool
[
    {
        "Endpoint": "http://127.0.0.1:8083",
        "Status": {
            "dbSize": 37060608,
            "header": {
                "cluster_id": 11820979725651749956,
                "member_id": 2193120545735822295,
                "raft_term": 15,
                "revision": 189
            },
            "leader": 10140094458586071665,
            "raftIndex": 307,
            "raftTerm": 15,
            "version": "3.3.13"
        }
    }
]
[root@client ~]# etcdctl --endpoints=http://127.0.0.1:8083 compact 189
compacted revision 189
[root@client ~]#
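etcd v3 keeps every revision of every key, which is why old history has to be compacted. The toy Python model below is not etcd's implementation; it only illustrates the semantics assumed here: after compacting at revision R, reads at revisions older than R fail, while reads at R and later still work. `ToyMVCC` is a hypothetical name, and deletes (tombstones) are not modeled.

```python
from typing import Dict, List, Optional, Tuple


class ToyMVCC:
    """Toy multi-version store (hypothetical; not etcd's data structures).

    Every put gets the next global revision; old versions stay readable
    until compact(rev) discards history older than `rev`.
    """

    def __init__(self) -> None:
        self.revision = 0
        self.compacted = 0
        self.history: Dict[str, List[Tuple[int, str]]] = {}

    def put(self, key: str, value: str) -> int:
        self.revision += 1
        self.history.setdefault(key, []).append((self.revision, value))
        return self.revision

    def get(self, key: str, rev: Optional[int] = None) -> Optional[str]:
        rev = self.revision if rev is None else rev
        if rev < self.compacted:
            raise ValueError(f"revision {rev} has been compacted")
        value = None
        for r, v in self.history.get(key, []):
            if r <= rev:
                value = v  # newest version at or before `rev` wins
        return value

    def compact(self, rev: int) -> None:
        if rev > self.revision:
            raise ValueError("cannot compact a future revision")
        for key, versions in self.history.items():
            older = [(r, v) for r, v in versions if r <= rev]
            newer = [(r, v) for r, v in versions if r > rev]
            # Keep only the newest version <= rev, so reads at `rev` still work.
            self.history[key] = older[-1:] + newer
        self.compacted = max(self.compacted, rev)


if __name__ == "__main__":
    store = ToyMVCC()
    for value in ("a", "b", "c"):
        store.put("age", value)      # revisions 1, 2, 3
    store.compact(2)
    print(store.get("age", rev=2))   # b
    print(store.get("age"))          # c
    try:
        store.get("age", rev=1)
    except ValueError as err:
        print(err)                   # revision 1 has been compacted
```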

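9.4) Configuring automatic compaction

auto-compaction-mode = periodic enables time-based periodic compaction; auto-compaction-retention is then the retention period, for example 1h.
auto-compaction-mode = revision enables revision-based compaction; auto-compaction-retention is then the number of revisions to keep, for example 10000.
Setting the etcd server's auto-compaction-retention to '0' disables automatic compaction.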
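For revision mode, "keep the latest N revisions" amounts to compacting up to the current revision minus N. A tiny Python sketch of that arithmetic, assuming this reading of the setting; etcd also decides on its own schedule when to run compaction, which is not modeled, and the function name is hypothetical.

```python
from typing import Optional


def revision_compaction_target(current_revision: int, retention: int) -> Optional[int]:
    """Revision to compact up to when keeping the latest `retention` revisions.

    Returns None if automatic compaction is disabled (retention 0) or if the
    history is not yet longer than the retention window.
    """
    if retention <= 0:
        return None
    target = current_revision - retention
    return target if target > 0 else None


if __name__ == "__main__":
    print(revision_compaction_target(189, 10000))    # None: only 189 revisions so far
    print(revision_compaction_target(25000, 10000))  # 15000
    print(revision_compaction_target(25000, 0))      # None: auto-compaction off
```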

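9.5) Watching for events

Watch a key for changes:
etcdctl --endpoints=http://127.0.0.1:8083 watch hello -w=json
Start the watch from a given revision (events on the key from revision 199 onward are delivered first, followed by new ones):
etcdctl --endpoints=http://127.0.0.1:8083 watch hello -w=json --rev=199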
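`--rev` makes the watch start from a past revision, so events already in history are delivered first. The Python sketch below models only that replay step over an in-memory event list; the `Event` type and `replay` function are hypothetical, and in real etcd a start revision that has already been compacted is rejected.

```python
from dataclasses import dataclass
from typing import List


@dataclass(frozen=True)
class Event:
    revision: int
    kind: str        # "PUT" or "DELETE"
    key: str
    value: str = ""


def replay(history: List[Event], key: str, start_rev: int) -> List[Event]:
    """Events a watcher on `key` started with --rev=start_rev receives first:
    every recorded event for that key at revision >= start_rev, oldest first."""
    matching = [e for e in history if e.key == key and e.revision >= start_rev]
    return sorted(matching, key=lambda e: e.revision)


if __name__ == "__main__":
    history = [
        Event(197, "PUT", "hello", "v1"),
        Event(198, "PUT", "age", "hhh"),
        Event(199, "PUT", "hello", "v2"),
        Event(200, "DELETE", "hello"),
    ]
    for event in replay(history, "hello", 199):
        print(event.revision, event.kind, event.key, event.value)
```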

Starting etcd with command-line flags

Node etcd1 (10.177.0.251):
/var/www/etcd \
  --name etcd1 \
  --initial-advertise-peer-urls http://10.177.0.251:2380 \
  --listen-peer-urls http://10.177.0.251:2380 \
  --listen-client-urls http://10.177.0.251:2379,http://127.0.0.1:2379 \
  --advertise-client-urls http://10.177.0.251:2379 \
  --initial-cluster-token etcd-cluster-1 \
  --initial-cluster etcd1=http://10.177.0.251:2380,etcd2=http://10.177.0.253:2380,etcd3=http://10.177.0.254:2380 \
  --initial-cluster-state new \
  --data-dir=/data/etcd/

Node etcd2 (10.177.0.253):
/var/www/etcd \
  --name etcd2 \
  --initial-advertise-peer-urls http://10.177.0.253:2380 \
  --listen-peer-urls http://10.177.0.253:2380 \
  --listen-client-urls http://10.177.0.253:2379,http://127.0.0.1:2379 \
  --advertise-client-urls http://10.177.0.253:2379 \
  --initial-cluster-token etcd-cluster-1 \
  --initial-cluster etcd1=http://10.177.0.251:2380,etcd2=http://10.177.0.253:2380,etcd3=http://10.177.0.254:2380 \
  --initial-cluster-state new \
  --data-dir=/data/etcd/

Node etcd3 (10.177.0.254):
/var/www/etcd \
  --name etcd3 \
  --initial-advertise-peer-urls http://10.177.0.254:2380 \
  --listen-peer-urls http://10.177.0.254:2380 \
  --listen-client-urls http://10.177.0.254:2379,http://127.0.0.1:2379 \
  --advertise-client-urls http://10.177.0.254:2379 \
  --initial-cluster-token etcd-cluster-1 \
  --initial-cluster etcd1=http://10.177.0.251:2380,etcd2=http://10.177.0.253:2380,etcd3=http://10.177.0.254:2380 \
  --initial-cluster-state new \
  --data-dir=/data/etcd/

X. etcd 3.5

1) Cluster health check

[root@etcd02 ~]# etcdctl --endpoints=http://192.168.184.191:38083,http://192.168.184.192:38083,http://192.168.184.193:38083 endpoint health
http://192.168.184.192:38083 is healthy: successfully committed proposal: took = 4.336678ms
http://192.168.184.193:38083 is healthy: successfully committed proposal: took = 3.928933ms
http://192.168.184.191:38083 is healthy: successfully committed proposal: took = 4.831361ms

2) Backup and restore

[root@etcd01 ~]# etcdctl --endpoints=http://192.168.184.191:38083 snapshot save /data/20211203.db
{"level":"info","ts":1651068755.490274,"caller":"snapshot/v3_snapshot.go:68","msg":"created temporary db file","path":"/data/20211203.db.part"}
{"level":"info","ts":1651068755.4914448,"logger":"client","caller":"v3/maintenance.go:211","msg":"opened snapshot stream; downloading"}
{"level":"info","ts":1651068755.491491,"caller":"snapshot/v3_snapshot.go:76","msg":"fetching snapshot","endpoint":"http://192.168.184.191:38083"}
{"level":"info","ts":1651068755.4932172,"logger":"client","caller":"v3/maintenance.go:219","msg":"completed snapshot read; closing"}
{"level":"info","ts":1651068755.4939046,"caller":"snapshot/v3_snapshot.go:91","msg":"fetched snapshot","endpoint":"http://192.168.184.191:38083","size":"20 kB","took":"now"}
{"level":"info","ts":1651068755.493959,"caller":"snapshot/v3_snapshot.go:100","msg":"saved","path":"/data/20211203.db"}
Snapshot saved at /data/20211203.db
[root@etcd02 ~]# etcdctl --endpoints=http://192.168.184.191:38083 snapshot restore /data/20220427_01.db --data-dir=/data/etcd
Deprecated: Use `etcdutl snapshot restore` instead.

2022-04-27T22:38:10+08:00 info snapshot/v3_snapshot.go:251 restoring snapshot {"path": "/data/20220427_01.db", "wal-dir": "/data/etcd/member/wal", "data-dir": "/data/etcd", "snap-dir": "/data/etcd/member/snap", "stack": "go.etcd.io/etcd/etcdutl/v3/snapshot.(*v3Manager).Restore\n\t/tmp/etcd-release-3.5.0/etcd/release/etcd/etcdutl/snapshot/v3_snapshot.go:257\ngo.etcd.io/etcd/etcdutl/v3/etcdutl.SnapshotRestoreCommandFunc\n\t/tmp/etcd-release-3.5.0/etcd/release/etcd/etcdutl/etcdutl/snapshot_command.go:147\ngo.etcd.io/etcd/etcdctl/v3/ctlv3/command.snapshotRestoreCommandFunc\n\t/tmp/etcd-release-3.5.0/etcd/release/etcd/etcdctl/ctlv3/command/snapshot_command.go:128\ngithub.com/spf13/cobra.(*Command).execute\n\t/home/remote/sbatsche/.gvm/pkgsets/go1.16.3/global/pkg/mod/github.com/spf13/cobra@v1.1.3/command.go:856\ngithub.com/spf13/cobra.(*Command).ExecuteC\n\t/home/remote/sbatsche/.gvm/pkgsets/go1.16.3/global/pkg/mod/github.com/spf13/cobra@v1.1.3/command.go:960\ngithub.com/spf13/cobra.(*Command).Execute\n\t/home/remote/sbatsche/.gvm/pkgsets/go1.16.3/global/pkg/mod/github.com/spf13/cobra@v1.1.3/command.go:897\ngo.etcd.io/etcd/etcdctl/v3/ctlv3.Start\n\t/tmp/etcd-release-3.5.0/etcd/release/etcd/etcdctl/ctlv3/ctl.go:107\ngo.etcd.io/etcd/etcdctl/v3/ctlv3.MustStart\n\t/tmp/etcd-release-3.5.0/etcd/release/etcd/etcdctl/ctlv3/ctl.go:111\nmain.main\n\t/tmp/etcd-release-3.5.0/etcd/release/etcd/etcdctl/main.go:59\nruntime.main\n\t/home/remote/sbatsche/.gvm/gos/go1.16.3/src/runtime/proc.go:225"}
2022-04-27T22:38:10+08:00 info membership/store.go:119 Trimming membership information from the backend...
2022-04-27T22:38:10+08:00 info membership/cluster.go:393 added member {"cluster-id": "cdf818194e3a8c32", "local-member-id": "0", "added-peer-id": "8e9e05c52164694d", "added-peer-peer-urls": ["http://localhost:2380"]}
2022-04-27T22:38:10+08:00 info snapshot/v3_snapshot.go:272 restored snapshot {"path": "/data/20220427_01.db", "wal-dir": "/data/etcd/member/wal", "data-dir": "/data/etcd", "snap-dir": "/data/etcd/member/snap"}

Note: on each node, first rename (move aside) the existing data directory, then restore the backup file into the data directory. Because this restore passed no --name or --initial-cluster flags, the restored member gets default membership (see "added-peer-peer-urls": ["http://localhost:2380"] in the log); to rebuild a multi-member cluster, give each member its own --name, --initial-cluster, --initial-cluster-token and --initial-advertise-peer-urls when restoring. In 3.5, etcdctl snapshot restore is deprecated in favor of etcdutl snapshot restore.

Backup and restore, in short:
etcdctl --endpoints=http://192.168.184.191:38083 snapshot save /data/20220427_01.db
etcdctl --endpoints=http://192.168.184.191:38083 snapshot restore /data/20220427_01.db --data-dir=/data/etcd
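For a multi-member restore, every member restores the same snapshot under its own identity. The Python sketch below only builds the per-member `etcdutl snapshot restore` argument lists, using restore flags documented for etcd 3.5's etcdutl; the member names etcd01 to etcd03, the peer port 38084 and the cluster token are hypothetical placeholders, since this article shows only the client port 38083.

```python
import shlex
from typing import Dict, List


def restore_argv(snapshot: str, name: str, peers: Dict[str, str],
                 token: str, data_dir: str) -> List[str]:
    """Arguments for restoring `snapshot` as member `name`.
    `peers` maps every member name to its peer URL."""
    initial_cluster = ",".join(f"{n}={url}" for n, url in peers.items())
    return [
        "etcdutl", "snapshot", "restore", snapshot,
        "--name", name,
        "--initial-cluster", initial_cluster,
        "--initial-cluster-token", token,
        "--initial-advertise-peer-urls", peers[name],
        "--data-dir", data_dir,
    ]


if __name__ == "__main__":
    # Hypothetical member names and peer port 38084; adjust to the real cluster.
    peers = {
        "etcd01": "http://192.168.184.191:38084",
        "etcd02": "http://192.168.184.192:38084",
        "etcd03": "http://192.168.184.193:38084",
    }
    for member in peers:
        argv = restore_argv("/data/20220427_01.db", member, peers,
                            token="etcd-cluster-restore", data_dir="/data/etcd")
        # Run each command on the matching host, after moving the old data dir aside.
        print(shlex.join(argv))
```

Each member is then started with the same name, peer URLs and token it was restored with.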

浙公网安备 33010602011771号
