https://github.com/kubernetes/kubernetes/tree/master/cluster/addons/prometheus

https://github.com/ikubernetes/k8s-prom

1) Installation

    [root@master opt]# git clone https://github.com/iKubernetes/k8s-prom.git
    [root@master opt]# cd k8s-prom/
    [root@master k8s-prom]# kubectl apply -f namespace.yaml
    [root@master k8s-prom]# cd node_exporter/
    [root@master node_exporter]# ls
    node-exporter-ds.yaml  node-exporter-svc.yaml
    [root@master node_exporter]# kubectl apply -f .
    [root@master node_exporter]# kubectl get pods -n prom
    NAME                             READY   STATUS    RESTARTS   AGE
    prometheus-node-exporter-4h9jv   1/1     Running   0          76s
    prometheus-node-exporter-hnwv9   1/1     Running   0          76s
    prometheus-node-exporter-ngk2x   1/1     Running   0          76s
    [root@master node_exporter]# cd ../prometheus/
    [root@master prometheus]# kubectl apply -f .
    configmap/prometheus-config created
    deployment.apps/prometheus-server created
    clusterrole.rbac.authorization.k8s.io/prometheus created
    serviceaccount/prometheus created
    clusterrolebinding.rbac.authorization.k8s.io/prometheus created
    service/prometheus created
    [root@master prometheus]# kubectl get all -n prom     # list every resource in the namespace
    [root@master prometheus]# kubectl get svc -n prom
    NAME                       TYPE        CLUSTER-IP     EXTERNAL-IP   PORT(S)          AGE
    prometheus                 NodePort    10.104.164.7   <none>        9090:30090/TCP   8m11s
    prometheus-node-exporter   ClusterIP   None           <none>        9100/TCP         10m

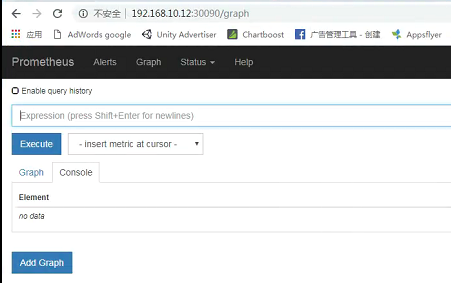

Open the Prometheus UI at http://192.168.10.12:30090/graph

    [root@master k8s-prom]# cd kube-state-metrics/
    [root@master kube-state-metrics]# kubectl apply -f .
    deployment.apps/kube-state-metrics created
    serviceaccount/kube-state-metrics created
    clusterrole.rbac.authorization.k8s.io/kube-state-metrics created
    clusterrolebinding.rbac.authorization.k8s.io/kube-state-metrics created
    service/kube-state-metrics created
    [root@master kube-state-metrics]# kubectl get pods -n prom
    NAME                                  READY   STATUS             RESTARTS   AGE
    kube-state-metrics-6b44579cc4-28cgf   0/1     ImagePullBackOff   0          3m9s
    prometheus-node-exporter-4h9jv        1/1     Running            0          22m
    prometheus-node-exporter-hnwv9        1/1     Running            0          22m
    prometheus-node-exporter-ngk2x        1/1     Running            0          22m
    prometheus-server-75cf46bdbc-z9kh7    1/1     Running            0          19m
    [root@master kube-state-metrics]# kubectl describe pod kube-state-metrics-6b44579cc4-28cgf -n prom
      Warning  Failed   95s (x4 over 3m40s)  kubelet, node02  Error: ErrImagePull
      Normal   BackOff  71s (x6 over 3m40s)  kubelet, node02  Back-off pulling image "gcr.io/google_containers/kube-state-metrics-amd64:v1.3.1"
      Warning  Failed   58s (x7 over 3m40s)  kubelet, node02  Error: ImagePullBackOff
    # the gcr.io image cannot be pulled: switch to the quay.io image and re-apply (kubectl apply -f .)
    [root@master kube-state-metrics]# cat kube-state-metrics-deploy.yaml |grep image
            image: quay.io/coreos/kube-state-metrics:v1.3.1
    [root@master kube-state-metrics]# kubectl get pods -n prom
    NAME                                  READY   STATUS    RESTARTS   AGE
    kube-state-metrics-6697d66bbb-z9qsw   1/1     Running   0          48s
    prometheus-node-exporter-4h9jv        1/1     Running   0          31m
    prometheus-node-exporter-hnwv9        1/1     Running   0          31m
    prometheus-node-exporter-ngk2x        1/1     Running   0          31m
    prometheus-server-75cf46bdbc-z9kh7    1/1     Running   0          28m
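
The image switch above was made by editing kube-state-metrics-deploy.yaml by hand. The sketch below makes the same edit with sed. It assumes the manifest contains exactly the gcr.io image string shown in the pull error. The function name `fix_ksm_image` is hypothetical. `sed -i.bak` keeps a backup copy and works with both GNU and BSD sed. Because `kubectl apply -f .` only reads `.yaml`, `.yml` and `.json` files, the `.bak` copy is not applied.

```shell
#!/bin/sh
# fix_ksm_image FILE
# Swap the unreachable gcr.io kube-state-metrics image for the quay.io one used
# in this walkthrough, keeping FILE.bak as a backup of the original manifest.
fix_ksm_image() {
  sed -i.bak \
    's#gcr.io/google_containers/kube-state-metrics-amd64:v1.3.1#quay.io/coreos/kube-state-metrics:v1.3.1#' \
    "$1"
}
```

Usage: `fix_ksm_image kube-state-metrics-deploy.yaml && kubectl apply -f .`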

Create the serving certificate for the custom metrics adapter

    [root@master ~]# cd /etc/kubernetes/pki/
    [root@master pki]# ls
    apiserver.crt              apiserver.key                 ca.crt   front-proxy-ca.crt      front-proxy-client.key
    apiserver-etcd-client.crt  apiserver-kubelet-client.crt  ca.key   front-proxy-ca.key      sa.key
    apiserver-etcd-client.key  apiserver-kubelet-client.key  etcd     front-proxy-client.crt  sa.pub
    [root@master pki]# (umask 077; openssl genrsa -out serving.key 2048)     # step 1: private key
    Generating RSA private key, 2048 bit long modulus
    .+++
    ....+++
    e is 65537 (0x10001)
    [root@master pki]# openssl req -new -key serving.key -out serving.csr -subj "/CN=serving"     # step 2: signing request
    [root@master pki]# openssl x509 -req -in serving.csr -CA ./ca.crt -CAkey ./ca.key -CAcreateserial -out serving.crt -days 3650     # step 3: sign with the cluster CA
    Signature ok
    subject=/CN=serving
    Getting CA Private Key
    [root@master pki]# ls serving.*
    serving.crt  serving.csr  serving.key
    [root@master k8s-prometheus-adapter]# cat custom-metrics-apiserver-deployment.yaml |grep secret
    secret:
    secretName: cm-adapter-serving-certs     # the Secret name the adapter expects
    [root@master pki]# kubectl create secret generic cm-adapter-serving-certs --from-file=serving.crt=./serving.crt --from-file=serving.key=./serving.key
    secret/cm-adapter-serving-certs created
    [root@master pki]# kubectl get secret
    NAME                       TYPE                                  DATA   AGE
    cm-adapter-serving-certs   Opaque                                2      5m1s
    default-token-q546g        kubernetes.io/service-account-token   3      44h
    # the Secret above landed in the default namespace; the adapter runs in prom, so create it there
    [root@master pki]# kubectl create secret generic cm-adapter-serving-certs --from-file=serving.crt=./serving.crt --from-file=serving.key=./serving.key -n prom
    secret/cm-adapter-serving-certs created
    [root@master pki]# kubectl get secret -n prom
    NAME                             TYPE                                  DATA   AGE
    cm-adapter-serving-certs         Opaque                                2      20s
    default-token-wr4zt              kubernetes.io/service-account-token   3      161m
    kube-state-metrics-token-9q4bt   kubernetes.io/service-account-token   3      140m
    prometheus-token-kj5rj           kubernetes.io/service-account-token   3      157m
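
The three openssl steps above can be rehearsed without touching `/etc/kubernetes/pki`. The sketch below runs them in a scratch directory. It assumes a throwaway self-signed CA (`demo-ca`, hypothetical) in place of the cluster's `ca.crt`/`ca.key`. It ends with `openssl verify` to confirm that the serving certificate chains to that CA. On the real master, skip the throwaway CA and sign with `./ca.crt` and `./ca.key`, as in the session.

```shell
#!/bin/sh
set -e
# Work in a scratch directory so the cluster PKI is never modified.
workdir=$(mktemp -d)
cd "$workdir"

# Throwaway CA standing in for /etc/kubernetes/pki/ca.{crt,key} (demo only).
openssl req -x509 -newkey rsa:2048 -nodes -keyout ca.key -out ca.crt \
  -days 1 -subj "/CN=demo-ca" 2>/dev/null

# Step 1: private key, created with umask 077 so only the owner can read it.
(umask 077; openssl genrsa -out serving.key 2048 2>/dev/null)

# Step 2: certificate signing request with CN=serving.
openssl req -new -key serving.key -out serving.csr -subj "/CN=serving"

# Step 3: sign the request with the CA, valid for 3650 days.
openssl x509 -req -in serving.csr -CA ca.crt -CAkey ca.key -CAcreateserial \
  -out serving.crt -days 3650 2>/dev/null

# Confirm the certificate chains to the CA (prints "serving.crt: OK").
openssl verify -CAfile ca.crt serving.crt
```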

Problem: the adapter pod fails to start

    [root@master k8s-prometheus-adapter]# pwd
    /opt/k8s-prom/k8s-prometheus-adapter
    [root@master k8s-prometheus-adapter]# kubectl apply -f .
    [root@master k8s-prometheus-adapter]# kubectl get pods -n prom
    NAME                                        READY   STATUS    RESTARTS   AGE
    custom-metrics-apiserver-6bb45c6978-rl6zz   0/1     Error     2          54s
    kube-state-metrics-6697d66bbb-z9qsw         1/1     Running   0          136m
    prometheus-node-exporter-4h9jv              1/1     Running   0          167m
    prometheus-node-exporter-hnwv9              1/1     Running   0          167m
    prometheus-node-exporter-ngk2x              1/1     Running   0          167m
    prometheus-server-75cf46bdbc-z9kh7          1/1     Running   0          164m

Getting the deployment working, after a few false starts

    [root@master k8s-prometheus-adapter]# kubectl logs custom-metrics-apiserver-6bb45c6978-rl6zz -n prom
    unknown flag: --rate-interval     # the manifest passes a flag this adapter image does not recognize
    Usage of /adapter:
    unknown flag: --rate-interval
    # back up the old manifest and fetch the upstream one (https://github.com/directxman12)
    [root@master k8s-prometheus-adapter]# mv custom-metrics-apiserver-deployment.yaml{,.bak}
    [root@master k8s-prometheus-adapter]# wget https://raw.githubusercontent.com/DirectXMan12/k8s-prometheus-adapter/master/deploy/manifests/custom-metrics-apiserver-deployment.yaml
    [root@master k8s-prometheus-adapter]# cp custom-metrics-apiserver-deployment.yaml{,.ori}     # keep the original to see what was changed
    [root@master k8s-prometheus-adapter]# diff custom-metrics-apiserver-deployment.yaml custom-metrics-apiserver-deployment.yaml.ori
    7c7
    < namespace: prom
    ---
    > namespace: custom-metrics
    [root@master k8s-prometheus-adapter]# wget https://raw.githubusercontent.com/DirectXMan12/k8s-prometheus-adapter/master/deploy/manifests/custom-metrics-config-map.yaml
    [root@master k8s-prometheus-adapter]# cp custom-metrics-config-map.yaml{,.ori}
    [root@master k8s-prometheus-adapter]# diff custom-metrics-config-map.yaml{,.ori}
    5c5
    < namespace: prom
    ---
    > namespace: custom-metrics
    [root@master k8s-prometheus-adapter]# kubectl apply -f custom-metrics-config-map.yaml
    configmap/adapter-config created
    [root@master k8s-prometheus-adapter]# kubectl get cm -n prom
    NAME                DATA   AGE
    adapter-config      1      9s
    prometheus-config   1      3h30m
    [root@master k8s-prometheus-adapter]# kubectl apply -f custom-metrics-apiserver-deployment.yaml
    deployment.apps/custom-metrics-apiserver created
    [root@master k8s-prometheus-adapter]# kubectl get pods -n prom
    NAME                                        READY   STATUS    RESTARTS   AGE
    custom-metrics-apiserver-667fd4fffd-j5mzm   1/1     Running   0          12s
    kube-state-metrics-6697d66bbb-z9qsw         1/1     Running   0          3h6m
    prometheus-node-exporter-4h9jv              1/1     Running   0          3h37m
    prometheus-node-exporter-hnwv9              1/1     Running   0          3h37m
    prometheus-node-exporter-ngk2x              1/1     Running   0          3h37m
    prometheus-server-75cf46bdbc-z9kh7          1/1     Running   0          3h34m
    [root@master k8s-prometheus-adapter]# kubectl get all -n prom
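
The namespace change shown in the two diffs was made by hand. The sketch below makes the same change with sed and keeps the `.ori` copies the diffs compare against. The function name `retarget_namespace` is hypothetical. It rewrites every line that is exactly `namespace: custom-metrics` (at any indentation), so it could also be pointed at the other upstream manifests used in the second method below. Running it twice on the same file would overwrite the `.ori` backup with the already-edited version.

```shell
#!/bin/sh
# retarget_namespace NEW_NAMESPACE FILE...
# For each FILE: save FILE.ori, then write FILE with every
# "namespace: custom-metrics" line changed to "namespace: NEW_NAMESPACE",
# preserving the line's original indentation.
retarget_namespace() {
  new_ns=$1
  shift
  for f in "$@"; do
    cp "$f" "$f.ori"
    sed 's/^\([[:space:]]*\)namespace: custom-metrics$/\1namespace: '"$new_ns"'/' \
      "$f.ori" > "$f"
  done
}
```

Usage: `retarget_namespace prom custom-metrics-apiserver-deployment.yaml custom-metrics-config-map.yaml`, then check the result with `diff custom-metrics-config-map.yaml{,.ori}` as in the session.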

The custom metrics API group must now be listed:

    [root@master k8s-prometheus-adapter]# kubectl api-versions|grep custom
    custom.metrics.k8s.io/v1beta1
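
In a setup script, the grep check above can be made to fail loudly instead of silently printing nothing. The helper name `require_api_version` below is hypothetical. It reads the output of `kubectl api-versions` on stdin and returns non-zero when the group/version is missing. Once the group is listed, `kubectl get --raw /apis/custom.metrics.k8s.io/v1beta1` should return the list of metrics the adapter exposes.

```shell
#!/bin/sh
# require_api_version GROUP/VERSION  (reads `kubectl api-versions` output on stdin)
# Succeeds only if GROUP/VERSION appears as a whole line.
require_api_version() {
  if grep -qxF "$1"; then
    echo "ok: $1 is served"
  else
    echo "missing: $1 is not served; check the adapter pod logs" >&2
    return 1
  fi
}
```

Usage: `kubectl api-versions | require_api_version custom.metrics.k8s.io/v1beta1`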

A second installation method using the official manifests (not tested)

Method two: download all files from https://github.com/DirectXMan12/k8s-prometheus-adapter/tree/master/deploy/manifests and apply them, changing only the namespace to prom.

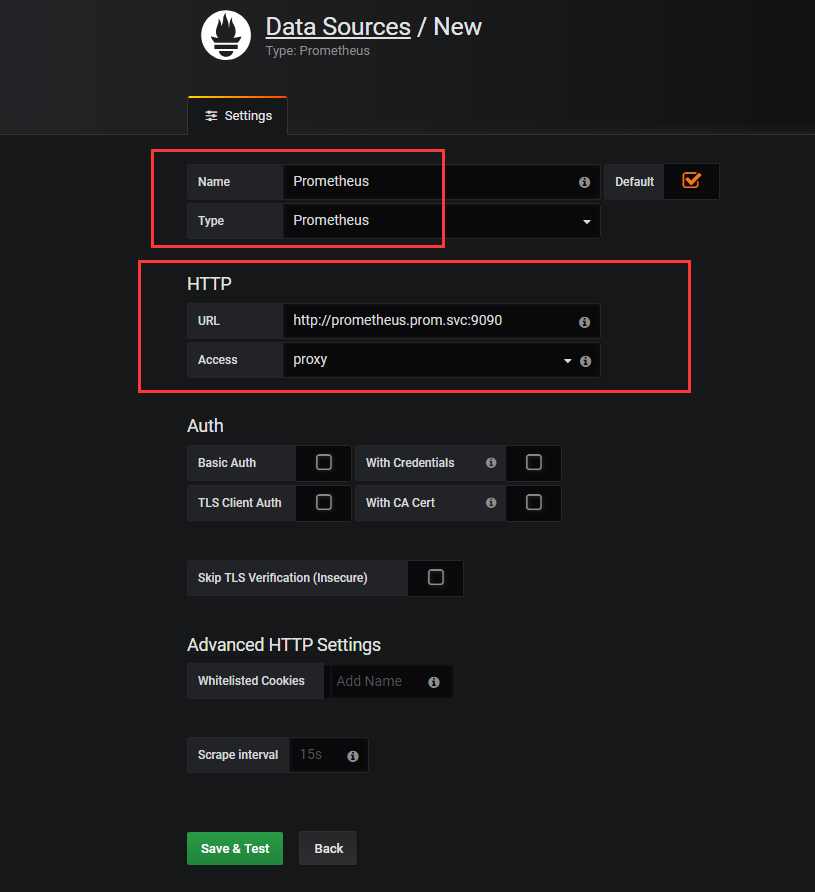

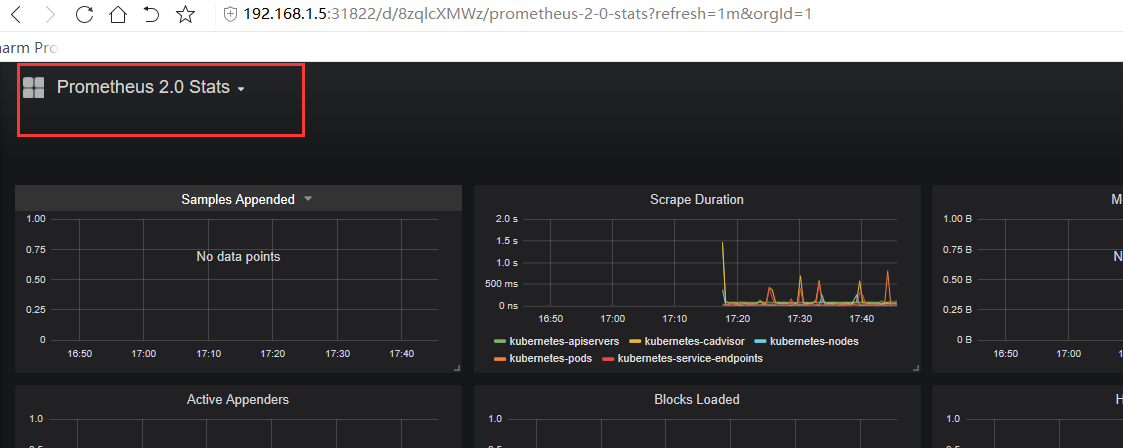

Next, bring the Prometheus data into Grafana.

Install Grafana

    [root@master opt]# wget https://raw.githubusercontent.com/kubernetes-retired/heapster/master/deploy/kube-config/influxdb/grafana.yaml
    [root@master opt]# cp grafana.yaml grafana.yaml.bak
    # changes: namespace kube-system -> prom, image from the Aliyun mirror,
    # INFLUXDB_HOST commented out, Service type NodePort
    [root@master opt]# diff grafana.yaml{,.bak}
    5c5
    < namespace: prom
    ---
    > namespace: kube-system
    16c16
    < image: registry.cn-hangzhou.aliyuncs.com/google_containers/heapster-grafana-amd64:v5.0.4
    ---
    > image: k8s.gcr.io/heapster-grafana-amd64:v5.0.4
    27,28c27,28
    < #- name: INFLUXDB_HOST
    < # value: monitoring-influxdb
    ---
    > - name: INFLUXDB_HOST
    > value: monitoring-influxdb
    61c61
    < namespace: prom
    ---
    > namespace: kube-system
    73d72
    < type: NodePort
    [root@master opt]# kubectl apply -f grafana.yaml
    deployment.extensions/monitoring-grafana created
    service/monitoring-grafana created
    [root@master opt]# kubectl get pods -n prom|grep monitoring-grafana
    monitoring-grafana-75577776dc-47nzb   1/1     Running   0          2m22s

Check the Service

    [root@master opt]# kubectl get svc -n prom |grep monitoring-grafana
    monitoring-grafana   NodePort   10.110.44.96   <none>   80:30351/TCP   4m52s

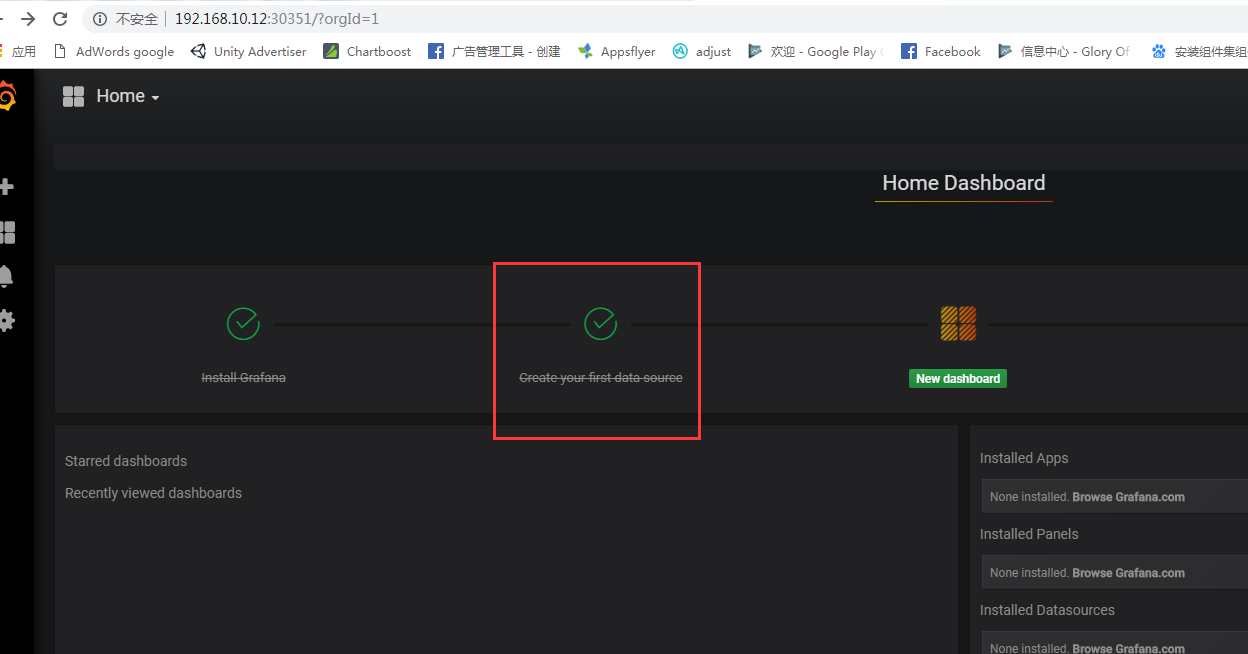

Open Grafana at http://192.168.10.12:30351

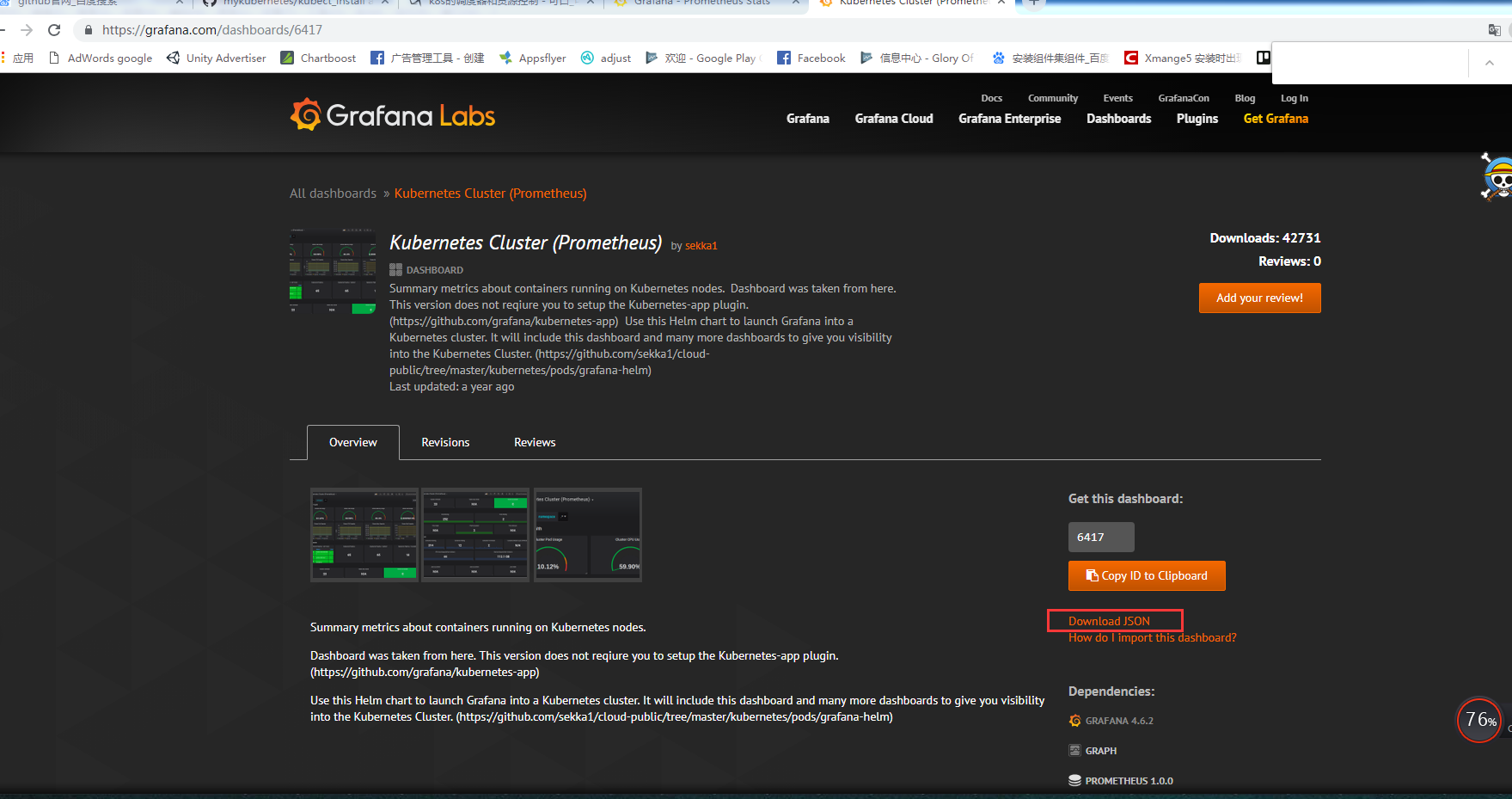

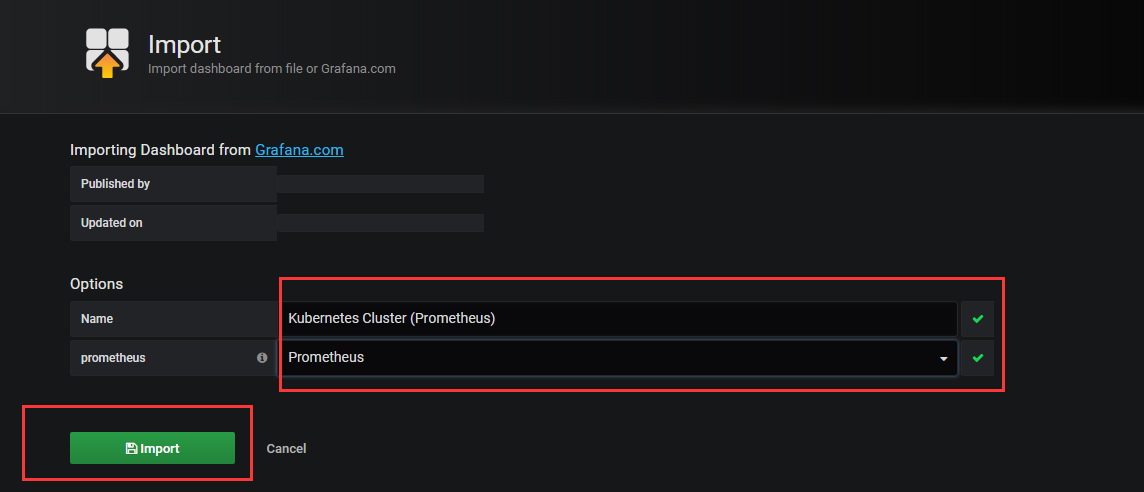

Add a monitoring dashboard template

Browse https://grafana.com/dashboards (search: https://grafana.com/dashboards?search=kubernetes). This setup uses https://grafana.com/dashboards/6417

Download the dashboard's JSON file and save it on the server:

    [root@master opt]# ls /opt/kubernetes-cluster-prometheus_rev1.json
    /opt/kubernetes-cluster-prometheus_rev1.json

Clicking the option above opens the import page below. Finally, import the downloaded JSON file into Grafana.

1) Horizontal Pod Autoscaling (HPA)

    [root@master opt]# kubectl explain hpa
    KIND:     HorizontalPodAutoscaler
    VERSION:  autoscaling/v1
    [root@master opt]# kubectl explain hpa.spec

2) Run a deployment with resource requests and limits from the command line

    # on this (older) kubectl, run with --replicas creates a Deployment; since kubectl 1.18,
    # kubectl run only creates a bare Pod and --replicas is no longer accepted
    [root@master opt]# kubectl run myweb --image=ikubernetes/myapp:v1 --replicas=1 --requests='cpu=50m,memory=256Mi' --limits='cpu=50m,memory=256Mi' --labels='app=myweb' --expose --port=80
    [root@master opt]# kubectl get pods
    NAME                     READY   STATUS    RESTARTS   AGE
    myapp-8w7bm              1/1     Running   2          3d2h
    myapp-s9zmd              1/1     Running   2          136m
    myweb-5dcbdcbf7f-5zhqt   1/1     Running   0          20s

3) Enable autoscaling for the deployment

    [root@master opt]# kubectl autoscale --help
    [root@master opt]# kubectl autoscale deployment myweb --min=1 --max=8 --cpu-percent=60
    horizontalpodautoscaler.autoscaling/myweb autoscaled
    [root@master opt]# kubectl get hpa     # TARGETS shows <unknown> until the first CPU metrics arrive
    NAME    REFERENCE          TARGETS         MINPODS   MAXPODS   REPLICAS   AGE
    myweb   Deployment/myweb   <unknown>/60%   1         8         0          10s
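
`kubectl autoscale` creates an autoscaling/v1 HorizontalPodAutoscaler, the version `kubectl explain hpa` reports above. The sketch below writes a declarative manifest that should be equivalent to the command. The file name `myweb-hpa-v1.yaml` is a hypothetical choice. The manifest assumes the myweb Deployment is served as `apps/v1`, as the author's manifest in step 6 also does. autoscaling/v1 can only target CPU utilization; memory targets need the v2 API used in step 6.

```shell
#!/bin/sh
# Write an autoscaling/v1 manifest matching:
#   kubectl autoscale deployment myweb --min=1 --max=8 --cpu-percent=60
cat > myweb-hpa-v1.yaml <<'EOF'
apiVersion: autoscaling/v1
kind: HorizontalPodAutoscaler
metadata:
  name: myweb
spec:
  scaleTargetRef:
    apiVersion: apps/v1
    kind: Deployment
    name: myweb
  minReplicas: 1
  maxReplicas: 8
  targetCPUUtilizationPercentage: 60
EOF
```

Apply it with `kubectl apply -f myweb-hpa-v1.yaml` instead of running `kubectl autoscale`.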

4) Expose the service outside the cluster for load testing

    [root@master opt]# kubectl get svc
    NAME         TYPE        CLUSTER-IP      EXTERNAL-IP   PORT(S)        AGE
    kubernetes   ClusterIP   10.96.0.1       <none>        443/TCP        3d4h
    myapp        NodePort    10.99.99.99     <none>        80:30080/TCP   3d
    myweb        ClusterIP   10.100.13.140   <none>        80/TCP         6m23s
    [root@master opt]# kubectl patch svc myweb -p '{"spec":{"type":"NodePort"}}'
    service/myweb patched
    [root@master opt]# kubectl get svc
    NAME         TYPE        CLUSTER-IP      EXTERNAL-IP   PORT(S)        AGE
    kubernetes   ClusterIP   10.96.0.1       <none>        443/TCP        3d4h
    myapp        NodePort    10.99.99.99     <none>        80:30080/TCP   3d
    myweb        NodePort    10.100.13.140   <none>        80:32106/TCP   7m11s

5) Load test with ab and watch the HPA react

    # install ab first: yum install httpd-tools -y
    [root@master opt]# curl 192.168.10.12:32106/hostname.html
    myweb-5dcbdcbf7f-5zhqt
    [root@master opt]# ab -c 100 -n 500000 http://192.168.10.12:32106/hostname.html
    # meanwhile, in a second terminal:
    [root@master ~]# kubectl describe hpa     # show the HPA's details and scaling events
    [root@master ~]# kubectl get pods
    NAME                     READY   STATUS    RESTARTS   AGE
    myapp-8w7bm              1/1     Running   2          3d2h
    myapp-s9zmd              1/1     Running   2          152m
    myweb-5dcbdcbf7f-5zhqt   1/1     Running   0          16m
    myweb-5dcbdcbf7f-8k4nv   1/1     Running   0          37s
    [root@master ~]# kubectl get pods
    NAME                     READY   STATUS    RESTARTS   AGE
    myapp-8w7bm              1/1     Running   2          3d2h
    myapp-s9zmd              1/1     Running   2          152m
    myweb-5dcbdcbf7f-5zhqt   1/1     Running   0          16m
    myweb-5dcbdcbf7f-8k4nv   1/1     Running   0          84s
    myweb-5dcbdcbf7f-jfwtz   1/1     Running   0          24s
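
Pods are added under load because the HPA compares the observed metric with its target. The documented core rule is `desiredReplicas = ceil(currentReplicas * currentValue / targetValue)`. Scaling is skipped while the ratio stays within a default 10% tolerance. With several metrics, the largest proposal wins, and the result is clamped to the min/max replica bounds. The sketch below is a simplified model of that rule for intuition only, not the controller's code. It ignores stabilization windows, not-ready pods and missing metrics. The function names are hypothetical, and values must be integers in matching units (percent for CPU, bytes for memory).

```shell
#!/bin/sh
# hpa_proposal REPLICAS VALUE TARGET -> replica count proposed by one metric
hpa_proposal() {
  p_replicas=$1 p_value=$2 p_target=$3
  # Within tolerance (0.9 <= value/target <= 1.1): keep the current count.
  # Compared as integers to avoid floating point in sh.
  if [ $((p_value * 100)) -ge $((p_target * 90)) ] &&
     [ $((p_value * 100)) -le $((p_target * 110)) ]; then
    echo "$p_replicas"
  else
    # Integer ceiling of replicas * value / target.
    echo $(( (p_replicas * p_value + p_target - 1) / p_target ))
  fi
}

# desired_replicas REPLICAS MIN MAX VALUE1 TARGET1 [VALUE2 TARGET2 ...]
# Takes the largest per-metric proposal, then clamps it to [MIN, MAX].
desired_replicas() {
  d_replicas=$1 d_min=$2 d_max=$3
  shift 3
  d_best=0
  while [ $# -ge 2 ]; do
    d_p=$(hpa_proposal "$d_replicas" "$1" "$2")
    if [ "$d_p" -gt "$d_best" ]; then d_best=$d_p; fi
    shift 2
  done
  if [ "$d_best" -lt "$d_min" ]; then d_best=$d_min; fi
  if [ "$d_best" -gt "$d_max" ]; then d_best=$d_max; fi
  echo "$d_best"
}
```

Step 6 below shows one pod at 102% CPU against a 55% target and 7340032 bytes of memory against 50Mi (52428800 bytes). For those values, `desired_replicas 1 1 10 102 55 7340032 52428800` prints 2. CPU proposes 2, memory proposes 1, and the larger wins, which matches the REPLICAS column there.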

6) Control scaling with an HPA YAML manifest

    [root@master hpa]# kubectl get hpa
    NAME    REFERENCE          TARGETS   MINPODS   MAXPODS   REPLICAS   AGE
    myweb   Deployment/myweb   0%/60%    1         8         1          14h
    [root@master hpa]# kubectl delete hpa myweb
    horizontalpodautoscaler.autoscaling "myweb" deleted
    [root@master hpa]# kubectl get hpa
    No resources found.
    [root@master hpa]# cat hpa-v2-demo.yaml
    apiVersion: autoscaling/v2beta1
    kind: HorizontalPodAutoscaler
    metadata:
      name: myweb-hpa-v2
    spec:
      scaleTargetRef:
        apiVersion: apps/v1
        kind: Deployment
        name: myweb
      minReplicas: 1
      maxReplicas: 10
      metrics:
      - type: Resource
        resource:
          name: cpu
          targetAverageUtilization: 55
      - type: Resource
        resource:
          name: memory
          targetAverageValue: 50Mi
    [root@master hpa]# kubectl apply -f hpa-v2-demo.yaml
    horizontalpodautoscaler.autoscaling/myweb-hpa-v2 created
    [root@master ~]# kubectl get hpa
    NAME           REFERENCE          TARGETS                 MINPODS   MAXPODS   REPLICAS   AGE
    myweb-hpa-v2   Deployment/myweb   7270400/50Mi, 0%/55%    1         10        1          93s
    [root@master ~]# kubectl get hpa
    NAME           REFERENCE          TARGETS                 MINPODS   MAXPODS   REPLICAS   AGE
    myweb-hpa-v2   Deployment/myweb   7340032/50Mi, 102%/55%  1         10        2          3m45s
    [root@master ~]# kubectl get pods
    NAME                     READY   STATUS    RESTARTS   AGE
    myapp-8w7bm              1/1     Running   2          3d17h
    myapp-s9zmd              1/1     Running   2          17h
    myweb-5dcbdcbf7f-5zhqt   1/1     Running   0          14h
    myweb-5dcbdcbf7f-j65g7   1/1     Running   0          47s
    myweb-5dcbdcbf7f-r972k   1/1     Running   0          108s
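
The manifest above uses autoscaling/v2beta1. Kubernetes 1.25 and later no longer serve that version; autoscaling/v2 is available from 1.23. The sketch below rewrites the same HPA for autoscaling/v2, where each metric's goal moves into a `target` block with an explicit `type`. It is written as a shell heredoc to keep one language for the examples. The file name `hpa-v2-stable.yaml` is hypothetical. Apply it with `kubectl apply -f hpa-v2-stable.yaml`.

```shell
#!/bin/sh
# Same scaling policy as hpa-v2-demo.yaml, expressed in autoscaling/v2:
#   targetAverageUtilization: 55 -> target: {type: Utilization, averageUtilization: 55}
#   targetAverageValue: 50Mi     -> target: {type: AverageValue, averageValue: 50Mi}
cat > hpa-v2-stable.yaml <<'EOF'
apiVersion: autoscaling/v2
kind: HorizontalPodAutoscaler
metadata:
  name: myweb-hpa-v2
spec:
  scaleTargetRef:
    apiVersion: apps/v1
    kind: Deployment
    name: myweb
  minReplicas: 1
  maxReplicas: 10
  metrics:
  - type: Resource
    resource:
      name: cpu
      target:
        type: Utilization
        averageUtilization: 55
  - type: Resource
    resource:
      name: memory
      target:
        type: AverageValue
        averageValue: 50Mi
EOF
```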

浙公网安备 33010602011771号
