2020 Comprehensive Systems Practice: Lab Assignment 4

(1) Tomcat + Nginx load balancing with Docker Compose

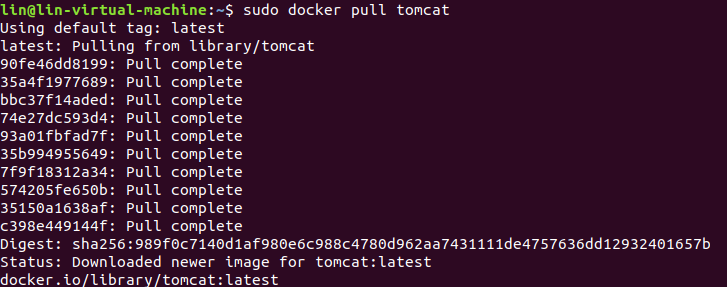

- Pull the images

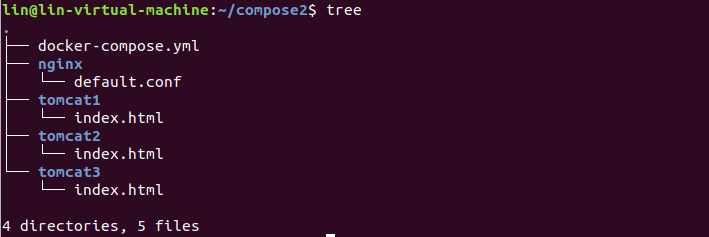

- Create the following files in the project directory

- docker-compose.yml

```yaml
version: "3"
services:
  nginx:
    image: nginx:latest
    ports:
      - "80:80"
    volumes:
      - ./nginx/default.conf:/etc/nginx/conf.d/default.conf
    depends_on:
      - tomcat1
      - tomcat2
      - tomcat3
  tomcat1:
    image: tomcat:latest
    container_name: tomcat1
    volumes:
      - ./tomcat1:/usr/local/tomcat/webapps/ROOT
  tomcat2:
    image: tomcat:latest
    container_name: tomcat2
    volumes:
      - ./tomcat2:/usr/local/tomcat/webapps/ROOT
  tomcat3:
    image: tomcat:latest
    container_name: tomcat3
    volumes:
      - ./tomcat3:/usr/local/tomcat/webapps/ROOT
```

- default.conf

```nginx
upstream tomcats {
    server tomcat1:8080;
    server tomcat2:8080;
    server tomcat3:8080;
}

server {
    listen 80;
    server_name localhost;

    location / {
        proxy_pass http://tomcats;  # forward requests to the tomcats upstream group
    }
}
```

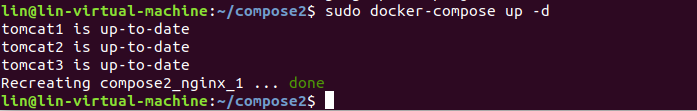

- Start the services with docker-compose

```bash
sudo docker-compose up -d
```

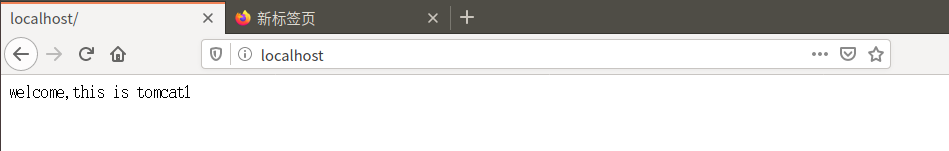

- 用浏览器查看

实现nginx的2种负载均衡策略

-

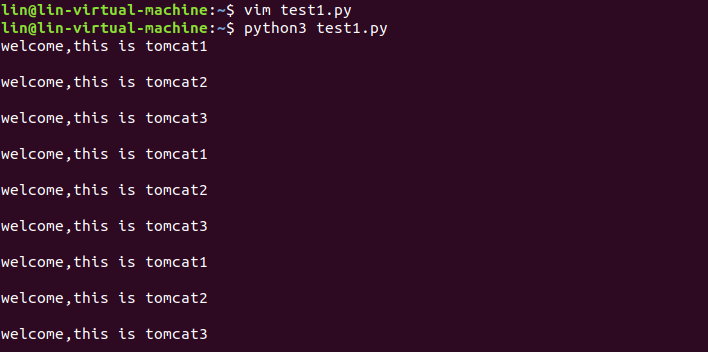

1.负载均衡策略:轮询策略

-

test1.py

```python
import requests

# Send 10 requests through nginx and print which Tomcat answered each one
for i in range(10):
    response = requests.get("http://localhost")
    print(response.text)
```

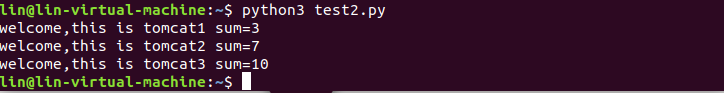
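The upstream block in default.conf names no balancing method, so nginx falls back to round robin and the ten requests in test1.py should rotate through the three Tomcats. The minimal Python sketch below only simulates that rotation; the function name is hypothetical, real nginx may start the cycle at a different server, and with several worker processes each worker keeps its own rotation unless the upstream declares a shared `zone`, so the observed order need not be perfect.

```python
from itertools import cycle, islice

# Servers listed in the upstream "tomcats" block of default.conf
SERVERS = ["tomcat1", "tomcat2", "tomcat3"]


def round_robin(servers, n_requests):
    """Return the server chosen for each of n_requests under plain round robin."""
    return list(islice(cycle(servers), n_requests))


if __name__ == "__main__":
    for i, server in enumerate(round_robin(SERVERS, 10), start=1):
        print(f"request {i:2d} -> {server}")
```

With ten requests and three servers, one server necessarily answers four times and the other two three times each.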

- Strategy 2: weighted round robin

- Modify default.conf

```nginx
upstream tomcats {
    server tomcat1:8080 weight=1;
    server tomcat2:8080 weight=2;
    server tomcat3:8080 weight=3;
}

server {
    listen 80;
    server_name localhost;

    location / {
        proxy_pass http://tomcats;  # forward requests to the tomcats upstream group
    }
}
```

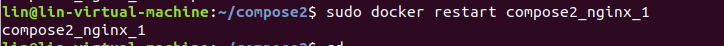
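With weight=1, 2 and 3, nginx sends roughly 1/6, 2/6 and 3/6 of the requests to tomcat1, tomcat2 and tomcat3. nginx interleaves the picks with a scheme usually called smooth weighted round robin; the Python sketch below reimplements that idea for illustration only (it is not nginx's source, and the function name is hypothetical).

```python
from collections import Counter

# Weights from the modified upstream block in default.conf
WEIGHTS = {"tomcat1": 1, "tomcat2": 2, "tomcat3": 3}


def smooth_weighted_rr(weights, n_requests):
    """Pick a server per request with smooth weighted round robin.

    Each round, every server's current weight grows by its configured weight;
    the server with the largest current weight wins and is then reduced by the
    total weight, so heavier servers win more often without long bursts.
    """
    total = sum(weights.values())
    current = {name: 0 for name in weights}
    picks = []
    for _ in range(n_requests):
        for name, w in weights.items():
            current[name] += w
        # max() keeps the first server on ties, i.e. configuration order
        best = max(current, key=current.get)
        current[best] -= total
        picks.append(best)
    return picks


if __name__ == "__main__":
    print(smooth_weighted_rr(WEIGHTS, 6))
    print(Counter(smooth_weighted_rr(WEIGHTS, 20)))
```

The pattern repeats every 6 requests (the sum of the weights), so 20 requests give tomcat1, tomcat2 and tomcat3 3, 7 and 10 picks. Counts measured against a real nginx can differ a little, for example because each nginx worker process keeps its own state.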

- Restart the containers

- test2.py

```python
import requests

# Each Tomcat's page returns this text, including the trailing newline
str1 = 'welcome,this is tomcat1\n'
str2 = 'welcome,this is tomcat2\n'
str3 = 'welcome,this is tomcat3\n'
url = "http://localhost"

sum1 = 0
sum2 = 0
sum3 = 0
for i in range(20):
    response = requests.get(url)
    # Compare response.text (a str); response.content is bytes and never equals a str
    if response.text == str1:
        sum1 += 1
    elif response.text == str2:
        sum2 += 1
    elif response.text == str3:
        sum3 += 1

print('welcome,this is tomcat1 sum={}'.format(sum1))
print('welcome,this is tomcat2 sum={}'.format(sum2))
print('welcome,this is tomcat3 sum={}'.format(sum3))
```

Across the 20 requests, the number of responses from each Tomcat roughly matches the 1:2:3 weight ratio set in the nginx configuration.

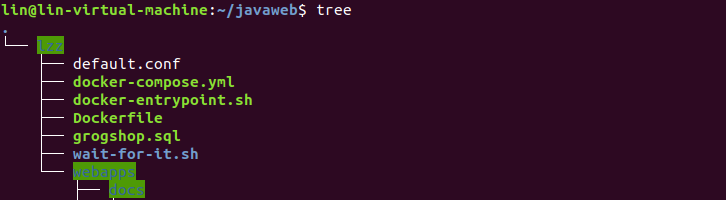
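For reference, the ideal number of requests for server $i$ out of $N$ is its weight's share of the total weight; with $N = 20$ and weights 1, 2 and 3:

$$E_i = N\,\frac{w_i}{\sum_j w_j}, \qquad E_1 = \frac{20 \cdot 1}{6} \approx 3.3, \quad E_2 = \frac{20 \cdot 2}{6} \approx 6.7, \quad E_3 = \frac{20 \cdot 3}{6} = 10$$

Since counts must be whole numbers, an exact 1:2:3 split is impossible for 20 requests; the closest integer split is about 3, 7 and 10.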

(2) Deploying a Java web runtime environment with Docker Compose

- docker-compose.yml

```yaml
version: "3"
services:
  tomcat00:
    image: tomcat
    hostname: hostname
    container_name: tomcat00
    ports:
      - "5050:8080"  # use port 5050 to open this Tomcat directly in the browser
    volumes:  # data volumes
      - "./webapps:/usr/local/tomcat/webapps"
      - ./wait-for-it.sh:/wait-for-it.sh
    networks:  # static IP on the webnet network
      webnet:
        ipv4_address: 15.22.0.15
  tomcat01:
    image: tomcat
    hostname: hostname
    container_name: tomcat01
    ports:
      - "5055:8080"
    volumes:
      - "./webapps:/usr/local/tomcat/webapps"
      - ./wait-for-it.sh:/wait-for-it.sh
    networks:  # static IP on the webnet network
      webnet:
        ipv4_address: 15.22.0.16
  mymysql:  # MySQL service
    build: .  # build the MySQL image from the Dockerfile in this directory
    image: mymysql:test
    container_name: mymysql
    ports:
      - "3309:3306"
    command:
      - --character-set-server=utf8mb4
      - --collation-server=utf8mb4_unicode_ci
    environment:
      MYSQL_ROOT_PASSWORD: "123456"
    networks:
      webnet:
        ipv4_address: 15.22.0.6
  nginx:
    image: nginx
    container_name: "nginx-tomcat"
    ports:
      - "8080:8080"
    volumes:
      - ./default.conf:/etc/nginx/conf.d/default.conf  # mount the config file
    tty: true
    stdin_open: true
    networks:
      webnet:
        ipv4_address: 15.22.0.7
networks:  # network settings
  webnet:
    driver: bridge  # bridge mode
    ipam:
      config:
        - subnet: 15.22.0.0/24  # subnet
```
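Every static ipv4_address has to fall inside the webnet subnet and be unique, otherwise Docker refuses to attach the container. A small Python check of the addresses used above with the standard ipaddress module; the dict simply copies the values from the compose file (it does not parse YAML) and the function name is hypothetical.

```python
import ipaddress

# Values copied from the docker-compose.yml above
SUBNET = "15.22.0.0/24"
STATIC_IPS = {
    "tomcat00": "15.22.0.15",
    "tomcat01": "15.22.0.16",
    "mymysql": "15.22.0.6",
    "nginx": "15.22.0.7",
}


def check_static_ips(subnet, static_ips):
    """Return a list of problems with the static addresses (empty if all fine)."""
    net = ipaddress.ip_network(subnet)
    problems = []
    seen = {}
    for service, addr in static_ips.items():
        ip = ipaddress.ip_address(addr)
        if ip not in net:
            problems.append(f"{service}: {ip} is outside {net}")
        elif ip in (net.network_address, net.broadcast_address):
            problems.append(f"{service}: {ip} is the network or broadcast address")
        if ip in seen:
            problems.append(f"{service}: {ip} is already used by {seen[ip]}")
        seen[ip] = service
    return problems


if __name__ == "__main__":
    print(check_static_ips(SUBNET, STATIC_IPS) or "all static IPs OK")
    print("subnet is private:", ipaddress.ip_network(SUBNET).is_private)
```

The second line reports that 15.22.0.0/24 is not private address space. That works on an isolated bridge, but containers on it cannot reach real public hosts that use the same addresses; a range such as 172.x or 192.168.x avoids this.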

- default.conf

```nginx
upstream tomcat {
    server tomcat00:8080;
    server tomcat01:8080;
}

server {
    listen 8080;
    server_name localhost;

    location / {
        proxy_pass http://tomcat;
    }
}
```

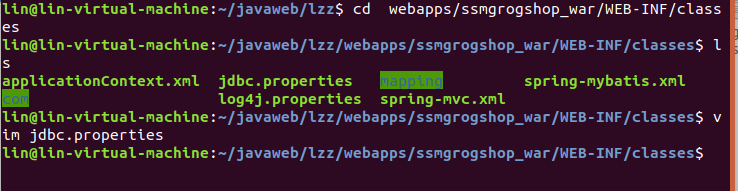

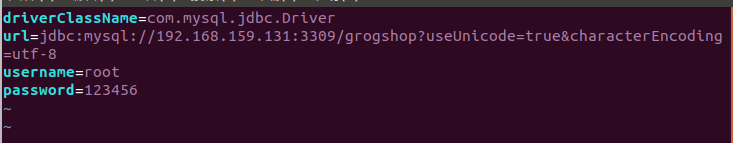

- Change the database IP in the web application's database connection settings

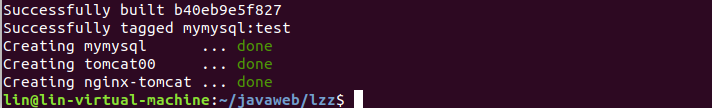

- Start the containers

```bash
sudo docker-compose up -d
```

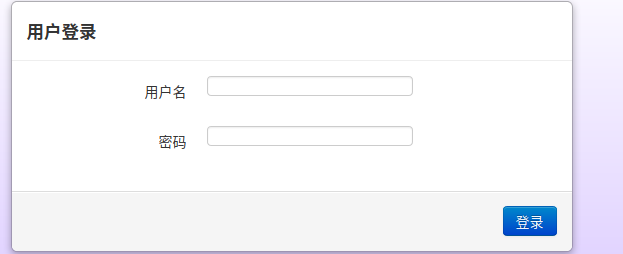

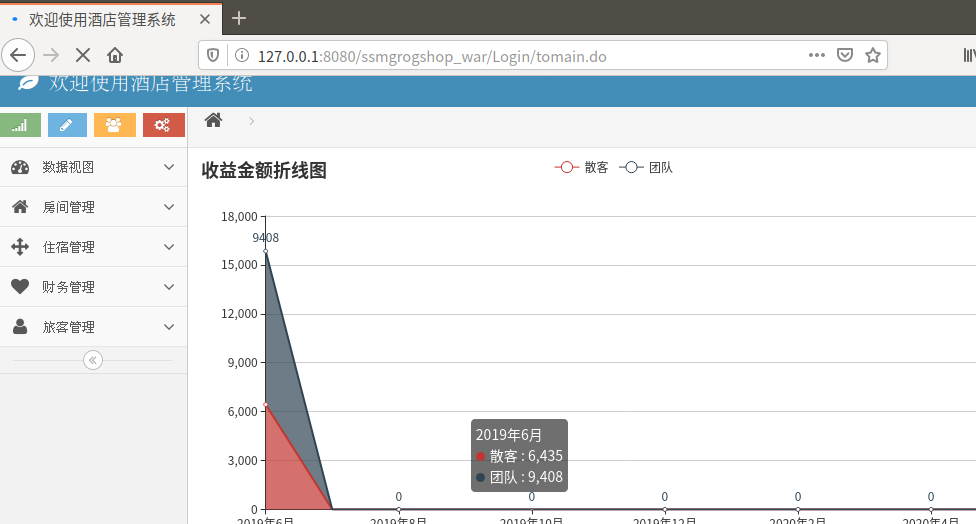
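The compose file mounts wait-for-it.sh into both Tomcat containers; scripts of that name block until a TCP host:port accepts connections, which helps when Tomcat comes up before MySQL. A rough Python equivalent, as a sketch only (the function name, timeout and polling interval are assumptions, not part of the original setup):

```python
import socket
import time


def wait_for_port(host, port, timeout=30.0, interval=0.5):
    """Poll host:port until a TCP connection succeeds or `timeout` seconds pass.

    Returns True if the port became reachable, False on timeout.
    """
    deadline = time.monotonic() + timeout
    while True:
        try:
            # A successful connect means something is listening on host:port
            with socket.create_connection((host, port), timeout=interval):
                return True
        except OSError:  # refused, unreachable or timed out
            if time.monotonic() >= deadline:
                return False
            time.sleep(interval)
```

For example, `wait_for_port("127.0.0.1", 3309)` on the host checks the MySQL port published in docker-compose.yml. An open port only shows that MySQL is listening, not that the application's database and tables exist.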

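- Open the front-end page in a browser (port 8080 is nginx, which balances requests across tomcat00 and tomcat01)

http://127.0.0.1:8080/ssmgrogshop_war

Username: sa, password: 123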

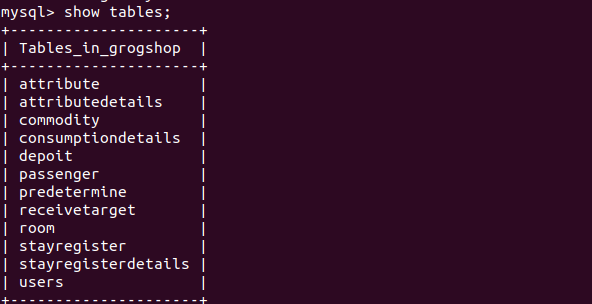

- Inspect the database from a terminal

(3) Building a big-data cluster environment with Docker

- Environment

Ubuntu 18.04 LTS

OpenJDK 1.8

Hadoop 3.1.3

- Switch apt to the Tsinghua (TUNA) mirror

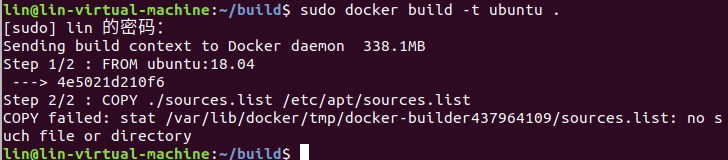

- Dockerfile

```dockerfile
FROM ubuntu:18.04
COPY ./sources.list /etc/apt/sources.list
```

- sources.list (the suite names must match the base image: Ubuntu 18.04 is bionic, while focal is Ubuntu 20.04)

```
# Source-code (deb-src) mirrors are commented out by default to speed up apt update; uncomment them if needed
deb http://mirrors.tuna.tsinghua.edu.cn/ubuntu/ bionic main restricted universe multiverse
# deb-src http://mirrors.tuna.tsinghua.edu.cn/ubuntu/ bionic main restricted universe multiverse
deb http://mirrors.tuna.tsinghua.edu.cn/ubuntu/ bionic-updates main restricted universe multiverse
# deb-src http://mirrors.tuna.tsinghua.edu.cn/ubuntu/ bionic-updates main restricted universe multiverse
deb http://mirrors.tuna.tsinghua.edu.cn/ubuntu/ bionic-backports main restricted universe multiverse
# deb-src http://mirrors.tuna.tsinghua.edu.cn/ubuntu/ bionic-backports main restricted universe multiverse
deb http://mirrors.tuna.tsinghua.edu.cn/ubuntu/ bionic-security main restricted universe multiverse
# deb-src http://mirrors.tuna.tsinghua.edu.cn/ubuntu/ bionic-security main restricted universe multiverse

# Pre-release (proposed) repository; enabling it is not recommended
# deb http://mirrors.tuna.tsinghua.edu.cn/ubuntu/ bionic-proposed main restricted universe multiverse
# deb-src http://mirrors.tuna.tsinghua.edu.cn/ubuntu/ bionic-proposed main restricted universe multiverse
```
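The suite in every active sources.list entry has to match the release of the base image: ubuntu:18.04 is bionic, and entries for another release such as focal (20.04) make apt pull packages built for a different Ubuntu. A small Python sketch (the function name is hypothetical) that reports active deb/deb-src lines whose suite is neither the expected codename nor one of its -updates/-security/-backports variants:

```python
def mismatched_suites(sources_text, codename):
    """Return (line_number, suite) for active deb/deb-src entries whose suite
    is neither `codename` nor `codename-<pocket>` (e.g. bionic-updates)."""
    bad = []
    for number, raw in enumerate(sources_text.splitlines(), start=1):
        fields = raw.split()
        # Skip blank lines, comments and anything that is not a one-line entry
        if not fields or fields[0] not in ("deb", "deb-src"):
            continue
        rest = fields[1:]
        if rest and rest[0].startswith("["):
            # Drop an optional options block such as [arch=amd64]
            while rest and not rest[0].endswith("]"):
                rest = rest[1:]
            rest = rest[1:]
        if len(rest) < 2:
            continue  # malformed entry: needs at least a URI and a suite
        suite = rest[1]
        if suite != codename and not suite.startswith(codename + "-"):
            bad.append((number, suite))
    return bad
```

Usage: `mismatched_suites(open("sources.list").read(), "bionic")` returns an empty list when every active entry targets 18.04.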

- Build the image and run a container

```bash
sudo docker build -t ubuntu .
sudo docker run -it --name ubuntu ubuntu
```

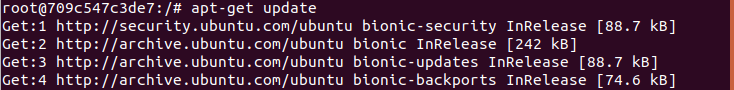

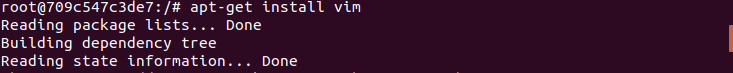

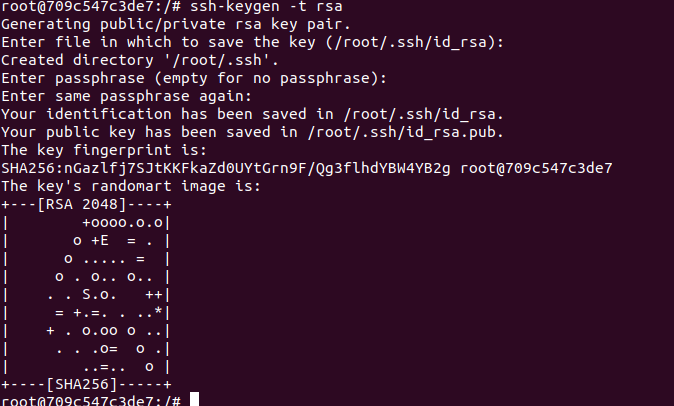

- Update the package index and install vim and ssh

```bash
apt-get update
apt-get install vim
apt-get install ssh
```

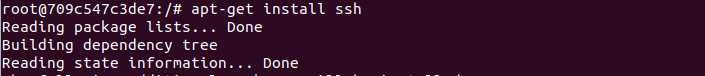

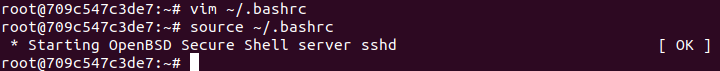

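- Start the sshd server, and make it start automatically

```bash
/etc/init.d/ssh start
vim ~/.bashrc
```

Append this line to the end of ~/.bashrc so sshd starts whenever a shell is opened:

```bash
/etc/init.d/ssh start
```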

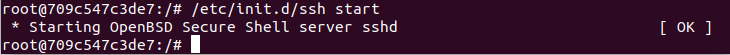

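- Configure passwordless SSH login (generate a key pair and authorize it)

```bash
ssh-keygen -t rsa
cd ~/.ssh
cat id_rsa.pub >> authorized_keys
```

Because every cluster node is later started from this same image, they all share this key pair, so master can log in to the slaves without a password.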

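Installing the JDK

```bash
apt-get install openjdk-8-jdk
```

- Configure the environment variables: append the following to the end of ~/.bashrc, then run `source ~/.bashrc` to apply them

```bash
export JAVA_HOME=/usr/lib/jvm/java-8-openjdk-amd64/
export PATH=$PATH:$JAVA_HOME/bin
```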

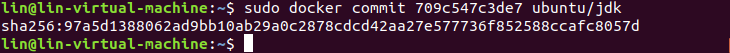

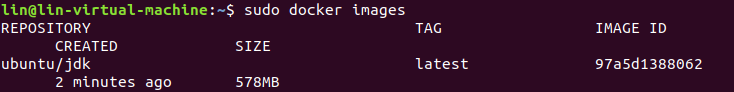

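- Save the container as a new image (used below as ubuntu/jdk)

Installing Hadoop

- Run a container from that image, mounting the host directory /home/lin/build (which holds the downloaded Hadoop archive) at /root/build

```bash
sudo docker run -it -v /home/lin/build:/root/build --name ubuntu-jdkinstalled ubuntu/jdk
```

- Extract the downloaded Hadoop archive

```bash
cd /root/build
tar -zxvf hadoop-3.1.3.tar.gz -C /usr/local
```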

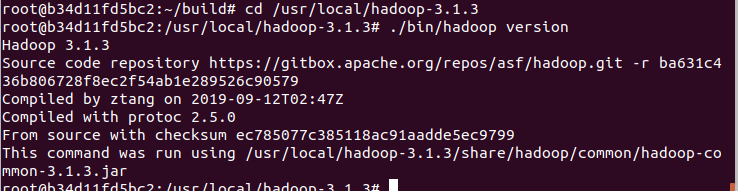

- Verify the installation

```bash
cd /usr/local/hadoop-3.1.3
./bin/hadoop version
```

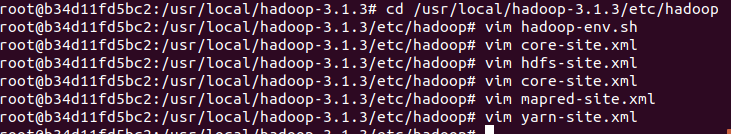

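Configuring the Hadoop cluster

The configuration files below are in /usr/local/hadoop-3.1.3/etc/hadoop.

- hadoop-env.sh

```bash
vim hadoop-env.sh
```

Add:

```bash
export JAVA_HOME=/usr/lib/jvm/java-8-openjdk-amd64/
```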

- core-site.xml

```xml
<configuration>
    <property>
        <name>hadoop.tmp.dir</name>
        <value>file:/usr/local/hadoop-3.1.3/tmp</value>
        <description>A base for other temporary directories.</description>
    </property>
    <property>
        <name>fs.defaultFS</name>
        <value>hdfs://master:9000</value>
    </property>
</configuration>
```

- hdfs-site.xml

```xml
<configuration>
    <property>
        <name>dfs.namenode.name.dir</name>
        <value>file:/usr/local/hadoop-3.1.3/namenode_dir</value>
    </property>
    <property>
        <name>dfs.datanode.data.dir</name>
        <value>file:/usr/local/hadoop-3.1.3/datanode_dir</value>
    </property>
    <property>
        <!-- only two DataNodes (slave01, slave02), so a replication factor above 2 cannot be met -->
        <name>dfs.replication</name>
        <value>2</value>
    </property>
</configuration>
```

- mapred-site.xml

```xml
<configuration>
    <property>
        <name>mapreduce.framework.name</name>
        <value>yarn</value>
    </property>
    <property>
        <name>yarn.app.mapreduce.am.env</name>
        <value>HADOOP_MAPRED_HOME=/usr/local/hadoop-3.1.3</value>
    </property>
    <property>
        <name>mapreduce.map.env</name>
        <value>HADOOP_MAPRED_HOME=/usr/local/hadoop-3.1.3</value>
    </property>
    <property>
        <name>mapreduce.reduce.env</name>
        <value>HADOOP_MAPRED_HOME=/usr/local/hadoop-3.1.3</value>
    </property>
</configuration>
```

- yarn-site.xml

```xml
<configuration>
    <property>
        <name>yarn.nodemanager.aux-services</name>
        <value>mapreduce_shuffle</value>
    </property>
    <property>
        <name>yarn.resourcemanager.hostname</name>
        <value>master</value>
    </property>
    <property>
        <name>yarn.nodemanager.vmem-pmem-ratio</name>
        <value>2.7</value>
    </property>
</configuration>
```

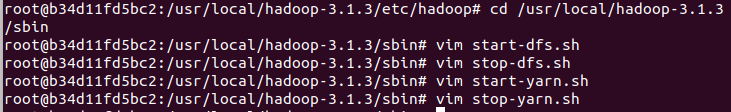
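All four *-site.xml files share the same configuration/property/name/value layout. The Python sketch below reads such a file into a dict with the standard xml.etree.ElementTree module and checks that dfs.replication does not exceed the number of DataNodes (two here, slave01 and slave02, once the workers file lists them). Both helper names are hypothetical.

```python
import xml.etree.ElementTree as ET


def read_site_xml(xml_text):
    """Return {name: value} for every <property> in a Hadoop *-site.xml string."""
    root = ET.fromstring(xml_text)
    props = {}
    for prop in root.findall("property"):
        name = prop.findtext("name")
        if name:
            props[name.strip()] = (prop.findtext("value") or "").strip()
    return props


def replication_ok(hdfs_props, n_datanodes):
    """True if dfs.replication (Hadoop's default is 3) fits the DataNode count."""
    return int(hdfs_props.get("dfs.replication", "3")) <= n_datanodes


if __name__ == "__main__":
    sample = """<configuration>
      <property><name>dfs.replication</name><value>2</value></property>
    </configuration>"""
    props = read_site_xml(sample)
    print(props, "fits 2 DataNodes:", replication_ok(props, 2))
```

To check the real file, pass its text, e.g. `read_site_xml(open("hdfs-site.xml").read())`, on any machine with Python 3.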

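- Edit the start and stop scripts (Hadoop 3 refuses to start its daemons as root unless these user variables are set)

- Change to the sbin directory

```bash
cd /usr/local/hadoop-3.1.3/sbin
```

- Add the following to both start-dfs.sh and stop-dfs.sh

```bash
HDFS_DATANODE_USER=root
HADOOP_SECURE_DN_USER=hdfs
HDFS_NAMENODE_USER=root
HDFS_SECONDARYNAMENODE_USER=root
```

- Add the following to both start-yarn.sh and stop-yarn.sh

```bash
YARN_RESOURCEMANAGER_USER=root
HADOOP_SECURE_DN_USER=yarn
YARN_NODEMANAGER_USER=root
```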

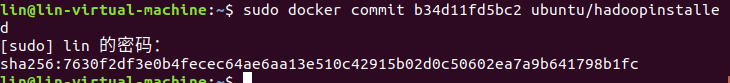

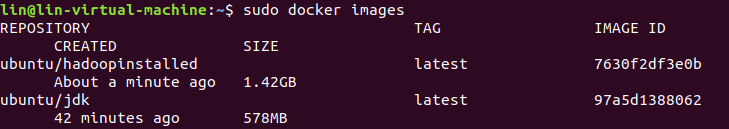

- Save the container as an image (used below as ubuntu/hadoopinstalled)

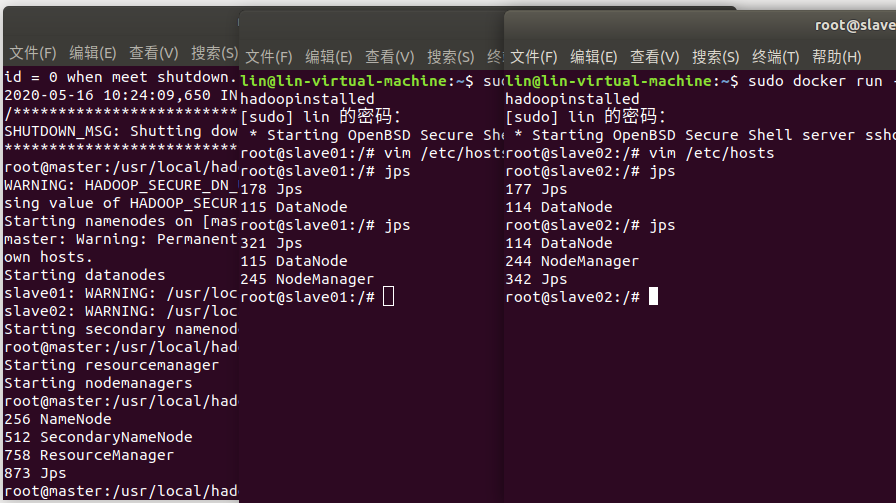

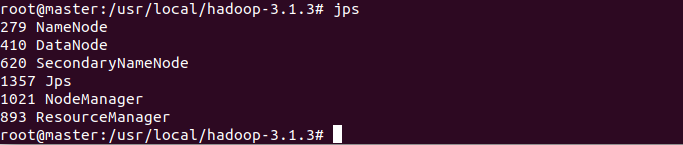

Running Hadoop

- Open three terminals, one each for the master, slave01 and slave02 nodes of the Hadoop cluster

```bash
# first terminal
docker run -it -h master --name master ubuntu/hadoopinstalled
# second terminal
docker run -it -h slave01 --name slave01 ubuntu/hadoopinstalled
# third terminal
docker run -it -h slave02 --name slave02 ubuntu/hadoopinstalled
```

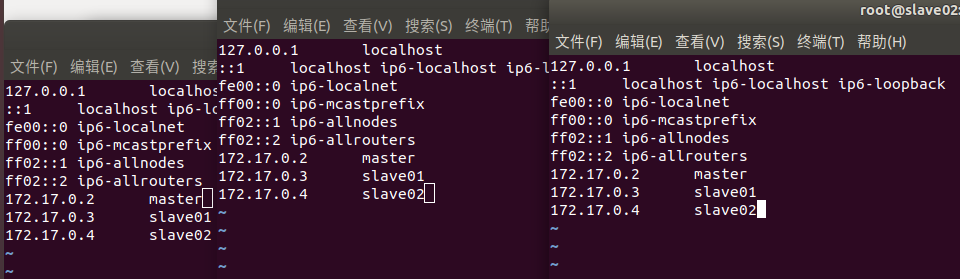

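- Configure the addresses of master, slave01 and slave02 in /etc/hosts. The 172.17.0.x addresses below are the ones Docker's default bridge network assigned in this run; each container's own address appears in its /etc/hosts.

```bash
vim /etc/hosts
```

```
172.17.0.2 master
172.17.0.3 slave01
172.17.0.4 slave02
```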

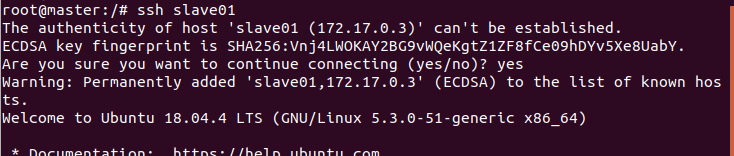

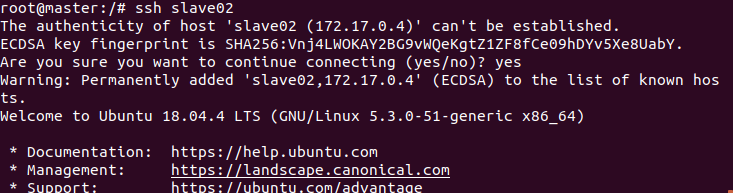

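- Test SSH from master (type `exit` to return to master after each login)

```bash
ssh slave01
ssh slave02
```

- Edit the workers file

```bash
vim /usr/local/hadoop-3.1.3/etc/hadoop/workers
```

Replace localhost with:

```
slave01
slave02
```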

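- Start the cluster (on master)

```bash
cd /usr/local/hadoop-3.1.3
bin/hdfs namenode -format   # only before the first start; reformatting wipes the HDFS metadata
sbin/start-dfs.sh
sbin/start-yarn.sh
```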

- 运行实例

./bin/hdfs dfs -mkdir -p /user/root/input

./bin/hdfs -put ./etc/hadoop/*.xml /user/root/input

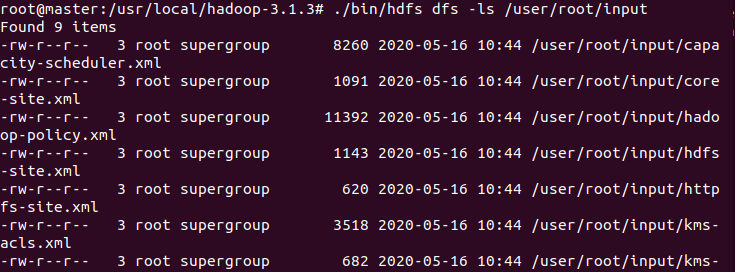

./bin/hdfs dfs -ls /user/root/input

./bin/hadoop jar share/hadoop/mapreduce/hadoop-mapreduce-examples-3.2.1.jar grep input output 'dfs[a-z.]+'

./bin/hdfs dfs -cat output/*
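The grep example counts how often each match of the regular expression dfs[a-z.]+ occurs in the input files and lists the matches by count, highest first, as "count, tab, match". The same computation in plain Python, written as a map step and a reduce step to mirror the MapReduce structure; this is a local illustration only (Hadoop runs it as chained jobs across the cluster), the function names are hypothetical, and ties are broken alphabetically here.

```python
import re
from collections import Counter
from pathlib import Path

PATTERN = re.compile(r"dfs[a-z.]+")


def map_phase(lines):
    """Map: emit (match, 1) for every regex match in every line."""
    for line in lines:
        for match in PATTERN.findall(line):
            yield match, 1


def reduce_phase(pairs):
    """Reduce: sum the 1s per match, then sort by count (descending), then match."""
    totals = Counter()
    for key, one in pairs:
        totals[key] += one
    return sorted(totals.items(), key=lambda kv: (-kv[1], kv[0]))


def local_grep(paths):
    """Run both phases over the lines of the given files."""
    lines = (line for p in paths for line in Path(p).read_text().splitlines())
    return reduce_phase(map_phase(lines))


if __name__ == "__main__":
    sample = ["<name>dfs.replication</name>", "<name>dfs.namenode.name.dir</name>"]
    for word, count in reduce_phase(map_phase(sample)):
        print(f"{count}\t{word}")
```

Calling `local_grep` on the *.xml files under /usr/local/hadoop-3.1.3/etc/hadoop should give counts comparable to `hdfs dfs -cat output/*`, though the order of equal counts may differ.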

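(4) Main problems and solutions

(1) While writing the Python test for the weighted strategy, I did not notice that the fetched responses end with a "\n" newline, and was stuck on it for a long time.

(2) When starting the Hadoop cluster, not all nodes came up, possibly because the workers file on master had not been edited (I am not sure); deleting the containers and redoing the steps fixed it.

(3) Give the virtual machine plenty of memory; otherwise it may freeze when starting the Hadoop cluster, and an example job with too many input files can also freeze it.

(4) Remember to shut the Hadoop cluster down when you are done with it (sbin/stop-yarn.sh and sbin/stop-dfs.sh).

(5) Time spent

This lab was clearly more complex than the previous ones; it took me about two full days.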