Redis 3.2.4 Cluster in Practice

I. Redis Cluster Design

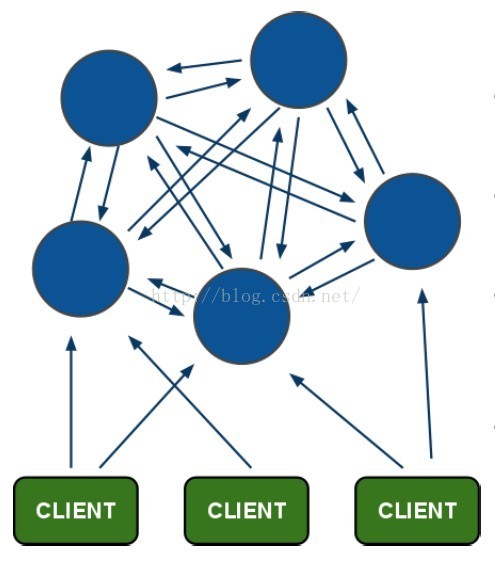

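There are several ways to build a Redis cluster, for example with ZooKeeper, but since version 3.0 Redis supports Redis Cluster natively. Redis Cluster uses a decentralized architecture: every node stores data together with the state of the whole cluster, and every node is connected to all the other nodes. The figure above shows the Redis Cluster architecture.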

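Structural characteristics:

1. All Redis nodes are interconnected (PING-PONG mechanism) and use a binary protocol internally to optimize transfer speed and bandwidth.

2. A node is marked as failed only when more than half of the master nodes in the cluster detect that it is unreachable.

3. Clients connect directly to Redis nodes; no intermediate proxy layer is needed. A client does not have to connect to every node in the cluster: connecting to any one available node is enough.

4. Redis Cluster maps all physical nodes onto the slots [0-16383] (not necessarily evenly), and the cluster maintains the node<->slot<->value mapping.

5. The cluster pre-allocates 16384 buckets (hash slots). When a key-value pair is stored, the value of CRC16(key) mod 16384 decides which bucket the key goes into.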
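The slot computation in point 5 can be sketched in a few lines of Python. This is a minimal illustration, not the Redis source: it assumes the CRC16 variant is CRC16-CCITT (XMODEM, polynomial 0x1021, initial value 0), and it deliberately ignores hash tags (the `{...}` syntax), which this article does not cover. The function names are made up for the example.

```python
def crc16_xmodem(data: bytes) -> int:
    """CRC16-CCITT (XMODEM): polynomial 0x1021, initial value 0, MSB first."""
    crc = 0
    for byte in data:
        crc ^= byte << 8
        for _ in range(8):
            if crc & 0x8000:
                crc = ((crc << 1) ^ 0x1021) & 0xFFFF
            else:
                crc = (crc << 1) & 0xFFFF
    return crc


def key_slot(key: str) -> int:
    """Map a key to one of the 16384 hash slots (hash tags are not handled)."""
    return crc16_xmodem(key.encode("utf-8")) % 16384


# "aa" is the key used in the tests later in this article,
# where redis-cli reports it in slot 1180.
print(key_slot("aa"))
```

The key `aa` is a convenient check because the redis-cli sessions later in the article report the slot the real cluster assigned to it.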

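1. Redis Cluster node allocation

Suppose there are three master nodes, A, B, and C. They can be three ports on one machine or three different servers. If the 16384 slots are distributed by hash slot, the three nodes cover the following ranges:

Node A covers 0-5460;

Node B covers 5461-10922;

Node C covers 10923-16383.

Reading and writing data:

Suppose a value is stored under a key for which the hash slot algorithm gives CRC16(key) % 16384 = 6782. That key is then stored on node B. Likewise, when a client connected to any of the nodes (A, B, or C) asks for that key, the same computation is applied, and the request is redirected to node B to fetch the data.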
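As a rough sketch of the allocation above, the following Python splits the 16384 slots into one contiguous range per master and looks up the owner of a slot. The even split with rounding is an assumption that happens to reproduce the ranges quoted in the text; the function names are invented for this example.

```python
from bisect import bisect_right

TOTAL_SLOTS = 16384


def split_slots(names):
    """Divide slots 0..16383 into one contiguous range per master."""
    n = len(names)
    bounds = [round(i * TOTAL_SLOTS / n) for i in range(n + 1)]
    return {name: (bounds[i], bounds[i + 1] - 1) for i, name in enumerate(names)}


def owner_of(slot, ranges):
    """Return the master whose range contains the given slot."""
    items = sorted(ranges.items(), key=lambda kv: kv[1][0])
    starts = [first for _, (first, _) in items]
    return items[bisect_right(starts, slot) - 1][0]


ranges = split_slots(["A", "B", "C"])
print(ranges)
print(owner_of(6782, ranges))
```

With three masters this yields 0-5460, 5461-10922, and 10923-16383, and slot 6782 resolves to node B.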

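Adding a master node:

When a new node D is added, Redis Cluster takes a portion of slots from the front of each existing node's range and moves them to D. I will try this out in the practical sections below. The result looks roughly like this:

Node A covers 1365-5460

Node B covers 6827-10922

Node C covers 12288-16383

Node D covers 0-1364, 5461-6826, 10923-12287

Removing a node works in a similar way: its slots are moved to other nodes first, and once the move is complete the node can be deleted.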
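The range arithmetic behind this example can be checked with a short sketch. It assumes each existing master keeps an equal share (16384 // 4 = 4096 slots) and gives up the surplus at the front of its range. That rule matches the numbers in the text but is an illustration, not the tool's documented algorithm.

```python
def add_master(ranges):
    """Return (kept, moved): the ranges the old masters keep and the
    ranges handed to one new master.

    `ranges` maps master name -> (first_slot, last_slot).
    """
    total = sum(last - first + 1 for first, last in ranges.values())
    target = total // (len(ranges) + 1)      # slots each master ends up with
    kept, moved = {}, []
    for name, (first, last) in ranges.items():
        surplus = (last - first + 1) - target
        moved.append((first, first + surplus - 1))   # taken from the front
        kept[name] = (first + surplus, last)
    return kept, moved


before = {"A": (0, 5460), "B": (5461, 10922), "C": (10923, 16383)}
kept, moved_to_d = add_master(before)
print(kept)
print(moved_to_d)
```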

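2. Redis Cluster master-slave mode

To keep data highly available, Redis Cluster adds a master-slave mode: each master has one or more slaves. The master serves reads and writes, while the slaves pull data from the master as a backup. When a master goes down, one of its slaves is elected to take over as master, so the cluster keeps running.

In the example above the cluster has three masters, A, B, and C. If none of them has a slave and B goes down, the whole cluster becomes unavailable: even the slots on A and C can no longer be accessed.

So when building a cluster, always give every master at least one slave. For example, with masters A, B, C and slaves A1, B1, C1, the system keeps working correctly even if B goes down.

B1 takes over from B: the cluster elects B1 as the new master and continues to serve requests correctly. When B is restarted, it becomes a slave of B1.

Note, however, that if B and B1 go down at the same time, the cluster can no longer serve requests correctly.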
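The availability rule described here can be expressed as a small model. The sketch assumes the default behaviour in which the cluster stops serving as soon as any slot range has no live node (the `cluster-require-full-coverage yes` setting). It does not model the election itself, and the function name is hypothetical.

```python
def cluster_available(slaves_of, failed):
    """True if every master's slots are still served by some live node.

    `slaves_of` maps each master to the list of its slaves;
    `failed` is the set of nodes that are currently down.
    """
    for master, slaves in slaves_of.items():
        group = [master, *slaves]
        if all(node in failed for node in group):
            return False          # this slot range has no live node left
    return True


no_slaves = {"A": [], "B": [], "C": []}
with_slaves = {"A": ["A1"], "B": ["B1"], "C": ["C1"]}

print(cluster_available(no_slaves, {"B"}))          # B down, nothing to take over
print(cluster_available(with_slaves, {"B"}))        # B1 can take over
print(cluster_available(with_slaves, {"B", "B1"}))  # both copies of B's slots gone
```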

II. Building the Redis Cluster

III. Testing the Redis Cluster

1. Viewing cluster node information

[root@localhost src]# ./redis-cli -c -h 192.168.1.105 -p 7000
192.168.1.105:7000> cluster nodes
4a5dd44e1b57c99c573d03e556f1ca446a1cd907 192.168.1.105:7002 slave afe7e0ca4c438a3f68636e6fc2622d3f910c8c7c 0 1504151069867 4 connected
d33b8453a4f6fdecf597e6ef2f3908b065ce1d4c 192.168.1.160:7005 slave c50954482dada38aca9e9e72cb93f90df52e9ab2 0 1504151070368 6 connected
afe7e0ca4c438a3f68636e6fc2622d3f910c8c7c 192.168.1.160:7003 master - 0 1504151068364 4 connected 5461-10922
e611fea46dc1c7c9a1be2287cf1164a7e9422718 192.168.1.160:7004 slave 2a780de364a6b69e9cb5cb007929f19acf575c93 0 1504151068864 5 connected
2a780de364a6b69e9cb5cb007929f19acf575c93 192.168.1.105:7000 myself,master - 0 0 1 connected 0-5460
c50954482dada38aca9e9e72cb93f90df52e9ab2 192.168.1.105:7001 master - 0 1504151069867 2 connected 10923-16383

Masters: 7000, 7001, 7003. Slaves: 7002, 7004, 7005. 7000 is the master of 7004, 7001 is the master of 7005, and 7003 is the master of 7002.

Node 7000 covers 0-5460

Node 7001 covers 10923-16383

Node 7003 covers 5461-10922
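Reading the master/slave pairs out of `cluster nodes` by eye is error-prone, so here is a hedged sketch that derives them from the text. It assumes the Redis 3.2-style line layout seen above (ID, address, flags, master ID, then four more fields before the slot ranges); `parse_cluster_nodes` is a name made up for this example.

```python
SAMPLE = """\
4a5dd44e1b57c99c573d03e556f1ca446a1cd907 192.168.1.105:7002 slave afe7e0ca4c438a3f68636e6fc2622d3f910c8c7c 0 1504151069867 4 connected
d33b8453a4f6fdecf597e6ef2f3908b065ce1d4c 192.168.1.160:7005 slave c50954482dada38aca9e9e72cb93f90df52e9ab2 0 1504151070368 6 connected
afe7e0ca4c438a3f68636e6fc2622d3f910c8c7c 192.168.1.160:7003 master - 0 1504151068364 4 connected 5461-10922
e611fea46dc1c7c9a1be2287cf1164a7e9422718 192.168.1.160:7004 slave 2a780de364a6b69e9cb5cb007929f19acf575c93 0 1504151068864 5 connected
2a780de364a6b69e9cb5cb007929f19acf575c93 192.168.1.105:7000 myself,master - 0 0 1 connected 0-5460
c50954482dada38aca9e9e72cb93f90df52e9ab2 192.168.1.105:7001 master - 0 1504151069867 2 connected 10923-16383
"""


def parse_cluster_nodes(text):
    """Return {master_addr: {"slots": [...], "slaves": [...]}}."""
    addr_by_id, rows = {}, []
    for line in text.strip().splitlines():
        fields = line.split()
        node_id, addr = fields[0], fields[1]
        flags, master_id = fields[2].split(","), fields[3]
        addr_by_id[node_id] = addr
        rows.append((addr, flags, master_id, fields[8:]))  # slots start at field 9
    topology = {addr: {"slots": slots, "slaves": []}
                for addr, flags, _, slots in rows if "master" in flags}
    for addr, flags, master_id, _ in rows:
        if "slave" in flags:
            topology[addr_by_id[master_id]]["slaves"].append(addr)
    return topology


for master, info in parse_cluster_nodes(SAMPLE).items():
    print(master, info["slots"], info["slaves"])
```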

2. Testing reads and writes

To connect to the cluster, redis-cli needs the -c option: redis-cli -c -p <port> -h <ip-address> -a <redis-password>

[root@localhost src]# ./redis-cli -h 192.168.1.160 -p 7005 -c
192.168.1.160:7005> set aa 123
-> Redirected to slot [1180] located at 192.168.1.105:7000
OK
192.168.1.105:7000> get aa
"123"

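By the key distribution rule, aa hashes to slot 1180, which belongs to node 7000 (slots 0-5460). The output above shows the client being redirected automatically from 7005 to 7000.

We can also try reading aa through slave node 7002: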

[root@localhost src]# ./redis-cli -h 192.168.1.105 -p 7002 -c
192.168.1.105:7002> get aa
-> Redirected to slot [1180] located at 192.168.1.105:7000
"123"

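Again the request is redirected to 7000 to fetch the value. This is characteristic of Redis Cluster: it is decentralized and every node is a peer, so you can read and write data through whichever node you connect to.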

IV. Cluster Node Election

Now simulate a failure of master node 7000. According to the Redis Cluster election mechanism, its slave 7004 should be elected as the new master.

[root@localhost shell]# ps -ef |grep redis
root 19793 1 0 08:12 ? 00:00:14 ./redis-server 192.168.1.105:7001 [cluster]
root 19794 1 0 08:12 ? 00:00:14 ./redis-server 192.168.1.105:7002 [cluster]
root 19795 1 0 08:12 ? 00:00:14 ./redis-server 192.168.1.105:7000 [cluster]
root 22434 16193 0 11:33 pts/1 00:00:00 ./redis-cli -h 192.168.1.105 -p 7002 -c
root 22645 15815 0 11:56 pts/0 00:00:00 grep --color=auto redis
[root@localhost shell]# kill -9 19795

Check node 7000 in the cluster:

[root@localhost src]# ./redis-trib.rb check 192.168.1.105:7000
[ERR] Sorry, can't connect to node 192.168.1.105:7000

Node 7000 cannot be checked, so run the check through 7001 instead:

[root@localhost src]# ./redis-trib.rb check 192.168.1.105:7001
>>> Performing Cluster Check (using node 192.168.1.105:7001)
M: 6befec567ca7090eb3731e48fd5275a9853fb394 192.168.1.105:7001
   slots:10923-16383 (5461 slots) master
   1 additional replica(s)
S: 910e14d9655a1e6e7fc007e799006d3f0d1cebe5 192.168.1.105:7002
   slots: (0 slots) slave
   replicates 94f51658302cb5f1d178f14caaa79f27a9ac3703
S: 54ca4fbc71257fd1be5b58d0f545b95d65f8f6b8 192.168.1.160:7005
   slots: (0 slots) slave
   replicates 6befec567ca7090eb3731e48fd5275a9853fb394
M: cf02aa3d58d48215d9d61121eedd194dc5c50eeb 192.168.1.160:7004
   slots:0-5460 (5461 slots) master
   0 additional replica(s)
M: 94f51658302cb5f1d178f14caaa79f27a9ac3703 192.168.1.160:7003
   slots:5461-10922 (5462 slots) master
   1 additional replica(s)
[OK] All nodes agree about slots configuration.
>>> Check for open slots...
>>> Check slots coverage...
[OK] All 16384 slots covered.

The cluster can no longer reach node 7000, and 7004 has changed from a slave (S) to a master (M), taking over from the original master 7000.

[root@localhost src]# ./redis-cli -p 7005 -c -h 192.168.1.160
192.168.1.160:7005> cluster nodes
28a51a8e34920e2d48fc1650a9c9753ff73dad5d 192.168.1.105:7000 master,fail - 1504065401123 1504065399320 1 disconnected
54ca4fbc71257fd1be5b58d0f545b95d65f8f6b8 192.168.1.160:7005 myself,slave 6befec567ca7090eb3731e48fd5275a9853fb394 0 0 6 connected
cf02aa3d58d48215d9d61121eedd194dc5c50eeb 192.168.1.160:7004 master - 0 1504065527701 8 connected 0-5460
94f51658302cb5f1d178f14caaa79f27a9ac3703 192.168.1.160:7003 master - 0 1504065528203 4 connected 5461-10922
6befec567ca7090eb3731e48fd5275a9853fb394 192.168.1.105:7001 master - 0 1504065527702 2 connected 10923-16383
910e14d9655a1e6e7fc007e799006d3f0d1cebe5 192.168.1.105:7002 slave 94f51658302cb5f1d178f14caaa79f27a9ac3703 0 1504065528706 4 connected

7004 has changed from a slave to a master and replaced node 7000, which is now flagged as master,fail.

Now bring node 7000 back and see whether it rejoins the cluster automatically, and whether it comes back as a master or as a slave.

[root@localhost src]# ./redis-server ../../redis_cluster/7000/redis.conf &

Check node 7000 again:

[root@localhost src]# ./redis-trib.rb check 192.168.1.105:7000
>>> Performing Cluster Check (using node 192.168.1.105:7000)
S: 28a51a8e34920e2d48fc1650a9c9753ff73dad5d 192.168.1.105:7000
   slots: (0 slots) slave
   replicates cf02aa3d58d48215d9d61121eedd194dc5c50eeb
S: 910e14d9655a1e6e7fc007e799006d3f0d1cebe5 192.168.1.105:7002
   slots: (0 slots) slave
   replicates 94f51658302cb5f1d178f14caaa79f27a9ac3703
M: 94f51658302cb5f1d178f14caaa79f27a9ac3703 192.168.1.160:7003
   slots:5461-10922 (5462 slots) master
   1 additional replica(s)
S: 54ca4fbc71257fd1be5b58d0f545b95d65f8f6b8 192.168.1.160:7005
   slots: (0 slots) slave
   replicates 6befec567ca7090eb3731e48fd5275a9853fb394
M: cf02aa3d58d48215d9d61121eedd194dc5c50eeb 192.168.1.160:7004
   slots:0-5460 (5461 slots) master
   1 additional replica(s)
M: 6befec567ca7090eb3731e48fd5275a9853fb394 192.168.1.105:7001
   slots:10923-16383 (5461 slots) master
   1 additional replica(s)
[OK] All nodes agree about slots configuration.
>>> Check for open slots...
>>> Check slots coverage...
[OK] All 16384 slots covered.

Node 7000 has become a slave of 7004 (cf02aa3d58d48215d9d61121eedd194dc5c50eeb).
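The role changes observed in this test (slave promoted, old master returning as a slave) can be summarised in a toy state model. It is a deliberate simplification: the real election involves votes from the other masters and a ranking of candidate slaves, whereas this sketch just promotes the first slave by name. `fail_master` is a hypothetical helper.

```python
def fail_master(roles, failed):
    """Return a new role map after `failed` goes down and a slave takes over.

    `roles` maps node -> the master it replicates, or None if it is a master.
    """
    roles = dict(roles)
    slaves = sorted(node for node, master in roles.items() if master == failed)
    if not slaves:
        raise RuntimeError(f"no slave available to replace {failed}")
    promoted = slaves[0]            # simplification: no real election here
    roles[promoted] = None
    for node in slaves[1:]:
        roles[node] = promoted      # remaining slaves follow the new master
    roles[failed] = promoted        # on restart the old master rejoins as a slave
    return roles


before = {"7000": None, "7001": None, "7003": None,
          "7004": "7000", "7005": "7001", "7002": "7003"}
after = fail_master(before, "7000")
print(after["7004"], after["7000"])
```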

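V. Adding Cluster Nodes

A new node can be added either as a master or as a slave. Both cases are tested below.

1. Adding a master node

Create a configuration file for a new node, 7006, which will join as a master.

Start the Redis service on 7006: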

[root@localhost src]# ./redis-server ../../redis_cluster/7006/redis.conf &
[1] 23839
[root@localhost src]# ps -ef|grep redis
root 18678 1 0 08:12 ? 00:00:37 ./redis-server 192.168.1.160:7004 [cluster]
root 18679 1 0 08:12 ? 00:00:37 ./redis-server 192.168.1.160:7005 [cluster]
root 18680 1 0 08:12 ? 00:00:38 ./redis-server 192.168.1.160:7003 [cluster]
root 22185 15199 0 11:57 pts/1 00:00:00 ./redis-cli -p 7005 -c -h 192.168.1.160
root 23840 1 0 14:09 ? 00:00:00 ./redis-server 192.168.1.160:7006 [cluster]
root 23844 14745 0 14:09 pts/0 00:00:00 grep --color=auto redis
[1]+ Done ./redis-server ../../redis_cluster/7006/redis.conf

As shown above, 7006 is now running. Add it to the cluster with the add-node command of redis-trib.rb:

./redis-trib.rb add-node 192.168.1.160:7006 192.168.1.160:7003

add-node adds a node to the cluster. 192.168.1.160:7006 is the node to add, and 192.168.1.160:7003 is an existing node of the target cluster, used only to identify which cluster to join; in principle any node of that cluster will do.

Run add-node:

[root@localhost src]# ./redis-trib.rb add-node 192.168.1.160:7006 192.168.1.160:7003
>>> Adding node 192.168.1.160:7006 to cluster 192.168.1.160:7003
>>> Performing Cluster Check (using node 192.168.1.160:7003)
M: 94f51658302cb5f1d178f14caaa79f27a9ac3703 192.168.1.160:7003
   slots:5461-10922 (5462 slots) master
   1 additional replica(s)
S: 910e14d9655a1e6e7fc007e799006d3f0d1cebe5 192.168.1.105:7002
   slots: (0 slots) slave
   replicates 94f51658302cb5f1d178f14caaa79f27a9ac3703
M: 6befec567ca7090eb3731e48fd5275a9853fb394 192.168.1.105:7001
   slots:10923-16383 (5461 slots) master
   1 additional replica(s)
S: 28a51a8e34920e2d48fc1650a9c9753ff73dad5d 192.168.1.105:7000
   slots: (0 slots) slave
   replicates cf02aa3d58d48215d9d61121eedd194dc5c50eeb
S: 54ca4fbc71257fd1be5b58d0f545b95d65f8f6b8 192.168.1.160:7005
   slots: (0 slots) slave
   replicates 6befec567ca7090eb3731e48fd5275a9853fb394
M: cf02aa3d58d48215d9d61121eedd194dc5c50eeb 192.168.1.160:7004
   slots:0-5460 (5461 slots) master
   1 additional replica(s)
[OK] All nodes agree about slots configuration.
>>> Check for open slots...
>>> Check slots coverage...
[OK] All 16384 slots covered.
>>> Send CLUSTER MEET to node 192.168.1.160:7006 to make it join the cluster.
[OK] New node added correctly.
[1]+ Done ./redis-server ../../redis_cluster/7006/redis.conf

7006 has joined the cluster as a new node.

Check the status of node 7006:

[root@localhost src]# ./redis-trib.rb check 192.168.1.160:7006
>>> Performing Cluster Check (using node 192.168.1.160:7006)
M: 2335477842e1b02e143fabaf0d77d12e1e8e6f56 192.168.1.160:7006
   slots: (0 slots) master
   0 additional replica(s)
M: 6befec567ca7090eb3731e48fd5275a9853fb394 192.168.1.105:7001
   slots:10923-16383 (5461 slots) master
   1 additional replica(s)
S: 28a51a8e34920e2d48fc1650a9c9753ff73dad5d 192.168.1.105:7000
   slots: (0 slots) slave
   replicates cf02aa3d58d48215d9d61121eedd194dc5c50eeb
M: 94f51658302cb5f1d178f14caaa79f27a9ac3703 192.168.1.160:7003
   slots:5461-10922 (5462 slots) master
   1 additional replica(s)
S: 54ca4fbc71257fd1be5b58d0f545b95d65f8f6b8 192.168.1.160:7005
   slots: (0 slots) slave
   replicates 6befec567ca7090eb3731e48fd5275a9853fb394
S: 910e14d9655a1e6e7fc007e799006d3f0d1cebe5 192.168.1.105:7002
   slots: (0 slots) slave
   replicates 94f51658302cb5f1d178f14caaa79f27a9ac3703
M: cf02aa3d58d48215d9d61121eedd194dc5c50eeb 192.168.1.160:7004
   slots:0-5460 (5461 slots) master
   1 additional replica(s)
[OK] All nodes agree about slots configuration.
>>> Check for open slots...
>>> Check slots coverage...
[OK] All 16384 slots covered.

M: 2335477842e1b02e143fabaf0d77d12e1e8e6f56 192.168.1.160:7006

slots: (0 slots) master

0 additional replica(s)

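The output shows four M nodes and three S nodes. 7006 has become a master, but it has no slaves attached, and the cluster has not assigned it any hash slots (0 slots).

Redis Cluster does not assign slots when a node is added. We have to reshard the cluster manually to migrate data, using the reshard command: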

./redis-trib.rb reshard 192.168.1.160:7005

This command migrates slots between nodes. The trailing 192.168.1.160:7005 only identifies the cluster, so any port in [7000-7007] works. The output looks like the following (this capture comes from a separate run, so its node IDs and roles differ from the cluster shown above):

[root@localhost redis-cluster]# ./redis-trib.rb reshard 192.168.1.160:7005
>>> Performing Cluster Check (using node 192.168.1.160:7005)
M: a5db243087d8bd423b9285fa8513eddee9bb59a6 192.168.1.160:7005
   slots:5461-10922 (5462 slots) master
   1 additional replica(s)
S: 50ce1ea59106b4c2c6bc502593a6a7a7dabf5041 192.168.1.160:7004
   slots: (0 slots) slave
   replicates dd19221c404fb2fc4da37229de56bab755c76f2b
M: f9886c71e98a53270f7fda961e1c5f730382d48f 192.168.1.160:7003
   slots:10923-16383 (5461 slots) master
   1 additional replica(s)
S: 1f07d76585bfab35f91ec711ac53ab4bc00f2d3a 192.168.1.160:7002
   slots: (0 slots) slave
   replicates a5db243087d8bd423b9285fa8513eddee9bb59a6
M: ee3efb90e5ac0725f15238a64fc60a18a71205d7 192.168.1.160:7007
   slots: (0 slots) master
   0 additional replica(s)
M: dd19221c404fb2fc4da37229de56bab755c76f2b 192.168.1.160:7001
   slots:0-5460 (5461 slots) master
   1 additional replica(s)
S: 8bb3ede48319b46d0015440a91ab277da9353c8b 192.168.1.160:7006
   slots: (0 slots) slave
   replicates f9886c71e98a53270f7fda961e1c5f730382d48f
[OK] All nodes agree about slots configuration.
>>> Check for open slots...
>>> Check slots coverage...
[OK] All 16384 slots covered.
How many slots do you want to move (from 1 to 16384)?

It asks how many slots to migrate to 7006. Splitting the 16384 hash slots evenly across 4 masters gives 16384/4 = 4096, so we need to move 4096 slots to 7006.

[OK] All 16384 slots covered.
How many slots do you want to move (from 1 to 16384)? 4096
What is the receiving node ID?

Enter the node ID of 7006: 2335477842e1b02e143fabaf0d77d12e1e8e6f56

Please enter all the source node IDs.
  Type 'all' to use all the nodes as source nodes for the hash slots.
  Type 'done' once you entered all the source nodes IDs.
Source node #1:

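redis-trib now asks for the source nodes of the reshard: whether the 4096 hash slots should be taken from specific nodes or from all nodes before being moved to 7006.

If you do not want to take a fixed number of slots from specific nodes, enter all. Every master in the cluster then becomes a source node, and redis-trib takes a portion of slots from each of them until it has 4096, then moves them to 7006: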
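How many slots each source contributes when all is chosen can be estimated with a proportional split. The sketch below is an illustration under assumptions: shares are proportional to the slots each source holds, rounded up for the largest source and down for the others so that they add up in this example. The actual rounding used by redis-trib may differ, and `reshard_plan` is a made-up name.

```python
def reshard_plan(source_slots, num_slots):
    """Split `num_slots` across sources in proportion to the slots each holds.

    `source_slots` maps node -> number of slots it currently owns.
    """
    total = sum(source_slots.values())
    # Largest source first; it absorbs the rounding remainder.
    ordered = sorted(source_slots.items(), key=lambda kv: kv[1], reverse=True)
    plan = {}
    for index, (node, held) in enumerate(ordered):
        numerator = num_slots * held
        if index == 0:
            plan[node] = -(-numerator // total)   # ceiling division
        else:
            plan[node] = numerator // total       # floor division
    return plan


# Slot counts of the three masters before 7006 receives anything.
sources = {"7004": 5461, "7003": 5462, "7001": 5461}
plan = reshard_plan(sources, 4096)
print(plan, sum(plan.values()))
```

For the three masters in this cluster the shares come out as 1366, 1365, and 1365, which matches the slot counts in the design example in section I.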

Source node #1:all

redis-trib then lists the slots it plans to move from the other masters and asks for confirmation.

Moving slot 1343 from dd19221c404fb2fc4da37229de56bab755c76f2b
Moving slot 1344 from dd19221c404fb2fc4da37229de56bab755c76f2b
Moving slot 1345 from dd19221c404fb2fc4da37229de56bab755c76f2b
Moving slot 1346 from dd19221c404fb2fc4da37229de56bab755c76f2b
Moving slot 1347 from dd19221c404fb2fc4da37229de56bab755c76f2b
Moving slot 1348 from dd19221c404fb2fc4da37229de56bab755c76f2b
Moving slot 1349 from dd19221c404fb2fc4da37229de56bab755c76f2b
Moving slot 1350 from dd19221c404fb2fc4da37229de56bab755c76f2b
Moving slot 1351 from dd19221c404fb2fc4da37229de

After confirmation, redis-trib performs the reshard, moving the hash slots one by one from the source masters to the target master 7006.

When resharding has finished, check how the slots are now distributed.

[root@localhost src]# ./redis-trib.rb check 192.168.1.160:7006
>>> Performing Cluster Check (using node 192.168.1.160:7006)
M: 2335477842e1b02e143fabaf0d77d12e1e8e6f56 192.168.1.160:7006
   slots:0-1179,5461-6826 (2546 slots) master
   0 additional replica(s)
M: 6befec567ca7090eb3731e48fd5275a9853fb394 192.168.1.105:7001
   slots:10923-16383 (5461 slots) master
   1 additional replica(s)
S: 28a51a8e34920e2d48fc1650a9c9753ff73dad5d 192.168.1.105:7000
   slots: (0 slots) slave
   replicates cf02aa3d58d48215d9d61121eedd194dc5c50eeb
M: 94f51658302cb5f1d178f14caaa79f27a9ac3703 192.168.1.160:7003
   slots:6827-10922 (4096 slots) master
   1 additional replica(s)
S: 54ca4fbc71257fd1be5b58d0f545b95d65f8f6b8 192.168.1.160:7005
   slots: (0 slots) slave
   replicates 6befec567ca7090eb3731e48fd5275a9853fb394
S: 910e14d9655a1e6e7fc007e799006d3f0d1cebe5 192.168.1.105:7002
   slots: (0 slots) slave
   replicates 94f51658302cb5f1d178f14caaa79f27a9ac3703
M: cf02aa3d58d48215d9d61121eedd194dc5c50eeb 192.168.1.160:7004
   slots:1180-5460 (4281 slots) master
   1 additional replica(s)
[OK] All nodes agree about slots configuration.

slots:0-1179,5461-6826 (2546 slots) master

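The hash slots assigned to node 7006 are not contiguous: they were taken from separate ranges. Note also that in this run 7006 ended up with 2546 slots rather than the 4096 requested.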
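The slot counts printed by redis-trib are easy to cross-check. The small helper below, a sketch with a made-up name, counts the slots in a range list such as `0-1179,5461-6826` and confirms that the four masters in the check output together cover all 16384 slots.

```python
def count_slots(spec):
    """Count the slots in a spec such as '0-1179,5461-6826'."""
    total = 0
    for part in spec.split(","):
        first, _, last = part.strip().partition("-")
        total += int(last or first) - int(first) + 1   # a bare number is one slot
    return total


masters = {
    "7006": "0-1179,5461-6826",
    "7004": "1180-5460",
    "7003": "6827-10922",
    "7001": "10923-16383",
}
for port, spec in masters.items():
    print(port, count_slots(spec))
print(sum(count_slots(spec) for spec in masters.values()))
```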

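[root@localhost src]# ./redis-cli -p 7006 -c -h 192.168.1.160
192.168.1.160:7006> get aa
-> Redirected to slot [1180] located at 192.168.1.160:7004
"aa"
192.168.1.160:7004>

The request for aa (slot 1180) sent to 7006 is redirected to 7004, which owns that slot. Node 7006 is taking part in the cluster, so the master was added successfully.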

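2. Adding a slave node

Add a new node, 7007, using the add-node --slave command.

The redis-trib command for adding a slave is:

./redis-trib.rb add-node --slave --master-id $[nodeid] 192.168.1.160:7007 192.168.1.160:7005

nodeid is the node ID of the master that the new slave should attach to, 192.168.1.160:7007 is the new slave, and 192.168.1.160:7005 is any existing node of the cluster, used to identify which cluster to join. If --master-id is not given, redis-trib attaches the new slave to a randomly chosen master among those with the fewest slaves.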
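The default placement described above (a random master among those with the fewest slaves) can be sketched as follows. This mirrors the description in the text only; it is not taken from the redis-trib source, and `pick_master` is a hypothetical name.

```python
import random


def pick_master(slave_counts, rng=random):
    """Choose a master for a new slave among those with the fewest slaves.

    `slave_counts` maps master -> number of slaves it already has.
    """
    fewest = min(slave_counts.values())
    candidates = sorted(m for m, count in slave_counts.items() if count == fewest)
    return rng.choice(candidates)


# State of the cluster at this point: only 7006 has no slave yet.
counts = {"7001": 1, "7003": 1, "7004": 1, "7006": 0}
print(pick_master(counts))
```

Passing --master-id, as the next step does, removes the randomness and pins the slave to a chosen master.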

Now add a slave to a specific master: attach 7007 as a slave of 7006.

[root@localhost src]# ./redis-trib.rb add-node --slave --master-id 2335477842e1b02e143fabaf0d77d12e1e8e6f56 192.168.1.160:7007 192.168.1.160:7005
>>> Adding node 192.168.1.160:7007 to cluster 192.168.1.160:7005
>>> Performing Cluster Check (using node 192.168.1.160:7005)
S: 54ca4fbc71257fd1be5b58d0f545b95d65f8f6b8 192.168.1.160:7005
   slots: (0 slots) slave
   replicates 6befec567ca7090eb3731e48fd5275a9853fb394
S: 28a51a8e34920e2d48fc1650a9c9753ff73dad5d 192.168.1.105:7000
   slots: (0 slots) slave
   replicates cf02aa3d58d48215d9d61121eedd194dc5c50eeb
M: 2335477842e1b02e143fabaf0d77d12e1e8e6f56 192.168.1.160:7006
   slots:0-1179,5461-6826 (2546 slots) master
   0 additional replica(s)
M: cf02aa3d58d48215d9d61121eedd194dc5c50eeb 192.168.1.160:7004
   slots:1180-5460 (4281 slots) master
   1 additional replica(s)
M: 94f51658302cb5f1d178f14caaa79f27a9ac3703 192.168.1.160:7003
   slots:6827-10922 (4096 slots) master
   1 additional replica(s)
M: 6befec567ca7090eb3731e48fd5275a9853fb394 192.168.1.105:7001
   slots:10923-16383 (5461 slots) master
   1 additional replica(s)
S: 910e14d9655a1e6e7fc007e799006d3f0d1cebe5 192.168.1.105:7002
   slots: (0 slots) slave
   replicates 94f51658302cb5f1d178f14caaa79f27a9ac3703
[OK] All nodes agree about slots configuration.
>>> Check for open slots...
[WARNING] Node 192.168.1.160:7006 has slots in importing state (1180).
[WARNING] Node 192.168.1.160:7004 has slots in migrating state (1180).
[WARNING] The following slots are open: 1180
>>> Check slots coverage...
[OK] All 16384 slots covered.
>>> Send CLUSTER MEET to node 192.168.1.160:7007 to make it join the cluster.
Waiting for the cluster to join...
>>> Configure node as replica of 192.168.1.160:7006.
[OK] New node added correctly.

The slave node has now been added successfully.

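VI. Removing Nodes

As with adding nodes, removal covers two cases: removing a master and removing a slave.

1. Removing a master node

Nodes are removed with the del-node command of redis-trib: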

./redis-trib.rb del-node 192.168.1.160:7005 ${node-id}

192.168.1.160:7005 is any node of the cluster, and node-id is the ID of the master to delete. Unlike adding a node, removing one always requires the node ID. Try deleting master 7006:

[root@localhost src]# ./redis-trib.rb del-node 192.168.1.160:7005 2335477842e1b02e143fabaf0d77d12e1e8e6f56
>>> Removing node 2335477842e1b02e143fabaf0d77d12e1e8e6f56 from cluster 192.168.1.160:7005
[ERR] Node 192.168.1.160:7006 is not empty! Reshard data away and try again.

Redis Cluster reports that 7006 still holds data and cannot be deleted. Its data has to be moved away first, which means resharding, just as when the master was added.

[root@localhost redis-cluster]# ./redis-trib.rb reshard 192.168.1.160:7006

The command asks how many slots to move. 7006 holds 2546 slots, so enter 2546:

>>> Check for open slots...
>>> Check slots coverage...
[OK] All 16384 slots covered.
How many slots do you want to move (from 1 to 16384)? 2546

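redis-trib then asks for the receiving node ID. Enter the node ID of 7004, which will take over the slots of 7006. (The captures below come from a different run, so the slot count and node IDs shown do not match this walkthrough; the comments mark the role each ID plays.)

How many slots do you want to move (from 1 to 16384)? 4096
What is the receiving node ID? 2335477842e1b02e143fabaf0d77d12e1e8e6f56 # receiving node ID

Next, specify the source nodes. Here the only source is the node being removed, followed by done:

Please enter all the source node IDs.
  Type 'all' to use all the nodes as source nodes for the hash slots.
  Type 'done' once you entered all the source nodes IDs.
Source node #1: c1fafa48cb761eeddcc56e8c821eae5fef6a28bc # ID of the node being removed
Source node #2: done
Do you want to proceed with the proposed reshard plan (yes/no)? yes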

After confirming, check whether the slots have moved off the node being removed. (In the run captured below, the emptied node is 7007 and the receiver is 7000.)

[root@localhost src]# ./redis-trib.rb check 192.168.1.160:7007
>>> Performing Cluster Check (using node 192.168.1.160:7007)
M: c1fafa48cb761eeddcc56e8c821eae5fef6a28bc 192.168.1.160:7007
   slots: (0 slots) master
   0 additional replica(s)
S: 94f51658302cb5f1d178f14caaa79f27a9ac3703 192.168.1.160:7003
   slots: (0 slots) slave
   replicates 910e14d9655a1e6e7fc007e799006d3f0d1cebe5
S: 54ca4fbc71257fd1be5b58d0f545b95d65f8f6b8 192.168.1.160:7005
   slots: (0 slots) slave
   replicates 6befec567ca7090eb3731e48fd5275a9853fb394
M: 28a51a8e34920e2d48fc1650a9c9753ff73dad5d 192.168.1.105:7000
   slots:0-8619,10923-11927 (9625 slots) master
   1 additional replica(s)
S: cf02aa3d58d48215d9d61121eedd194dc5c50eeb 192.168.1.160:7004
   slots: (0 slots) slave
   replicates 28a51a8e34920e2d48fc1650a9c9753ff73dad5d
M: 910e14d9655a1e6e7fc007e799006d3f0d1cebe5 192.168.1.105:7002
   slots:8620-10922 (2303 slots) master
   1 additional replica(s)
M: 6befec567ca7090eb3731e48fd5275a9853fb394 192.168.1.105:7001
   slots:11928-16383 (4456 slots) master
   1 additional replica(s)
[OK] All nodes agree about slots configuration.
>>> Check for open slots...
>>> Check slots coverage...
[OK] All 16384 slots covered.

7000 now holds 9625 slots and 7007 holds 0.

Once the node to be removed holds no slots, del-node can delete it:

./redis-trib.rb del-node 192.168.1.160:7006 2335477842e1b02e143fabaf0d77d12e1e8e6f56
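The order of operations for removing a master (refused while it holds slots, allowed once it is empty) can be captured in a toy model. `ClusterModel` is invented for this sketch and tracks slot counts only; the error text imitates the redis-trib message shown earlier, and the receiver is 7004 as in the walkthrough.

```python
class ClusterModel:
    """Tracks how many slots each master holds; nothing else."""

    def __init__(self, slots):
        self.slots = dict(slots)

    def reshard(self, source, target, count):
        """Move `count` slots from `source` to `target`."""
        if count > self.slots[source]:
            raise ValueError(f"{source} only holds {self.slots[source]} slots")
        self.slots[source] -= count
        self.slots[target] += count

    def del_node(self, node):
        """Remove a node, refusing while it still owns slots."""
        if self.slots[node] > 0:
            raise RuntimeError(f"Node {node} is not empty! Reshard data away and try again.")
        del self.slots[node]


cluster = ClusterModel({"7001": 5461, "7003": 4096, "7004": 4281, "7006": 2546})
try:
    cluster.del_node("7006")
except RuntimeError as err:
    print(err)

cluster.reshard("7006", "7004", 2546)   # empty 7006 into 7004
cluster.del_node("7006")                # now succeeds
print(cluster.slots)
```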

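2. Removing a slave node

For example, to delete slave 7007, pass its node ID to del-node. A slave holds no slots, so no resharding is needed first. (The node ID and cluster address in the capture below are reproduced as recorded; use the ID that cluster nodes reports for the slave you are removing.)

[root@localhost src]# ./redis-trib.rb del-node 192.168.1.160:7005 2335477842e1b02e143fabaf0d77d12e1e8e6f56
>>> Removing node 2335477842e1b02e143fabaf0d77d12e1e8e6f56 from cluster 192.168.1.160:7006
>>> Sending CLUSTER FORGET messages to the cluster...
>>> SHUTDOWN the node.

OK, that is the end of the tests.

Resolving the "Node has slots in importing state" warning: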

[WARNING] Node 192.168.1.160:7006 has slots in importing state (1180).

[WARNING] Node 192.168.1.160:7004 has slots in migrating state (1180).

[WARNING] The following slots are open: 1180

Fix: connect to ports 7004 and 7006 with redis-cli and run cluster setslot 1180 stable on each, as shown below:

[root@localhost src]# ./redis-cli -c -h 192.168.1.160 -p 7004
192.168.1.160:7004> cluster setslot 1180 stable
OK
[root@localhost src]# ./redis-cli -c -h 192.168.1.160 -p 7006
192.168.1.160:7006> cluster setslot 1180 stable
OK
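When several slots are left open, working out which command to run on which node can be automated from the warning text. The sketch below parses the `[WARNING]` lines shown above and emits one `cluster setslot <slot> stable` command per node and slot. Treating a comma-separated list inside the parentheses as multiple slots is an assumption, and `stabilize_commands` is a made-up helper.

```python
import re

OPEN_SLOT = re.compile(
    r"Node (\S+) has slots in (?:importing|migrating) state \(([\d,\s]+)\)"
)


def stabilize_commands(check_output):
    """Return (node_address, command) pairs for every open slot reported."""
    commands = []
    for addr, slots in OPEN_SLOT.findall(check_output):
        for slot in slots.split(","):
            commands.append((addr, f"cluster setslot {slot.strip()} stable"))
    return commands


WARNINGS = """\
[WARNING] Node 192.168.1.160:7006 has slots in importing state (1180).
[WARNING] Node 192.168.1.160:7004 has slots in migrating state (1180).
[WARNING] The following slots are open: 1180
"""

for addr, command in stabilize_commands(WARNINGS):
    print(addr, "->", command)
```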
