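K8s Resource Reclamation Mechanism

Original: https://kubernetes.io/docs/tasks/administer-cluster/out-of-resource/

I Symptoms

The server's disk filled up (over 90% used). This triggered some mechanism that left a large number of pods in the Evicted state and deleted most of the images, and every service became unavailable.

II Investigation

We found that once the server's storage reached a certain level (say, above 90%), the node deleted some of its own resources, most visibly a large number of images. As a result, some pods failed to pull their images, including Kubernetes' own system images. The server does not clean up its root directory automatically unless someone writes a cleanup script, and after checking we found no such script, so it was very likely Kubernetes itself that deleted them. I found this mechanism described in an official document.

GC (garbage collection): in a Kubernetes cluster, kubelet's GC cleans up unused images and containers. kubelet runs container GC once a minute and image GC once every 5 minutes. This helps keep nodes stable when cluster resources run short.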

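Analysis:

GC is a garbage collection mechanism: it reclaims resources to keep the k8s cluster healthy and available. Which resources? Essentially three: CPU, memory and disk.

So which objects in k8s consume these three resources? Pods that keep being created, and images that keep being downloaded.

Pods consume CPU, memory and disk (persisted data).

Images consume disk.

Question: kubelet can easily measure CPU and memory, but a physical node may have many disks. How does k8s tell which one it is supposed to use? It can't on its own. You have to tell kubelet explicitly in its configuration which partition it uses, and that partition is nodefs.

Likewise, you have to tell the Docker engine what its data directory is, and the partition holding it is imagefs. See Section V for details.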

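III Metrics

The kubelet on each node periodically collects resource usage data and compares it with preset thresholds. If a threshold is exceeded, kubelet tries to kill some pods to reclaim the resource and protect the node. The main signals kubelet watches are: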

memory.available

nodefs.available

nodefs.inodesFree

imagefs.available

imagefs.inodesFree

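Each signal can have an eviction-soft and an eviction-hard threshold.

- Soft: when a soft threshold is reached, pods are given time to exit gracefully.
- Hard: a hard threshold takes the "brute force" approach. It kills pods immediately, with no chance to exit gracefully.

The difference between nodefs and imagefs is also worth mentioning here: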

nodefs: the node's own storage, holding things such as daemon logs; usually the root partition /;

imagefs: the disk the Docker daemon uses to store images and container writable layers;
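The soft/hard difference can be modeled in a few lines. The Python sketch below is a simplification, not kubelet's code. It assumes, following kubelet's --eviction-soft-grace-period flag, that a soft threshold must stay met for its grace period before eviction begins, while a hard threshold acts immediately. The class and function names are hypothetical.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class SoftThreshold:
    """Hypothetical model of one soft eviction threshold and its grace period."""
    grace_period_s: float
    first_met_at: Optional[float] = None  # time the signal first crossed the threshold

    def should_evict(self, met: bool, now: float) -> bool:
        if not met:
            # The signal recovered, so the grace-period timer starts over.
            self.first_met_at = None
            return False
        if self.first_met_at is None:
            self.first_met_at = now
        # Evict only once the threshold has been met continuously for the grace period.
        return now - self.first_met_at >= self.grace_period_s


def hard_should_evict(met: bool) -> bool:
    """A hard threshold has no grace period: eviction starts as soon as it is met."""
    return met


if __name__ == "__main__":
    soft = SoftThreshold(grace_period_s=90)
    for t in (0, 60, 90):
        print(t, soft.should_evict(True, now=t), hard_should_evict(True))
```

With a 90-second grace period, the soft threshold fires only at t=90, while the hard one fires at t=0. For soft evictions, kubelet also caps how long an evicted pod may take to terminate with --eviction-max-pod-grace-period.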

In my case, imagefs and nodefs were on the same partition, /, and its usage was very high (96%). Here are the thresholds of some of these signals:

memory.available<100Mi

nodefs.available<10%

nodefs.inodesFree<5%

imagefs.available<15%

(As for why the other signals have no threshold listed here: I couldn't find them.)
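The thresholds above use the same signal<quantity form that kubelet's --eviction-hard flag takes. Below is a minimal Python sketch of how such a string can be parsed and compared against observed values. It rests on a few assumptions:

- A percentage is taken relative to the resource's capacity: total bytes, or total inodes for the inodesFree signals.
- Only a few binary quantity suffixes are handled.
- The function names are hypothetical.

```python
import re
from typing import Dict, Tuple

# Binary suffixes such as "100Mi"; kubelet accepts more formats, this sketch only these.
_UNITS = {"": 1, "Ki": 1024, "Mi": 1024 ** 2, "Gi": 1024 ** 3, "Ti": 1024 ** 4}


def parse_thresholds(spec: str) -> Dict[str, Tuple[str, float]]:
    """Parse e.g. 'memory.available<100Mi,nodefs.available<10%'.

    Returns {signal: ("percent", value)} or {signal: ("bytes", value)}.
    """
    result: Dict[str, Tuple[str, float]] = {}
    for item in spec.split(","):
        signal, value = item.strip().split("<", 1)
        if value.endswith("%"):
            result[signal] = ("percent", float(value[:-1]))
        else:
            m = re.fullmatch(r"(\d+(?:\.\d+)?)([KMGT]i)?", value)
            if m is None:
                raise ValueError(f"unsupported quantity: {value}")
            result[signal] = ("bytes", float(m.group(1)) * _UNITS[m.group(2) or ""])
    return result


def threshold_met(threshold: Tuple[str, float], available: float, capacity: float) -> bool:
    """True when the observed free amount has dropped below the threshold."""
    kind, value = threshold
    if kind == "percent":
        return available < capacity * value / 100
    return available < value


if __name__ == "__main__":
    t = parse_thresholds("memory.available<100Mi,nodefs.available<10%,"
                         "nodefs.inodesFree<5%,imagefs.available<15%")
    # A partition that is 96% full has 4% available: both disk thresholds are met.
    print(threshold_met(t["nodefs.available"], available=4, capacity=100))   # True
    print(threshold_met(t["imagefs.available"], available=4, capacity=100))  # True
```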

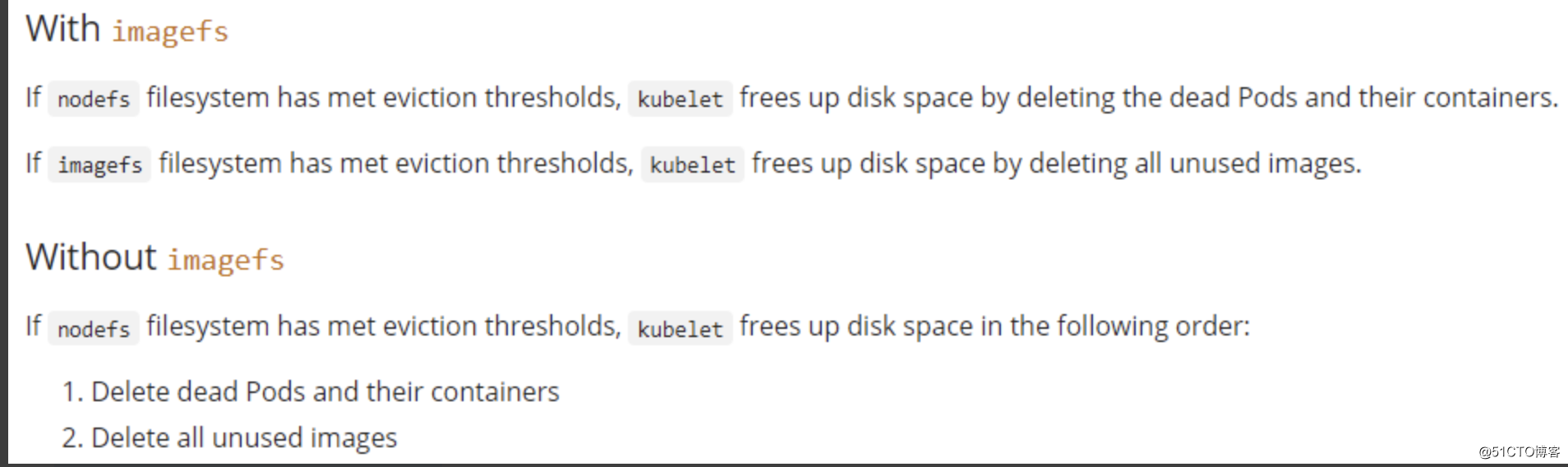

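The most important behavior here is how kubelet reclaims space once the nodefs or imagefs threshold is reached.

Put simply:

- nodefs over threshold: k8s evicts the pods on this node together with their containers. Eviction deletes the pods on this node and starts them on other nodes. Unless some protection mechanism is in place, this is not seamless and there is a period of service interruption.
- imagefs over threshold: k8s deletes unused images.

So here is the question: what happens if nodefs and imagefs are the same partition? k8s first evicts the pods on this node to other nodes. If space is still short, it deletes unused images until usage falls back below the configured threshold, even deleting k8s system images.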

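IV Solutions

1 Change the thresholds of these signals (not recommended)

2 Add monitoring and deal with the problem before the thresholds are reached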
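As a starting point for option 2, here is a minimal Python sketch that checks free space and free inodes on given directories. It assumes a Linux/Unix host (os.statvfs). The warning levels and the "root" name are illustrative choices, not values taken from kubelet. In practice this would feed an existing alerting system rather than print.

```python
import os
import shutil
from typing import Dict


def disk_report(path: str) -> Dict[str, float]:
    """Free space and free inodes (in %) of the filesystem holding `path` (Linux/Unix)."""
    usage = shutil.disk_usage(path)
    st = os.statvfs(path)
    # Some filesystems report 0 total inodes; treat that as "no inode pressure".
    inodes_free_pct = 100.0 * st.f_ffree / st.f_files if st.f_files else 100.0
    return {
        "available_pct": 100.0 * usage.free / usage.total,
        "inodes_free_pct": inodes_free_pct,
    }


def check(paths: Dict[str, str], warn_available_pct: float = 25.0,
          warn_inodes_pct: float = 10.0) -> Dict[str, bool]:
    """Map each name to True if its filesystem is below a warning level.

    The default warning levels are hypothetical; pick values above the kubelet
    eviction thresholds so there is time to clean up before pods get evicted.
    """
    alerts = {}
    for name, path in paths.items():
        r = disk_report(path)
        alerts[name] = (r["available_pct"] < warn_available_pct
                        or r["inodes_free_pct"] < warn_inodes_pct)
    return alerts


if __name__ == "__main__":
    # Replace "/" with the directories behind nodefs and imagefs on your node,
    # e.g. kubelet's --root-dir and Docker's data directory.
    print(check({"root": "/"}))
```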

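V imagefs and nodefs

The answer first:

1. nodefs is the partition containing the --root-dir directory; imagefs is the partition containing Docker's installation (data) directory.

2. It is recommended that nodefs and imagefs share one partition, but that partition should be made fairly large.

3. When nodefs and imagefs share one partition, the other kubelet parameters --root-dir, --cert-dir ...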

kubelet can manage disk usage, but only on two partitions: nodefs and imagefs.


- imagefs: the partition containing Docker's installation (data) directory

- nodefs: the partition containing the directory given by kubelet's --root-dir flag (default /var/lib/kubelet)
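To check which partition a directory actually lives on, and whether nodefs and imagefs share one, comparing device IDs is enough. Below is a minimal Python sketch. Its assumptions:

- The default paths are /var/lib/kubelet for --root-dir and /var/lib/docker for Docker's data directory. On the node examined below, Docker lives under /app/docker instead.
- It only prints results for paths that exist.
- Bind mounts may need extra care.

```python
import os


def mount_point(path: str) -> str:
    """Walk up from `path` until reaching the mount point that contains it."""
    path = os.path.realpath(path)
    while not os.path.ismount(path):
        path = os.path.dirname(path)
    return path


def same_filesystem(a: str, b: str) -> bool:
    """True if both paths are on the same device, i.e. share one partition."""
    return os.stat(a).st_dev == os.stat(b).st_dev


if __name__ == "__main__":
    nodefs_dir = "/var/lib/kubelet"  # kubelet --root-dir default
    imagefs_dir = "/var/lib/docker"  # Docker data directory default
    existing = [d for d in (nodefs_dir, imagefs_dir) if os.path.exists(d)]
    for d in existing:
        print(d, "->", mount_point(d))
    if len(existing) == 2:
        print("nodefs and imagefs share a partition:",
              same_filesystem(nodefs_dir, imagefs_dir))
```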

Next, let's verify this understanding of imagefs and nodefs.

Prerequisites

The k8s cluster runs version 1.8.6:

$ kubectl get node

NAME STATUS ROLES AGE VERSION

10.142.232.161 Ready <none> 263d v1.8.6

10.142.232.162 NotReady <none> 263d v1.8.6

10.142.232.163 Ready,SchedulingDisabled <none> 227d v1.8.6

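The node 10.142.232.161 is set up as follows:

- Docker is installed under /app/docker.
- kubelet's --root-dir is not set, so the default /var/lib/kubelet is used.
- /app is one disk, 70% used; / is another disk, 57% used.
- The imagefs and nodefs thresholds are both set to 80% usage, i.e. imagefs.available<20% and nodefs.available<20%, as shown below: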

$ df -hT

Filesystem Type Size Used Avail Use% Mounted on

devtmpfs devtmpfs 16G 0 16G 0% /dev

tmpfs tmpfs 16G 0 16G 0% /dev/shm

tmpfs tmpfs 16G 1.7G 15G 11% /run

tmpfs tmpfs 16G 0 16G 0% /sys/fs/cgroup

/dev/mapper/centos-root xfs 45G 26G 20G 57% /

/dev/xvda1 xfs 497M 254M 243M 52% /boot

/dev/xvde xfs 150G 105G 46G 70% /app

$ ps -ef | grep kubelet

root 125179 1 37 17:50 ? 00:00:01 /usr/bin/kubelet --address=0.0.0.0 --allow-privileged=true --cluster-dns=10.254.0.10 --cluster-domain=kube.local --fail-swap-on=false --hostname-override=10.142.232.161 --kubeconfig=/etc/kubernetes/kubeconfig --pod-infra-container-image=10.142.233.76:8021/library/pause:latest --port=10250 --enforce-node-allocatable=pods --eviction-hard=memory.available<20%,nodefs.inodesFree<20%,imagefs.inodesFree<20%,nodefs.available<20%,imagefs.available<20% --network-plugin=cni

P.S.: if the nodefs path has been changed, run ps aux | grep kubelet and look for the --root-dir=... argument, for example:

[root@yq01-aip-aikefu19 data]# ps aux |grep kubelet

root 10821 21.3 0.1 10311448 239632 ? Ssl Apr21 804:05 /usr/local/bin/kubelet --v=2 ... --root-dir=/data/lib/kubelet ...
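The same check can be scripted. Below is a minimal Python sketch that extracts --root-dir from a kubelet command line and falls back to the default /var/lib/kubelet. It assumes the flag is passed on the command line, as either --root-dir=PATH or --root-dir PATH. On Linux, the argument list of a running process can be read from /proc/PID/cmdline, which is what the hypothetical helper kubelet_argv does.

```python
from typing import List

DEFAULT_ROOT_DIR = "/var/lib/kubelet"  # kubelet's default --root-dir


def root_dir_from_args(argv: List[str]) -> str:
    """Return the value of --root-dir from a kubelet argv, or the default."""
    for i, arg in enumerate(argv):
        if arg.startswith("--root-dir="):
            return arg.split("=", 1)[1]
        if arg == "--root-dir" and i + 1 < len(argv):
            return argv[i + 1]
    return DEFAULT_ROOT_DIR


def kubelet_argv(pid: int) -> List[str]:
    """Read a process's argv from /proc (Linux only); arguments are NUL-separated."""
    with open(f"/proc/{pid}/cmdline", "rb") as f:
        return [a.decode() for a in f.read().split(b"\0") if a]


if __name__ == "__main__":
    sample = ["/usr/local/bin/kubelet", "--v=2", "--root-dir=/data/lib/kubelet"]
    print(root_dir_from_args(sample))  # /data/lib/kubelet
```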

At this point, node 10.142.232.161 reports no disk errors:

$ kubectl describe node 10.142.232.161

...

Events:

Type Reason Age From Message

Normal Starting 18s kubelet, 10.142.232.161 Starting kubelet.

Normal NodeAllocatableEnforced 18s kubelet, 10.142.232.161 Updated Node Allocatable limit across pods

Normal NodeHasSufficientDisk 18s kubelet, 10.142.232.161 Node 10.142.232.161 status is now: NodeHasSufficientDisk

Normal NodeHasSufficientMemory 18s kubelet, 10.142.232.161 Node 10.142.232.161 status is now: NodeHasSufficientMemory

Normal NodeHasNoDiskPressure 18s kubelet, 10.142.232.161 Node 10.142.232.161 status is now: NodeHasNoDiskPressure

Normal NodeNotReady 18s kubelet, 10.142.232.161 Node 10.142.232.161 status is now: NodeNotReady

Normal NodeReady 8s kubelet, 10.142.232.161 Node 10.142.232.161 status is now: NodeReady

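Verification plan

- Verify that imagefs is the partition containing /app/docker (/app is 70% used)

  - Set the imagefs threshold to 60%; the node should report imagefs over the threshold

  - Set the imagefs threshold to 80%; the node should be normal

- Verify that nodefs is the partition containing /var/lib/kubelet (/ is 57% used)

  - Set the nodefs threshold to 50%; the node should report nodefs over the threshold

  - Set the nodefs threshold to 60%; the node should be normal

- Set kubelet's --root-dir flag to /app/kubelet

  - imagefs threshold 80%, nodefs threshold 60%: nodefs should be reported over the threshold

  - imagefs threshold 60%, nodefs threshold 80%: imagefs should be reported over the threshold

  - imagefs threshold 60%, nodefs threshold 60%: both should be reported over the threshold

  - imagefs threshold 80%, nodefs threshold 80%: the node should be normal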
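In this plan, "threshold X%" means disk usage, while kubelet's flag is expressed as free space, so X% becomes available<(100-X)%. Below is a small Python sketch that converts the thresholds and predicts the expected result of each scenario. It assumes the df usage figures above (/ at 57%, /app at 70%) and that kubelet's own measurement is close to df's. The margins in these scenarios look wide enough that small differences should not matter. The function and variable names are hypothetical.

```python
from typing import Dict, Set


def usage_to_flag(signal: str, usage_threshold_pct: int) -> str:
    """A usage threshold of X% corresponds to <signal>.available<(100-X)%."""
    return f"{signal}.available<{100 - usage_threshold_pct}%"


def expected_pressure(usage: Dict[str, float], thresholds: Dict[str, int]) -> Set[str]:
    """Filesystems whose free space is below (100 - usage threshold)%."""
    return {fs for fs, used in usage.items()
            if 100 - used < 100 - thresholds[fs]}


# Partition usage from df: / is 57%, /app is 70%.
BEFORE = {"nodefs": 57, "imagefs": 70}  # --root-dir left at /var/lib/kubelet (on /)
AFTER = {"nodefs": 70, "imagefs": 70}   # --root-dir=/app/kubelet (on /app)

SCENARIOS = [
    ("1.1", BEFORE, {"nodefs": 80, "imagefs": 60}),
    ("1.2", BEFORE, {"nodefs": 80, "imagefs": 80}),
    ("2.1", BEFORE, {"nodefs": 50, "imagefs": 80}),
    ("2.2", BEFORE, {"nodefs": 60, "imagefs": 80}),
    ("3.1", AFTER, {"nodefs": 60, "imagefs": 80}),
    ("3.2", AFTER, {"nodefs": 80, "imagefs": 60}),
    ("3.3", AFTER, {"nodefs": 60, "imagefs": 60}),
    ("3.4", AFTER, {"nodefs": 80, "imagefs": 80}),
]

if __name__ == "__main__":
    for name, usage, th in SCENARIOS:
        flags = ",".join(usage_to_flag(fs, th[fs]) for fs in ("nodefs", "imagefs"))
        print(name, flags, sorted(expected_pressure(usage, th)) or "normal")
```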

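Verification steps

I. Verify that imagefs is the partition containing /app/docker

1.1 Set the imagefs threshold to 60%; the node should report imagefs over the threshold

As shown below, we set the imagefs threshold to 60% (imagefs.available<40%):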

$ ps -ef | grep kubelet

root 41234 1 72 18:17 ? 00:00:02 /usr/bin/kubelet --address=0.0.0.0 --allow-privileged=true --cluster-dns=10.254.0.10 --cluster-domain=kube.local --fail-swap-on=false --hostname-override=10.142.232.161 --kubeconfig=/etc/kubernetes/kubeconfig --pod-infra-container-image=10.142.233.76:8021/library/pause:latest --port=10250 --enforce-node-allocatable=pods --eviction-hard=memory.available<20%,nodefs.inodesFree<20%,imagefs.inodesFree<20%,nodefs.available<20%,imagefs.available<40% --network-plugin=cni

Then we check the node status. It reports Attempting to reclaim imagefs, meaning kubelet is trying to reclaim space on imagefs:

$ kubectl describe node 10.142.232.161

...

Normal NodeAllocatableEnforced 1m kubelet, 10.142.232.161 Updated Node Allocatable limit across pods

Normal Starting 1m kubelet, 10.142.232.161 Starting kubelet.

Normal NodeHasSufficientDisk 1m kubelet, 10.142.232.161 Node 10.142.232.161 status is now: NodeHasSufficientDisk

Normal NodeHasSufficientMemory 1m kubelet, 10.142.232.161 Node 10.142.232.161 status is now: NodeHasSufficientMemory

Normal NodeHasNoDiskPressure 1m kubelet, 10.142.232.161 Node 10.142.232.161 status is now: NodeHasNoDiskPressure

Normal NodeNotReady 1m kubelet, 10.142.232.161 Node 10.142.232.161 status is now: NodeNotReady

Normal NodeHasDiskPressure 1m kubelet, 10.142.232.161 Node 10.142.232.161 status is now: NodeHasDiskPressure

Normal NodeReady 1m kubelet, 10.142.232.161 Node 10.142.232.161 status is now: NodeReady

Warning EvictionThresholdMet 18s (x4 over 1m) kubelet, 10.142.232.161 Attempting to reclaim imagefs
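Checking for this warning by eye works, but it can also be scripted. Below is a minimal Python sketch that collects the resources named in "Attempting to reclaim X" events. It makes a few assumptions:

- The event wording matches the kubelet 1.8.6 output shown here; other versions may phrase it differently.
- describe_node, a hypothetical helper, needs kubectl on PATH.

Checking the node's DiskPressure condition is a more version-stable alternative.

```python
import re
import subprocess
from typing import Set

# Matches kubelet events such as
# "Warning EvictionThresholdMet 18s (x4 over 1m) kubelet, ... Attempting to reclaim imagefs"
_RECLAIM = re.compile(r"EvictionThresholdMet\b.*?Attempting to reclaim (\w+)")


def reclaiming(describe_output: str) -> Set[str]:
    """Resources named in 'Attempting to reclaim X' events."""
    return {m.group(1) for m in _RECLAIM.finditer(describe_output)}


def describe_node(name: str) -> str:
    """Run `kubectl describe node NAME` and return its text output."""
    result = subprocess.run(["kubectl", "describe", "node", name],
                            capture_output=True, text=True, check=True)
    return result.stdout


if __name__ == "__main__":
    sample = ("Warning EvictionThresholdMet 18s (x4 over 1m) kubelet, 10.142.232.161 "
              "Attempting to reclaim imagefs\n")
    print(reclaiming(sample))  # {'imagefs'}
```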

1.2 Set the imagefs threshold to 80%; the node should be normal

We set the imagefs threshold to 80% (imagefs.available<20%):

$ ps -ef | grep kubelet

root 51402 1 19 18:24 ? 00:00:06 /usr/bin/kubelet --address=0.0.0.0 --allow-privileged=true --cluster-dns=10.254.0.10 --cluster-domain=kube.local --fail-swap-on=false --hostname-override=10.142.232.161 --kubeconfig=/etc/kubernetes/kubeconfig --pod-infra-container-image=10.142.233.76:8021/library/pause:latest --port=10250 --enforce-node-allocatable=pods --eviction-hard=memory.available<20%,nodefs.inodesFree<20%,imagefs.inodesFree<20%,nodefs.available<20%,imagefs.available<20% --network-plugin=cni

Then we check the node status again. NodeHasNoDiskPressure shows that imagefs usage is no longer above the threshold:

$ kubectl describe node 10.142.232.161

...

Warning EvictionThresholdMet 6m (x22 over 11m) kubelet, 10.142.232.161 Attempting to reclaim imagefs

Normal Starting 5m kubelet, 10.142.232.161 Starting kubelet.

Normal NodeAllocatableEnforced 5m kubelet, 10.142.232.161 Updated Node Allocatable limit across pods

Normal NodeHasSufficientDisk 5m kubelet, 10.142.232.161 Node 10.142.232.161 status is now: NodeHasSufficientDisk

Normal NodeHasSufficientMemory 5m kubelet, 10.142.232.161 Node 10.142.232.161 status is now: NodeHasSufficientMemory

Normal NodeHasNoDiskPressure 5m (x2 over 5m) kubelet, 10.142.232.161 Node 10.142.232.161 status is now: NodeHasNoDiskPressure

Normal NodeNotReady 5m kubelet, 10.142.232.161 Node 10.142.232.161 status is now: NodeNotReady

Normal NodeReady 4m kubelet, 10.142.232.161 Node 10.142.232.161 status is now: NodeReady

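II. Verify that nodefs is the partition containing /var/lib/kubelet (/ is 57% used)

2.1 Set the nodefs threshold to 50%; the node should report nodefs over the threshold

Set the nodefs threshold to 50% (nodefs.available<50%):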

$ ps -ef | grep kubelet

root 72575 1 59 18:35 ? 00:00:04 /usr/bin/kubelet --address=0.0.0.0 --allow-privileged=true --cluster-dns=10.254.0.10 --cluster-domain=kube.local --fail-swap-on=false --hostname-override=10.142.232.161 --kubeconfig=/etc/kubernetes/kubeconfig --pod-infra-container-image=10.142.233.76:8021/library/pause:latest --port=10250 --enforce-node-allocatable=pods --eviction-hard=memory.available<20%,nodefs.inodesFree<20%,imagefs.inodesFree<20%,nodefs.available<50%,imagefs.available<20% --network-plugin=cni

Checking the node status, it reports Attempting to reclaim nodefs. kubelet is trying to reclaim nodefs, which means nodefs is over the threshold:

$ kubectl describe node 10.142.232.161

...

Normal Starting 1m kubelet, 10.142.232.161 Starting kubelet.

Normal NodeAllocatableEnforced 1m kubelet, 10.142.232.161 Updated Node Allocatable limit across pods

Normal NodeHasSufficientDisk 1m kubelet, 10.142.232.161 Node 10.142.232.161 status is now: NodeHasSufficientDisk

Normal NodeHasSufficientMemory 1m kubelet, 10.142.232.161 Node 10.142.232.161 status is now: NodeHasSufficientMemory

Normal NodeHasNoDiskPressure 1m kubelet, 10.142.232.161 Node 10.142.232.161 status is now: NodeHasNoDiskPressure

Normal NodeNotReady 1m kubelet, 10.142.232.161 Node 10.142.232.161 status is now: NodeNotReady

Normal NodeHasDiskPressure 53s kubelet, 10.142.232.161 Node 10.142.232.161 status is now: NodeHasDiskPressure

Normal NodeReady 53s kubelet, 10.142.232.161 Node 10.142.232.161 status is now: NodeReady

Warning EvictionThresholdMet 2s (x5 over 1m) kubelet, 10.142.232.161 Attempting to reclaim nodefs

2.2 Set the nodefs threshold to 60%; the node should be normal

Set the nodefs threshold to 60% (nodefs.available<40%):

$ ps -ef | grep kubelet

root 78664 1 31 18:38 ? 00:00:02 /usr/bin/kubelet --address=0.0.0.0 --allow-privileged=true --cluster-dns=10.254.0.10 --cluster-domain=kube.local --fail-swap-on=false --hostname-override=10.142.232.161 --kubeconfig=/etc/kubernetes/kubeconfig --pod-infra-container-image=10.142.233.76:8021/library/pause:latest --port=10250 --enforce-node-allocatable=pods --eviction-hard=memory.available<20%,nodefs.inodesFree<20%,imagefs.inodesFree<20%,nodefs.available<40%,imagefs.available<20% --network-plugin=cni

Checking the node status now, it is back to normal:

$ kubectl describe node 10.142.232.161

...

Normal Starting 2m kubelet, 10.142.232.161 Starting kubelet.

Normal NodeReady 1m kubelet, 10.142.232.161 Node 10.142.232.161 status is now: NodeReady

III. Set kubelet's --root-dir flag to /app/kubelet

The default values of the following flags all depend on /var/lib/kubelet:

--root-dir # default: /var/lib/kubelet

--seccomp-profile-root # default: /var/lib/kubelet/seccomp

--cert-dir # default: /var/lib/kubelet/pki

--kubeconfig # default: /var/lib/kubelet/kubeconfig

To stop using the /var/lib/kubelet directory altogether, we need to set these four flags explicitly, as follows:

--root-dir=/app/kubelet

--seccomp-profile-root=/app/kubelet/seccomp

--cert-dir=/app/kubelet/pki

--kubeconfig=/etc/kubernetes/kubeconfig

3.1 Set the imagefs threshold to 80% and the nodefs threshold to 60%; nodefs should now be reported over the threshold

$ ps -ef | grep kubelet

root 14423 1 10 19:28 ? 00:00:34 /usr/bin/kubelet --address=0.0.0.0 --allow-privileged=true --cluster-dns=10.254.0.10 --cluster-domain=kube.local --fail-swap-on=false --hostname-override=10.142.232.161 --kubeconfig=/etc/kubernetes/kubeconfig --pod-infra-container-image=10.142.233.76:8021/library/pause:latest --port=10250 --enforce-node-allocatable=pods --eviction-hard=memory.available<20%,nodefs.inodesFree<20%,imagefs.inodesFree<20%,nodefs.available<40%,imagefs.available<20% --root-dir=/app/kubelet --seccomp-profile-root=/app/kubelet/seccomp --cert-dir=/app/kubelet/pki --network-plugin=cni

Checking the node status, only Attempting to reclaim nodefs is reported, i.e. only nodefs is over the threshold:

$ kubectl describe node 10.142.232.161

...

Normal NodeHasDiskPressure 3m kubelet, 10.142.232.161 Node 10.142.232.161 status is now: NodeHasDiskPressure

Normal NodeReady 3m kubelet, 10.142.232.161 Node 10.142.232.161 status is now: NodeReady

Normal Starting 3m kube-proxy, 10.142.232.161 Starting kube-proxy.

Warning EvictionThresholdMet 27s (x15 over 3m) kubelet, 10.142.232.161 Attempting to reclaim nodefs

3.2 Set the imagefs threshold to 60% and the nodefs threshold to 80%; imagefs should now be reported over the threshold

$ ps -ef |grep kubelet

root 21381 1 30 19:36 ? 00:00:02 /usr/bin/kubelet --address=0.0.0.0 --allow-privileged=true --cluster-dns=10.254.0.10 --cluster-domain=kube.local --fail-swap-on=false --hostname-override=10.142.232.161 --kubeconfig=/etc/kubernetes/kubeconfig --pod-infra-container-image=10.142.233.76:8021/library/pause:latest --port=10250 --enforce-node-allocatable=pods --eviction-hard=memory.available<20%,nodefs.inodesFree<20%,imagefs.inodesFree<20%,nodefs.available<20%,imagefs.available<40% --root-dir=/app/kubelet --seccomp-profile-root=/app/kubelet/seccomp --cert-dir=/app/kubelet/pki --network-plugin=cni

Checking the node status, only imagefs is reported over the threshold:

$ kubectl describe node 10.142.232.161

...

Normal Starting 1m kubelet, 10.142.232.161 Starting kubelet.

Normal NodeAllocatableEnforced 1m kubelet, 10.142.232.161 Updated Node Allocatable limit across pods

Normal NodeHasSufficientDisk 1m kubelet, 10.142.232.161 Node 10.142.232.161 status is now: NodeHasSufficientDisk

Normal NodeNotReady 1m kubelet, 10.142.232.161 Node 10.142.232.161 status is now: NodeNotReady

Normal NodeHasNoDiskPressure 1m (x2 over 1m) kubelet, 10.142.232.161 Node 10.142.232.161 status is now: NodeHasNoDiskPressure

Normal NodeHasSufficientMemory 1m kubelet, 10.142.232.161 Node 10.142.232.161 status is now: NodeHasSufficientMemory

Normal NodeReady 1m kubelet, 10.142.232.161 Node 10.142.232.161 status is now: NodeReady

Normal NodeHasDiskPressure 1m kubelet, 10.142.232.161 Node 10.142.232.161 status is now: NodeHasDiskPressure

Warning EvictionThresholdMet 11s (x5 over 1m) kubelet, 10.142.232.161 Attempting to reclaim imagefs

3.3 Set both the imagefs and nodefs thresholds to 60%; both should now be reported over the threshold

$ ps -ef | grep kubelet

root 24524 1 33 19:39 ? 00:00:01 /usr/bin/kubelet --address=0.0.0.0 --allow-privileged=true --cluster-dns=10.254.0.10 --cluster-domain=kube.local --fail-swap-on=false --hostname-override=10.142.232.161 --kubeconfig=/etc/kubernetes/kubeconfig --pod-infra-container-image=10.142.233.76:8021/library/pause:latest --port=10250 --enforce-node-allocatable=pods --eviction-hard=memory.available<20%,nodefs.inodesFree<20%,imagefs.inodesFree<20%,nodefs.available<40%,imagefs.available<40% --root-dir=/app/kubelet --seccomp-profile-root=/app/kubelet/seccomp --cert-dir=/app/kubelet/pki --network-plugin=cni

Checking the node status, sure enough both imagefs and nodefs are over the threshold:

$ kubectl describe node 10.142.232.161

...

Normal Starting 1m kubelet, 10.142.232.161 Starting kubelet.

Normal NodeAllocatableEnforced 1m kubelet, 10.142.232.161 Updated Node Allocatable limit across pods

Normal NodeHasSufficientDisk 1m kubelet, 10.142.232.161 Node 10.142.232.161 status is now: NodeHasSufficientDisk

Normal NodeHasSufficientMemory 1m kubelet, 10.142.232.161 Node 10.142.232.161 status is now: NodeHasSufficientMemory

Normal NodeHasNoDiskPressure 1m (x2 over 1m) kubelet, 10.142.232.161 Node 10.142.232.161 status is now: NodeHasNoDiskPressure

Normal NodeNotReady 1m kubelet, 10.142.232.161 Node 10.142.232.161 status is now: NodeNotReady

Normal NodeHasDiskPressure 1m kubelet, 10.142.232.161 Node 10.142.232.161 status is now: NodeHasDiskPressure

Normal NodeReady 1m kubelet, 10.142.232.161 Node 10.142.232.161 status is now: NodeReady

Warning EvictionThresholdMet 14s kubelet, 10.142.232.161 Attempting to reclaim imagefs

Warning EvictionThresholdMet 4s (x8 over 1m) kubelet, 10.142.232.161 Attempting to reclaim nodefs

3.4 Set both the imagefs and nodefs thresholds to 80%; the node should now be normal

$ ps -ef | grep kubelet

root 27869 1 30 19:43 ? 00:00:01 /usr/bin/kubelet --address=0.0.0.0 --allow-privileged=true --cluster-dns=10.254.0.10 --cluster-domain=kube.local --fail-swap-on=false --hostname-override=10.142.232.161 --kubeconfig=/etc/kubernetes/kubeconfig --pod-infra-container-image=10.142.233.76:8021/library/pause:latest --port=10250 --enforce-node-allocatable=pods --eviction-hard=memory.available<20%,nodefs.inodesFree<20%,imagefs.inodesFree<20%,nodefs.available<20%,imagefs.available<20% --root-dir=/app/kubelet --seccomp-profile-root=/app/kubelet/seccomp --cert-dir=/app/kubelet/pki --network-plugin=cni

Checking the node status, sure enough neither imagefs nor nodefs errors are reported any more:

$ kubectl describe node 10.142.232.161

...

Normal Starting 1m kubelet, 10.142.232.161 Starting kubelet.

Normal NodeHasSufficientDisk 1m kubelet, 10.142.232.161 Node 10.142.232.161 status is now: NodeHasSufficientDisk

Normal NodeHasSufficientMemory 1m kubelet, 10.142.232.161 Node 10.142.232.161 status is now: NodeHasSufficientMemory

Normal NodeNotReady 1m kubelet, 10.142.232.161 Node 10.142.232.161 status is now: NodeNotReady

Normal NodeAllocatableEnforced 1m kubelet, 10.142.232.161 Updated Node Allocatable limit across pods

Normal NodeReady 1m kubelet, 10.142.232.161 Node 10.142.232.161 status is now: NodeReady

VI Resource reservation vs. eviction vs. OOM

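There are three concepts we need to keep apart: resource reservation, eviction and OOM.

- Resource reservation: affects the node's Allocatable value.

- Eviction: when kubelet evicts pods, it decides based only on the eviction thresholds. These are --eviction-hard, plus --eviction-soft if configured; the supported signals are listed earlier in this article. system-reserved and similar flags play no part in the decision.

- OOM: when a process uses more memory than its limit allows, the kernel's OOM killer kills it via its cgroup (set up by Docker). A container can be OOM-killed in two cases:
  - its memory use exceeds its own limit;
  - the total memory used by all pods exceeds the value in /sys/fs/cgroup/memory/kubepods/memory.limit_in_bytes.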
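The Kubernetes documentation on reserving compute resources for system daemons gives node Allocatable as Capacity minus kube-reserved, system-reserved and the hard eviction threshold. Below is a small Python sketch of that arithmetic with made-up numbers: a hypothetical 16 GiB node, 1 GiB each for kube-reserved and system-reserved, and memory.available<100Mi as the hard threshold.

```python
MI = 1024 ** 2
GI = 1024 ** 3


def allocatable(capacity: int, kube_reserved: int = 0, system_reserved: int = 0,
                hard_eviction: int = 0) -> int:
    """Allocatable = Capacity - kube-reserved - system-reserved - hard eviction threshold."""
    value = capacity - kube_reserved - system_reserved - hard_eviction
    if value < 0:
        raise ValueError("reservations exceed node capacity")
    return value


if __name__ == "__main__":
    alloc = allocatable(16 * GI, kube_reserved=1 * GI, system_reserved=1 * GI,
                        hard_eviction=100 * MI)
    print(alloc // MI, "Mi allocatable")  # 14236 Mi allocatable
```

Reserving more lowers Allocatable, so the scheduler places fewer pods on the node. Whether a pod is actually evicted is still decided by the eviction thresholds.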

For details see: https://www.cnblogs.com/linhaifeng/p/16185273.html

Addendum

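After running for a while, a node accumulates many downloaded images and many containers that have exited for various reasons. To keep the node healthy, kubelet has to stop images from filling up the disk. It also has to stop exited containers from piling up and slowing the system down or causing errors. GC needs no manual intervention: kubelet runs it periodically, and you can control the GC policy with flags when starting the kubelet process. kubelet runs two GCs, one reclaiming containers and one reclaiming images.

1. By default, container GC runs once a minute and image GC once every five minutes. If you need different behavior, adjust the GC policy through kubelet's startup parameters.

2. Do not clean up images and containers manually. While running, kubelet keeps a cache of the images and containers on the node and refreshes it periodically. Cleaning them up by hand can make kubelet misjudge the node's state and cause unpredictable problems.

Image GC is configured in each node's kubelet config file, /var/lib/kubelet/config.yaml:

imageMinimumGCAge: 3600s

imageGCHighThresholdPercent: 90

imageGCLowThresholdPercent: 80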
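These three settings describe the image GC policy:

- Once disk usage reaches imageGCHighThresholdPercent, kubelet deletes unused images, least recently used first.
- It keeps deleting until usage falls to imageGCLowThresholdPercent.
- It does not delete images younger than imageMinimumGCAge.

Below is a simplified Python sketch of that policy, with a hypothetical Image record and made-up sizes. It is not kubelet's implementation.

```python
from dataclasses import dataclass
from typing import List

GI = 1024 ** 3


@dataclass
class Image:
    """Hypothetical record of one image on the node."""
    name: str
    size: int          # bytes
    last_used: float   # when a container last used the image
    age_s: float       # seconds since kubelet first saw the image
    in_use: bool = False


def bytes_to_free(capacity: int, available: int, high_pct: int, low_pct: int) -> int:
    """Nothing below the high threshold; otherwise free enough to get back to the low one."""
    usage_pct = 100 - available * 100 // capacity
    if usage_pct < high_pct:
        return 0
    return capacity * (100 - low_pct) // 100 - available


def pick_images(images: List[Image], need: int, min_age_s: float) -> List[str]:
    """Unused images, least recently used first, skipping those younger than min_age_s."""
    chosen, freed = [], 0
    for img in sorted(images, key=lambda i: i.last_used):
        if freed >= need:
            break
        if img.in_use or img.age_s < min_age_s:
            continue
        chosen.append(img.name)
        freed += img.size
    return chosen


if __name__ == "__main__":
    # 100 GiB disk with 8 GiB free is 92% used, above imageGCHighThresholdPercent=90.
    need = bytes_to_free(100 * GI, 8 * GI, high_pct=90, low_pct=80)
    images = [
        Image("a", 5 * GI, last_used=1, age_s=7200),
        Image("b", 3 * GI, last_used=0, age_s=7200, in_use=True),
        Image("c", 8 * GI, last_used=2, age_s=7200),
        Image("d", 4 * GI, last_used=3, age_s=600),  # younger than imageMinimumGCAge
    ]
    print(need // GI, pick_images(images, need, min_age_s=3600))  # 12 ['a', 'c']
```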

Reference (official docs): https://kubernetes.io/zh/docs/tasks/administer-cluster/out-of-resource/