CFS buddy简析

在内存管理中,我们知道buddy伙伴系统;在进程调度中,也有一个CFS buddy的概念,但是CFS buddy与内存管理的buddy是不太一样的。这次我们就一起来简单看一下CFS buddy是什么。

代码基于CAF-kernel-4.19以及kernel-5.4,不免有错误之处,请指正。

一、数据结构和接口

- 在cfs_rq结构体中保存了cfs buddy相关的数据:

/* CFS-related fields in a runqueue */ struct cfs_rq { .... /* * 'curr' points to currently running entity on this cfs_rq. * It is set to NULL otherwise (i.e when none are currently running). */ struct sched_entity *curr; struct sched_entity *next; struct sched_entity *last; struct sched_entity *skip; .... }

- 设置cfs buddy的api:

static void set_last_buddy(struct sched_entity *se) { if (entity_is_task(se) && unlikely(task_of(se)->policy == SCHED_IDLE)) return; for_each_sched_entity(se) { if (SCHED_WARN_ON(!se->on_rq)) return; cfs_rq_of(se)->last = se; //设置last cfs buddy } } static void set_next_buddy(struct sched_entity *se) { if (entity_is_task(se) && unlikely(task_of(se)->policy == SCHED_IDLE)) return; for_each_sched_entity(se) { if (SCHED_WARN_ON(!se->on_rq)) return; cfs_rq_of(se)->next = se; //设置next cfs buddy } } static void set_skip_buddy(struct sched_entity *se) { for_each_sched_entity(se) cfs_rq_of(se)->skip = se; //设置skip buddy }

- 清除cfs buddy:

static void clear_buddies(struct cfs_rq *cfs_rq, struct sched_entity *se) { if (cfs_rq->last == se) //根据se不同,清零不同的cfs buddy指针 __clear_buddies_last(se); if (cfs_rq->next == se) __clear_buddies_next(se); if (cfs_rq->skip == se) __clear_buddies_skip(se); } //这里只看其中之一。另外2个cfs buddy,逻辑完全一样,只是最后设为NULL的成员不同,需对应。 static void __clear_buddies_last(struct sched_entity *se) { for_each_sched_entity(se) { struct cfs_rq *cfs_rq = cfs_rq_of(se); if (cfs_rq->last != se) break; cfs_rq->last = NULL; //这里设为将cfs_rq的last cfs buddy设为NULL } }

二、调用路径和原理分析

1. next buddy设置和使用路径

1.1 next buddy设置

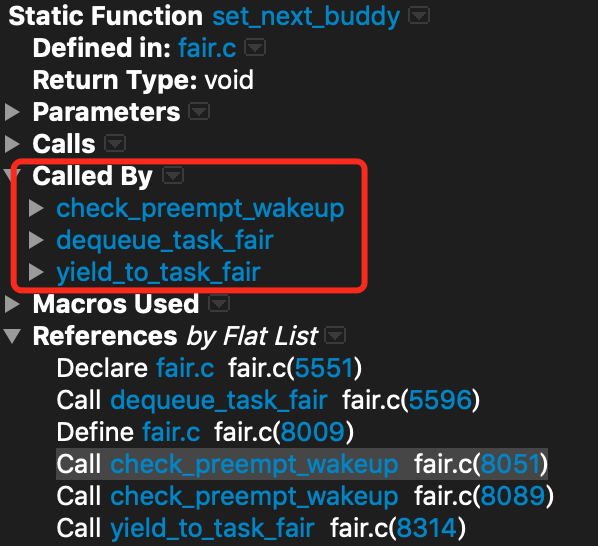

1.1.1 dequeue_task_fair

/* * The dequeue_task method is called before nr_running is * decreased. We remove the task from the rbtree and * update the fair scheduling stats: */ static void dequeue_task_fair(struct rq *rq, struct task_struct *p, int flags) { struct cfs_rq *cfs_rq; struct sched_entity *se = &p->se; int task_sleep = flags & DEQUEUE_SLEEP; //判断flags是否带有DEQUEUE_SLEEP /* * The code below (indirectly) updates schedutil which looks at * the cfs_rq utilization to select a frequency. * Let's update schedtune here to ensure the boost value of the * current task is not more accounted for in the selection of the OPP. */ schedtune_dequeue_task(p, cpu_of(rq)); for_each_sched_entity(se) { cfs_rq = cfs_rq_of(se); dequeue_entity(cfs_rq, se, flags); /* * end evaluation on encountering a throttled cfs_rq * * note: in the case of encountering a throttled cfs_rq we will * post the final h_nr_running decrement below. */ if (cfs_rq_throttled(cfs_rq)) break; cfs_rq->h_nr_running--; walt_dec_cfs_rq_stats(cfs_rq, p); /* Don't dequeue parent if it has other entities besides us */ if (cfs_rq->load.weight) { /* Avoid re-evaluating load for this entity: */ se = parent_entity(se); /* * Bias pick_next to pick a task from this cfs_rq, as //下次选择next task时偏向当前这个task,即设置为next cfs buddy * p is sleeping when it is within its sched_slice. //因为它在自己的调度时间片中进入sleep了 */ if (task_sleep && se && !throttled_hierarchy(cfs_rq)) //如果带有DEQUEUE_SLEEP flags,并且parent se存在,并且没有陷入cfs rq带宽限制 set_next_buddy(se); break; } flags |= DEQUEUE_SLEEP; } for_each_sched_entity(se) { cfs_rq = cfs_rq_of(se); cfs_rq->h_nr_running--; walt_dec_cfs_rq_stats(cfs_rq, p); if (cfs_rq_throttled(cfs_rq)) break; update_load_avg(cfs_rq, se, UPDATE_TG); update_cfs_group(se); } if (!se) { sub_nr_running(rq, 1); dec_rq_walt_stats(rq, p); } util_est_dequeue(&rq->cfs, p, task_sleep); hrtick_update(rq); }

带有SLEEP flags的情况有3种:

---1、__schedule --> deactivate_task(rq, prev, DEQUEUE_SLEEP | DEQUEUE_NOCLOCK) --> dequeue_task() //正常进程切换的时候,task出队

---2、throttle_cfs_rq --> dequeue_entity(qcfs_rq, se, DEQUEUE_SLEEP); //cfs带宽限制中,throttle cfs时也会传DEQUEUE_SLEEP

---3、dequeue_task_fair --> flags |= DEQUEUE_SLEEP //从dequeue的se开始向parent se遍历,直到se为group se,并且其中还有其他的task,就会break,并且把此时的group se设置为next buddy。而遍历过程中的se组内仅包含dequeue的task(通过cfs_rq->load_weight判断,dequeue_entity后,就会减去task se的load.weight。如果只有该task,那减了之后cfs_rq->load.weight就==0了),那么就会赋值DEQUEUE_SLEEP flag,但是这个flag不会影响到dequeue_task_fair设置的next buddy

1.1.2 check_preempt_wakeup

/* * Preempt the current task with a newly woken task if needed: */ static void check_preempt_wakeup(struct rq *rq, struct task_struct *p, int wake_flags) { struct task_struct *curr = rq->curr; struct sched_entity *se = &curr->se, *pse = &p->se; struct cfs_rq *cfs_rq = task_cfs_rq(curr); int scale = cfs_rq->nr_running >= sched_nr_latency; //cfs_rq上所有task数量是否 > 8 int next_buddy_marked = 0; if (unlikely(se == pse)) return; /* * This is possible from callers such as attach_tasks(), in which we * unconditionally check_prempt_curr() after an enqueue (which may have * lead to a throttle). This both saves work and prevents false * next-buddy nomination below. */ if (unlikely(throttled_hierarchy(cfs_rq_of(pse)))) return; if (sched_feat(NEXT_BUDDY) && scale && !(wake_flags & WF_FORK)) { //判断是否打开了NEXT_BUDDY功能(默认关闭),并且当前curr所在cfs_rq上的task数量 > 8,并且wake flags没有设置WF_FORK set_next_buddy(pse); next_buddy_marked = 1; } /* * We can come here with TIF_NEED_RESCHED already set from new task * wake up path. * * Note: this also catches the edge-case of curr being in a throttled * group (e.g. via set_curr_task), since update_curr() (in the * enqueue of curr) will have resulted in resched being set. This * prevents us from potentially nominating it as a false LAST_BUDDY * below. */ if (test_tsk_need_resched(curr)) return; /* Idle tasks are by definition preempted by non-idle tasks. */ if (unlikely(task_has_idle_policy(curr)) && likely(!task_has_idle_policy(p))) goto preempt; /* * Batch and idle tasks do not preempt non-idle tasks (their preemption * is driven by the tick): */ if (unlikely(p->policy != SCHED_NORMAL) || !sched_feat(WAKEUP_PREEMPTION)) return; find_matching_se(&se, &pse); update_curr(cfs_rq_of(se)); BUG_ON(!pse); if (wakeup_preempt_entity(se, pse) == 1) { //判断task pse是否满足抢占curr?==1满足则表示满足 /* * Bias pick_next to pick the sched entity that is * triggering this preemption. */ if (!next_buddy_marked) //如果上面没有设置过next buddy set_next_buddy(pse); //那么就设置pse为next buddy goto preempt; } return; preempt: //跑到这里,说明满足pse抢占curr resched_curr(rq); /* * Only set the backward buddy when the current task is still * on the rq. This can happen when a wakeup gets interleaved * with schedule on the ->pre_schedule() or idle_balance() * point, either of which can * drop the rq lock. * * Also, during early boot the idle thread is in the fair class, * for obvious reasons its a bad idea to schedule back to it. */ if (unlikely(!se->on_rq || curr == rq->idle)) return; if (sched_feat(LAST_BUDDY) && scale && entity_is_task(se)) //判断是否打开了LAST_BUDDY(默认打开),并且当前curr所在cfs_rq上的task数量 > 8, se是task se非group se(task se的my_q成员为NULL,而group se的my_q成员指向自己下属的cfs_rq)

set_last_buddy(se); }

---1、在打开了NEXT_BUDDY调度功feature(默认关闭),并且当前curr进程所在cfs_rq上的task数量 > 8,并且wake flags没有设置WF_FORK(这个flag只有在fork后,子进程wake_up_new_task唤醒抢占时,才会带)。那么就会设置当前抢占的task pse为next buddy。

---2、如果没有走到1中,即没有设置当前抢占的task pse为next buddy。继续判断是否task pse满足抢占curr(通过判断vruntime)。如果满足,则仍然设置当前抢占的task pse为next buddy。

---3、确保pse抢占了curr的情况下,判断在打开了LAST_BUDDY调度功feature(默认打开),并且当前curr进程所在cfs_rq上的task数量 > 8,并且se是task se非group se(task se的my_q成员为NULL,而group se的my_q成员指向自己下属的cfs_rq)。那么就设置curr进程的se为LAST BUDDY。

1.1.3 yield_to_task_fair

static bool yield_to_task_fair(struct rq *rq, struct task_struct *p, bool preempt) { struct sched_entity *se = &p->se; /* throttled hierarchies are not runnable */ if (!se->on_rq || throttled_hierarchy(cfs_rq_of(se))) return false; /* Tell the scheduler that we'd really like pse to run next. */ set_next_buddy(se); yield_task_fair(rq); return true; }

这里只有在kvm中才会调用到。放弃task p的调度权利,但是会设置task p的se为next buddy。

1.2 next buddy使用

1.2.1 pick_next_entity

/* * Pick the next process, keeping these things in mind, in this order: * 1) keep things fair between processes/task groups * 2) pick the "next" process, since someone really wants that to run * 3) pick the "last" process, for cache locality * 4) do not run the "skip" process, if something else is available */ static struct sched_entity * pick_next_entity(struct cfs_rq *cfs_rq, struct sched_entity *curr) { struct sched_entity *left = __pick_first_entity(cfs_rq); struct sched_entity *se; /* * If curr is set we have to see if its left of the leftmost entity * still in the tree, provided there was anything in the tree at all. */ if (!left || (curr && entity_before(curr, left))) left = curr; se = left; /* ideally we run the leftmost entity */ /* * Avoid running the skip buddy, if running something else can * be done without getting too unfair. */ if (cfs_rq->skip == se) { //如果上面从rb tree挑选的task是skip buddy,那么要尽量避免运行它。看看是否有其他的task可以运行 struct sched_entity *second; if (se == curr) { second = __pick_first_entity(cfs_rq); } else { second = __pick_next_entity(se); if (!second || (curr && entity_before(curr, second))) second = curr; } if (second && wakeup_preempt_entity(second, left) < 1) //选出second task的情况下,再判断second的虚拟运行时间 <= se的虚拟运行时间 + 1ms(sysctl_sched_wakeup_granularity)对应到se的虚拟运行时间 se = second; //满足则选择second为下一个运行的se } /* * Prefer last buddy, try to return the CPU to a preempted task. */ if (cfs_rq->last && wakeup_preempt_entity(cfs_rq->last, left) < 1) //如果有设置last buddy,再判断left的虚拟运行时间 <= se的虚拟运行时间 + 1ms(sysctl_sched_wakeup_granularity)对应到se的虚拟运行时间 se = cfs_rq->last; /* * Someone really wants this to run. If it's not unfair, run it. */ if (cfs_rq->next && wakeup_preempt_entity(cfs_rq->next, left) < 1) //如果有设置next buddy,再判断left的虚拟运行时间 <= se的虚拟运行时间 + 1ms(sysctl_sched_wakeup_granularity)对应到se的虚拟运行时间 se = cfs_rq->next; clear_buddies(cfs_rq, se); //清楚se对应的所有buddy设置:next、last、skip return se; }

可以看到,如果虚拟运行时间都没有超过一定限度的话,task se挑选的优先级:next buddy > last buddy > first entity > second entity > skip buddy。

1.2.2 task_hot

这是在负载均衡load balance流程中,判断是否满足迁移条件中的一个判断:

/* * Is this task likely cache-hot: */ static int task_hot(struct task_struct *p, struct lb_env *env) { s64 delta; lockdep_assert_held(&env->src_rq->lock); if (p->sched_class != &fair_sched_class) return 0; if (unlikely(task_has_idle_policy(p))) return 0; /* * Buddy candidates are cache hot: */ if (sched_feat(CACHE_HOT_BUDDY) && env->dst_rq->nr_running && //如果打开了CACHE_HOT_BUDDY调度feature(默认打开),并且迁移目标dsr rq中有task (&p->se == cfs_rq_of(&p->se)->next || //并且迁移的候选task se被设置了next buddy 或者 last buddy &p->se == cfs_rq_of(&p->se)->last)) return 1; if (sysctl_sched_migration_cost == -1) return 1; if (sysctl_sched_migration_cost == 0) return 0; delta = rq_clock_task(env->src_rq) - p->se.exec_start; return delta < (s64)sysctl_sched_migration_cost; }

在负载均衡中,进行agressive的balance条件判断。如果是task hot的(设置过next buddy或者last buddy),那么就不会进行balance。

2. lastbuddy设置和使用路径

2.1 last buddy设置

与next buddy设置地方相同:check_preempt_wakeup,可以参考上面next buddy的详细解析

2.2 last buddy使用

与next buddy使用地方相同:pick_next_entity 和 task_hot,也可以参考上面next buddy的详细解析

整体来讲,last buddy与next buddy的作用类似,只是优先级低于next buddy。

3. skip buddy设置和使用路径

3.1 skip buddy设置

通过系统调用sched_yield对应cfs task的接口:yield_task_fair

/* * sched_yield() is very simple * * The magic of dealing with the ->skip buddy is in pick_next_entity. */ static void yield_task_fair(struct rq *rq) { struct task_struct *curr = rq->curr; //获取rq对应的curr进程 struct cfs_rq *cfs_rq = task_cfs_rq(curr); struct sched_entity *se = &curr->se; //获取curr对应的task se /* * Are we the only task in the tree? */ if (unlikely(rq->nr_running == 1)) //如果cpu rq上只有一个task了,那就不要设置了。假如设了,就没有task运行了 return; clear_buddies(cfs_rq, se); //清理se对应的所有buddy if (curr->policy != SCHED_BATCH) { update_rq_clock(rq); /* * Update run-time statistics of the 'current'. */ update_curr(cfs_rq); /* * Tell update_rq_clock() that we've just updated, * so we don't do microscopic update in schedule() * and double the fastpath cost. */ rq_clock_skip_update(rq); } set_skip_buddy(se); //将se设置为skip buddy }

3.2 skip buddy使用

skip buddy作用的地方在:pick_next_entity,具体可以参考上面next buddy章节。

三、总结

CFS buddy是主动地将task se进行优先级分类,主要是在系统调度选择下一个要运行的task se时,起到作用。使task se挑选优先级调整为:next buddy > last buddy > first entity(rb tree) > second entity(rb tree) > skip buddy。

也会在load balance时作为激进的balance的判断条件。避免对last buddy和next buddy的task进行迁移。

其本身与内存管理的buddy系统没有什么直接的关系;其主要还是针对进程调度的优先进行偏好设置。

那么我们是否可以考虑AB进程互相依赖的状态(比如:生产者消费者模型中,消费较快的场景),进行针对性的设置来对系统进行优化呢?

参考:

https://www.cnblogs.com/hellokitty2/p/15302500.html

【推荐】国内首个AI IDE,深度理解中文开发场景,立即下载体验Trae

【推荐】编程新体验,更懂你的AI,立即体验豆包MarsCode编程助手

【推荐】抖音旗下AI助手豆包,你的智能百科全书,全免费不限次数

【推荐】轻量又高性能的 SSH 工具 IShell:AI 加持,快人一步

· 周边上新:园子的第一款马克杯温暖上架

· Open-Sora 2.0 重磅开源!

· .NET周刊【3月第1期 2025-03-02】

· 分享 3 个 .NET 开源的文件压缩处理库,助力快速实现文件压缩解压功能!

· Ollama——大语言模型本地部署的极速利器