Web DevOps / QEMU / KVM environment / danei environment


| No. | Item | Description | Notes |
| --- | --- | --- | --- |
| 1 | Web full-stack curriculum | https://www.codeboy.com/ | |

- 问题2:启动域时出错: 不支持的配置:域需要 KVM,但不可用。

解决2:在主机 BIOS 中启用了检查虚拟化,同时将主机配置为载入 kvm 模块。

[root@hp ~]# virsh start control

错误:开始域 control 失败

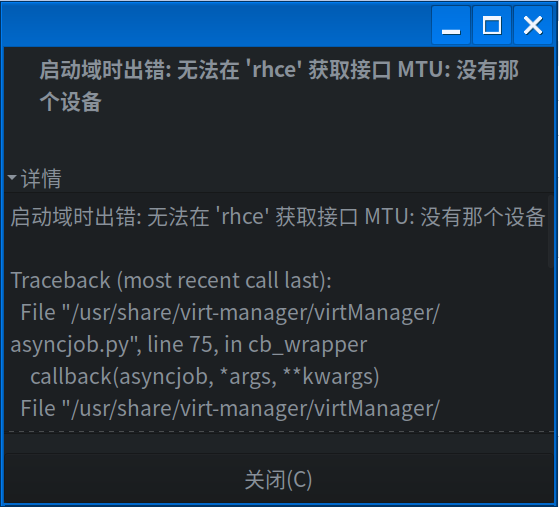

错误:不支持的配置:域需要 KVM,但不可用。在主机 BIOS 中启用了检查虚拟化,同时将主机配置为载入 kvm 模块。- 问题1:[root@tc danei]# virsh start red # 启动失败

-

启动域时出错: 无法在 'rhce' 获取接口 MTU: 没有那个设备 Traceback (most recent call last): File "/usr/share/virt-manager/virtManager/asyncjob.py", line 75, in cb_wrapper callback(asyncjob, *args, **kwargs) File "/usr/share/virt-manager/virtManager/asyncjob.py", line 111, in tmpcb callback(*args, **kwargs) File "/usr/share/virt-manager/virtManager/libvirtobject.py", line 66, in newfn ret = fn(self, *args, **kwargs) File "/usr/share/virt-manager/virtManager/domain.py", line 1420, in startup self._backend.create() File "/usr/lib64/python3.9/site-packages/libvirt.py", line 1234, in create if ret == -1: raise libvirtError ('virDomainCreate() failed', dom=self) libvirt.libvirtError: 无法在 'rhce' 获取接口 MTU: 没有那个设备

解决1:用户名root 密码redhat

- danei /etc/hosts host configuration

[root@server1 repositories]# more /etc/hosts

127.0.0.1 localhost localhost.localdomain localhost4 localhost4.localdomain4

::1 localhost localhost.localdomain localhost6 localhost6.localdomain6

172.25.0.254 server1.lab0.example.com server1

172.25.0.100 control.lab0.example.com control con

172.25.0.101 node1.lab0.example.com node1 n1

172.25.0.102 node2.lab0.example.com node2 n2

172.25.0.103 node3.lab0.example.com node3 n3

172.25.0.104 node4.lab0.example.com node4 n4

172.25.0.105 node5.lab0.example.com node5 n5

172.25.0.25 red.lab0.example.com red

172.25.0.26 blue.lab0.example.com blue

172.25.0.254 registry.lab.example.com

- danei Docker environment configuration

[root@server1 repositories]# /usr/bin/registry -v

/usr/bin/registry github.com/docker/distribution v2.6.2+unknown

[root@server1 repositories]# ps -eLf | grep registry

root 716 1 716 0 6 10月30 ? 00:00:00 /usr/bin/registry serve /etc/docker-distribution/registry/config.yml

root 716 1 918 0 6 10月30 ? 00:00:01 /usr/bin/registry serve /etc/docker-distribution/registry/config.yml

root 716 1 921 0 6 10月30 ? 00:00:00 /usr/bin/registry serve /etc/docker-distribution/registry/config.yml

root 716 1 922 0 6 10月30 ? 00:00:00 /usr/bin/registry serve /etc/docker-distribution/registry/config.yml

root 716 1 923 0 6 10月30 ? 00:00:00 /usr/bin/registry serve /etc/docker-distribution/registry/config.yml

root 716 1 924 0 6 10月30 ? 00:00:00 /usr/bin/registry serve /etc/docker-distribution/registry/config.yml

student 2421 2018 2421 0 3 10月30 ? 00:00:00 /usr/libexec/at-spi2-registryd --use-gnome-session

student 2421 2018 2652 0 3 10月30 ? 00:00:00 /usr/libexec/at-spi2-registryd --use-gnome-session

student 2421 2018 2653 0 3 10月30 ? 00:00:00 /usr/libexec/at-spi2-registryd --use-gnome-session

root 80160 73160 80160 0 1 14:42 pts/12 00:00:00 grep --color=auto registry

[root@server1 repositories]# ps -eLf | grep docker

root 716 1 716 0 6 10月30 ? 00:00:00 /usr/bin/registry serve /etc/docker-distribution/registry/config.yml

root 716 1 918 0 6 10月30 ? 00:00:01 /usr/bin/registry serve /etc/docker-distribution/registry/config.yml

root 716 1 921 0 6 10月30 ? 00:00:00 /usr/bin/registry serve /etc/docker-distribution/registry/config.yml

root 716 1 922 0 6 10月30 ? 00:00:00 /usr/bin/registry serve /etc/docker-distribution/registry/config.yml

root 716 1 923 0 6 10月30 ? 00:00:00 /usr/bin/registry serve /etc/docker-distribution/registry/config.yml

root 716 1 924 0 6 10月30 ? 00:00:00 /usr/bin/registry serve /etc/docker-distribution/registry/config.yml

root 80162 73160 80162 0 1 14:43 pts/12 00:00:00 grep --color=auto docker

[root@server1 repositories]# more /etc/docker-distribution/registry/config.yml

version: 0.1
log:
  fields:
    service: registry
storage:
    cache:
        layerinfo: inmemory
    filesystem:
        rootdirectory: /var/lib/registry
http:
    addr: :5000

[root@server1 repositories]# netstat -atpln | grep 5000

tcp6 0 0 :::5000 :::* LISTEN 716/registry

[root@server1 repositories]# ss -ntplu | grep 5000

tcp LISTEN 0 4096 *:5000 *:* users:(("registry",pid=716,fd=6))

# Installing Docker on CentOS

[root@lindows ~]# sudo yum install -y yum-utils device-mapper-persistent-data lvm2 # install the prerequisite packages (yum-utils provides yum-config-manager)

[root@lindows ~]# sudo yum-config-manager --add-repo https://download.docker.com/linux/centos/docker-ce.repo # add the official Docker YUM repository

[root@lindows ~]# sudo yum install -y docker-ce docker-ce-cli containerd.io # install Docker

[root@lindows ~]# sudo systemctl start docker # start the Docker service

[root@lindows ~]# sudo systemctl enable docker # start Docker at boot

[root@lindows ~]# sudo docker --version # verify the Docker installation

- [root@tc danei]# more /etc/libvirt/qemu/networks/rhce.xml # rhce network configuration

<!--
WARNING: THIS IS AN AUTO-GENERATED FILE. CHANGES TO IT ARE LIKELY TO BE
OVERWRITTEN AND LOST. Changes to this xml configuration should be made using:
  virsh net-edit rhce
or other application using the libvirt API.
-->

<network>
  <name>rhce</name>
  <uuid>918864ad-822f-4bc5-8d83-b0880d477a49</uuid>
  <bridge name='virbr3' stp='on' delay='0'/>
  <mac address='52:54:00:9f:5a:ff'/>
  <domain name='rhce'/>
  <ip address='172.25.0.1' netmask='255.255.255.0'>
  </ip>
</network>

| No. | Item | Description | Notes |
| --- | --- | --- | --- |
| 1 | /usr/local/bin/vm | vm {clone\|remove\|setip\|completion} vm_name | danei vm command shortcut |
| 2 | /etc/libvirt/qemu/networks/private1.xml<br>/etc/libvirt/qemu/networks/private2.xml<br>/etc/libvirt/qemu/networks/ckavbr.xml<br>/etc/libvirt/qemu/networks/default.xml<br>/etc/libvirt/qemu/networks/rhce.xml | [root@euler-centre autostart]# ll /etc/libvirt/qemu/networks/autostart<br>total 0<br>lrwxrwxrwx 1 root root 37 Sep 27 17:44 ckavbr.xml -> /etc/libvirt/qemu/networks/ckavbr.xml<br>lrwxrwxrwx. 1 root root 14 Sep 3 00:45 default.xml -> ../default.xml<br>lrwxrwxrwx 1 root root 40 Sep 27 17:40 network66.xml -> /etc/libvirt/qemu/networks/network66.xml<br>lrwxrwxrwx 1 root root 39 Sep 27 17:42 private1.xml -> /etc/libvirt/qemu/networks/private1.xml<br>lrwxrwxrwx 1 root root 39 Sep 27 17:42 private2.xml -> /etc/libvirt/qemu/networks/private2.xml<br>lrwxrwxrwx 1 root root 35 Sep 27 17:46 rhce.xml -> /etc/libvirt/qemu/networks/rhce.xml | |
| 3 | /var/lib/libvirt/images/.node_base.xml<br>/var/lib/libvirt/images/.Rocky.qcow2 | Customized domain XML + original OS image file (332 MB) | Default password: a |
| 4 | /usr/local/bin/blur_image | | |

- Check the openEuler SSH environment

[root@euler share]# rpm -qa | grep openssh # list the OpenSSH packages required for the host to accept remote SSH logins

openssh-8.8p1-21.oe2203sp2.x86_64

openssh-server-8.8p1-21.oe2203sp2.x86_64

openssh-clients-8.8p1-21.oe2203sp2.x86_64

openssh-askpass-8.8p1-21.oe2203sp2.x86_64

[root@euler share]# pgrep -l sshd # look up the running process

1625 sshd

[root@euler share]# ps -elf | grep sshd # show the sshd processes

4 S root 1625 1 0 80 0 - 3473 do_sel 08:24 ? 00:00:00 sshd: /usr/sbin/sshd -D [listener] 0 of 10-100 startups

0 S root 43407 28302 0 80 0 - 5494 pipe_r 16:23 pts/7 00:00:00 grep --color=auto sshd

- Check the openEuler /usr/local/bin environment

[root@euler bin]# ll /usr/local/bin/ # show the default contents on the euler host

total 30760

-rwxr-xr-x. 1 root root 64984 Jun 29 01:23 blur_image

-rwxr-xr-x 1 root root 2322376 Mar 18 2021 crashpad_handler

-rwxr-xr-x 1 root root 29108416 Mar 18 2021 qq

- danei environment: the customized /usr/local/bin/rht-vmctl command

[root@server1 ~]# vim /usr/local/bin/rht-vmctl

#!/bin/bash
IMG_DIR=/var/lib/libvirt/images
XML_DIR=/etc/libvirt/qemu

help(){
    echo "Usage: rht-vmctl SUBCOMMAND VM_NAME
SUBCOMMAND is one of:
    start: start the virtual machine
    stop : shut down the virtual machine
    reset: reset the virtual machine
VM_NAME is one of:
    red blue control node1 node2 node3 node4 node5"
}

reset_red(){
    sudo virsh destroy red &> /dev/null
    sudo virsh undefine red &> /dev/null
    if [ -f $IMG_DIR/red.qcow2 ];then
        sudo rm -rf $IMG_DIR/red.qcow2
    fi
    sudo qemu-img create -f qcow2 -b $IMG_DIR/.rhel9_red.orig -F qcow2 $IMG_DIR/red.qcow2 &> /dev/null
    sudo cp $IMG_DIR/.rhel9_red.xml $XML_DIR/red.xml
    sudo virsh define $XML_DIR/red.xml &>/dev/null
    echo "Define red vm OK."
    sudo virsh start red &> /dev/null
}

reset_blue(){
    sudo virsh destroy blue &> /dev/null
    sudo virsh undefine blue &> /dev/null
    if [ -f $IMG_DIR/blue.qcow2 ];then
        sudo rm -rf $IMG_DIR/blue.qcow2
    fi
    if [ -f $IMG_DIR/blue_vdb.qcow2 ];then
        sudo rm -rf $IMG_DIR/blue_vdb.qcow2
    fi
    if [ -f $IMG_DIR/blue_vdc.qcow2 ];then
        sudo rm -rf $IMG_DIR/blue_vdc.qcow2
    fi
    sudo qemu-img create -f qcow2 -b $IMG_DIR/.rhel9_blue.orig -F qcow2 $IMG_DIR/blue.qcow2 &> /dev/null
    sudo qemu-img create -f qcow2 -b $IMG_DIR/.rhel9_blue_vdb.orig -F qcow2 $IMG_DIR/blue_vdb.qcow2 &> /dev/null
    sudo qemu-img create -f qcow2 -b $IMG_DIR/.rhel9_blue_vdc.orig -F qcow2 $IMG_DIR/blue_vdc.qcow2 &> /dev/null
    sudo cp $IMG_DIR/.rhel9_blue.xml $XML_DIR/blue.xml
    sudo virsh define $XML_DIR/blue.xml &>/dev/null
    echo "Define blue vm OK."
    sudo virsh start blue &> /dev/null
}

reset_control(){
    sudo virsh destroy control &> /dev/null
    sudo virsh undefine control &> /dev/null
    if [ -f $IMG_DIR/control.qcow2 ];then
        sudo rm -rf $IMG_DIR/control.qcow2
    fi
    sudo qemu-img create -f qcow2 -b $IMG_DIR/.rhel9_control.orig -F qcow2 $IMG_DIR/control.qcow2 &> /dev/null
    sudo cp $IMG_DIR/.rhel9_control.xml $XML_DIR/control.xml
    sudo virsh define $XML_DIR/control.xml &>/dev/null
    echo "Define control vm OK."
    sudo virsh start control &> /dev/null
}

reset_node1(){
    sudo virsh destroy node1 &> /dev/null
    sudo virsh undefine node1 &> /dev/null
    if [ -f $IMG_DIR/node1.qcow2 ];then
        sudo rm -rf $IMG_DIR/node1.qcow2
    fi
    sudo qemu-img create -f qcow2 -b $IMG_DIR/.rhel9_node1.orig -F qcow2 $IMG_DIR/node1.qcow2 &> /dev/null
    sudo cp $IMG_DIR/.rhel9_node1.xml $XML_DIR/node1.xml
    sudo virsh define $XML_DIR/node1.xml &>/dev/null
    echo "Define node1 vm OK."
    sudo virsh start node1 &> /dev/null
}

reset_node2(){
    sudo virsh destroy node2 &> /dev/null
    sudo virsh undefine node2 &> /dev/null
    if [ -f $IMG_DIR/node2.qcow2 ];then
        sudo rm -rf $IMG_DIR/node2.qcow2
    fi
    if [ -f $IMG_DIR/node2_vdb.qcow2 ];then
        sudo rm -rf $IMG_DIR/node2_vdb.qcow2
    fi
    sudo qemu-img create -f qcow2 -b $IMG_DIR/.rhel9_node2.orig -F qcow2 $IMG_DIR/node2.qcow2 &> /dev/null
    sudo qemu-img create -f qcow2 -b $IMG_DIR/.rhel9_node2_vdb.orig -F qcow2 $IMG_DIR/node2_vdb.qcow2 &> /dev/null
    sudo qemu-img create -f qcow2 -b $IMG_DIR/.rhel9_node2_vdc.orig -F qcow2 $IMG_DIR/node2_vdc.qcow2 &> /dev/null
    sudo cp $IMG_DIR/.rhel9_node2.xml $XML_DIR/node2.xml
    sudo virsh define $XML_DIR/node2.xml &>/dev/null
    echo "Define node2 vm OK."
    sudo virsh start node2 &> /dev/null
}

reset_node3(){
    sudo virsh destroy node3 &> /dev/null
    sudo virsh undefine node3 &> /dev/null
    if [ -f $IMG_DIR/node3.qcow2 ];then
        sudo rm -rf $IMG_DIR/node3.qcow2
    fi
    sudo qemu-img create -f qcow2 -b $IMG_DIR/.rhel9_node3.orig -F qcow2 $IMG_DIR/node3.qcow2 &> /dev/null
    sudo cp $IMG_DIR/.rhel9_node3.xml $XML_DIR/node3.xml
    sudo virsh define $XML_DIR/node3.xml &>/dev/null
    echo "Define node3 vm OK."
    sudo virsh start node3 &> /dev/null
}

reset_node4(){
    sudo virsh destroy node4 &> /dev/null
    sudo virsh undefine node4 &> /dev/null
    if [ -f $IMG_DIR/node4.qcow2 ];then
        sudo rm -rf $IMG_DIR/node4.qcow2
    fi
    if [ -f $IMG_DIR/node4_vdb.qcow2 ];then
        sudo rm -rf $IMG_DIR/node4_vdb.qcow2
    fi
    sudo qemu-img create -f qcow2 -b $IMG_DIR/.rhel9_node4.orig -F qcow2 $IMG_DIR/node4.qcow2 &> /dev/null
    sudo qemu-img create -f qcow2 $IMG_DIR/node4_vdb.qcow2 2G &> /dev/null
    sudo cp $IMG_DIR/.rhel9_node4.xml $XML_DIR/node4.xml
    sudo virsh define $XML_DIR/node4.xml &>/dev/null
    echo "Define node4 vm OK."
    sudo virsh start node4 &> /dev/null
}

reset_node5(){
    sudo virsh destroy node5 &> /dev/null
    sudo virsh undefine node5 &> /dev/null
    if [ -f $IMG_DIR/node5.qcow2 ];then
        sudo rm -rf $IMG_DIR/node5.qcow2
    fi
    if [ -f $IMG_DIR/node5_vdb.qcow2 ];then
        sudo rm -rf $IMG_DIR/node5_vdb.qcow2
    fi
    sudo qemu-img create -f qcow2 -b $IMG_DIR/.rhel9_node5.orig -F qcow2 $IMG_DIR/node5.qcow2 &> /dev/null
    sudo qemu-img create -f qcow2 -b $IMG_DIR/.rhel9_node5_vdb.orig -F qcow2 $IMG_DIR/node5_vdb.qcow2 &> /dev/null
    sudo qemu-img create -f qcow2 $IMG_DIR/node5_vdc.qcow2 1G &> /dev/null
    sudo cp $IMG_DIR/.rhel9_node5.xml $XML_DIR/node5.xml
    sudo virsh define $XML_DIR/node5.xml &>/dev/null
    echo "Define node5 vm OK."
    sudo virsh start node5 &> /dev/null
}

startvm(){
    tmp=$(virsh list --all | awk -v vm=$1 '$2==vm {print "0"}')
    if [ "$tmp" == "0" ];then
        virsh start $1 &>/dev/null
        echo "Start $1 OK."
    else
        echo "Couldn't find $1 vm."
        echo "Please reset $1 vm first."
    fi
}

stopvm(){
    tmp=$(virsh list --all | awk -v vm=$1 '$2==vm {print "0"}')
    if [ "$tmp" == "0" ];then
        virsh destroy $1 &>/dev/null
        echo "Stop $1 OK."
    else
        echo "Couldn't find $1 vm."
        echo "Please reset $1 vm first."
    fi
}

case $1 in
start)
    case $2 in
    red) startvm red;;
    blue) startvm blue;;
    control) startvm control;;
    node1) startvm node1;;
    node2) startvm node2;;
    node3) startvm node3;;
    node4) startvm node4;;
    node5) startvm node5;;
    utility) startvm utility;;
    *) help;;
    esac;;
stop)
    case $2 in
    red) stopvm red;;
    blue) stopvm blue;;
    control) stopvm control;;
    node1) stopvm node1;;
    node2) stopvm node2;;
    node3) stopvm node3;;
    node4) stopvm node4;;
    node5) stopvm node5;;
    utility) stopvm utility;;
    *) help;;
    esac;;
reset)
    case $2 in
    red) reset_red;;
    blue) reset_blue;;
    control) reset_control;;
    node1) reset_node1;;
    node2) reset_node2;;
    node3) reset_node3;;
    node4) reset_node4;;
    node5) reset_node5;;
    utility) reset_utility;;   # reset_utility is not defined anywhere in this listing
    *) help;;
    esac;;
esac   # closing esac is missing from the captured listing; added so the script parses
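The per-VM reset functions above all follow the same pattern: destroy and undefine the domain, recreate each overlay disk from its `.rhel9_*.orig` backing image, then copy the XML, define, and start. A minimal dry-run sketch of that pattern is shown below. `reset_plan` is a hypothetical helper that is not part of the original script; it only prints the commands, and it does not cover the blank 2G/1G data disks that node4 and node5 create without a backing file.

```shell
#!/usr/bin/env bash
# Same directories as in rht-vmctl.
IMG_DIR=/var/lib/libvirt/images
XML_DIR=/etc/libvirt/qemu

# reset_plan VM [EXTRA_DISK...]   (hypothetical helper, not in the original script)
# Prints, without running them, the commands that rebuild VM and its overlay
# disks from the hidden .rhel9_<disk>.orig backing images.
reset_plan() {
    local vm="$1"; shift
    local disk
    echo "virsh destroy ${vm}"
    echo "virsh undefine ${vm}"
    # The system disk is named after the VM; extra disks are passed as arguments.
    for disk in "${vm}" "$@"; do
        echo "rm -f ${IMG_DIR}/${disk}.qcow2"
        echo "qemu-img create -f qcow2 -b ${IMG_DIR}/.rhel9_${disk}.orig -F qcow2 ${IMG_DIR}/${disk}.qcow2"
    done
    echo "cp ${IMG_DIR}/.rhel9_${vm}.xml ${XML_DIR}/${vm}.xml"
    echo "virsh define ${XML_DIR}/${vm}.xml"
    echo "virsh start ${vm}"
}
```

For example, `reset_plan blue blue_vdb blue_vdc` prints the same sequence that `reset_blue` runs, which makes it easy to review before piping the output to `sudo bash`.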

- danei environment: the customized /usr/local/bin/vm command

[root@lindows ~]# /usr/local/bin/vm # the customized environment command

vm {clone|remove|setip|completion} vm_name

[root@lindows ~]# more /usr/local/bin/vm # the customized vm script

#!/bin/bash
export LANG=C
. /etc/init.d/functions

CONF_DIR=/etc/libvirt/qemu
IMG_DIR=/var/lib/libvirt/images

function clone_vm(){
    local clone_IMG=${IMG_DIR}/${1};shift
    local clone_XML=${IMG_DIR}/${1};shift
    while ((${#} > 0));do
        if [ -e ${IMG_DIR}/${1}.img ];then
            echo_warning
            echo "vm ${1}.img is exists"
            return 1
        else
            sudo -u qemu qemu-img create -b ${clone_IMG} -F qcow2 -f qcow2 ${IMG_DIR}/${1}.img 20G >/dev/null
            sed -e "s,node_base,${1}," ${clone_XML} |sudo tee ${CONF_DIR}/${1}.xml >/dev/null
            sudo virsh define ${CONF_DIR}/${1}.xml &>/dev/null
            msg=$(sudo virsh start ${1})
            echo_success
            echo ${msg}
        fi
        shift
    done
}

function remove_vm(){
    if $(sudo virsh list --all --name|grep -Pq "^${1}$");then
        img=$(sudo virsh domblklist $1 2>/dev/null |grep -Po "/var/lib/libvirt/images/.*")
        sudo virsh destroy $1 &>/dev/null
        sudo virsh undefine $1 &>/dev/null
        sudo rm -f ${img}
        echo_success
        echo "vm ${1} delete"
    fi
}

function vm_setIP(){
    EXEC="sudo virsh qemu-agent-command $1"
    until $(${EXEC} '{"execute":"guest-ping"}' &>/dev/null);do sleep 1;done
    file_id=$(${EXEC} '{"execute":"guest-file-open", "arguments":{"path":"/etc/sysconfig/network-scripts/ifcfg-eth0","mode":"w"}}' |\
        python3 -c 'import json;print(json.loads(input())["return"])')
    body=$"# Generated by dracut initrd\nDEVICE=\"eth0\"\nONBOOT=\"yes\"\nNM_CONTROLLED=\"yes\"\nTYPE=\"Ethernet\"\nBOOTPROTO=\"static\"\nIPADDR=\"${2}\"\nPREFIX=24\nGATEWAY=\"${2%.*}.254\"\nDNS1=\"${2%.*}.254\""
    base64_body=$(echo -e "${body}"|base64 -w 0)
    ${EXEC} "{\"execute\":\"guest-file-write\", \"arguments\":{\"handle\":${file_id},\"buf-b64\":\"${base64_body}\"}}" &>/dev/null
    ${EXEC} "{\"execute\":\"guest-file-close\",\"arguments\":{\"handle\":${file_id}}}" &>/dev/null
    sudo virsh reboot ${1} &>/dev/null
}

function vm_completion(){
cat <<"EOF"
__start_vm()
{
    COMPREPLY=()
    local cur
    cur="${COMP_WORDS[COMP_CWORD]}"
    if [[ "${COMP_WORDS[0]}" == "vm" ]] && [[ ${#COMP_WORDS[@]} -eq 2 ]];then
        COMPREPLY=($(compgen -W "clone remove setip" ${cur}))
    fi
    if [[ "${COMP_WORDS[1]}" == "remove" ]] && [[ ${#COMP_WORDS[@]} -gt 2 ]];then
        COMPREPLY=($(compgen -W "$(sudo virsh list --name --all)" ${cur}))
    fi
    if [[ "${COMP_WORDS[1]}" == "setip" ]] && [[ ${#COMP_WORDS[@]} -eq 3 ]];then
        COMPREPLY=($(compgen -W "$(sudo virsh list --name)" ${cur}))
    fi
}
if [[ $(type -t compopt) = "builtin" ]]; then
    complete -o default -F __start_vm vm
else
    complete -o default -o nospace -F __start_vm vm
fi
EOF
}

# main
case "$1" in
clone)
    shift
    _img=".Rocky.qcow2"
    _xml=".node_base.xml"
    clone_vm ${_img} ${_xml} ${@}
    ;;
remove)
    while ((${#} > 1));do
        shift
        remove_vm ${1}
    done
    ;;
setip)
    if (( ${#} == 3 )) && $(sudo virsh list --all --name|grep -Pq "^${2}$");then
        domid=$(sudo virsh domid $2)
        if [[ ${domid} != "-" ]] && $(grep -Pq "^((25[0-5]|2[0-4]\d|1?\d?\d)\.){3}(25[0-5]|2[0-4]\d|1?\d?\d)$" <<<"${3}");then
            vm_setIP "${2}" "$3"
        fi
    else
        echo "${0##*/} setip vm_name ip.xx.xx.xx"
    fi
    ;;
completion)
    vm_completion
    ;;
*)
    echo "${0##*/} {clone|remove|setip|completion} vm_name"
    ;;
esac
exit $?
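Two details of the `setip` path are easy to miss: the address is validated with a dotted-quad regular expression before anything is written to the guest, and the gateway and DNS server are derived from the address by replacing its last octet with 254 (`${2%.*}.254`). The sketch below isolates both steps. The function names `is_ipv4` and `gateway_for` are illustrative assumptions, and the check uses bash's built-in `[[ =~ ]]` instead of the `grep -P` call in the script.

```shell
#!/usr/bin/env bash
# is_ipv4 ADDR  (hypothetical name)
# Succeeds when ADDR looks like a dotted-quad IPv4 address with octets 0-255,
# using the same octet pattern as the vm script.
is_ipv4() {
    local octet='(25[0-5]|2[0-4][0-9]|1?[0-9]?[0-9])'
    local re="^(${octet}\.){3}${octet}\$"
    [[ "$1" =~ $re ]]
}

# gateway_for ADDR  (hypothetical name)
# Strips the last octet and appends 254, as vm_setIP does for GATEWAY and DNS1.
gateway_for() {
    echo "${1%.*}.254"
}
```

With these, `gateway_for 192.168.88.10` yields `192.168.88.254`, which matches the address of the `private1` network in this environment. The fixed `PREFIX=24` in the script assumes every lab network is a /24.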

- danei environment: network configuration private1.xml

[root@lindows ~]# more /etc/libvirt/qemu/networks/private1.xml # show the private1.xml network configuration

<!--
WARNING: THIS IS AN AUTO-GENERATED FILE. CHANGES TO IT ARE LIKELY TO BE
OVERWRITTEN AND LOST. Changes to this xml configuration should be made using:
  virsh net-edit private1
or other application using the libvirt API.
-->

<network>
  <name>private1</name>
  <uuid>668f46ac-4153-4c0c-8bab-87a0fbd6a930</uuid>
  <forward mode='nat'/>
  <bridge name='private1' stp='on' delay='0'/>
  <mac address='52:54:00:1f:13:8c'/>
  <domain name='localhost' localOnly='no'/>
  <ip address='192.168.88.254' netmask='255.255.255.0'>
    <dhcp>
      <range start='192.168.88.128' end='192.168.88.200'/>
    </dhcp>
  </ip>
</network>

- danei environment: QEMU network configuration private2.xml

[root@lindows ~]# more /etc/libvirt/qemu/networks/private2.xml # show the private2.xml network configuration

<!--
WARNING: THIS IS AN AUTO-GENERATED FILE. CHANGES TO IT ARE LIKELY TO BE
OVERWRITTEN AND LOST. Changes to this xml configuration should be made using:
  virsh net-edit private2
or other application using the libvirt API.
-->

<network>
  <name>private2</name>
  <uuid>3bbe6f7c-f07a-4fe5-a062-f23954864614</uuid>
  <bridge name='private2' stp='on' delay='0'/>
  <mac address='52:54:00:c9:a6:0a'/>
  <ip address='192.168.99.254' netmask='255.255.255.0'>
    <dhcp>
      <range start='192.168.99.128' end='192.168.99.200'/>
    </dhcp>
  </ip>
</network>


pwd:a

| No. | Item | Description | Notes |

| 1 | - |

yum remove qemu-kvm

Question Unable to connect to libvirt qemu:///system.

internal error: Cannot find suitable emulator for x86_64

Libvirt URI is: qemu:///system

Traceback (most recent call last):

File "/usr/share/virt-manager/virtManager/connection.py", line 1120, in _open_thread

self._populate_initial_state()

File "/usr/share/virt-manager/virtManager/connection.py", line 1074, in _populate_initial_state

logging.debug("conn version=%s", self._backend.conn_version())

File "/usr/share/virt-manager/virtinst/connection.py", line 325, in conn_version

self._conn_version = self._libvirtconn.getVersion()

File "/usr/lib64/python2.7/site-packages/libvirt.py", line 3991, in getVersion

if ret == -1: raise libvirtError ('virConnectGetVersion() failed', conn=self)

libvirtError: internal error: Cannot find suitable emulator for x86_64

Answer: Error polling connection 'qemu:///system': internal error Cannot find suitable emulator for x86_64, http://blog.chinaunix.net/uid-24807808-id-3465966.html

This error appeared today while installing virt-manager:

[root@centos7 ~]# yum -y install qemu*

Deploying the WebVirtMgr KVM virtualization management platform, http://wiki.cns*****.com/pages/viewpage.action?pageId=34843978

Deploying the WebVirtMgr KVM virtualization management platform on CentOS 7.2, https://www.cnblogs.com/nulige/p/9236191.html |

|

| 2 |

Problem 2: [root@euler images]# vm clone web2 |

Solution 2: none |

|

| 3 | Using the vm command |

[root@server1 ~]# vm remove lvs{1,2} web{1,2} # remove the lvs and web machines in one batch

[root@server1 ~]# vm clone ceph{1..3} client1 # create ceph1, ceph2, ceph3 and client1 |

|


KVM command-line installation, https://www.cnblogs.com/rm580036/p/12882779.html

Common virsh maintenance commands, https://www.cnblogs.com/cyleon/p/9816989.html

1. Environment

| Hostname | External IP | Internal IP |
| --- | --- | --- |
| c7-81 | 10.0.0.81 | 172.16.1.81 |

2. Check whether virtualization is supported

[root@ c7-81 ~]# dmesg | grep kvm

[root@ c7-81 ~]# egrep -o '(vmx|svm)' /proc/cpuinfo
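The `egrep` check above prints one `vmx` (Intel VT-x) or `svm` (AMD-V) per logical CPU and prints nothing when the extension is absent or disabled in the BIOS, which is the usual cause of the "Domain requires KVM, but it is unavailable" error. A small sketch that turns this into a single answer follows. `virt_extension` is a hypothetical helper name, and the optional file argument exists only so the function can be tried against a saved copy of `/proc/cpuinfo`.

```shell
#!/usr/bin/env bash
# virt_extension [CPUINFO_FILE]   (hypothetical helper)
# Prints "vmx", "svm", or "none" depending on the CPU flags found in the
# given cpuinfo-style file (defaults to /proc/cpuinfo).
virt_extension() {
    local cpuinfo="${1:-/proc/cpuinfo}"
    if grep -qw 'vmx' "$cpuinfo"; then
        echo "vmx"    # Intel VT-x
    elif grep -qw 'svm' "$cpuinfo"; then
        echo "svm"    # AMD-V
    else
        echo "none"   # no hardware virtualization flag visible to this OS
    fi
}
```

If this prints `none` on a machine whose CPU should support virtualization, the BIOS setting is the first thing to check; if it prints `vmx` or `svm` but `lsmod | grep kvm` shows nothing, the kvm modules are not loaded.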

3. Install the KVM userspace components

[root@ c7-81 ~]#

3.1 Start libvirt

[root@ c7-81 ~]# systemctl enable libvirtd

[root@ c7-81 ~]# systemctl start libvirtd

4. Upload the image

[root@ c7-81 ~]# cd /opt/

[root@ c7-81 opt]# ll

total 940032

-rw-r--r-- 1 root root 962592768 May 13 15:16 CentOS-7-x86_64-Minimal-1810.iso

[root@ c7-81 opt]# dd if=/dev/cdrom of=/opt/CentOS-7-x86_64-Minimal-1810.iso

4.1 Create the disk

[root@ c7-81 opt]# qemu-img create -f qcow2 /opt/c73.qcow2 6G

[root@ c7-81 opt]# ll

total 4554948

-rw-r--r-- 1 root root 197120 May 13 15:20 c73.qcow2

-rw-r--r-- 1 root root 4664066048 May 13 15:18 CentOS-7-x86_64-Minimal-1810.iso

-f specifies the disk image format

/opt/ is the storage path

6G is the image size

raw: raw disk image, does not support snapshots

qcow2: supports snapshots

The qemu-img package is installed as a dependency of qemu-kvm-tools

5. Install the virtual machine

[root@ c7-81 opt]# yum -y install virt-install

[root@ c7-81 opt]# virt-install --virt-type=kvm --name=c73 --vcpus=1 -r 1024 --cdrom=/opt/CentOS-7-x86_64-Minimal-1810.iso --network network=default --graphics vnc,listen=0.0.0.0 --noautoconsole --os-type=linux --os-variant=rhel7 --disk path=/opt/c73.qcow2,size=6,format=qcow2

[root@ c7-81 opt]# virsh list --all

Id Name State

----------------------------------------------------

1 c73 running

[root@ c7-81 opt]# netstat -lntup | grep 5900

tcp 0 0 0.0.0.0:5900 0.0.0.0:* LISTEN 2733/qemu-kvm

5.1 Start the installation

If the VM is no longer listed as running after the installer reboots it, check from Xshell:

[root@ c7-81 opt]# virsh list --all

Id Name State

----------------------------------------------------

- c73 shut off

Then start the machine:

[root@ c7-81 opt]# virsh start c73

Domain c73 started

[root@ c7-81 opt]# virsh list --all

Id Name State

----------------------------------------------------

2 c73 running

Log in to the virtual machine.

6、查看物理机网卡

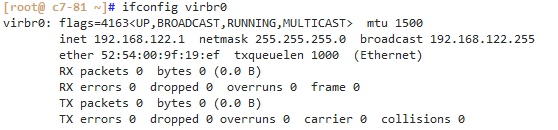

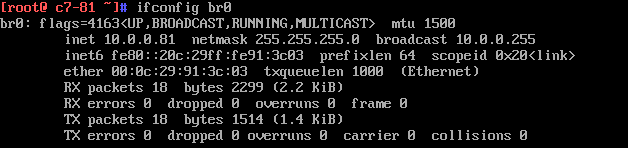

[root@ c7-81 ~]# ifconfig virbr0

7、配置桥接br0

[root@ c7-81 ~]# yum -y install bridge-utils

7.1、配置临时的

[root@ c7-81 ~]# brctl addbr br0

[root@ c7-81 ~]# brctl show

bridge name bridge id STP enabled interfaces

br0 8000.000000000000 no

virbr0 8000.5254009f19ef yes virbr0-nic

vnet0

敲完这个命令之后xshell会断开

[root@ c7-81 ~]# brctl addif br0 ens33

这个不会断

[root@ c7-82 ~]# virsh iface-bridge ens33 br0

[root@ c7-81 ~]# ip addr del dev ens33 10.0.0.81/24 //删除ens33上的ip地址

[root@ c7-81 ~]# ifconfig br0 10.0.0.81/24 up //配置br0的ip地址并启动设备

[root@ c7-81 ~]# route add default gw 10.0.0.254 //重新加入默认网关

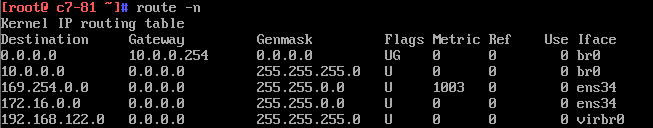

[root@ c7-81 ~]# route -n

Because this br0 is temporary, it is gone after a reboot.

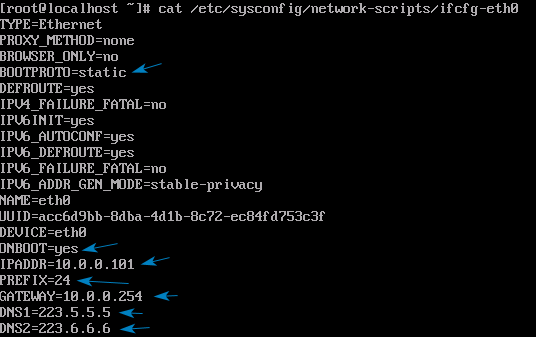

7.2、永久配置

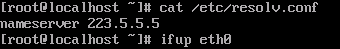

[root@ c7-81 ~]# cp /etc/sysconfig/network-scripts/ifcfg-ens33 . [root@ c7-81 ~]# vim /etc/sysconfig/network-scripts/ifcfg-ens33 [root@ c7-81 ~]# cat /etc/sysconfig/network-scripts/ifcfg-ens33 DEVICE=ens33 TYPE=Ethernet ONBOOT=yes BRIDGE=br0 NM_CONTROLLED=no [root@ c7-81 ~]# vim /etc/sysconfig/network-scripts/ifcfg-br0 [root@ c7-81 ~]# cat /etc/sysconfig/network-scripts/ifcfg-br0 DEVICE=br0 TYPE=Bridge ONBOOT=yes BOOTPROTO=static IPADDR=10.0.0.81 NETMASK=255.255.255.0 GATEWAY=10.0.0.254 NM_CONTROLLED=no [root@ c7-81 ~]# systemctl restart network
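The two ifcfg files in the permanent configuration differ only in the device names and addresses, so they can be generated instead of edited by hand. The following is a minimal sketch under that assumption. `write_bridge_ifcfg` is a hypothetical helper, and the target directory is a parameter so the result can be inspected in a scratch directory before copying it to `/etc/sysconfig/network-scripts`.

```shell
#!/usr/bin/env bash
# write_bridge_ifcfg DIR NIC BRIDGE IPADDR NETMASK GATEWAY   (hypothetical helper)
# Writes ifcfg-<NIC> (enslaved to the bridge, no IP of its own) and
# ifcfg-<BRIDGE> (carries the static IP) into DIR.
write_bridge_ifcfg() {
    local dir="$1" nic="$2" bridge="$3" ip="$4" mask="$5" gw="$6"

    # Physical NIC: only points at the bridge.
    cat > "${dir}/ifcfg-${nic}" <<EOF
DEVICE=${nic}
TYPE=Ethernet
ONBOOT=yes
BRIDGE=${bridge}
NM_CONTROLLED=no
EOF

    # Bridge: takes over the host's IP configuration.
    cat > "${dir}/ifcfg-${bridge}" <<EOF
DEVICE=${bridge}
TYPE=Bridge
ONBOOT=yes
BOOTPROTO=static
IPADDR=${ip}
NETMASK=${mask}
GATEWAY=${gw}
NM_CONTROLLED=no
EOF
}
```

Running `write_bridge_ifcfg /tmp/ifcfg ens33 br0 10.0.0.81 255.255.255.0 10.0.0.254` (after `mkdir -p /tmp/ifcfg`) reproduces the two files shown above. Keeping a backup of the original `ifcfg-ens33`, as the notes do with `cp`, is still advisable.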

8、编辑kvm虚拟化的机器

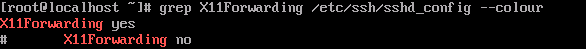

8.1、必须写,不然xshell连不上

8.2、查看sshd是否开启X11转发

8.3、在物理机安装xorg-x11

[root@ c7-81 ~]# yum install -y xorg-x11-font-utils.x86_64 xorg-x11-server-utils.x86_64 xorg-x11-utils.x86_64 xorg-x11-xauth.x86_64 xorg-x11-xinit.x86_64 xorg-x11-drv-ati-firmware

8.4、物理机安装libvirt

libvirt是管理虚拟机的APL库,不仅支持KVM虚拟机,也可以管理Xen等方案下的虚拟机。

[root@ c7-81 ~]# yum -y install virt-manager libvirt libvirt-Python python-virtinst libvirt-client virt-viewer qemu-kvm mesa-libglapi

因为我的主机是服务器,没有图形化界面,想要用virt-manager图形化安装虚拟机,还需要安装X-window。 [root@ c7-81 ~]# yum -y install libXdmcp libXmu libxkbfile xkeyboard-config xorg-x11-xauth xorg-x11-xkb-utils

开启libvirt服务 [root@ c7-81 ~]# systemctl restart libvirtd.service [root@ c7-81 ~]# systemctl enable libvirtd.service

9、配置xshell

9.1、先打开 软件,断开这个会话,重新连上,输入命令:virt-manager

软件,断开这个会话,重新连上,输入命令:virt-manager

[root@ c7-81 ~]# virt-manager

如果出现乱码,请安装以下包

yum -y install dejavu-sans-mono-fonts

9.2、设置为桥接模式

I. Common virsh commands

Some common command options

II. Adding a network interface to a VM

The full path of a packet from a VM to the physical host is: VM --> QEMU virtual NIC --> virtualization layer --> kernel bridge --> physical NIC

By default, the NIC of a KVM guest is emulated by QEMU in Linux user space and presented to the VM.

Full virtualization: the guest operating system runs in the VM without any modification. The guest never sees the real hardware, and all interaction with devices is emulated purely in software.

Paravirtualization: the guest operating system is modified so that it is aware of running in a VM. The difference between a fully virtualized and a paravirtualized NIC is therefore whether the guest needs modification to run on the host.

Paravirtualization uses virtio. Because the virtio drivers change the guest operating system, the guest can talk to the virtualization layer directly, which greatly improves VM performance.

[root@kvm-server ~]# virsh domiflist vm-node1

Interface Type Source Model MAC

-------------------------------------------------------

vnet0 bridge br0 virtio 52:54:00:40:75:05

[root@kvm-server ~]# virsh attach-interface vm-node1 --type bridge --source br0 --model virtio # temporary: the new NIC is lost after the VM is shut down and started again

Interface attached successfully

[root@kvm-server ~]# virsh domiflist vm-node1

Interface Type Source Model MAC

-------------------------------------------------------

vnet0 bridge br0 virtio 52:54:00:40:75:05

vnet1 bridge br0 virtio 52:54:00:5b:6c:cc

[root@kvm-server ~]# virsh edit vm-node1 # permanent method 1: add the following to the domain XML; permanent method 2: use the virt-manager GUI instead

<interface type='bridge'>
  <mac address='52:54:00:11:90:7c'/>
  <source bridge='br0'/>
  <target dev='vnet1'/>
  <model type='virtio'/>
  <alias name='net1'/>
  <address type='pci' domain='0x0000' bus='0x00' slot='0x08' function='0x0'/>
</interface>

[root@kvm-server ~]# virsh domiflist vm-node1 # look up the MAC address of the VM's NIC

Interface Type Source Model MAC

-------------------------------------------------------

vnet0 bridge br0 virtio 52:54:00:40:75:05

vnet1 bridge br0 virtio 52:54:00:84:23:3d

[root@kvm-server ~]# virsh detach-interface vm-node1 --type bridge --mac 52:54:00:84:23:3d --current # remove the NIC by MAC address; this takes effect immediately, but to make it permanent the domain XML must also be changed with virsh edit

Interface detached successfully

[root@kvm-server ~]# virsh domiflist vm-node1

Interface Type Source Model MAC

-------------------------------------------------------

vnet0 bridge br0 virtio 52:54:00:40:75:05

III. Adding a disk to a VM

By storage method, KVM disk images fall into two kinds: images stored as files on a file system, and images that use a raw block device directly. A raw device can be a whole disk or an LVM logical volume. File-based images come in many formats, such as raw, cloop, cow, qcow, qcow2, vmdk and vdi; raw and qcow2 are the most commonly used.

raw: a plain binary image whose file size equals the full allocated disk size. raw supports sparse files, meaning the file system records the unwritten (all-zero) regions in metadata without actually consuming disk space for them.

qcow2: the second-generation QEMU copy-on-write format. It supports many features, such as snapshots, thin provisioning even on file systems without sparse-file support, AES encryption, zlib compression and backing files.

[root@kvm-server ~]# qemu-img create -f raw /Data/vm-node1-10G.raw 10G # create a 10G disk in raw format

Formatting '/Data/vm-node1-10G.raw', fmt=raw size=10737418240

[root@kvm-server ~]# qemu-img info /Data/vm-node1-10G.raw

image: /Data/vm-node1-10G.raw

file format: raw

virtual size: 10G (10737418240 bytes)

disk size: 0

[root@kvm-server ~]# virsh attach-disk vm-node1 /Data/vm-node1-10G.raw vdb --cache none # temporary: lost after the VM is shut down and started again

Disk attached successfully

[root@kvm-server ~]# virsh dumpxml vm-node1 # use dumpxml to find the section below

[root@kvm-server ~]# virsh edit vm-node1 # with virsh edit, add the section found above right after the vda disk

<disk type='file' device='disk'>
  <driver name='qemu' type='raw' cache='none'/>
  <source file='/Data/vm-node1-10G.raw'/>
  <backingStore/>
  <target dev='vdb' bus='virtio'/>
  <alias name='virtio-disk1'/>
  <address type='pci' domain='0x0000' bus='0x00' slot='0x09' function='0x0'/>
</disk>

[root@vm-node1 ~]# fdisk -l # the data disk is attached and can now be partitioned, formatted and mounted

Disk /dev/vda: 42.9 GB, 42949672960 bytes, 83886080 sectors

Units = sectors of 1 * 512 = 512 bytes

Sector size (logical/physical): 512 bytes / 512 bytes

I/O size (minimum/optimal): 512 bytes / 512 bytes

Disk label type: dos

Disk identifier: 0x00009df9

Device Boot Start End Blocks Id System

/dev/vda1 * 2048 83886079 41942016 83 Linux

Disk /dev/vdb: 10.7 GB, 10737418240 bytes, 20971520 sectors

Units = sectors of 1 * 512 = 512 bytes

Sector size (logical/physical): 512 bytes / 512 bytes

I/O size (minimum/optimal): 512 bytes / 512 bytes

Converting between disk image formats:

[root@kvm-server ~]# qemu-img create -f raw test.raw 5G

Formatting 'test.raw', fmt=raw size=5368709120

[root@kvm-server ~]# qemu-img convert -p -f raw -O qcow2 test.raw test.qcow2 # -p shows progress, -f is the source image format, -O is the output image format, followed by the input file and the output file

(100.00/100%)

[root@kvm-server ~]# qemu-img info test.qcow2

image: test.qcow2

file format: qcow2

virtual size: 5.0G (5368709120 bytes)

disk size: 196K

cluster_size: 65536

Format specific information:

compat: 1.1

lazy refcounts: false

[root@kvm-server ~]# ll -sh test.*

196K -rw-r--r-- 1 root root 193K Oct 19 16:19 test.qcow2

0 -rw-r--r-- 1 root root 5.0G Oct 19 16:11 test.raw

IV. Cloning a VM

Cloning a VM with virt-clone:

[root@kvm-server ~]# virsh shutdown CentOS-7.2-x86_64 # the VM must be shut down before it can be cloned

Domain CentOS-7.2-x86_64 is being shutdown

[root@kvm-server ~]# virsh list --all

Id Name State

----------------------------------------------------

- CentOS-7.2-x86_64 shut off

- vm-node1 shut off

[root@kvm-server ~]# virt-clone -o CentOS-7.2-x86_64 -n vm-node2 -f /opt/vm-node2.raw # -o: name of the VM to clone, -n: name of the new VM, -f: location of the new disk image

WARNING The requested volume capacity will exceed the available pool space when the volume is fully allocated. (40960 M requested capacity > 36403 M available)

Allocating 'vm-node2.raw' | 40 GB 00:01:03

Clone 'vm-node2' created successfully.

[root@kvm-server ~]# virsh list --all # the clone is shut off after creation

Id Name State

----------------------------------------------------

- CentOS-7.2-x86_64 shut off

- vm-node1 shut off

- vm-node2 shut off

# Create a disk snapshot of the VM node-192.168.5.95-kubeadmin-master

# virsh snapshot-create-as --domain node-192.168.5.95-kubeadmin-master --name kubeadmin-sys-init --description 'preparation complete'

Domain snapshot kubeadmin-sys-init created

# List the disk snapshots

# virsh snapshot-list node-192.168.5.95-kubeadmin-master

Name Creation Time State

------------------------------------------------------------

kubeadmin-sys-init 2020-07-31 22:05:39 +0800 running

# Revert to a disk snapshot

# virsh snapshot-revert node-192.168.5.95-kubeadmin-master kubeadmin-sys-init

# Delete a disk snapshot

# virsh snapshot-delete node-192.168.5.95-kubeadmin-master kubeadmin-sys-init

V. Renaming a VM

[root@kvm-server ~]# virsh shutdown CentOS-7.2-x86_64 # shut the VM down first, then rename it

[root@kvm-server ~]# cp /etc/libvirt/qemu/vm-node2.xml /etc/libvirt/qemu/vm-test.xml # copy the XML file to the new name, e.g. vm-test

[root@kvm-server ~]# grep '<name>' /etc/libvirt/qemu/vm-test.xml # change the name element in vm-test.xml to vm-test

<name>vm-test</name>

[root@kvm-server ~]# virsh undefine vm-node2 # remove the old VM definition

Domain vm-node2 has been undefined

[root@kvm-server ~]# virsh define /etc/libvirt/qemu/vm-test.xml # define the new VM

Domain vm-test defined from /etc/libvirt/qemu/vm-test.xml

[root@kvm-server ~]# virsh list --all # the rename is complete

Id Name State

----------------------------------------------------

- CentOS-7.2-x86_64 shut off

- vm-node1 shut off

- vm-test shut off

VI. Changing the CPU and memory of a KVM VM

The XML configuration must be changed first.

# Show the default CPU and memory size

virsh edit node-192.168.5.90-test

<domain type='kvm'>
  <name>node-192.168.5.90-test</name>
  <uuid>de4fe850-2fa7-49be-b785-77642bc95713</uuid>
  <memory unit='KiB'>4194304</memory>
  <currentMemory unit='KiB'>4194304</currentMemory>
  <vcpu placement='static'>2</vcpu>

# Modified configuration

<domain type='kvm'>
  <name>node-192.168.5.90-test</name>
  <uuid>de4fe850-2fa7-49be-b785-77642bc95713</uuid>
  <memory unit='KiB'>8192000</memory>
  <currentMemory unit='KiB'>4194304</currentMemory>
  <vcpu placement='auto' current="1">16</vcpu>

# Shut the VM down and start it again. Do not just reboot it: a reboot does not apply the new configuration.

virsh shutdown node-192.168.5.90-test

virsh start node-192.168.5.90-test

# Change the vCPU count at runtime. vCPUs can only be hot-added, not removed.

# Show the current number of logical CPUs

[root@192-168-5-90 ~]# cat /proc/cpuinfo| grep "processor"| wc -l

1

# Increase to 4 at runtime

virsh setvcpus node-192.168.5.90-test 4 --live

# Show the CPU count again

[root@192-168-5-90 ~]# cat /proc/cpuinfo| grep "processor"| wc -l

4

# Change the memory at runtime (it can be both increased and decreased)

# virsh qemu-monitor-command node-192.168.5.90-test --hmp --cmd info balloon # show the current memory size

balloon: actual=4096

# virsh qemu-monitor-command node-192.168.5.90-test --hmp --cmd balloon 8190 # set the current memory to about 8G

# virsh qemu-monitor-command node-192.168.5.90-test --hmp --cmd info balloon # show the current memory size

balloon: actual=8000

# virsh qemu-monitor-command node-192.168.5.90-test --hmp --cmd balloon 6000 # set the current memory to about 6G

# virsh qemu-monitor-command node-192.168.5.90-test --hmp --cmd info balloon # show the current memory size

balloon: actual=6000
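The `<memory>` and `<currentMemory>` elements are given in KiB while the balloon commands work in MiB, so a quick conversion helps when reading the numbers above. The sketch below uses two hypothetical helper names, `gib_to_kib` and `kib_to_mib`. It shows that 4194304 KiB is exactly 4 GiB, whereas the modified maximum of 8192000 KiB is 8000 MiB rather than a full 8 GiB (8388608 KiB), which would be consistent with the balloon reporting `actual=8000` after 8190 was requested.

```shell
#!/usr/bin/env bash
# gib_to_kib GIB   (hypothetical helper): value to put in <memory unit='KiB'>.
gib_to_kib() {
    echo $(( $1 * 1024 * 1024 ))
}

# kib_to_mib KIB   (hypothetical helper): value comparable to "info balloon" output.
kib_to_mib() {
    echo $(( $1 / 1024 ))
}
```

For example, `gib_to_kib 8` gives the value to use when the intent is a true 8 GiB maximum.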

CentOS下KVM配置NAT网络(网络地址转换模式)

https://www.cnblogs.com/EasonJim/p/9751729.html

https://blog.csdn.net/jiuzuidongpo/article/details/44677565

https://libvirt.org/formatnetwork.html(Linux KVM官方文档)

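Internet access for a KVM guest through NAT:

# List the currently active networks
virsh net-list

# Show the detailed configuration of the default network
virsh net-dumpxml default

A guest uses NAT when the interface element in its XML configuration looks like the following. The key settings are type='network' on the interface element and network='default' on the source element: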

<interface type='network'>

<mac address='52:54:00:c7:18:b5'/>

<source network='default'/>

<model type='virtio'/>

<address type='pci' domain='0x0000' bus='0x00' slot='0x03' function='0x0'/>

</interface>

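# Edit the configuration of the default network
virsh net-edit default

# Remove the definition of the default network so that it can be defined again
virsh net-undefine default

# Create a new default.xml and customize its content; it can bind a given MAC address to a given IP address and set the address range to hand out.
touch default.xml
virsh net-define default.xml    # run this once default.xml has been filled in

# For example, with the content below; the name attribute of each host element is the guest's name.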

<?xml version="1.0" encoding="utf-8"?>

<network>

<name>default</name>

<uuid>dc69ff61-6445-4376-b940-8714a3922bf7</uuid>

<forward mode="nat"/>

<bridge name="virbr0" stp="on" delay="0"/>

<mac address="52:54:00:81:14:18"/>

<ip address="192.168.122.1" netmask="255.255.255.0">

<dhcp>

<range start="192.168.122.2" end="192.168.122.254"/>

<host mac="00:25:90:eb:4b:bb" name="guest1" ip="192.168.5.13"/>

<host mac="00:25:90:eb:34:2c" name="guest2" ip="192.168.7.206"/>

<host mac="00:25:90:eb:e5:de" name="guest3" ip="192.168.7.207"/>

<host mac="00:25:90:eb:7e:11" name="guest4" ip="192.168.7.208"/>

<host mac="00:25:90:eb:b2:11" name="guest5" ip="192.168.7.209"/>

</dhcp>

</ip>

</network>

# Start the network so that the configuration takes effect
virsh net-start default
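The static host entries in the example use addresses such as 192.168.5.13 and 192.168.7.206, while the network itself is 192.168.122.1 with netmask 255.255.255.0. A fixed DHCP address is normally expected to lie inside the network's own subnet, so it is worth checking before defining the network. The sketch below does that check with plain bash arithmetic; `ip_to_int` and `in_subnet` are hypothetical helper names, and 192.168.122.50 is an address made up for comparison. Whether libvirt or dnsmasq rejects or silently ignores out-of-subnet entries was not verified here.

```shell
#!/usr/bin/env bash
# Hypothetical helpers: check whether an IPv4 address lies in a subnet.

ip_to_int() {    # dotted quad -> 32-bit integer
    local IFS=.
    set -- $1    # split the address into its four octets
    echo $(( ($1 << 24) + ($2 << 16) + ($3 << 8) + $4 ))
}

in_subnet() {    # usage: in_subnet IP NETWORK_ADDRESS NETMASK
    local ip net mask
    ip=$(ip_to_int "$1")
    net=$(ip_to_int "$2")
    mask=$(ip_to_int "$3")
    [ $(( ip & mask )) -eq $(( net & mask )) ]
}

# Two addresses from the example XML plus one made-up in-range address.
for addr in 192.168.5.13 192.168.7.206 192.168.122.50; do
    if in_subnet "$addr" 192.168.122.1 255.255.255.0; then
        echo "$addr: inside 192.168.122.0/24"
    else
        echo "$addr: outside 192.168.122.0/24"
    fi
done
```

With the values from the example, the two host addresses are reported as outside the subnet, which suggests the host entries or the ip element need to be adjusted to match each other.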

- danei bug

https://uc.tmooc.cn/userCenter/toUserCenterPage

HTTP Status 500 - An exception occurred processing JSP page /WEB-INF/tmoocfront/usercenter/jsp/../usercenterheader.jsp at line 4

type Exception report

message An exception occurred processing JSP page /WEB-INF/tmoocfront/usercenter/jsp/../usercenterheader.jsp at line 4

description The server encountered an internal error that prevented it from fulfilling this request.

exception

org.apache.jasper.JasperException: An exception occurred processing JSP page /WEB-INF/tmoocfront/usercenter/jsp/../usercenterheader.jsp at line 4

1: <%@ page language="java" pageEncoding="UTF-8" import="com.tarena.tos.core.common.UserUtil,com.tarena.tos.core.course.api.model.TmoocCourseBo" %>

2:

3: <%

4: String url =UserUtil.getUserByUUID().getPictureUrl();

5: if(url==null){

6: url="https://cdn.tmooc.cn/tmooc-web/css/img/user-head.jpg";

7: }

Stacktrace:

org.apache.jasper.servlet.JspServletWrapper.handleJspException(JspServletWrapper.java:574)

org.apache.jasper.servlet.JspServletWrapper.service(JspServletWrapper.java:476)

org.apache.jasper.servlet.JspServlet.serviceJspFile(JspServlet.java:396)

org.apache.jasper.servlet.JspServlet.service(JspServlet.java:340)

javax.servlet.http.HttpServlet.service(HttpServlet.java:729)

org.apache.tomcat.websocket.server.WsFilter.doFilter(WsFilter.java:52)

org.apache.logging.log4j.web.Log4jServletFilter.doFilter(Log4jServletFilter.java:64)

org.springframework.web.servlet.view.InternalResourceView.renderMergedOutputModel(InternalResourceView.java:168)

org.springframework.web.servlet.view.AbstractView.render(AbstractView.java:303)

org.springframework.web.servlet.DispatcherServlet.render(DispatcherServlet.java:1286)

org.springframework.web.servlet.DispatcherServlet.processDispatchResult(DispatcherServlet.java:1041)

org.springframework.web.servlet.DispatcherServlet.doDispatch(DispatcherServlet.java:984)

org.springframework.web.servlet.DispatcherServlet.doService(DispatcherServlet.java:901)

org.springframework.web.servlet.FrameworkServlet.processRequest(FrameworkServlet.java:970)

org.springframework.web.servlet.FrameworkServlet.doGet(FrameworkServlet.java:861)

javax.servlet.http.HttpServlet.service(HttpServlet.java:622)

org.springframework.web.servlet.FrameworkServlet.service(FrameworkServlet.java:846)

javax.servlet.http.HttpServlet.service(HttpServlet.java:729)

org.apache.tomcat.websocket.server.WsFilter.doFilter(WsFilter.java:52)

org.apache.logging.log4j.web.Log4jServletFilter.doFilter(Log4jServletFilter.java:64)

org.springframework.web.servlet.view.InternalResourceView.renderMergedOutputModel(InternalResourceView.java:168)

org.springframework.web.servlet.view.AbstractView.render(AbstractView.java:303)

org.springframework.web.servlet.DispatcherServlet.render(DispatcherServlet.java:1286)

org.springframework.web.servlet.DispatcherServlet.processDispatchResult(DispatcherServlet.java:1041)

org.springframework.web.servlet.DispatcherServlet.doDispatch(DispatcherServlet.java:984)

org.springframework.web.servlet.DispatcherServlet.doService(DispatcherServlet.java:901)

org.springframework.web.servlet.FrameworkServlet.processRequest(FrameworkServlet.java:970)

org.springframework.web.servlet.FrameworkServlet.doGet(FrameworkServlet.java:861)

javax.servlet.http.HttpServlet.service(HttpServlet.java:622)

org.springframework.web.servlet.FrameworkServlet.service(FrameworkServlet.java:846)

javax.servlet.http.HttpServlet.service(HttpServlet.java:729)

org.springframework.web.filter.HiddenHttpMethodFilter.doFilterInternal(HiddenHttpMethodFilter.java:81)

org.springframework.web.filter.OncePerRequestFilter.doFilter(OncePerRequestFilter.java:107)

org.apache.tomcat.websocket.server.WsFilter.doFilter(WsFilter.java:52)

com.tarena.tmooc.front.filter.SessionFilter.doFilter(SessionFilter.java:152)

org.springframework.web.filter.CharacterEncodingFilter.doFilterInternal(CharacterEncodingFilter.java:197)

org.springframework.web.filter.OncePerRequestFilter.doFilter(OncePerRequestFilter.java:107)

com.tarena.tmooc.front.filter.CharacterFilter.doFilter(CharacterFilter.java:61)

org.apache.logging.log4j.web.Log4jServletFilter.doFilter(Log4jServletFilter.java:71)

root cause

java.lang.NullPointerException

org.apache.jsp.WEB_002dINF.tmoocfront.usercenter.jsp.usersingupcourse_jsp._jspService(usersingupcourse_jsp.java:257)

org.apache.jasper.runtime.HttpJspBase.service(HttpJspBase.java:70)

javax.servlet.http.HttpServlet.service(HttpServlet.java:729)

org.apache.jasper.servlet.JspServletWrapper.service(JspServletWrapper.java:438)

org.apache.jasper.servlet.JspServlet.serviceJspFile(JspServlet.java:396)

org.apache.jasper.servlet.JspServlet.service(JspServlet.java:340)

javax.servlet.http.HttpServlet.service(HttpServlet.java:729)

org.apache.tomcat.websocket.server.WsFilter.doFilter(WsFilter.java:52)

org.apache.logging.log4j.web.Log4jServletFilter.doFilter(Log4jServletFilter.java:64)

org.springframework.web.servlet.view.InternalResourceView.renderMergedOutputModel(InternalResourceView.java:168)

org.springframework.web.servlet.view.AbstractView.render(AbstractView.java:303)

org.springframework.web.servlet.DispatcherServlet.render(DispatcherServlet.java:1286)

org.springframework.web.servlet.DispatcherServlet.processDispatchResult(DispatcherServlet.java:1041)

org.springframework.web.servlet.DispatcherServlet.doDispatch(DispatcherServlet.java:984)

org.springframework.web.servlet.DispatcherServlet.doService(DispatcherServlet.java:901)

org.springframework.web.servlet.FrameworkServlet.processRequest(FrameworkServlet.java:970)

org.springframework.web.servlet.FrameworkServlet.doGet(FrameworkServlet.java:861)

javax.servlet.http.HttpServlet.service(HttpServlet.java:622)

org.springframework.web.servlet.FrameworkServlet.service(FrameworkServlet.java:846)

javax.servlet.http.HttpServlet.service(HttpServlet.java:729)

org.apache.tomcat.websocket.server.WsFilter.doFilter(WsFilter.java:52)

org.apache.logging.log4j.web.Log4jServletFilter.doFilter(Log4jServletFilter.java:64)

org.springframework.web.servlet.view.InternalResourceView.renderMergedOutputModel(InternalResourceView.java:168)

org.springframework.web.servlet.view.AbstractView.render(AbstractView.java:303)

org.springframework.web.servlet.DispatcherServlet.render(DispatcherServlet.java:1286)

org.springframework.web.servlet.DispatcherServlet.processDispatchResult(DispatcherServlet.java:1041)

org.springframework.web.servlet.DispatcherServlet.doDispatch(DispatcherServlet.java:984)

org.springframework.web.servlet.DispatcherServlet.doService(DispatcherServlet.java:901)

org.springframework.web.servlet.FrameworkServlet.processRequest(FrameworkServlet.java:970)

org.springframework.web.servlet.FrameworkServlet.doGet(FrameworkServlet.java:861)

javax.servlet.http.HttpServlet.service(HttpServlet.java:622)

org.springframework.web.servlet.FrameworkServlet.service(FrameworkServlet.java:846)

javax.servlet.http.HttpServlet.service(HttpServlet.java:729)

org.springframework.web.filter.HiddenHttpMethodFilter.doFilterInternal(HiddenHttpMethodFilter.java:81)

org.springframework.web.filter.OncePerRequestFilter.doFilter(OncePerRequestFilter.java:107)

org.apache.tomcat.websocket.server.WsFilter.doFilter(WsFilter.java:52)

com.tarena.tmooc.front.filter.SessionFilter.doFilter(SessionFilter.java:152)

org.springframework.web.filter.CharacterEncodingFilter.doFilterInternal(CharacterEncodingFilter.java:197)

org.springframework.web.filter.OncePerRequestFilter.doFilter(OncePerRequestFilter.java:107)

com.tarena.tmooc.front.filter.CharacterFilter.doFilter(CharacterFilter.java:61)

org.apache.logging.log4j.web.Log4jServletFilter.doFilter(Log4jServletFilter.java:71)

note The full stack trace of the root cause is available in the Apache Tomcat/8.0.33 logs.

Apache Tomcat/8.0.33

end

浙公网安备 33010602011771号