Chinese Word Frequency Counting and Word Cloud Generation

Assignment source: https://edu.cnblogs.com/campus/gzcc/GZCC-16SE1/homework/2822

Chinese word frequency counting

1. Download a full-length Chinese novel.

2. Read the text to be analyzed from a file.
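Reading the file can be sketched as follows. The helper name `read_text` and the fallback encoding list are assumptions for illustration; downloaded Chinese novels are often saved as UTF-8 or in a GB-family encoding, so the sketch tries both in turn.

```python
from pathlib import Path


def read_text(path, encodings=("utf-8", "gb18030")):
    """Return the file's contents, trying each candidate encoding in order."""
    raw = Path(path).read_bytes()
    for enc in encodings:
        try:
            return raw.decode(enc)
        except UnicodeDecodeError:
            continue  # wrong guess, try the next encoding
    raise ValueError(f"could not decode {path} with any of {encodings}")
```

The returned string can then be passed directly to `jieba.lcut`.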

3. Install jieba and use it to segment the Chinese text.

pip install jieba

import jieba

jieba.lcut(text)

4. Update the dictionary by adding domain-specific terms from the text being analyzed.

jieba.add_word('天罡北斗阵')  # add words one at a time

jieba.load_userdict(word_dict)  # word_dict: path to a dictionary text file
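The file passed to `jieba.load_userdict` is a UTF-8 text file with one entry per line: the word, then an optional frequency and an optional part-of-speech tag, separated by spaces. A minimal sketch for producing such a file from a Python list follows; the helper names `format_userdict` and `write_userdict` are hypothetical, not part of jieba.

```python
def format_userdict(entries):
    """Build user-dictionary text: one 'word [freq] [tag]' entry per line."""
    lines = []
    for entry in entries:
        if isinstance(entry, str):
            lines.append(entry)  # bare word, no frequency or tag
        else:
            lines.append(" ".join(str(part) for part in entry))
    return "\n".join(lines) + "\n"


def write_userdict(entries, path):
    """Write the entries to path as UTF-8, ready for jieba.load_userdict(path)."""
    with open(path, "w", encoding="utf-8") as f:
        f.write(format_userdict(entries))
```

For example, `write_userdict(['来了', ('天罡北斗阵', 10, 'n')], 'dictionary.txt')` writes two entries, the second with an illustrative frequency and tag.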

Reference dictionaries can be downloaded from: https://pinyin.sogou.com/dict/

Conversion code (Sogou .scel dictionaries to plain text): scel_to_text


5. Generate the word-frequency statistics.

6. Sort the results.
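Counting and sorting can both be done with `collections.Counter` from the standard library. The sketch below assumes `tokens` is the list returned by `jieba.lcut`; the function names are illustrative.

```python
from collections import Counter


def word_frequencies(tokens):
    """Map each non-blank token to its number of occurrences."""
    return Counter(token for token in tokens if token.strip())


def sorted_frequencies(tokens):
    """Return (word, count) pairs, most frequent first."""
    return word_frequencies(tokens).most_common()
```

`Counter` makes a single pass over the list, which is much faster on a whole novel than calling `list.count` once per distinct word.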

7. Exclude grammatical function words: stop words such as pronouns, articles, and conjunctions.

# stops: the collection of stop words to exclude

tokens = [token for token in wordsls if token not in stops]
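One detail matters for the filter above: if `stops` is the raw string read from the stop-word file, `token not in stops` is a substring test, so a token is dropped whenever its characters appear anywhere in the file. Loading the file into a set gives a whole-word test instead. A minimal sketch, assuming the stop-word file lists one word per line (the function names are illustrative):

```python
def parse_stopwords(text):
    """Turn the contents of a stop-word file (one word per line) into a set."""
    return {line.strip() for line in text.splitlines() if line.strip()}


def remove_stopwords(tokens, stops):
    """Keep only the tokens that are not exact members of the stop-word set."""
    return [token for token in tokens if token not in stops]
```

With the file contents `'的\n了\n我们\n'`, the set-based filter keeps the token `'我'`, whereas the substring test against the raw string would drop it because `'我'` occurs inside `'我们'`.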

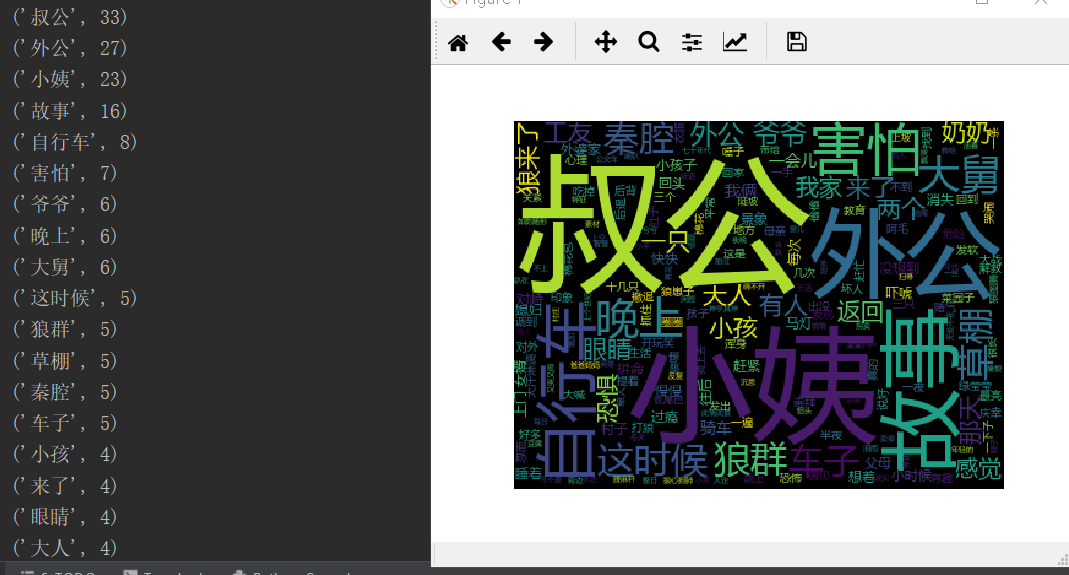

8. Output the 20 most frequent words (TOP20) and save the result to a file.
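Saving the TOP20 could look like the sketch below. The CSV layout, the `word`/`count` header, and the function name `save_top_words` are assumptions; any plain-text format would satisfy the step.

```python
import csv
from collections import Counter


def save_top_words(tokens, path, n=20):
    """Write the n most frequent tokens to a CSV file and return them."""
    top = Counter(tokens).most_common(n)
    with open(path, "w", encoding="utf-8", newline="") as f:
        writer = csv.writer(f)
        writer.writerow(["word", "count"])  # header row
        writer.writerows(top)
    return top
```

The function returns the same list it writes, so the caller can print it without reading the file back.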

9. Generate the word cloud.

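    from collections import Counter

    import chardet
    import jieba
    import matplotlib.pyplot as plt
    import requests
    from bs4 import BeautifulSoup
    from wordcloud import WordCloud


    def get_text(url):
        """Download the page and return the novel text without punctuation."""
        headers = {'User-Agent': 'Mozilla/5.0 (Windows NT 10.0; WOW64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/55.0.2883.87 Safari/537.36'}
        response = requests.get(url, headers=headers)
        # Detect the page encoding from the raw bytes before decoding.
        response.encoding = chardet.detect(response.content)['encoding']
        soup = BeautifulSoup(response.text, 'lxml')
        # Drop full-width spaces, then skip the first line and the last three lines.
        lines = soup.find(id='content').get_text().replace('\u3000', '').splitlines()[1:-3]
        text = ''.join(lines)
        # Strip sentence punctuation, in both half-width and full-width forms.
        for mark in '!。,?！，？':
            text = text.replace(mark, '')
        return text


    def save_file(text):
        """Write the cleaned text to disk (not called in the main flow below)."""
        with open(r'F:\pyprogram\out.text', 'w', encoding='utf-8') as f:
            f.write(text)


    def jb(text):
        """Segment the text, drop stop words, print the top 20, return the tokens."""
        jieba.add_word('来了')
        jieba.load_userdict(r'F:\pyprogram\dictionary.txt')
        words = jieba.lcut(text)
        # Load stop words into a set so membership is a whole-word test,
        # not a substring match against the raw file contents.
        with open(r'F:\pyprogram\stops.txt', 'r', encoding='utf8') as fa:
            stops = set(fa.read().split())
        tokens = [token for token in words if token.strip() and token not in stops]
        # Counter counts in one pass; most_common(20) also works with fewer than 20 words.
        for pair in Counter(tokens).most_common(20):
            print(pair)
        return tokens


    def wc(tokens):
        """Draw a word cloud from the space-joined tokens."""
        wl_split = ' '.join(tokens)
        # Note: without a font_path pointing to a CJK-capable font,
        # Chinese characters may render as empty boxes.
        mywc = WordCloud(background_color='#36f', width=400, height=300, margin=1).generate(wl_split)
        plt.imshow(mywc)
        plt.axis('off')
        plt.show()


    if __name__ == '__main__':
        url = 'http://www.changpianxiaoshuo.com/jingpinwenzhang-commend/youlangchumo.html'
        text = get_text(url)
        tokens = jb(text)
        wc(tokens)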
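As an alternative to joining the tokens with spaces, the cloud can be built directly from the word counts with `WordCloud.generate_from_frequencies`. The sketch below is a variation under stated assumptions, not the code used for this assignment: the function names, the limit of 100 words, and the white background are illustrative, and `font_path` must be supplied by the caller and point to a font that contains Chinese glyphs.

```python
from collections import Counter


def cloud_frequencies(tokens, n=100):
    """Return a {word: count} dict holding the n most frequent tokens."""
    return dict(Counter(tokens).most_common(n))


def draw_cloud(tokens, font_path, out_path):
    """Render a word cloud image file from token counts."""
    # Imported here so the counting helper works without the third-party package.
    from wordcloud import WordCloud

    cloud = WordCloud(font_path=font_path, background_color="white",
                      width=400, height=300)
    cloud.generate_from_frequencies(cloud_frequencies(tokens))
    cloud.to_file(out_path)
```

Passing counts explicitly means the sizes in the image follow the same statistics that were printed as the TOP20, rather than being recounted by the library.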