k8s部署基于nfs的StorageClass

参考:https://www.cnblogs.com/jojoword/p/12341128.html

storageclass相当于是一个动态的存储,即每个pod需要多少容量,直接在配置资源清单中声明即可;但是nfs默认是不支持storageclass动态存储的.

nfs本身不支持动态存储,而我们找了一个能够转换的pod,把nfs存储全部挂载到了它身上,然后,由它再给用户提供storageclass

总结一下就是:

1. 平时使用过程中,如果是静态的存储,那么过程是先准备好存储,然后基于存储创建PV;然后在创建PVC,根据容量他们会找对应的PV

2. 使用动态存储,那么就是先准备好存储,然后直接创建PVC,storageclass会根据要求的大小自动创建PV

1、部署nfs

1 2 | # yum -y install nfs-server# yum -y install nfs-utils |

修改/etc/exports文件

[root@k8s01-zongshuai nacos]# cat /etc/exports /data/nfs/v1 172.16.43.0/24(rw,no_root_squash,no_all_squash) /data/nfs/v2 172.16.43.0/24(rw,no_root_squash,no_all_squash) /data/nfs/v3 172.16.43.0/24(rw,no_root_squash,no_all_squash)

2、启动nfs-server和rpcbind

1 2 | # systemctl start nfs-server rpcbind# systemctl enable nfs-server rpcbind |

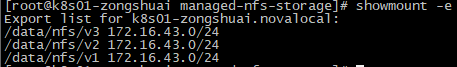

查看nfs是否就绪

1 | # showmount -e |

3、准备yaml文件

https://github.com/kubernetes-retired/external-storage/tree/master/nfs-client/deploy

(1)rbac.yaml

apiVersion: v1 kind: ServiceAccount metadata: name: nfs-client-provisioner # replace with namespace where provisioner is deployed namespace: nfs-client --- kind: ClusterRole apiVersion: rbac.authorization.k8s.io/v1 metadata: name: nfs-client-provisioner-runner rules: - apiGroups: [""] resources: ["persistentvolumes"] verbs: ["get", "list", "watch", "create", "delete"] - apiGroups: [""] resources: ["persistentvolumeclaims"] verbs: ["get", "list", "watch", "update"] - apiGroups: ["storage.k8s.io"] resources: ["storageclasses"] verbs: ["get", "list", "watch"] - apiGroups: [""] resources: ["events"] verbs: ["create", "update", "patch"] --- kind: ClusterRoleBinding apiVersion: rbac.authorization.k8s.io/v1 metadata: name: run-nfs-client-provisioner subjects: - kind: ServiceAccount name: nfs-client-provisioner # replace with namespace where provisioner is deployed namespace: nfs-client roleRef: kind: ClusterRole name: nfs-client-provisioner-runner apiGroup: rbac.authorization.k8s.io --- kind: Role apiVersion: rbac.authorization.k8s.io/v1 metadata: name: leader-locking-nfs-client-provisioner # replace with namespace where provisioner is deployed namespace: nfs-client rules: - apiGroups: [""] resources: ["endpoints"] verbs: ["get", "list", "watch", "create", "update", "patch"] --- kind: RoleBinding apiVersion: rbac.authorization.k8s.io/v1 metadata: name: leader-locking-nfs-client-provisioner # replace with namespace where provisioner is deployed namespace: nfs-client subjects: - kind: ServiceAccount name: nfs-client-provisioner # replace with namespace where provisioner is deployed namespace: nfs-client roleRef: kind: Role name: leader-locking-nfs-client-provisioner apiGroup: rbac.authorization.k8s.io

(2)class.yaml

apiVersion: storage.k8s.io/v1 kind: StorageClass metadata: name: managed-nfs-storage provisioner: k8s-sigs.io/nfs-subdir-external-provisioner # or choose another name, must match deployment's env PROVISIONER_NAME' parameters: archiveOnDelete: "false"

(3)deployment.yaml

apiVersion: apps/v1 kind: Deployment metadata: name: nfs-client-provisioner labels: app: nfs-client-provisioner # replace with namespace where provisioner is deployed namespace: nfs-client spec: replicas: 1 strategy: type: Recreate selector: matchLabels: app: nfs-client-provisioner template: metadata: labels: app: nfs-client-provisioner spec: serviceAccountName: nfs-client-provisioner containers: - name: nfs-client-provisioner # image: gcr.io/k8s-staging-sig-storage/nfs-subdir-external-provisioner:v4.0.0 image: 172.16.43.156/nfs-client/nfs-client-provisioner:latest volumeMounts: - name: nfs-client-root mountPath: /persistentvolumes env: - name: PROVISIONER_NAME value: k8s-sigs.io/nfs-subdir-external-provisioner # value: fuseim.pri/ifs - name: NFS_SERVER value: 172.16.43.156 - name: NFS_PATH value: /data/nfs/v1 volumes: - name: nfs-client-root nfs: server: 172.16.43.156 path: /data/nfs/v1

根据文件创建资源

1 2 3 | # kubectl apply -f rbac.yaml# kubectl apply -f deployment.yaml# kubectl apply -f class.yaml |

设置为默认sc

1 | # kubectl patch sc managed-nfs-storage -p '{"metadata": {"annotations": {"storageclass.beta.kubernetes.io/is-default-class": "true"}}}' |

【推荐】国内首个AI IDE,深度理解中文开发场景,立即下载体验Trae

【推荐】编程新体验,更懂你的AI,立即体验豆包MarsCode编程助手

【推荐】抖音旗下AI助手豆包,你的智能百科全书,全免费不限次数

【推荐】轻量又高性能的 SSH 工具 IShell:AI 加持,快人一步

· 无需6万激活码!GitHub神秘组织3小时极速复刻Manus,手把手教你使用OpenManus搭建本

· C#/.NET/.NET Core优秀项目和框架2025年2月简报

· 什么是nginx的强缓存和协商缓存

· 一文读懂知识蒸馏

· Manus爆火,是硬核还是营销?