Scrapy: Persistent Storage with Item Pipelines

Notes

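- Pipeline-based storage:

  - Workflow:

    - Parse the data.

    - Define the relevant fields in the Item class.

    - Wrap the parsed data in an item object.

    - Submit (yield) the item object to the pipeline for persistent storage.

    - In the pipeline class's `process_item` method, persist the data held in each item it receives.

    - Enable the pipeline in the settings file.

  - Advantage: the code is general-purpose. Storage logic lives in the pipeline, separate from the parsing logic in the spider.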
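The steps above can be sketched without Scrapy installed. This is a simplified, sequential model built on stated assumptions. `TxtPipeline` and `run_pipeline` are hypothetical names. Plain dicts stand in for `DoubanItem`, and an in-memory buffer replaces `douban.txt`. The model only illustrates the call order the real engine follows: `open_spider` once, `process_item` once per item, and `close_spider` once. The real Scrapy engine processes items asynchronously as the spider yields them.

```python
import io


class TxtPipeline:
    """Hypothetical stand-in for DoubanPipeline that writes to an in-memory buffer."""

    def open_spider(self, spider):
        # Called once, before any item arrives
        self.fp = io.StringIO()

    def process_item(self, item, spider):
        # Called once per item; returning the item lets later pipelines receive it
        self.fp.write(f"{item.get('title', '')}:{item.get('content', '')}\n")
        return item

    def close_spider(self, spider):
        # Called once, after the last item; keep the text before closing the buffer
        self.output = self.fp.getvalue()
        self.fp.close()


def run_pipeline(pipeline, items, spider=None):
    """Simplified model of how the engine drives one pipeline (not Scrapy's real code)."""
    pipeline.open_spider(spider)
    try:
        for item in items:
            pipeline.process_item(item, spider)
    finally:
        # close_spider runs even if processing fails, so the file is always closed
        pipeline.close_spider(spider)
    return pipeline


# Plain dicts stand in for the DoubanItem objects yielded by parse()
items = [
    {"title": "Book A", "content": "first entry"},
    {"title": "Book B", "content": "second entry"},
]
p = run_pipeline(TxtPipeline(), items)
print(p.output, end="")
```

Opening and closing the file in the two hooks, instead of inside `process_item`, means the file is opened once per crawl rather than once per item.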

```python
import scrapy

from douban.items import DoubanItem


class DouSpider(scrapy.Spider):
    name = "dou"
    # allowed_domains = ["www.douban.com"]
    start_urls = ["https://www.douban.com/doulist/113652271/"]

    # Pipeline-based persistent storage
    def parse(self, response):
        # Container holding every entry of the doulist
        div_ = response.xpath('/html/body/div[3]/div[1]/div/div[1]')
        div_list = div_.xpath('./div[contains(@class, "doulist-item")]')

        for div in div_list:
            # get(default='') returns '' for a missing node instead of raising IndexError
            title = div.xpath('./div/div[2]/div[2]/a/text()').get(default='').strip()
            content = div.xpath('./div/div[2]/div[4]/text()').get(default='').strip()

            # Wrap the parsed data in an item object
            item = DoubanItem()
            item['title'] = title
            item['content'] = content

            # Submit the item to the pipeline
            yield item
```

Pipeline class (pipelines.py)

```python
# Define your item pipelines here
#
# Don't forget to add your pipeline to the ITEM_PIPELINES setting
# See: https://docs.scrapy.org/en/latest/topics/item-pipeline.html


# useful for handling different item types with a single interface
from itemadapter import ItemAdapter


class DoubanPipeline:
    fp = None

    # Hook called only once, when the spider opens
    def open_spider(self, spider):
        print('Spider started!!!')
        # Open the output file
        self.fp = open('douban.txt', 'w', encoding='utf-8')

    # Dedicated to handling item objects:
    # receives each item submitted by the spider file
    # and is called once for every item it receives
    def process_item(self, item, spider):
        adapter = ItemAdapter(item)
        # Fall back to '' so a missing field cannot break the string concatenation
        title_ = adapter.get('title') or ''
        content_ = adapter.get('content') or ''
        self.fp.write(title_ + ':' + content_ + '\n')
        return item

    # Hook called only once, when the spider closes
    def close_spider(self, spider):
        print('Spider finished!!!')
        self.fp.close()
```

Item class (items.py)

```python
# Define here the models for your scraped items
#
# See documentation in:
# https://docs.scrapy.org/en/latest/topics/items.html

import scrapy


class DoubanItem(scrapy.Item):
    # define the fields for your item here like:
    # name = scrapy.Field()
    title = scrapy.Field()
    content = scrapy.Field()
```

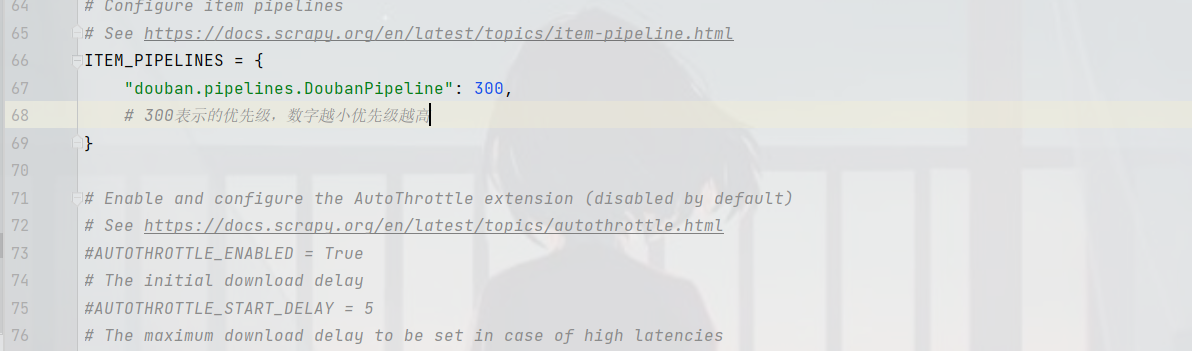

Enable the pipeline in the settings file (settings.py).
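Here is a minimal sketch of the settings entry. It assumes the project is named `douban`, as the `from douban.items import DoubanItem` import suggests, and that the class lives in `douban/pipelines.py`. The `settings.py` generated by `scrapy startproject` already contains this block commented out, so enabling the pipeline usually means uncommenting it. The integer sets the order: when several pipelines are configured, lower values run first. Values are conventionally kept in the 0-1000 range.

```python
# settings.py (excerpt): enable DoubanPipeline
# Key: import path of the pipeline class; value: its order (lower runs earlier)
ITEM_PIPELINES = {
    "douban.pipelines.DoubanPipeline": 300,
}
```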