MongoDB Sharding (II) -- Building a Sharded Cluster

In the previous article we covered the basic concepts of sharding. In this one we put them into practice and build a sharded cluster.

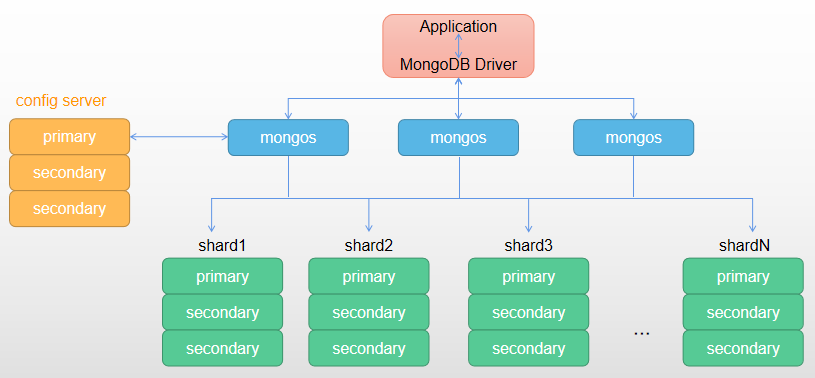

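First, let's review the architecture of a sharded cluster. It consists of three components:

- mongos: the query router, which provides the interface between client applications and the shards. This lab deploys 2 mongos instances.

- config: the config servers store the cluster's metadata, which reflects the state and organization of all data and components in the sharded cluster. The metadata includes the list of chunks on each shard and the ranges that define those chunks. Starting in version 3.4, mirrored config servers (SCCC) are no longer supported, and config servers must be deployed as a replica set (CSRS). This lab uses a 3-member replica set as the config server.

- shard: each shard holds a subset of a collection's data. Starting in version 3.6, each shard must be deployed as a replica set. This lab deploys 3 shards to store the data.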
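To keep track of the moving parts, the plan used in this lab can be written down as data. The hosts and ports come from the host lists in the sections below. This is a minimal sketch in plain JavaScript (Node.js). The `topology` object and the `listProcesses` function are hypothetical names and are not part of MongoDB.

```javascript
// Hypothetical description of this lab's topology, taken from the host plan in this article.
const topology = {
  configServer: { replSetName: "conf", host: "192.168.10.80", ports: [27017, 27018, 27019] },
  shards: [
    { replSetName: "shard001", host: "192.168.10.81", ports: [27017, 27018, 27019] },
    { replSetName: "shard002", host: "192.168.10.82", ports: [27017, 27018, 27019] },
    { replSetName: "shard003", host: "192.168.10.83", ports: [27017, 27018, 27019] },
  ],
  mongos: { host: "192.168.10.100", ports: [27000, 28000] },
};

// List every process the cluster needs, tagged with its role and binary.
function listProcesses(t) {
  const procs = [];
  for (const p of t.configServer.ports) {
    procs.push({ role: "configsvr", binary: "mongod", addr: `${t.configServer.host}:${p}` });
  }
  for (const s of t.shards) {
    for (const p of s.ports) {
      procs.push({ role: `shard ${s.replSetName}`, binary: "mongod", addr: `${s.host}:${p}` });
    }
  }
  for (const p of t.mongos.ports) {
    procs.push({ role: "router", binary: "mongos", addr: `${t.mongos.host}:${p}` });
  }
  return procs;
}

const procs = listProcesses(topology);
console.log(procs.length); // 14
```

For this lab, that comes to 12 mongod processes (3 config servers plus 3 × 3 shard members) and 2 mongos routers.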

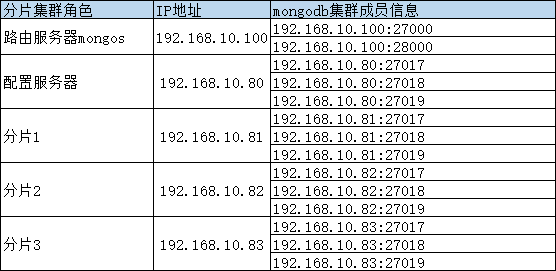

(1) Host information

(2) Setting up the config server replica set

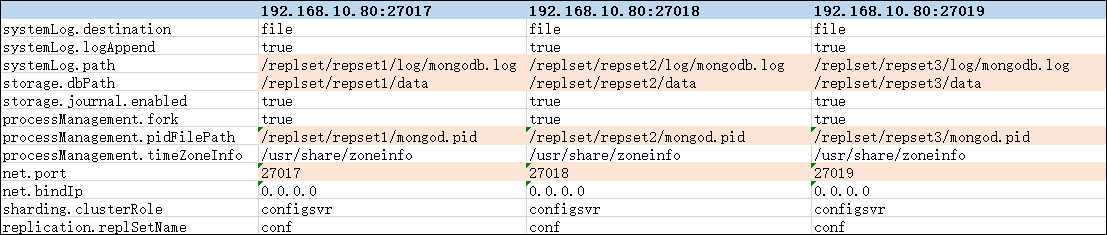

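The three config server instances are planned as follows:

- member0: 192.168.10.80:27017
- member1: 192.168.10.80:27018
- member2: 192.168.10.80:27019

Their parameters are planned as follows:

Next, we build the config server replica set step by step.

STEP1: Extract the MongoDB installation package into the /mongo directory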

```
[root@mongosserver mongo]# pwd
/mongo
[root@mongosserver mongo]# ls
bin  LICENSE-Community.txt  MPL-2  README  THIRD-PARTY-NOTICES  THIRD-PARTY-NOTICES.gotools
```

STEP2: Create the data and log directories according to the parameter plan above

```
# Create the directories
mkdir -p /replset/repset1/data
mkdir -p /replset/repset1/log
mkdir -p /replset/repset2/data
mkdir -p /replset/repset2/log
mkdir -p /replset/repset3/data
mkdir -p /replset/repset3/log

[root@mongosserver repset1]# tree /replset/
/replset/
├── repset1
│   ├── data
│   ├── log
│   └── mongodb.conf
├── repset2
│   ├── data
│   └── log
└── repset3
    ├── data
    └── log
```

STEP3: Create a configuration file for each of the 3 instances

Configuration file for instance 1, /replset/repset1/mongodb.conf:

```yaml
systemLog:
  destination: file
  logAppend: true
  path: /replset/repset1/log/mongodb.log

storage:
  dbPath: /replset/repset1/data
  journal:
    enabled: true

processManagement:
  fork: true  # fork and run in background
  pidFilePath: /replset/repset1/mongod.pid  # location of pidfile
  timeZoneInfo: /usr/share/zoneinfo

# network interfaces
net:
  port: 27017
  bindIp: 0.0.0.0

# sharding
sharding:
  clusterRole: configsvr

# replica set
replication:
  replSetName: conf
```

Configuration file for instance 2, /replset/repset2/mongodb.conf:

```yaml
systemLog:
  destination: file
  logAppend: true
  path: /replset/repset2/log/mongodb.log

storage:
  dbPath: /replset/repset2/data
  journal:
    enabled: true

processManagement:
  fork: true  # fork and run in background
  pidFilePath: /replset/repset2/mongod.pid  # location of pidfile
  timeZoneInfo: /usr/share/zoneinfo

# network interfaces
net:
  port: 27018
  bindIp: 0.0.0.0

# sharding
sharding:
  clusterRole: configsvr

# replica set
replication:
  replSetName: conf
```

Configuration file for instance 3, /replset/repset3/mongodb.conf:

```yaml
systemLog:
  destination: file
  logAppend: true
  path: /replset/repset3/log/mongodb.log

storage:
  dbPath: /replset/repset3/data
  journal:
    enabled: true

processManagement:
  fork: true  # fork and run in background
  pidFilePath: /replset/repset3/mongod.pid  # location of pidfile
  timeZoneInfo: /usr/share/zoneinfo

# network interfaces
net:
  port: 27019
  bindIp: 0.0.0.0

# sharding
sharding:
  clusterRole: configsvr

# replica set
replication:
  replSetName: conf
```
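The three files above differ only in the instance number (which sets the directory) and the port, so one template can generate all of them. The following is a minimal sketch in Node.js. It assumes the /replset/repsetN layout from STEP2. `renderMongodConf` is a hypothetical helper, and it leaves the comments out of the generated YAML.

```javascript
// Hypothetical helper: render the mongod YAML config used in STEP3.
// Assumes the /replset/repsetN layout from STEP2; only N, the port,
// clusterRole and replSetName vary between files.
function renderMongodConf({ n, port, clusterRole, replSetName }) {
  const dir = `/replset/repset${n}`;
  return [
    "systemLog:",
    "  destination: file",
    "  logAppend: true",
    `  path: ${dir}/log/mongodb.log`,
    "storage:",
    `  dbPath: ${dir}/data`,
    "  journal:",
    "    enabled: true",
    "processManagement:",
    "  fork: true",
    `  pidFilePath: ${dir}/mongod.pid`,
    "  timeZoneInfo: /usr/share/zoneinfo",
    "net:",
    `  port: ${port}`,
    "  bindIp: 0.0.0.0",
    "sharding:",
    `  clusterRole: ${clusterRole}`,
    "replication:",
    `  replSetName: ${replSetName}`,
    "",
  ].join("\n");
}

// Render the three config server files (instance N listens on the Nth port).
const confFiles = [27017, 27018, 27019].map((port, i) =>
  renderMongodConf({ n: i + 1, port, clusterRole: "configsvr", replSetName: "conf" })
);
console.log(confFiles[1]);
```

Each string could then be written to /replset/repsetN/mongodb.conf, for example with Node's `fs.writeFileSync`.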

STEP4: Start the three mongod instances

```
mongod -f /replset/repset1/mongodb.conf
mongod -f /replset/repset2/mongodb.conf
mongod -f /replset/repset3/mongodb.conf

# Check whether they started successfully
[root@mongosserver mongo]# netstat -nltp | grep mongod
tcp   0   0 0.0.0.0:27019   0.0.0.0:*   LISTEN   28009/mongod
tcp   0   0 0.0.0.0:27017   0.0.0.0:*   LISTEN   27928/mongod
tcp   0   0 0.0.0.0:27018   0.0.0.0:*   LISTEN   27970/mongod
```
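Reading the netstat output by eye is fine for three ports, but the check can also be scripted. The following is a minimal sketch in Node.js. It assumes the column layout shown above (`proto recv-q send-q local foreign state pid/program`). `listeningPorts` is a hypothetical helper. Other netstat versions may format their lines differently.

```javascript
// Hypothetical helper: given the text printed by `netstat -nltp`, return the
// set of ports on which the named program (e.g. "mongod") is listening.
function listeningPorts(netstatText, program) {
  const ports = new Set();
  for (const line of netstatText.split("\n")) {
    const cols = line.trim().split(/\s+/);
    // Expected columns: proto recv-q send-q local-addr foreign-addr state pid/program
    if (cols.length < 7 || cols[5] !== "LISTEN") continue;
    if (!cols[6].endsWith("/" + program)) continue;
    const local = cols[3];
    ports.add(Number(local.slice(local.lastIndexOf(":") + 1)));
  }
  return ports;
}

// The sample output from STEP4.
const sample = `tcp 0 0 0.0.0.0:27019 0.0.0.0:* LISTEN 28009/mongod
tcp 0 0 0.0.0.0:27017 0.0.0.0:* LISTEN 27928/mongod
tcp 0 0 0.0.0.0:27018 0.0.0.0:* LISTEN 27970/mongod`;

const up = listeningPorts(sample, "mongod");
const missing = [27017, 27018, 27019].filter((p) => !up.has(p));
console.log(missing.length === 0 ? "all config servers listening" : `missing: ${missing}`);
```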

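STEP5: Connect to any one of the instances and initiate the config server replica set. The `_id` must match the `replication.replSetName` value in the configuration files (`conf`).

```javascript
// e.g. connect with: mongo --host 192.168.10.80 --port 27017
rs.initiate(
  {
    _id: "conf",
    configsvr: true,
    members: [
      { _id : 0, host : "192.168.10.80:27017" },
      { _id : 1, host : "192.168.10.80:27018" },
      { _id : 2, host : "192.168.10.80:27019" }
    ]
  }
)
```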
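After `rs.initiate()`, the election can take a few seconds to finish. One rough way to confirm the result is to check that exactly one member reports PRIMARY. The following sketch assumes the `members[].name` and `members[].stateStr` fields of `rs.status()` output. `summarizeMembers` is a hypothetical helper. The input below is a mock and does not come from this lab.

```javascript
// Hypothetical helper: group replica set members by state and report
// whether exactly one of them is PRIMARY.
function summarizeMembers(status) {
  const byState = {};
  for (const m of status.members) {
    (byState[m.stateStr] = byState[m.stateStr] || []).push(m.name);
  }
  const primaries = byState.PRIMARY || [];
  return { hasSinglePrimary: primaries.length === 1, primary: primaries[0] || null, byState };
}

// Mock input shaped like rs.status() output (only the fields used above).
const mockStatus = {
  set: "conf",
  members: [
    { name: "192.168.10.80:27017", stateStr: "PRIMARY" },
    { name: "192.168.10.80:27018", stateStr: "SECONDARY" },
    { name: "192.168.10.80:27019", stateStr: "SECONDARY" },
  ],
};
console.log(summarizeMembers(mockStatus));
```

The mongo shell runs JavaScript, so you could paste the function into the shell and call `summarizeMembers(rs.status())` instead of using the mock.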

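STEP6: [Optional] Adjust member priorities to control which member becomes primary

```javascript
cfg = rs.conf()
cfg.members[0].priority = 3
cfg.members[1].priority = 2
cfg.members[2].priority = 1
rs.reconfig(cfg)
```

About `members[n]`: n is the position in the members array, counting from 0. Do not confuse it with the member's `_id` value (`members[n]._id`).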
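`members[n]` is an array position. If the array order ever differs from the `_id` order, looking members up by `_id` is safer. The following is a minimal sketch. `setPriorityById` is a hypothetical helper, and `cfg` is a mock shaped like `rs.conf()` output. The mongo shell runs JavaScript, so you could paste the same function there and call it between `rs.conf()` and `rs.reconfig(cfg)`.

```javascript
// Hypothetical helper: set a member's priority by its _id rather than by its
// position in cfg.members, so the result does not depend on array order.
function setPriorityById(cfg, id, priority) {
  const member = cfg.members.find((m) => m._id === id);
  if (!member) throw new Error(`no member with _id ${id}`);
  member.priority = priority;
  return cfg;
}

// Mock config whose array order differs from the _id order.
const cfg = {
  _id: "conf",
  members: [
    { _id: 2, host: "192.168.10.80:27019", priority: 1 },
    { _id: 0, host: "192.168.10.80:27017", priority: 1 },
    { _id: 1, host: "192.168.10.80:27018", priority: 1 },
  ],
};
setPriorityById(cfg, 0, 3);
setPriorityById(cfg, 1, 2);
console.log(cfg.members.map((m) => `${m._id}:${m.priority}`).join(" ")); // 2:1 0:3 1:2
```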

Check the member priorities:

```
conf:PRIMARY> rs.config()
```

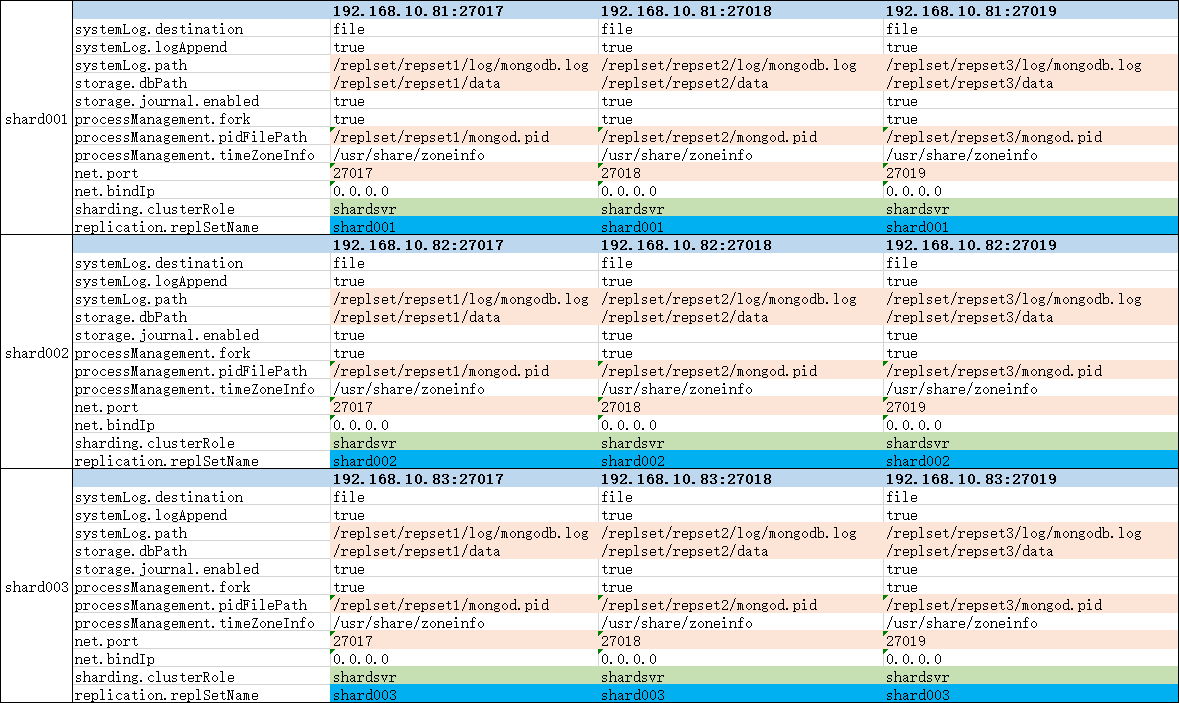

(3) Setting up the shard replica sets

Members of the shard 1 replica set:

- member0: 192.168.10.81:27017
- member1: 192.168.10.81:27018
- member2: 192.168.10.81:27019

Members of the shard 2 replica set:

- member0: 192.168.10.82:27017
- member1: 192.168.10.82:27018
- member2: 192.168.10.82:27019

Members of the shard 3 replica set:

- member0: 192.168.10.83:27017
- member1: 192.168.10.83:27018
- member2: 192.168.10.83:27019

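Their parameters are planned as follows:

There are 3 shards in total, and each one is a 3-member replica set. You build each shard replica set the same way as the config server replica set above, so those steps are not repeated here. There are two differences. First, in each member's configuration file, set `sharding.clusterRole` to `shardsvr` instead of `configsvr`, and set `replication.replSetName` to the shard's name (shard001, shard002 or shard003). Second, when you initiate a shard replica set, omit the `configsvr: true` option that the config server initiation uses.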
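The initiation documents differ only in the `configsvr: true` flag, and a small builder makes that difference explicit. The following sketch in Node.js produces the config server document and the three shard documents from the host plan above. `buildInitiateDoc` is a hypothetical helper.

```javascript
// Hypothetical helper: build the document passed to rs.initiate().
// Config server replica sets need `configsvr: true`; shard replica sets omit it.
function buildInitiateDoc(replSetName, host, ports, isConfigServer = false) {
  const doc = { _id: replSetName };
  if (isConfigServer) doc.configsvr = true;
  doc.members = ports.map((port, i) => ({ _id: i, host: `${host}:${port}` }));
  return doc;
}

const ports = [27017, 27018, 27019];
// shard001..shard003 live on 192.168.10.81..83, as planned above.
const shardDocs = [1, 2, 3].map((n) =>
  buildInitiateDoc(`shard00${n}`, `192.168.10.8${n}`, ports)
);
const confDoc = buildInitiateDoc("conf", "192.168.10.80", ports, true);

// The printed JSON can be passed to rs.initiate(...) on a member of the matching replica set.
console.log(JSON.stringify(shardDocs[0]));
```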

Initiate the three shard replica sets. Run each `rs.initiate()` while connected to a member of the corresponding replica set:

```javascript
// shard001 (connect to a member on 192.168.10.81)
rs.initiate(
  {
    _id: "shard001",
    members: [
      { _id : 0, host : "192.168.10.81:27017" },
      { _id : 1, host : "192.168.10.81:27018" },
      { _id : 2, host : "192.168.10.81:27019" }
    ]
  }
)

// shard002 (connect to a member on 192.168.10.82)
rs.initiate(
  {
    _id: "shard002",
    members: [
      { _id : 0, host : "192.168.10.82:27017" },
      { _id : 1, host : "192.168.10.82:27018" },
      { _id : 2, host : "192.168.10.82:27019" }
    ]
  }
)

// shard003 (connect to a member on 192.168.10.83)
rs.initiate(
  {
    _id: "shard003",
    members: [
      { _id : 0, host : "192.168.10.83:27017" },
      { _id : 1, host : "192.168.10.83:27018" },
      { _id : 2, host : "192.168.10.83:27019" }
    ]
  }
)
```

(4) Configuring and starting mongos

In this lab we start 2 mongos processes on the 192.168.10.100 server, on ports 27000 and 28000.

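STEP1: Configure the mongos instances

Configuration for port 27000, saved as /mongo/mongos-27000.conf (the path used in STEP2). Create the directories it references, such as /mongo/log, before you start the instance:

```yaml
systemLog:
  destination: file
  logAppend: true
  path: /mongo/log/mongos-27000.log

processManagement:
  fork: true  # fork and run in background
  pidFilePath: /mongo/mongod-27000.pid  # location of pidfile
  timeZoneInfo: /usr/share/zoneinfo

# network interfaces
net:
  port: 27000
  bindIp: 0.0.0.0

sharding:
  configDB: conf/192.168.10.80:27017,192.168.10.80:27018,192.168.10.80:27019
```

Configuration for port 28000, saved as /mongo/mongos-28000.conf. Again, create the directories it references first:

```yaml
systemLog:
  destination: file
  logAppend: true
  path: /mongo/log/mongos-28000.log

processManagement:
  fork: true  # fork and run in background
  pidFilePath: /mongo/mongod-28000.pid  # location of pidfile
  timeZoneInfo: /usr/share/zoneinfo

# network interfaces
net:
  port: 28000
  bindIp: 0.0.0.0

sharding:
  configDB: conf/192.168.10.80:27017,192.168.10.80:27018,192.168.10.80:27019
```

`sharding.configDB` takes the config server replica set name, a slash, and then a comma-separated list of that replica set's members.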

STEP2: Start the mongos instances

```
# Start the mongos instances
[root@mongosserver mongo]# mongos -f /mongo/mongos-27000.conf
[root@mongosserver mongo]# mongos -f /mongo/mongos-28000.conf

# Check the instances
[root@mongosserver mongo]# netstat -nltp | grep mongos
tcp   0   0 0.0.0.0:27000   0.0.0.0:*   LISTEN   2209/mongos
tcp   0   0 0.0.0.0:28000   0.0.0.0:*   LISTEN   2241/mongos
```

(5) Adding the shards to the cluster

STEP1: Connect to a mongos with the mongo shell

```
mongo --host 192.168.10.100 --port 27000
# or
mongo --host 192.168.10.100 --port 28000
```
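Applications usually connect through a driver rather than the shell. MongoDB drivers accept several mongos routers in one connection string, which can let an application keep working when one router is unavailable. The following minimal sketch builds such a string for the two routers in this lab. `mongosUri` is a hypothetical helper, and `lijiamandb` is the database used in section (6).

```javascript
// Hypothetical helper: build a standard mongodb:// connection string that
// lists several mongos routers as the seed list.
function mongosUri(hosts, db = "") {
  return `mongodb://${hosts.join(",")}/${db}`;
}

const uri = mongosUri(["192.168.10.100:27000", "192.168.10.100:28000"], "lijiamandb");
console.log(uri); // mongodb://192.168.10.100:27000,192.168.10.100:28000/lijiamandb
```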

STEP2: Add the shards to the cluster

```javascript
sh.addShard("shard001/192.168.10.81:27017,192.168.10.81:27018,192.168.10.81:27019")
sh.addShard("shard002/192.168.10.82:27017,192.168.10.82:27018,192.168.10.82:27019")
sh.addShard("shard003/192.168.10.83:27017,192.168.10.83:27018,192.168.10.83:27019")
```
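`sh.addShard()` identifies a replica set shard as `<replSetName>/<host:port>,...`, and the mongos setting `sharding.configDB` uses the same format for the config server replica set. The following minimal sketch derives both strings from the host plan. `seedList` is a hypothetical helper.

```javascript
// Hypothetical helper: build the "<replSetName>/<host:port>,..." seed string.
function seedList(replSetName, host, ports) {
  return `${replSetName}/${ports.map((p) => `${host}:${p}`).join(",")}`;
}

const ports = [27017, 27018, 27019];
const addShardArgs = [1, 2, 3].map((n) => seedList(`shard00${n}`, `192.168.10.8${n}`, ports));
const configDB = seedList("conf", "192.168.10.80", ports);

// Print ready-to-paste mongo shell commands and the configDB value.
for (const arg of addShardArgs) console.log(`sh.addShard("${arg}")`);
console.log(configDB);
```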

STEP3: View the sharding status

```
mongos> sh.status()
--- Sharding Status ---
  sharding version: {
        "_id" : 1,
        "minCompatibleVersion" : 5,
        "currentVersion" : 6,
        "clusterId" : ObjectId("5ffc0709b040c53d59c15c66")
  }
  shards:
        {  "_id" : "shard001",  "host" : "shard001/192.168.10.81:27017,192.168.10.81:27018,192.168.10.81:27019",  "state" : 1 }
        {  "_id" : "shard002",  "host" : "shard002/192.168.10.82:27017,192.168.10.82:27018,192.168.10.82:27019",  "state" : 1 }
        {  "_id" : "shard003",  "host" : "shard003/192.168.10.83:27017,192.168.10.83:27018,192.168.10.83:27019",  "state" : 1 }
  active mongoses:
        "4.2.10" : 2
  autosplit:
        Currently enabled: yes
  balancer:
        Currently enabled:  yes
        Currently running:  no
        Failed balancer rounds in last 5 attempts:  0
        Migration Results for the last 24 hours:
                No recent migrations
  databases:
        {  "_id" : "config",  "primary" : "config",  "partitioned" : true }
mongos>
```

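(6) Enabling sharding

(6.1) Enabling sharding on a database

Sharding works per collection. Before you can shard a collection, you must enable sharding on its database. Enabling sharding on a database does not redistribute any data. It only allows the collections in that database to be sharded.

```javascript
sh.enableSharding("lijiamandb")
```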

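(6.2) Enabling sharding on a collection

If the collection already contains data, first create an index that starts with the shard key, and then shard the collection. If the collection is empty, MongoDB creates the shard key index automatically when it shards the collection.

MongoDB provides 2 strategies for sharding a collection:

- Hashed sharding: uses a hashed index on a single field as the shard key. In 4.2, the version used here, a hashed shard key must be a single field. Compound hashed shard keys were added in 4.4.

```
sh.shardCollection("<database>.<collection>", { <shard key field> : "hashed" })
```

- Ranged sharding: can use multiple fields as the shard key and divides the data into contiguous ranges determined by the shard key values.

```
sh.shardCollection("<database>.<collection>", { <shard key field> : 1, ... })
```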
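The difference between the two strategies matters most with a monotonically increasing key, such as the `id` field used below. The following toy model is not MongoDB's chunk or hash implementation. For range sharding, it assumes three hypothetical chunks with fixed bounds. For hashed sharding, it takes a 32-bit value from an MD5 digest and splits that value space into three equal ranges. MongoDB also assigns hashed keys to chunks by ranges of hash values, but its exact hash and chunk bounds differ.

```javascript
const crypto = require("crypto");

// Toy model only: NOT MongoDB's actual chunk or hashing logic.
const SHARDS = ["shard001", "shard002", "shard003"];

// Range: hypothetical chunk bounds on id.
// [min,1000) -> shard001, [1000,2000) -> shard002, [2000,max) -> shard003
const rangeBounds = [1000, 2000];
function rangeShard(id) {
  const idx = rangeBounds.findIndex((b) => id < b);
  return SHARDS[idx === -1 ? SHARDS.length - 1 : idx];
}

// Hashed: hash the key first, then place it by which third of the
// 32-bit hash space the value falls into.
function hashedShard(id) {
  const h = crypto.createHash("md5").update(String(id)).digest().readUInt32BE(0);
  const idx = Math.min(SHARDS.length - 1, Math.floor((h / 2 ** 32) * SHARDS.length));
  return SHARDS[idx];
}

// Count where a batch of ids would land under a placement function.
function distribute(ids, placeFn) {
  const counts = Object.fromEntries(SHARDS.map((s) => [s, 0]));
  for (const id of ids) counts[placeFn(id)] += 1;
  return counts;
}

// New, increasing ids that are all beyond every range bound.
const newIds = Array.from({ length: 3000 }, (_, i) => 5001 + i);
console.log("range :", distribute(newIds, rangeShard));
console.log("hashed:", distribute(newIds, hashedShard));
```

With range sharding, all 3,000 new ids fall into the last chunk. The hashed version spreads them roughly evenly across the three shards.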

Example: hashed sharding of the collection user

```javascript
// Connect to mongos, switch to the lijiamandb database and insert
// 100,000 documents into the new collection user
use lijiamandb
for (var i = 1; i <= 100000; i++) {
  db.user.insert({
    "id" : i,
    "name" : "name" + i,
    "age" : Math.floor(Math.random() * 120),
    "created" : new Date()
  });
}
```

mongostat shows that all writes go to the primary of shard002 (192.168.10.82:27017). Its secondaries show the same count prefixed with `*`, which marks replicated operations. The collection is not sharded yet, so all of its data lives on the database's primary shard, which is shard002 here. Different databases can have different primary shards, and sh.status() shows the primary shard for each database.

```
[root@mongosserver ~]# mongostat --port 27000 5 --discover
host insert query update delete getmore command dirty used flushes mapped vsize res faults qrw arw net_in net_out conn set repl time
localhost:27000 352 *0 *0 *0 0 704|0 0 0B 356M 32.0M 0 0|0 0|0 224k 140k 10 RTR Jan 15 10:52:32.046

host insert query update delete getmore command dirty used flushes mapped vsize res faults qrw arw net_in net_out conn set repl time
192.168.10.81:27017 *0 *0 *0 *0 0 2|0 0.3% 0.8% 0 1.90G 133M n/a 0|0 1|0 417b 9.67k 23 shard001 SEC Jan 15 10:52:32.061
192.168.10.81:27018 *0 *0 *0 *0 0 3|0 0.3% 0.8% 1 1.93G 132M n/a 0|0 1|0 1.39k 11.0k 28 shard001 PRI Jan 15 10:52:32.067
192.168.10.81:27019 *0 *0 *0 *0 0 2|0 0.3% 0.8% 0 1.95G 148M n/a 0|0 1|0 942b 10.2k 26 shard001 SEC Jan 15 10:52:32.070
192.168.10.82:27017 352 *0 *0 *0 407 1192|0 2.5% 11.7% 1 1.99G 180M n/a 0|0 1|0 1.52m 1.15m 29 shard002 PRI Jan 15 10:52:32.075
192.168.10.82:27018 *352 *0 *0 *0 409 441|0 4.5% 8.9% 0 1.96G 163M n/a 0|0 1|0 566k 650k 25 shard002 SEC Jan 15 10:52:32.085
192.168.10.82:27019 *352 *0 *0 *0 0 2|0 4.4% 9.7% 0 1.92G 168M n/a 0|0 1|0 406b 9.51k 24 shard002 SEC Jan 15 10:52:32.093
192.168.10.83:27017 *0 *0 *0 *0 0 1|0 0.2% 0.6% 1 1.89G 130M n/a 0|0 1|0 342b 9.17k 22 shard003 SEC Jan 15 10:52:32.099
192.168.10.83:27018 *0 *0 *0 *0 0 2|0 0.2% 0.6% 0 1.95G 139M n/a 0|0 1|0 877b 9.92k 28 shard003 PRI Jan 15 10:52:32.107
192.168.10.83:27019 *0 *0 *0 *0 0 1|0 0.2% 0.6% 0 1.90G 133M n/a 0|0 1|0 342b 9.17k 21 shard003 SEC Jan 15 10:52:32.113
localhost:27000 365 *0 *0 *0 0 731|0 0 0B 356M 32.0M 0 0|0 0|0 233k 145k 10 RTR Jan 15 10:52:37.047
```

Sharding on the key `id` with the hashed strategy fails at first, because there is no hashed index on `id` yet. After you create the index, the same command succeeds:

```javascript
// Shard on id with a hashed key: fails, because id has no hashed index yet
sh.shardCollection("lijiamandb.user", {"id" : "hashed"})
{
    "ok" : 0.0,
    "errmsg" : "Please create an index that starts with the proposed shard key before sharding the collection",
    "code" : 72,
    "codeName" : "InvalidOptions",
    "operationTime" : Timestamp(1610679762, 4),
    "$clusterTime" : {
        "clusterTime" : Timestamp(1610679762, 4),
        "signature" : {
            "hash" : { "$binary" : "AAAAAAAAAAAAAAAAAAAAAAAAAAA=", "$type" : "00" },
            "keyId" : NumberLong(0)
        }
    }
}

// Create the hashed index manually
db.user.createIndex({"id" : "hashed"})

// Check the indexes
db.user.getIndexes()
[
    { "v" : 2, "key" : { "_id" : 1 }, "name" : "_id_", "ns" : "lijiamandb.user" },
    { "v" : 2, "key" : { "id" : "hashed" }, "name" : "id_hashed", "ns" : "lijiamandb.user" }
]

// Finally, run the shardCollection command again
sh.shardCollection("lijiamandb.user", {"id" : "hashed"})
```
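Inserting 100,000 documents with one `insert()` call each sends 100,000 separate commands through mongos. Batching them with `insertMany()`, which the mongo shell provides, cuts down the number of round trips. The following minimal sketch shows the batching logic in Node.js. `makeUserBatch` and the batch size of 1,000 are assumptions for illustration. The actual `db.user.insertMany(batch)` call is left as a comment so that the sketch runs on its own.

```javascript
// Hypothetical helper: build `count` user documents starting at id `startId`,
// shaped like the documents in the insert loop above.
function makeUserBatch(startId, count) {
  const docs = [];
  for (let i = startId; i < startId + count; i++) {
    docs.push({
      id: i,
      name: "name" + i,
      age: Math.floor(Math.random() * 120),
      created: new Date(),
    });
  }
  return docs;
}

// 100,000 documents in batches of 1,000 (the batch size is an arbitrary choice).
const TOTAL = 100000;
const BATCH = 1000;
let inserted = 0;
for (let start = 1; start <= TOTAL; start += BATCH) {
  const batch = makeUserBatch(start, Math.min(BATCH, TOTAL - start + 1));
  // In the mongo shell this would be: db.user.insertMany(batch);
  inserted += batch.length;
}
console.log(inserted); // 100000
```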

The sharded cluster is now up and running. Next, we will look at how to choose a shard key.

[End]
