OmAgent


https://github.com/om-ai-lab/OmAgent

OmAgent is an open-source agent framework designed to streamline the development of on-device multimodal agents. Our goal is to enable agents that empower a wide range of hardware devices, from smartphones, smart wearables (e.g. glasses), and IP cameras to futuristic robots. To that end, OmAgent creates an abstraction over various types of devices and simplifies connecting them to state-of-the-art multimodal foundation models and agent algorithms, so that anyone can build interesting on-device agents. OmAgent also focuses on optimizing the end-to-end computing pipeline in order to provide real-time user interaction out of the box.

In summary, the key features of OmAgent include:

Easy Connection to Diverse Devices: we make it simple to connect to physical devices such as phones and glasses, so that agent and model developers can build applications that run on devices rather than on web pages. We welcome contributions that add support for more devices!

Speed-optimized SOTA Multimodal Models: OmAgent integrates state-of-the-art (SOTA) commercial and open-source foundation models to give application developers the most powerful intelligence available. OmAgent also streamlines audio/video processing and computation to enable natural, fluid interaction between the device and its users.

SOTA Multimodal Agent Algorithms: OmAgent provides an easy workflow orchestration interface that researchers and developers can use to implement the latest agent algorithms, e.g. ReAct, DnC and more. We welcome contributions of new agent algorithms that enable more complex problem solving.

Scalability and Flexibility: OmAgent provides an intuitive interface for building scalable agents, enabling developers to construct agents that are tailored to specific roles and adaptable to a variety of applications.

Architecture

The design architecture of OmAgent adheres to three fundamental principles:

- Graph-based workflow orchestration;

- Native multimodality;

- Device-centricity.

With OmAgent, you can build your own custom intelligent agent program.

To understand OmAgent more deeply, the key terms are explained below:

Devices: Central to OmAgent's vision is empowering intelligent hardware devices with AI agents, which makes devices a core part of OmAgent. With the downloadable mobile app we provide, your phone can become the first device node connected to OmAgent. Devices take in environmental input, such as images and sounds, and may provide responsive feedback. We provide a streamlined backend process that handles the app-side business logic, so developers can focus on building the agent's logic. See client for more details.

Workflow: In the OmAgent framework, the structure of an intelligent agent is expressed as a graph. Developers are free to define, configure, and order the nodes as they see fit. OmAgent currently uses Conductor as its workflow orchestration engine. Conductor supports complex control flow such as switch-case, fork-join, and do-while. See workflow for more details.
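For illustration, the sketch below builds a Conductor-style workflow definition as a Python dict and serializes it to JSON. It assumes a hypothetical outfit-recommendation flow. The task names (`input_interface`, `ask_clarification`, `generate_outfit`), the workflow name, and the `mode` workflow input are placeholders, not the definitions used in OmAgent's own examples. The field names (`taskReferenceName`, `decisionCases`, `defaultCase`, `loopCondition`, `loopOver`) follow Conductor's JSON workflow schema as we understand it. Check the Conductor documentation for your version before registering anything like this.

```python
import json


def build_outfit_workflow() -> dict:
    """Build a hypothetical Conductor workflow with a SWITCH and a DO_WHILE.

    All task names are illustrative placeholders (assumptions), not OmAgent's.
    """
    # SIMPLE tasks are executed by workers that poll Conductor for them.
    read_input = {
        "name": "input_interface",
        "taskReferenceName": "input_interface_ref",
        "type": "SIMPLE",
    }
    ask = {
        "name": "ask_clarification",
        "taskReferenceName": "ask_clarification_ref",
        "type": "SIMPLE",
    }
    # DO_WHILE repeats `loopOver` while the JavaScript condition is true;
    # here it stops after three iterations.
    clarify_loop = {
        "name": "clarify_loop",
        "taskReferenceName": "clarify_loop_ref",
        "type": "DO_WHILE",
        "loopCondition": "if ($.clarify_loop_ref['iteration'] < 3) { true; } else { false; }",
        "loopOver": [ask],
    }
    # SWITCH picks a branch based on the value of `switchCaseValue`.
    route = {
        "name": "route_request",
        "taskReferenceName": "route_request_ref",
        "type": "SWITCH",
        "evaluatorType": "value-param",
        "expression": "switchCaseValue",
        "inputParameters": {"switchCaseValue": "${workflow.input.mode}"},
        "decisionCases": {"interactive": [clarify_loop]},
        "defaultCase": [],  # non-interactive requests skip the loop
    }
    generate = {
        "name": "generate_outfit",
        "taskReferenceName": "generate_outfit_ref",
        "type": "SIMPLE",
    }
    return {
        "name": "outfit_sketch",
        "version": 1,
        "schemaVersion": 2,
        "tasks": [read_input, route, generate],
    }


if __name__ == "__main__":
    print(json.dumps(build_outfit_workflow(), indent=2))
```

A fork-join is expressed the same way, with a `FORK_JOIN` task followed by a `JOIN` task.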

Task and Worker: Task and Worker are the two key concepts in developing an OmAgent workflow. A Worker implements the actual operational logic of a workflow node, whereas Tasks define the orchestration logic of the workflow. Tasks fall into two categories:

- Operators, which manage workflow logic (e.g., looping, branching).
- Simple Tasks, which represent nodes customized by developers.

Each Simple Task is bound to a Worker. When the workflow reaches a given Simple Task, the task is dispatched to the corresponding Worker for execution. See task and worker for more details.
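The dispatch relationship between Tasks and Workers can be illustrated with a small, self-contained Python model. This is not OmAgent's API. The `worker` decorator, the `WORKERS` registry, the `run` function, and the task dictionaries are all hypothetical. In a real deployment the Conductor server schedules tasks and workers poll for them. The sketch only shows the idea: Operators (here a single `SWITCH`) decide control flow, while each Simple Task is handed to the Worker registered under its name.

```python
from typing import Callable, Dict, List

# Hypothetical registry mapping a Simple Task name to its Worker function.
WORKERS: Dict[str, Callable[[dict], dict]] = {}


def worker(name: str):
    """Decorator that registers a function as the Worker for a Simple Task."""
    def register(fn: Callable[[dict], dict]) -> Callable[[dict], dict]:
        WORKERS[name] = fn
        return fn
    return register


@worker("describe_image")
def describe_image(ctx: dict) -> dict:
    # A real Worker would call a multimodal model here.
    return {"caption": f"an image of {ctx['subject']}"}


@worker("answer")
def answer(ctx: dict) -> dict:
    return {"answer": f"It shows {ctx['caption']}."}


def run(tasks: List[dict], ctx: dict) -> dict:
    """Walk a task list: Simple Tasks go to their Worker, Operators steer control flow."""
    for task in tasks:
        if task["type"] == "SIMPLE":
            ctx.update(WORKERS[task["name"]](ctx))
        elif task["type"] == "SWITCH":
            branch = task["cases"].get(ctx[task["key"]], task.get("default", []))
            run(branch, ctx)
        else:
            raise ValueError(f"unsupported operator: {task['type']}")
    return ctx


# Hypothetical flow: describe the image, then answer only in "qa" mode.
FLOW = [
    {"type": "SIMPLE", "name": "describe_image"},
    {"type": "SWITCH", "key": "mode", "cases": {"qa": [{"type": "SIMPLE", "name": "answer"}]}},
]

if __name__ == "__main__":
    print(run(FLOW, {"subject": "a red jacket", "mode": "qa"})["answer"])
```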

Modularity: Break down the agent's functionality into discrete workers, each responsible for a specific task.

Reusability: Design workers to be reusable across different workflows and agents.

Scalability: Use workflows to scale the agent's capabilities by adding more workers or adjusting the workflow sequence.

Interoperability: Workers can interact with various backends, such as LLMs, databases, or APIs, allowing agents to perform complex operations.

Asynchronous Execution: The workflow engine and task handler manage the execution asynchronously, enabling efficient resource utilization.
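To illustrate asynchronous execution, the sketch below uses Python's `asyncio` to run several hypothetical workers that pull tasks from a shared queue concurrently. The queue stands in for the workflow engine's task queue, and `asyncio.sleep` stands in for an I/O-bound call such as an LLM request. Neither reflects how OmAgent or Conductor is actually implemented.

```python
import asyncio


async def worker(name: str, queue: asyncio.Queue, results: dict) -> None:
    """Hypothetical worker loop: take a task, execute it, record the result."""
    while True:
        task_id, payload = await queue.get()
        try:
            # Simulate I/O-bound work such as an LLM or database call.
            await asyncio.sleep(0.01)
            results[task_id] = f"{name} handled {payload}"
        finally:
            queue.task_done()


async def main(n_workers: int = 3, n_tasks: int = 6) -> dict:
    queue: asyncio.Queue = asyncio.Queue()
    results: dict = {}
    for i in range(n_tasks):
        queue.put_nowait((i, f"task-{i}"))
    workers = [asyncio.create_task(worker(f"w{k}", queue, results)) for k in range(n_workers)]
    await queue.join()  # wait until every queued task has been marked done
    for w in workers:
        w.cancel()  # workers loop forever, so stop them explicitly
    await asyncio.gather(*workers, return_exceptions=True)
    return results


if __name__ == "__main__":
    print(asyncio.run(main()))
```

While one worker is waiting on its simulated call, the event loop lets the others proceed. This is the resource-utilization benefit the principle refers to.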

We provide example projects that demonstrate how to build intelligent agents with OmAgent. A complete list is in the examples directory. We recommend working through them in the following order:

step1_simpleVQA illustrates the creation of a simple multimodal VQA agent with OmAgent.

step2_outfit_with_switch demonstrates how to build an agent with switch-case branches using OmAgent.

step3_outfit_with_loop shows the construction of an agent incorporating loops using OmAgent.

step4_outfit_with_ltm exemplifies using OmAgent to create an agent equipped with long-term memory.

dnc_loop demonstrates the development of an agent utilizing the DnC algorithm to tackle complex problems.

video_understanding showcases the creation of a video understanding agent for interpreting video content using OmAgent.

The API documentation is available here.

conductor

https://github.com/conductor-oss/conductor

Conductor (or Netflix Conductor) is a microservices orchestration engine for distributed and asynchronous workflows. It empowers developers to create workflows that define interactions between services, databases, and other external systems.

Conductor is designed to enable flexible, resilient, and scalable workflows. It allows you to compose services into complex workflows without coupling them tightly, simplifying orchestration across cloud-native applications and enterprise systems alike.

- Resilience and Error Handling: Conductor enables automatic retries and fallback mechanisms.

- Scalability: Built to scale with complex workflows in high-traffic environments.

- Observability: Provides monitoring and debugging capabilities for workflows.

- Ease of Integration: Seamlessly integrates with microservices, external APIs, and legacy systems.

- Workflow as Code: Define workflows in JSON and manage them with versioning.

- Rich Task Types: Includes task types like HTTP, JSON, Lambda, Sub Workflow, and Event tasks, allowing for flexible workflow definitions.

- Dynamic Workflow Management: Workflows can evolve independently of the underlying services.

- Built-in UI: A customizable UI is available to monitor and manage workflows.

- Flexible Persistence and Queue Options: Use Redis, MySQL, Postgres, and more.
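As an example of the retry support, a Conductor task definition can declare how a failed task is retried. The sketch below builds one as a Python dict. The field names (`retryCount`, `retryLogic`, `retryDelaySeconds`, `timeoutSeconds`, `timeoutPolicy`, `responseTimeoutSeconds`, `ownerEmail`) are taken from Conductor's task-definition schema as we understand it. The task name, email address, and numeric values are illustrative assumptions.

```python
import json


def retrying_task_def(name: str, retries: int = 3) -> dict:
    """Hypothetical Conductor task definition with automatic retries."""
    return {
        "name": name,
        "retryCount": retries,                # retry a failed task up to this many times
        "retryLogic": "EXPONENTIAL_BACKOFF",  # alternatives include FIXED and LINEAR_BACKOFF
        "retryDelaySeconds": 2,               # base delay between retries
        "timeoutSeconds": 300,                # overall time limit for the task
        "timeoutPolicy": "TIME_OUT_WF",       # fail the workflow when the task times out
        "responseTimeoutSeconds": 60,         # worker must respond within this window
        "ownerEmail": "dev@example.com",      # placeholder; some servers require an owner
    }


if __name__ == "__main__":
    # Serialize as a JSON array of task definitions.
    print(json.dumps([retrying_task_def("describe_image")], indent=2))
```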

OmAgent: A Multimodal Agent Framework for Complex Video Understanding

https://www.dongaigc.com/a/omagent-multimodal-agent-video-understanding

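Introduction to OmAgent

OmAgent is a sophisticated multimodal agent system that uses multimodal large language models (MLLMs) and other multimodal algorithms to accomplish interesting tasks. The project includes omagent_core, a lightweight agent framework designed specifically for multimodal challenges.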

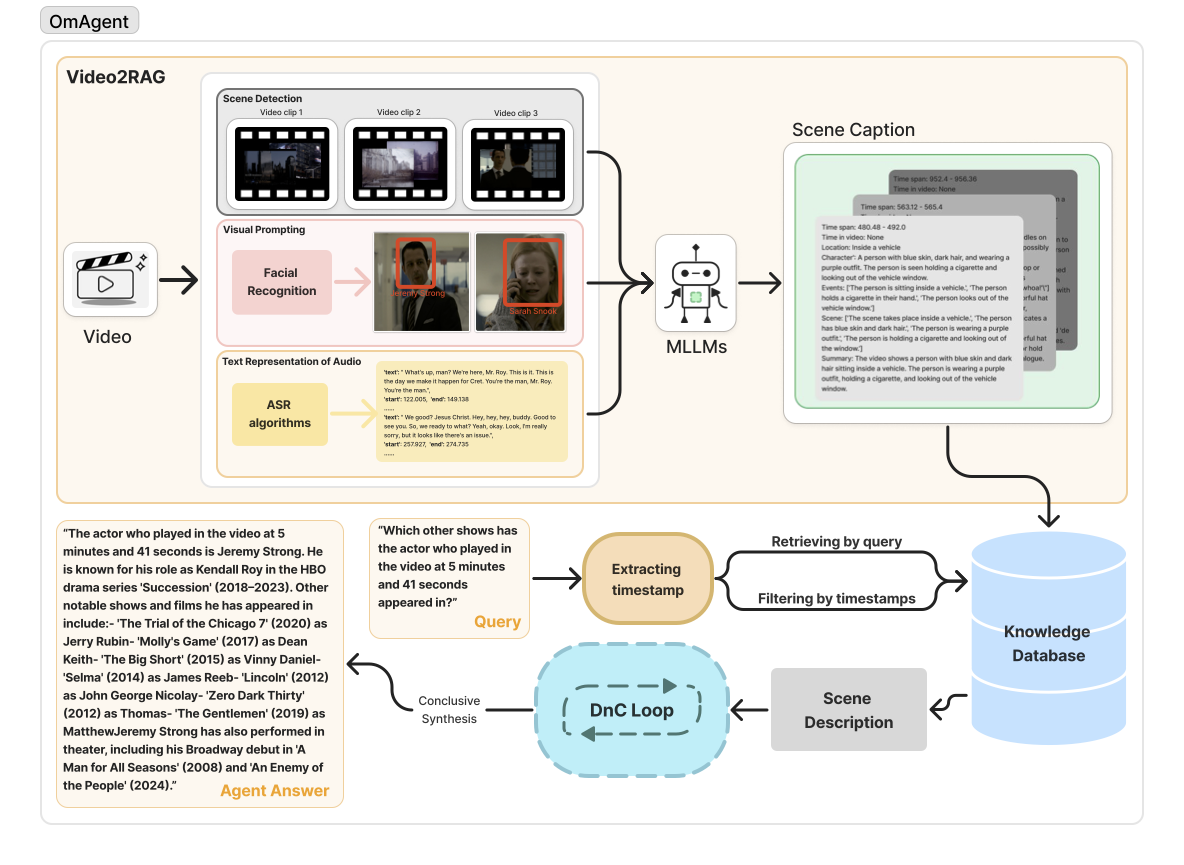

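At the heart of the OmAgent project is a complex long-video understanding system, although developers can also use the framework to implement their own ideas. OmAgent consists of three core components: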

- Video2RAG

- DnCLoop

- Rewinder Tool

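Next, we take a closer look at how these components work and how they are applied to video understanding tasks.

🎥 Video2RAG: A New Approach to Video Understanding

The core idea behind Video2RAG is to turn long-video understanding into a multimodal retrieval-augmented generation (RAG) task. The advantage is that it lifts the limit on video length: only the relevant clips are retrieved at answer time, so the system can handle much longer videos.

However, this preprocessing can also lose a large amount of video detail. To address this, OmAgent introduces the Rewinder Tool, which we discuss below.

The Video2RAG workflow is roughly as follows: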

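- Split the long video into multiple short clips

- Extract and encode features from each clip

- Store the encoded features in a vector database

- At query time, retrieve the video clips relevant to the question

- Generate the answer from the information in the retrieved clips

This approach lets OmAgent process long videos efficiently and quickly locate the relevant video content when answering questions.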
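The five Video2RAG steps above can be sketched end to end in plain Python. Everything here is a stand-in chosen for illustration:

- Each clip is represented by a text caption.
- A bag-of-words vector replaces a real multimodal encoder.
- An in-memory list replaces the vector database.
- The final step only assembles a prompt instead of calling an MLLM.

The names `split_video`, `ClipIndex`, and `build_prompt` are hypothetical.

```python
import math
from collections import Counter
from typing import List, Tuple

Clip = Tuple[float, float]  # (start_s, end_s)


def split_video(duration_s: float, clip_s: float) -> List[Clip]:
    """Step 1: cut a long video into fixed-length clips."""
    clips, start = [], 0.0
    while start < duration_s:
        clips.append((start, min(start + clip_s, duration_s)))
        start += clip_s
    return clips


def embed(text: str) -> Counter:
    """Step 2 stand-in: bag-of-words vector instead of a multimodal encoder."""
    return Counter(text.lower().split())


def cosine(a: Counter, b: Counter) -> float:
    dot = sum(count * b[tok] for tok, count in a.items())
    norm = math.sqrt(sum(v * v for v in a.values())) * math.sqrt(sum(v * v for v in b.values()))
    return dot / norm if norm else 0.0


class ClipIndex:
    """Step 3 stand-in for a vector database."""

    def __init__(self) -> None:
        self.rows: List[Tuple[Clip, Counter, str]] = []

    def add(self, clip: Clip, caption: str) -> None:
        self.rows.append((clip, embed(caption), caption))

    def search(self, query: str, k: int = 1) -> List[Tuple[Clip, str]]:
        """Step 4: return the k clips most similar to the question."""
        q = embed(query)
        ranked = sorted(self.rows, key=lambda row: cosine(q, row[1]), reverse=True)
        return [(clip, caption) for clip, _, caption in ranked[:k]]


def build_prompt(index: ClipIndex, question: str, k: int = 1) -> str:
    """Step 5: put the retrieved clips into the prompt an MLLM would answer."""
    context = "; ".join(f"[{s:.0f}-{e:.0f}s] {cap}" for (s, e), cap in index.search(question, k))
    return f"Context: {context}\nQuestion: {question}"


# Usage with made-up captions for a 90-second video.
index = ClipIndex()
captions = ["a man walks a dog in a park", "a chef cooks pasta in a kitchen", "kids play football on grass"]
for clip, caption in zip(split_video(90, 30), captions):
    index.add(clip, caption)
print(build_prompt(index, "what does the chef cook"))
```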

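🧩 DnCLoop: Solving Complex Tasks with Divide and Conquer

DnCLoop (Divide-and-Conquer Loop) is OmAgent's core processing logic, inspired by the classic divide-and-conquer algorithm paradigm. It iteratively refines a complex problem into a task tree until the complex task has been reduced to a series of simple, solvable tasks.

The DnCLoop workflow is as follows: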

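- Receive a complex task

- Analyze the task and decompose it into several subtasks

- Evaluate each subtask to decide whether it needs further decomposition

- Solve the subtasks that can be handled directly

- Apply the steps above recursively until every subtask is solved

- Combine the subtask results into the final solution

This approach lets OmAgent handle highly complex tasks, such as multi-turn question answering over long videos and video summarization. By breaking a large task into smaller ones, the system can use multimodal models more effectively, improving both efficiency and accuracy.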
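The recursive loop described above can be sketched as a generic divide-and-conquer routine over a task tree. In OmAgent, the decisions (whether a task is atomic, how to split it, how to solve and merge it) are made by models. Here they are passed in as plain Python functions. The `PLAN` table and the `max_depth` cutoff are hypothetical stand-ins added for illustration.

```python
from dataclasses import dataclass, field
from typing import Callable, List, Optional


@dataclass
class TaskNode:
    goal: str
    children: List["TaskNode"] = field(default_factory=list)
    result: Optional[str] = None


def dnc(
    node: TaskNode,
    is_atomic: Callable[[str], bool],
    divide: Callable[[str], List[str]],
    solve: Callable[[str], str],
    merge: Callable[[str, List[str]], str],
    depth: int = 0,
    max_depth: int = 3,
) -> str:
    """Expand `node` into a task tree, solve the leaves, and merge results upward."""
    # Solve directly if the task is simple enough or the tree is already deep.
    if is_atomic(node.goal) or depth >= max_depth:
        node.result = solve(node.goal)
        return node.result
    # Otherwise split into subtasks and recurse on each one.
    node.children = [TaskNode(goal) for goal in divide(node.goal)]
    partial = [dnc(c, is_atomic, divide, solve, merge, depth + 1, max_depth) for c in node.children]
    node.result = merge(node.goal, partial)
    return node.result


# Hypothetical decomposition table standing in for an LLM planner.
PLAN = {
    "summarize the video": ["summarize minutes 0-5", "summarize minutes 5-10"],
    "summarize minutes 0-5": ["describe clip 0-2.5", "describe clip 2.5-5"],
}


def is_atomic(goal: str) -> bool:
    return goal not in PLAN


def divide(goal: str) -> List[str]:
    return PLAN[goal]


def solve(goal: str) -> str:
    return f"<{goal}>"  # placeholder for a model call


def merge(goal: str, results: List[str]) -> str:
    return " + ".join(results)


root = TaskNode("summarize the video")
print(dnc(root, is_atomic, divide, solve, merge))
```

The `max_depth` guard is a design choice of this sketch. It stops the recursion even if the planner keeps splitting.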

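⏪ Rewinder Tool: No Detail Left Behind

To counter the information loss that can occur during Video2RAG, OmAgent provides a "progress bar" tool called Rewinder. The agent can invoke this tool autonomously to revisit any part of the video and retrieve the details it needs.

Key features of the Rewinder Tool include: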

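- Autonomous operation: the agent invokes Rewinder on its own whenever it needs to

- Precise positioning: it can quickly jump to a specific point in the video

- Detail extraction: it can extract additional detail from the located point

- Contextual understanding: it draws on the surrounding content to improve accuracy

With the Rewinder Tool, OmAgent processes long videos efficiently while still being able to dig into video details when needed, yielding more complete and accurate answers.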
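Below is a minimal sketch of a Rewinder-style tool. It assumes the original video is still available as a list of timestamped frames, represented here by text descriptions. The `Rewinder` class, its `rewind` method, and the `context_s` padding parameter are hypothetical. A real tool would decode frames and feed them to a multimodal model. The padding mirrors the "contextual understanding" point: it also returns frames from just before and after the requested span.

```python
from typing import List, Tuple

Frame = Tuple[float, str]  # (timestamp_s, description)


class Rewinder:
    """Hypothetical tool: re-read raw frames for a time span the agent asks for."""

    def __init__(self, frames: List[Frame]) -> None:
        self.frames = sorted(frames)  # keep frames in time order

    def rewind(self, start_s: float, end_s: float, context_s: float = 0.0) -> List[Frame]:
        """Return frames in [start_s - context_s, end_s + context_s], clamped at 0."""
        if end_s < start_s:
            raise ValueError("end_s must not be earlier than start_s")
        lo = max(0.0, start_s - context_s)
        hi = end_s + context_s
        return [(t, desc) for t, desc in self.frames if lo <= t <= hi]


# Usage: a 60-second video sampled every 5 seconds.
video = Rewinder([(float(t), f"frame at {t}s") for t in range(0, 60, 5)])
for t, desc in video.rewind(20, 25, context_s=5):
    print(t, desc)
```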

OmAgent: A Multi-modal Agent Framework for Complex Video Understanding with Task Divide-and-Conquer

https://arxiv.org/pdf/2406.16620
