mlflow


https://mlflow.org/#core-concepts

ML and GenAI made simple: Build better models and generative AI apps on a unified, end-to-end, open source MLOps platform.

https://github.com/mlflow/mlflow

MLflow is an open-source platform, purpose-built to assist machine learning practitioners and teams in handling the complexities of the machine learning process. MLflow focuses on the full lifecycle of machine learning projects, ensuring that each phase is manageable, traceable, and reproducible.

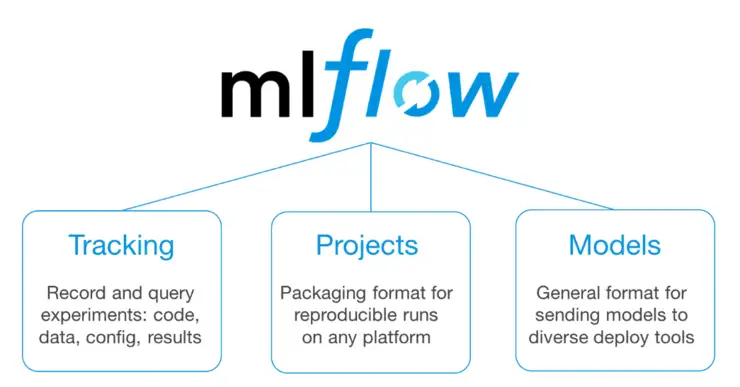

The core components of MLflow are:

- Experiment Tracking 📝: A set of APIs to log models, params, and results in ML experiments and compare them using an interactive UI.

- Model Packaging 📦: A standard format for packaging a model and its metadata, such as dependency versions, ensuring reliable deployment and strong reproducibility.

- Model Registry 💾: A centralized model store, set of APIs, and UI, to collaboratively manage the full lifecycle of MLflow Models.

- Serving 🚀: Tools for seamless model deployment to batch and real-time scoring on platforms like Docker, Kubernetes, Azure ML, and AWS SageMaker.

- Evaluation 📊: A suite of automated model evaluation tools, seamlessly integrated with experiment tracking to record model performance and visually compare results across multiple models.

- Observability 🔍: Tracing integrations with various GenAI libraries and a Python SDK for manual instrumentation, offering a smoother debugging experience and supporting online monitoring.

Quickstart: Compare runs, choose a model, and deploy it to a REST API

https://mlflow.org/docs/latest/getting-started/quickstart-2/index.html

In this quickstart, you will:

- Run a hyperparameter sweep on a training script

- Compare the results of the runs in the MLflow UI

- Choose the best run and register it as a model

- Deploy the model to a REST API

- Build a container image suitable for deployment to a cloud platform

As an ML Engineer or MLOps professional, you can use MLflow to compare, share, and deploy the best models produced by the team. In this quickstart, you will use the MLflow Tracking UI to compare the results of a hyperparameter sweep, choose the best run, and register it as a model. Then, you will deploy the model to a REST API. Finally, you will create a Docker container image suitable for deployment to a cloud platform.
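Once a registered model is served locally, for example with `mlflow models serve -m models:/<name>/<version> -p 5000`, the scoring server accepts JSON POST requests at `/invocations`. The sketch below only builds such a request with the Python standard library, using the `dataframe_split` input format of MLflow 2.x; the feature names `x1`/`x2`, the host and the port are assumptions to adapt to your own model.

```python
import json
import urllib.request

def build_scoring_request(columns, rows, url="http://127.0.0.1:5000/invocations"):
    """Build (but do not send) a POST request whose JSON body uses
    MLflow's "dataframe_split" input format."""
    payload = {
        "dataframe_split": {
            "columns": list(columns),
            "data": [list(row) for row in rows],
        }
    }
    return urllib.request.Request(
        url,
        data=json.dumps(payload).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )

# Hypothetical feature names; use the columns your model was trained on.
req = build_scoring_request(["x1", "x2"], [[1.0, 2.0], [3.0, 4.0]])
print(req.get_method(), req.full_url)
print(req.data.decode("utf-8"))

# With a scoring server running, the request could be sent like this:
# with urllib.request.urlopen(req) as resp:
#     print(json.loads(resp.read()))
```

Building the request separately from sending it keeps the payload format easy to inspect before a server is available; the same JSON body can also be posted with `curl`.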

MLflow Tracking Quickstart

https://mlflow.org/docs/latest/getting-started/intro-quickstart/index.html

A first try at setting up MLflow, a machine learning experiment management platform

https://zhuanlan.zhihu.com/p/161641400

https://www.zhihu.com/question/280162556

Accelerating Production Machine Learning with MLflow

https://www.bilibili.com/video/BV1SE411a7Gi/?spm_id_from=333.337.search-card.all.click&vd_source=57e261300f39bf692de396b55bf8c41b

MLflow integration for Ultralytics YOLO

https://docs.ultralytics.com/zh/integrations/mlflow/#what-metrics-and-parameters-can-i-log-using-mlflow-with-ultralytics-yolo

DEMO

https://github.com/fanqingsong/predicting-pulsar-stars

https://github.com/fanqingsong/mlflow-demo

浙公网安备 33010602011771号
