Agent runtime / AgentScope / AgentUniverse

GoEx: A Runtime for Autonomous LLM Applications

https://gorilla.cs.berkeley.edu/blogs/10_gorilla_exec_engine.html

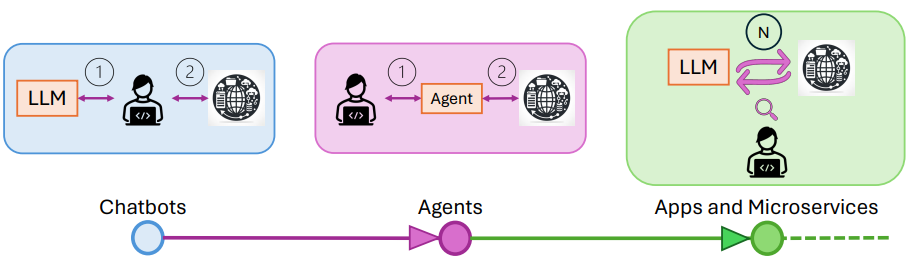

- Moving from Chatbots to Autonomous Agents 🚀

In the example above, the LLM is autonomously using microservices, services, and applications, with little human supervision. Different autonomous LLM-powered applications can be built on top of the GoEx engine, such as a Slack bot that can send messages, a Spotify bot that can create playlists, or a Dropbox bot that can create folders and files. However, in designing such systems, several critical challenges must be addressed:

- Hallucination, stochasticity, and unpredictability. LLM-based applications place an unpredictable and hallucination-prone LLM at the helm of a system traditionally reliant on trust. Currently, services and APIs assume a human-in-the-loop, or clear specifications to govern how and which tools are used in an application. For example, the user clicks the “Send” button after confirming the recipient and body of the email. In contrast, an LLM-powered assistant may send an email that goes against the user's intentions, and may even perform actions unintended by the user.

- Unreliability. Because LLMs are unpredictable and impossible to test comprehensively, it is difficult for a user to trust an off-the-shelf LLM. However, the growing utility of LLM-based systems means that we need mechanisms for expressing the safety-utility tradeoff to developers and users.

- Delayed feedback and downstream visibility. Lastly, from a system-design perspective, unlike today's chatbots and agents, the LLM-powered systems of the future will not receive immediate human feedback. This means that the intermediate state of the system is not immediately visible to the user, and often only downstream effects are visible. An LLM-powered assistant may interact with many other tools (e.g., querying a database, browsing the web, or filtering push notifications) before composing and sending an email. Such interactions before the email is sent are invisible to the user.

We are moving towards a world where LLM-powered applications are evolving from chatbots to autonomous LLM-agents interacting with external applications and services with minimal and punctuated human supervision. GoEx offers a new way to interface with LLMs, providing abstractions for authorization, execution, and error handling.
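To make "authorization, execution, and error handling" concrete, here is a toy sketch in which each LLM-proposed action carries a compensating undo step, risky actions must pass an authorization check, and a failure rolls back everything already done. The `Action` and `Runtime` classes and the folder/email stubs are hypothetical illustrations of the three abstractions named above; they are not GoEx's actual API.

```python
from dataclasses import dataclass, field
from typing import Callable, List


@dataclass
class Action:
    """A hypothetical LLM-proposed action paired with a compensating undo step."""
    name: str
    run: Callable[[], str]
    undo: Callable[[], None]
    risky: bool = False  # risky actions need explicit authorization


@dataclass
class Runtime:
    """Toy runtime: authorize, execute, and roll back on failure (not GoEx's API)."""
    authorize: Callable[[Action], bool]
    log: List[str] = field(default_factory=list)

    def execute(self, actions: List[Action]) -> bool:
        done: List[Action] = []
        for action in actions:
            if action.risky and not self.authorize(action):
                self.log.append(f"denied {action.name}")
                self._rollback(done)
                return False
            try:
                result = action.run()
            except Exception as exc:
                self.log.append(f"error in {action.name}: {exc}")
                self._rollback(done)
                return False
            self.log.append(f"ran {action.name}: {result}")
            done.append(action)
        return True

    def _rollback(self, done: List[Action]) -> None:
        # Undo completed actions in reverse order so later effects are reverted first.
        for action in reversed(done):
            action.undo()
            self.log.append(f"undid {action.name}")


folders = set()


def make_folder() -> str:
    folders.add("reports")
    return "created reports"


def send_email() -> str:
    raise RuntimeError("SMTP unavailable")  # simulated downstream failure


create = Action("create_folder", make_folder, lambda: folders.discard("reports"))
email = Action("send_email", send_email, lambda: None, risky=True)

rt = Runtime(authorize=lambda action: True)
ok = rt.execute([create, email])
print(ok, folders)  # False set()
```

Because the email step fails after the folder was created, the runtime undoes the folder, so no partial side effect survives. Passing `authorize=lambda action: False` instead stops at the risky step and rolls back the same way.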

A natural language interface for computers

https://github.com/OpenInterpreter/open-interpreter

Open Interpreter lets LLMs run code (Python, Javascript, Shell, and more) locally. You can chat with Open Interpreter through a ChatGPT-like interface in your terminal by running `$ interpreter` after installing. This provides a natural-language interface to your computer's general-purpose capabilities:

- Create and edit photos, videos, PDFs, etc.

- Control a Chrome browser to perform research

- Plot, clean, and analyze large datasets

- ...etc.
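The core mechanism, "let the LLM run code locally", can be sketched as: take a code string the model produced, gate it behind a user confirmation, run it in a local subprocess, and capture the output so it can be fed back to the model. `run_generated_code` is a hypothetical helper, not Open Interpreter's API; the real tool does ask the user to confirm before executing code by default, which the `confirm` callback imitates.

```python
import subprocess
import sys
from typing import Callable


def run_generated_code(code: str, confirm: Callable[[str], bool], timeout: float = 30.0) -> str:
    """Run model-generated Python locally after an approval gate (hypothetical helper)."""
    if not confirm(code):
        return "[skipped by user]"
    proc = subprocess.run(
        [sys.executable, "-c", code],  # same interpreter, separate process
        capture_output=True,
        text=True,
        timeout=timeout,
    )
    # Return stdout and stderr together so errors can also be shown to the model.
    return proc.stdout + proc.stderr


# Pretend this string came back from the LLM.
llm_code = "print(sum(range(1, 11)))"
output = run_generated_code(llm_code, confirm=lambda code: True)
print(output.strip())  # 55
```

Running in a subprocess keeps a crash in generated code from taking down the chat loop; the `timeout` is an arbitrary local choice, since local execution has no hosted runtime cap.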

https://www.acorn.io/resources/learning-center/open-interpreter

Open Interpreter vs OpenAI’s Code Interpreter

OpenAI’s Code Interpreter is a tool integrated with GPT-3.5 and GPT-4, which can perform various computational tasks using natural language. However, it operates in a hosted, closed-source environment with several restrictions, such as limited access to pre-installed packages and the absence of internet connectivity. Additionally, it has a runtime limit of 120 seconds and a file upload cap of 100MB, which can be limiting for extensive or complex tasks.

Open Interpreter offers a more flexible solution by running directly on your local machine. This local execution provides full access to the internet and allows the use of any package or library needed for your projects. With no restrictions on runtime or file size, Open Interpreter is well-suited for handling large datasets and lengthy computations. Its open-source nature ensures that you have complete control over the tool and your data. It also supports multiple large language models (LLMs) beyond those offered by OpenAI.

agentscope

https://github.com/modelscope/agentscope

https://zhuanlan.zhihu.com/p/695452823

Start building LLM-empowered multi-agent applications in an easier way.

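AgentScope is a multi-agent development platform open-sourced by Alibaba in February 2024. Its research paper describes in detail the platform's architecture, key techniques, fault-tolerance mechanisms, support for multimodal applications, and actor-based distributed framework. The paper also presents several application case studies that demonstrate AgentScope's potential in different scenarios.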

Basic Concepts

AgentScope's basic concepts comprise four parts: messages, agents, services, and workflows.

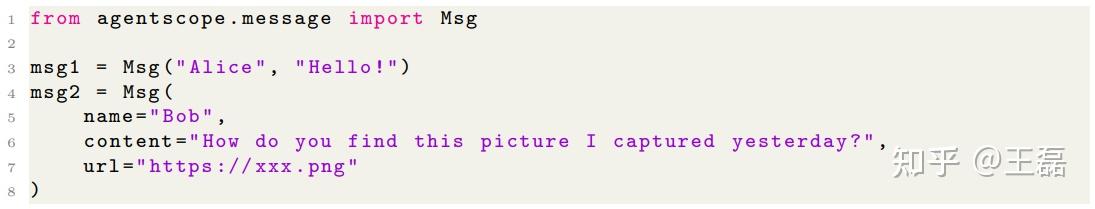

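Message: A message is the carrier of information exchange in a multi-agent conversation, encapsulating both the source and the content of the information. In AgentScope, a message is implemented as a Python dictionary with two required fields (`name` and `content`) and one optional field (`url`). The `name` field records the name of the agent that generated the message, and the `content` field holds the text-based information the agent produced. The `url` field is intended to hold a Uniform Resource Locator (URL), typically linking to multimodal data such as images or videos. Each message is uniquely identified by an automatically generated UUID and a timestamp, which ensures traceability.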

Example of creating a message in AgentScope

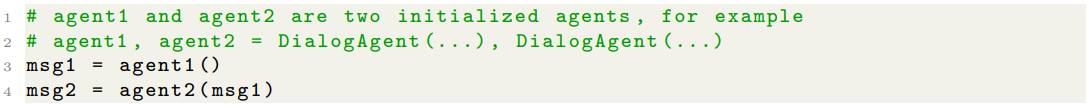
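A standalone sketch of the message structure described above: a plain dict with required `name` and `content`, optional `url`, plus an auto-generated UUID and timestamp. AgentScope ships its own message class; `make_msg` here is a hypothetical helper that only mirrors the fields from the description, not the library's API, and the timestamp format is an assumption.

```python
import uuid
from datetime import datetime
from typing import Optional


def make_msg(name: str, content: str, url: Optional[str] = None) -> dict:
    """Build a message dict with the fields described above (hypothetical helper)."""
    if not name:
        raise ValueError("name is required")
    msg = {
        "id": uuid.uuid4().hex,  # unique identifier for traceability
        "timestamp": datetime.now().strftime("%Y-%m-%d %H:%M:%S"),
        "name": name,            # which agent produced the message
        "content": content,      # text produced by that agent
    }
    if url is not None:
        msg["url"] = url         # optional link to multimodal data
    return msg


greeting = make_msg("Alice", "Hi, Bob!")
photo = make_msg("Bob", "Here is the chart.", url="https://example.com/chart.png")
```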

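Agent: An agent is the main actor in a multi-agent application, serving both as a participant in the conversation and as the executor of tasks. In AgentScope, agent behavior is abstracted through two interfaces: the reply function and the observe function. The reply function takes a message as input and produces a response, whereas the observe function processes incoming messages without generating a direct reply.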

Message exchange between agents
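The reply/observe split can be illustrated with a minimal agent that keeps a memory list: `observe` only records a message, while `reply` records the input and returns a new message. `EchoAgent` is a hypothetical class with a trivial echo policy standing in for an LLM call; it is not AgentScope's agent base class.

```python
from typing import List, Optional


class EchoAgent:
    """Hypothetical agent exposing the reply/observe pair described above."""

    def __init__(self, name: str) -> None:
        self.name = name
        self.memory: List[dict] = []

    def observe(self, msg: dict) -> None:
        # Record an incoming message without producing a reply.
        self.memory.append(msg)

    def reply(self, msg: Optional[dict] = None) -> dict:
        # Store the input (if any), then answer based on the latest memory entry.
        if msg is not None:
            self.observe(msg)
        last = self.memory[-1]["content"] if self.memory else ""
        response = {"name": self.name, "content": f"{self.name} heard: {last}"}
        self.memory.append(response)
        return response


alice = EchoAgent("Alice")
bob = EchoAgent("Bob")
alice.observe({"name": "system", "content": "Meeting at 3pm"})  # no reply produced
answer = bob.reply({"name": "Alice", "content": "Hello"})
print(answer["content"])  # Bob heard: Hello
```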

Service: In AgentScope, services are APIs that provide various tool functions.
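One way to picture "services as tool APIs" is a registry that maps tool names to ordinary Python functions and wraps every call in a uniform result, so an agent can invoke tools by name and receive errors as data instead of exceptions. `ToolRegistry`, `ServiceResult`, and the `add` tool are hypothetical names chosen for this sketch; AgentScope's own service module is not reproduced here.

```python
from dataclasses import dataclass
from typing import Any, Callable, Dict


@dataclass
class ServiceResult:
    """Hypothetical uniform wrapper around a tool call's outcome."""
    ok: bool
    content: Any


class ToolRegistry:
    """Maps tool names to plain Python functions (illustrative only)."""

    def __init__(self) -> None:
        self._tools: Dict[str, Callable[..., Any]] = {}

    def register(self, func: Callable[..., Any]) -> Callable[..., Any]:
        self._tools[func.__name__] = func
        return func  # usable as a decorator

    def call(self, name: str, **kwargs: Any) -> ServiceResult:
        if name not in self._tools:
            return ServiceResult(False, f"unknown service: {name}")
        try:
            return ServiceResult(True, self._tools[name](**kwargs))
        except Exception as exc:  # report tool errors instead of crashing the agent
            return ServiceResult(False, str(exc))


registry = ToolRegistry()


@registry.register
def add(a: int, b: int) -> int:
    return a + b


print(registry.call("add", a=2, b=3))  # ServiceResult(ok=True, content=5)
```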

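Workflow: A workflow is an ordered sequence of agent executions and inter-agent message exchanges. It is analogous to a computational graph in TensorFlow, but flexible enough to accommodate non-DAG structures. A workflow defines how information flows and how tasks are processed among agents, enabling parallel execution and improved efficiency.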
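The difference between a DAG-shaped pipeline and a non-DAG workflow can be shown with two small combinators: `sequential` passes a message through agents in order, and `loop_until` repeats that sub-workflow until a condition holds, which introduces a cycle. Agents are modeled as plain functions from message dict to message dict; `writer`, `reviewer`, and both combinators are hypothetical and not AgentScope's pipeline utilities.

```python
from typing import Callable, List

Agent = Callable[[dict], dict]


def sequential(agents: List[Agent], msg: dict) -> dict:
    """Pass a message through agents in order (a linear, DAG-shaped workflow)."""
    for agent in agents:
        msg = agent(msg)
    return msg


def loop_until(agents: List[Agent], msg: dict, done: Callable[[dict], bool],
               max_rounds: int = 10) -> dict:
    """Repeat a sub-workflow until a condition holds: a cycle, i.e. a non-DAG structure."""
    for _ in range(max_rounds):
        msg = sequential(agents, msg)
        if done(msg):
            break
    return msg


def writer(msg: dict) -> dict:
    return {"name": "writer", "content": msg["content"] + "+draft"}


def reviewer(msg: dict) -> dict:
    # Toy policy: approve once the text has gone through three drafts.
    approved = msg["content"].count("draft") >= 3
    return {"name": "reviewer", "content": msg["content"], "approved": approved}


result = loop_until([writer, reviewer], {"name": "user", "content": "essay"},
                    done=lambda m: m["approved"])
print(result["content"])  # essay+draft+draft+draft
```

The `max_rounds` cap is a design choice of the sketch: once cycles are allowed, some bound is needed so a reviewer that never approves cannot loop forever.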

Architecture Overview

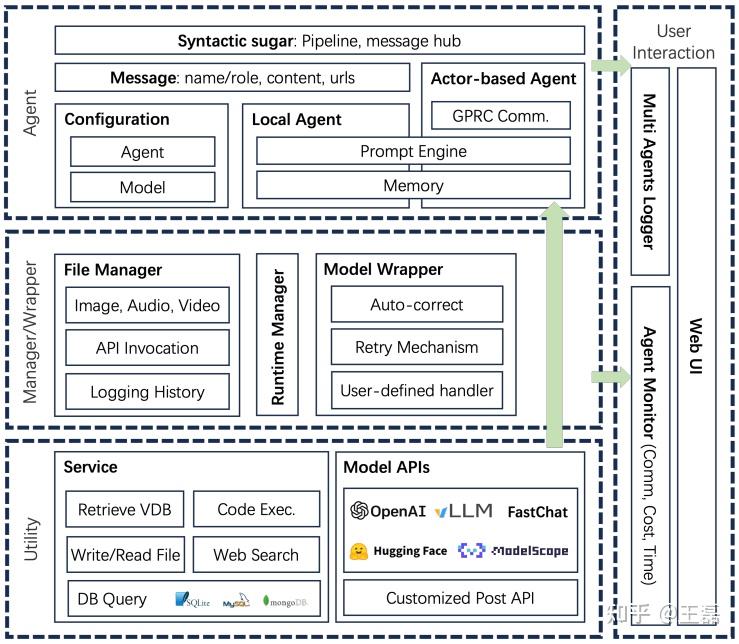

Diagram of the AgentScope architecture

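The paper describes AgentScope's architecture as three layers: the utility layer, the manager and wrapper layer, and the agent layer. Each layer supports multi-agent applications at a different level: basic and advanced functionality for individual agents (utility layer), resource and runtime management (manager and wrapper layer), and programming interfaces ranging from the agent level to the workflow level (agent layer).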

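- Utility Layer: The utility layer provides the agents' core functionality. It abstracts away the complexity of low-level operations such as API calls, data retrieval, and code execution, so that agents can focus on their primary tasks. It also provides a built-in automatic retry mechanism for coping with unexpected interruptions.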

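- Manager and Wrapper Layer: The manager and wrapper layer manages resources and API services, ensuring high availability of resources and providing resilience against undesired responses from LLMs.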

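- Agent Layer: The agent layer is the core of AgentScope. It handles interaction and communication within multi-agent workflows, and it reduces developers' programming burden through simplified syntax and tools.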

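- User Interaction: Beyond the layered architecture, AgentScope provides multi-agent-oriented interfaces such as a terminal and a Web UI. These interfaces let developers easily monitor an application's state and metrics, including agent communication, execution time, and financial cost.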
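The utility layer's automatic retry and the wrapper layer's resilience to bad LLM responses can be combined in one small helper: call the model, parse its output, and retry with exponential backoff whether the failure was a network error or a malformed response. `call_with_retry` is a hypothetical sketch; the backoff schedule and retry count are assumptions, not AgentScope's actual settings.

```python
import json
import time
from typing import Callable, TypeVar

T = TypeVar("T")


def call_with_retry(fn: Callable[[], str], parse: Callable[[str], T],
                    max_retries: int = 3, base_delay: float = 0.01) -> T:
    """Call fn, parse its output, and retry on any exception with exponential backoff."""
    last_error: Exception = RuntimeError("no attempts made")
    for attempt in range(max_retries):
        try:
            return parse(fn())
        except Exception as exc:  # network errors and malformed responses alike
            last_error = exc
            time.sleep(base_delay * (2 ** attempt))
    raise RuntimeError(f"failed after {max_retries} attempts") from last_error


# Simulated model: first a connection error, then truncated JSON, then a valid reply.
responses = iter([ConnectionError("timeout"), '{"answer": 4', '{"answer": 42}'])


def flaky_llm() -> str:
    item = next(responses)
    if isinstance(item, Exception):
        raise item
    return item


result = call_with_retry(flaky_llm, json.loads)
print(result)  # {'answer': 42}
```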

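Overall, AgentScope's layered construction gives developers the basic building blocks for creating customized multi-agent applications that leverage the advanced capabilities of large language models. The following sections examine the design of AgentScope's key capabilities, which improve the programming experience of multi-agent application development.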

ai-town

https://github.com/a16z-infra/ai-town/tree/main

An MIT-licensed, deployable starter kit for building and customizing your own version of AI Town: a virtual town where AI characters live, chat and socialize.


AI Town is a virtual town where AI characters live, chat and socialize.

This project is a deployable starter kit for easily building and customizing your own version of AI Town. It is inspired by the research paper "Generative Agents: Interactive Simulacra of Human Behavior".

The primary goal of this project, beyond just being a lot of fun to work on, is to provide a platform with a strong foundation that is meant to be extended. The back-end natively supports shared global state, transactions, and a simulation engine, and should be suitable for everything from a simple project to play around with to a scalable, multiplayer game. A secondary goal is to make a JS/TS framework available, since most simulators in this space (including the original paper above) are written in Python.
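The back-end features named above (shared global state, transactions, a simulation engine) fit together roughly as follows: every change to the shared world is applied to a draft copy and committed only if it completes, and each simulation tick is itself one such transaction. `World`, `transact`, and `tick` are hypothetical names in this Python sketch; AI Town's real engine is written in TypeScript and works differently.

```python
import copy
from typing import Callable, Dict


class World:
    """Toy shared world state with all-or-nothing transactions (not AI Town's engine)."""

    def __init__(self) -> None:
        self.state: Dict[str, dict] = {"positions": {}, "conversations": {}}
        self.tick_count = 0

    def transact(self, mutation: Callable[[dict], None]) -> bool:
        draft = copy.deepcopy(self.state)
        try:
            mutation(draft)
        except Exception:
            return False  # discard the draft; shared state is untouched
        self.state = draft  # commit
        return True

    def tick(self) -> None:
        """Advance the simulation one step: every character moves one cell east."""
        def step(s: dict) -> None:
            for name, (x, y) in s["positions"].items():
                s["positions"][name] = (x + 1, y)
        self.transact(step)
        self.tick_count += 1


world = World()
world.transact(lambda s: s["positions"].update({"Lucky": (0, 0), "Bob": (5, 2)}))


def start_chat(s: dict) -> None:
    s["conversations"]["c1"] = ["Lucky", "Alice"]  # partial write...
    if "Alice" not in s["positions"]:
        raise KeyError("Alice is not in town")     # ...then failure aborts it


ok = world.transact(start_chat)
world.tick()
world.tick()
print(ok, world.state)
```

The failed `start_chat` leaves `conversations` empty even though it wrote to the draft before raising, which is the point of running mutations transactionally against shared state.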

AgentUniverse

https://github.com/alipay/AgentUniverse

agentUniverse is an LLM multi-agent framework that allows developers to easily build multi-agent applications.

agentUniverse is a multi-agent framework based on large language models. agentUniverse provides you with the flexible and easily extensible capability to build single agents. At its core, agentUniverse features a rich set of multi-agent collaboration mode components (which can be viewed as a Collaboration Mode Factory, or Pattern Factory). These components allow agents to maximize their effectiveness by specializing in different domains to solve problems. agentUniverse also focuses on the integration of domain expertise, helping you seamlessly incorporate domain knowledge into the work of your agents.🎉🎉🎉

🌈🌈🌈agentUniverse helps developers and enterprises to easily build powerful collaborative agents that perform at an expert level in their respective domains.

We encourage you to practice and share different domain Patterns within the community. The framework comes pre-loaded with several multi-agent collaboration mode components that have been validated in real-world industries and will continue to expand in the future. The components that will be available soon include:

- PEER Mode Component: This pattern uses agents with different responsibilities—Plan, Execute, Express, and Review—to break down complex problems into manageable steps, execute the steps in sequence, and iteratively improve based on feedback, enhancing the performance of reasoning and analysis tasks. Typical use cases: Event interpretation, industry analysis.

- DOE Mode Component: This pattern employs three agents—Data-fining, Opinion-inject, and Express—to improve the effectiveness of tasks that are data-intensive, require high computational precision, and incorporate expert opinions. Typical use cases: Financial report generation.
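The PEER pattern's control flow can be sketched as a loop over four roles: a planner splits the task into steps, an executor runs each step, an expresser assembles the answer, and a reviewer either accepts it or sends the task back for another round. The `peer` function and the four stub roles are hypothetical stand-ins for LLM-backed agents; agentUniverse's own PEER component is not reproduced here.

```python
from typing import Callable, List

Planner = Callable[[str], List[str]]
Executor = Callable[[str], str]
Expresser = Callable[[List[str]], str]
Reviewer = Callable[[str], bool]


def peer(question: str, plan: Planner, execute: Executor, express: Expresser,
         review: Reviewer, max_rounds: int = 3) -> str:
    """Plan, Execute, Express, Review; repeat until the reviewer accepts."""
    answer = ""
    for _ in range(max_rounds):
        steps = plan(question)
        results = [execute(step) for step in steps]
        answer = express(results)
        if review(answer):
            break
        # Feed the rejection back so the next plan can improve.
        question = f"{question} (revise: previous answer rejected)"
    return answer


def plan(q: str) -> List[str]:
    return ["collect data", "compare to last year"] if "revise" in q else ["collect data"]


def execute(step: str) -> str:
    return f"done: {step}"


def express(results: List[str]) -> str:
    return "; ".join(results)


def review(answer: str) -> bool:
    return "compare" in answer


report = peer("Interpret Q3 sales event", plan, execute, express, review)
print(report)  # done: collect data; done: compare to last year
```

A DOE-style flow would follow the same shape with different roles (data refinement, opinion injection, expression) and no review loop.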

Zhejiang Public Security Network Registration No. 33010602011771
