TinyAgent

https://github.com/fanqingsong/tiny-universe/tree/main/content/TinyAgent

content/TinyAgent/agent_demo.py

```python
from tinyAgent.Agent_Ollama import Agent_Ollama

# agent = Agent('/root/share/model_repos/internlm2-chat-20b')
agent = Agent_Ollama('/home/song/Desktop/github/me/internlm2-chat-7b')
print(agent.system_prompt)

# print("------------------------ before 1 -----------------------------")
# response = agent.text_completion(text='你好', history=[])  # "Hello"
# print("------------------------ after 1 -----------------------------")

# print("------------------------ before 2 -----------------------------")
# response = agent.text_completion(text='特朗普哪一年出生的?', history=[])  # "What year was Trump born?"
# print("------------------------ after 2 -----------------------------")

response = agent.text_completion(text='周杰伦是谁?', history=[])  # "Who is Jay Chou?"
# print(response)

# response = agent.text_completion(text='书生浦语是什么?', history=[])  # "What is InternLM (书生浦语)?"
# print(response)
```

content/TinyAgent/tinyAgent/Agent_Ollama.py

```python
from typing import Dict, List, Optional, Tuple, Union

import json5

from tinyAgent.LLM_Ollama import InternLM2Chat
from tinyAgent.tool_Ollama import Tools

TOOL_DESC = """{name_for_model}: Call this tool to interact with the {name_for_human} API. What is the {name_for_human} API useful for? {description_for_model} Parameters: {parameters} Format the arguments as a JSON object."""

REACT_PROMPT = """Answer the following questions as best you can. You have access to the following tools:

{tool_descs}

Use the following format:

Question: the input question you must answer
Thought: you should always think about what to do
Action: the action to take, should be one of [{tool_names}]
Action Input: the input to the action
Observation: the result of the action
... (this Thought/Action/Action Input/Observation can be repeated zero or more times)
Thought: I now know the final answer
Final Answer: the final answer to the original input question

Begin!
"""


class Agent_Ollama:
    def __init__(self, path: str = '') -> None:
        self.path = path
        self.tool = Tools()
        self.system_prompt = self.build_system_input()
        self.model = InternLM2Chat(path)

    def build_system_input(self):
        # Render every tool description into the ReAct system prompt.
        tool_descs, tool_names = [], []
        for tool in self.tool.toolConfig:
            tool_descs.append(TOOL_DESC.format(**tool))
            tool_names.append(tool['name_for_model'])
        tool_descs = '\n\n'.join(tool_descs)
        tool_names = ','.join(tool_names)
        sys_prompt = REACT_PROMPT.format(tool_descs=tool_descs, tool_names=tool_names)
        return sys_prompt

    def parse_latest_plugin_call(self, text):
        print("--------------- parse_latest_plugin_call ------------")
        print(text)
        print("--------------- parse_latest_plugin_call ------------")
        plugin_name, plugin_args = '', ''
        i = text.rfind('\nAction:')
        j = text.rfind('\nAction Input:')
        k = text.rfind('\nObservation:')
        if 0 <= i < j:  # If the text has `Action` and `Action Input`,
            if k < j:  # but does not contain `Observation`,
                text = text.rstrip() + '\nObservation:'  # add it back.
            k = text.rfind('\nObservation:')
            plugin_name = text[i + len('\nAction:') : j].strip()
            plugin_args = text[j + len('\nAction Input:') : k].strip()
            text = text[:k]
        return plugin_name, plugin_args, text

    def call_plugin(self, plugin_name, plugin_args):
        plugin_args = json5.loads(plugin_args)
        if plugin_name == 'bing_search':
            return '\nObservation:' + self.tool.bing_search(**plugin_args)
        # Any other tool (e.g. execute_shell, which has no handler here) gets a
        # placeholder Observation instead of None, so `response +=` cannot fail.
        return f'\nObservation: tool {plugin_name} is not implemented.'

    def text_completion(self, text, history=[]):
        text = "\nQuestion:" + text
        # Round 1: the model writes Thought/Action/Action Input (or a Final Answer).
        response = self.model.chat(text, history, self.system_prompt)
        print("========== first chat response ==========")
        print(response)
        plugin_name, plugin_args, response = self.parse_latest_plugin_call(response)
        print("========== after call parse latest plugin ==========")
        print(f'plugin_name={plugin_name}')
        print(f'plugin_args={plugin_args}')
        print(f'response={response}')
        if plugin_name:
            response += self.call_plugin(plugin_name, plugin_args)
            print("========== after call plugin ==========")
            print(response)
        # Round 2: feed the trace (plus Observation, if any) back to the model.
        response = self.model.chat(response, history, self.system_prompt)
        print("========== second chat response ==========")
        print(response)
        return response


if __name__ == '__main__':
    agent = Agent_Ollama('/root/share/model_repos/internlm2-chat-7b')
    prompt = agent.build_system_input()
    print(prompt)
```
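To see what `parse_latest_plugin_call` extracts, here is the same parsing logic as a standalone function (without the debug prints), run on a made-up model reply. The `extract_final_answer` helper is hypothetical and not part of TinyAgent; it is one possible way to pull the text after `Final Answer:` out of the second reply, which `text_completion` currently returns verbatim.

```python
from typing import Optional, Tuple


def parse_latest_plugin_call(text: str) -> Tuple[str, str, str]:
    """Return (tool name, raw argument string, text cut before 'Observation:')."""
    plugin_name, plugin_args = '', ''
    i = text.rfind('\nAction:')
    j = text.rfind('\nAction Input:')
    k = text.rfind('\nObservation:')
    if 0 <= i < j:  # an Action is followed by an Action Input
        if k < j:  # the model stopped before writing Observation
            text = text.rstrip() + '\nObservation:'
        k = text.rfind('\nObservation:')
        plugin_name = text[i + len('\nAction:'):j].strip()
        plugin_args = text[j + len('\nAction Input:'):k].strip()
        text = text[:k]
    return plugin_name, plugin_args, text


def extract_final_answer(text: str) -> Optional[str]:
    """Hypothetical helper: text after the last 'Final Answer:', or None."""
    idx = text.rfind('Final Answer:')
    if idx < 0:
        return None
    return text[idx + len('Final Answer:'):].strip()


# A made-up first-round reply in the ReAct format.
sample = (
    "Thought: I should run a command\n"
    "Action: execute_shell\n"
    'Action Input: {"search_query": "date"}'
)
name, args, prefix = parse_latest_plugin_call(sample)
print(name)  # execute_shell
print(args)  # {"search_query": "date"}
```

Because the parser searches for `'\nAction:'` with a leading newline, a reply that starts directly with `Action:` on its first line is not detected.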

content/TinyAgent/tinyAgent/LLM_Ollama.py

```python
from typing import Dict, List, Optional, Tuple, Union

from openai import OpenAI

'''
curl http://localhost:11434/v1/chat/completions \
    -H "Content-Type: application/json" \
    -d '{
        "model": "qwen:0.5b",
        "messages": [
            {
                "role": "system",
                "content": "You are a helpful assistant."
            },
            {
                "role": "user",
                "content": "Hello!"
            }
        ]
    }'
'''


class BaseModel:
    def __init__(self, path: str = '') -> None:
        # self.path = path
        pass

    def chat(self, prompt: str, history: List[dict]):
        pass

    def load_model(self):
        pass


class InternLM2Chat(BaseModel):
    def __init__(self, path: str = '') -> None:
        super().__init__(path)
        # self.load_model()
        # OpenAI-compatible endpoint served locally.
        client = OpenAI(
            base_url='http://10.80.11.197:8000/v1',
            api_key='ollama',  # required but ignored
        )
        self.client = client

    # def load_model(self):
    #     print('================ Loading model ================')
    #     self.tokenizer = AutoTokenizer.from_pretrained(self.path, trust_remote_code=True)
    #     self.model = AutoModelForCausalLM.from_pretrained(self.path, torch_dtype=torch.float16, trust_remote_code=True).cuda().eval()
    #     print('================ Model loaded ================')

    def chat(self, prompt: str, history: List[dict], meta_instruction: str = '') -> str:
        # response, history = self.model.chat(self.tokenizer, prompt, history, temperature=0.1, meta_instruction=meta_instruction)
        # return response, history
        # Note: `history` is accepted but not sent; each request is system + one user message.
        chat_completion = self.client.chat.completions.create(
            messages=[
                {"role": "system", "content": meta_instruction},
                {"role": "user", "content": prompt},
            ],
            model='/mnt/AI/models/internlm2_chat_7b/',
        )
        print(chat_completion)
        ret = chat_completion.choices[0].message.content
        return ret


# if __name__ == '__main__':
#     model = InternLM2Chat('/root/share/model_repos/internlm2-chat-7b')
#     print(model.chat('Hello', []))
```
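`InternLM2Chat.chat` accepts `history` but never forwards it, so the server only ever sees the system prompt and the current user message. Below is a minimal sketch of folding earlier turns into the `messages` list. It assumes (this is not in the original) that `history` holds OpenAI-style `{"role": ..., "content": ...}` dicts; `build_messages` is a hypothetical helper.

```python
from typing import Dict, List


def build_messages(prompt: str, history: List[Dict[str, str]],
                   meta_instruction: str = '') -> List[Dict[str, str]]:
    """Assemble system prompt, earlier turns, and the new user turn in order."""
    messages: List[Dict[str, str]] = []
    if meta_instruction:  # skip an empty system message
        messages.append({"role": "system", "content": meta_instruction})
    messages.extend(history)  # assumed to be role/content dicts already
    messages.append({"role": "user", "content": prompt})
    return messages


history = [
    {"role": "user", "content": "Hello!"},
    {"role": "assistant", "content": "Hi, how can I help?"},
]
msgs = build_messages("Who is Jay Chou?", history, "You are a helpful assistant.")
print([m["role"] for m in msgs])  # ['system', 'user', 'assistant', 'user']
```

The result could be passed as `messages=build_messages(prompt, history, meta_instruction)` to `self.client.chat.completions.create`. Unlike the original, this sketch drops the system message when `meta_instruction` is empty.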

content/TinyAgent/tinyAgent/tool_Ollama.py

```python
import os, json

import requests

"""
Tool functions
- First add the tool's description in tools
- Then add the tool's concrete implementation in tools
- https://serper.dev/dashboard
"""


class Tools:
    def __init__(self) -> None:
        self.toolConfig = self._tools()

    def _tools(self):
        tools = [
            # {
            #     'name_for_human': 'Bing Search',
            #     'name_for_model': 'bing_search',
            #     'description_for_model': 'Bing Search is a general-purpose search engine that can be used to access the internet, look up encyclopedic knowledge, follow current news, and so on.',
            #     'parameters': [
            #         {
            #             'name': 'search_query',
            #             'description': 'search keyword or phrase',
            #             'required': True,
            #             'schema': {'type': 'string'},
            #         }
            #     ],
            # },
            {
                'name_for_human': 'shell command',
                'name_for_model': 'execute_shell',
                'description_for_model': 'This tool can help you execute shell commands.',
                'parameters': [
                    {
                        'name': 'search_query',
                        'description': 'shell command',
                        'required': True,
                        'schema': {'type': 'string'},
                    }
                ],
            }
        ]
        return tools

    def bing_search(self, search_query: str):
        print("------------ mock bing search -------------------")
        # Mock: always return a fixed answer; the real Serper request below is unreachable.
        return "Sorry, I don't know."

        url = "https://google.serper.dev/search"
        payload = json.dumps({"q": search_query})
        headers = {
            'X-API-KEY': 'replace with your own key',
            'Content-Type': 'application/json'
        }
        response = requests.request("POST", url, headers=headers, data=payload).json()
        return response['organic'][0]['snippet']
```
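The only enabled tool, `execute_shell`, is advertised to the model, but `call_plugin` only dispatches `bing_search` and `Tools` has no shell implementation. One possible design, sketched here with hypothetical names (`ToolRegistry`, `register`, `call`), is a name-to-function registry, so adding a tool means registering one function. Assumptions: stdlib `json` replaces `json5` (arguments must be strict JSON), and the command is split with `shlex` and run without a shell, so pipes and redirects do not work. Executing model-chosen commands is risky, so a sketch like this should only run inside a sandbox.

```python
import json
import shlex
import subprocess
from typing import Callable, Dict


class ToolRegistry:
    """Hypothetical dispatcher mapping each name_for_model to a Python callable."""

    def __init__(self) -> None:
        self._tools: Dict[str, Callable[..., str]] = {}

    def register(self, name: str) -> Callable[[Callable[..., str]], Callable[..., str]]:
        def decorator(fn: Callable[..., str]) -> Callable[..., str]:
            self._tools[name] = fn
            return fn
        return decorator

    def call(self, name: str, raw_args: str) -> str:
        """Parse the Action Input JSON and return an Observation string."""
        if name not in self._tools:
            return f"\nObservation: unknown tool {name!r}"
        try:
            kwargs = json.loads(raw_args)
        except json.JSONDecodeError as exc:
            return f"\nObservation: invalid JSON arguments ({exc})"
        if not isinstance(kwargs, dict):
            return "\nObservation: arguments must be a JSON object"
        return "\nObservation: " + self._tools[name](**kwargs)


registry = ToolRegistry()


@registry.register("execute_shell")
def execute_shell(search_query: str) -> str:
    # Keeps the parameter name 'search_query' from the tool config above.
    try:
        argv = shlex.split(search_query)  # no shell=True: pipes/redirects unsupported
        if not argv:
            return "error: empty command"
        result = subprocess.run(argv, capture_output=True, text=True, timeout=10)
    except (OSError, ValueError, subprocess.TimeoutExpired) as exc:
        return f"error: {exc}"
    return result.stdout.strip() or result.stderr.strip()


print(registry.call("execute_shell", '{"search_query": "echo hello"}'))
```

With a registry, `call_plugin` would reduce to `return registry.call(plugin_name, plugin_args)`, and an unknown tool name still yields an Observation the model can react to.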

References:

https://github.com/openai/openai-python

https://cookbook.openai.com/examples/how_to_call_functions_with_chat_models#specifying-a-function-to-execute-sql-queries

https://www.promptingguide.ai/applications/function_calling

https://github.com/QwenLM/Qwen/blob/main/examples/react_prompt.md
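The function-calling links above describe a different route from the ReAct text format: instead of parsing `Action:`/`Action Input:` out of free text, the tools are declared in the request and the server returns structured tool calls. A sketch of converting one `toolConfig` entry into the Chat Completions `tools` format follows; `to_openai_tool` is a hypothetical helper, and whether the local server and InternLM2's chat template actually honor `tools` is an assumption to verify.

```python
import json
from typing import Any, Dict, List


def to_openai_tool(tool: Dict[str, Any]) -> Dict[str, Any]:
    """Convert one TinyAgent-style toolConfig entry into a 'tools' list item."""
    properties: Dict[str, Any] = {}
    required: List[str] = []
    for param in tool["parameters"]:
        # Merge the JSON-schema type with the parameter's description.
        properties[param["name"]] = {**param["schema"], "description": param["description"]}
        if param.get("required"):
            required.append(param["name"])
    return {
        "type": "function",
        "function": {
            "name": tool["name_for_model"],
            "description": tool["description_for_model"],
            "parameters": {"type": "object", "properties": properties, "required": required},
        },
    }


shell_tool = {
    'name_for_human': 'shell command',
    'name_for_model': 'execute_shell',
    'description_for_model': 'This tool can help you execute shell commands.',
    'parameters': [
        {'name': 'search_query', 'description': 'shell command',
         'required': True, 'schema': {'type': 'string'}},
    ],
}
print(json.dumps(to_openai_tool(shell_tool), indent=2))
```

The converted list would be passed as `tools=[...]` to `client.chat.completions.create`; a chosen tool then appears in `choices[0].message.tool_calls`, each entry carrying `function.name` and a JSON string in `function.arguments`, so no text parsing is needed.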

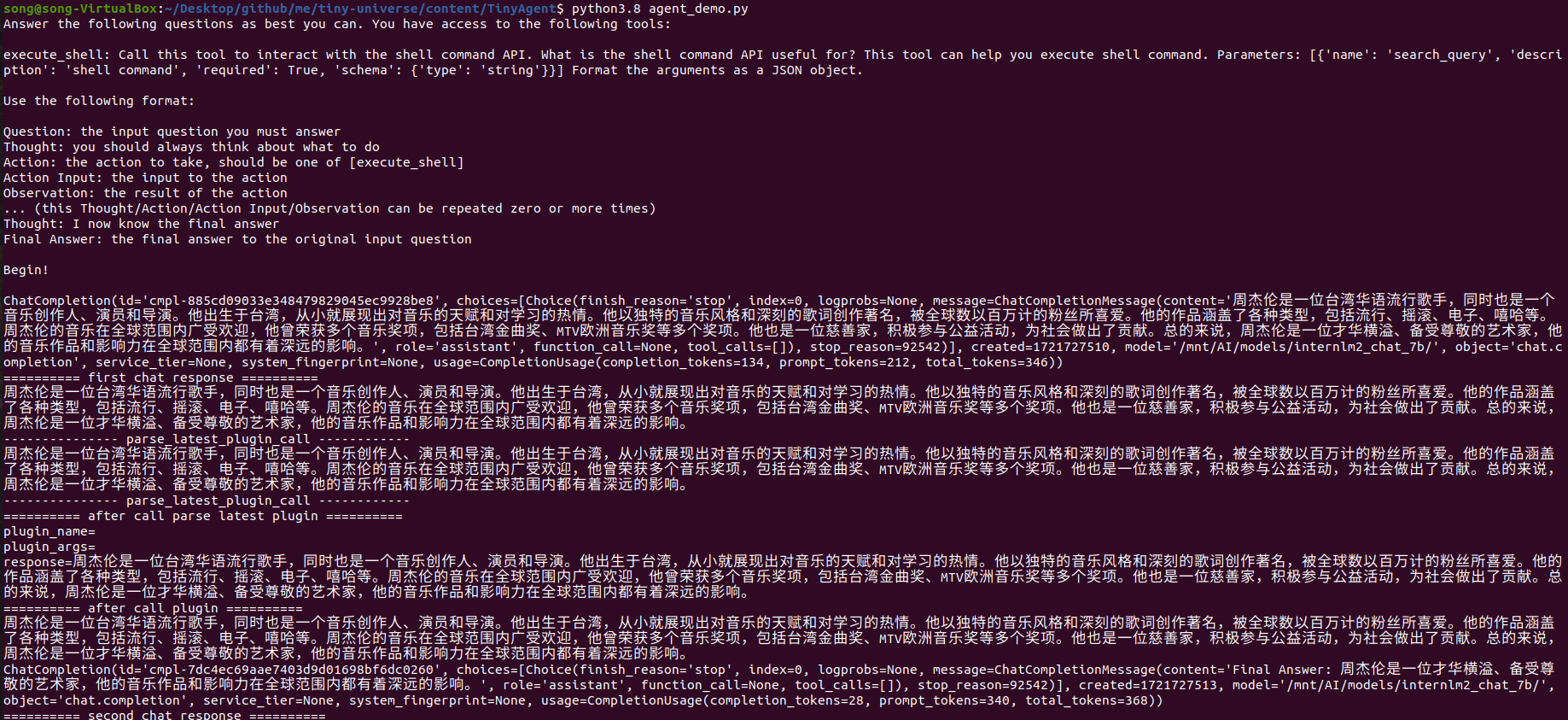

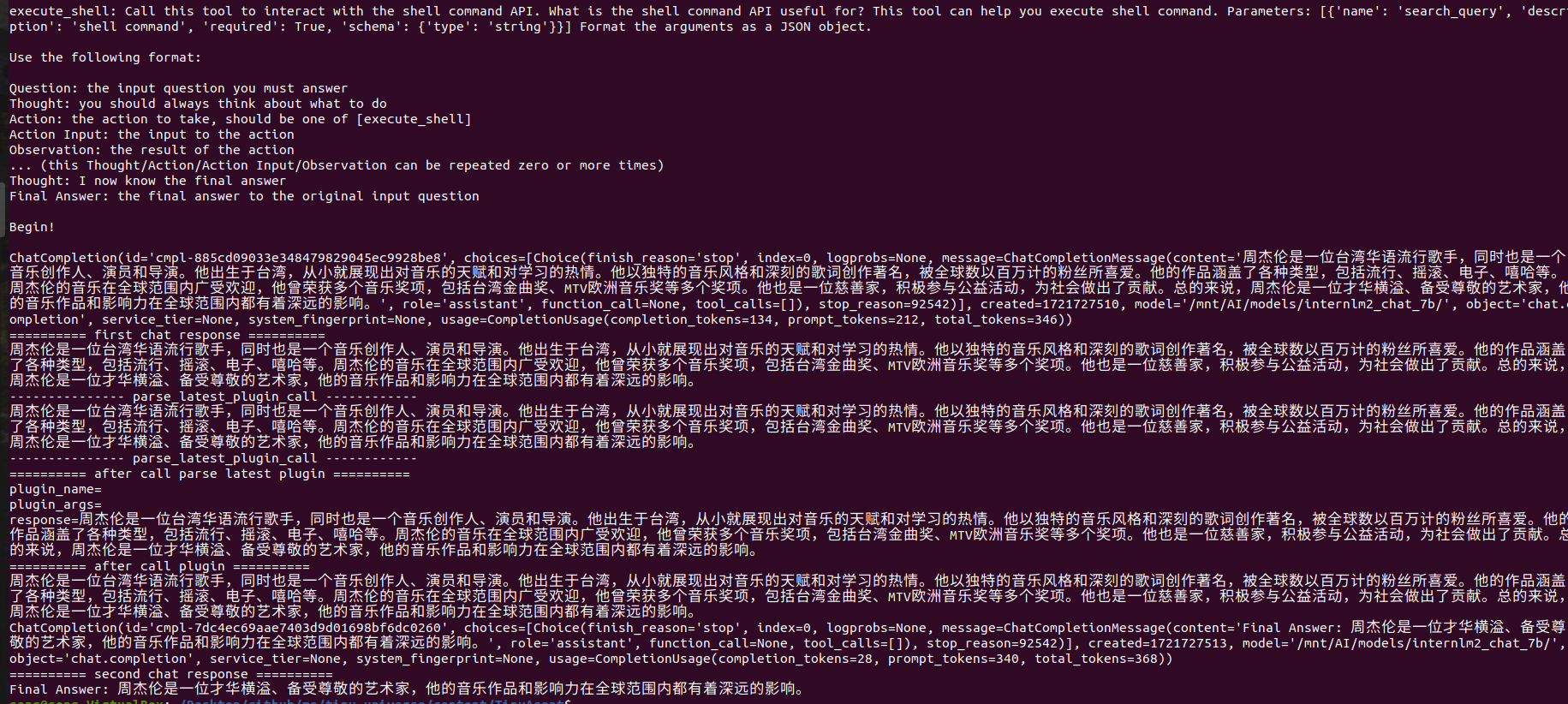

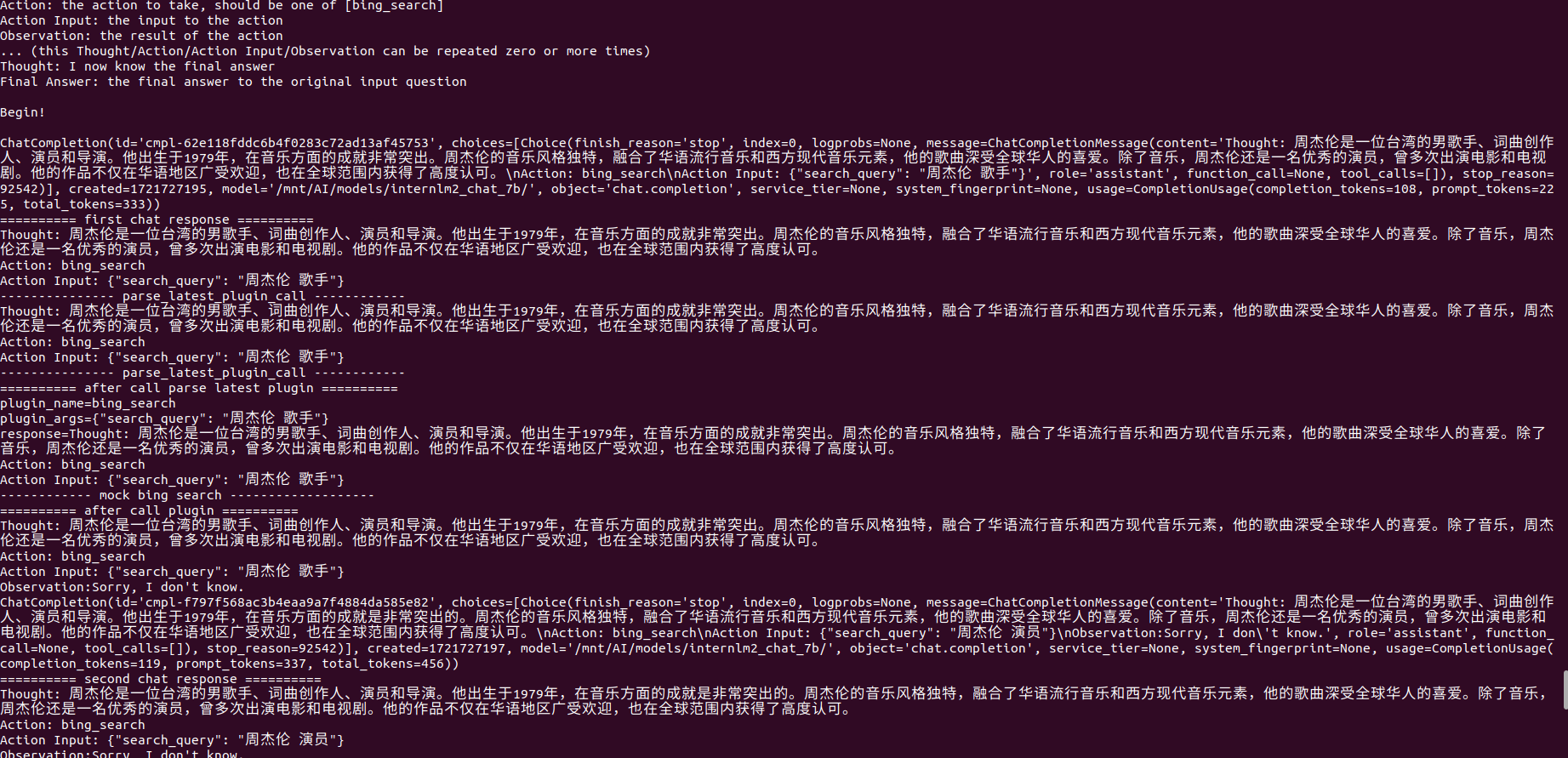

Results

No tool call

Tool call found

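Source: http://www.cnblogs.com/lightsong/

The copyright of this article is shared by the author and cnblogs (博客园). Reposting is welcome, but unless the author agrees otherwise, this notice must be retained and a link to the original article must be given in a prominent position on the page.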

Zhejiang Public Security Network Filing No. 33010602011771 (浙公网安备 33010602011771号)
