FastChat vs vLLM

vLLM

https://github.com/vllm-project/vllm

https://docs.vllm.ai/en/latest/

Covers both inference and serving, but leans more toward inference.

vLLM is a fast and easy-to-use library for LLM inference and serving.

vLLM is fast with:

- State-of-the-art serving throughput

- Efficient management of attention key and value memory with PagedAttention

- Continuous batching of incoming requests

- Fast model execution with CUDA/HIP graph

- Quantization: GPTQ, AWQ, SqueezeLLM, FP8 KV Cache

- Optimized CUDA kernels
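To make the PagedAttention bullet concrete, here is a toy Python sketch of the core idea as we understand it. Each sequence's KV cache is split into fixed-size blocks, and a per-sequence block table maps logical blocks to physical blocks taken from a shared pool. Memory is therefore allocated on demand instead of being reserved for the maximum length up front. `BLOCK_SIZE`, `BlockAllocator` and `Sequence` are hypothetical names for illustration. This is not vLLM's code, and it stores no actual key/value tensors.

```python
from __future__ import annotations

from dataclasses import dataclass, field

BLOCK_SIZE = 4  # tokens per KV-cache block (toy value, assumed)


class BlockAllocator:
    """Shared pool of fixed-size physical KV-cache blocks."""

    def __init__(self, num_blocks: int) -> None:
        self.free_blocks = list(range(num_blocks))

    def allocate(self) -> int:
        if not self.free_blocks:
            raise MemoryError("no free KV-cache blocks")
        return self.free_blocks.pop()

    def release(self, block_id: int) -> None:
        self.free_blocks.append(block_id)


@dataclass
class Sequence:
    # block_table[i] is the physical block holding logical block i of this sequence
    block_table: list[int] = field(default_factory=list)
    num_tokens: int = 0

    def append_token(self, allocator: BlockAllocator) -> None:
        # Grab a new physical block only when the last one is full (or none exists yet).
        if self.num_tokens % BLOCK_SIZE == 0:
            self.block_table.append(allocator.allocate())
        self.num_tokens += 1

    def slot_of(self, token_index: int) -> tuple[int, int]:
        # Map a token position to (physical block id, offset inside that block).
        return self.block_table[token_index // BLOCK_SIZE], token_index % BLOCK_SIZE

    def free(self, allocator: BlockAllocator) -> None:
        # Return every block to the pool when the sequence finishes.
        for block_id in self.block_table:
            allocator.release(block_id)
        self.block_table.clear()
        self.num_tokens = 0
```

With a pool of 8 blocks of 4 tokens, a 10-token sequence holds only 3 blocks and leaves 5 free for other requests. The only waste is the unused tail of its last block.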

Performance benchmark: We include a performance benchmark that compares the performance of vLLM against other LLM serving engines (TensorRT-LLM, text-generation-inference and lmdeploy).
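The continuous batching bullet above can be illustrated with a small simulation. This is our own toy model, not vLLM's scheduler. With static batching, a new batch starts only after the longest request in the current batch finishes. With continuous (iteration-level) batching, a freed slot is refilled before the next decode step. Each request is reduced to a hypothetical number of decode steps, and prefill, memory limits and preemption are ignored.

```python
from __future__ import annotations

from collections import deque


def static_batching(lengths: list[int], max_batch: int) -> dict[int, int]:
    """Requests run in fixed groups; a group holds its slots until its longest member ends."""
    finished_at: dict[int, int] = {}
    step = 0
    for start in range(0, len(lengths), max_batch):
        group = lengths[start:start + max_batch]
        for offset, length in enumerate(group):
            finished_at[start + offset] = step + length
        step += max(group)
    return finished_at


def continuous_batching(lengths: list[int], max_batch: int) -> dict[int, int]:
    """Free slots are refilled from the waiting queue before every decode step.

    Every length must be >= 1 (one decode step per generated token).
    """
    waiting = deque(enumerate(lengths))
    running: dict[int, int] = {}  # request id -> decode steps still needed
    finished_at: dict[int, int] = {}
    step = 0
    while waiting or running:
        # Admit waiting requests into any free slots.
        while waiting and len(running) < max_batch:
            request_id, length = waiting.popleft()
            running[request_id] = length
        step += 1
        # One decode step for every running request.
        for request_id in list(running):  # copy: we delete while iterating
            running[request_id] -= 1
            if running[request_id] == 0:
                del running[request_id]
                finished_at[request_id] = step
    return finished_at
```

For four requests needing 8, 2, 2 and 2 steps with two slots, the short requests 2 and 3 finish at steps 4 and 6 under continuous batching, instead of waiting until step 10 under static batching. The whole workload also ends at step 8 rather than 10.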

vLLM is flexible and easy to use with:

- Seamless integration with popular Hugging Face models

- High-throughput serving with various decoding algorithms, including parallel sampling, beam search, and more

- Tensor parallelism and pipeline parallelism support for distributed inference

- Streaming outputs

- OpenAI-compatible API server

- Support NVIDIA GPUs, AMD CPUs and GPUs, Intel CPUs and GPUs, PowerPC CPUs

- (Experimental) Prefix caching support

- (Experimental) Multi-lora support
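Because the server speaks the OpenAI API, any HTTP client can talk to it. The sketch below uses only the Python standard library to build a request for the `/v1/chat/completions` route. It assumes a server is already running at `http://localhost:8000` (vLLM's default port, as far as we know), and the model name is a placeholder that must match the model the server was started with. `build_chat_request` and `send_chat_request` are our own helper names.

```python
import json
import urllib.request


def build_chat_request(base_url: str, model: str, prompt: str,
                       max_tokens: int = 128) -> urllib.request.Request:
    """Build (without sending) a non-streaming chat completion request."""
    payload = {
        "model": model,  # must match a model the server is actually serving
        "messages": [{"role": "user", "content": prompt}],
        "max_tokens": max_tokens,
        "stream": False,  # streaming would return server-sent events, not one JSON body
    }
    return urllib.request.Request(
        url=base_url.rstrip("/") + "/v1/chat/completions",
        data=json.dumps(payload).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )


def send_chat_request(request: urllib.request.Request, timeout: float = 60.0) -> str:
    """Send the request and return the text of the first choice."""
    with urllib.request.urlopen(request, timeout=timeout) as response:
        body = json.load(response)
    return body["choices"][0]["message"]["content"]
```

Against a running server, `send_chat_request(build_chat_request("http://localhost:8000", "your-model-name", "Hello"))` returns the reply text. Building the request separately from sending it keeps the payload easy to inspect and test without a network connection.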

vLLM seamlessly supports most popular open-source models on HuggingFace, including:

- Transformer-like LLMs (e.g., Llama)

- Mixture-of-Expert LLMs (e.g., Mixtral)

- Multi-modal LLMs (e.g., LLaVA)

The full list of supported models is in the vLLM documentation (https://docs.vllm.ai/en/latest/).

FastChat

https://github.com/lm-sys/FastChat

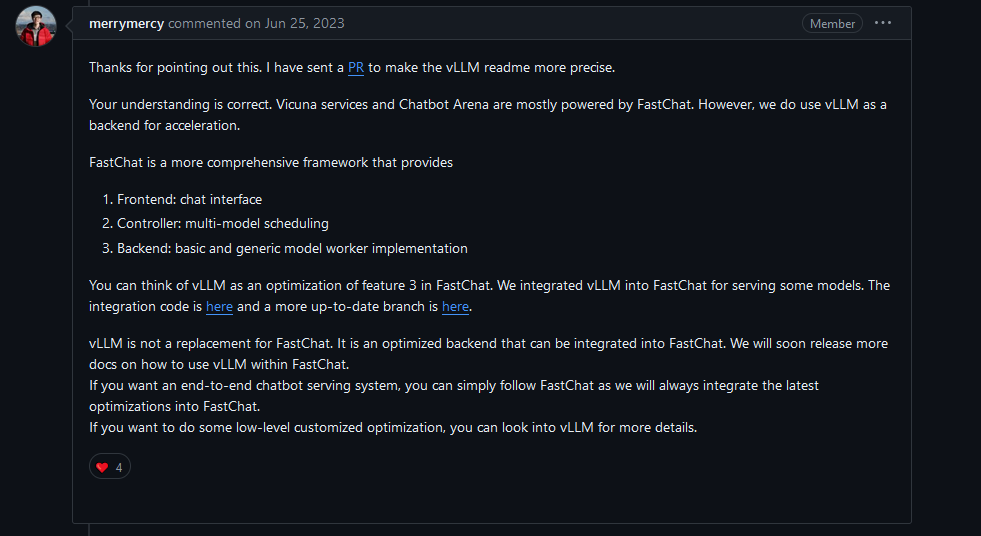

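Covers model training, serving, and evaluation.

In practice it is mostly used for its serving (deployment) features: distributed deployment with a web UI and a REST API. Its backend can also integrate vLLM to accelerate inference.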
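A FastChat deployment is a set of cooperating processes: a controller, one or more model workers, and front ends such as the OpenAI-compatible API server and the Gradio web UI. According to the FastChat docs, vLLM is plugged in by running `fastchat.serve.vllm_worker` instead of the default `fastchat.serve.model_worker`. The helper below is a hypothetical sketch that only assembles the command lines. The model path and port are example values, and all other flags are left at their defaults.

```python
from __future__ import annotations

import sys


def fastchat_commands(model_path: str, use_vllm: bool = True,
                      api_host: str = "localhost", api_port: int = 8000) -> list[list[str]]:
    """Return argv lists for a single-node FastChat deployment (hypothetical helper)."""
    python = sys.executable
    # Swapping the worker module is what routes generation through vLLM.
    worker = "fastchat.serve.vllm_worker" if use_vllm else "fastchat.serve.model_worker"
    return [
        [python, "-m", "fastchat.serve.controller"],
        [python, "-m", worker, "--model-path", model_path],
        [python, "-m", "fastchat.serve.openai_api_server",
         "--host", api_host, "--port", str(api_port)],
        [python, "-m", "fastchat.serve.gradio_web_server"],  # optional web UI
    ]
```

Each command would be started as its own process (for example with `subprocess.Popen`), controller first, because the workers register with the controller when they start.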

An open platform for training, serving, and evaluating large language models. Release repo for Vicuna and Chatbot Arena.

FastChat is an open platform for training, serving, and evaluating large language model based chatbots.

- FastChat powers Chatbot Arena (https://chat.lmsys.org/), serving over 10 million chat requests for 70+ LLMs.

- Chatbot Arena has collected over 500K human votes from side-by-side LLM battles to compile an online LLM Elo leaderboard.
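For reference, a standard online Elo update over pairwise battles works as follows. The expected score of A against B is `E_A = 1 / (1 + 10^((R_B - R_A) / 400))`. After a battle with outcome `S_A` (1 for a win, 0.5 for a tie, 0 for a loss), both ratings move by `K * (S_A - E_A)` in opposite directions. This is a generic sketch, not Chatbot Arena's actual leaderboard code. The `K = 32` factor, the initial rating of 1000 and the `(model_a, model_b, winner)` battle format are assumptions.

```python
from __future__ import annotations


def expected_score(r_a: float, r_b: float) -> float:
    """Expected score of A against B under the Elo model."""
    return 1.0 / (1.0 + 10 ** ((r_b - r_a) / 400))


def elo_update(r_a: float, r_b: float, score_a: float, k: float = 32.0) -> tuple[float, float]:
    """Return new (r_a, r_b); score_a is 1 (A wins), 0.5 (tie) or 0 (B wins)."""
    delta = k * (score_a - expected_score(r_a, r_b))
    return r_a + delta, r_b - delta  # zero-sum: total rating is conserved


# Assumed outcome labels for each battle record.
SCORE = {"model_a": 1.0, "model_b": 0.0, "tie": 0.5}


def rate_battles(battles: list[tuple[str, str, str]],
                 initial: float = 1000.0, k: float = 32.0) -> dict[str, float]:
    """Process battles in order and return the final rating of every model."""
    ratings: dict[str, float] = {}
    for model_a, model_b, winner in battles:
        r_a = ratings.setdefault(model_a, initial)
        r_b = ratings.setdefault(model_b, initial)
        ratings[model_a], ratings[model_b] = elo_update(r_a, r_b, SCORE[winner], k)
    return ratings
```

Note that online Elo depends on the order in which battles are processed. Two equally rated models that meet once move by exactly `K / 2`, so with `K = 32` the winner goes from 1000 to 1016.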

FastChat's core features include:

- The training and evaluation code for state-of-the-art models (e.g., Vicuna, MT-Bench).

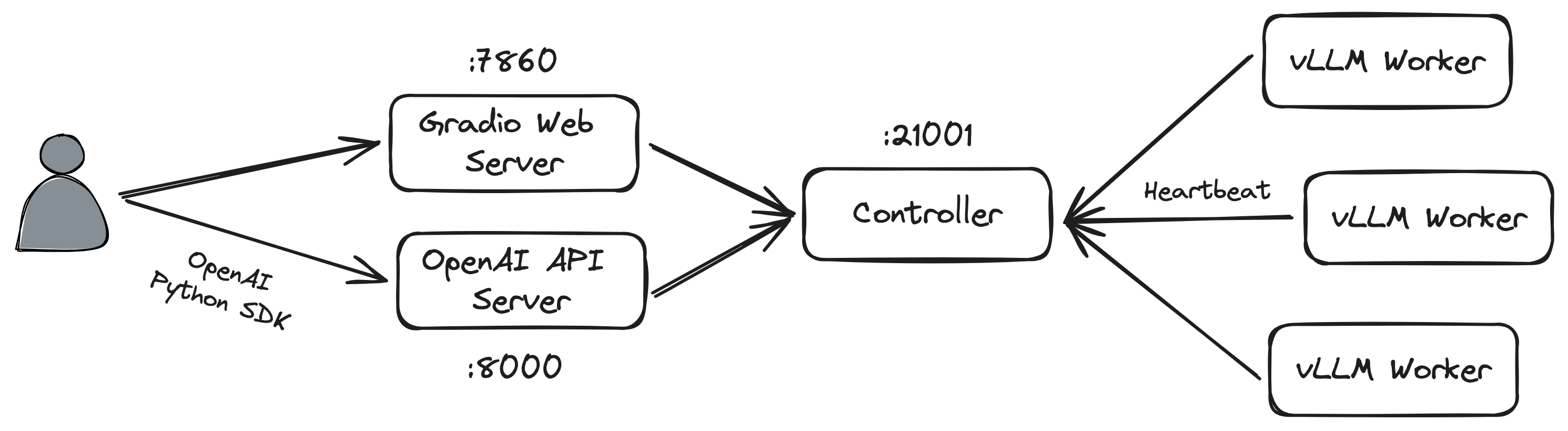

- A distributed multi-model serving system with web UI and OpenAI-compatible RESTful APIs.
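In a multi-model deployment, a controller has to decide which worker handles each request. The toy below routes a request to the worker, among those serving the requested model, whose queue is shortest relative to its speed. As far as we can tell from its source, FastChat's controller offers a shortest-queue dispatch method along these lines, but this class is our own simplified sketch with no heartbeats, worker expiry or real networking. The worker addresses and model names are placeholders.

```python
from __future__ import annotations

from dataclasses import dataclass


@dataclass
class Worker:
    address: str                  # placeholder URL of a model worker
    model_names: tuple[str, ...]  # models this worker can serve
    queue_length: int = 0         # requests currently waiting on this worker
    speed: float = 1.0            # relative throughput; higher is faster


class Controller:
    """Toy controller: tracks workers and routes each request to the least loaded one."""

    def __init__(self) -> None:
        self.workers: dict[str, Worker] = {}

    def register(self, worker: Worker) -> None:
        self.workers[worker.address] = worker

    def dispatch(self, model_name: str) -> str:
        candidates = [w for w in self.workers.values() if model_name in w.model_names]
        if not candidates:
            raise LookupError(f"no worker serves {model_name!r}")
        # Shortest queue, normalized by speed so faster workers absorb more load.
        best = min(candidates, key=lambda w: w.queue_length / w.speed)
        best.queue_length += 1  # count the request we just routed
        return best.address
```

Normalizing by `speed` is a design choice: without it, a fast worker with a slightly longer queue would be passed over for a slow, idle one.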

https://rudeigerc.dev/posts/llm-inference-with-fastchat/

VS

https://fastchat.mintlify.app/vllm_integration

https://github.com/lm-sys/FastChat/issues/1775

浙公网安备 33010602011771号
