Recognizing hand-written digits with sklearn

Origin

https://scikit-learn.org/stable/auto_examples/classification/plot_digits_classification.html#sphx-glr-auto-examples-classification-plot-digits-classification-py

An example showing how scikit-learn can be used to recognize images of hand-written digits.

This example is commented in the tutorial section of the user manual.

Out:

    Classification report for classifier SVC(gamma=0.001):
                  precision    recall  f1-score   support

               0       1.00      0.99      0.99        88
               1       0.99      0.97      0.98        91
               2       0.99      0.99      0.99        86
               3       0.98      0.87      0.92        91
               4       0.99      0.96      0.97        92
               5       0.95      0.97      0.96        91
               6       0.99      0.99      0.99        91
               7       0.96      0.99      0.97        89
               8       0.94      1.00      0.97        88
               9       0.93      0.98      0.95        92

        accuracy                           0.97       899
       macro avg       0.97      0.97      0.97       899
    weighted avg       0.97      0.97      0.97       899

    Confusion matrix:
    [[87  0  0  0  1  0  0  0  0  0]
     [ 0 88  1  0  0  0  0  0  1  1]
     [ 0  0 85  1  0  0  0  0  0  0]
     [ 0  0  0 79  0  3  0  4  5  0]
     [ 0  0  0  0 88  0  0  0  0  4]
     [ 0  0  0  0  0 88  1  0  0  2]
     [ 0  1  0  0  0  0 90  0  0  0]
     [ 0  0  0  0  0  1  0 88  0  0]
     [ 0  0  0  0  0  0  0  0 88  0]
     [ 0  0  0  1  0  1  0  0  0 90]]
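The report and the confusion matrix above describe the same predictions, so the per-class numbers can be recomputed from the matrix alone. The following is a minimal sketch using only NumPy, assuming that rows are true digits and columns are predicted digits (the convention scikit-learn uses); the variable names are illustrative and not part of the original example.

```python
import numpy as np

# Confusion matrix copied from the output above
# (rows: true digit, columns: predicted digit).
cm = np.array([
    [87,  0,  0,  0,  1,  0,  0,  0,  0,  0],
    [ 0, 88,  1,  0,  0,  0,  0,  0,  1,  1],
    [ 0,  0, 85,  1,  0,  0,  0,  0,  0,  0],
    [ 0,  0,  0, 79,  0,  3,  0,  4,  5,  0],
    [ 0,  0,  0,  0, 88,  0,  0,  0,  0,  4],
    [ 0,  0,  0,  0,  0, 88,  1,  0,  0,  2],
    [ 0,  1,  0,  0,  0,  0, 90,  0,  0,  0],
    [ 0,  0,  0,  0,  0,  1,  0, 88,  0,  0],
    [ 0,  0,  0,  0,  0,  0,  0,  0, 88,  0],
    [ 0,  0,  0,  1,  0,  1,  0,  0,  0, 90],
])

true_positives = np.diag(cm)
support = cm.sum(axis=1)           # number of test samples per true digit
predicted_counts = cm.sum(axis=0)  # number of times each digit was predicted

recall = true_positives / support
precision = true_positives / predicted_counts
f1 = 2 * precision * recall / (precision + recall)
accuracy = true_positives.sum() / cm.sum()

for digit in range(10):
    print(f"{digit}  {precision[digit]:.2f}  {recall[digit]:.2f}  "
          f"{f1[digit]:.2f}  {support[digit]}")
print(f"accuracy {accuracy:.2f} over {cm.sum()} samples")
```

For digit 3, for example, 79 of the 91 true samples are on the diagonal, which gives the recall of 0.87 shown in the report.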

Code

https://github.com/fanqingsong/code_snippet/blob/master/sklearn/recognize_hand_written_digits.ipynb

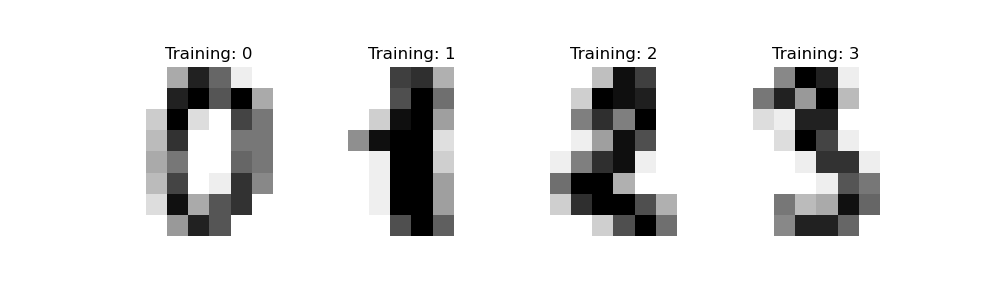

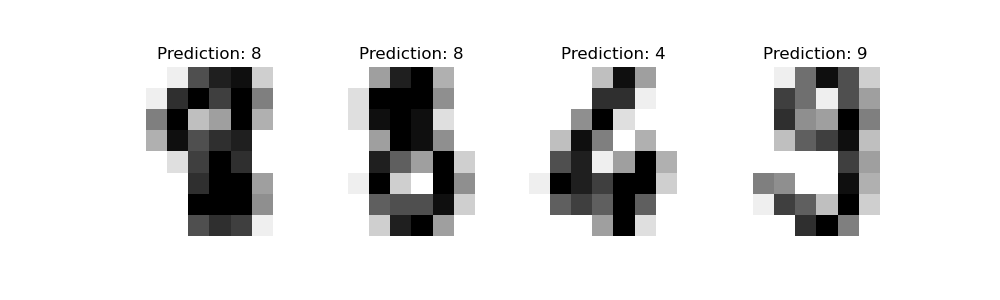

    print(__doc__)

    # Author: Gael Varoquaux <gael dot varoquaux at normalesup dot org>
    # License: BSD 3 clause

    # Standard scientific Python imports
    import matplotlib.pyplot as plt

    # Import datasets, classifiers and performance metrics
    from sklearn import datasets, svm, metrics
    from sklearn.model_selection import train_test_split
    from sklearn.metrics import confusion_matrix

    # The digits dataset
    digits = datasets.load_digits()

    # The data that we are interested in is made of 8x8 images of digits, let's
    # have a look at the first 4 images, stored in the `images` attribute of the
    # dataset. If we were working from image files, we could load them using
    # matplotlib.pyplot.imread. Note that each image must have the same size. For these
    # images, we know which digit they represent: it is given in the 'target' of
    # the dataset.
    _, axes = plt.subplots(2, 4)
    images_and_labels = list(zip(digits.images, digits.target))
    for ax, (image, label) in zip(axes[0, :], images_and_labels[:4]):
        ax.set_axis_off()
        ax.imshow(image, cmap=plt.cm.gray_r, interpolation='nearest')
        ax.set_title('Training: %i' % label)

    # To apply a classifier on this data, we need to flatten the image, to
    # turn the data in a (samples, feature) matrix:
    n_samples = len(digits.images)
    print("----------- images shape:")
    print(digits.images.shape)
    data = digits.images.reshape((n_samples, -1))
    print("----------- data shape:")
    print(data.shape)

    # Create a classifier: a support vector classifier
    classifier = svm.SVC(gamma=0.001)

    # Split data into shuffled train and test subsets of equal size
    X_train, X_test, y_train, y_test = train_test_split(
        data, digits.target, test_size=0.5, shuffle=True)

    # We learn the digits on the training half
    classifier.fit(X_train, y_train)

    # Now predict the value of the digit on the test half:
    predicted = classifier.predict(X_test)

    # Display some predicted instances. Because the split is shuffled, the test
    # images are rebuilt from X_test; slicing digits.images[n_samples // 2:]
    # would pair the predictions with the wrong images.
    images_and_predictions = list(zip(X_test.reshape((-1, 8, 8)), predicted))
    for ax, (image, prediction) in zip(axes[1, :], images_and_predictions[:4]):
        ax.set_axis_off()
        ax.imshow(image, cmap=plt.cm.gray_r, interpolation='nearest')
        ax.set_title('Prediction: %i' % prediction)

    print("Classification report for classifier %s:\n%s\n"
          % (classifier, metrics.classification_report(y_test, predicted)))

    # metrics.plot_confusion_matrix was removed in scikit-learn 1.2;
    # ConfusionMatrixDisplay.from_estimator (available since 1.0) replaces it.
    disp = metrics.ConfusionMatrixDisplay.from_estimator(classifier, X_test, y_test)
    disp.figure_.suptitle("Confusion Matrix")
    print("Confusion matrix:\n%s" % disp.confusion_matrix)

    # Row-normalized confusion matrix: each row sums to 1
    cm = confusion_matrix(y_test, predicted, normalize="true")
    print("confusion matrix with normalize=true")
    print(cm)

    plt.show()

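In the code, each 8x8 two-dimensional image matrix is flattened into a one-dimensional vector of 64 values. The shapes printed before and after the reshape are: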

    ----------- images shape:
    (1797, 8, 8)
    ----------- data shape:
    (1797, 64)
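The flattening step can be tried in isolation. The sketch below uses three made-up 8x8 arrays filled with consecutive integers instead of the real digits (an assumption made only to keep it self-contained) and shows that `reshape((n_samples, -1))` lays the rows of each image end to end and can be undone.

```python
import numpy as np

# Three fake 8x8 "images" filled with consecutive integers
# (illustrative data, not the real digits dataset).
images = np.arange(3 * 8 * 8).reshape((3, 8, 8))

n_samples = len(images)
# -1 asks NumPy to infer the remaining dimension, here 8 * 8 = 64.
data = images.reshape((n_samples, -1))

print(images.shape)  # (3, 8, 8)
print(data.shape)    # (3, 64)

# Flattening is row-major: features 8..15 of a sample are the second image row.
second_row_matches = np.array_equal(data[0][8:16], images[0][1])
print(second_row_matches)  # True

# The original images can be recovered by reshaping back to 8x8.
restored = data.reshape((-1, 8, 8))
```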

Normalized confusion matrix (each row sums to 1)

    confusion matrix with normalize=true
    [[1.         0.         0.         0.         0.         0.         0.         0.         0.         0.        ]
     [0.         1.         0.         0.         0.         0.         0.         0.         0.         0.        ]
     [0.         0.         1.         0.         0.         0.         0.         0.         0.         0.        ]
     [0.         0.         0.         0.98780488 0.         0.         0.         0.01219512 0.         0.        ]
     [0.         0.         0.         0.         0.99019608 0.         0.         0.         0.00980392 0.        ]
     [0.         0.         0.         0.         0.         0.97894737 0.01052632 0.         0.         0.01052632]
     [0.         0.         0.         0.         0.         0.         1.         0.         0.         0.        ]
     [0.         0.         0.         0.         0.         0.         0.         1.         0.         0.        ]
     [0.         0.03157895 0.         0.         0.         0.         0.         0.         0.96842105 0.        ]
     [0.         0.         0.         0.01136364 0.         0.02272727 0.         0.01136364 0.01136364 0.94318182]]
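With `normalize="true"`, `confusion_matrix` divides each row of counts by the number of samples of that true class, so every row sums to 1 and the diagonal holds the per-class recall. The sketch below checks this on a small set of toy labels; the labels are invented for illustration and do not come from the digits run.

```python
import numpy as np
from sklearn.metrics import confusion_matrix

# Toy labels for three classes (illustrative values only).
y_true = [0, 0, 0, 0, 1, 1, 2, 2, 2, 2]
y_pred = [0, 0, 0, 1, 1, 1, 2, 2, 0, 2]

counts = confusion_matrix(y_true, y_pred)
normalized = confusion_matrix(y_true, y_pred, normalize="true")

# Manual equivalent: divide every row by its sum (samples per true class).
manual = counts / counts.sum(axis=1, keepdims=True)

print(counts)
print(normalized)
print(np.allclose(normalized, manual))
```

Read this way, an entry such as 0.01219512 in the row for digit 3 above equals 1/82, which would be consistent with one of 82 test samples of that digit being predicted as a 7.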

Source: http://www.cnblogs.com/lightsong/

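The copyright of this article is shared by the author and cnblogs (博客园). Reposting is welcome, but unless the author agrees otherwise, this notice must be kept and a link to the original article must be placed prominently on the page.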