Spark


https://spark.apache.org/

Lightning-fast unified analytics engine

Speed

Run workloads 100x faster.

Apache Spark achieves high performance for both batch and streaming data, using a state-of-the-art DAG scheduler, a query optimizer, and a physical execution engine.

Ease of Use

Write applications quickly in Java, Scala, Python, R, and SQL.

Spark offers over 80 high-level operators that make it easy to build parallel apps. And you can use it interactively from the Scala, Python, R, and SQL shells.

Generality

Combine SQL, streaming, and complex analytics.

Spark powers a stack of libraries including SQL and DataFrames, MLlib for machine learning, GraphX, and Spark Streaming. You can combine these libraries seamlessly in the same application.

Runs Everywhere

Spark runs on Hadoop, Apache Mesos, Kubernetes, standalone, or in the cloud. It can access diverse data sources.

You can run Spark using its standalone cluster mode, on EC2, on Hadoop YARN, on Mesos, or on Kubernetes. Access data in HDFS, Alluxio, Apache Cassandra, Apache HBase, Apache Hive, and hundreds of other data sources.

Architecture Diagram

https://www.yiibai.com/spark/apache-spark-architecture.html

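Driver Program

The driver program is the process that runs the application's main() function and creates the SparkContext object. The SparkContext coordinates a Spark application, which runs as an independent set of processes on the cluster. To run on a cluster, the SparkContext connects to one of several types of cluster manager and then performs the following tasks:

- It acquires executors on nodes in the cluster.

- It sends the application code to the executors. Here, the application code is defined by the JAR or Python files passed to the SparkContext.

- Finally, the SparkContext sends tasks to the executors to run.

Cluster Manager

The cluster manager allocates resources across applications. Spark can run on many kinds of clusters.

Supported cluster managers include Hadoop YARN, Apache Mesos, and the Standalone Scheduler.

The Standalone Scheduler is Spark's own cluster manager, which makes it easy to install Spark on an empty set of machines.

Worker Node

- A worker node is a slave node in the cluster.

- Its role is to run the application code in the cluster.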

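Executor

- An executor is a process launched for an application on a worker node.

- It runs tasks and keeps data in memory or on disk.

- It reads data from and writes data to external sources.

- Each application has its own executors.

Task

- A task is a unit of work that is sent to one executor.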
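The division of labor between driver, executors, and tasks can be sketched in plain Python. This is only an illustration under stated assumptions, not Spark code: a thread pool stands in for the executors, and the names run_job and task_sum are hypothetical.

```python
from concurrent.futures import ThreadPoolExecutor


def task_sum(partition):
    """A task: the unit of work that one executor runs on one partition."""
    return sum(partition)


def run_job(data, num_executors=2):
    """Play the driver: split the data, send tasks to executors, merge results."""
    # Split the input into one partition per executor (round-robin).
    partitions = [data[i::num_executors] for i in range(num_executors)]
    # The thread pool is a stand-in for executor processes on worker nodes.
    with ThreadPoolExecutor(max_workers=num_executors) as executors:
        partial_sums = list(executors.map(task_sum, partitions))
    # The driver combines the per-task results into the final answer.
    return sum(partial_sums)


total = run_job(list(range(1, 101)), num_executors=4)
print(total)
```

In a real cluster the executors are separate processes on other machines, and the cluster manager decides where they run.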

Components

https://www.yiibai.com/spark/apache-spark-components.html

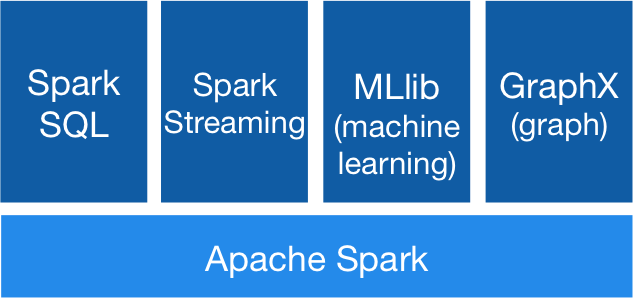

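The Spark project consists of several kinds of tightly integrated components. At its core, Spark is a computational engine that can schedule, distribute, and monitor multiple applications.

Let's look at each Spark component in detail.

Spark Core

- Spark Core is the heart of Spark and performs the core functionality.

- It contains the components for task scheduling, fault recovery, interacting with storage systems, and memory management.

Spark SQL

- Spark SQL is built on top of Spark Core and provides support for structured data.

- It allows data to be queried with SQL (Structured Query Language) as well as the Apache Hive variant of SQL, called HQL (Hive Query Language).

- It supports JDBC and ODBC connections, which link Java objects to existing databases, data warehouses, and business intelligence tools.

- It also supports various data sources, such as Hive tables, Parquet, and JSON.

Spark Streaming

- Spark Streaming is a Spark component that supports scalable and fault-tolerant processing of streaming data.

- It uses Spark Core's fast scheduling capability to perform streaming analytics.

- It accepts data in mini-batches and performs RDD transformations on that data.

- Its design ensures that applications written for streaming data can be reused, with very little modification, to analyze batches of historical data.

- Log files generated by web servers are a real-world example of a data stream.

MLlib

- MLlib is a machine learning library that contains various machine learning algorithms.

- It includes correlation and hypothesis testing, classification and regression, clustering, and principal component analysis.

- It is reported to be 9 times faster than the disk-based implementation used by Apache Mahout.

GraphX

- GraphX is a library for manipulating graphs and performing graph-parallel computations.

- It facilitates creating a directed graph with arbitrary properties attached to each vertex and edge.

- To manipulate graphs, it supports various fundamental operators such as subgraph, joinVertices, and aggregateMessages.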

Quick Start

https://spark.apache.org/docs/latest/quick-start.html

This tutorial provides a quick introduction to using Spark. We will first introduce the API through Spark’s interactive shell (in Python or Scala), then show how to write applications in Java, Scala, and Python.

The transition from RDD to Dataset.

RDDs are still supported, but Dataset is recommended for better performance.

RDD programming guide: https://spark.apache.org/docs/latest/rdd-programming-guide.html

Dataset programming guide: https://spark.apache.org/docs/latest/sql-programming-guide.html

Note that, before Spark 2.0, the main programming interface of Spark was the Resilient Distributed Dataset (RDD). After Spark 2.0, RDDs are replaced by Dataset, which is strongly-typed like an RDD, but with richer optimizations under the hood.

The RDD interface is still supported. However, we highly recommend switching to Dataset, which has better performance than RDD.

An algorithm for estimating the value of π, using a Monte Carlo experiment in the style of Buffon's needle.

Finally, Spark includes several samples in the examples directory (Scala, Java, Python, R). You can run them as follows:

    # For Python examples, use spark-submit directly:
    ./bin/spark-submit examples/src/main/python/pi.py
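As a rough illustration of the idea behind a π example, the following pure-Python sketch samples random points in a square and counts how many land inside the inscribed circle, a simpler relative of the needle-dropping experiment. It runs on a single machine and is not the bundled pi.py; the function name estimate_pi, the sample count, and the seed are assumptions chosen for illustration.

```python
import random


def estimate_pi(num_samples, seed=0):
    """Estimate pi from the fraction of random points inside the unit circle."""
    rng = random.Random(seed)  # fixed seed (an assumption) for repeatable runs
    inside = 0
    for _ in range(num_samples):
        x = rng.uniform(-1.0, 1.0)
        y = rng.uniform(-1.0, 1.0)
        if x * x + y * y <= 1.0:
            inside += 1
    # Area of circle / area of square = pi / 4.
    return 4.0 * inside / num_samples


pi_estimate = estimate_pi(200_000)
print("Pi is roughly %f" % pi_estimate)
```

Each sample is independent of the others, which is why this kind of computation splits naturally across many workers.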

Spark Programming Guide

https://spark.apache.org/docs/0.9.1/scala-programming-guide.html

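This guide helps build an understanding of the concepts involved in distributed computing and programming with Spark.

The examples use Scala, but that does not get in the way of understanding them.

Set the master URL to choose where the application runs: locally, locally with a given number of threads, on a Spark cluster, or on a Mesos cluster.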

Master URLs

The master URL passed to Spark can be in one of the following formats:

| Master URL | Meaning |
| --- | --- |
| local | Run Spark locally with one worker thread (i.e. no parallelism at all). |
| local[K] | Run Spark locally with K worker threads (ideally, set this to the number of cores on your machine). |
| spark://HOST:PORT | Connect to the given Spark standalone cluster master. The port must be whichever one your master is configured to use, which is 7077 by default. |
| mesos://HOST:PORT | Connect to the given Mesos cluster. The host parameter is the hostname of the Mesos master. The port must be whichever one the master is configured to use, which is 5050 by default. |
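To make the four formats concrete, here is a small sketch that classifies a master URL string. The helper describe_master is hypothetical and not part of Spark, which does its own parsing; the hostname master-host is likewise made up for the example.

```python
import re


def describe_master(master):
    """Classify a master URL string using the four formats listed above."""
    if master == "local":
        return ("local", 1)  # one worker thread
    match = re.fullmatch(r"local\[(\d+)\]", master)
    if match:
        return ("local", int(match.group(1)))  # K worker threads
    match = re.fullmatch(r"(spark|mesos)://([^:/]+):(\d+)", master)
    if match:
        scheme, host, port = match.groups()
        return (scheme, host, int(port))
    raise ValueError("unrecognized master URL: %r" % master)


print(describe_master("local[4]"))
print(describe_master("spark://master-host:7077"))  # hypothetical hostname
```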

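RDD: Resilient Distributed Dataset.

Resilient: emphasizes fault tolerance; the data can be recovered after an error.

An RDD defines a series of transformations and actions. A data operation can be expressed as a sequence, or a graph, of transformations and actions. If an intermediate dataset in that sequence or graph is lost to an error, the operation that produced it can be rerun on the preceding result, and the output of that rerun is exactly the lost dataset.

Distributed: the data can be spread across different machines and processed in parallel, which increases computing capacity and shortens computation time.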
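The recovery idea described above can be sketched with a toy class that remembers which parent and which function produced it. This is a single-machine illustration, not Spark's implementation; the class name LineageDataset and its methods are hypothetical.

```python
class LineageDataset:
    """A toy dataset that remembers how it was derived from its parent."""

    def __init__(self, parent=None, func=None, data=None):
        self.parent = parent  # the dataset this one was derived from
        self.func = func      # the transformation applied to the parent
        self._data = data     # materialized elements, or None if lost

    def map(self, func):
        return LineageDataset(parent=self, func=func)

    def lose(self):
        """Simulate a failure that wipes the materialized elements."""
        self._data = None

    def compute(self):
        if self._data is None:
            if self.parent is None:
                raise RuntimeError("source data lost; nothing to recompute from")
            # Rebuild from the parent by replaying the recorded transformation.
            self._data = [self.func(x) for x in self.parent.compute()]
        return self._data


source = LineageDataset(data=[1, 2, 3])
doubled = source.map(lambda x: x * 2)
plus_one = doubled.map(lambda x: x + 1)

before = plus_one.compute()
doubled.lose()   # lose an intermediate result...
plus_one.lose()  # ...and the final one
after = plus_one.compute()
print(before, after)
```

Only the recorded steps are needed to rebuild the lost data, so nothing has to be replicated up front.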

Resilient Distributed Datasets (RDDs)

Spark revolves around the concept of a resilient distributed dataset (RDD), which is a fault-tolerant collection of elements that can be operated on in parallel. There are currently two types of RDDs: parallelized collections, which take an existing Scala collection and run functions on it in parallel, and Hadoop datasets, which run functions on each record of a file in Hadoop distributed file system or any other storage system supported by Hadoop. Both types of RDDs can be operated on through the same methods.

Building a parallelized collection.

Parallelized Collections

Parallelized collections are created by calling SparkContext’s parallelize method on an existing Scala collection (a Seq object). The elements of the collection are copied to form a distributed dataset that can be operated on in parallel. For example, here is some interpreter output showing how to create a parallel collection from an array:

    scala> val data = Array(1, 2, 3, 4, 5)
    data: Array[Int] = Array(1, 2, 3, 4, 5)

    scala> val distData = sc.parallelize(data)
    distData: spark.RDD[Int] = spark.ParallelCollection@10d13e3e
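To picture what copying a collection into a distributed dataset involves, the sketch below cuts a list into contiguous slices that could each be handed to a different worker. The helper slice_collection is hypothetical, and the even contiguous split is an assumption for illustration; Spark's internal slicing may differ.

```python
def slice_collection(data, num_slices):
    """Split data into num_slices contiguous, nearly equal slices."""
    n = len(data)
    return [
        data[(i * n) // num_slices:((i + 1) * n) // num_slices]
        for i in range(num_slices)
    ]


data = [1, 2, 3, 4, 5]
slices = slice_collection(data, 2)
print(slices)
```

Concatenating the slices in order gives back the original collection, so no element is lost or duplicated by the split.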

Alternatively, read a file directly from a file system supported by Hadoop to build a distributed dataset.

Hadoop Datasets

Spark can create distributed datasets from any file stored in the Hadoop distributed file system (HDFS) or other storage systems supported by Hadoop (including your local file system, Amazon S3, Hypertable, HBase, etc). Spark supports text files, SequenceFiles, and any other Hadoop InputFormat.

Text file RDDs can be created using SparkContext’s textFile method. This method takes a URI for the file (either a local path on the machine, or a hdfs://, s3n://, kfs://, etc URI). Here is an example invocation:

    scala> val distFile = sc.textFile("data.txt")
    distFile: spark.RDD[String] = spark.HadoopRDD@1d4cee08

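RDD operations consist of transformations and actions.

Transformation: converts one dataset into another dataset.

Action: aggregates a dataset into a result.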

RDD Operations

RDDs support two types of operations: transformations, which create a new dataset from an existing one, and actions, which return a value to the driver program after running a computation on the dataset. For example, map is a transformation that passes each dataset element through a function and returns a new distributed dataset representing the results. On the other hand, reduce is an action that aggregates all the elements of the dataset using some function and returns the final result to the driver program (although there is also a parallel reduceByKey that returns a distributed dataset).

Transformations

| Transformation | Meaning |
| --- | --- |
| map(func) | Return a new distributed dataset formed by passing each element of the source through a function func. |
| filter(func) | Return a new dataset formed by selecting those elements of the source on which func returns true. |

Actions

| Action | Meaning |
| --- | --- |
| reduce(func) | Aggregate the elements of the dataset using a function func (which takes two arguments and returns one). The function should be commutative and associative so that it can be computed correctly in parallel. |
| collect() | Return all the elements of the dataset as an array at the driver program. This is usually useful after a filter or other operation that returns a sufficiently small subset of the data. |
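The split between transformations and actions can be sketched with a toy class that only records map and filter steps and runs them when collect or reduce is called, mirroring the way Spark evaluates transformations lazily. MiniDataset is a hypothetical, single-machine stand-in and not Spark's API.

```python
from functools import reduce


class MiniDataset:
    """A toy, single-machine stand-in for an RDD (not Spark's API)."""

    def __init__(self, source, steps=()):
        self._source = source
        self._steps = steps  # recorded transformations, not yet run

    # Transformations: return a new dataset and do no work yet.
    def map(self, func):
        return MiniDataset(self._source, self._steps + (("map", func),))

    def filter(self, func):
        return MiniDataset(self._source, self._steps + (("filter", func),))

    def _run(self):
        # Chain the recorded steps over the source elements.
        items = iter(self._source)
        for kind, func in self._steps:
            items = map(func, items) if kind == "map" else filter(func, items)
        return items

    # Actions: run the recorded steps and return a value to the caller.
    def collect(self):
        return list(self._run())

    def reduce(self, func):
        return reduce(func, self._run())


numbers = MiniDataset([1, 2, 3, 4, 5, 6])
even_squares = numbers.map(lambda x: x * x).filter(lambda x: x % 2 == 0)
collected = even_squares.collect()
total = even_squares.reduce(lambda a, b: a + b)
print(collected, total)
```

The addition passed to reduce is both commutative and associative, which is the property the table above asks for.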

RDD API : https://spark.apache.org/docs/0.9.1/api/core/index.html#org.apache.spark.rdd.RDD

Persisting RDDs.

RDD Persistence

One of the most important capabilities in Spark is persisting (or caching) a dataset in memory across operations. When you persist an RDD, each node stores any slices of it that it computes in memory and reuses them in other actions on that dataset (or datasets derived from it). This allows future actions to be much faster (often by more than 10x). Caching is a key tool for building iterative algorithms with Spark and for interactive use from the interpreter.

| Storage Level | Meaning |
| --- | --- |
| MEMORY_ONLY | Store RDD as deserialized Java objects in the JVM. If the RDD does not fit in memory, some partitions will not be cached and will be recomputed on the fly each time they're needed. This is the default level. |
| MEMORY_AND_DISK | Store RDD as deserialized Java objects in the JVM. If the RDD does not fit in memory, store the partitions that don't fit on disk, and read them from there when they're needed. |
| MEMORY_ONLY_SER | Store RDD as serialized Java objects (one byte array per partition). This is generally more space-efficient than deserialized objects, especially when using a fast serializer, but more CPU-intensive to read. |
| MEMORY_AND_DISK_SER | Similar to MEMORY_ONLY_SER, but spill partitions that don't fit in memory to disk instead of recomputing them on the fly each time they're needed. |
| DISK_ONLY | Store the RDD partitions only on disk. |
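Why persisting helps can be seen in a small sketch that counts how often the underlying function runs across two actions, with and without caching. The class CachedDataset and its compute_calls counter are hypothetical and only imitate the in-memory case; they are not Spark's storage levels.

```python
class CachedDataset:
    """A toy dataset that can keep its computed elements in memory."""

    def __init__(self, source, func):
        self._source = source
        self._func = func
        self._cache = None
        self._persist = False
        self.compute_calls = 0  # how many times func has been applied

    def persist(self):
        self._persist = True
        return self

    def _elements(self):
        if self._cache is not None:
            return self._cache  # reuse the stored result
        result = []
        for x in self._source:
            self.compute_calls += 1
            result.append(self._func(x))
        if self._persist:
            self._cache = result
        return result

    # Two actions that both need every element.
    def count(self):
        return len(self._elements())

    def total(self):
        return sum(self._elements())


uncached = CachedDataset(range(1000), lambda x: x * x)
uncached.count()
uncached.total()

cached = CachedDataset(range(1000), lambda x: x * x).persist()
cached.count()
cached.total()
print(uncached.compute_calls, cached.compute_calls)
```

Without persisting, every action recomputes all elements; with it, the second action reuses the stored result.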

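Shared variables let the worker hosts of a distributed job share variables from the main program's environment.

Broadcast variables: defined by the main program and shared by all worker nodes, which cannot modify them.

Accumulators: defined by the main program and shared by all worker nodes, which may only add to them.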

Shared Variables

Normally, when a function passed to a Spark operation (such as map or reduce) is executed on a remote cluster node, it works on separate copies of all the variables used in the function. These variables are copied to each machine, and no updates to the variables on the remote machine are propagated back to the driver program. Supporting general, read-write shared variables across tasks would be inefficient. However, Spark does provide two limited types of shared variables for two common usage patterns: broadcast variables and accumulators.

Broadcast Variables

Broadcast variables allow the programmer to keep a read-only variable cached on each machine rather than shipping a copy of it with tasks. They can be used, for example, to give every node a copy of a large input dataset in an efficient manner. Spark also attempts to distribute broadcast variables using efficient broadcast algorithms to reduce communication cost.

Accumulators

Accumulators are variables that are only “added” to through an associative operation and can therefore be efficiently supported in parallel. They can be used to implement counters (as in MapReduce) or sums. Spark natively supports accumulators of numeric value types and standard mutable collections, and programmers can add support for new types.
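Both patterns can be imitated in plain Python. In this sketch a read-only mapping plays the broadcast variable and per-task counters that the driver sums play the accumulator. It does not use Spark's API; the lookup data, the partitions, and the name run_task are hypothetical.

```python
from concurrent.futures import ThreadPoolExecutor
from types import MappingProxyType

# "Broadcast" value: a read-only lookup table every task may consult.
# The contents are hypothetical sample data.
COUNTRY_NAMES = MappingProxyType({"cn": "China", "us": "United States"})


def run_task(partition):
    """Process one partition; return its output plus a local counter."""
    names, unknown = [], 0
    for code in partition:
        if code in COUNTRY_NAMES:
            names.append(COUNTRY_NAMES[code])
        else:
            unknown += 1  # tasks only ever add to the counter
    return names, unknown


partitions = [["cn", "us", "xx"], ["us", "yy", "zz"]]
with ThreadPoolExecutor(max_workers=2) as pool:
    results = list(pool.map(run_task, partitions))

# The driver merges the per-task counts. Addition is associative, so the
# order in which partial counts are merged does not matter.
unknown_total = sum(unknown for _, unknown in results)
all_names = [name for names, _ in results for name in names]
print(all_names, unknown_total)
```

Because tasks never read the counter and only add to it, no locking or coordination between tasks is needed.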

Python Programming Guide

https://spark.apache.org/docs/0.9.1/python-programming-guide.html

To run a Python script:

    ./bin/pyspark python/examples/wordcount.py

To start the interactive shell:

    $ ./bin/pyspark

The Python shell can be used to explore data interactively and is a simple way to learn the API:

    >>> words = sc.textFile("/usr/share/dict/words")
    >>> words.filter(lambda w: w.startswith("spar")).take(5)
    [u'spar', u'sparable', u'sparada', u'sparadrap', u'sparagrass']
    >>> help(pyspark) # Show all pyspark functions

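Recommended Tutorials

The Xiamen University big data course. The videos are on the MOOC app, it is a complete course, and it comes with web pages of accompanying lab guides. Highly recommended.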

http://dblab.xmu.edu.cn/blog/1709-2/

http://dblab.xmu.edu.cn/blog/1689-2/

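    from pyspark import SparkContext

    # Run locally with a single worker thread; 'test' is the application name.
    sc = SparkContext('local', 'test')
    logFile = "file:///usr/local/spark/README.md"
    # Read the file, asking for 2 partitions, and cache it for reuse.
    logData = sc.textFile(logFile, 2).cache()
    # Count the lines that contain 'a' and the lines that contain 'b'.
    numAs = logData.filter(lambda line: 'a' in line).count()
    numBs = logData.filter(lambda line: 'b' in line).count()
    print('Lines with a: %s, Lines with b: %s' % (numAs, numBs))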

