lightdb逻辑备份、恢复最佳实践

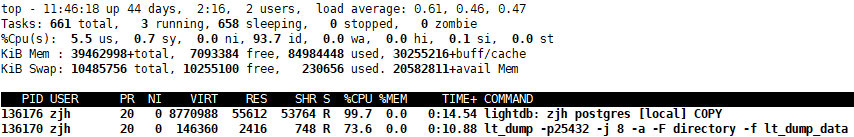

lt_dump采用的是ddl和数据分开的方式导出(虽然数据也支持insert模式,但默认是copy模式,性能最佳,压缩率最高),支持串行和并行导出,并行的时候按照对象级级并发(所以采用lightdb部署模式会非常快,资源可以用完)。

如下:

[zjh@hs-10-20-30-193 lt_dump_data_only]$ lt_dump --help lt_dump dumps a database as a text file or to other formats. Usage: lt_dump [OPTION]... [DBNAME] General options: -f, --file=FILENAME output file or directory name -F, --format=c|d|t|p output file format (custom, directory, tar, plain text (default)) -j, --jobs=NUM use this many parallel jobs to dump -v, --verbose verbose mode -V, --version output version information, then exit -Z, --compress=0-9 compression level for compressed formats --lock-wait-timeout=TIMEOUT fail after waiting TIMEOUT for a table lock --no-sync do not wait for changes to be written safely to disk -?, --help show this help, then exit Options controlling the output content: -a, --data-only dump only the data, not the schema -b, --blobs include large objects in dump -B, --no-blobs exclude large objects in dump -c, --clean clean (drop) database objects before recreating -C, --create include commands to create database in dump -E, --encoding=ENCODING dump the data in encoding ENCODING -n, --schema=PATTERN dump the specified schema(s) only -N, --exclude-schema=PATTERN do NOT dump the specified schema(s) -O, --no-owner skip restoration of object ownership in plain-text format -s, --schema-only dump only the schema, no data -S, --superuser=NAME superuser user name to use in plain-text format -t, --table=PATTERN dump the specified table(s) only -T, --exclude-table=PATTERN do NOT dump the specified table(s) -x, --no-privileges do not dump privileges (grant/revoke) --binary-upgrade for use by upgrade utilities only --column-inserts dump data as INSERT commands with column names --disable-dollar-quoting disable dollar quoting, use SQL standard quoting --disable-triggers disable triggers during data-only restore --enable-row-security enable row security (dump only content user has access to) --exclude-table-data=PATTERN do NOT dump data for the specified table(s) --extra-float-digits=NUM override default setting for extra_float_digits --if-exists use IF EXISTS when dropping objects --include-foreign-data=PATTERN include data of foreign tables on foreign servers matching PATTERN --inserts dump data as INSERT commands, rather than COPY --load-via-partition-root load partitions via the root table --no-comments do not dump comments --no-publications do not dump publications --no-security-labels do not dump security label assignments --no-subscriptions do not dump subscriptions --no-synchronized-snapshots do not use synchronized snapshots in parallel jobs --no-tablespaces do not dump tablespace assignments --no-unlogged-table-data do not dump unlogged table data --on-conflict-do-nothing add ON CONFLICT DO NOTHING to INSERT commands --quote-all-identifiers quote all identifiers, even if not key words --rows-per-insert=NROWS number of rows per INSERT; implies --inserts --section=SECTION dump named section (pre-data, data, or post-data) --serializable-deferrable wait until the dump can run without anomalies --snapshot=SNAPSHOT use given snapshot for the dump --strict-names require table and/or schema include patterns to match at least one entity each --use-set-session-authorization use SET SESSION AUTHORIZATION commands instead of ALTER OWNER commands to set ownership Connection options: -d, --dbname=DBNAME database to dump -h, --host=HOSTNAME database server host or socket directory -p, --port=PORT database server port number -U, --username=NAME connect as specified database user -w, --no-password never prompt for password -W, --password force password prompt (should happen automatically) --role=ROLENAME do SET ROLE before dump If no database name is supplied, then the PGDATABASE environment variable value is used. Report bugs to <https://github.com/hslightdb>. LightDB home page: <https://www.hs.net/lightdb>

默认情况下,lt_dump导出所有对象(包括订阅、发布、无日志表、RLS、表空间等)的定义和数据,并且默认是gzip压缩模式,在数据重复多的场景中,压缩率甚至能够达到50:1。

在并行模式下,默认情况下,lt_dump导出对象时采用的是各自快照,并非全局一致性。不过这个问题不是很大,毕竟备份期间一般不怎么跑业务。

常规用法

同时导出DDL和数据

lt_dump -p25432 -f lt_dump_data.dat --no-publications --no-subscriptions --no-unlogged-table-data postgres -n public

lt_dump -p25432 -j 8 -F d -f lt_dump_data --no-publications --no-subscriptions --no-unlogged-table-data postgres -n public

如果有一个表超级大,那么并行导出的效果就不佳。

导出文件的结构也是三个部分

建表语句

copy from stdin;

建索引、外键、主键等。

分别导出DDL和数据

lt_dump -p25432 -s -f lt_dump_schema_only_data.dat --no-publications --no-subscriptions --no-unlogged-table-data postgres -n public

-- 一般里面可能还包括其他对象,以及一些系统extension,所以不建议包含-C -c选项,即database不删除不重建,不然容易丢失一些三方插件。因为表结构有可能在lt_dump之后发生了变化,所以表结构应该在导入的时候重建,而开源PG并不支持表级别recreate的(lightdb将于22.2版本支持--recreate-table选项,以便在导出的ddl中包含drop table if exists语句)。

lt_dump -p25432 -j 8 -a -F d -f lt_dump_data_only --no-publications --no-subscriptions --no-unlogged-table-data postgres -n public

-- 并行导出数据本身。并行模式下,文件格式必须是directory,因为是并行的粒度是文件(其实就是并行的copy to),默认使用tar.gz压缩(如果编译(可通过lt_config查看)的时候包含了宏LIBZ,则custom和directory模式默认使用zlib默认压缩级别6)。

高效的用法(分基本表结构及对象,数据,后置DDL)

高效、透明的做法建议为将lt_dump分为三个导出脚本:DDL,数据库,后置DDL。

lt_dump -p25432 --section=pre-data -s -f lt_dump_schema_only_predata.dat --no-publications --no-subscriptions --no-unlogged-table-data postgres -n public # 业务表不建议存储在public下

排除或包含必要的表

zjh@mydb=# select * from myns. myns.exclude_t1 myns.t1 myns.t2 ## 排除 exclude_t1表 [zjh@hs-10-20-30-193 ~]$ lt_dump -p 9999 -c -K -F d -f lt_dump_dir -n myns --exclude-table=myns.exclude_t1 --if-exists mydb [zjh@hs-10-20-30-193 ~]$ ll lt_dump_dir/ total 12 -rw-rw-r-- 1 zjh zjh 27 May 29 14:49 3129.dat.gz -rw-rw-r-- 1 zjh zjh 27 May 29 14:49 3130.dat.gz -rw-rw-r-- 1 zjh zjh 1572 May 29 14:49 toc.dat -- 正则模式排除,大小写不敏感 [zjh@hs-10-20-30-193 ~]$ lt_dump -p 9999 -c -K -F d -f lt_dump_dir -n myns --exclude-table=myns.*EX* --if-exists mydb [zjh@hs-10-20-30-193 ~]$ ll lt_dump_dir/ total 12 -rw-rw-r-- 1 zjh zjh 27 May 29 14:46 3129.dat.gz -rw-rw-r-- 1 zjh zjh 27 May 29 14:46 3130.dat.gz -rw-rw-r-- 1 zjh zjh 1572 May 29 14:46 toc.dat [zjh@hs-10-20-30-193 ~]$ rm -rf lt_dump_dir/ ## 多张表的时候,需要-T/--exclude-table声明多次 [zjh@hs-10-20-30-193 ~]$ lt_dump -p 9999 -c -K -F d -f lt_dump_dir -n myns --exclude-table=myns.t1,myns.t2 --if-exists mydb [zjh@hs-10-20-30-193 ~]$ ll lt_dump_dir/ total 16 -rw-rw-r-- 1 zjh zjh 27 May 29 14:46 3129.dat.gz -rw-rw-r-- 1 zjh zjh 27 May 29 14:46 3130.dat.gz -rw-rw-r-- 1 zjh zjh 27 May 29 14:46 3131.dat.gz -rw-rw-r-- 1 zjh zjh 1929 May 29 14:46 toc.dat [zjh@hs-10-20-30-193 ~]$ rm -rf lt_dump_dir/ [zjh@hs-10-20-30-193 ~]$ lt_dump -p 9999 -c -K -F d -f lt_dump_dir -n myns --exclude-table=myns.t1 -T myns.t2 --if-exists mydb [zjh@hs-10-20-30-193 ~]$ ll lt_dump_dir/ total 8 -rw-rw-r-- 1 zjh zjh 27 May 29 14:47 3129.dat.gz -rw-rw-r-- 1 zjh zjh 1279 May 29 14:47 toc.dat

数据导入

导入前性能优化:

work_mem = 32MB

shared_buffers = 4GB

maintenance_work_mem = 2GB

full_page_writes = off

autovacuum = off

wal_buffers = -1LightDB增强

[zjh@hs-10-20-30-193 ~]$ lt_dump --help lt_dump dumps a database as a text file or to other formats. Usage: lt_dump [OPTION]... [DBNAME] General options: -f, --file=FILENAME output file or directory name -F, --format=c|d|t|p output file format (custom, directory, tar, plain text (default)) -j, --jobs=NUM use this many parallel jobs to dump -v, --verbose verbose mode -V, --version output version information, then exit -Z, --compress=0-9 compression level for compressed formats --lock-wait-timeout=TIMEOUT fail after waiting TIMEOUT for a table lock --no-sync do not wait for changes to be written safely to disk -?, --help show this help, then exit Options controlling the output content: -a, --data-only dump only the data, not the schema -b, --blobs include large objects in dump -B, --no-blobs exclude large objects in dump -c, --clean clean (drop) database objects before recreating -C, --create include commands to create database in dump -K, --recreate-schema directly clean (drop) schemas before recreating, more faster then -c command -E, --encoding=ENCODING dump the data in encoding ENCODING

先备份,

lt_dump -p 9999 -c -K -F d -f lt_dump_dir -n myns --exclude-table=myns.t1 -T myns.t2 --if-exists mydb

其次恢复,

[zjh@hs-10-20-30-193 ~]$ lt_restore -c -K -p 9999 -v -n myns lt_dump_dir -d mydb lt_restore: connecting to database for restore lt_restore: dropping SCHEMA myns # 直接删除schema,非常干净、迅速 lt_restore: creating SCHEMA myns lt_restore: creating TABLE "myns.t1" lt_restore: WARNING: LightDB DDL check warn! keyword not allowed with [column name]:id lt_restore: WARNING: LightDB DDL check warn! no primary key! lt_restore: WARNING: LightDB DDL check warn! no gmt_create or no gmt_modified! lt_restore: creating TABLE "myns.t2" lt_restore: WARNING: LightDB DDL check warn! keyword not allowed with [column name]:id lt_restore: WARNING: LightDB DDL check warn! no primary key! lt_restore: WARNING: LightDB DDL check warn! no gmt_create or no gmt_modified! lt_restore: processing data for table "myns.t1" lt_restore: processing data for table "myns.t2" [zjh@hs-10-20-30-193 ~]$

默认pg pg_dump如下:

[zjh@hs-10-20-30-193 ~]$ lt_dump -p 9999 -c -F d -f lt_dump_dir -n myns --if-exists mydb [zjh@hs-10-20-30-193 ~]$ lt_restore -c -p 9999 -v -n myns lt_dump_dir -d mydb lt_restore: connecting to database for restore lt_restore: dropping TABLE t2 lt_restore: dropping TABLE t1 lt_restore: creating TABLE "myns.t1" lt_restore: WARNING: LightDB DDL check warn! keyword not allowed with [column name]:id lt_restore: WARNING: LightDB DDL check warn! no primary key! lt_restore: WARNING: LightDB DDL check warn! no gmt_create or no gmt_modified! lt_restore: creating TABLE "myns.t2" lt_restore: WARNING: LightDB DDL check warn! keyword not allowed with [column name]:id lt_restore: WARNING: LightDB DDL check warn! no primary key! lt_restore: WARNING: LightDB DDL check warn! no gmt_create or no gmt_modified! lt_restore: processing data for table "myns.t1" lt_restore: processing data for table "myns.t2"

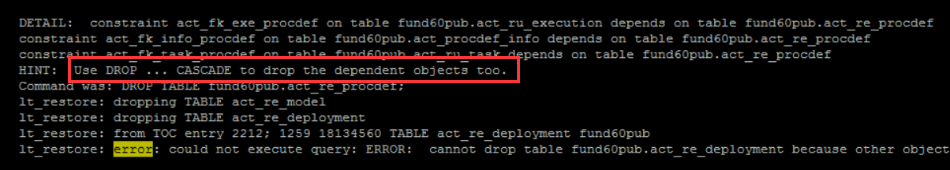

如果没有使用-K选项,LightDB在drop table上增强了drop table xxx cascade选项,可解决因为外键约束。虽然pg_dump在内部已经基于pg_depend做了依赖关系的查询和排序处理。默认生成的DDL如下:

ALTER TABLE IF EXISTS ONLY myns.t2 DROP CONSTRAINT IF EXISTS t2_id_fkey;

ALTER TABLE IF EXISTS ONLY myns.t1 DROP CONSTRAINT IF EXISTS t1_pkey;

DROP TABLE IF EXISTS myns.t2;

DROP TABLE IF EXISTS myns.t1;

DROP SCHEMA IF EXISTS myns;

但是仍然会有pg_dump执行失败的情况,只要关系复杂就会出现这个问题,如下:

而lt_dump就不会有这个问题,生成的DDL如下:

ALTER TABLE IF EXISTS ONLY myns.t2 DROP CONSTRAINT IF EXISTS t2_id_fkey;

ALTER TABLE IF EXISTS ONLY myns.t1 DROP CONSTRAINT IF EXISTS t1_pkey;

DROP TABLE IF EXISTS myns.t2 CASCADE;

DROP TABLE IF EXISTS myns.t1 CASCADE;

DROP SCHEMA IF EXISTS myns;

此外,LightDB 22.2提供了一个额外的客户端工具lt_group_executor,用于代替lt_restore执行--post-data部分进行索引重建,可最大程度的加快数据导入后的索引重建的效率。

浙公网安备 33010602011771号

浙公网安备 33010602011771号