GBDT:原理及python实现

Table of Contents

GBDT概述

GBDT(Gradient Boosting Decision Tree)梯度提升树是一种以决策树为基模型的boosting方法。首先,以MSE为损失函数的GBDT回归树为例,引入GBDT。假设我们前一轮迭代得到的强学习器是\(f_{k-1}(x)\),损失函数是\(L(y,f_{k-1}(x))\)。那本轮的目标就是寻找一个基模型\(h_k(x)\),使得\(L(y, f_{k}(x)) =L(y, f_{k-1}(x)+ h_k(x))\)最小,注意,这里的损失函数是全局的损失函数。

举一个例子来解释GBDT,假设明天的销售额是3000,我们首先训练一棵Cart树,预测明天的销售额是2000,再训练一颗树,以\(3000-2000=1000\)为预测目标来拟合残差,假设预测值为800,然后继续以200为目标训练决策树,直到结束。

GBDT回归(提升树)

算法流程

首先训练一个Cart树

其损失函数为

其负梯度为

计算每条数据的负梯度,得到数据集

负梯度刚好是实际值与模型拟合值之间的偏差,再训练一个模型用于拟合这个偏差

然后更新学习器

得到学习器k,重复此过程直到结束,最终预测值为

可以看到,对于使用MSE作为损失函数的Cart回归树,损失函数的负梯度就是残差,而由于损失函数中两个参数的关系是\(x_1-x_2\),因此

与

是等价的,而后者就是使用MSE作为损失函数的Cart树的损失函数,也就是说,对单棵树的局部最优就是全局最优,可直接调用决策树包而不加修改

python实现

from multiprocessing import Pool

import numpy as np

from sklearn.ensemble import GradientBoostingRegressor

from sklearn.tree import DecisionTreeRegressor

from multiprocessing import Pool

from sklearn import datasets

from sklearn.metrics import mean_squared_error

class GBDTRegressor:

def __init__(self, X, y, n_estimators=100, learning_rate=0.1, max_depth=3):

self.features = np.array(X)

if len(self.features.shape) == 1:

self.features = self.features.reshape(1, -1)

self.labels = np.array(y).reshape(-1, 1)

self.regressors = []

self.n_estimators = n_estimators

self.max_depth = max_depth

self.learning_rate = learning_rate

def fit(self):

estimator0 = DecisionTreeRegressor(

max_depth=self.max_depth).fit(

self.features, self.labels)

self.regressors.append(estimator0)

predicted_y = self.learning_rate * estimator0.predict(self.features)

for i in range(self.n_estimators - 1):

target = self.labels.ravel() - predicted_y

estimator = DecisionTreeRegressor(

max_depth=self.max_depth).fit(

self.features, target)

self.regressors.append(estimator)

predicted_y = predicted_y + self.learning_rate * \

estimator.predict(self.features)

def _predict(self, regressor, X):

return self.learning_rate * regressor.predict(X)

def predict(self, X):

X = np.array(X)

if len(X.shape) == 1:

X = X.reshape(1, -1)

with Pool() as p:

result = np.array(

p.starmap(

self._predict,

[(reg, X) for reg in self.regressors]))

result[-1, :] = result[-1, :] / self.learning_rate

return np.sum(result, axis=0)

if __name__ == '__main__':

d = datasets.fetch_california_housing()

X = d['data']

y = d['target']

gbdt = GBDTRegressor(X, y)

gbdt.fit()

pre_y = gbdt.predict(X)

print(mean_squared_error(pre_y, y))

sklean_gbdt = GradientBoostingRegressor(n_estimators=100).fit(X, y)

pre_y = sklean_gbdt.predict(X)

print(mean_squared_error(pre_y, y))

0.2593586663475436

0.26188431965892933

GBDT分类

算法流程

GBDT分类由于难以处理残差,因此,使用类似逻辑回归的对数损失函数将预测值的残差连续化,也就是说,GBDT分类使用的也是Cart回归树。

\(f(x)\)表示提升树的输出值,与逻辑回归类似,逻辑回归将线性回归的预测值\(X\theta\)使用sigmoid函数映射到0-1之间,作为预测概率,然后使用最大似然并取负对数作为损失函数。

同样的,GBDT将\(y_if(x_i)\)输入sigmoid函数,作为预测概率

使用最大似然估计得到似然函数

取负对数得

以上就是需要最优化的损失函数,对于每个样本,其损失为:

拟合对象负梯度:

损失函数不同是跟回归相比一个明显的区别,下面是拟合负梯度时的决策树的最优值:

前面提到过,在使用MSE作为损失函数时,总体最优与决策树预测值的最优是一致的,也就是说可以直接使用决策树叶节点均值作为预测值。但是当使用对数函数时,就需要直接优化上面的式子。但是要优化上面的式子比较困难,因此,通常使用以下的近似:

- 初始化问题

在初始化\(f(x)\)时,李航老师的书中

XGB论文中,提到初始化

其实这两者是等价的,假设样本中,正例数量为a,反例数量为b,则有

上式可化为

而

故

python实现

import numpy as np

from sklearn.datasets import make_classification

from sklearn.ensemble import GradientBoostingClassifier

from sklearn.tree import DecisionTreeRegressor

from multiprocessing import Pool, Manager

from sklearn.metrics import accuracy_score

from tqdm import tqdm

class GBDTClassifier:

def __init__(self, X, y, n_estimators=100, learning_rate=0.1,max_depth=3):

self.features = np.array(X)

if len(self.features.shape) == 1:

self.features = self.features.reshape(1, -1)

self.labels = np.array(y).reshape(-1, 1)

self.labels[self.labels == 0] = -1

self.estimators = []

self.tree_predict_value = []

self.init_value = np.log((1 + np.mean(self.labels)) / (1 - np.mean(self.labels)))

self.n_estimators = n_estimators

self.learning_rate = learning_rate

self.max_depth = max_depth

def _cal_cj(self, j, r, area_num, i, dic):

'''

计算叶节点j的预测值

:param j: 节点名

:param r: 本轮样本残差(待预测值)

:param area_num: 本轮样本所在节点

:param i: 轮数

:return: 无返回,将计算结果存入字典中

'''

# if tree_predict_value is None:

# tree_predict_value = self.tree_predict_value

rj = r[area_num == j]

c = np.sum(rj) / np.sum(abs(rj) * (1 - abs(rj)))

dic[j] = c

def fit(self):

fk_1 = self.init_value * np.ones([self.labels.shape[0], 1])

for i in tqdm(range(self.n_estimators)):

# 每一轮迭代,首先计算残差r,然后训练回归树,并获取每个样本所在的叶节点

dic = {}

r = self.labels / (1 + np.exp(self.labels * fk_1))

# print('r',np.unique(r))

dtr = DecisionTreeRegressor(random_state=1,max_depth=self.max_depth).fit(self.features, r)

self.estimators.append(dtr)

area_num = dtr.apply(self.features)

# 并行计算每个节点的预测值

for j in np.unique(area_num):

self._cal_cj(j, r, area_num, i, dic)

self.tree_predict_value.append(dic)

ci = np.array(list(map(lambda x: self.tree_predict_value[i][x], area_num)))

# print('ci',i,ci[0],fk_1[0],self.labels[0])

fk_1 = fk_1 + self.learning_rate * ci.reshape(-1,1)

def _predict(self, i, X):

area_num = self.estimators[i].apply(X)

return np.array(list(map(lambda x: self.learning_rate * self.tree_predict_value[i][x], area_num)))

def predict(self, X):

if len(X.shape) == 1:

X = X.reshape(1, -1)

with Pool() as p:

result = np.array(list(p.starmap(self._predict, [(i, X) for i in range(self.n_estimators)])))

result[-1, :] = result[-1, :] / self.learning_rate

return np.array(np.sum(result, axis=0) >= 0).astype(int)

if __name__ == '__main__':

X, y = make_classification(n_samples=1000, n_features=4,

n_informative=2, n_redundant=0,

random_state=0, shuffle=False)

gbdt = GBDTClassifier(X, y)

gbdt.fit()

print('训练完成')

gbdt_sklearn = GradientBoostingClassifier(criterion='mse').fit(X, y)

print(f"本例准确率:{accuracy_score(y, gbdt.predict(X))}")

print(f"sklearn准确率:{accuracy_score(y, gbdt_sklearn.predict(X))}")

print(gbdt_sklearn.score(X,y))

100%|██████████| 100/100 [00:00<00:00, 304.81it/s]

训练完成

本例准确率:0.992

sklearn准确率:0.991

0.991

多分类

多分类使用Softmax来完成,损失函数是交叉熵损失。

多分类与二分类有几点不同,由于使用交叉熵损失函数,因此,多分类的label是一个\(m\times K\)的矩阵,其中\(K\)为多分类的类别数量。是将原来的类别向量做One-hot的结果。比如,原来的4个样本的标签向量是\([1,2,1,3]\),OneHot之后就变成了

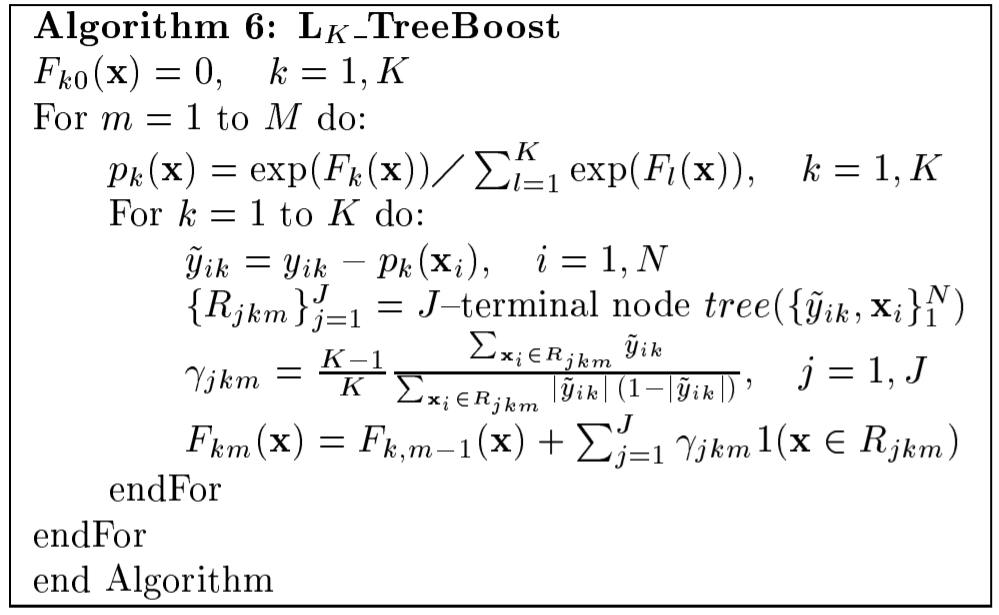

多分类的算法描述如下图:

首先,初始化\(F_{k0}(x)=0\),其中\(K\)是类别数,把这个数代入softmax中,也就是每个样本都初始化一个长度为\(K\)的向量,值为\(\frac1k\),还是上面的例子,就是每个样本初始化向量\(p_0=[\frac13,\frac13,\frac13]\),然后计算负梯度,以第一个样本为例\([\frac23,-\frac13,-\frac13]\),然后训练三棵树分别拟合三列值,方法类似二分类。与二分类的区别就是特征列从1变为3,每轮训练三棵树。