k8s监控终极解决方案(kube-prometheus安装及配置-包含定制化配置)

>>> 目录 <<<

一、概述

二、结构分析

三、Prometheus配置文件修改

四、添加外部监控

五、Scheduler和Controller配置

六、Alertmanager配置

七、监控数据持久化

八、Grafana仪表板配置

九、汇总

十、定制化配置

一、概述

首先Prometheus整体监控结构略微复杂,一个个部署并不简单。另外监控Kubernetes就需要访问内部数据,必定需要进行认证、鉴权、准入控制,

那么这一整套下来将变得难上加难,而且还需要花费一定的时间,如果你没有特别高的要求,还是建议选用开源比较好的一些方案。

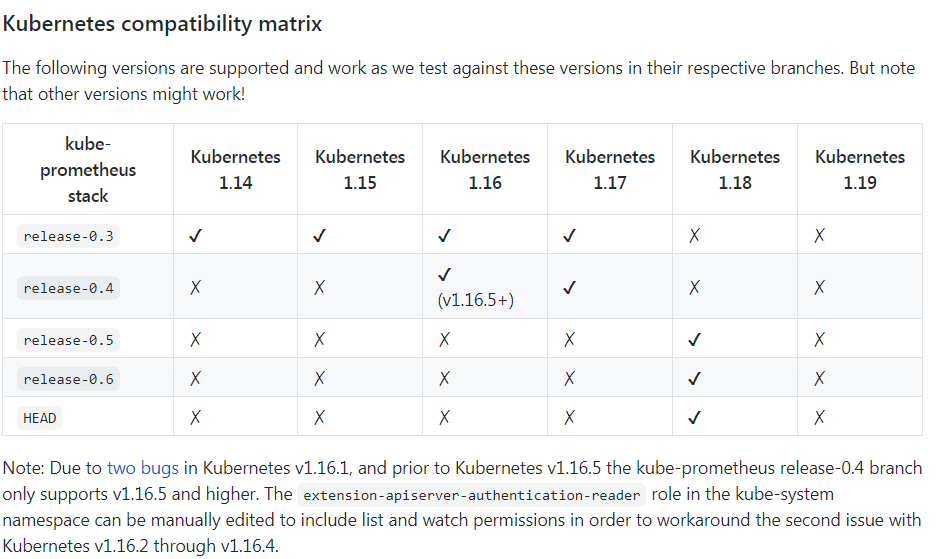

在k8s初期使用Heapster+cAdvisor方式监控,这是Prometheus Operator出现之前的k8s监控方案。后来出现了Prometheus Operator,但是目前Prometheus Operator已经不包含完整功能,完整的解决方案已经变为kube-prometheus。项目地址为:https://github.com/coreos/kube-prometheus关于这个kube-prometheus目前应该是开源最好的方案了,该存储库收集Kubernetes清单,Grafana仪表板和Prometheus规则,以及文档和脚本,以使用Prometheus Operator 通过Prometheus提供易于操作的端到端Kubernetes集群监视。以容器的方式部署到k8s集群,而且还可以自定义配置,非常的方便。

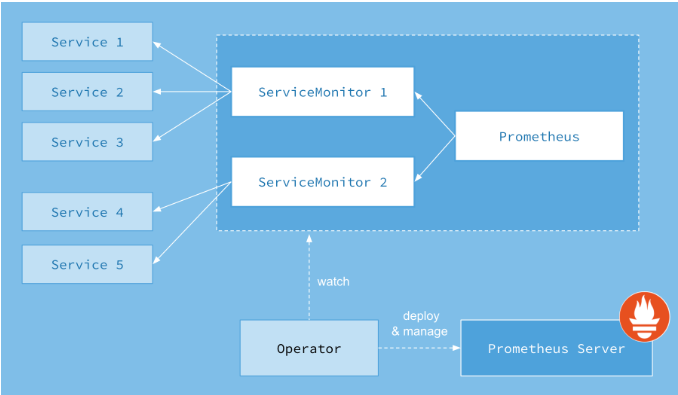

上图是Prometheus-Operator官方提供的架构图,其中Operator是最核心的部分,作为一个控制器,他会去创建Prometheus、ServiceMonitor、AlertManager以及PrometheusRule4个CRD资源对象,然后会一直监控并维持这4个资源对象的状态。

其中创建的prometheus这种资源对象就是作为Prometheus Server存在,而ServiceMonitor就是exporter的各种抽象,是用来提供专门提供metrics数据接口的工具,Prometheus就是通过ServiceMonitor提供的metrics数据接口去 pull 数据的,当然alertmanager这种资源对象就是对应的AlertManager的抽象,而PrometheusRule是用来被Prometheus实例使用的报警规则文件。

这样我们要在集群中监控什么数据,就变成了直接去操作 Kubernetes 集群的资源对象了,是不是方便很多了。上图中的 Service 和 ServiceMonitor 都是 Kubernetes 的资源,一个 ServiceMonitor 可以通过 labelSelector 的方式去匹配一类 Service,Prometheus 也可以通过 labelSelector 去匹配多个ServiceMonitor。

下面是组件的具体介绍:

- Operator: Operator 资源会根据自定义资源(Custom Resource Definition / CRDs)来部署和管理 Prometheus Server,同时监控这些自定义资源事件的变化来做相应的处理,是整个系统的控制中心。

- Prometheus: Prometheus 资源是声明性地描述 Prometheus 部署的期望状态。

- Prometheus Server: Operator 根据自定义资源 Prometheus 类型中定义的内容而部署的 Prometheus Server 集群,这些自定义资源可以看作是用来管理 Prometheus Server 集群的 StatefulSets 资源。

- ServiceMonitor: ServiceMonitor 也是一个自定义资源,它描述了一组被 Prometheus 监控的 targets 列表。该资源通过 Labels 来选取对应的 Service Endpoint,让 Prometheus Server 通过选取的 Service 来获取 Metrics 信息。

- Service: Service 资源主要用来对应 Kubernetes 集群中的 Metrics Server Pod,来提供给 ServiceMonitor 选取让 Prometheus Server 来获取信息。简单的说就是 Prometheus 监控的对象,例如 Node Exporter Service、Mysql Exporter Service 等等。

- Alertmanager: Alertmanager 也是一个自定义资源类型,由 Operator 根据资源描述内容来部署 Alertmanager 集群。

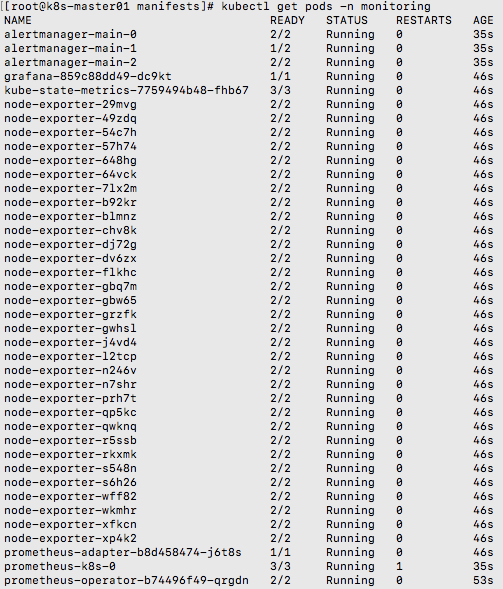

kube-prometheus包含的组件:

- Prometheus Operator

- 高可用的 Prometheus 默认会部署2个pod

- 高可用的 Alertmanager

- Prometheus node-exporter

- Prometheus Adapter for Kubernetes Metrics APIs

- kube-state-metrics

- Grafana

二、结构分析

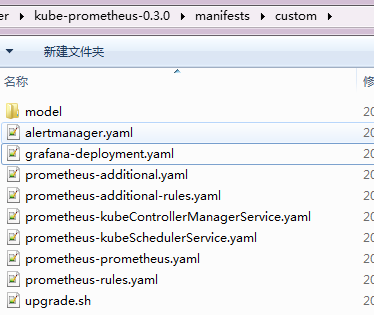

kube-prometheus相关部署文件在manifests目录中,共65个yaml,其中setup文件夹中包含所有自定义资源配置CustomResourceDefinition(一般不用修改,也不要轻易修改),所以部署时必须先执行这个文件夹。

其中包括告警(Alertmanager)、监控(Prometheus)、监控项(PrometheusRule)这三类资源定义,所以如果你想直接在k8s中修改对应控制器配置是没有用的(比如kubectl edit sts prometheus-k8s -n monitoring) 。

这里yaml文件看着很多,只要我们梳理一下就会很容易理解了,首先分为7个组件prometheus-operator、prometheus-adapter、prometheus、alertmanager、grafana、kube-state-metrics、node-exporter,

然后每个组件都会定义控制器、配置文件、集群权限、访问配置、监控配置, 但是我们一般只需要进行自定义告警配置和监控项,这样一筛选发现只需要修改几个文件即可。

[root@ymt108 manifests]# tree

.

├── alertmanager-alertmanager.yaml

├── alertmanager-secret.yaml # 告警配置

├── alertmanager-serviceAccount.yaml

├── alertmanager-serviceMonitor.yaml

├── alertmanager-service.yaml

├── grafana-dashboardDatasources.yaml

├── grafana-dashboardDefinitions.yaml

├── grafana-dashboardSources.yaml

├── grafana-deployment.yaml

├── grafana-serviceAccount.yaml

├── grafana-serviceMonitor.yaml

├── grafana-service.yaml

├── kube-state-metrics-clusterRoleBinding.yaml

├── kube-state-metrics-clusterRole.yaml

├── kube-state-metrics-deployment.yaml

├── kube-state-metrics-roleBinding.yaml

├── kube-state-metrics-role.yaml

├── kube-state-metrics-serviceAccount.yaml

├── kube-state-metrics-serviceMonitor.yaml

├── kube-state-metrics-service.yaml

├── node-exporter-clusterRoleBinding.yaml

├── node-exporter-clusterRole.yaml

├── node-exporter-daemonset.yaml

├── node-exporter-serviceAccount.yaml

├── node-exporter-serviceMonitor.yaml

├── node-exporter-service.yaml

├── prometheus-adapter-apiService.yaml

├── prometheus-adapter-clusterRoleAggregatedMetricsReader.yaml

├── prometheus-adapter-clusterRoleBindingDelegator.yaml

├── prometheus-adapter-clusterRoleBinding.yaml

├── prometheus-adapter-clusterRoleServerResources.yaml

├── prometheus-adapter-clusterRole.yaml

├── prometheus-adapter-configMap.yaml

├── prometheus-adapter-deployment.yaml

├── prometheus-adapter-roleBindingAuthReader.yaml

├── prometheus-adapter-serviceAccount.yaml

├── prometheus-adapter-service.yaml

├── prometheus-clusterRoleBinding.yaml

├── prometheus-clusterRole.yaml

├── prometheus-operator-serviceMonitor.yaml

├── prometheus-prometheus.yaml # 监控配置

├── prometheus-roleBindingConfig.yaml

├── prometheus-roleBindingSpecificNamespaces.yaml

├── prometheus-roleConfig.yaml

├── prometheus-roleSpecificNamespaces.yaml

├── prometheus-rules.yaml # 默认监控项

├── prometheus-serviceAccount.yaml

├── prometheus-serviceMonitorApiserver.yaml

├── prometheus-serviceMonitorCoreDNS.yaml

├── prometheus-serviceMonitorKubeControllerManager.yaml

├── prometheus-serviceMonitorKubelet.yaml

├── prometheus-serviceMonitorKubeScheduler.yaml

├── prometheus-serviceMonitor.yaml

├── prometheus-service.yaml

└── setup

├── 0namespace-namespace.yaml

├── prometheus-operator-0alertmanagerCustomResourceDefinition.yaml

├── prometheus-operator-0podmonitorCustomResourceDefinition.yaml

├── prometheus-operator-0prometheusCustomResourceDefinition.yaml

├── prometheus-operator-0prometheusruleCustomResourceDefinition.yaml

├── prometheus-operator-0servicemonitorCustomResourceDefinition.yaml

├── prometheus-operator-clusterRoleBinding.yaml

├── prometheus-operator-clusterRole.yaml

├── prometheus-operator-deployment.yaml

├── prometheus-operator-serviceAccount.yaml

└── prometheus-operator-service.yaml

1 directories, 65 files

三、Prometheus配置文件修改

为了保留原始文件,建议先备份一份kube-prometheus原文件。

1、修改prometheus-prometheus.yaml,以下为修改后并经生产环境验证过后的配置文件,根据自己的k8s集群环境进行修改

1)replicas:根据项目情况调整副本数

2)retention:修改Prometheus数据保留期限,默认值为“24h”,并且必须与正则表达式“ [0-9] +(ms | s | m | h | d | w | y)”匹配。

3)additionalScrapeConfigs:增加额外监控项配置,具体配置查看第五部分“添加k8s外部监控”。

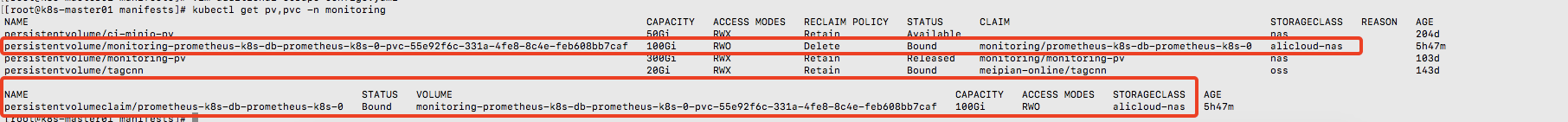

4)storage:volumeClaimTemplate: prometheus数据持久化配置,避免prometheus重启造成数据丢失,此处使用阿里云Nas创建的 StorageClass,创建StorageClass相关文章https://www.cnblogs.com/lidong94/p/14518362.html

5)nodeSelector && tolerations :节点选择器和污点,因为prometheus查询数据量大会导致k8s节点内存升高,如果所在节点上部署着线上服务会受到影响,故独立将prometheus放在特定的节点中(如果不需要可以删除)

apiVersion: monitoring.coreos.com/v1 kind: Prometheus metadata: labels: prometheus: k8s name: k8s namespace: monitoring spec: alerting: alertmanagers: - name: alertmanager-main namespace: monitoring port: web image: quay.io/prometheus/prometheus:v2.20.0 additionalScrapeConfigs: name: additional-scrape-configs key: prometheus-additional.yaml retention: 30d #修改Prometheus数据保留期限,默认值为24h,此处为保留30d, storage: volumeClaimTemplate: spec: storageClassName: alicloud-nas resources: requests: storage: 100Gi nodeSelector: zone: problem tolerations: - effect: NoSchedule key: forever operator: Equal value: problem podMonitorNamespaceSelector: {} podMonitorSelector: {} replicas: 1 #根据项目情况调整副本数,默认是2个 resources: requests: memory: 400Mi ruleSelector: matchLabels: prometheus: k8s role: alert-rules securityContext: fsGroup: 2000 runAsNonRoot: true runAsUser: 1000 serviceAccountName: prometheus-k8s serviceMonitorNamespaceSelector: {} serviceMonitorSelector: {} version: v2.20.0

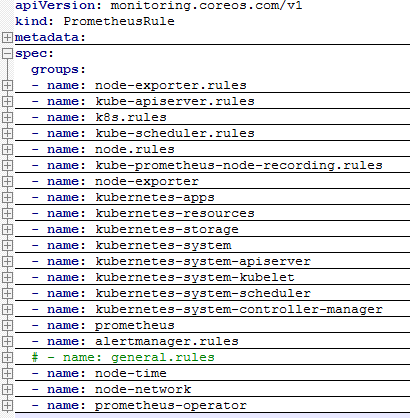

2、PrometheusRule配置文件修改

首先查看默认监控项配置prometheus-rules.yaml,其中包括76个告警项,基本覆盖了k8s常用监控点。