First post, here's to a good start!

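I have been working with FFmpeg for more than a year. My study notes are scattered all over my computer with no clear organization. I have hit and fixed many pitfalls, but I forget them over time, so when one comes back I have to research it from scratch. Doing everything myself is also plainly inefficient and does little to help newer colleagues grow. So I decided to start a blog: to record the path I have taken, to track my own progress, and to help those who come after me.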

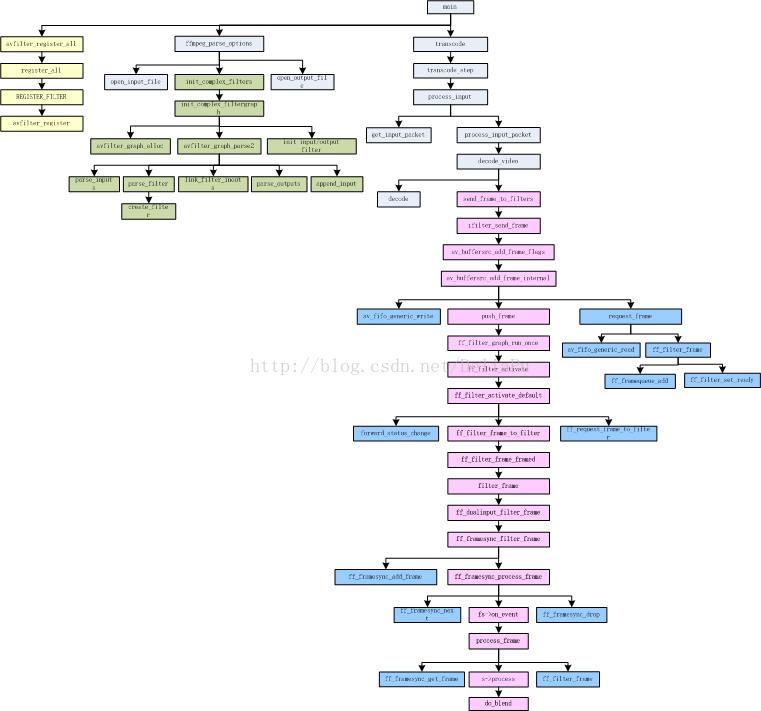

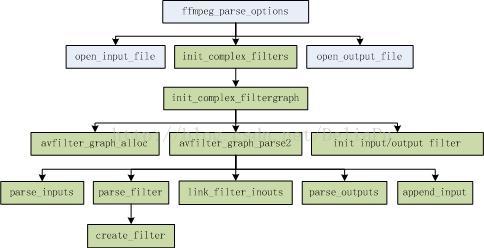

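I am currently developing a new feature based on the overlay filter in FFmpeg 3.3, so I spent some time tracing how a two-input filter is implemented. The figure shows the rough function call graph I put together. The leftmost branch (light yellow) is filter registration. The light green part of the middle branch is filter parsing and initialization. The light purple part of the rightmost branch is the filter's main processing. Many functions are involved, so this post covers only registration and initialization. The filter's actual implementation is left for the next post.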

The command line used:

```shell
ffmpeg -i input.ts -i logo.png -c:v libx264 -s 1280x720 -b:v 2000k -filter_complex "[0:v][1:v]overlay=100:100" -c:a copy -f mpegts -y overlayout.ts
```

I. Filter Registration

avfilter_register_all(): in FFmpeg, every filter must be registered before it can be used. The overlay filter is registered with:

```c
REGISTER_FILTER(OVERLAY, overlay, vf);
```

When CONFIG_OVERLAY_FILTER is enabled, this macro expands to a call to avfilter_register(&ff_vf_overlay):

```c
int avfilter_register(AVFilter *filter)
{
    AVFilter **f = last_filter;

    /* the filter must select generic or internal exclusively */
    av_assert0((filter->flags & AVFILTER_FLAG_SUPPORT_TIMELINE) != AVFILTER_FLAG_SUPPORT_TIMELINE);

    filter->next = NULL;

    while(*f || avpriv_atomic_ptr_cas((void * volatile *)f, NULL, filter))
        f = &(*f)->next;
    last_filter = &filter->next;

    return 0;
}
```

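Registering a filter simply means appending its AVFilter struct to the global linked list of filters. The loop starts at the last known tail and uses an atomic compare-and-swap to claim the first empty `next` slot. This lets concurrent registrations append safely.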

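II. Filter Parsing and Initialization

Overlay takes two inputs, but a simple filtergraph (-vf) allows exactly one input and one output. Overlay therefore has to be used through a complex filtergraph (-filter_complex). Complex filtergraphs are parsed and initialized during the ffmpeg_parse_options() stage. Below we go through what that stage does to the filter, one layer at a time.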

1. init_complex_filters():

```c
static int init_complex_filters(void)
{
    int i, ret = 0;

    for (i = 0; i < nb_filtergraphs; i++) {
        ret = init_complex_filtergraph(filtergraphs[i]);
        if (ret < 0)
            return ret;
    }
    return 0;
}
```

2. init_complex_filtergraph():

```c
int init_complex_filtergraph(FilterGraph *fg)
{
    AVFilterInOut *inputs, *outputs, *cur;
    AVFilterGraph *graph;
    int ret = 0;

    /* this graph is only used for determining the kinds of inputs
     * and outputs we have, and is discarded on exit from this function */
    graph = avfilter_graph_alloc();   // allocate the temporary filter graph
    if (!graph)
        return AVERROR(ENOMEM);

    ret = avfilter_graph_parse2(graph, fg->graph_desc, &inputs, &outputs);   // parse the description and create the filters
    if (ret < 0)
        goto fail;

    for (cur = inputs; cur; cur = cur->next)   // set up each input
        init_input_filter(fg, cur);

    for (cur = outputs; cur;) {   // set up each output; this example has only one
        GROW_ARRAY(fg->outputs, fg->nb_outputs);
        fg->outputs[fg->nb_outputs - 1] = av_mallocz(sizeof(*fg->outputs[0]));
        if (!fg->outputs[fg->nb_outputs - 1])
            exit_program(1);

        fg->outputs[fg->nb_outputs - 1]->graph   = fg;
        fg->outputs[fg->nb_outputs - 1]->out_tmp = cur;
        fg->outputs[fg->nb_outputs - 1]->type    = avfilter_pad_get_type(cur->filter_ctx->output_pads,
                                                                         cur->pad_idx);
        fg->outputs[fg->nb_outputs - 1]->name = describe_filter_link(fg, cur, 0);
        cur = cur->next;
        fg->outputs[fg->nb_outputs - 1]->out_tmp->next = NULL;
    }

fail:
    avfilter_inout_free(&inputs);   // free the temporary input list and graph
    avfilter_graph_free(&graph);
    return ret;
}
```

3. avfilter_graph_parse2():

```c
int avfilter_graph_parse2(AVFilterGraph *graph, const char *filters,
                          AVFilterInOut **inputs,
                          AVFilterInOut **outputs)
{
    int index = 0, ret = 0;
    char chr = 0;

    AVFilterInOut *curr_inputs = NULL, *open_inputs = NULL, *open_outputs = NULL;

    filters += strspn(filters, WHITESPACES);

    if ((ret = parse_sws_flags(&filters, graph)) < 0)
        goto fail;

    do {
        AVFilterContext *filter;
        filters += strspn(filters, WHITESPACES);

        // parse the filter's input labels; here [0:v] and [1:v]
        if ((ret = parse_inputs(&filters, &curr_inputs, &open_outputs, graph)) < 0)
            goto end;
        // parse the filter name and its options, and create the filter
        if ((ret = parse_filter(&filter, &filters, graph, index, graph)) < 0)
            goto end;

        // connect pending inputs to this filter's input pads; unlinked ones go to open_inputs
        if ((ret = link_filter_inouts(filter, &curr_inputs, &open_inputs, graph)) < 0)
            goto end;

        // parse the filter's output labels
        if ((ret = parse_outputs(&filters, &curr_inputs, &open_inputs, &open_outputs,
                                 graph)) < 0)
            goto end;

        filters += strspn(filters, WHITESPACES);
        chr = *filters++;

        if (chr == ';' && curr_inputs)
            append_inout(&open_outputs, &curr_inputs);
        index++;
    } while (chr == ',' || chr == ';');

    if (chr) {
        av_log(graph, AV_LOG_ERROR,
               "Unable to parse graph description substring: \"%s\"\n",
               filters - 1);
        ret = AVERROR(EINVAL);
        goto end;
    }

    append_inout(&open_outputs, &curr_inputs);

    *inputs  = open_inputs;
    *outputs = open_outputs;
    return 0;

 fail:end:
    while (graph->nb_filters)
        avfilter_free(graph->filters[0]);
    av_freep(&graph->filters);
    avfilter_inout_free(&open_inputs);
    avfilter_inout_free(&open_outputs);
    avfilter_inout_free(&curr_inputs);

    *inputs  = NULL;
    *outputs = NULL;

    return ret;
}
```

4. init_input_filter():

```c
static void init_input_filter(FilterGraph *fg, AVFilterInOut *in)
{
    InputStream *ist = NULL;
    // media type of the filter's input pad; only video and audio are supported for now
    enum AVMediaType type = avfilter_pad_get_type(in->filter_ctx->input_pads, in->pad_idx);
    int i;

    // TODO: support other filter types
    if (type != AVMEDIA_TYPE_VIDEO && type != AVMEDIA_TYPE_AUDIO) {
        av_log(NULL, AV_LOG_FATAL, "Only video and audio filters supported "
               "currently.\n");
        exit_program(1);
    }

    if (in->name) {   // the input label, here 0:v or 1:v (brackets already stripped)
        AVFormatContext *s;
        AVStream       *st = NULL;
        char *p;
        int file_idx = strtol(in->name, &p, 0);   // leading number of the label = input file index

        if (file_idx < 0 || file_idx >= nb_input_files) {
            av_log(NULL, AV_LOG_FATAL, "Invalid file index %d in filtergraph description %s.\n",
                   file_idx, fg->graph_desc);
            exit_program(1);
        }
        s = input_files[file_idx]->ctx;   // AVFormatContext of that input file

        for (i = 0; i < s->nb_streams; i++) {
            enum AVMediaType stream_type = s->streams[i]->codecpar->codec_type;
            if (stream_type != type &&
                !(stream_type == AVMEDIA_TYPE_SUBTITLE &&
                  type == AVMEDIA_TYPE_VIDEO /* sub2video hack */))
                continue;
            if (check_stream_specifier(s, s->streams[i], *p == ':' ? p + 1 : p) == 1) {
                st = s->streams[i];   // first stream matching the specifier
                break;
            }
        }
        if (!st) {
            av_log(NULL, AV_LOG_FATAL, "Stream specifier '%s' in filtergraph description %s "
                   "matches no streams.\n", p, fg->graph_desc);
            exit_program(1);
        }
        // index in input_streams[] = the file's first stream index + st->index
        ist = input_streams[input_files[file_idx]->ist_index + st->index];
    } else {   // unlabeled input: pick a stream by media type
        /* find the first unused stream of corresponding type */
        for (i = 0; i < nb_input_streams; i++) {
            ist = input_streams[i];
            if (ist->dec_ctx->codec_type == type && ist->discard)
                break;
        }
        if (i == nb_input_streams) {
            av_log(NULL, AV_LOG_FATAL, "Cannot find a matching stream for "
                   "unlabeled input pad %d on filter %s\n", in->pad_idx,
                   in->filter_ctx->name);
            exit_program(1);
        }
    }
    av_assert0(ist);

    ist->discard          = 0;
    ist->decoding_needed |= DECODING_FOR_FILTER;
    ist->st->discard      = AVDISCARD_NONE;

    GROW_ARRAY(fg->inputs, fg->nb_inputs);   // grow fg->inputs by one
    if (!(fg->inputs[fg->nb_inputs - 1] = av_mallocz(sizeof(*fg->inputs[0]))))   // allocate the new InputFilter
        exit_program(1);
    fg->inputs[fg->nb_inputs - 1]->ist    = ist;   // fill in the new InputFilter
    fg->inputs[fg->nb_inputs - 1]->graph  = fg;
    fg->inputs[fg->nb_inputs - 1]->format = -1;
    fg->inputs[fg->nb_inputs - 1]->type   = ist->st->codecpar->codec_type;
    fg->inputs[fg->nb_inputs - 1]->name   = describe_filter_link(fg, in, 1);

    fg->inputs[fg->nb_inputs - 1]->frame_queue = av_fifo_alloc(8 * sizeof(AVFrame*));
    if (!fg->inputs[fg->nb_inputs - 1]->frame_queue)
        exit_program(1);

    // also record this InputFilter in the input stream's own filter list
    GROW_ARRAY(ist->filters, ist->nb_filters);
    ist->filters[ist->nb_filters - 1] = fg->inputs[fg->nb_inputs - 1];
}
```

5. parse_filter():

Next, let's look at how a single filter instance is parsed and created.

```c
static int parse_filter(AVFilterContext **filt_ctx, const char **buf, AVFilterGraph *graph,
                        int index, void *log_ctx)
{
    char *opts = NULL;
    char *name = av_get_token(buf, "=,;[");   // filter name, here "overlay"
    int ret;

    if (**buf == '=') {   // filter arguments after '=', here "100:100"
        (*buf)++;
        opts = av_get_token(buf, "[],;");
    }

    ret = create_filter(filt_ctx, graph, index, name, opts, log_ctx);   // create the instance from its name and arguments
    av_free(name);
    av_free(opts);
    return ret;
}
```

6. create_filter():

Finally, the filter instance is created.

```c
static int create_filter(AVFilterContext **filt_ctx, AVFilterGraph *ctx, int index,
                         const char *filt_name, const char *args, void *log_ctx)
{
    AVFilter *filt;
    char inst_name[30];
    char *tmp_args = NULL;
    int ret;

    snprintf(inst_name, sizeof(inst_name), "Parsed_%s_%d", filt_name, index);

    filt = avfilter_get_by_name(filt_name);   // look the filter up by name in the registered AVFilter list

    if (!filt) {
        av_log(log_ctx, AV_LOG_ERROR,
               "No such filter: '%s'\n", filt_name);
        return AVERROR(EINVAL);
    }

    *filt_ctx = avfilter_graph_alloc_filter(ctx, filt, inst_name);   // allocate the instance and add it to the graph
    if (!*filt_ctx) {
        av_log(log_ctx, AV_LOG_ERROR,
               "Error creating filter '%s'\n", filt_name);
        return AVERROR(ENOMEM);
    }

    if (!strcmp(filt_name, "scale") && (!args || !strstr(args, "flags")) &&
        ctx->scale_sws_opts) {
        if (args) {
            tmp_args = av_asprintf("%s:%s",
                                   args, ctx->scale_sws_opts);
            if (!tmp_args)
                return AVERROR(ENOMEM);
            args = tmp_args;
        } else
            args = ctx->scale_sws_opts;
    }

    ret = avfilter_init_str(*filt_ctx, args);   // parse args into the filter's private context and run its init
    if (ret < 0) {
        av_log(log_ctx, AV_LOG_ERROR,
               "Error initializing filter '%s'", filt_name);
        if (args)
            av_log(log_ctx, AV_LOG_ERROR, " with args '%s'", args);
        av_log(log_ctx, AV_LOG_ERROR, "\n");
        avfilter_free(*filt_ctx);
        *filt_ctx = NULL;
    }

    av_free(tmp_args);
    return ret;
}
```

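The above covers only filter registration, parsing, creation, and initialization. Basic filter concepts such as the filter graph, filter links, and input/output pads are not explained in detail here for reasons of space. I plan to write a separate post on them when I have time.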

Zhejiang Public Security Bureau filing No. 33010602011771