Web Crawlers: Scraping JD.com Product Pages

1. Scraping a JD.com product page

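    import requests


    def getHTMLText(url):
        try:
            r = requests.get(url)
            r.raise_for_status()  # raises HTTPError for 4xx/5xx status codes
            r.encoding = r.apparent_encoding  # use the encoding guessed from the body
            return r.text[:1000]  # only the first 1000 characters
        except requests.RequestException:
            return "An exception occurred"


    if __name__ == "__main__":
        url = "https://item.jd.com/100000177748.html"
        print(getHTMLText(url))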
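The behaviour of the `raise_for_status()` call in the code above can be checked without touching the network. The sketch below builds bare `requests.Response` objects by hand, which is done purely for illustration (real responses come from `requests.get`), and suggests that only 4xx and 5xx status codes raise `HTTPError`. The helper name `raises_http_error` is hypothetical.

```python
import requests


def raises_http_error(status_code):
    """Return True if raise_for_status() raises for this status code."""
    r = requests.Response()      # empty response object, no network involved
    r.status_code = status_code  # set by hand, for illustration only
    try:
        r.raise_for_status()
    except requests.HTTPError:
        return True
    return False


if __name__ == "__main__":
    for code in (200, 302, 404, 503):
        print(code, raises_http_error(code))
```

In other words, a redirect or any other non-error status passes through silently; only client and server errors end up in the `except` branch.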

2. Scraping an Amazon product page

Change the User-Agent request header so that the code imitates a browser when it sends HTTP requests to the Amazon server.

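    import requests


    def getHTMLText(url):
        try:
            kv = {'user-agent': 'Mozilla/5.0'}  # present the request as coming from a browser
            r = requests.get(url, headers=kv)
            r.raise_for_status()  # raises HTTPError for 4xx/5xx status codes
            r.encoding = r.apparent_encoding  # use the encoding guessed from the body
            return r.text[:1000]  # only the first 1000 characters
        except requests.RequestException:
            return "An exception occurred"


    if __name__ == "__main__":
        url = "https://www.aliyun.com/?utm_content=se_1000301881"
        print(getHTMLText(url))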

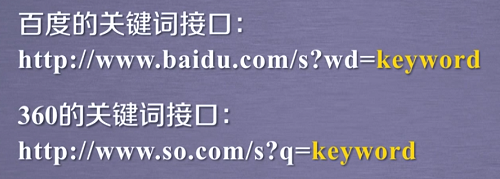
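To see what the header override changes, it can help to compare the User-Agent that requests sends by default with the overridden one. The sketch below only prepares requests and never sends them, so no network access is needed. The helper name `effective_user_agent` and the `https://example.com/` URL are placeholders introduced here, not part of the original code.

```python
import requests


def effective_user_agent(url, headers=None):
    """Return the User-Agent header a Session would send for this request."""
    with requests.Session() as session:
        req = requests.Request("GET", url, headers=headers)
        # prepare_request merges the session's default headers with ours
        prepared = session.prepare_request(req)
        return prepared.headers["User-Agent"]


if __name__ == "__main__":
    url = "https://example.com/"  # placeholder URL, nothing is fetched
    print(effective_user_agent(url))                                 # library default
    print(effective_user_agent(url, {"user-agent": "Mozilla/5.0"}))  # browser-like value
```

The default value identifies the client as `python-requests/<version>`, which some servers reject; that is the motivation for overriding it.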

3. Submitting search keywords to Baidu / 360

Keyword URLs for the two major search engines

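    #!/usr/bin/python3
    import requests

    kv = {'wd': 'python'}  # Baidu reads the search keyword from the 'wd' parameter
    url = 'http://www.baidu.com/s'  # search endpoint; the bare home page does not run a search
    r = requests.get(url, params=kv)
    print(r.status_code)
    print(r.request.url)  # the URL that was actually requested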
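The same pattern should carry over to 360 Search. The sketch below assumes the `/s` search endpoint on both hosts and the `q` parameter name for 360; neither is stated in the text above, so treat them as assumptions. It only builds the URLs and does not send any request. `build_search_url` and `SEARCH_ENGINES` are hypothetical names.

```python
import requests

# Endpoint and keyword parameter per engine (assumed, not taken from the text).
SEARCH_ENGINES = {
    "baidu": ("http://www.baidu.com/s", "wd"),
    "360": ("http://www.so.com/s", "q"),
}


def build_search_url(engine, keyword):
    """Return the full search URL for the keyword without sending a request."""
    base, param = SEARCH_ENGINES[engine]
    prepared = requests.Request("GET", base, params={param: keyword}).prepare()
    return prepared.url


if __name__ == "__main__":
    print(build_search_url("baidu", "python"))
    print(build_search_url("360", "python"))
```

Passing the keyword through `params` rather than concatenating strings lets requests handle the URL encoding.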

4. Downloading and saving an image from the web

    #!/usr/bin/python3
    import requests

    path = 'F:/章若楠.jpg'
    url = 'http://n.sinaimg.cn/sinacn20112/200/w720h1080/20181211/4894-hprknvu2906379.jpg'
    r = requests.get(url)
    print(r.status_code)
    with open(path, 'wb') as f:
        f.write(r.content)  # r.content holds the raw bytes of the response body
    print(path + ' saved successfully')

4.1. Downloading and saving images from the web (improved version)

Use the os module to save the images under a specified directory, and use try/except to catch exceptions.

    #!/usr/bin/python3
    import os

    import requests

    url1 = 'http://n.sinaimg.cn/sinacn20112/200/w720h1080/20181211/4894-hprknvu2906379.jpg'
    url2 = 'https://wx2.sinaimg.cn/mw690/9b6feba7ly1g1xme8o229j21sc2dsx6p.jpg'
    url3 = 'https://wx3.sinaimg.cn/mw690/9b6feba7ly1g1xmdm0ib6j21o728xx6q.jpg'
    url4 = 'https://wx1.sinaimg.cn/mw690/9b6feba7ly1g1qq8m4wkbj22ds1scb29.jpg'
    url5 = 'https://wx3.sinaimg.cn/mw690/9b6feba7ly1g1ihqc347ej22c02c07wt.jpg'
    list_url = [url1, url2, url3, url4, url5]
    print('Number of URLs:', len(list_url))

    root = 'F:/妹子资源/'
    list_path = []
    for num in range(len(list_url)):
        # one numbered file name per URL
        list_path.append(root + '章若楠' + str(num) + '.jpg')
        print('Save path: ' + list_path[num])


    def get_resource(url, path):
        try:
            if not os.path.exists(root):
                os.mkdir(root)  # create the target directory on first use
            if not os.path.exists(path):
                r = requests.get(url)
                with open(path, 'wb') as f:
                    f.write(r.content)
                print('Resource downloaded successfully')
            else:
                print('Resource already exists')
        except (requests.RequestException, OSError):
            print('Download failed')


    for url, path in zip(list_url, list_path):
        get_resource(url, path)
        # print(url, path)
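A possible variation on the approach above is to derive the file name from the URL instead of numbering files by hand, and to create the directory with `os.makedirs(..., exist_ok=True)`. This is a sketch under stated assumptions, not the original author's method: `fetch_bytes`, `save_resource`, the `fetch` parameter (which lets the download step be swapped out for a stub) and the 30-second timeout are all introduced here for illustration.

```python
import os

import requests


def fetch_bytes(url):
    """Download url and return the response body as bytes."""
    r = requests.get(url, timeout=30)  # timeout value is an arbitrary choice
    r.raise_for_status()
    return r.content


def save_resource(url, root, fetch=fetch_bytes):
    """Save url under root, named after the last segment of the URL.

    Returns the saved path, or None if the download or the write failed.
    """
    path = os.path.join(root, url.split("/")[-1])
    if os.path.exists(path):
        return path  # already downloaded, skip the request
    try:
        os.makedirs(root, exist_ok=True)  # no error if the directory exists
        data = fetch(url)
        with open(path, "wb") as f:
            f.write(data)
        return path
    except (requests.RequestException, OSError):
        return None
```

Returning the path (or `None`) instead of printing leaves it to the caller to decide how to report success or failure. Note that a URL with a query string would need extra handling before its last segment is usable as a file name.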