RabbitMQ Publish/Subscribe pattern: Python implementation

Let's quickly go over what we covered in the previous tutorials:

- A producer is a user application that sends messages.

- A queue is a buffer that stores messages.

- A consumer is a user application that receives messages.

In this part we publish log messages to a fanout exchange named logs, which we declare like this:

channel.exchange_declare(exchange='logs', type='fanout')

Listing exchanges

To list the exchanges on the server you can run the ever useful rabbitmqctl:

$ sudo rabbitmqctl list_exchanges

Listing exchanges ...

logs fanout

amq.direct direct

amq.topic topic

amq.fanout fanout

amq.headers headers

...done.

In this list there are some amq.* exchanges and the default (unnamed) exchange. These are created by default, but it is unlikely you'll need to use them at the moment.

Nameless exchange

In previous parts of the tutorial we knew nothing about exchanges, but still were able to send messages to queues. That was possible because we were using a default exchange, which we identify by the empty string ("").

# In short: in the earlier tutorials we could send messages to queues without naming an exchange because we were using the default exchange.

Recall how we published a message before:

channel.basic_publish(exchange='',routing_key='hello',body=message)

The exchange parameter is the name of the exchange. The empty string denotes the default or nameless exchange: messages are routed to the queue with the name specified by routing_key, if it exists.

# In short: the exchange argument names the exchange; leaving it empty selects the default (nameless) exchange, which routes each message to the queue named by routing_key.
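The routing rule of the default exchange can be illustrated with a small in-memory model. This is only a sketch: the class DefaultExchangeSketch and its methods are hypothetical names chosen to resemble the pika calls, and nothing here talks to a real broker.

```python
from collections import deque


class DefaultExchangeSketch:
    """Toy in-memory model of the default ("") exchange; not RabbitMQ code."""

    def __init__(self):
        self.queues = {}  # queue name -> deque of message bodies

    def queue_declare(self, queue):
        # Declaring is idempotent: an existing queue keeps its messages.
        self.queues.setdefault(queue, deque())

    def basic_publish(self, routing_key, body):
        # The routing key is interpreted as a queue name.
        target = self.queues.get(routing_key)
        if target is None:
            return False  # no queue with that name: the message is dropped
        target.append(body)
        return True


broker = DefaultExchangeSketch()
broker.queue_declare("hello")
ok_hello = broker.basic_publish(routing_key="hello", body="Hello World!")
ok_missing = broker.basic_publish(routing_key="missing", body="nobody hears this")
print(ok_hello, ok_missing, list(broker.queues["hello"]))
```

The first publish lands in the hello queue because a queue with that name exists; the second is dropped because no queue is called missing.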

Now we can modify the code to publish to our named exchange instead:

channel.basic_publish(exchange='logs', routing_key='', body=message)

Temporary queues

As you may remember, previously we were using queues which had a specified name (remember hello and task_queue?). Being able to name a queue was crucial for us: we needed to point the workers to the same queue. Giving a queue a name is important when you want to share the queue between producers and consumers.

# In short: earlier we gave our queues specific names so that all workers could point at the same queue; naming a queue matters when producers and consumers share it.

But that's not the case for our logger. We want to hear about all log messages, not just a subset of them. We're also interested only in currently flowing messages, not in the old ones. To solve that we need two things.

Firstly, whenever we connect to Rabbit we need a fresh, empty queue. To do it we could create a queue with a random name, or, even better - let the server choose a random queue name for us. We can do this by not supplying the queue parameter to queue_declare:

result = channel.queue_declare()

Secondly, once we disconnect the consumer the queue should be deleted. There's an exclusive flag for that:

result = channel.queue_declare(exclusive=True)
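To make the two requirements concrete, here is a rough in-memory sketch of a server-named, exclusive queue. BrokerSketch, its methods, and the connection ids are hypothetical; the amq.gen- prefix follows the style of names RabbitMQ assigns, but the random suffix is invented for illustration.

```python
import secrets


class BrokerSketch:
    """Toy model of server-named, exclusive queues; not RabbitMQ code."""

    def __init__(self):
        self.queues = {}  # queue name -> owning connection id (None if shared)

    def queue_declare(self, connection_id, queue="", exclusive=False):
        # An empty name asks the "server" to invent one.
        name = queue or "amq.gen-" + secrets.token_urlsafe(16)
        self.queues[name] = connection_id if exclusive else None
        return name

    def close_connection(self, connection_id):
        # Exclusive queues disappear together with their declaring connection.
        self.queues = {name: owner for name, owner in self.queues.items()
                       if owner != connection_id}


broker = BrokerSketch()
shared = broker.queue_declare("conn-1", queue="task_queue")
temp = broker.queue_declare("conn-1", exclusive=True)
print(temp.startswith("amq.gen-"), len(broker.queues))

broker.close_connection("conn-1")
print(list(broker.queues))  # only the shared, named queue is left
```

The named queue survives the disconnect, while the exclusive one is removed along with its connection.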

Bindings

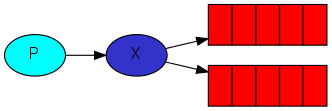

We've already created a fanout exchange and a queue. Now we need to tell the exchange to send messages to our queue. That relationship between exchange and a queue is called a binding.

# In short: we have created a fanout exchange and a queue; now we tell the exchange to send messages to our queue, and that relationship between the two is called a binding.

channel.queue_bind(exchange='logs', queue=result.method.queue)

From now on the logs exchange will append messages to our queue.
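What a binding means for a fanout exchange can be sketched in a few lines of plain Python. FanoutExchangeSketch is a hypothetical stand-in, with ordinary lists playing the role of queues; it only models the behaviour described above and does not use pika.

```python
class FanoutExchangeSketch:
    """Toy in-memory fanout exchange; not RabbitMQ code."""

    def __init__(self):
        self.bound_queues = []  # every queue bound to this exchange

    def queue_bind(self, queue):
        # A binding registers the queue as a destination (identity check,
        # because two empty lists would otherwise compare equal).
        if not any(queue is existing for existing in self.bound_queues):
            self.bound_queues.append(queue)

    def basic_publish(self, body, routing_key=""):
        # Fanout ignores routing_key and copies the message to every bound queue.
        for queue in self.bound_queues:
            queue.append(body)
        return len(self.bound_queues)


logs = FanoutExchangeSketch()
screen_queue, file_queue = [], []
logs.queue_bind(screen_queue)
logs.queue_bind(file_queue)

copies = logs.basic_publish("info: Hello World!", routing_key="ignored")
print(copies, screen_queue, file_queue)
```

Both queues receive their own copy of the message, regardless of the routing key passed in.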

Listing bindings

You can list existing bindings using, you guessed it, rabbitmqctl list_bindings.

Putting it all together

The producer program, which emits log messages, doesn't look much different from the previous tutorial. The most important change is that we now want to publish messages to our logs exchange instead of the nameless one. We need to supply a routing_key when sending, but its value is ignored for fanout exchanges. Here goes the code for emit_log.py script:

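# In short: the producer, which emits log messages, looks much like the one in the previous tutorial. The key change is that it publishes to our logs exchange instead of the nameless one; a routing_key must still be supplied, but a fanout exchange ignores its value.

GitHub link: https://github.com/rabbitmq/rabbitmq-tutorials/blob/master/python/emit_log.py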

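    #!/usr/bin/env python
    # Written against the pika < 1.0 API; pika 1.0+ spells the argument exchange_type='fanout'.
    import sys

    import pika

    # Connect to the broker on localhost and open a channel.
    connection = pika.BlockingConnection(pika.ConnectionParameters(host='localhost'))
    channel = connection.channel()

    # Declare the fanout exchange; publishing to a missing exchange is forbidden.
    channel.exchange_declare(exchange='logs', type='fanout')

    # Use the command-line arguments as the message, or fall back to a default.
    message = ' '.join(sys.argv[1:]) or "info: Hello World!"
    channel.basic_publish(exchange='logs', routing_key='', body=message)
    print(" [x] Sent %r" % message)
    connection.close()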

As you see, after establishing the connection we declared the exchange. This step is necessary as publishing to a non-existing exchange is forbidden.

The messages will be lost if no queue is bound to the exchange yet, but that's okay for us; if no consumer is listening yet we can safely discard the message.

# In short: after establishing the connection we declare the exchange, because publishing to a non-existent exchange is rejected. Messages are lost if no queue is bound to the exchange yet, which is fine here: with no consumer listening, the message can safely be discarded.
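Back in emit_log.py, the message is built with a join followed by an or fallback. The sketch below isolates that idiom in a helper so it can be tried without a broker; build_message is a hypothetical name, not part of the tutorial code.

```python
def build_message(argv):
    """Join command-line words into a message, with a default when none are given."""
    # ' '.join([]) is the empty string, which is falsy, so `or` picks the default.
    return " ".join(argv[1:]) or "info: Hello World!"


print(build_message(["emit_log.py"]))
print(build_message(["emit_log.py", "warning:", "disk", "almost", "full"]))
```

Running the script with no arguments therefore emits the default text, while any arguments are joined with single spaces.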

The code for receive_logs.py:

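    #!/usr/bin/env python
    # Written against the pika < 1.0 API; pika 1.0+ expects exchange_type=,
    # queue_declare(queue='', ...) and basic_consume(queue, on_message_callback, auto_ack=True).
    import pika

    connection = pika.BlockingConnection(pika.ConnectionParameters(host='localhost'))
    channel = connection.channel()

    channel.exchange_declare(exchange='logs', type='fanout')

    # Let the server pick a queue name; the queue is deleted when we disconnect.
    result = channel.queue_declare(exclusive=True)
    queue_name = result.method.queue

    # Bind the temporary queue to the logs exchange.
    channel.queue_bind(exchange='logs', queue=queue_name)

    print(' [*] Waiting for logs. To exit press CTRL+C')

    def callback(ch, method, properties, body):
        print(" [x] %r" % body)

    # no_ack=True: deliveries are not acknowledged by the consumer.
    channel.basic_consume(callback, queue=queue_name, no_ack=True)
    channel.start_consuming()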

Code link: https://github.com/rabbitmq/rabbitmq-tutorials/blob/master/python/receive_logs.py
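The consumer callback can be exercised on its own, without a running broker. The following is a sketch under one assumption worth stating: on Python 3, pika delivers the body as bytes, so the hypothetical helper format_log decodes it before printing instead of showing the b'...' form that %r would produce.

```python
def format_log(body):
    """Turn a delivered message body into a printable log line."""
    # Decode bytes bodies for display; pass str bodies through unchanged.
    text = body.decode("utf-8", errors="replace") if isinstance(body, bytes) else body
    return " [x] %s" % text


def callback(ch, method, properties, body):
    # Same signature as the callback in receive_logs.py; only body is used here.
    print(format_log(body))


# Call the callback directly, with None standing in for the pika objects.
callback(None, None, None, b"info: Hello World!")
```

Keeping the formatting in a separate function makes it easy to check the output format before wiring the callback into basic_consume.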

We're done. If you want to save logs to a file, just open a console and type:

$ python receive_logs.py > logs_from_rabbit.log

If you wish to see the logs on your screen, spawn a new terminal and run:

$ python receive_logs.py

And of course, to emit logs type:

$ python emit_log.py

Using rabbitmqctl list_bindings you can verify that the code actually creates bindings and queues as we want. With two receive_logs.py programs running you should see something like:

$ sudo rabbitmqctl list_bindings
Listing bindings ...
logs    exchange    amq.gen-JzTY20BRgKO-HjmUJj0wLg    queue    []
logs    exchange    amq.gen-vso0PVvyiRIL2WoV3i48Yg    queue    []
...done.

The interpretation of the result is straightforward: data from exchange logs goes to two queues with server-assigned names. And that's exactly what we intended.
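If you want to check this programmatically, the listing can be parsed with a few lines of Python. This is a sketch that assumes the whitespace-separated column layout seen in the sample output above (source, source kind, destination, destination kind, arguments); queues_bound_to is a hypothetical helper, and real rabbitmqctl output may differ between versions.

```python
def queues_bound_to(listing, exchange):
    """Return destination queue names bound to `exchange` in list_bindings output."""
    queues = []
    for line in listing.splitlines():
        fields = line.split()
        # Expected shape: <source> exchange <destination> queue <arguments>
        if (len(fields) >= 4 and fields[0] == exchange
                and fields[1] == "exchange" and fields[3] == "queue"):
            queues.append(fields[2])
    return queues


sample = """Listing bindings ...
logs exchange amq.gen-JzTY20BRgKO-HjmUJj0wLg queue []
logs exchange amq.gen-vso0PVvyiRIL2WoV3i48Yg queue []
...done."""

print(queues_bound_to(sample, "logs"))
```

With two receive_logs.py programs running, the function returns the two server-assigned queue names; header and footer lines are skipped because they do not match the expected shape.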

To find out how to listen for a subset of messages, let's move on to tutorial 4.
