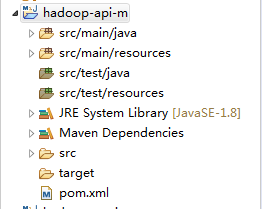

1. Directory structure:

2. Code:

2.1 pom.xml

<project xmlns="http://maven.apache.org/POM/4.0.0"

xmlns:xsi="http://www.w3.org/2001/XMLSchema-instance"

xsi:schemaLocation="http://maven.apache.org/POM/4.0.0 http://maven.apache.org/xsd/maven-4.0.0.xsd">

<modelVersion>4.0.0</modelVersion>

<groupId>org.sw.hadoop.api</groupId>

<artifactId>hadoop-api-m</artifactId>

<version>0.0.1-SNAPSHOT</version>

<properties>

<hadoop.version>2.7.3</hadoop.version>

</properties>

<dependencies>

<dependency>

<groupId>jdk.tools</groupId>

<artifactId>jdk.tools</artifactId>

<version>1.8</version>

<scope>system</scope>

<systemPath>${JAVA_HOME}/lib/tools.jar</systemPath>

</dependency>

<!-- https://mvnrepository.com/artifact/org.apache.hadoop/hadoop-common -->

<dependency>

<groupId>org.apache.hadoop</groupId>

<artifactId>hadoop-common</artifactId>

<version>${hadoop.version}</version>

</dependency>

<!-- https://mvnrepository.com/artifact/org.apache.hadoop/hadoop-hdfs -->

<dependency>

<groupId>org.apache.hadoop</groupId>

<artifactId>hadoop-hdfs</artifactId>

<version>${hadoop.version}</version>

</dependency>

<!-- https://mvnrepository.com/artifact/org.apache.hadoop/hadoop-core -->

<!-- https://mvnrepository.com/artifact/org.apache.hadoop/hadoop-mapreduce-client-core -->

<dependency>

<groupId>org.apache.hadoop</groupId>

<artifactId>hadoop-mapreduce-client-core</artifactId>

<version>${hadoop.version}</version>

</dependency>

<!-- https://mvnrepository.com/artifact/org.apache.hadoop/hadoop-yarn-common -->

<dependency>

<groupId>org.apache.hadoop</groupId>

<artifactId>hadoop-yarn-common</artifactId>

<version>${hadoop.version}</version>

</dependency>

<!-- https://mvnrepository.com/artifact/org.apache.hadoop/hadoop-mapreduce-client-common -->

<dependency>

<groupId>org.apache.hadoop</groupId>

<artifactId>hadoop-mapreduce-client-common</artifactId>

<version>${hadoop.version}</version>

</dependency>

</dependencies>

<build>

<plugins>

<!-- Set the JDK version -->

<plugin>

<groupId>org.apache.maven.plugins</groupId>

<artifactId>maven-compiler-plugin</artifactId>

<configuration>

<source>1.8</source>

<target>1.8</target>

<encoding>UTF-8</encoding>

</configuration>

</plugin>

</plugins>

</build>

</project>

2.2 Java code:

package org.sw.hadoop.api.utils;

import org.apache.hadoop.conf.Configuration;

/**

* Common code for submitting applications to a Hadoop cluster

*

* @author liangsw

*

*/

public class HadoopUtils {

/**

* Builds the Hadoop Configuration used to connect to the cluster.

* @return the populated Configuration

*/

public static Configuration hadoopConf() {

Configuration conf = new Configuration();

// Enable cross-platform job submission

conf.setBoolean("mapreduce.app-submission.cross-platform", true);

// NameNode address

conf.set("fs.defaultFS", "hdfs://master:9000");

// Run MapReduce on YARN

conf.set("mapreduce.framework.name", "yarn");

// ResourceManager address

conf.set("yarn.resourcemanager.address", "master:8032");

// ResourceManager scheduler address

conf.set("yarn.resourcemanager.scheduler.address", "master:8030");

// JobHistory server address

conf.set("mapreduce.jobhistory.address", "slave01:10020");

// Path of the job JAR

// conf.set("mapreduce.job.jar", "C:\\Users\\liangsw\\Desktop\\wordcount.jar");

return conf;

}

}
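The `fs.defaultFS` value set above and the `remotePath` strings used in the next class are ordinary URIs. As a small sketch that uses only `java.net.URI` from the JDK, the parts of such an address can be inspected as follows. The class name `HdfsUriDemo` and the helper `describe` are made up for illustration and are not part of Hadoop or of the original project.

```java
import java.net.URI;

// Hypothetical demo class, not part of the original project.
class HdfsUriDemo {

    // Splits an HDFS-style URI into scheme, host, port and path.
    static String describe(String address) {
        URI uri = URI.create(address);
        return "scheme=" + uri.getScheme()
                + " host=" + uri.getHost()
                + " port=" + uri.getPort()
                + " path=" + uri.getPath();
    }

    public static void main(String[] args) {
        // Same address as the remotePath used in HaoopAPI.main.
        System.out.println(describe("hdfs://master:9000/hadoopApi/"));
    }
}
```

The scheme, host and port of the path match the `fs.defaultFS` setting, which is why the fully qualified `remotePath` resolves against the same NameNode.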

package org.sw.hadoop.api;

import java.io.IOException;

import org.apache.hadoop.conf.Configuration;

import org.apache.hadoop.fs.FSDataInputStream;

import org.apache.hadoop.fs.FileStatus;

import org.apache.hadoop.fs.FileSystem;

import org.apache.hadoop.fs.Path;

import org.sw.hadoop.api.utils.HadoopUtils;

/**

* Hadoop API operations.

*

* @author liangsw

*

*/

public class HaoopAPI {

public static void main(String[] args) throws IOException {

// ls("hdfs://master:9000/");

String remotePath = "hdfs://master:9000/hadoopApi/";

String localPath = "C:/Users/liangsw/Desktop/error.txt";

mkdir(remotePath);

put(localPath, remotePath);

}

/**

* Upload (put): copy a local file into the given directory on the distributed file system

*

* @throws IOException

*/

public static void put(String localPath, String remotePath) throws IOException {

Configuration conf = HadoopUtils.hadoopConf();

FileSystem fs = FileSystem.get(conf);

Path src = new Path(localPath);

Path dst = new Path(remotePath);

fs.copyFromLocalFile(src, dst);

fs.close();

}

/**

* Read (cat)

*

* @param remotePath

* @throws IOException

*/

public static void cat(String remotePath) throws IOException {

Configuration conf = HadoopUtils.hadoopConf();

FileSystem fs = FileSystem.get(conf);

Path dst = new Path(remotePath);

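// Check whether the target file exists

if (fs.exists(dst)) {

// Open an input stream on the remote file

FSDataInputStream fsdis = fs.open(dst);

// Get the file status (used for the file length)

FileStatus status = fs.getFileStatus(dst);

// Assumes the whole file fits in memory; toIntExact fails for lengths above Integer.MAX_VALUE

byte[] buffer = new byte[Math.toIntExact(status.getLen())];

// Read the whole file into the buffer

fsdis.readFully(buffer);

// Close the stream

fsdis.close();

// Print the file contents, assuming UTF-8 text (buffer.toString() would only print the array reference)

System.out.println(new String(buffer, java.nio.charset.StandardCharsets.UTF_8));

}

// Close the file system handle whether or not the file existed

fs.close();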

}

/**

* Download (get)

* @param remotePath

* @param localPath

* @throws IOException

*/

public static void get(String remotePath, String localPath) throws IOException {

Configuration conf = HadoopUtils.hadoopConf();

FileSystem fs = FileSystem.get(conf);

// Path on the distributed file system (copy source)

Path src = new Path(remotePath);

// Path on the local file system (copy destination)

Path dst = new Path(localPath);

fs.copyToLocalFile(src, dst);

fs.close();

}

/**

* Delete recursively (rmr)

*

* @param remotePath

* @throws IOException

*/

public static void rmr(String remotePath) throws IOException {

Configuration conf = HadoopUtils.hadoopConf();

FileSystem fs = FileSystem.get(conf);

Path dst = new Path(remotePath);

if(fs.exists(dst)) {

fs.delete(dst, true);

}

fs.close();

}

/**

* List a directory (ls)

*

* @param remotePath

* @throws IOException

*/

public static void ls(String remotePath) throws IOException {

Configuration conf = HadoopUtils.hadoopConf();

FileSystem fs = FileSystem.get(conf);

Path dst = new Path(remotePath);

FileStatus[] status = fs.listStatus(dst);

for (FileStatus fileStatus : status) {

System.out.println(fileStatus.getPath().getName());

}

fs.close();

}

/**

* Create a directory (mkdir)

* @param remotePath

* @throws IOException

*/

public static void mkdir(String remotePath) throws IOException {

Configuration conf = HadoopUtils.hadoopConf();

FileSystem fs = FileSystem.get(conf);

Path dst = new Path(remotePath);

if(!fs.exists(dst)) {

fs.mkdirs(dst);

// fs.create(dst); // would create a file, not a directory

}

fs.close();

}

}
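The `cat` method above reads the whole file into a single byte array, which only works for files that fit in memory. Since `FSDataInputStream` is a regular `java.io.InputStream`, the same read could instead be done in fixed-size chunks. The sketch below uses only JDK classes so it can run without a cluster; the class name `StreamCatSketch`, the helper `copy` and the 4096-byte chunk size are illustrative choices, not part of the original project.

```java
import java.io.ByteArrayInputStream;
import java.io.IOException;
import java.io.InputStream;
import java.io.OutputStream;
import java.nio.charset.StandardCharsets;

// Hypothetical helper, not part of the original project.
class StreamCatSketch {

    // Copies everything from in to out in fixed-size chunks and returns the byte count.
    static long copy(InputStream in, OutputStream out) throws IOException {
        byte[] chunk = new byte[4096];
        long total = 0;
        int n;
        while ((n = in.read(chunk)) != -1) {
            out.write(chunk, 0, n);
            total += n;
        }
        out.flush();
        return total;
    }

    public static void main(String[] args) throws IOException {
        byte[] data = "hello hdfs\n".getBytes(StandardCharsets.UTF_8);
        // A ByteArrayInputStream stands in here for the stream returned by fs.open(path).
        try (InputStream in = new ByteArrayInputStream(data)) {
            copy(in, System.out);
        }
    }
}
```

In a `cat` built this way, the stream from `fs.open(dst)` would be passed as `in` and `System.out` as `out`, so memory use stays at one chunk regardless of file size.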